THEWHITEBOX

TLDR;

New Year, new predictions. As with previous years, I’ve collected my thoughts and the progress we’ve made in 2025 to see how we did on our past predictions and how that can inform this year’s prognostications.

We’ll cover technology, markets, and products, leaving no stone unturned, from the first big AI IPO coming in 2026, my personal bets for companies that will do great in 2026, the next big trade, what’s next after LLMs, my fears of what might go wrong with AI, and finally, things that won’t happen in 2026.

Let’s dive in.

How Did I Do in 2025?

Let’s start by taking a brief look at how I did in my ‘predictions for 2025’ piece back on December 30th, 2024. It’s a humbling way to see how much real progress we’ve made this year.

Release of True "LLM + Search" Models

My first prediction was that future reasoning models would use search algorithms to explore the solution space. I’ll give myself half the points on this one, not because I was wrong about the essence, but because I was wrong about the structure.

Human intelligence is a combination of three things: intuition, search, and feedback. Our experiences and knowledge build intuition, which is essentially pattern recognition that suggests how to approach a problem.

But intuition only takes us so far. With complex problems, we need time to think. This introduces the idea of intuition-guided search. Using our intuition of what might be good ways to solve a problem, we search for the optimal solution.

But human intelligence has one last feature: the ability to adapt to feedback. AIs do not yet have it, because updating a model on new experiences is a very problematic procedure that usually leads to performance decreases (known as catastrophic forgetting).

Humans continuously experience the consequences of their actions, shaping their beliefs and thus priming them for better predictions in the future. AIs do not encode or hold beliefs (at least not explicitly), nor do they adapt to new data in real-time (for now).

Knowing all this, the prediction was that, in 2025, we would see AI conquering steps 1 and 2: an LLM serving as the intuition machine, using explicit search over possible solutions at runtime.

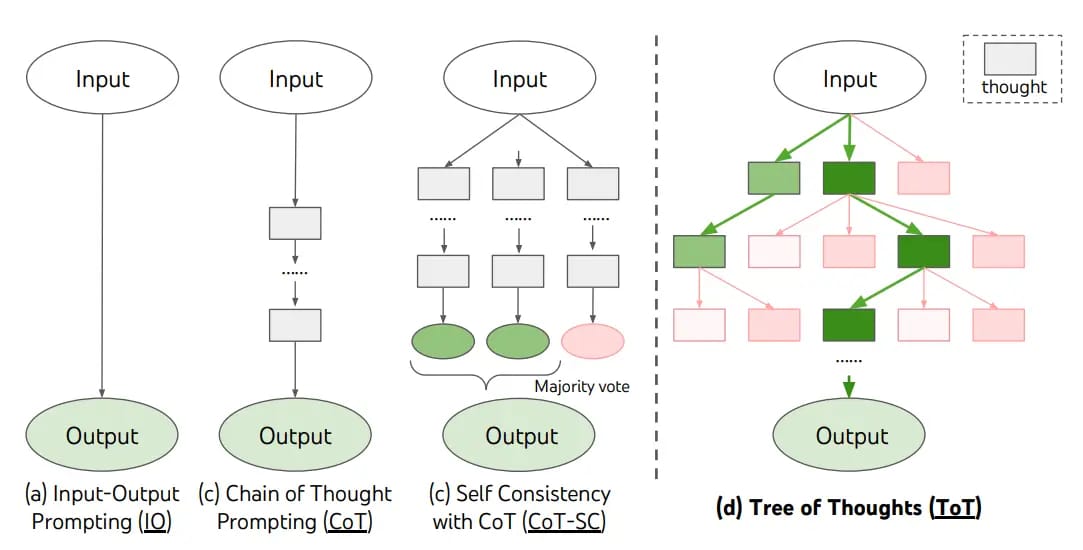

At that time, most models behaved like the second option from the left: just a straight line of thoughts. Instead, I predicted models would evolve into the option on the right, where they would explicitly reevaluate their thoughts and decide which reasoning chains to pursue and which to “kill”, creating a “tree of thoughts”.
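To make the “tree of thoughts” idea concrete, here is a minimal sketch written as a simple beam search over partial reasoning chains. It is not MCTS and not any lab’s actual implementation. The `expand` and `score` callables are hypothetical stand-ins for an LLM proposing next thoughts and an evaluator judging partial chains. The toy versions below just add numbers and prefer running totals close to 7.

```python
from typing import Callable, List

Chain = List[int]  # a reasoning chain, simplified here to a list of integer "thoughts"


def tree_of_thoughts(
    root: Chain,
    expand: Callable[[Chain], List[Chain]],
    score: Callable[[Chain], float],
    beam_width: int = 2,
    depth: int = 3,
) -> Chain:
    """Breadth-first tree-of-thoughts search with pruning (a beam search).

    At each level, every surviving chain is extended with candidate next
    thoughts, all candidates are scored, and only the best `beam_width`
    chains are kept; the rest are "killed".
    """
    frontier = [root]
    for _ in range(depth):
        candidates = [child for chain in frontier for child in expand(chain)]
        if not candidates:
            break
        candidates.sort(key=score, reverse=True)
        frontier = candidates[:beam_width]
    return max(frontier, key=score)


# Hypothetical stand-ins for LLM calls: each "thought" adds 1, 2, or 3,
# and the evaluator prefers chains whose running total is close to 7.
def toy_expand(chain: Chain) -> List[Chain]:
    return [chain + [step] for step in (1, 2, 3)]


def toy_score(chain: Chain) -> float:
    return -abs(7 - sum(chain))


best = tree_of_thoughts([], toy_expand, toy_score, beam_width=2, depth=3)
print(best, sum(best))
```

The main knob is `beam_width`. A wider beam keeps more chains alive, which gives better coverage at a higher compute cost. A narrow beam prunes aggressively.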

And what do top models look like today? Well, neither one nor the other, but the one in between: self-consistency models.

Today, most models behave the same way they did one year ago, but with two big changes:

An improved chain of thought, incorporating things like self-reflection that allows them to “change their mind” midway through a reasoning chain.

For the most expensive models, the ability to run the task many times in parallel.

Put another way, they are still a single chain of thought, but a more powerful one that imitates the tree-of-thoughts implicitly, not by discarding reasoning chains and opening new ones, but self-correcting in the same chain.

And for the most expensive models, we increase statistical coverage (reducing the likelihood that we happen to run the wrong reasoning chain) by running the model multiple times on the task and keeping the most consistent answer (hence the term ‘self-consistency’). Known examples include Grok 4 Heavy, Gemini 3 Deep Think, and GPT-5.2 Pro.
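The sketch below illustrates self-consistency in its simplest form, assuming each run returns its final answer as a string. `sample_answer` is a hypothetical stand-in for one full reasoning run. Plain majority voting is also an assumption: the products named above may aggregate runs differently, for example with verifiers.

```python
from collections import Counter
from typing import Callable, Iterator


def self_consistency(sample_answer: Callable[[], str], n_samples: int) -> str:
    """Run the same task n_samples times and keep the most frequent final answer."""
    answers = [sample_answer() for _ in range(n_samples)]
    # most_common(1) returns [(answer, count)] for the top-voted answer.
    return Counter(answers).most_common(1)[0][0]


# Hypothetical stand-in for independent reasoning runs: five chains that
# reach different final answers; the majority ("42") wins.
_runs: Iterator[str] = iter(["42", "41", "42", "17", "42"])
final = self_consistency(lambda: next(_runs), n_samples=5)
print(final)
```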

Therefore, the only reason I don’t give myself full points here is that, even though the most advanced models use verifiers and other techniques very reminiscent of a tree of thoughts, Labs explicitly rejected MCTS-style search (Monte Carlo Tree Search), contrary to my intuitions.

The MCTS craze actually dried up pretty fast. DeepSeek “killed” the idea back in January with the release of DeepSeek R1, explicitly stating that MCTS was not a good idea because it scales poorly. Since then, discussion around MCTS has died down a lot.

The Era of Inductive Scientific Discovery

I predicted that 2025 would see the first AI-led discoveries, some even worthy of Nobel Prizes. This prediction was accurate… sort of.

In 2025, AI did, in fact, present itself as a great tool for discovery, but not in the flamboyant, spectacular way I predicted. However, it did:

Help Scott Aaronson, a renowned theoretical computer scientist, with a key technical step of a proof in his and Freek Witteveen’s paper on “Limits to black-box amplification in QMA.”

Beyond Aaronson’s proof, here’s a wide range of other examples where AI has proven useful in math research.

Discover zero-day vulnerabilities in code while providing a patch to solve the issue

Design antibodies that can turn membrane signaling proteins implicated in many diseases on or off. The first-ever AI-designed drug?

AlphaEvolve, one of 2025’s highlights, which I covered in detail here, discovered a new matrix-multiplication procedure: it found a way to multiply two 4×4 complex-valued matrices using 48 scalar multiplications, described as the first improvement in 56 years over Strassen’s approach in that specific setting.

And others.
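For context on the AlphaEvolve result, the sketch below applies Strassen’s 2×2 scheme recursively to 4×4 matrices and counts the scalar multiplications. This is a baseline illustration, not AlphaEvolve’s method. The count comes to 7 × 7 = 49, versus 64 for the schoolbook method. AlphaEvolve’s 48-multiplication procedure is not reproduced here, and the test matrices are arbitrary.

```python
from typing import List, Tuple

Matrix = List[List[complex]]


def add(A: Matrix, B: Matrix) -> Matrix:
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def sub(A: Matrix, B: Matrix) -> Matrix:
    return [[a - b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def split(M: Matrix) -> Tuple[Matrix, Matrix, Matrix, Matrix]:
    """Split a square matrix into its four quadrants."""
    h = len(M) // 2
    return ([r[:h] for r in M[:h]], [r[h:] for r in M[:h]],
            [r[:h] for r in M[h:]], [r[h:] for r in M[h:]])


def join(C11: Matrix, C12: Matrix, C21: Matrix, C22: Matrix) -> Matrix:
    top = [left + right for left, right in zip(C11, C12)]
    bottom = [left + right for left, right in zip(C21, C22)]
    return top + bottom


def strassen(A: Matrix, B: Matrix) -> Tuple[Matrix, int]:
    """Multiply power-of-two square matrices; also return the scalar-multiplication count."""
    if len(A) == 1:
        return [[A[0][0] * B[0][0]]], 1
    A11, A12, A21, A22 = split(A)
    B11, B12, B21, B22 = split(B)
    # Strassen's seven block products replace the schoolbook method's eight.
    products = [
        strassen(add(A11, A22), add(B11, B22)),
        strassen(add(A21, A22), B11),
        strassen(A11, sub(B12, B22)),
        strassen(A22, sub(B21, B11)),
        strassen(add(A11, A12), B22),
        strassen(sub(A21, A11), add(B11, B12)),
        strassen(sub(A12, A22), add(B21, B22)),
    ]
    M1, M2, M3, M4, M5, M6, M7 = (m for m, _ in products)
    count = sum(c for _, c in products)
    C11 = add(sub(add(M1, M4), M5), M7)
    C12 = add(M3, M5)
    C21 = add(M2, M4)
    C22 = add(add(sub(M1, M2), M3), M6)
    return join(C11, C12, C21, C22), count


def naive(A: Matrix, B: Matrix) -> Matrix:
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)] for i in range(n)]


A = [[complex(i, j) for j in range(4)] for i in range(4)]
B = [[complex(j - i, 1) for j in range(4)] for i in range(4)]
C, mults = strassen(A, B)
print(mults)  # 49 = 7 * 7, versus 4**3 = 64 for the schoolbook method
print(C == naive(A, B))
```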

So did AI help discover things? 100%.

Were they world-changing breakthroughs? Not quite, at least not confirmed to be as such.

Thus, I’ll give myself half points on this one. I’m not sure any of these are “Nobel-worthy” today, but in my view, there’s no doubt that people assisted by AIs will win most Nobel Prizes in the future.

Even if AIs aren’t the ones that actually come across the solution, they can help you think, which has always been my primary use case for this technology, as it is for many others.

Skyrocketing Subscription Costs ($2,000/month)

This one’s quick. Full scores, because Factory AI released a $2,000/month subscription, called the Ultra subscription, a few months ago.

But will they get more expensive? We’ll answer that in our 2026 predictions section.

The Rise of “Intelligence Efficiency”

I believe this was spot on. In 2025, all AI Labs were pressured to focus not just on brute-force intelligence but on intelligence-per-cost.

Probably nothing exemplifies this better than the cost comparison between o3-preview, from around a year ago, and Gemini 3 Flash, released just a few weeks ago. Both achieve similar nominal scores on the ARC-AGI-1 benchmark, yet Gemini 3 Flash costs just $0.17/task, while o3-preview required around $4.5k/task. That is roughly a 26,000x reduction in the “cost of intelligence.”
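The headline ratio is simple arithmetic on the two per-task costs. The sketch assumes, as the text does, that the two models’ scores are comparable, so cost is the only quantity that changes.

```python
def cost_reduction(old_cost_per_task: float, new_cost_per_task: float) -> float:
    """How many times cheaper the new system is per task, assuming comparable scores."""
    return old_cost_per_task / new_cost_per_task


# Per-task costs quoted in the text: o3-preview (~$4,500) vs. Gemini 3 Flash ($0.17).
ratio = cost_reduction(4_500.0, 0.17)
print(f"{ratio:,.0f}x")  # 26,471x, i.e. the "roughly 26,000x" in the text
```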

2024 ended with o3-preview “telling the world” that progress was becoming too expensive. Thus, in 2025, we saw a clear effort by AI Labs to be more frugal and improve intelligence-per-cost.

Continued Stock Market Rally

Full scores, although I don’t feel like a genius for predicting the S&P would go up, given its performance over the last decade.

Instead, I’m much more proud of the fact that:

We called the bottom of Google’s stock price in April (we made our first investment on April 7th, a resounding—and of course, also lucky—success).

We predicted, also back in April, that AMD would outperform, just before its rally started. By November, the stock had almost doubled.

We foresaw Intel’s growing importance to US national security interests and the memory supercycle, which led to our decisive investment in what I believe will be the stock of 2026 (and that’s already starting to prove true). This third point will become particularly important for the 2026 predictions below.

The truth is, the only reason we are performing so well is that AI has absorbed all attention, and AI is precisely what I’m good at. As they say, everyone is a genius in a bull market, so even though we’ve been choosing big winners consistently, let’s keep ourselves humble.

The First 1 Gigawatt Data Center

Full scores. The first gigawatt data center came from xAI, with Colossus 2. Now, this site is planning to grow much larger, and they just raised another $20 billion, which signals to me that they are probably going to be the first to reach 2 GW deployed on a single site.

But why such large data centers? As Jensen Huang briefly hinted at during CES, NVIDIA’s Rubin platform is expected to support training models with up to ten trillion parameters, the scale at which he expects the next frontier to lie (and for which NVIDIA is therefore preparing its hardware).

Training such a model requires an equally impressive training budget, likely exceeding $10^{28}$ FLOPs, about ten times the size of Grok 4’s training. But how much is that? This is a very coarse estimate, but Transformers require around $6N$ FLOPs per training token, where $N$ is the number of parameters.

This means that if the training budget is that size, we can write $10^{28} = 6 \times (10 \times 10^{12}) \times D$, where $D$ is the number of training tokens. Assuming a 10-trillion-parameter model, that gives $D \approx 1.67 \times 10^{14}$, or a dataset of about 167 trillion tokens.

This sounds like a lot, because it is. And that is why you have to make models bigger. For instance, if we use a model that is ten times smaller, like China’s largest models today (Kimi K2 has 1 trillion parameters), reaching the same training budget would require a dataset ten times larger than the one above.
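The back-of-the-envelope estimate can be reproduced by solving the rule of thumb C ≈ 6·N·D for D. This minimal sketch treats both models as dense Transformers and, like the text, ignores mixture-of-experts, tool use, and RL.

```python
def tokens_for_budget(train_flops: float, n_params: float) -> float:
    """Solve the coarse rule of thumb C ≈ 6·N·D for D, the number of training tokens."""
    return train_flops / (6 * n_params)


BUDGET = 1e28  # training FLOPs discussed in the text
for label, n_params in [("10T parameters", 10e12), ("1T parameters (Kimi K2 scale)", 1e12)]:
    print(f"{label}: {tokens_for_budget(BUDGET, n_params):.3g} tokens")
# 10T parameters: 1.67e+14 tokens
# 1T parameters (Kimi K2 scale): 1.67e+15 tokens
```

Because D is inversely proportional to N for a fixed budget, shrinking the model tenfold multiplies the required dataset tenfold.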

It’s important to note that researchers aren’t seeking to make models larger; they want to increase training budgets, which “forces” models to be bigger. The two sound alike, but they aren’t the same.

Naturally, this is a very coarse estimate. It doesn’t account for the fact that models are mixture-of-experts (reducing the activated parameter count) or that they use tools during training. More importantly, it doesn’t consider Reinforcement Learning (RL) at all, a type of training that skyrockets training budgets without requiring larger training datasets.

Consequently, the actual dataset is likely much smaller than the number above, as Labs increasingly rely on the RL part of the training process. Rather, the takeaway is that training budgets are still huge and thus require many concurrent accelerators to process the dataset in a manageable time.

This is why, as we recently calculated, data centers need to grow to humongous sizes to accommodate all this training: on small data centers it would take centuries, and larger ones compress it into months.
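To see why cluster size matters, here is a minimal sketch of wall-clock training time, computed as total FLOPs divided by sustained cluster throughput. It is not the calculation referenced in the text. The per-accelerator peak of 1e15 FLOP/s and the 40% utilization are assumptions chosen only for illustration. Under those assumptions, a 1e28-FLOP run takes roughly 790 years on 1,000 accelerators but roughly 290 days on 1,000,000.

```python
SECONDS_PER_DAY = 86_400


def training_days(train_flops: float, n_accelerators: int,
                  peak_flops_per_chip: float, utilization: float) -> float:
    """Wall-clock days = total FLOPs / sustained cluster throughput."""
    sustained = n_accelerators * peak_flops_per_chip * utilization  # FLOP/s
    return train_flops / sustained / SECONDS_PER_DAY


# Assumptions (hypothetical): ~1e15 FLOP/s peak per accelerator, 40% utilization.
for n in (1_000, 10_000, 100_000, 1_000_000):
    days = training_days(1e28, n, 1e15, 0.40)
    print(f"{n:>9,} accelerators: {days:>10,.0f} days (~{days / 365.25:,.1f} years)")
```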

The Collapse of Traditional SaaS

Overall, I was correct on this prediction, but I won’t give myself full credit because I wasn't specific enough about what ‘traditional’ meant.

When I shared my very bearish sentiment regarding SaaS, I was thinking of companies like Salesforce or Adobe, which indeed had a terrible year (see below), but to get full scores, I should have mentioned that some SaaS companies, especially those more linked with AI, would still shine.

Meanwhile, pure AI plays like Palantir were among the best-performing stocks across all industries and sectors. Cloudflare’s omnipresence on the web and its role as a gatekeeper of data from agents ensured it had a great year too. Shopify’s unequivocal embrace of AI is paying off. Data platforms for AI, like MongoDB or Snowflake, and mission-critical businesses like CrowdStrike did, unsurprisingly, absolutely fine.

The idea was correct, but saying “SaaS will die in 2025” was an exaggeration.

US Ban on Chinese Open-Source & APIs

Wrong. I don’t rule out that it could happen in 2026, but it did not occur in 2025.

Integration of Video World Models with LRMs

Correct, as demonstrated by complex world-model systems like Waymo, which leverage video and text models to drive cars. More on this later.

The Norm of Declarative Software

Wrong. I predicted that 2025 would see a shift in how we “build software” toward declarative software, one where the entire logic of the application is controlled by LLMs. This is still not the case today, even though I still believe it is inevitable.

Overall, a not-too-shabby score of 6.5 out of 10 (counting partial scores as 0.5). And now, it’s time for the 2026 predictions, which, as you’ll see, hold several key insights to watch out for.

Predictions for 2026

We kick off with a strong one: the rise of state capitalism in the West and its profound impact on AI.

The Rise of State Capitalism

National security has become the focal point of all decisions since Trump’s explicit embrace of the Monroe Doctrine, the foundational US foreign policy proclaimed by President James Monroe in 1823, which warned European powers against further colonization or interference in the Western Hemisphere.

However, the newer version, playfully referred to as the “Donroe” Doctrine, is mainly aimed at China and Russia, not at European countries. Europe’s best days are gone unless it executes profound reform, and it has completely missed the AI train.

And what does this have to do with AI? Well, everything.

Trump, initially much less interested in foreign interventionism and instead a more “profits-focused” president, seems to have veered back to what the US does best: making decisions based on national interest and trying to secure the supply chains of the technologies and assets considered a priority.

In one way, one could say the US is starting to behave as if it were at war, similar to how it behaved for much of the 20th century. This may not sound particularly transformative, but let’s not forget that John Kerry, Obama’s Secretary of State, said in 2013 that “the era of the Monroe Doctrine is over.”

Well, it’s back on the menu. From now on, any meaningful AI argument that ignores the geopolitical angle should automatically be considered flawed.

More specifically, with respect to AI, this means we now have confirmation that AI is under the flag of state capitalism, a technology in which the government’s role will be huge.

By the way, I believe that, if you think this move is partisan, you are most likely wrong. I have insisted (and many in the industry have too) that this shift should happen if the US wishes to compete with China in the long run.

Interestingly, among all the things that divide US citizens, hawkishness toward China is bipartisan. So even if Trump were not a larger-than-life figure who draws all the attention, this shift would have happened anyway.

And guess which technology falls squarely into all this? Semiconductors.

Among the many battles that will be “fought” in the US-China war for supremacy, semiconductor supply chains are, without a doubt, one of the main ones. If Operation Barbarossa marked the beginning of the end for the Axis powers in 1941 during the Second World War, a failure to secure the semiconductor supply chain could be the US’s own Barbarossa.

Fun fact: my late grandfather fought at Stalingrad at just 20 years old and was the only person in his entire regiment to return home, despite being shot, too. The fact that I’m alive and that you’re reading these words is, statistically speaking, a miracle.

The problem is that one of China’s biggest levers over the US today, besides its tight grip on rare earth refining, is the threat it poses to the global semiconductor supply chain, which is extremely bottlenecked by its dependence on chip manufacturing and packaging in Taiwan.

For the US, a country that wants to maintain its supremacy, this is intolerable, and they urgently need to diversify out of Taiwan (while still securing the island for years to come, because this dependency is not ending anytime soon).

Simply put, the world’s dependence on Taiwanese semiconductor production is absolute, even though Taiwan’s national chip champion, TSMC, has opened fabs in the US. But let me be clear: the relief the US might feel about this is an illusion. At the end of the day, TSMC is a Taiwanese company defending Taiwan’s interests, which, in this case, are not aligned with US interests.

For instance, Taiwan enforces an “N-2” rule that prohibits technology less than two generations behind the frontier from leaving the island. The rationale is simple: the more important Taiwan is to chips, the less convenient it is to invade.

For all these reasons, nothing matters more than being absolutely “AI and chip independent.” I therefore predict that interventionism in AI markets will become the norm, whether via direct investments or subsidies. Geopolitical circles call this ‘state capitalism’, and China is its best-known practitioner. Direct investment is much more likely, given Trump’s well-known dislike of subsidies. I believe this support will mostly take the form of providing liquidity at any cost, which could be very inflationary, but that’s another story.

Moreover, the US could also start turning to, or pressuring, other countries for turbines and access to power in general. It could offshore large-scale data center projects while keeping MW-sized data centers inside the US. The Middle East is a clear-cut candidate, especially considering that data centers aren’t popular infrastructure investments because they generate few long-lasting jobs.

Likely candidates for intervention? The AI Labs, especially OpenAI. And talking about AI Labs…

AI’s First Big IPO

I predict we will see two big AI Lab IPOs in 2026 because liquidity isn’t eternal. The best proof of this is China, which is well underway with its AI Lab IPO craze, with the upcoming IPOs of Zhipu and Minimax.

And while liquidity in US private markets remains strong, as the very recent $20 billion xAI Series E shows, the capital requirements are so enormous that these Labs can’t raise everything their ambitions demand privately. For instance, OpenAI alone has $1.4 trillion in commitments for over 40 GW.

So, who is going to IPO? Well, funnily enough, it’s probably not OpenAI, but two other much more likely candidates you might not expect. And the answers are Anthropic and Mistral.

For Anthropic, the bet to concentrate efforts on coding and agents, meaning models are developed almost exclusively for those use cases, is paying off.

Not only are the models the best in those areas, giving them pricing power and better revenue per user (Anthropic’s models are way more expensive than the rest yet, ironically, worth it), but, crucially, this focus also allows them to concentrate and minimize R&D compute.

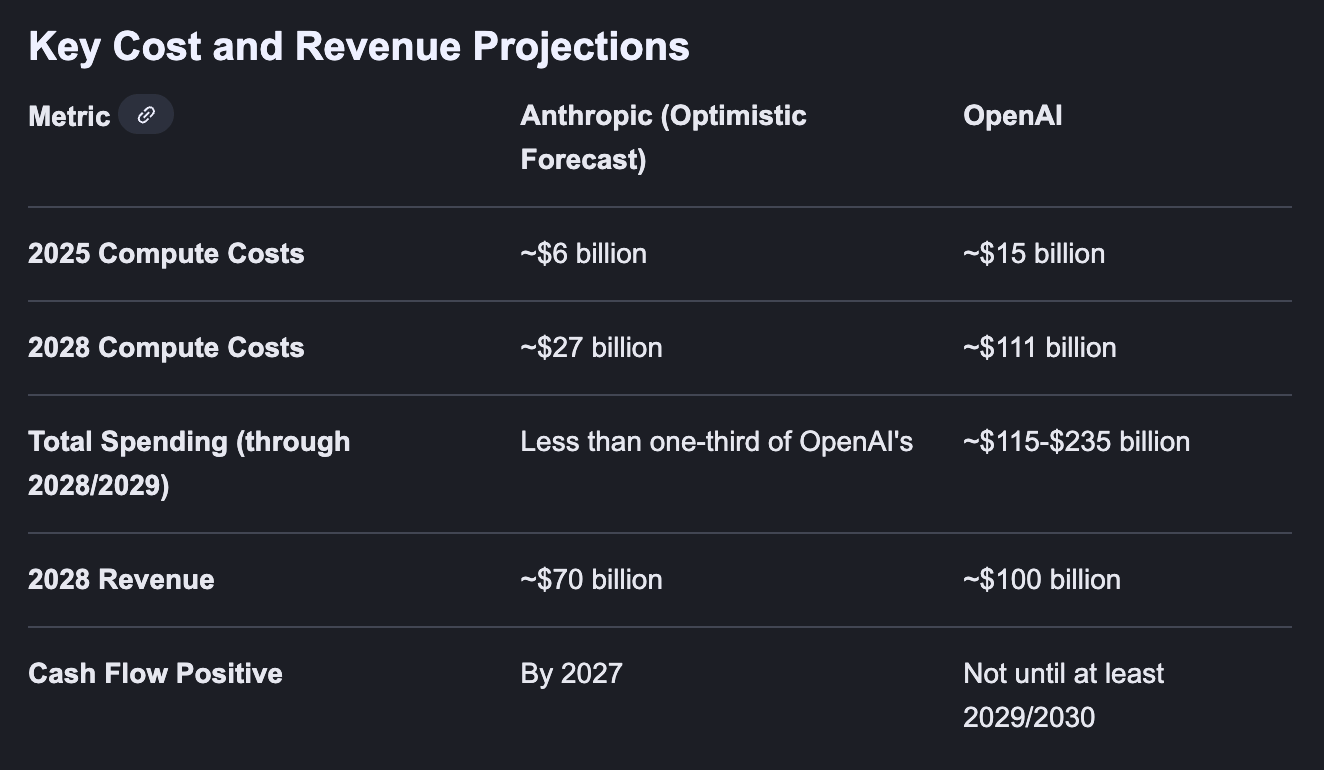

This last point is critical. As we suspected from OpenAI’s cost structure leaked by The Information (shown below), and as we have also learned from the IPO filings coming from China, R&D compute (the cost of running experiments and trying out new ideas to see what works) is one of the largest expenses.

For Anthropic, this is not as big a problem because they can concentrate efforts and teams in two simple directions: making their AI models code and call tools better.

All this is to say that Anthropic is likely to be way more profitable (or, to be more specific, less unprofitable) than OpenAI. In fact, their respective internal cost projections reveal this huge disparity:

Source: The Information

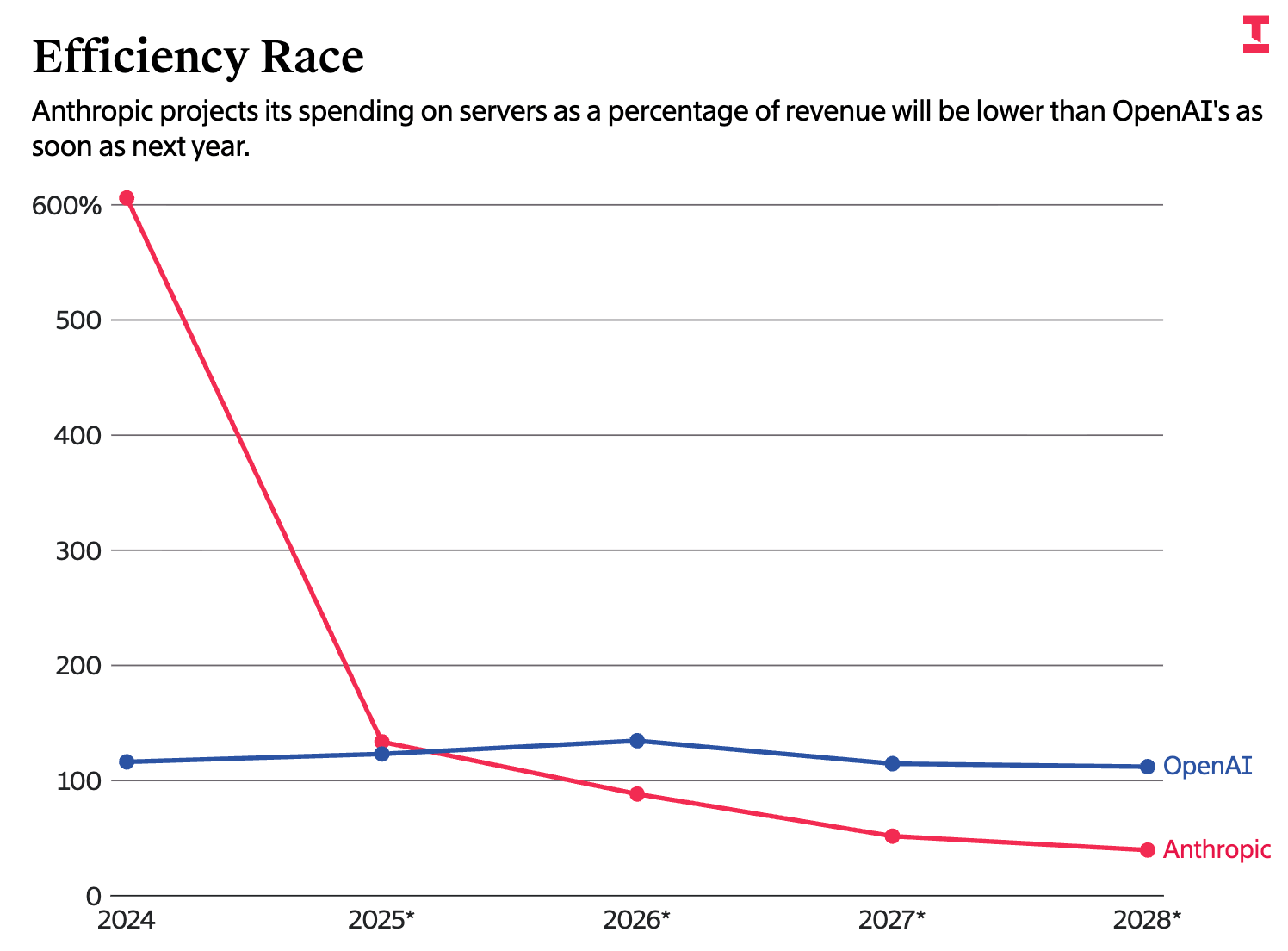

More visually, this just means Anthropic is expected to be much more efficient than OpenAI over the long run, measured by server spending as a percentage of revenue:

Source: The Information

These better overall business numbers, coupled with the fact that Anthropic doesn’t have nearly as much access to liquidity in private primary and secondary markets as OpenAI (i.e., their cost of capital is higher), make their IPO much more likely.

On the other hand, we have Mistral. Let me be clear: this prediction is based entirely on vibes, with zero evidence.

I believe they will also IPO in 2026, but for very different reasons: their revenue numbers are likely to be horrifically bad relative to rivals, and they desperately need access to huge liquidity to grow (the AI Lab market is very much grow-or-die). At the margin level, their numbers are probably not as bad and thus won’t look terrible in an IPO; their issues are likely mostly about top-line growth.

And speaking of OpenAI losing races, 2026 could also see them lose their majority share in one of the most important Generative AI use cases today.
