FUTURE

Top 10 Predictions for 2025

Since ChatGPT was released, every year has brought massive changes to the industry and, in turn, to the world.

As the year ends, we are covering what I believe will be the ten most significant events in 2025. We will cover three areas: technology, markets, and products, providing a rich overview of what’s to come.

The list ranges from the emergence of intelligence efficiency and the outlook for stocks to the US escalating the AI Cold War with China through new methods after China’s recent groundbreaking achievement (absolutely terrible for the interests of both the US as a whole and, particularly, NVIDIA), a new AI system that could be the future of robotics, and, finally, a commitment I’m making to you to transform your life completely next year.

Let’s dive in.

Technology

Over the last few months, we feared AI progress was stagnating. But those fears have been quickly erased over the past few weeks. Next year, we will experience the biggest changes in our lives caused by AI: the year AI transitions from projections to reality.

The First Big Discovery

One of my biggest claims is that AI’s true discovery powers are yet to be seen. While everyone looks at ChatGPT, the real disruption will come from AI’s acceleration of scientific discovery.

In particular, I’m adamant that AI will accelerate scientific discovery like nothing we’ve ever seen, and I believe this big prediction will become a reality next year.

So far, AI has:

Helped us discover new antibiotics

Accelerated the discovery of new materials

Shown great promise in accelerating inventions

Rediscovered the laws of gravity

And many, many more. But this is nothing compared to what’s coming. The last few months have seen the emergence of a new AI foundation that will shape what’s to come. Everyone is talking about Large Reasoner Models (LRMs), but other crucial insights will also play a role, like:

state-space models like Mamba or Liquid Foundation Models, which allow for more efficient implementations of LLMs/LRMs,

graph-based reasoning models, which transform knowledge into graphs, helping models like ChatGPT process this data much more efficiently, even using Beethoven's music or a Kandinsky painting to discover new materials through isomorphisms.

All this comes on top of the release of several giant datasets like The Well, which provide researchers with insane amounts of new data to take AI models to new heights.

All in all, our capacity to process vast amounts of data and find new hidden patterns introduces a new, superhuman way of discovery: inductive scientific discovery (finding new laws based on big data). It stands in contrast to the centuries-old deductive scientific discovery process (experimental scientific discovery, from hypothesized laws to proof based on small data, which takes much longer and requires genius intuition from a select few). In 2025, the inductive approach is finally within our reach.

Scientific discovery makes industries much more efficient and enables humanity’s progress. The incentives and the practicality of this disruption are finally aligned for 2025, so we will see our first truly remarkable AI discovery, even leading to new Nobel Prizes. Whether in physics, biochemistry, or healthcare, it’s coming.

Moving on, we must let the genie out of the bottle: the most powerful AI models are not available right now, but they soon will be.

The First LLM + Search Model at Scale

LRMs were in the spotlight in the last quarter of 2024. Among the many presented LRMs were models like OpenAI’s o family, DeepSeek’s DeepSeek v3, Alibaba’s QwQ, and Gemini 2.0 Flash Thinking, all considerably better than anything we had seen before.

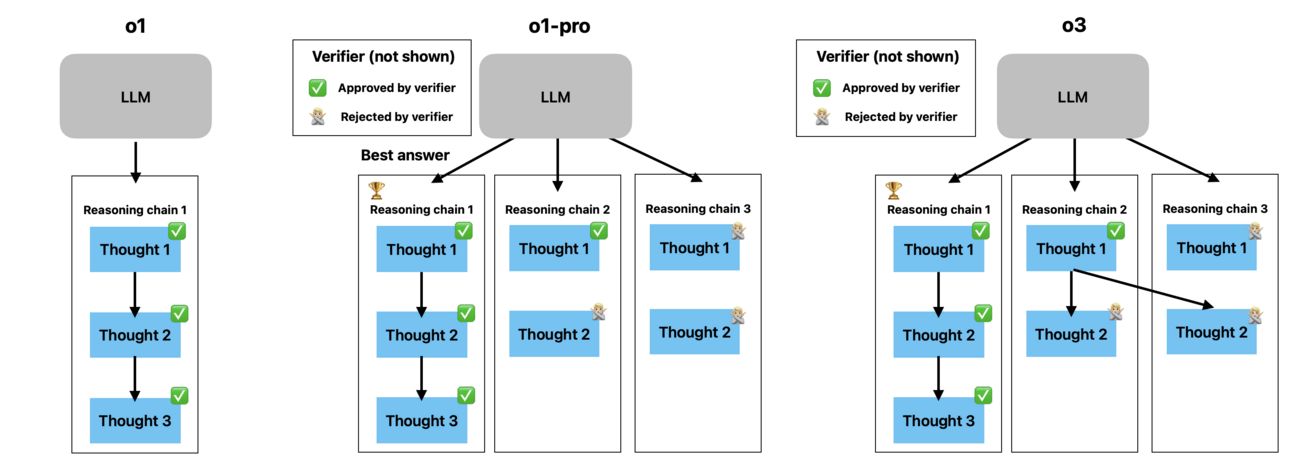

These models are LLMs that think for longer by approaching problem-solving as a multi-step process, generating multiple concatenated ‘thoughts’ that allow the model to take its time to solve the problem and, importantly, reflect on previous thoughts and correct itself.

However, these presentations have mostly been announcements, not releases (made, if I may, for bragging purposes). And the models that have indeed been released, like OpenAI o1 or Gemini 2.0 Flash Thinking, are distilled versions of the real deal: the LLM+Search models.

Distillation is the technique that takes a stronger model (teacher) and uses an imitation learning objective to train a smaller, weaker model (student).

In layman’s terms, we train smaller models on data generated by larger models, which makes them ‘Pareto optimized’: you get 80% of the performance for 20% of the price (illustrative numbers). However, distillation implies that we are leaving performance on the table.
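To make the teacher/student idea concrete, here is a minimal sketch of one common distillation objective: the student is penalized by the KL divergence between its next-token distribution and the teacher's, both softened with a temperature. This is an illustration under my own assumptions, not the recipe any lab has disclosed; the function names, the temperature, and the four-token logits are all hypothetical.

```python
import math

def softmax(logits, temperature=1.0):
    """Turn raw scores into a probability distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) over temperature-softened distributions."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Hypothetical logits over a tiny 4-token vocabulary.
teacher = [4.0, 1.0, 0.5, 0.1]
close_student = [3.5, 1.2, 0.4, 0.2]  # imitates the teacher well
far_student = [0.1, 0.5, 1.0, 4.0]    # prefers the wrong token

loss_close = distillation_loss(teacher, close_student)
loss_far = distillation_loss(teacher, far_student)
print(f"close student loss: {loss_close:.4f}")
print(f"far student loss:   {loss_far:.4f}")
```

Training pushes this loss down, so the student ends up imitating the teacher's behavior with far fewer parameters. The simpler variant described above, fine-tuning the student directly on text the teacher generated, follows the same imitation logic.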

In a way, it’s a trick to continue progressing steadily while acknowledging something better is coming.

In practical terms, currently-released LRMs are LLMs that meet the aforementioned definition of an LRM but with a limiting twist: no search.

As we’ve commented several times, part of solving a complex problem involves searching the space of solutions; you can’t expect to choose the correct solving path on the first try every time you tackle a problem. Most of the time, you must iterate and explore to find the correct solving path.

Therefore, the natural evolution of our current LRMs is models like o3, which tackle problems with the same multi-step, “reasoned” approach but combine it with search algorithms like MCTS (Monte Carlo Tree Search) that allow for this exploration (for more information on how this works, read here).

To give you an idea, not even the $200/month o1-pro meets these criteria. It performs majority sampling, which involves generating several independent attempts at the problem and choosing the best one. However, it’s not actually searching; it's increasing the likelihood of getting the right response by trying several times.
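The difference between sampling several times and actually searching is easier to see in code. Below is a minimal sketch of majority sampling in which a hypothetical stand-in for the model answers correctly 40% of the time. How o1-pro works internally is not public, so `sample_answer`, the probabilities, and the answer strings are assumptions made purely for illustration.

```python
import random
from collections import Counter

def sample_answer(rng, p_correct=0.4):
    """Hypothetical stand-in for one independent model attempt."""
    if rng.random() < p_correct:
        return "42"  # the correct answer in this toy setup
    return rng.choice(["41", "43", "44"])  # scattered wrong answers

def majority_vote(rng, n_samples):
    """Sample n independent attempts and return the most frequent answer."""
    answers = [sample_answer(rng) for _ in range(n_samples)]
    return Counter(answers).most_common(1)[0][0]

def accuracy(n_samples, trials=2000, seed=0):
    """Fraction of trials in which the voted answer is correct."""
    rng = random.Random(seed)
    hits = sum(majority_vote(rng, n_samples) == "42" for _ in range(trials))
    return hits / trials

single = accuracy(1)   # one attempt per problem
voted = accuracy(25)   # 25 attempts, then a vote
print(f"single attempt: {single:.2f}, majority of 25: {voted:.2f}")
```

Note that no attempt ever sees another attempt's partial work: each one runs start to finish on its own, and only the final answers are compared. A search method like MCTS would instead evaluate intermediate steps and decide which partial solutions deserve more exploration.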

Three types of LRMs

In 2025, models like o3 and their counterparts from other labs will be released, which could revolutionize the work of millions of knowledge workers.

While I expect this release to come closer to the end of the year, since the economics of running these models are terrible and will require more advanced GPUs like NVIDIA’s Blackwell (which improves inference performance up to 30 times), plus more HBM (high-bandwidth memory) packed into our GPUs, I do believe that the hype is too high for these ‘LLM+search’ models not to be released in 2025.

Improved inference will be key to LRMs. Source: NVIDIA (Blackwell vs Hopper GPU comparison)

However, I expect them to be insanely expensive. I wouldn’t be surprised to see a $2,000/month ChatGPT subscription by next year.

Interestingly, LRMs are not larger than traditional LLMs but smaller, which makes them easier to store. The issue is the model’s cache (KV Cache), which explodes in size (more on that later). This is no small problem with very long sequences, which will become the norm in text and especially with video models (autoregressive like Genie and one-prediction video like Sora).

For reference, take a one-million-token sequence on a BF16-precision model like Meta’s Llama 3.1 405B with no attention efficiency method like GQA (although GQA is the standard today). It would require a KV Cache of, brace yourself, 8.2 terabytes, or 8,257 GB. That would take 13 state-of-the-art DGX H100 servers (a purchasing price point of $2.5 million) to process just one sequence. If you care to understand how I got that number, read here (only Premium members).
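As a rough check, the figure can be reproduced with the standard KV Cache size formula: two tensors (keys and values) per layer, per KV head, per token. The sketch below assumes Llama 3.1 405B's published configuration (126 layers, 128 attention heads of dimension 128, 8 KV heads under GQA) and 8 GPUs with 80 GB of HBM each per DGX H100 server. These inputs are my assumptions; they happen to match the number above, but the original derivation may differ.

```python
import math

def kv_cache_bytes(seq_len, n_layers, n_kv_heads, head_dim, bytes_per_value=2):
    """Keys and values (the leading 2) for every layer, KV head and token."""
    return 2 * n_layers * n_kv_heads * head_dim * bytes_per_value * seq_len

# Assumed Llama 3.1 405B configuration; BF16 means 2 bytes per value.
SEQ_LEN = 1_000_000
N_LAYERS = 126
N_HEADS = 128        # without GQA, every attention head keeps its own K/V
N_KV_HEADS_GQA = 8   # with GQA, 128 query heads share 8 K/V heads
HEAD_DIM = 128

no_gqa = kv_cache_bytes(SEQ_LEN, N_LAYERS, N_HEADS, HEAD_DIM)
with_gqa = kv_cache_bytes(SEQ_LEN, N_LAYERS, N_KV_HEADS_GQA, HEAD_DIM)

# Assumed DGX H100 memory: 8 GPUs x 80 GB of HBM each.
DGX_H100_HBM_BYTES = 8 * 80 * 10**9
servers = math.ceil(no_gqa / DGX_H100_HBM_BYTES)

print(f"no GQA:   {no_gqa / 1e9:,.1f} GB")    # 8,257.5 GB
print(f"with GQA: {with_gqa / 1e9:,.1f} GB")  # 516.1 GB
print(f"DGX H100 servers for the no-GQA cache: {servers}")  # 13
```

Under these assumptions, GQA cuts the cache by a factor of 16, which is why it is the standard today. The server count covers only the cache and ignores the memory needed for the model weights themselves.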

In this new paradigm, the memory constraints become even more daunting.

The need to run these models at scale by next year leads us to the next prediction: intelligence efficiency.

The Emergence of Intelligence Efficiency

When I say OpenAI will release o3, I’m not saying they are releasing the version we saw. The AI Frontier Lab textbook procedure is very clear: They publish frontier research to maintain the hype, and later, they figure out how to deploy the beast.

With examples like distillation, we see how AI labs are working hard to compress intelligence into smaller portions that users can consume.

However, increasing intelligence per unit of compute (making cheaper-to-run models more intelligent) will increase in importance as investors pressure these companies to monetize their research.

It’s not a coincidence that, lately, we have seen a growing number of Pareto graphs with model releases, in which models are compared based on their “intelligence” and the cost of serving them.

Below, we can see that DeepSeek is basically on par (performance-wise) with Claude 3.5 Sonnet, but its average price is considerably lower, making it a better overall model.

DeepSeek v3’s Pareto superiority to other frontier models

This is what I call intelligence efficiency, the idea that intelligence cannot come at any cost; it must be economically profitable to serve.
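The Pareto comparison behind this idea can be sketched in a few lines: a model sits on the frontier if no other model is both at least as cheap and at least as capable, and strictly better on one of the two. The model names, prices, and scores below are hypothetical placeholders, not real benchmark data.

```python
def pareto_frontier(models):
    """Return the names of models not dominated on (lower cost, higher score)."""
    frontier = []
    for name, cost, score in models:
        dominated = any(
            c <= cost and s >= score and (c < cost or s > score)
            for other, c, s in models
            if other != name
        )
        if not dominated:
            frontier.append(name)
    return frontier

# Hypothetical (name, price per million tokens in dollars, benchmark score).
models = [
    ("model_a", 15.0, 88.0),  # most capable, most expensive
    ("model_b", 1.0, 87.5),   # nearly as capable, far cheaper
    ("model_c", 3.0, 80.0),   # pricier and weaker than model_b
    ("model_d", 0.2, 70.0),   # cheapest of all
]

frontier = pareto_frontier(models)
print(frontier)  # ['model_a', 'model_b', 'model_d']
```

In this toy data, `model_c` drops out because `model_b` beats it on both axes, which is the sense in which a cheaper model with on-par performance is the better overall choice.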

For instance, we have long commented on o3’s impressive results, but they are unfeasible to monetize as running the model can cost millions of dollars on just one benchmark; simply put, power users of the technology could spend hundreds of thousands of dollars per month on these models if they were released today.

Solving inference is so important that it even led Google to pay billions in a licensing deal with Character.ai that brought Noam Shazeer back. Yes, it wasn’t the love chatbots that got Google to pay, but the fact that this company had found a way to run these models cheaply.

Therefore, as commented in the o3 review, the industry needs to transition from raw intelligence to ‘deployable intelligence,’ models that, while still very intelligent, don’t bankrupt users or the company serving them.

For this reason, I believe that in 2025, most intelligence discussions will focus on whether this intelligence is practical, not only based on how many percentage points better your model got in {insert benchmark}.

2025 is the year of intelligence efficiency.

Markets and Geopolitics

Moving on to markets, we must discuss the stock market and overall spending.

Stocks Should Continue to Rally

As long as the AI hype endures, Big Tech companies (which are also massively cutting spending while increasing revenues) will continue to thrive.

This means the entire market will rally because of their weight in the index. The Magnificent Seven (Apple, NVIDIA, Microsoft, Amazon, Meta, Tesla, and Google) account for almost one-third of the S&P500 (31%).

Notably, all their valuations are intrinsically linked to AI; in cases like Microsoft, one could very well say that their investment in OpenAI alone has added one trillion dollars of market capitalization, even though the AI efforts of these companies, NVIDIA excepted, have not been met with actual AI-direct revenue growth.

However, the announcement of o3 has renewed optimism that LRMs are the path to AGI. Thus, the industry's hype is as high as ever, which implies that AI spending will only accelerate.

The Year of the 1 GW Data Center

In 2024, four companies (Amazon, Google, Microsoft, and Meta) spent around $200 billion directly on AI, mainly on buying land and AI accelerators to build huge data centers to train and serve these gigantic models.

With the advent of LRMs in 2025, there will be many reasons to increase AI spending. Nobody will justify spending on ‘just LLMs,’ but the promises made by o3 and other LRMs tell us that markets are more than willing to sustain huge CAPEX expenditures if they lead to models like o3 or even more advanced ones.

Importantly, as mentioned earlier, LRMs are much more expensive to serve than LLMs. It is estimated that o1 models generate up to twenty times more tokens per response than LLMs.

That means several things:

Larger KV Cache (memory). As commented, the temporary memory stored for every text sequence these models complete grows twentyfold if sequences grow to those sizes (the model’s cache has O(L) complexity, where ‘L’ is sequence length).

More complex distributed settings. With LLMs, one user equals one sequence. With LRMs, that’s not the case anymore: a single user task can spawn many sequences and saturate an entire cluster with its KV Cache, making infrastructure management a nightmare. Scaling LRMs is a hard, unsolved problem.

LRMs also become essential tools for training other models, increasing spending. Notably, while LLMs have insane amounts of ‘free’ data available, most high-quality LRM data has to be built from scratch, which drives expenditures up sharply.

Therefore, due to the immense compute demands, we should expect our first 1 GigaWatt data center in 2025, which would consume as much energy as the city of San Francisco.

But while 2025 could be an excellent year for some industries… it could also be cataclysmically bad for others we’ll cover below. And it’s a crucial year for the US too, which could lose its place as the dominant force in AI to China, leading it to take unprecedented measures in the escalating AI Cold War.
