THEWHITEBOX

TL;DR

Welcome back! In this insights rundown, we examine Apple’s and Anthropic’s new AI plans, CoreWeave's troubled public journey, and a personal reflection on the lameness of modern AI startups, among other insights.

But more importantly, we’ll focus on AlphaEvolve, the most exciting AI release not only in 2025, but maybe in years?

THEWHITEBOX

Things You’ve Missed By Not Being Premium

On Tuesday, we looked at the fast progress we are seeing in humanoid robotics, concerning ‘bubbly’ trends we are starting to see in the data center business, the rapid fall into obsolescence of the hottest AI job, and the first great defeat of an AI early adopter, among other news.

FRONTIER MODELS

Anthropic’s Upcoming Models Will “Think” A Lot

According to an exclusive by The Information, Anthropic is nearing the release of two models, new versions of Opus and Sonnet, to try to regain the lead it once held in the LLM arena.

According to the source, the key change is that, during inference, the models will be able to backtrack and retry far more than current models are allowed to.

For example, if the model gets stuck with a tool failure (it tries to use a tool, but the tool returns an error), it will reflect on the error and try to self-correct.

TheWhiteBox’s takeaway:

This exclusive article could have been a simple one-liner: Anthropic plans to release new models with much higher inference-time compute thresholds. Recall that ‘reasoning models’ are just LLMs that:

Think for longer on a task

Approach problem-solving using multi-step thinking

Basically, if you’ll excuse my language, Anthropic's new models will be leveraging the former to the tits. This is just more proof that modern progress comes from naive compute increases, aka throwing all the compute we can at the same models that have existed for almost eight years.

There’s only one rule in AI right now: Want better model → Compute budget goes up → Model gets better.

While this is clearly beneficial and indicative of progress, I worry that this lack of real innovation will become a problem once these models run into the same ‘wall’ that the previous scaling law did (want better model → Train larger model → Results get better).

This release is probably caused by pressure from investors to deploy something fast, as Anthropic’s product shipping rate has fallen off a cliff compared to competing labs like OpenAI or Google, to the point of public embarrassment.

AI WRAPPERS

In AI, Ideas Are Worth Zero.

Are AI application startups, or dare I say AI wrappers, converging into the same company? That thought has been circling my mind recently based on how two ‘AI native’ companies, Granola and Notion, are basically merging into the same company.

Almost simultaneously, Granola launched a one-place wiki eerily similar to Notion… the day after Notion launched an AI meeting transcriber (which is basically what Granola is).

This is just a small example, but I can’t help but realize that all AI startups look alike; they are all simply copying each other.

OpenAI is building a chat/coding IDE (acquired Windsurf)/Google competitor.

Anthropic, Google, and xAI are building that same thing, too.

Anthropic led the way with Claude Code for terminal-based coding agents, which OpenAI followed with Codex CLI.

Google pioneered Deep Research, and OpenAI, Anthropic, and xAI soon followed.

All robotics companies are building the exact same thing: humanoids.

But the application layer takes this ‘let’s all copy each other’ to the next level.

The Browser Company, Perplexity, and Google are all building the same product. Identical.

Not only does Cursor have Windsurf, Cline, Zed, and even Microsoft itself with Copilot as competitors, but also Google with Gemini Code Assist, and we’ll soon also have Amazon joining the fray.

But Cursor also inspired the birth of products like Bolt, Lovable, or Replit Agent, which take app coding to the next level by having the AI do almost everything (leading to the ‘vibe coding’ craze).

This has also incentivized threatened incumbents to enter the same automated app-building space.

Figma with Figma Make

Canva with Canva AI

Wix with Website Builder…

And here’s the thing. While I have listed many companies, I’ve literally described, at most, three truly different products (a chatbot, a search engine, and an app builder); the entire industry is just three big ideas being built over and over again.

In a nutshell, everybody is building the same damn thing while, hilariously, they are all reaping ungodly valuations, having lured VCs into their realm thanks to breakneck initial sales growth.

The truth? It’s all coming down sooner or later. I don’t believe that most of these companies will ever grow into their valuations.

Is AI in a clear bubble? We’ll soon answer that.

BCI

Apple’s BCI Play

According to the Wall Street Journal, Apple is considering using Brain-Computer Interfaces to allow users to control their devices through their thoughts.

The idea would be similar to Neuralink’s BCI chips, allowing people with severe disabilities to move cursors on a screen.

TheWhiteBox’s takeaway:

You may wonder what this has to do with AI. Well, everything, because BCIs are nothing more than AI models that, unlike ChatGPT, which predicts the next word based on the previous ones, predict the next cursor movement based on brain patterns.

Think of it this way: the model is basically picking up patterns in brain signals, to the point it can predict things like ‘based on the current brain signal, it seems the human wants to move the cursor slightly to the left.’

From the business perspective, I don’t know what to think of Apple anymore. They have lots of ideas but zero execution. They are already heavily fumbling the Generative AI business, and instead of getting their act together, they seem to be gaslighting everyone with grandiose visions of ‘mind-reading devices.’

The fact that this company is trading at a price-to-earnings ratio of 33 with stagnant growth and zero new growth segments baffles me; the only place they are truly innovating right now is on desktop computers, where they are undeniably the best around. But that’s basically it.

AI & BLOCKCHAIN

The First Fully Decentralized RL Model

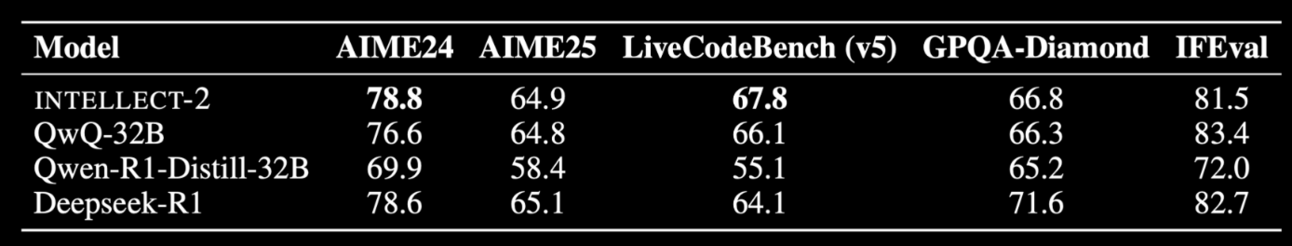

Prime Intellect has done it again. The GPU cloud company, which has become a pioneer in training models in a decentralized manner, has achieved a significant milestone: post-training a reasoning AI model, INTELLECT-2, that matches the state-of-the-art performance at its size.

The first decentrally trained SOTA model. But what do we mean by ‘decentralized’?

Instead of one company consolidating all compute into a single location or a few of them, people worldwide were allowed to join and collaborate. As the three musketeers would say, “All for one, and one for all.” Training a model in such a way, with poorly connected GPU clusters, is a highly challenging engineering problem, so the achievement is remarkable.

TheWhiteBox’s takeaway:

In case you’re wondering, this is blockchain’s vital play in AI: pushing forward mechanisms that allow people to collaborate on (and earn money from) training and serving AI models.

In fact, although they didn’t call it a blockchain, Prime Intellect did use a decentralized ledger to manage how each cluster connected to and disconnected from the training run, and also what workload each GPU worker received.

This is an engineering marvel; there’s no other way to describe it. But what would blockchain’s role be here?

Blockchains offer a decentralized, tamper-proof way of attributing workloads, so they act as guarantors of who does what. Via the blockchain, people participating with data or compute in models that are then used by others would receive payments with the cryptocurrency associated with that blockchain.

Let me be clear that blockchains aren’t inherently necessary for this to work; it’s just that, without a blockchain, the ledger would still be under the control of a handful of centralized owners, who could tamper with it if they wanted to.
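To make the 'tamper-proof attribution' idea a bit more tangible, here is a minimal sketch of a hash-chained ledger. It is not Prime Intellect's ledger or any real blockchain: the record fields (`worker`, `task`) and the function names are hypothetical, and it runs on a single machine, so it only illustrates why edits to past entries become detectable, not how decentralization or payments work.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash that precedes the first entry


def entry_hash(prev_hash: str, record: dict) -> str:
    """Hash a record together with the hash of the entry before it."""
    payload = json.dumps({"prev": prev_hash, "record": record}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append(ledger: list, record: dict) -> None:
    """Add a record, chaining it to the previous entry's hash."""
    prev_hash = ledger[-1]["hash"] if ledger else GENESIS
    ledger.append(
        {"record": record, "prev": prev_hash, "hash": entry_hash(prev_hash, record)}
    )


def is_valid(ledger: list) -> bool:
    """Recompute every hash; any edited past record breaks the chain."""
    prev_hash = GENESIS
    for entry in ledger:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != entry_hash(prev_hash, entry["record"]):
            return False
        prev_hash = entry["hash"]
    return True


# Hypothetical workload assignments, purely for illustration.
ledger = []
append(ledger, {"worker": "gpu-a", "task": "batch-1"})
append(ledger, {"worker": "gpu-b", "task": "batch-2"})
print(is_valid(ledger))

# Rewriting who did the work invalidates the stored hashes.
ledger[0]["record"]["worker"] = "gpu-z"
print(is_valid(ledger))
```

In this toy version a single owner could still recompute every hash after tampering, which is exactly the centralization concern raised above; a real blockchain spreads copies of the ledger across many independent parties.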

VENTURE CAPITAL

Perplexity Closes Round at $14 Billion

Perplexity, the AI-powered search engine company that delivers summarized answers with linked sources, has finalized a $500 million funding round led by Accel, which would raise its valuation to $14 billion, over 50% higher than its $9 billion valuation in November 2024 (just 7 months ago).

Besides the search business, the company is also developing its own web browser, Comet, to further challenge industry giants like Google Chrome and Apple Safari.

This news comes at an interesting time, as the rise of generative AI tools has caused notable disruptions in the search market; Apple executive Eddy Cue recently noted the first decline in Google searches on Safari in two decades, attributing it to increased use of ChatGPT and Perplexity.

TheWhiteBox’s takeaway:

The article does not mention Perplexity’s ARR (Annual Recurring Revenue: the current month’s revenue times twelve, a projection of potential annual sales), but it’s estimated to be around $100 million (up from $5 million in just over a year, which is impressive).

If so, that sets a valuation multiple of 140. For comparison, that is:

six times larger than OpenAI’s latest multiple ($300 billion vs. $12 billion)

two times larger than Anthropic’s latest multiple ($61.5 billion vs. $1-ish billion)
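The arithmetic behind these comparisons can be checked with a short sketch. The figures are the ones quoted in this section, in billions of dollars; treating Anthropic's "$1-ish" revenue as exactly 1.0 is an assumption made only for illustration, as are the variable and function names.

```python
# (valuation, annualized revenue) in billions of USD, as quoted in the text.
# Anthropic's revenue is the text's "$1-ish" figure, assumed here to be 1.0.
companies = {
    "Perplexity": (14.0, 0.1),
    "OpenAI": (300.0, 12.0),
    "Anthropic": (61.5, 1.0),
}


def revenue_multiple(valuation: float, revenue: float) -> float:
    """Valuation divided by annualized revenue."""
    return valuation / revenue


multiples = {
    name: revenue_multiple(valuation, revenue)
    for name, (valuation, revenue) in companies.items()
}

for name, multiple in multiples.items():
    ratio = multiples["Perplexity"] / multiple
    print(f"{name}: {multiple:.1f}x revenue; Perplexity is at {ratio:.1f}x this multiple")
```

Under these inputs, the multiples come out to roughly 140x, 25x, and 61.5x, which puts Perplexity at about 5.6 times OpenAI's multiple and about 2.3 times Anthropic's.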

Maybe I’m not cut out for VC, but that valuation feels absurd for a company that has not yet proven to have a viable business. They do have sales, but they are all subscription-based, and it seems that the trend in the search industry is toward freemium services powered by ads (OpenAI’s latest hire, Fidji Simo, is a clear declaration of intent). Even Perplexity has toyed with the idea via revenue-sharing mechanisms.

Thus, I’m more confident about OpenAI and Google making that transition than Perplexity because this is starting to look more and more like a capital race.

PUBLIC MARKETS

CoreWeave Seals $4 Billion Contract with OpenAI

CoreWeave’s stock surged after news of a massive new contract with OpenAI, to be paid out through 2029. The jump helped it recover from the initial loss after it was made known that CapEx would be four times higher than expected in 2025.

The enormous gap between capital investments and revenues, currently widening to 4 or 5 to 1 (despite CoreWeave seeing 420% revenue growth), has consistently scared investors.

TheWhiteBox’s takeaway:

The recently IPOed data center building and management company has generated mixed feelings since its launch in March. CoreWeave’s extreme volatility serves as a prescient exemplar of how Wall Street feels about AI.

They think it’s the next big thing but aren’t ready to fully commit; when it comes to AI, investors are pretty much the guy with commitment issues.

While I’m bullish on CW, I share the fears that too much spending could backfire.

According to their own estimates, they expect up to $23 billion in CapEx for this year, an ungodly amount considering it’s not that far off from the CapEx values of the Hyperscalers (all between $70 and $100 billion per year).

However, the Hyperscalers have dozens of times more revenue (hundreds of billions versus a projection of 5-ish billion) and, unlike CoreWeave, are highly profitable; CoreWeave isn’t even breaking even and doesn’t expect to reach that point soon.

TREND OF THE WEEK

AlphaEvolve: AI’s Highlight of the Year

Yesterday, Google released AlphaEvolve, a fascinating new system that has, amongst many other discoveries, improved a solution to a well-known problem that has resisted humans for 60 years.

AlphaEvolve is special, an ‘it’ moment that could soon be compared to AlphaGo’s ‘Move 37’ or the discovery of the Transformer architecture that underpins modern AI.

Moreover, it’s also an AI system that forces us to accept what seems undeniable: using AI for discovery could be its most significant contribution and one that, ironically, may not need AI to be intelligent at all. Wait, what?

Let’s answer that and more today.

AIs for Scientific Discovery

Few people in the AI industry carry more weight than Demis Hassabis, Google DeepMind’s CEO. A Nobel laureate in Chemistry thanks to AlphaFold, an AI that predicts how proteins fold from their amino acid sequences, he always points to scientific discovery as AI’s most important use case.

Not conversational AI companions, not coding agents, but as a tool for discovery.

And AlphaEvolve is the latest product that meets this vision.

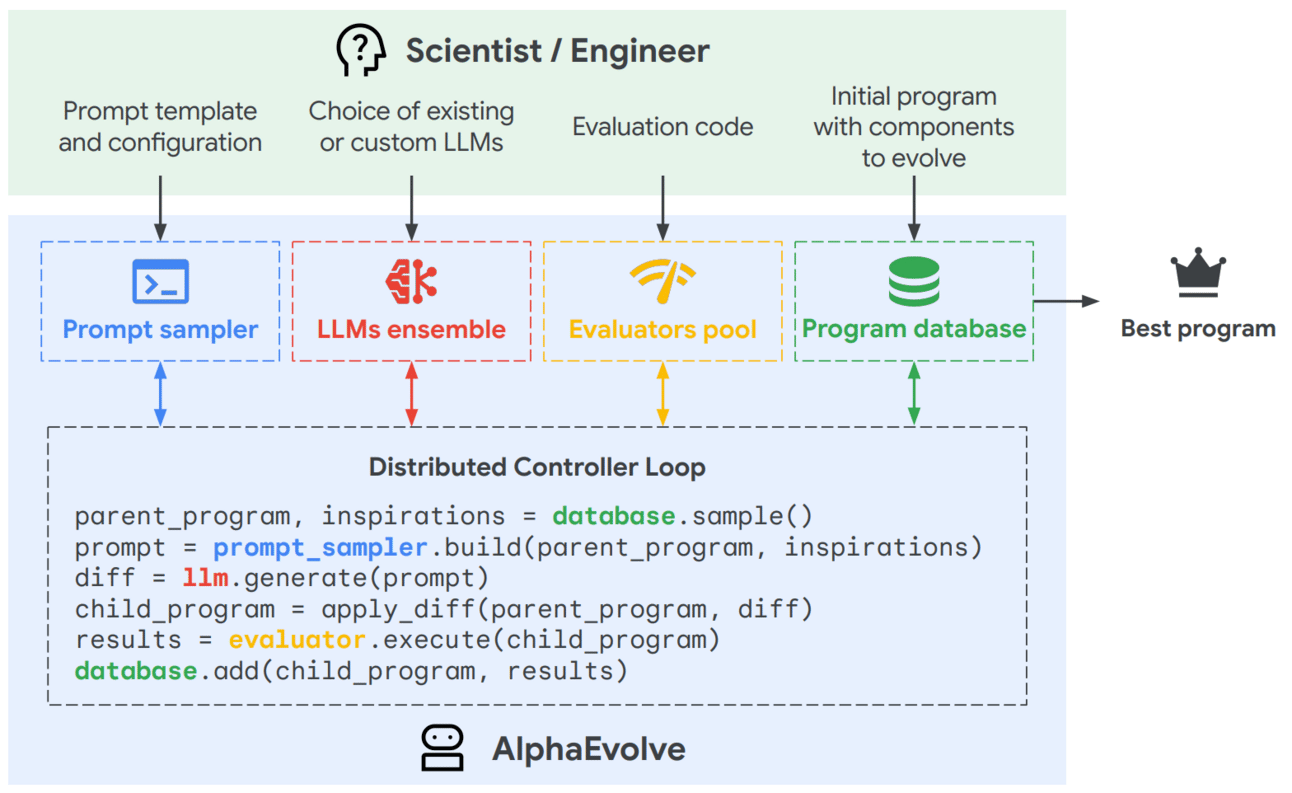

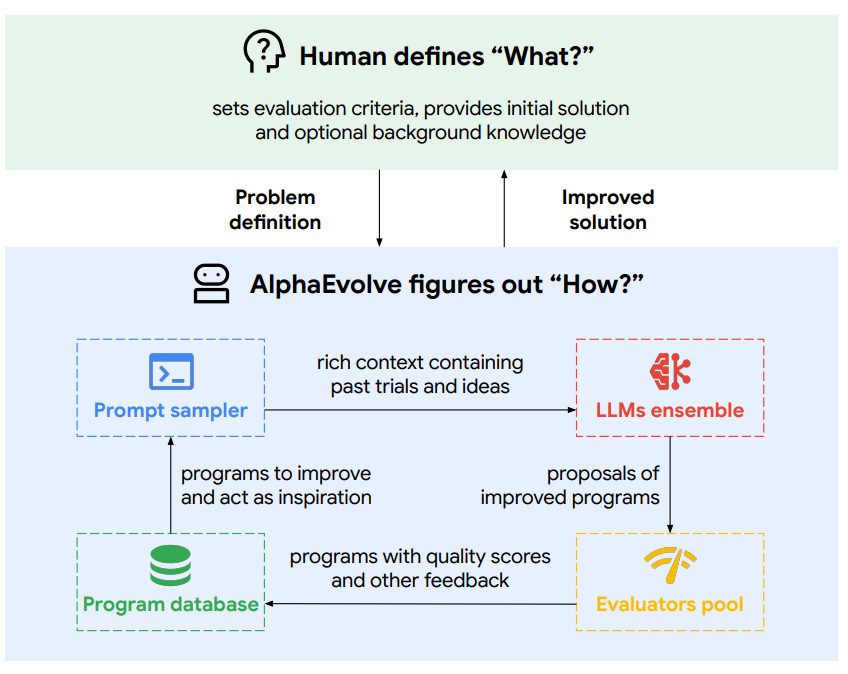

In succinct terms, AlphaEvolve is an iterative search system that uses Large Language Models (LLMs), like Gemini, to iterate over code to find new solutions to given open problems.

In layman’s terms, it’s an orchestrator system that takes in a human task and uses an LLM or set of LLMs to propose new changes to the code, evaluating the quality of the changes, and readapting, creating a recursive self-improving loop that has yielded incredible results.
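The propose, evaluate, and readapt loop described above can be sketched in a few lines. This is a toy illustration and not Google's implementation: `propose_change` is a hypothetical stand-in for the LLM (it nudges a number at random instead of rewriting code), `evaluate` is a made-up scoring function, and candidates are plain lists of numbers rather than programs.

```python
import random


def evaluate(candidate: list[float]) -> float:
    """Hypothetical automated evaluator: higher is better.

    The toy task is to get every value as close to 3.0 as possible.
    """
    return -sum((x - 3.0) ** 2 for x in candidate)


def propose_change(parent: list[float], rng: random.Random) -> list[float]:
    """Stand-in for the LLM: return an edited copy of an existing candidate.

    A real system would ask a model to rewrite code; this nudges one value.
    """
    child = list(parent)
    index = rng.randrange(len(child))
    child[index] += rng.gauss(0.0, 0.5)
    return child


def evolve(
    generations: int = 200, population_size: int = 8, seed: int = 0
) -> tuple[list[float], float]:
    """Run the loop: pick a candidate, propose a change, score, keep the best."""
    rng = random.Random(seed)
    population = [[0.0, 0.0, 0.0]]  # the initial, human-provided candidate
    for _ in range(generations):
        parent = rng.choice(population)
        population.append(propose_change(parent, rng))
        # Keep only the best-scoring candidates for the next round.
        population.sort(key=evaluate, reverse=True)
        population = population[:population_size]
    best = population[0]
    return best, evaluate(best)


best, score = evolve()
print(best, score)
```

The design point the sketch tries to capture is that the proposer never needs to know the answer; it only needs an automated evaluator that says whether a change made things better.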

To drive this home, let’s look at a real example. While introducing AlphaEvolve, I mentioned that it had found an improved solution to an open problem that had remained the same for almost 60 years (56, to be exact).

This open problem concerns matrix multiplication, or finding new ways to perform matrix multiplications as fast as possible with the fewest computations.

Until AlphaEvolve, that crown belonged to the Strassen algorithm, a method developed back in 1969 that performs matrix multiplications with fewer computations than the naive approach (the approach you would use to multiply two matrices by hand), reducing the number of multiplications for 4×4 matrices from 64 to 49 using clever recursive tricks.

Now, AlphaEvolve has decreased that number to 48 for 4×4 complex-valued matrices, the first time in 56 years that a new algorithm has improved over Strassen’s.
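To make the 64 versus 49 count concrete, here is a minimal sketch that multiplies two 4×4 matrices with the naive method and with the classic Strassen recursion, counting scalar multiplications in each. It illustrates Strassen's 1969 method only, not AlphaEvolve's 48-multiplication algorithm; the helper names and the example matrices are arbitrary choices.

```python
def naive_matmul(A, B, counter):
    """Schoolbook multiplication: n^3 scalar multiplications."""
    n = len(A)
    C = [[0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            for k in range(n):
                C[i][j] += A[i][k] * B[k][j]
                counter[0] += 1
    return C


def add(X, Y):
    return [[a + b for a, b in zip(r, s)] for r, s in zip(X, Y)]


def sub(X, Y):
    return [[a - b for a, b in zip(r, s)] for r, s in zip(X, Y)]


def split(M):
    """Split a square matrix into four equally sized quadrants."""
    h = len(M) // 2
    return (
        [row[:h] for row in M[:h]],
        [row[h:] for row in M[:h]],
        [row[:h] for row in M[h:]],
        [row[h:] for row in M[h:]],
    )


def strassen(A, B, counter):
    """Strassen's recursion for n x n matrices, n a power of two."""
    if len(A) == 1:
        counter[0] += 1
        return [[A[0][0] * B[0][0]]]
    a11, a12, a21, a22 = split(A)
    b11, b12, b21, b22 = split(B)
    # Seven recursive products instead of the eight a block method needs.
    m1 = strassen(add(a11, a22), add(b11, b22), counter)
    m2 = strassen(add(a21, a22), b11, counter)
    m3 = strassen(a11, sub(b12, b22), counter)
    m4 = strassen(a22, sub(b21, b11), counter)
    m5 = strassen(add(a11, a12), b22, counter)
    m6 = strassen(sub(a21, a11), add(b11, b12), counter)
    m7 = strassen(sub(a12, a22), add(b21, b22), counter)
    c11 = add(sub(add(m1, m4), m5), m7)
    c12 = add(m3, m5)
    c21 = add(m2, m4)
    c22 = add(add(sub(m1, m2), m3), m6)
    top = [r + s for r, s in zip(c11, c12)]
    bottom = [r + s for r, s in zip(c21, c22)]
    return top + bottom


A = [[4 * i + j for j in range(4)] for i in range(4)]
B = [[(i + 2 * j) % 5 for j in range(4)] for i in range(4)]

naive_count, strassen_count = [0], [0]
C_naive = naive_matmul(A, B, naive_count)
C_strassen = strassen(A, B, strassen_count)
print(naive_count[0], strassen_count[0], C_naive == C_strassen)
```

The counts follow directly from the structure: the naive method needs 4 × 4 × 4 = 64 multiplications, while Strassen needs 7 products of 2×2 blocks, each costing 7 scalar multiplications, for 7 × 7 = 49.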

Unless you’re a mathematics geek, you’re probably thinking, "So what?” I wouldn’t blame you. But in case you don’t know, matrix multiplications are EVERYWHERE around you. More importantly, they are the essential mathematical operation in Generative AI.

In fact, one could confidently say that ChatGPT is just a bunch of matrix multiplications, and they would be right.

Thus, speeding up matrix multiplication is a deeply researched problem that has now been improved thanks to AlphaEvolve, an AI system. Simply put, AI has gone beyond human capacities once again.

And, once again, as on previous occasions of ‘superhuman AI,’ Google is behind it.

And to think this company is trading at the cheapest earnings multiple out of all Big Tech companies!

But AlphaEvolve’s contributions aren’t limited to speeding up matrix multiplications.

AlphaEvolve was exposed to 50 known open problems in mathematics, each with a best-known construction (an example with specific properties that is considered optimal for that problem, like Strassen’s algorithm’s 49 multiplications).

And the results were impressive:

In 75% of the problems, AlphaEvolve reached at least the previously considered optimal result, matching the best performance to date.

In 20%, AlphaEvolve defined a new state-of-the-art solution for the problem.

For instance, Google used AlphaEvolve to improve AI modeling by improving how matrices are broken down into smaller chunks, known as tiles, to perform matrix multiplications faster, resulting in a 23% speedup, huge for their own AI training.
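As a rough picture of what 'breaking matrices into tiles' means, here is a minimal pure-Python sketch of tiled matrix multiplication. It is not Google's kernel: the function names and the `tile` parameter are illustrative assumptions, and in Python the tiling brings no speedup. On real accelerators, the tile size is one of the knobs that determines how well each chunk fits into fast memory.

```python
def matmul_reference(A, B):
    """Plain row-by-column multiplication, used as a correctness check."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def matmul_tiled(A, B, tile=2):
    """Multiply A (n x k) by B (k x m), processing tile-sized blocks at a time."""
    n, k, m = len(A), len(B), len(B[0])
    C = [[0] * m for _ in range(n)]
    for i0 in range(0, n, tile):              # block of output rows
        for j0 in range(0, m, tile):          # block of output columns
            for k0 in range(0, k, tile):      # block of the shared dimension
                for i in range(i0, min(i0 + tile, n)):
                    for j in range(j0, min(j0 + tile, m)):
                        acc = C[i][j]
                        for kk in range(k0, min(k0 + tile, k)):
                            acc += A[i][kk] * B[kk][j]
                        C[i][j] = acc
    return C


A = [[i + j for j in range(5)] for i in range(3)]       # 3 x 5
B = [[i * j + 1 for j in range(4)] for i in range(5)]   # 5 x 4

# Every tile size gives the same answer; only the order of the work changes.
for tile in (1, 2, 3, 8):
    assert matmul_tiled(A, B, tile) == matmul_reference(A, B)
print(matmul_tiled(A, B, tile=2))
```

The result is identical for any tile size, which is why choosing it is a pure optimization problem: there is a clear score to improve (runtime) and a large space of configurations to search.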

All this is just undeniable evidence that, once again, AI can be used for discovery, or as Sam Altman would put it, “pushing the veil of ignorance back and the frontier of discovery forward.”

But to me, AlphaEvolve is much more than increased kernel speedups and reduced matrix multiplications. It has made me rethink what we’re building with AI.

You Don’t Have to ‘Understand’ to ‘Discover’

When we try to define how AI should behave to consider it ‘AGI’ or ‘superhuman,’ we always anchor on human intelligence; we are automatically led to believe that AIs should mimic human intelligence to be intelligent.

But do they have to?

Demis Hassabis, a neuroscientist by training, has been challenging this view for a while, and AlphaEvolve has finally helped me understand what he was implying.

To understand this, we need to acknowledge AI’s limitations. AIs cannot reason about what they don’t know and can’t propose things they haven’t seen. This is unequivocally true.

But if they don’t know what they don’t know, how on Earth can they discover a single thing?

Simple: by leveraging a clever trick, search. Instead of fighting that limitation, its creators focused on what AIs can do: they don’t know what they don’t know, but they know how to search for it.

In the researchers' words, AlphaEvolve's key innovation is evolving the heuristic search code from which the discoveries emerge instead of evolving the discovery itself.

But what does that esoteric phrase even mean?

Imagine a chef who wants to invent the lowest-calorie recipe.

Instead of inventing meals directly (constrained by prior knowledge), the chef builds a system with a few heuristics, such as "every meal must have protein, carbs, and healthy fats.” This automated system tries different ingredient combos, tests the result, sees if the calories drop, and then tweaks the search system to see if it can lower the value more.

Over time, the system learns things like: “reducing fats tends to lower calories.” So, the generator updates itself to favor those options for the next run.

Eventually, it converges on a recipe that not only the chef but no human on Earth has ever devised. And importantly, not even the AI knew what would come out!

AlphaEvolve is that system. But for math and science.

That's the key takeaway: Even if the AI has no idea what that recipe will be, it can optimize the algorithm that finds it.

In other words, the LLMs in AlphaEvolve aren’t proposing the exact new solution (again, they can’t do that because it’s certainly not in their training data) but iteratively refining the code that searches for the solution.

It’s an unbelievably smart trick:

We know the LLM cannot devise a novel solution, but it can tune the search algorithm that finds it.

It doesn’t understand the solution, but it can discover it.

Put in even simpler words:

The LLM doesn’t have the answer, but it knows how to write code that might find it.

Do We Need Real Intelligence?

And this is the critical intuition I want you to take from all of this: we have been obsessed with making machines as smart as us: adaptable, sequential multi-step reasoners (like o3) and first-principles thinkers.

And still, we have yet to see a single instance in AI history where an AI generalizes out of its distribution. In other words, every single AI model prediction in history has been entirely based on what the AI has already seen.

More formally, every single ‘new thing’ an AI does is a compositional interpolation between “known knowns”. I always give the example of Shakespeare’s iPhone poem. AIs can write such a poem even if it doesn’t exist in their training data simply by combining their knowledge of Shakespearean poetry and their knowledge of iPhones.

But can they create an entirely new form of poetry or design an entirely new hardware product? No.

Put simply, they don’t work if they don’t know what to do.

But here’s the thing. What if… we don’t need AIs to achieve that? What if we just need them to search?

Search? Search what?

When humans don’t know what to do, we search for solutions. Unlike AIs, we can interact with the environment and adapt on the fly, learning from each new experience as it happens. This is the basic principle of human intelligence.

But we don’t search randomly. We have intuition (what society calls common sense), which is nothing but identifying patterns ‘on the fly’ that narrow the search down.

But what do we mean by intuition-guided search?

Suppose you lose your car keys at home. Instead of flipping over every object randomly (brute-force), you start by searching your jacket pocket, the kitchen counter, or the entrance table.

Why?

Because your intuition (pattern recognition shaped by experience) tells you those are the most likely spots. This is human search: constrained, informed, and efficient because we can’t afford to search everything.

For humans, choosing high-quality solutions is crucial because we are severely energy-constrained. We need to think fast and be quick on our feet. This came out of pure necessity during evolution (i.e., our ancestors ran into lions and had to be quick on their feet to avoid death).

Therefore, what Google has essentially proven is that AI does not need to understand or know something to discover it.

It's a 'non-human' path to scientific discovery. And it works.

But why is it ‘non-human’?

As mentioned, humans are energy-constrained, have only one brain, and have serial thought processing, severely limiting our ability to search for many solutions. So, we evolved to be pretty damn smart about how we choose candidates.

AI is not limited by this, so the goal isn't about creating AIs with human-level intuition, but more about 'scalable' search. That is, finding a 'good-enough' search system and throwing a lot of compute into it.

AlphaEvolve is a beautiful illustration of this: it probably doesn’t even understand most of the discoveries it has made, but it still makes them!

The takeaway?

AIs don’t need to understand to discover; they don’t need to be ‘intelligent’ to make ‘intelligent discoveries.’

Put in more doomerish terms, the collective dumb intelligence of AI could surpass the smarter-yet-more-constrained human intelligence by brute force.

I don’t know whether we have to call this “a new type of intelligence”, but I now finally understand why some researchers reject the pursuit of human-level intelligence; that may be a lost cause (or not), but the point is that we don’t need human-level intelligence for AI to change the world.

Whether that scares you or not is another story for another day.

Until next time!
