FUTURE

Thoughts on o3 and o4-mini

As you’ve probably heard or seen by now, OpenAI has released its new flagship reasoning models, o3 and o4-mini, which are heralded by some as a huge leap in model intelligence. Some, like famous economist Tyler Cowen, claim the arrival of AGI.

Thus, today, I’m going to give you everything that is known about these models, both my own findings and those of the community. There have been two totally different reactions:

Those that claim OpenAI has built the closest thing we have to AGI, or even AGI,

Surprisingly (or not), those who claim these models prove that OpenAI is no longer in the lead.

Two wildly different reactions, right? But which one is correct?

Today, we are going beyond the hype to understand this release from a more holistic view, enough for you to make up your own mind about what to make of all this. Mainly, we will cover:

A clear description of reasoning models, to understand why they are now dictating how hardware is built (and not the other way around), which helps us comprehend and predict hardware company roadmaps,

How o3 is ironically an extra step into China’s AI plan,

Understanding o3/o4-mini’s biggest new feature, thinking with images,

The fundamental limitations these models continue to exhibit,

An updated ‘state of the art of AI analysis’. The hard data that OpenAI doesn’t want you to see,

And one last reflection on what all this means geopolitically, considering the future of the US dollar in what are dark times for the world’s reserve currency.

Let’s dive in.

A Primer on Reasoning Models

First and foremost, let’s settle the grounds around reasoning models. As you probably know, modern AI assistant models like ChatGPT work by responding to a sequence of text, pixels (images), or video that you provide them.

For example, ChatGPT will respond with a sequence of words (text) or a sequence of pixels (images) to any question or request you may have.

But what makes reasoning models particularly unique?

Reasoning Models 101

The core working principle of LLMs is that they are trained to provide a semantically valid response to the user’s input. If the user asks a question, the assistant answers that question, not something totally unrelated.

With non-reasoning models, this response is immediate; the model does not reflect on the question and simply responds with the most likely continuation to that input.

This worked great for knowledge-related tasks. If the model knows the response, it only makes sense that the response is fast and immediate; there is no added benefit to thinking longer about a task.

Think of this from a human perspective:

Thinking for longer on a task such as ‘give me the capital of Poland’ doesn’t make it more likely you answer correctly if you don’t know Poland’s capital. Either you know or you don’t know (yes, you can take some time to recall the answer, but you’re still depending on knowing the answer or not).

As another example, answering “2+2” is such an intuitive, memorized response that you can respond immediately without needing to think through the addition (by counting with your fingers, for instance).

You get the point. This is how Large Language Models like GPT-4o, Grok 3, or Gemini work: they provide the most likely response to the user’s input based on ‘maximum likelihood estimation’. In layman’s terms, they have been trained to estimate the most likely response to an input (‘Capital of Poland?’ → Warsaw) and immediately respond.
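To make the ‘most likely response’ idea concrete, here is a minimal sketch. Everything in it is an assumption for illustration: `toy_model` and its probabilities are made up, and real LLMs pick one token at a time from a learned distribution rather than looking up whole answers in a table.

```python
# Toy illustration only: these probabilities are invented, not taken from any real model.
toy_model = {
    "Capital of Poland?": {"Warsaw": 0.92, "Krakow": 0.05, "Gdansk": 0.03},
    "2+2": {"4": 0.97, "5": 0.02, "22": 0.01},
}


def respond(prompt: str) -> str:
    """Return the single most likely continuation, with no intermediate 'thinking'."""
    distribution = toy_model[prompt]
    # System 1 behavior: commit immediately to the highest-probability answer.
    return max(distribution, key=distribution.get)


print(respond("Capital of Poland?"))  # Warsaw
```

Note that there is no step where the model reconsiders its answer: if the highest-probability entry is wrong, the response is wrong.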

If you observe, when describing non-reasoning models, I’ve used words such as ‘immediate’, ‘fast’, and ‘intuitive’; this is eerily similar to one of the two modes of thinking described by the late Daniel Kahneman, System 1 and System 2:

System 1 is fast, intuitive, and immediate; your brain instantly responds, such as when someone asks your name. It’s unconscious in the sense that you don’t have to actively think about your response (although I’m going to avoid the topic of consciousness completely because, in my view, it’s a pointless discussion to have about current AIs).

System 2 is slow, deliberate, and conscious. Humans engage their prefrontal cortex to actively think about their response to more complex tasks, such as solving a complex math proof or drafting a plan for their kid’s birthday party.

Long story short, some tasks benefit from being thought about for longer. This is what the industry has been defining as ‘reasoning,’ although don’t take it too literally, because the definition of ‘reasoning’ is like an opinion: everyone and their grandmother has one.

But to understand the language and terms being used by incumbents, this is what they mean by ‘reasoning’: thinking for longer on a task.

At this point, you can already tell what ‘reasoning models’ are: they are AI assistants that, instead of answering with ‘the first thing that comes to mind,’ think for longer on a task until they feel ready to answer. They are the AI industry’s best bet at creating System 2 models.

Under the hood, they are identical to their System 1 counterparts (they are still LLMs), but have been trained not to commit to an answer immediately and instead ramble a bit on the question until they have certainty about the response.

To do this, we perform a straightforward yet enormously powerful technique: reinforcement learning.

I’ve talked about it multiple times so I’ll spare you my personal rambling session, but the idea is that we train the model by ‘reinforcing’ good responses and punishing bad ones. This incentivizes the model to ‘search’ or ‘explore’ the space of possible solutions, eventually learning the preferred ways to answer certain questions.

The complicated part of this process is defining the reward signal, knowing what to reinforce and what to punish. This is so complicated that, currently, we have only learned to do this on verifiable tasks like coding or maths—tasks where the correctness of the outcome can be verified. Consequently, our current reasoning models only “reason” in those areas and struggle elsewhere.

Incredibly, when we do this, the model autonomously learns ‘reasoning priors’ like self-reflection or correcting its own mistakes, staples of what human reasoning represents.

If you’re struggling to understand why this reinforcement pipeline works so well, it’s because of two things:

The backbone of reasoning models is an LLM that, as we have recalled, is pretty intuitive (it has good intuition on how a task might be solved) and is also knowledgeable (it knows many things).

This model is then given a problem with a known and verifiable answer, and it performs trial and error at scale until it achieves that response, learning in the process how to reason.

Consequently, if we combine a model with good intuition with “infinite” trials on how to solve a problem, it eventually finds its way.

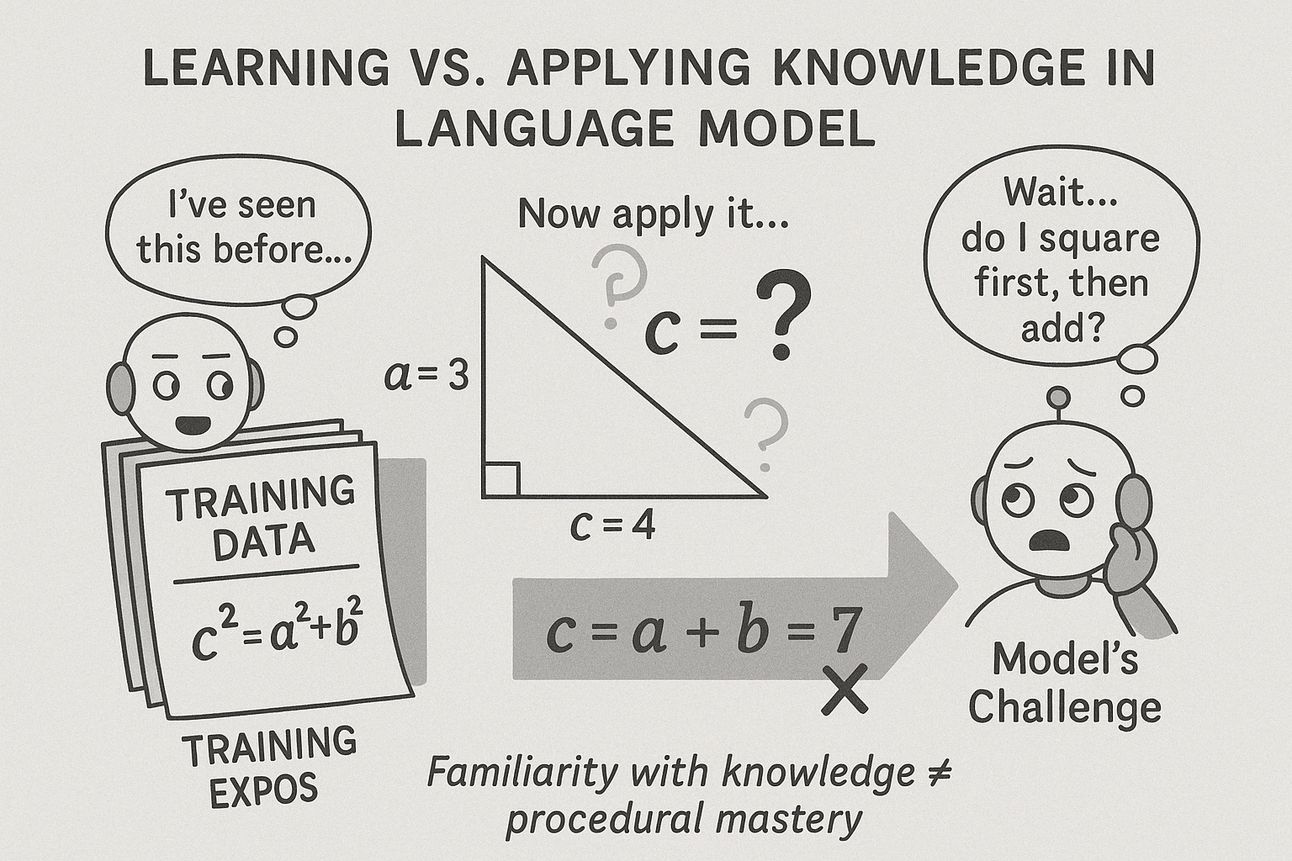

For example, suppose the model is tasked with finding the hypotenuse of a right triangle with known leg (cathetus) lengths. In that case, it has undoubtedly seen the Pythagorean theorem hundreds of times during training but might not yet have learned how to apply the equation correctly.

Knowing it should use the Pythagorean theorem, combined with knowing the answer to the problem, is all the model needs. It will iteratively try to solve the problem until it finds a way to do so, learning how to apply the Pythagorean equation in the process.

Of course, here is where the intuition of the backbone LLM (the System 1 model) becomes crucial. Trial and error with poor intuition leads to a combinatorial explosion of trials, as the model is basically ‘winging it’.

Luckily, as the LLM acts as a good ‘idea generator’, the trial and error process is actually manageable in practice. Put simply, the model won’t suggest partial derivatives to solve a Pythagorean theorem problem because it knows it doesn’t need them, effectively ‘discarding’ countless ideas that it knows won’t work.
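The trial-and-error loop with a verifiable answer can be sketched in a few lines. This is a toy under stated assumptions, not how any lab trains its models: the four entries in `STRATEGIES` are hypothetical ‘ideas’ standing in for what the backbone LLM might propose, and the update rule is a simple bandit-style multiplicative weight update rather than the policy-gradient methods used in practice.

```python
import math
import random

# Hypothetical candidate "ideas" a backbone model might propose for the task.
STRATEGIES = {
    "add_legs": lambda a, b: a + b,
    "multiply_legs": lambda a, b: a * b,
    "sum_of_squares": lambda a, b: a**2 + b**2,
    "pythagoras": lambda a, b: math.sqrt(a**2 + b**2),
}


def train(problems, steps=2000, lr=0.1, seed=0):
    """Reinforce strategies whose output matches the known, verifiable answer."""
    rng = random.Random(seed)
    names = list(STRATEGIES)
    weights = {name: 1.0 / len(names) for name in names}
    for _ in range(steps):
        a, b, answer = rng.choice(problems)
        # Sample an idea in proportion to its current weight (exploration).
        name = rng.choices(names, weights=[weights[n] for n in names])[0]
        guess = STRATEGIES[name](a, b)
        # Verifiable reward: the outcome either matches the known answer or not.
        correct = math.isclose(guess, answer, rel_tol=1e-9)
        weights[name] = max(weights[name] * ((1 + lr) if correct else (1 - lr)), 1e-6)
        # Renormalize so the weights stay a probability distribution.
        total = sum(weights.values())
        weights = {n: w / total for n, w in weights.items()}
    return weights


# Right triangles with known hypotenuses: (leg, leg, answer).
problems = [(3, 4, 5), (5, 12, 13), (8, 15, 17)]
weights = train(problems)
print(max(weights, key=weights.get))  # pythagoras
```

With only four candidate ideas the search is trivial; the point in the text is that a backbone with poor intuition would face vastly more candidates, which is why the quality of the ‘idea generator’ matters.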

That’s reasoning models for you. And let’s be honest here, reasoning models couldn’t have appeared at a better time.

Eliminating Stagnation Fears

Over the last few months, there was growing evidence that progress was stagnating, as System 1 models (traditional LLMs, or non-reasoning models) were reaching a point of diminishing returns, and outsized increases in compute budgets were no longer yielding significant improvements.

Thus, this ability to think for longer on a task, known as ‘test-time compute,’ because we allocate more compute during test time (inference), ensured that the hype around AI was sustained.

But are reasoning models just hype? No, but if you want to understand why, you need to follow the money.

AI Labs, which need a lot of money to sustain their businesses, immediately embraced this new trend and are all obsessively training these new models. But that’s hardly a justification of the hype because they are precisely incentivized to push a new narrative to prevent ‘AI is stagnating’ fears from drying up the much-needed venture money.

But not only have these guys embraced this new trend: infrastructure and hardware companies, and above all, countries like China, have adopted this paradigm nose-deep.

Reasoning models have a profound effect on how the underlying infrastructure and hardware are deployed and designed. If GPUs once dictated what AI architectures became prominent, it’s now the other way around; reasoning models are now dictating the chip agendas of NVIDIA, AMD, hyperscalers, and entire countries.

If we look at NVIDIA and AMD, both have massively shifted their product strategies solely because of reasoning models (in the words of Jensen Huang, NVIDIA's CEO, not mine).

My deep dive on AMD delves deeper into the crux of the issue, in case you want to fully understand what I’m saying below.

In a nutshell, reasoning models are more ‘inference-heavy’ computationally, meaning that they shift the global balance of compute from AI training to inference. In layman’s terms, we now spend more compute running models than training them.

Thus, they require more high-bandwidth memory (HBM) per accelerator and tightly connected devices, with high cross-accelerator transfer speeds.

Accelerators are any device meant for parallel compute, such as GPUs (NVIDIA/AMD), TPUs (Google), LPUs (Groq), NPUs (Apple/Huawei, although they are only similar in the name), and so on.

These important needs have dictated the entire next generation of accelerators.

NVIDIA’s Blackwell is clearly a reasoning-focused design (albeit without compromising training capabilities): rather than necessarily increasing per-chip compute, it increases the number of tightly connected devices (known as ‘scale-up’ in the AI hardware industry), going up to 72 GPUs connected in a high-speed (900 GB/s unidirectional), all-to-all topology. This essentially behaves like one big, fat GPU and supports larger inferences (longer chains of thought).

Google’s Ironwood design, discussed on Sunday, takes ‘scaling up’ to another dimension by going up to 9,216 chips, although it uses a 3D torus topology, meaning not all 9,216 chips are directly connected but are arranged so that speeds don’t fall too much.

And China’s latest chip, Huawei’s Ascend 910C, achieves the largest all-to-all supernode in the world, with 384 tightly connected Ascend 910C chips and total FLOPS (floating-point operations per second, a measure of compute power), memory size, and memory speed that blow NVIDIA’s server out of the water.

While scaling out (connecting several of these nodes together) is very important for training, as latency takes a secondary role, scaling up (creating larger, tightly connected nodes at very high speeds) is supremely fundamental for inference.
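To get a feel for the difference between an all-to-all domain and a 3D torus, here is a rough sketch. The torus dimensions `(16, 24, 24)` are a hypothetical factorization of 9,216 chosen only for illustration (the actual layout is not stated here), and real all-to-all systems typically reach every device through switches rather than one physical cable per pair.

```python
def all_to_all_pairs(n):
    """Number of device pairs that must reach each other in a single hop."""
    return n * (n - 1) // 2


def torus_links(dims):
    """Links in a 3D torus: each chip has 6 neighbors, so 3 links per chip.

    Assumes every dimension is at least 3 so wraparound links are distinct.
    """
    x, y, z = dims
    return 3 * x * y * z


def torus_diameter(dims):
    """Worst-case hop count between two chips, using wraparound links."""
    return sum(d // 2 for d in dims)


print(all_to_all_pairs(72))    # 2556 pairs, always 1 hop apart
print(all_to_all_pairs(384))   # 73536 pairs, always 1 hop apart

hypothetical_dims = (16, 24, 24)  # assumption: 16 * 24 * 24 = 9,216 chips
print(torus_links(hypothetical_dims))     # 27648 links
print(torus_diameter(hypothetical_dims))  # 32 hops in the worst case
print(all_to_all_pairs(9216))             # 42462720 pairs if it were all-to-all
```

The trade-off is visible in the numbers: a torus reaches thousands of chips with a modest link count, at the price of multi-hop paths, while all-to-all keeps every pair one hop apart but grows quadratically.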

Consequently, all hardware companies are obsessing over this idea of ‘scaling-up’ instead of the traditional ‘let’s increase compute density per chip’, which now takes a secondary role.

This also comes in pretty handy considering that Moore’s Law (the number of transistors on a chip doubles roughly every two years) clearly no longer holds.

Either way, this is without a doubt what defines ‘progress’ in the hardware layer today, and it’s entirely driven by the needs of reasoning models.

Earlier, I also mentioned countries (like China) in the picture, and I did so intentionally because this change in how hardware is created, giving more importance to cross-device communication speeds over compute power, is a great opportunity for China to catch up in AI infrastructure.

If, based on what I said earlier (Huawei’s AI servers blowing NVIDIA/AMD out of the water), you’re wondering whether China is ahead in hardware, that’s not what I’m saying.

China is behind at the chip level, but it is leveraging access to cheaper energy to build massive AI servers that require 500 kW of power each, more than three times the power of NVIDIA’s GB200 and a power budget NVIDIA cannot deploy in Western countries.

I’ll touch on this soon, but the point is that China has worse performance per watt, but a lot more watts than Western countries. And this transition to inference helps them close the gap even more because per-chip compute requirements are no longer the deciding factor.

But why?

In this new era of hardware, the actual chip, where China lags behind, is less important, and networking, where China excels, becomes the main driver of progress, as mentioned above.

Worse, networking becomes much more “energy-constraining,” which is not a problem for China, as it has added an entire US' worth of electrical grid since 2011. However, this is a huge problem for the West and its obsession with phasing out nuclear and fossil fuels in favor of the “green deal.”

Nuclear advocates have been heavily criticized for years for going against this green energy trend, but just watch how the entire West is going to go back to “fuck the environment” in a heartbeat as reasoning-model-led AI demand takes over the world. Just watch.

And let me be clear on my point here: European/US bipartisan governments and their disastrous energy policies have, for decades, destroyed the West’s energy self-sufficiency in favor of green energies while China and India laughed their a**es off and continued to invest in whatever means possible to increase power supply.

We must continue to build green energy, but it isn’t as reliable as nuclear, period, and we should invest in whatever source it takes to ramp up our energy production because we are falling behind China in what might be the biggest bottleneck in the entire supply chain.

But I digress.

I hope at this point I’ve conveyed the importance of reasoning models. Now we’re ready to understand OpenAI’s release.

A Real Look at o3 and o4-mini

As mentioned in the intro, the response to these models has given rise to two ‘opposite’ reactions:

Those that claim o3 is a step-function improvement and are massively overhyping the release

Those that claim it’s undeniable progress, but not enough to sustain the view that they are in the lead.

And although I’m going to give you both sides of the story, let me set the record straight: I’m in camp two. But that doesn’t mean I’m not impressed with some of the new capabilities of OpenAI’s models, like the ones I’m about to show you.

The Improvements of o3 and o4-mini

First, stating the obvious: o3 and o4-mini are state-of-the-art reasoning models, alongside Gemini 2.5 Pro, across most relevant benchmarks.

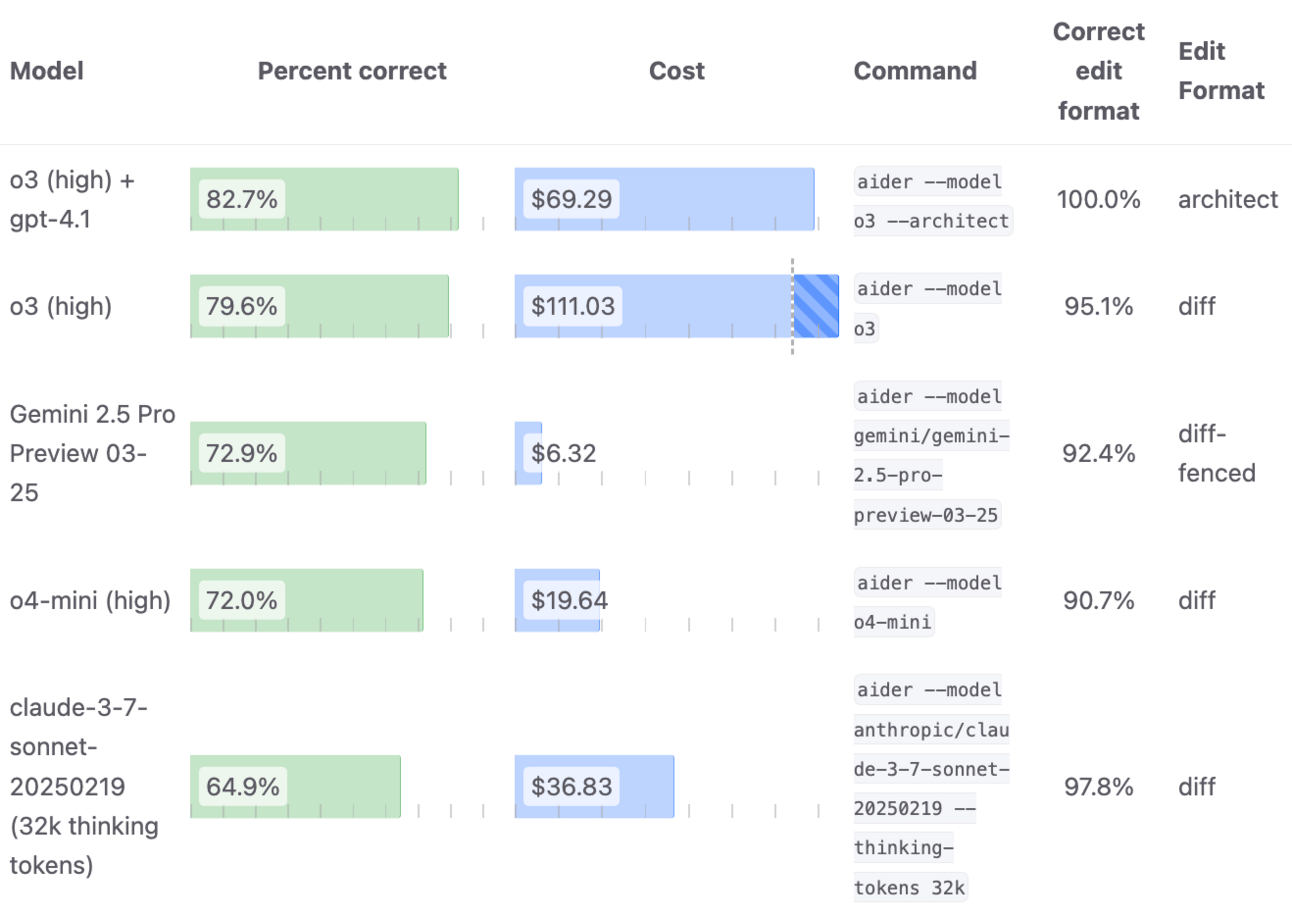

1st and 3rd on Aider’s Polyglot (coding)

Top-tier marks in knowledge-related benchmarks like Humanity’s Last Exam or GPQA (PhD-level knowledge)

Top-tier marks on math-related exams.

And so on. No need for me to deep dive too much into these because we all know by now that benchmark results only tell half the story and are mostly “gamed.”

But here come my first two critiques of this release:

They completely avoided comparing their results to non-OpenAI models, which is just them leveraging their brand to avoid looking bad—the “everyone compares to us, so we don’t need to compare to anyone else” mentality. Entitled and simply hiding the dirt under the carpet (we’ll see later why they did this).

The released model is not the exact model that shocked the world months ago, obtaining compelling results in benchmarks like ARC-AGI. The released models are far smaller, so please do not assume otherwise, despite OpenAI not being particularly vocal about this.

Let’s focus on what really matters: they introduce a new type of reasoning into the mix, visual reasoning or, as they call it, ‘Thinking with images,’ which, although we can’t jump to conclusions yet, might finally solve OCR for good.

But what is this?

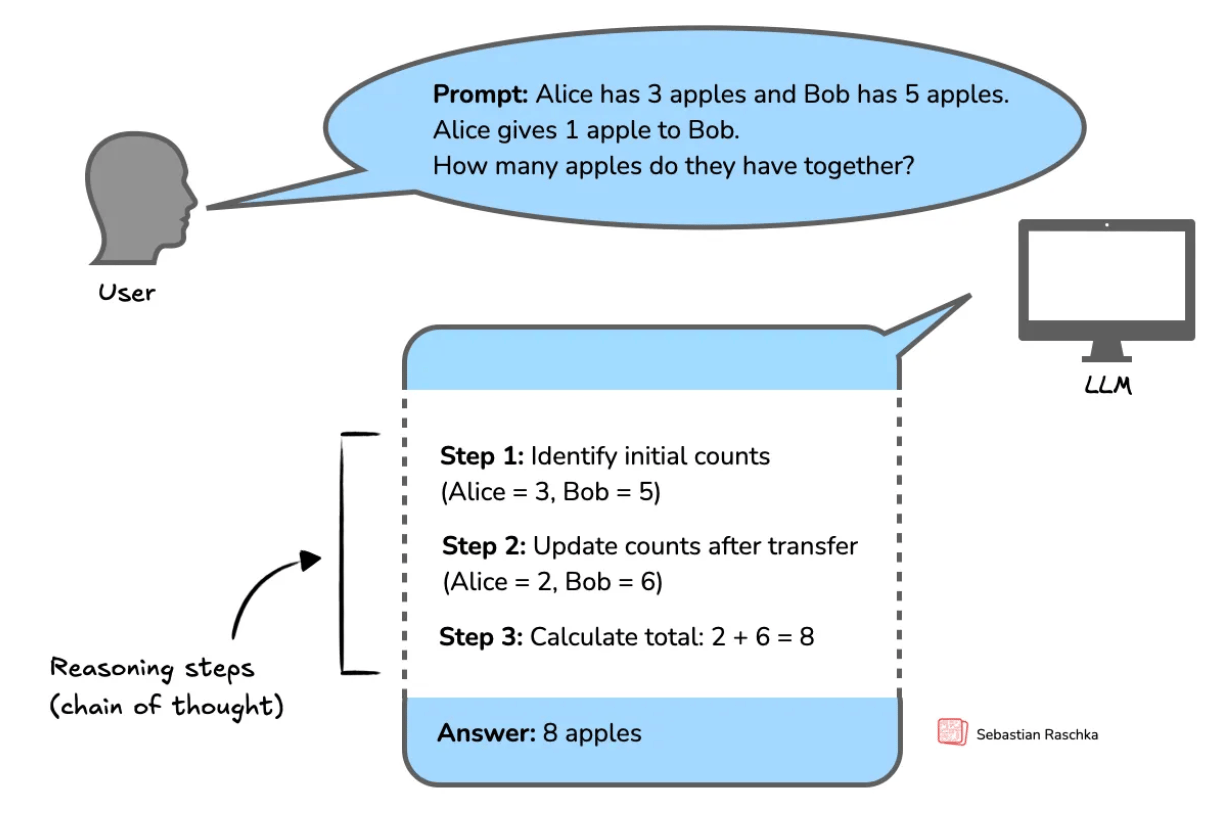

This pretty powerful feature introduces images into the chain of thought. Earlier on, we discussed how reasoning models emerged when we allowed an LLM to think for longer on a task. But what I didn’t tell you is that this results in a chain of thought (CoT), an intermediate concatenation of different thoughts by the model that shows the model’s reasoning and helps it arrive at the correct response.

Source: Sebastian Raschka

This behavior is trained into the model to incentivize multi-step reasoning, a common human reasoning prior where we break a complex problem into a concatenation of simpler steps.

Since OpenAI introduced reasoning models in September, chains of thought have been text-only. Therefore, what the o3/o4-mini release introduces is the addition of images into the CoT.

But why, and what does this look like?

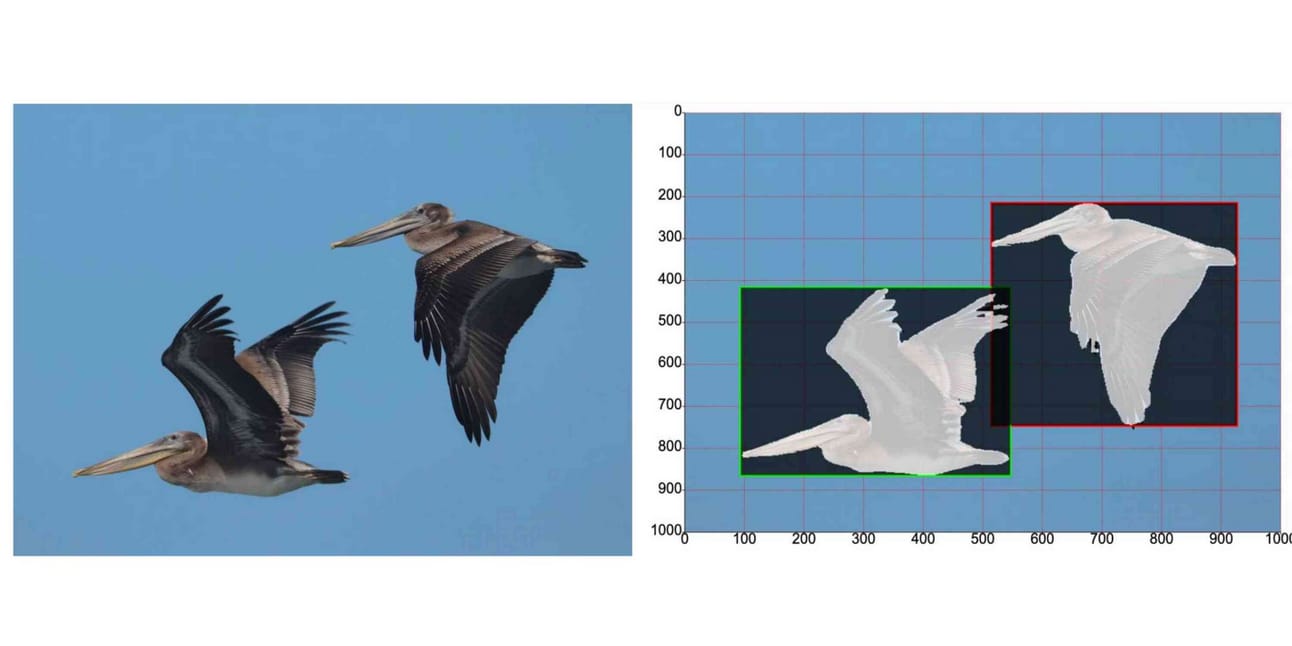

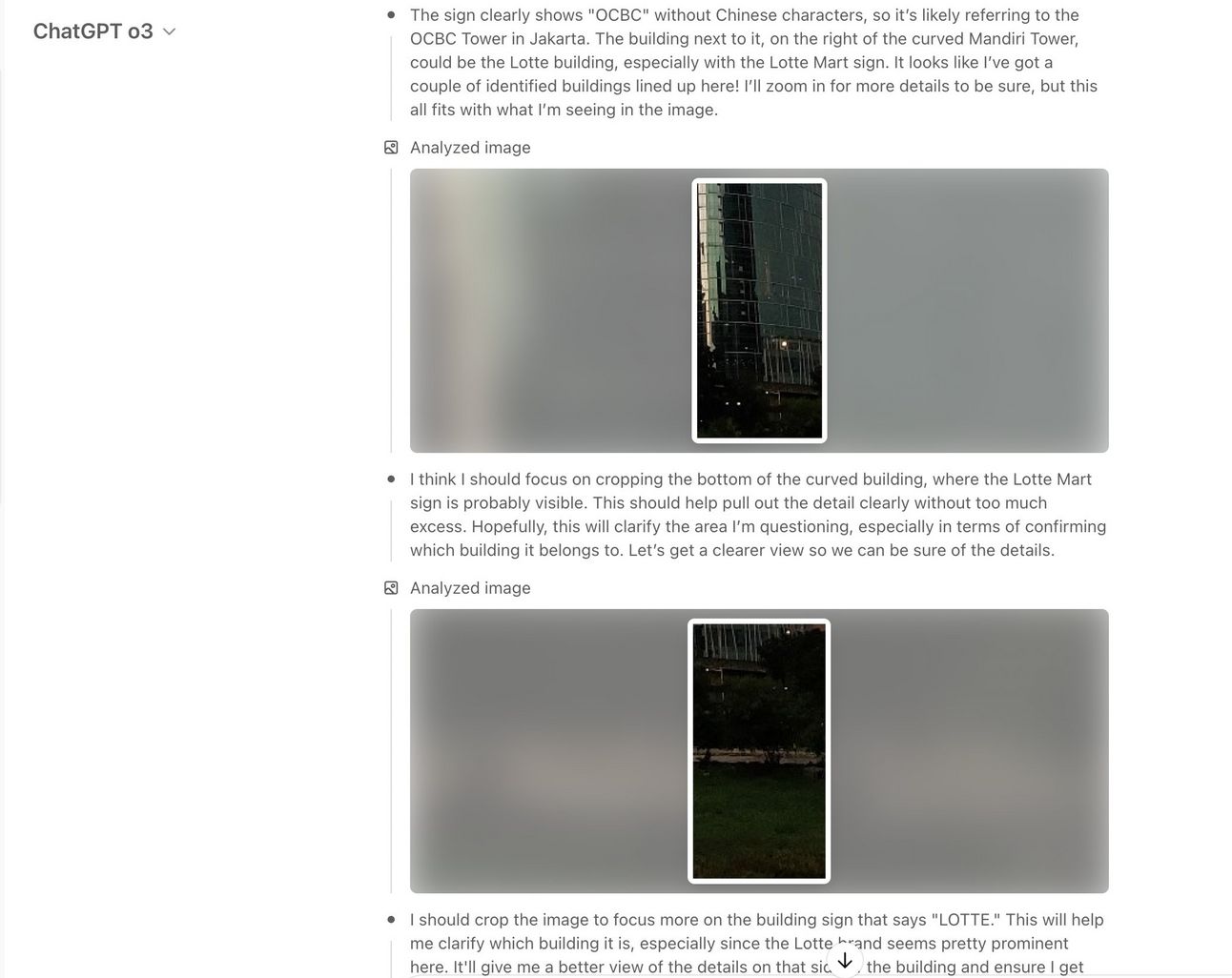

As you can see in the image below, the model can see images and actively reason over them, performing ‘zoom-ins’, rotations, edits, and other transformations to answer the question.

For example, in this image, the model crops the image further and further, trying to find cues as to where this building is located.

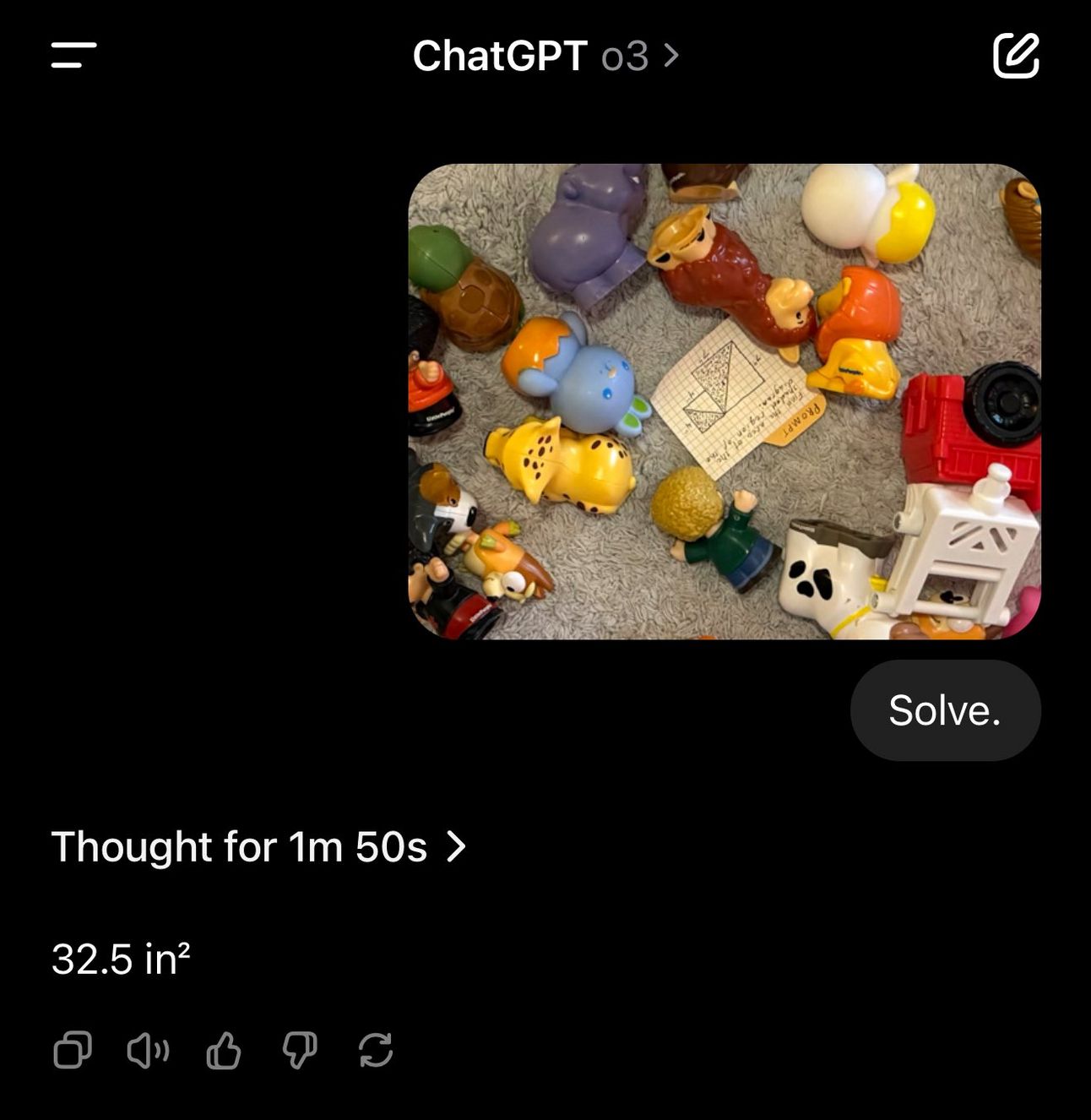

Or this example, where the model successfully solves a hand-written math problem that is initially hard to read by performing rotations and zooms (the answer is correct, confirmed by the user that posted it):

Perhaps more impressively, we have seen examples where the model can solve complex mazes using this feature, combined with code, as OpenAI demonstrates in its own release blog.

This is an extremely powerful feature that, once it becomes ‘affordable’ (you’ll see what I mean in a minute), has strong repercussions for AI adoption:

If you’ve used vision LLMs for business processes like OCR or anomaly detection, you’ll realize how immensely powerful having this iterative analysis process of the image is. Besides latency-sensitive use cases, you can consider OCR pretty much solved by now and you should immediately start discussing with your team how to use vision LLMs for your OCR processes.

It will naturally increase the perceived intelligence these models display. I’m not saying they are more intelligent, but that they can reason across more than one modality, which makes them inherently smarter (or, to be more specific, imitate a broader range of intelligence).

The model can generate bounding boxes and segmentation masks like the ones below, making it great for synthetic data generation and distillation training (and for tasks requiring this feature, naturally), both crucial for affordability (something Google’s Gemini 2.5 Flash can do too, and for way cheaper).

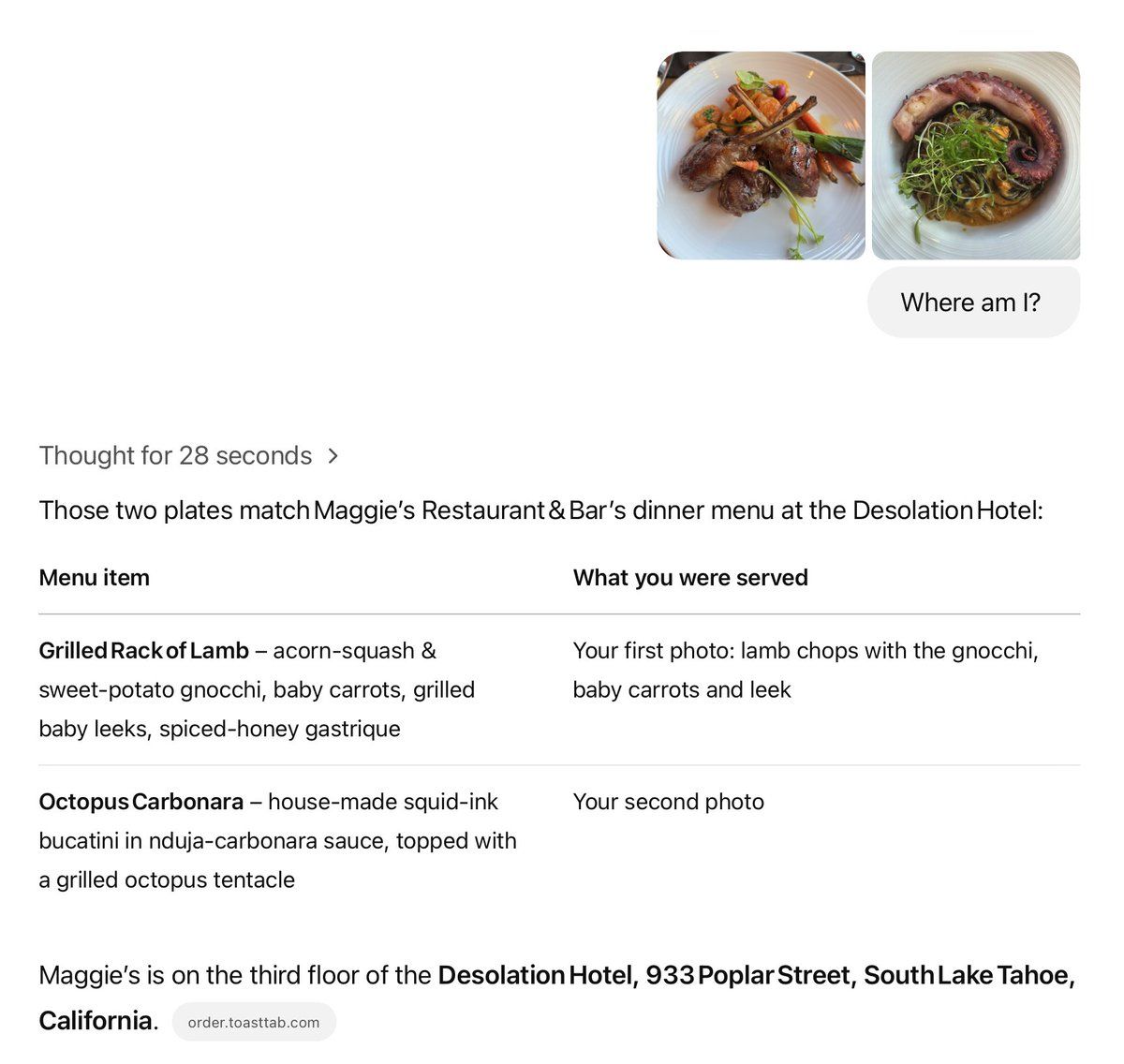

And before you dismiss my claim that vision is now very much solved at the business-practicality level (vision has other complexities, like spatial reasoning, that are still very much to be learned, but most companies do not need spatial reasoning to use AIs for vision), you tell me if this image below is not mind-blowing (and the user who posted it confirmed it’s a correct inference):

But is this AGI as some claim? Of course not.

The Dark Side of the Moon

Despite the improvements, these models continue to be fundamentally limited at various ‘basic’ problems that almost any human can solve.

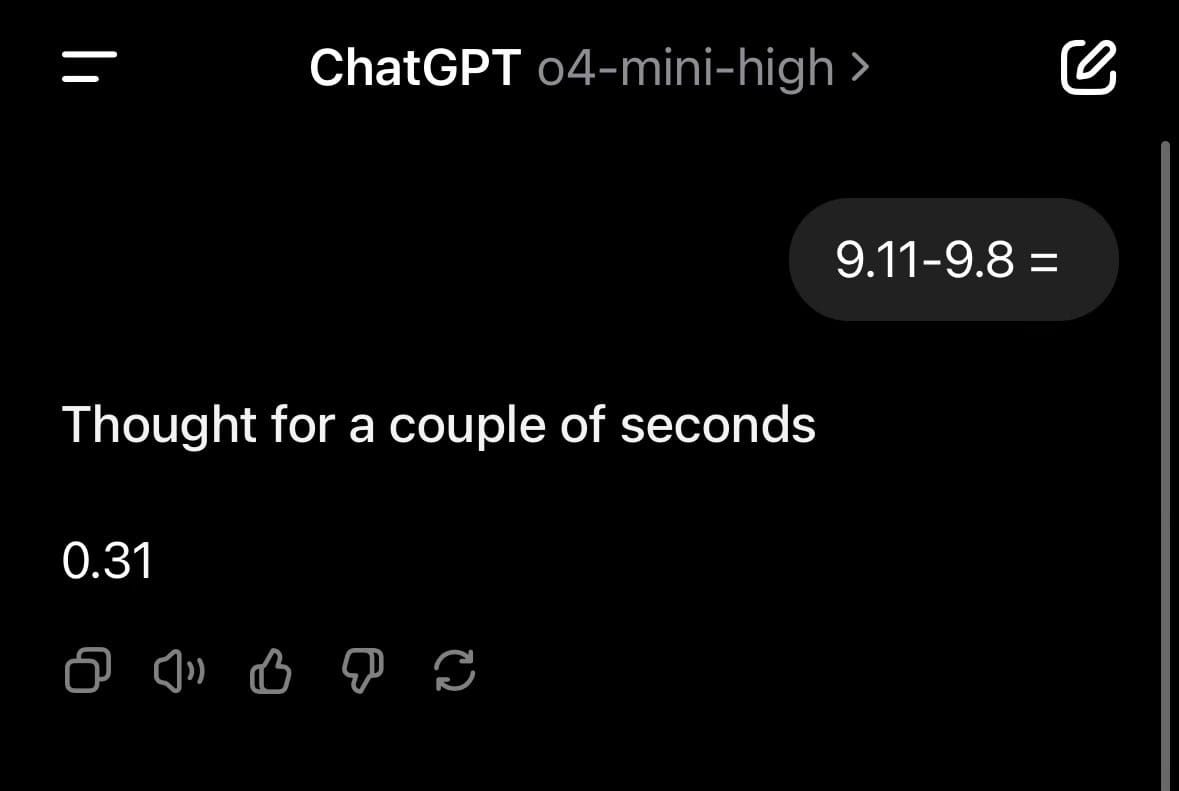

They are still unreliable in solving tasks like 9.11 - 9.8, giving results like 0.31

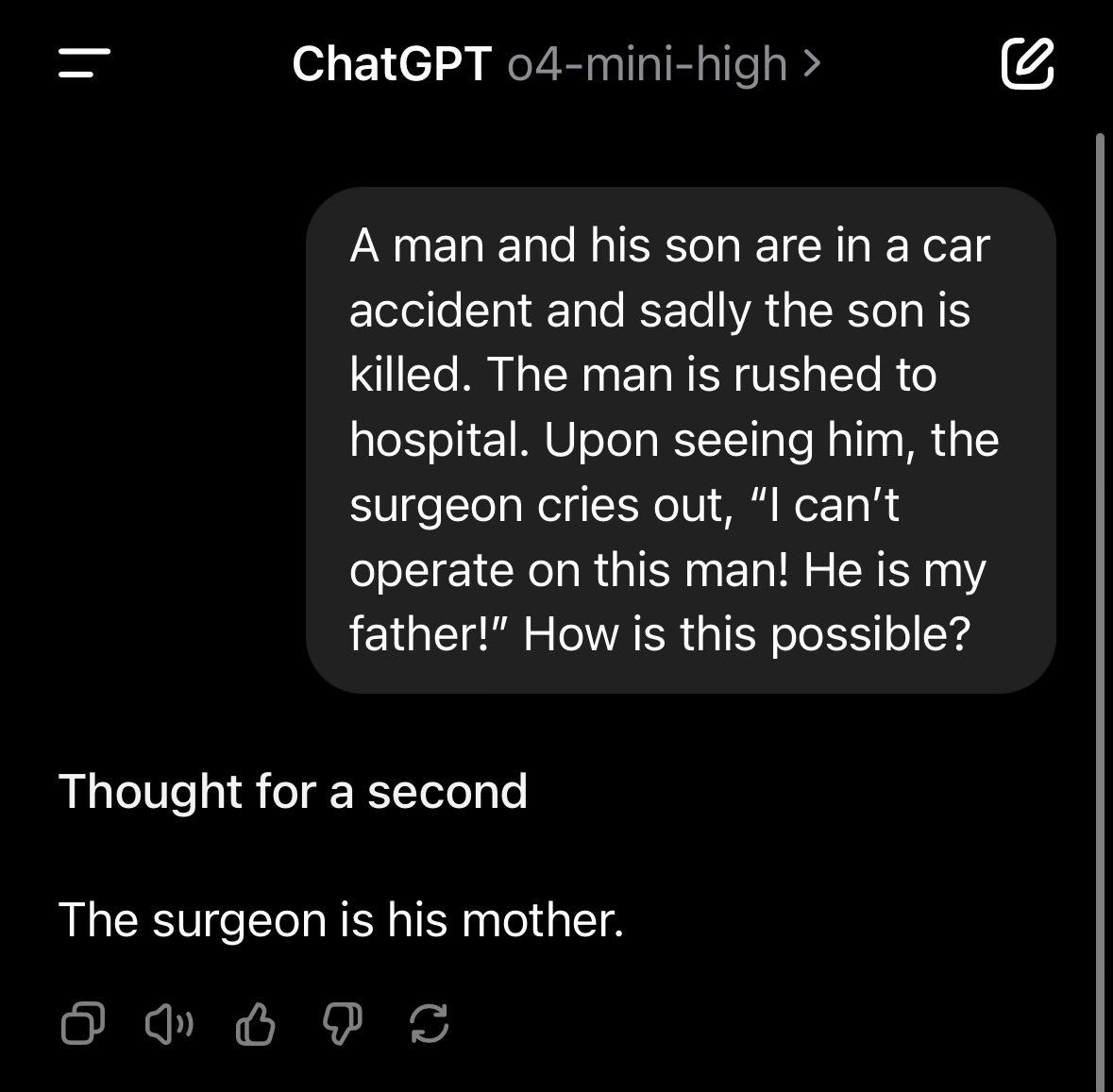

They sometimes fail tasks such as counting ‘r’s in ‘Strawberry’

They aren’t reliable at all in counting fingers on a hand

Or even simple riddles:

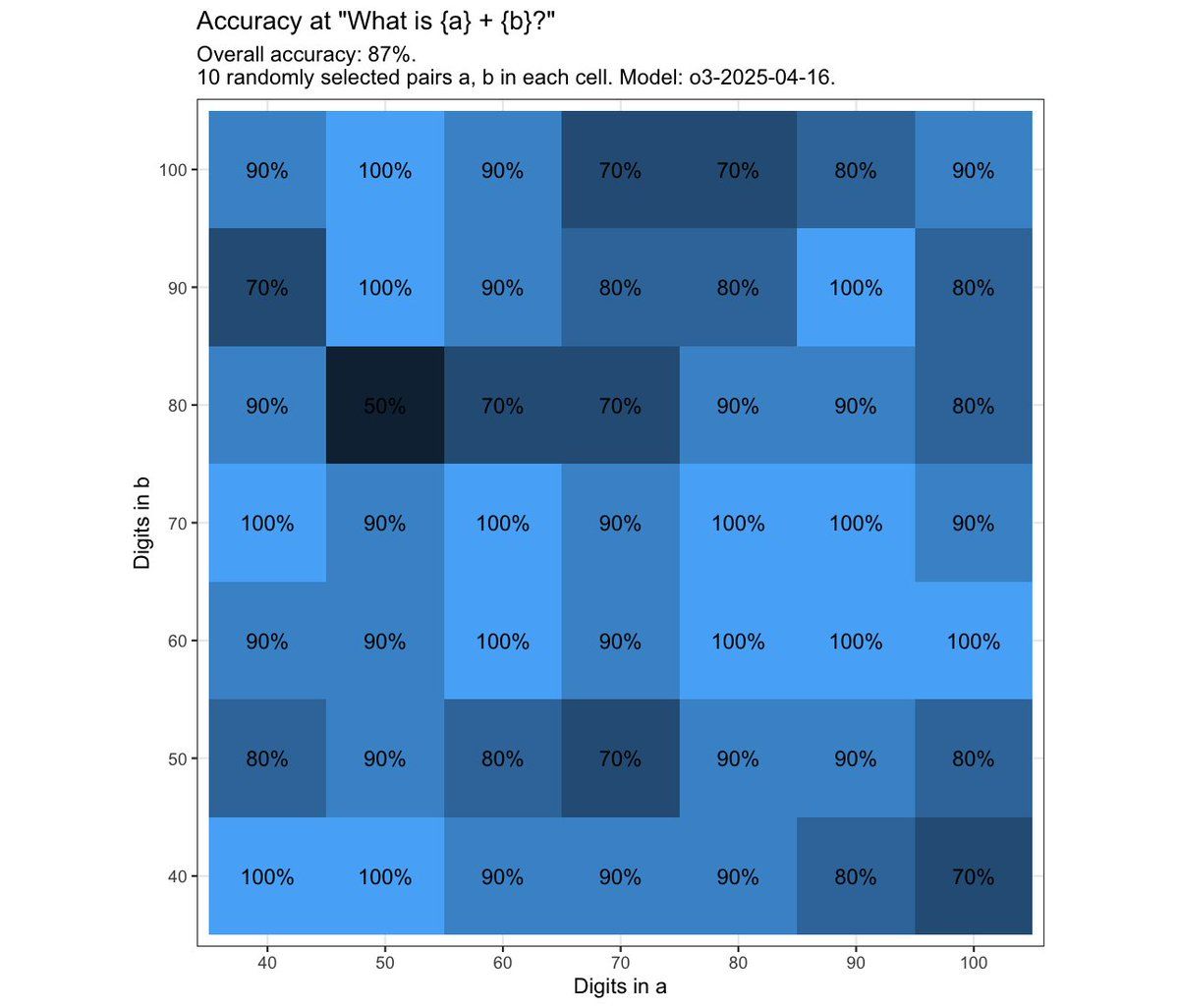
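For reference, the two text-only checks above are trivial for deterministic code. The sketch below uses Python’s standard `decimal` module and `str.count`; it only shows what the expected answers are, and says nothing about how the models arrive at theirs.

```python
from decimal import Decimal

# Exact decimal arithmetic avoids binary floating-point rounding noise.
difference = Decimal("9.11") - Decimal("9.8")
print(difference)  # -0.69

# Counting a letter is a single pass over the string.
r_count = "Strawberry".lower().count("r")
print(r_count)  # 3
```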

Furthermore, ironically, LLMs continue to prove to be a step back in terms of intelligence compared to something that isn’t even intelligent, like a calculator:

Of course, you may suggest that the latter test is irrelevant and that tools like a calculator solve this and that we don’t need AIs for that.

And I fully agree.

But my point here is that we can’t expect to claim AIs are as smart as humans (or even smarter, as some say) and then accept them failing at the simplest of tasks. It’s the inaccuracy of the claim that triggers me.

Simply put, I’m happy to accept that these models are the most powerful augmenters of human intelligence and productivity the world has ever seen, but I don’t buy the framing of them being as smart as or more intelligent than humans.

The truth is that they aren’t even remotely comparable to our intelligence, but I’m still shocked to see people who are considered incredibly smart misjudge these models for what they aren’t. I’ve even read claims that the o3 model has ‘solved math.’

And these people are serious.

And yes, AIs are ‘incredibly smart’ at finding patterns in their knowledge that lead to ‘smart-looking’ responses. However, they are still fundamentally limited by their own knowledge (they can’t solve what they don’t know), whereas solving what we don’t yet know is precisely a staple of human intelligence and what has led us to where we are today.

Long story short, they still fail what I call the Jean Piaget test: “Intelligence is what we use when we don’t know what to do.” If we frame intelligence that way, reasoning models are not intelligent because they won’t work in that precise instance, or at least I have yet to see a single example of such an occurrence where AIs work well in situations they have not seen before.

What they can do (and very well) is “connect the dots” between apparently disconnected themes, topics, or matters in ways humans have not yet noticed; in that regard, they have indeed helped humanity progress in some areas like drug discovery. But only as long as they have the underlying knowledge to achieve such discoveries.

Maybe, and here’s where I end my ramble, we need to frame these models as a new type of intelligence, different from ours, or simply as a tool to augment our own intelligence by amplifying our pattern-matching capabilities, complemented by the human brain’s capacity to adapt to novelty. Otherwise, let’s cut the stupid comparison between humans and AIs, which only fuels fear instead of excitement.

But going back to our topic of today, it’s about time I give you my two cents on the models in a strict ‘state of the art of AI’ analysis.

Google is King.

I was very loud and clear on Sunday, even before the release of o3. Back then (full analysis on Google here), I already predicted that o3 would be the new state-of-the-art in raw performance, but that it still wouldn’t beat Google in the key metric that matters today: performance relative to cost.

And we were completely right.

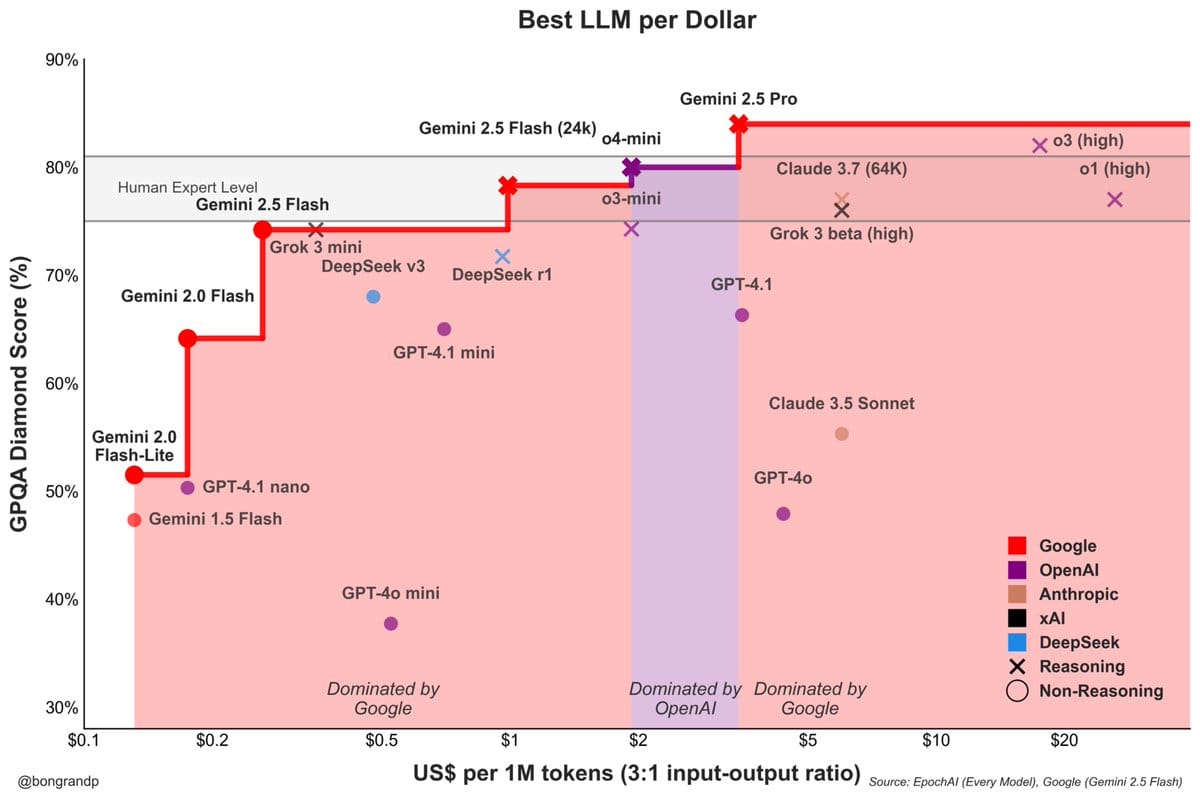

When we look at the performance relative to cost, it’s not even funny how superior Google is. If we look at the Pareto curve in GPQA Diamond, the prime benchmark to evaluate ‘PhD-level’ knowledge, the trend is pretty much self-explanatory:

Google basically dominates the curve, with only a small part of it dominated by non-Google models (o4-mini). This is just one benchmark, but the trend is pretty much replicated in every benchmark. In some extreme cases, Google models can be several times better per dollar spent than their immediate rival, again OpenAI’s o3 and o4-mini models.
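If the idea of ‘dominating the Pareto curve’ is unfamiliar, the sketch below shows how such a frontier is computed. The model names, costs, and scores are placeholders invented for illustration; they are not the benchmark results discussed here.

```python
def pareto_frontier(models):
    """Keep models that no other model beats on both cost (lower) and score (higher).

    Each model is a (name, cost, score) tuple.
    """
    frontier = []
    # Walk from cheapest to most expensive; at equal cost, best score first.
    for name, cost, score in sorted(models, key=lambda m: (m[1], -m[2])):
        # A pricier model earns its place only by scoring strictly higher.
        if not frontier or score > frontier[-1][2]:
            frontier.append((name, cost, score))
    return frontier


# Hypothetical (name, cost in dollars, benchmark score) entries.
models = [
    ("model_a", 1.0, 60),
    ("model_b", 2.0, 55),   # dominated: costs more than model_a and scores lower
    ("model_c", 5.0, 80),
    ("model_d", 20.0, 83),
    ("model_e", 30.0, 82),  # dominated: costs more than model_d and scores lower
]

for name, cost, score in pareto_frontier(models):
    print(name, cost, score)
# model_a 1.0 60
# model_c 5.0 80
# model_d 20.0 83
```

A lab ‘dominates the curve’ when most of the surviving points belong to its models, meaning that at almost any budget the best available option is one of theirs.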

As shown below, on the Aider Polyglot benchmark (a tier-1 coding benchmark), o3 and o3+GPT-4.1 (o3 as orchestrator and 4.1 as code editor) have the best results. However, they cost 6 and 18 times more than Google’s result while achieving only a 10% and 6% improvement, respectively.

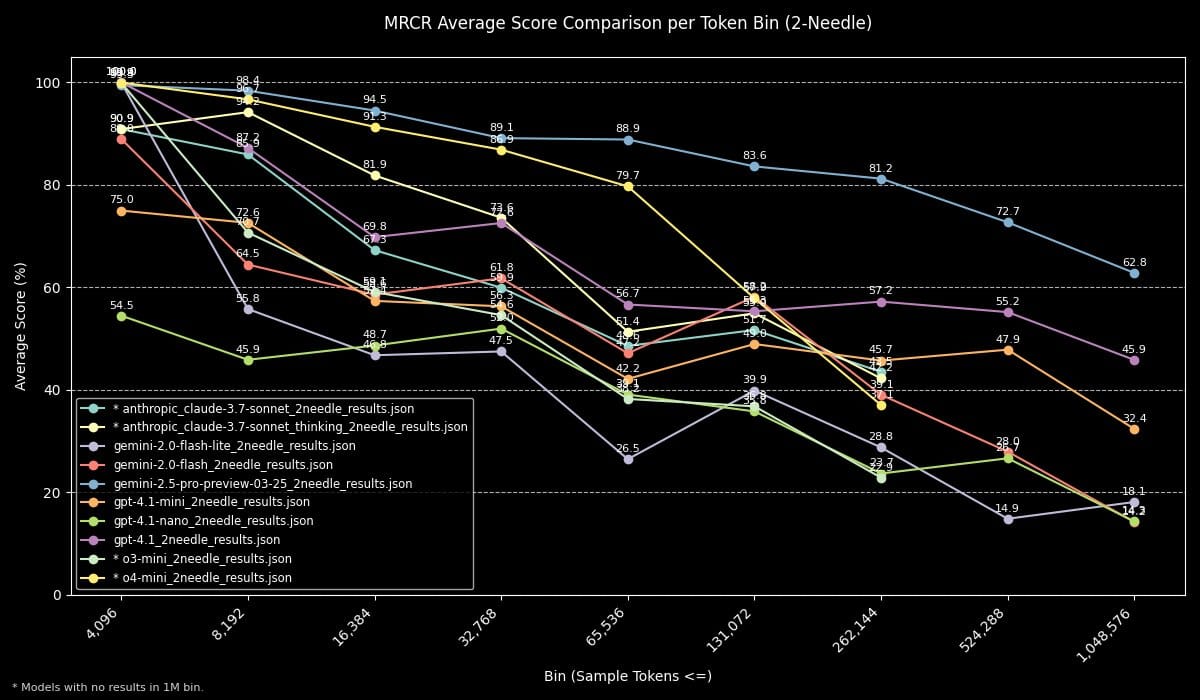

And let me be clear that o3 and o4-mini don’t lead everywhere, and Gemini 2.5 Pro takes the first spot in some cases even without considering cost, like in this long-context evaluation:

However, the issue for OpenAI is that I believe this trend (performance-to-cost ratios) will only get worse. As we discussed in our Google deep dive, Google has a fully sovereign tech stack because it runs its models on its TPUs instead of NVIDIA chips.

More specifically:

As covered on Sunday, Google now has NVIDIA-level hardware in Ironwood that scales up even better than NVIDIA’s GB200 (for more on this, I highly suggest you read my deep dive).

It has, by far, the most experienced distributed workload engineers, led by Jeffrey Dean, quite possibly the most prominent expert in distributed workloads on the entire planet, and the lowest running costs by far among all hyperscalers.

It has a top-tier AI lab led by Nobel laureate Demis Hassabis.

It doesn’t have a major shareholder taking 20% of its revenue, as OpenAI does with Microsoft.

The point is, they’ve caught up in terms of model capabilities, and they are miles ahead everywhere else.

Therefore, it’s now OpenAI’s job to catch up in terms of prices; otherwise, all developers and enterprises will inevitably transition to Google’s products because, let’s face it, model capabilities are being commoditized across labs, and in a commoditized market, only prices and user experience matter.

OpenAI’s UX is great, but their prices aren’t remotely close. Unlike Google, OpenAI has to endure high capital costs, which include NVIDIA/AMD’s high margins, forcing them to raise prices to recoup the investment in these GPUs (on a 5-year depreciation schedule). Google, meanwhile, can design its models to be purpose-built for TPUs, making them even more efficient to run.

The superiority is so clear that Google’s reaction to OpenAI’s release was not Gemini 2.5 Ultra, which we know is already fully trained. Instead, they released Gemini 2.5 Flash, an even cheaper version that still holds its own incredibly well.

Although this is just a rumor, Google is poised to release a new state-of-the-art model, probably Gemini 2.5 Ultra, next week. Codenamed ‘Claybrook’, it appears to be insanely powerful based on preliminary results in the Webdev arena.

Long story short, Google doesn’t feel threatened at all, making it really, really hard to bet against them.

This leads us to an obvious conclusion confirming last week’s suspicions: Google is now the lab everyone looks up to, including OpenAI.

Closing Thoughts

Overall, OpenAI has delivered the expected results: very powerful, state-of-the-art models performance-wise, with incredible features like ‘Thinking with Images.’

However, their release also confirms, in my earnest opinion, that they are no longer ahead of the curve. In fact, quite the opposite: they are well behind Google in the metric that matters the most in 2025, performance to cost.

Making matters worse, with the tariff war and export controls over NVIDIA/AMD and their Chinese businesses, GPU price hikes might be just around the corner, which will only make the job of competing harder and harder for the rest.

Conversely, Google owns the entire supply chain, except for chip manufacturing (TSMC), so it can even run the models at a low energy cost and use its superior margins to squeeze OpenAI even further (I’m focusing on OpenAI, but this also applies to other US labs, like xAI or Anthropic). In other words, they don’t need to make a return on TPU investments; instead, they view everything (hardware and software) as a single investment, unlike the rest, which gives them an even greater edge.

On a final note, a word must be said about Chinese firms, which, as mentioned, have access to dirt-cheap energy. Although they lack Google’s engineering expertise, they will be able to compete with Google on a margin basis. Naturally, if Google struggles with Chinese competition, nothing needs to be said about the rest. Here, all roads lead to Rome, and Rome here means a ban on Chinese models in the West, or at least in the US.

Without getting ahead of myself, I would like to end with a very spicy reflection for you to ponder:

It is becoming as clear as day to me that AI compute is to the US what oil was in the 1970s; the US must ensure that top-tier AI models can only be accessed via dollar-priced compute, refueling the need for dollars in a world where dollar supremacy is at an all-time low.

But we’ll save this discussion for another day.

THEWHITEBOX


Until next time!
