Last chance! Don’t miss out on AI’s event of the year starting tomorrow, NVIDIA GTC 2025, also known as the ‘AI Woodstock’ due to its impact across the industry. Register today for free.

FUTURE

AMD, The Sleeping Giant?

Today, I will convince you that a company with a price-to-earnings ratio of 99 is undervalued.

Yes, you read that right.

We are returning with company deep dives to discuss Advanced Micro Devices ($AMD), which I believe has the highest upside potential for valuation growth in the entire space.

AMD's performance has been underwhelming for several years. However, something just took place that could change the course of this company forever. I believe this so profoundly that it has become the first stock I’ve directly invested in (I previously invested only in index funds).

Besides learning why I’ve made such a decision, you’ll also learn:

How AI hardware works in detail,

How top vendors compare (hardware, software, total cost of ownership (TCO), and more),

The value propositions of disruptors like Cerebras (which is close to an IPO) or Groq,

And, finally, the window of opportunity I believe has opened for both of us.

This article should interest anyone who wants to profoundly understand the hardware layer and improve their decision-making when dealing with AI.

Let’s dive in.

Before we get our hands dirty, please bear in mind this is not financial advice but technical analysis, which are two very different things. I’m an AI expert, not a financial advisor or professional investor.

The reason I’m disclosing my investment is to clarify that I’m biased toward AMD's success.

Keynes said it best: “Markets can remain irrational longer than you can stay solvent.” Even when your read of the fundamentals is correct, you can still be wrong, so please do your own due diligence before making investment decisions.

An Inevitable Transition

Before we discuss the topic, in case you aren’t aware already, I want to introduce you to an idea that will guide you throughout the rest of the article:

AI compute is changing.

The Rise of Inference

For years, AI training represented the largest share of total AI compute. However, this is quickly becoming untrue for two reasons:

AI has been a research-led industry for decades. Models weren’t widely adopted by the general public, so AI was more a game of creating better models on the promise of future demand.

Now, AI is at a crucial point: with the technology ready and ‘return on CAPEX’ pressures mounting, that “future demand” is, or should be, now.

2025 should be the year AI becomes massively used by the general public (AI is already around us in our daily lives, mainly through social media algorithms, but that’s not a conscious act of AI usage; actually, it’s closer to manipulation).

Since the rise of foundation models, which can help users with many tasks, an exorbitant amount of compute has been dedicated to training them. While training runs will keep growing, as GPT-4.5 and Grok 3 show, the behavior of the new reasoning models, which generate long chains of ‘thinking’ tokens before answering, means that the computing power we use to run them will explode.
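To make that “explosion” concrete, here is a back-of-the-envelope sketch. It relies on the common rule of thumb that generating one token costs roughly 2 × N FLOPs, where N is the number of (active) parameters; the 70B model size and the token counts are hypothetical values chosen only for illustration.

```python
def inference_flops(active_params: int, generated_tokens: int) -> int:
    """Approximate FLOPs to generate tokens: ~2 FLOPs (multiply + add) per parameter per token."""
    return 2 * active_params * generated_tokens

# Hypothetical 70B-parameter dense model (illustrative, not a specific product).
PARAMS = 70_000_000_000

# Hypothetical workloads: a direct answer vs. a reasoning model that "thinks" first.
direct_answer = inference_flops(PARAMS, generated_tokens=500)
with_reasoning = inference_flops(PARAMS, generated_tokens=500 + 9_500)

print(f"direct answer:  {direct_answer:.2e} FLOPs")
print(f"with reasoning: {with_reasoning:.2e} FLOPs")
print(f"ratio: {with_reasoning / direct_answer:.0f}x")
```

The rule of thumb ignores the attention cost that grows with context length, so long reasoning traces are, if anything, even more expensive than this estimate suggests.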

Read Notion article {🧞‍♂️ Reasoning Models and inference} to better understand why those who fear that demand for compute will fall are simply clueless.

Combined, these two factors represent a paradigm shift in computing. But why does this matter? Well, it’s AMD’s great opportunity, as we’ll see later.

But to start off, let’s build intuition as to what AMD’s hardware, GPUs, are and why they are the quintessential engine powering most AI models.

The Ins and Outs of the GPU

Before we make sense of NVIDIA’s ($NVDA) dominance (no, “they just make better hardware” is NOT the answer), I need to give you my two cents on how GPUs work and why they are so important for AI.

Why GPUs?

GPUs were created decades ago to cover a particular need: rendering. In a nutshell, to play a video game, we need to change the colors of each pixel on our screen dozens of times per second (hundreds in some cases). Combined, that amounts to hundreds of millions of pixel updates per second, each involving several computations. Importantly, all pixels need to be updated simultaneously.
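A quick calculation shows how fast this adds up. The resolutions and frame rates below are our own illustrative choices, and each pixel update involves several arithmetic operations, so the real operation count is higher still.

```python
def pixel_updates_per_second(width: int, height: int, fps: int) -> int:
    """Number of pixel color values that must be recomputed every second."""
    return width * height * fps

# Common display configurations (illustrative choices).
configs = {
    "1080p @ 60 fps": (1920, 1080, 60),
    "4K @ 60 fps": (3840, 2160, 60),
    "4K @ 144 fps": (3840, 2160, 144),
}
for name, (w, h, fps) in configs.items():
    print(f"{name:>14}: {pixel_updates_per_second(w, h, fps):,} pixel updates/s")
```

Even a single 4K stream at 60 fps means roughly half a billion pixel updates every second, which a GPU computes in parallel rather than one after another.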

And what does this have to do with AI?

Fascinatingly, changing colors on a screen and training and running ChatGPT are very similar exercises, even if that feels hard to believe (they are both simple additions and multiplications performed massively at scale).
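A minimal NumPy sketch of the “it’s all just numbers” point: a per-pixel color conversion and a neural-network layer are both matrix multiplications. The grayscale conversion uses the standard BT.601 luma weights; the image size and layer dimensions are arbitrary illustrative values.

```python
import numpy as np

rng = np.random.default_rng(0)

# Rendering-style operation: convert RGB pixels to grayscale.
# Each output pixel is a weighted sum of its R, G and B values (BT.601 luma weights),
# i.e., a matrix multiplication over all pixels at once.
pixels = rng.random((1920 * 1080, 3))            # one row per pixel, values in [0, 1)
luma_weights = np.array([[0.299], [0.587], [0.114]])
gray = pixels @ luma_weights                      # shape: (2_073_600, 1)

# AI-style operation: one dense (fully connected) neural-network layer.
# Each output activation is a weighted sum of the inputs: again a matrix multiplication.
batch, d_in, d_out = 8, 1024, 1024
activations = rng.standard_normal((batch, d_in))
weights = rng.standard_normal((d_in, d_out))
hidden = activations @ weights                    # shape: (8, 1024)

print(gray.shape, hidden.shape)
```

Same operator, different numbers: that is why hardware built to do the first at scale turned out to be so good at the second.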

For the cinephiles out there, Peter Sullivan explains it best in the film Margin Call when describing how a rocket scientist ended up in investment banking: “Well, it's all just numbers, really. Just changing what you're adding up. And, to speak freely, the money here is considerably more attractive.”

This beautifully explains the shift of NVIDIA’s GPUs from gaming to AI: it’s all numbers, but the money is more attractive.

Read Notion article {🧑🏼‍🎨 From Rendering to Intelligence} to better understand why both kinds of computation are so similar.

Still, they aren’t identical; AI workloads have a particular “nuisance” that makes them far more challenging.

From Rendering to ChatGPT, A Matter of Memory

While theoretically almost identical, engineering-wise, gaming and AI workloads couldn’t be more different. And that’s because of the memory wall.

Simply put, AI workloads are extremely memory-demanding and saturate memory before they saturate compute. In layman’s terms, GPUs can compute far faster than their memory can feed them data, and that’s a huge problem.

For a deeper dive into the memory wall and how memory affects both training and inference, read Notion article {🧱 The Memory Wall}.

But we then have to add another complexity: the memory hierarchy. GPUs have different types of memory, organized by their distance from the compute cores. The further away memory is from the processing cores, the slower it is, which can leave the compute cores sitting idle, waiting for data to arrive.

In a nutshell, the real bottleneck in current AI workloads is the time it takes to read from and write to memory, not compute power.
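The memory wall can be quantified with a roofline-style estimate, sketched below under simplifying assumptions. The peak figures are the ~4.6 PFLOP/s (FP8) and ~8 TB/s quoted for AMD’s MI355 later in this article. We also assume the classic approximation that generating one token reads every FP8 weight once (1 byte) and spends ~2 FLOPs on it per sequence in the batch, ignoring KV-cache and activation traffic.

```python
# Roofline-style estimate: is a workload limited by compute or by memory bandwidth?
PEAK_FLOPS = 4.6e15   # ~4.6 PFLOP/s at FP8 (figure quoted for the MI355 in this article)
MEM_BW = 8e12         # ~8 TB/s of HBM bandwidth (figure quoted in this article)

def attainable_flops(intensity: float) -> float:
    """Attainable FLOP/s for a kernel doing `intensity` FLOPs per byte moved from memory."""
    return min(PEAK_FLOPS, intensity * MEM_BW)

# FLOPs per byte the chip needs to keep its compute units busy (the "ridge point").
ridge = PEAK_FLOPS / MEM_BW

# Token-by-token decoding with FP8 weights (1 byte each): each weight read from memory
# is used for ~2 FLOPs per sequence in the batch, so intensity ~= 2 * batch_size.
for batch_size in (1, 32, 288):
    intensity = 2 * batch_size
    utilization = attainable_flops(intensity) / PEAK_FLOPS
    print(f"batch {batch_size:>3}: {intensity:>4} FLOPs/byte -> {utilization:6.1%} of peak compute")
print(f"ridge point: {ridge:.0f} FLOPs/byte")
```

Serving a single user, the chip can use well under 1% of its compute; the cores spend nearly all their time waiting on memory, which is why batching many requests together matters so much for inference economics.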

For a deeper explanation of each memory in the hierarchy, read Notion article {🛃 The Memory hierarchy}.

Consequently, besides having tremendous compute power like gaming GPUs, AI GPUs are characterized by two additional complexities:

Need for huge memory bandwidth: the amount of data that must move in and out of memory every second is enormous. An RTX 5090, NVIDIA’s latest gaming GPU, has 1.8 TB/s of memory bandwidth, while an AMD MI355X has up to 8 TB/s.

Need for a considerable amount of memory per chip: while an RTX 5090 “only” has 32 GB of memory, AMD’s upcoming MI355X GPU has 288 GB.

To serve as a more familiar example, Apple seems to have lost its mind with its new Mac Studio for AI, a consumer-grade computer that costs around $14,000 in its most advanced configuration.

This computer has over 500 GB of unified memory (not HBM, but more than five times the 80 GB of an NVIDIA H100 GPU) coupled with almost a terabyte per second of memory bandwidth (much slower than a datacenter GPU in this regard, but that’s fine because it serves a single user).

It sounds like complete overkill, but it isn’t: the sheer amount of data moving in and out of memory, and the speed at which it must move, are crucial for a good user experience.

All things considered, we can conclude that the performance of AI hardware is driven by three things:

Compute power, how many operations per second the GPU can execute,

Memory bandwidth, how many bytes of data can be sent on and off memory into the chips every second,

And memory size, how many bytes of data can be stored in the GPU.
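The interplay of these three factors can be sketched with a deliberately crude upper bound on single-user generation speed. It assumes decoding is purely memory-bound (every weight read once per token) and ignores compute time and the KV cache. It uses the article’s RTX 5090 figures and 288 GB plus an assumed ~8 TB/s for the MI355X; the 8B and 70B FP8 model sizes are hypothetical.

```python
from typing import Optional

def max_decode_tokens_per_s(params: float, bytes_per_param: float,
                            mem_gb: float, bw_tb_s: float) -> Optional[float]:
    """Upper bound on single-user decoding speed for a model on one GPU.

    Assumes every weight is read from memory once per generated token and ignores
    compute time, the KV cache and all other overheads. Returns None if the weights
    alone do not fit in the GPU's memory.
    """
    weight_bytes = params * bytes_per_param
    if weight_bytes > mem_gb * 1e9:
        return None  # memory size is the binding constraint: the model doesn't fit
    return bw_tb_s * 1e12 / weight_bytes  # memory bandwidth is the binding constraint

# (memory in GB, bandwidth in TB/s); model sizes are hypothetical FP8 (1 byte/param) models.
gpus = {"RTX 5090": (32, 1.8), "MI355X": (288, 8.0)}
for model_params in (8e9, 70e9):
    for name, (mem_gb, bw) in gpus.items():
        bound = max_decode_tokens_per_s(model_params, 1, mem_gb, bw)
        label = "does not fit" if bound is None else f"<= {bound:,.0f} tokens/s"
        print(f"{model_params / 1e9:.0f}B on {name}: {label}")
```

Memory size decides whether the model runs at all, memory bandwidth then caps how fast it can run, and compute only becomes the limit once many requests are batched together.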

However, there’s a fourth factor, one that has stalled AMD’s growth for years, might finally be solved, and is the main reason behind my investment. More on this later.

But before we go into the side-by-side comparison between the two giants, one last thing to note. We need to recall what we mentioned earlier: the transition from training to inference and how that affects GPU workloads.

Inference as a fraction of total compute is increasing fast. This is very relevant because training and inference are almost opposite workload types:

In training, the more GPUs per model, the better.

In inference, the fewer GPUs per model, the better.

To understand why this is the case, I provide the full details in Notion article {🐘 The Elephant in the Room}.

Knowing this, we can finally start the discussion with AMD’s most prominent rival, NVIDIA.

Side-by-Side Comparison

First, let’s compare the hardware. In summary, they are pretty similar except for the networking topology. It sounds complicated, but it isn’t; bear with me.

Theoretical Numbers

To understand the numbers we are about to see, we need to know that a GPU’s raw compute power, or how many operations per second it can perform, depends on the precision of the computations. Roughly speaking, precision determines how many digits each number can carry. For instance, a high precision allows numbers such as 3.493948292, while a lower precision will round that number to about 3.494.

Lower precisions imply some accuracy loss but are computed faster. Thus, we have different precision categories, ranging from low precision (FP4/INT4) to high precision (more digits), such as FP64.

The number refers to the number of bits per parameter; FP64 means 64 bits per parameter, or 8 bytes, while FP4 refers to 4 bits per parameter, or half a byte.
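NumPy can show both effects, rounding and bytes per value, with the text’s own example number. A short sketch; NumPy has no built-in FP8 or FP4 types, so it stops at FP16.

```python
import numpy as np

x = 3.493948292  # the example value from the text (a 64-bit Python float)

for dtype in (np.float64, np.float32, np.float16):
    stored = dtype(x)                  # round x to this precision
    bits = np.finfo(dtype).bits        # bits per value
    print(f"{np.dtype(dtype).name:>8}: {bits:2d} bits = {bits // 8} bytes, "
          f"stored as {float(stored):.10f}, error {abs(float(stored) - x):.1e}")
```

At FP16 the value is stored as exactly 3.494140625, which displays as 3.494, and each value takes a quarter of the memory of FP64.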

Here, the thing to acknowledge is that GPUs process two main types of workloads with varying precision requirements:

HPC (High Performance Computing), used for physics and other very compute-intensive simulations, usually at maximum precision (FP64)

AI, which started at FP32 but is steadily decreasing precision. Currently, most AI models still run at FP16/BF16 precision (2 bytes per parameter), but more recent models like DeepSeek v3 are stored in FP8, and, importantly, many models run at even lower precisions during inference. The takeaway is that AI is slowly but steadily tending toward smaller precisions.

Now, talking about the two protagonists, AMD and NVIDIA, focusing on their most advanced respective GPUs, Blackwell and MI355, the raw performance is very similar:

AMD does not offer support at FP4, the lowest precision, while NVIDIA does.

At FP8, AMD MI355 provides ~4.6 PFLOPs, and NVIDIA Blackwell ~4.5 PFLOPs, very similar results.

A PFLOP/s (petaFLOP per second) is one thousand trillion, or 1×10¹⁵, operations per second.

At FP64, AMD theoretically leads again, offering ~81 TFLOPS FP64 (vector) and up to ~160 TFLOPS on matrix cores, roughly double to more than triple NVIDIA’s ~45 TFLOPS FP64 (Blackwell), due to NVIDIA's prioritization of lower-precision formats.

Regarding the third point, beyond AI workloads, this bet on higher precision makes AMD’s GPUs the best option for HPC use cases, which explains why the most powerful high-precision supercomputers in the world run on AMD GPUs. The most powerful low-precision AI supercomputer is xAI’s Colossus, the data center where Grok 3 was trained, in Memphis, Tennessee, built on 200,000 NVIDIA H100 and H200 GPUs.

In short, NVIDIA has an advantage at lower precisions, while AMD takes the win in higher precisions.

If we look at memory bandwidth, the equilibrium persists, with both having around 8 TB/s of memory bandwidth per GPU. However, regarding memory per chip, AMD’s GPUs have 50% more HBM memory, 288 GB vs 192 GB.

In a fair-and-square comparison, they offer almost identical performance. But then, why is NVIDIA so far ahead? The answer is that NVIDIA’s moat was never about hardware, but about software and networking.

Let’s learn why this is the case.

Software is AMD’s greatest problem.

Let’s cut to the chase. The most significant setback for AMD has been their software stack. Powerful hardware is of little use if the software that enables the use of that hardware is terrible.

Most of AMD’s software platform is built on NVIDIA forks (copies of NVIDIA code that are then repurposed for AMD hardware). Not a great signal.

Recent research from the University of Maryland points to software deficiencies as the most significant cause of AMD's suboptimal performance. Jack Clark, Anthropic co-founder, uses this research to argue that AMD’s software must improve to challenge NVIDIA’s dominance (as a co-founder of one of NVIDIA/AMD’s largest customers, his opinion matters a lot).

But the issues go much deeper. While NVIDIA’s software stack lets you run state-of-the-art training and inference techniques like FlashAttention out of the box (with little to no developer effort), AMD is the exact opposite: a real nightmare.

FlashAttention is a technique that reduces memory requirements by breaking matrix multiplications into smaller chunks, avoiding materializing the huge matrices in memory. It’s table stakes in AI training today.
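The core trick behind FlashAttention can be sketched in NumPy: process keys and values block by block while keeping a running (“online”) softmax, so the full sequence × sequence score matrix is never materialized. This is only a sketch of the math under simplifying assumptions (a single head, no masking, keys tiled but not queries); the real GPU kernels also tile the queries and keep each tile in fast on-chip SRAM, which is where the speedup comes from.

```python
import numpy as np

def naive_attention(q, k, v):
    """Reference attention: builds the full (n x n) score matrix in memory."""
    scores = q @ k.T / np.sqrt(q.shape[-1])
    probs = np.exp(scores - scores.max(axis=-1, keepdims=True))
    return (probs / probs.sum(axis=-1, keepdims=True)) @ v

def tiled_attention(q, k, v, block=16):
    """Same result, but only an (n x block) tile of scores exists at any time."""
    n, d = q.shape
    scale = 1.0 / np.sqrt(d)
    out = np.zeros((n, v.shape[1]))
    row_max = np.full((n, 1), -np.inf)  # running max of scores per query
    row_sum = np.zeros((n, 1))          # running softmax denominator per query
    for start in range(0, k.shape[0], block):
        k_blk, v_blk = k[start:start + block], v[start:start + block]
        scores = q @ k_blk.T * scale
        new_max = np.maximum(row_max, scores.max(axis=-1, keepdims=True))
        correction = np.exp(row_max - new_max)  # rescales results from earlier blocks
        probs = np.exp(scores - new_max)
        row_sum = row_sum * correction + probs.sum(axis=-1, keepdims=True)
        out = out * correction + probs @ v_blk
        row_max = new_max
    return out / row_sum

rng = np.random.default_rng(0)
q, k, v = (rng.standard_normal((128, 64)) for _ in range(3))
print(np.allclose(naive_attention(q, k, v), tiled_attention(q, k, v)))
```

Both functions return the same output; the tiled version simply never holds more than one block of scores at a time, which is what lets real kernels keep the working set in the fastest memory.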

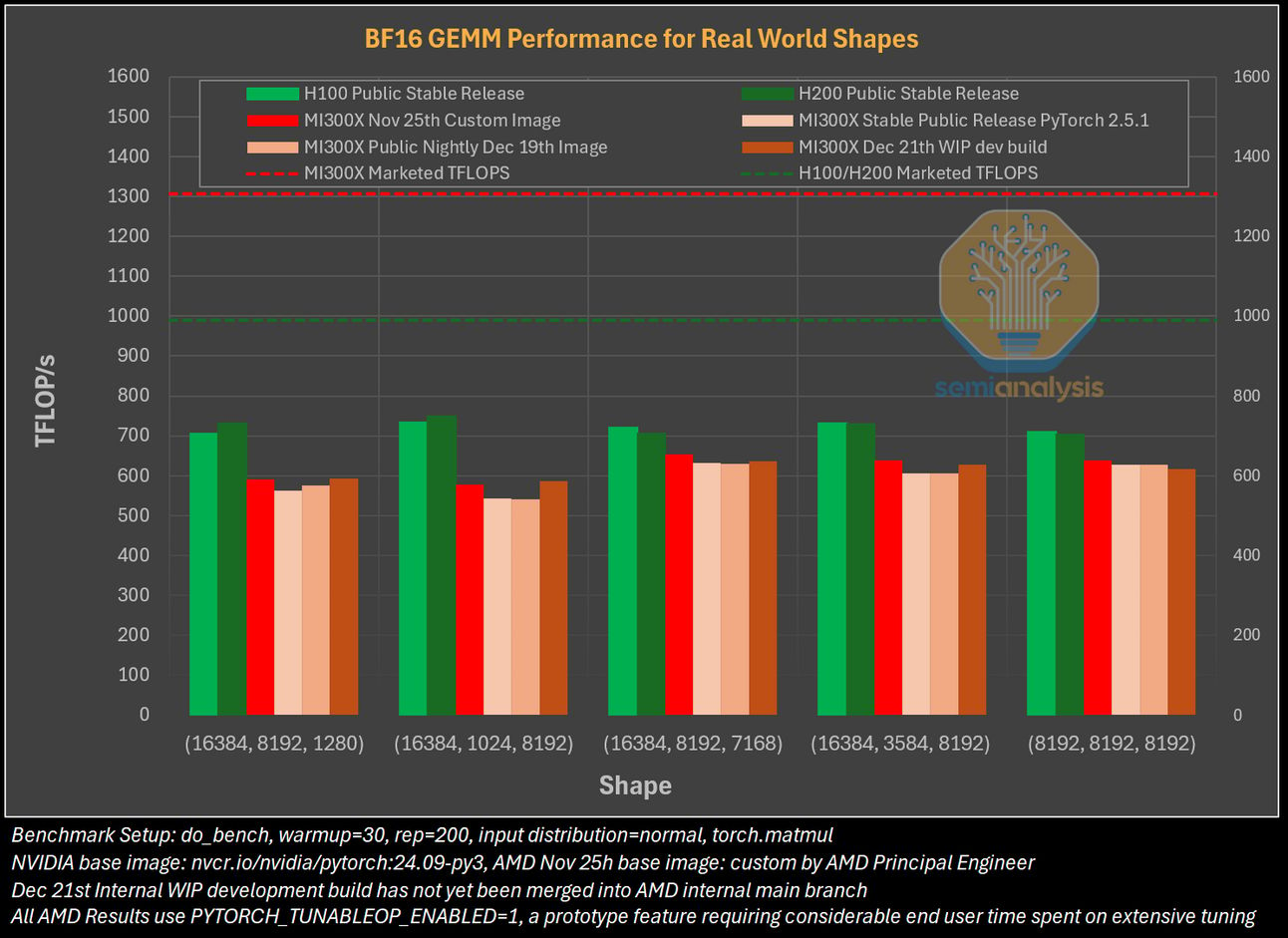

Moreover, when the semiconductor and AI analysis firm SemiAnalysis ran tests comparing AMD and NVIDIA GPUs with similar theoretical specs, the AMD GPUs badly underperformed despite the hardware similarities.

And the gap would have been much, much larger if an AMD Principal Engineer hadn’t prepared a fully customized workload to maximize AMD throughput, something most customers don’t have the capacity (or knowledge) to do.

How many operations per second each GPU produced (brown is the customized AMD workload). The differences are striking, considering that, on paper, AMD’s GPUs are more powerful.

As a stark illustration of the two companies’ approaches to their software stacks, FlexAttention, a new PyTorch API that has become the bread and butter of AI labs because it makes it easy to implement many attention variants, has been natively available for NVIDIA GPUs since August 2024.

But as you read these words, this implementation is NOT available for AMD GPUs and isn’t expected before Q2 2025.

Unforgivable!
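What makes FlexAttention so versatile is that many attention variants boil down to modifying the attention scores before the softmax. PyTorch exposes this through a user-supplied `score_mod(score, b, h, q_idx, kv_idx)` function; the NumPy sketch below only mimics that idea for a single head (dropping the batch and head indices) and is not the PyTorch API itself. The 0.1 slope of the distance penalty is a hypothetical value.

```python
import numpy as np

def attention_with_score_mod(q, k, v, score_mod):
    """Single-head attention where `score_mod` rewrites the raw scores before softmax."""
    scores = q @ k.T / np.sqrt(q.shape[-1])
    q_idx = np.arange(q.shape[0])[:, None]   # query positions, as a column
    kv_idx = np.arange(k.shape[0])[None, :]  # key/value positions, as a row
    scores = score_mod(scores, q_idx, kv_idx)
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    probs = np.exp(scores)
    probs /= probs.sum(axis=-1, keepdims=True)
    return probs @ v

# Three attention variants, each a one-line score modification.
def full(score, q_idx, kv_idx):
    return score

def causal(score, q_idx, kv_idx):
    return np.where(q_idx >= kv_idx, score, -np.inf)  # no attending to future tokens

def distance_penalty(score, q_idx, kv_idx):
    return score - 0.1 * np.abs(q_idx - kv_idx)       # ALiBi-style relative bias

rng = np.random.default_rng(0)
q, k, v = (rng.standard_normal((6, 4)) for _ in range(3))
for mod in (full, causal, distance_penalty):
    print(mod.__name__, attention_with_score_mod(q, k, v, mod).shape)
```

Each variant is a few characters of user code; the hard part, fusing that code into a fast GPU kernel, is what the vendor’s software stack has to provide.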

See where this is going? AMD’s hardware is powerful but a real pain to use, while NVIDIA offers the same quality of hardware with a great developer experience. Right now, it’s a no-brainer.

And what about networking? How do both compare when pairing several GPUs for a workload, which is the standard approach these days?

A More Mature Topology

A sad truth in AI is that, currently, most frontier AI models cannot fit on a single GPU: their weights plus the KV cache exceed the total HBM capacity of one GPU.

As a reference:

DeepSeek v3 requires more than eight 80 GB NVIDIA H100s (or four 192 GB AMD MI300Xs), as it needs 671 GB of memory just to store the model.

Some OpenAI models need upward of 32 GPUs.

Llama 3.1 405B needs more than 10 H100s at 16-bit precision.

And even Command A, a SOTA-ish model, still needs at least 2 GPUs with currently available hardware (with a Blackwell B200 or AMD MI355, this model will fit on a single GPU).
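These counts follow from simple arithmetic: weight bytes = parameters × bytes per parameter, divided by memory per GPU. A rough sketch, assuming weights only (the KV cache needs extra room on top), the precisions noted in each row, and about 111B parameters for Command A (our assumption; the article does not state its size).

```python
import math

BYTES_PER_PARAM = {"FP16/BF16": 2, "FP8": 1}

def gpus_needed(params: float, precision: str, gpu_mem_gb: float) -> int:
    """Minimum GPUs needed just to hold the weights (KV cache and activations ignored)."""
    weights_gb = params * BYTES_PER_PARAM[precision] / 1e9
    return math.ceil(weights_gb / gpu_mem_gb)

# (model, parameters, precision); Command A's ~111B size is an assumption of this sketch.
models = [
    ("DeepSeek v3", 671e9, "FP8"),
    ("Llama 3.1 405B", 405e9, "FP16/BF16"),
    ("Command A", 111e9, "FP8"),
]
gpus = {"H100 (80 GB)": 80, "B200 (192 GB)": 192, "MI355X (288 GB)": 288}

for name, params, precision in models:
    counts = ", ".join(f"{gpu}: {gpus_needed(params, precision, mem)}" for gpu, mem in gpus.items())
    print(f"{name} [{precision}] -> {counts}")
```

The results line up with the list above (9 H100s for DeepSeek v3, 11 for Llama 3.1 405B at 16-bit, 2 for Command A) and show Command A fitting on a single B200 or MI355X at FP8.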

In a nutshell, this means the model must be broken up into pieces to be served. There are different ways to break them (we discussed this extensively in our DeepSeek deep dive), but the point is that GPUs storing the model must communicate continuously during the process (the inputs to a GPU may be the outputs of another, for instance).

This introduces the idea of GPU-to-GPU communication, which is done via high-speed cables (NVLink in NVIDIA's case, Infinity Fabric in AMD). However, NVIDIA has an advantage here because of its network topology.

NVIDIA connects the GPUs in a node through NVSwitch chips, an all-to-all switched fabric, so any GPU can reach any other in a single hop at full NVLink bandwidth.

AMD connects its GPUs point-to-point over Infinity Fabric links, so each GPU’s bandwidth is split across its links to the other GPUs, and any given pair can only communicate at a fraction of the total, slowing down large transfers.

For a more detailed explanation of network topologies, read Notion article {🤔 Comparing network topologies}.

To summarize, while AMD’s GPU specs are theoretically superior,

its software stack prevents it from capitalizing on that advantage,

and for large-scale deployments with many GPUs, like heavily distributed AI training, it scales worse than NVIDIA due to slower communication speeds.

So far, the scales tip in NVIDIA’s favor. However, a very recent event has opened a unique window of opportunity for AMD and convinced me to trust the company to the point of investing in it.

George Hotz and the Inference Transition

A few days ago, George Hotz reached a partnership with AMD to rewrite its entire software stack, from the bare metal up to PyTorch (the Meta-developed deep learning library used by most researchers). Hotz is one of the most famous savant programmers/hackers in the world and the founder of TinyCorp, a team of expert programmers and engineers creating open software to democratize access to compute, which has also built what’s possibly the most efficient AI hardware in the world, the tinybox.

This company already single-handedly built an almost sovereign software stack for AMD without having access to AMD GPUs. Insane. With this partnership, they now have full access.

In layman’s terms, they are about to fix AMD’s software problems for good. You’ve seen the hardware throughput numbers, so you clearly understand what will happen if the AMD software stack is fixed, right?

This could easily be the moment AMD’s revenues explode.

As George himself puts it, with the correct stack, the MI300X AMD GPU should outperform NVIDIA’s H100 (AMD’s response to Blackwell is the MI355X, not yet available).

If that’s the case, picture this:

AMD, valued 16 times lower than NVIDIA on public markets, will offer a cheaper product than NVIDIA (AMD already runs on much thinner margins, while NVIDIA's gross margin is one of the key drivers of its valuation).

Its GPUs will not only be as performant but also have more memory per chip.

Developing AMD’s software as open source will considerably lower the barrier to entry for non-NVIDIA hardware; NVIDIA’s CUDA software, ubiquitous in labs and universities alike, has created considerable barriers to adoption for competitors.

In that world, the 16-fold valuation difference makes no sense: either NVIDIA is extremely overvalued, or AMD is considerably undervalued. Personally, I’m in George’s camp and believe that NVIDIA is fully valued (it currently trades at a forward P/E below most Big Tech companies), meaning the upside potential for AMD is massive.

It’s also important to note I’m betting that GPU demand is only getting started, and that we are still miles away from meeting the expected compute demands of the following years.

This could very well be make-believe, but I trust adoption will arrive because the technology is legit (and also because noteworthy NVIDIA/AMD customers like Google expect compute demand to explode in the coming years; Google has even formally asked the US Government for more investment in infrastructure).

Moreover, Hotz, the guy AMD is relying on to save the day, bought a quarter of a million dollars’ worth of AMD shares to support his arguments (he is walking the talk).

And Dylan Patel, the famous analyst behind the research comparing AMD and NVIDIA we mentioned earlier, has also shown great optimism toward AMD based on recent software announcements.

All things considered, my thesis is that, as AMD and NVIDIA are almost on par in terms of GPU hardware, an improvement in software should help narrow the gap between both considerably.

But wait, while this is true, AMD is also unequivocally worse on a scaling basis (as the number of GPUs to run the workload grows), due to the limitation of their network topology.

Shouldn’t that protect NVIDIA’s market share, considering that AI workloads continue to grow?

Factoring in workload type.

The answer is that it depends on the workload. For training, the more GPUs, the better; here, where communication speeds matter most, NVIDIA’s superiority is guaranteed until AMD deploys a faster communication topology/hardware.

But here’s the thing.

At the beginning of this story, we mentioned that we are witnessing a shift toward inference in the total compute balance. Later, we also saw the importance of ‘keeping it small’ with inference clusters, because communication overhead grows with the number of GPUs that must talk to each other.

This means that while AMD is behind in networking, the industry is moving to workloads emphasizing single-node or even single-GPU performance, where AMD shines.

But why is this the case?

Networking, even NVIDIA’s, introduces communication overhead, and no overhead is still better than a little overhead. Single-GPU models like Gemma 3, which eliminate communication costs entirely, will become increasingly common. Furthermore, they are not only faster but also easier to scale (you simply add more independent copies).

Additionally, reasoning models can be made smaller because, as Hugging Face showed recently, smaller models can close the intelligence gap with larger ones by running longer inferences.

With that transition being pretty clear, AMD gains prominence: the smaller the cluster, the less NVIDIA’s dominance in communication speeds matters, and the more per-GPU memory capacity and memory bandwidth matter (in the former, AMD has the advantage; in the latter, they are basically equal).

In summary, if its software improves, AMD has everything it needs for demand to explode (equal hardware and software, at lower prices).

But can’t NVIDIA cut prices and increase per-GPU memory (which raises its costs)?
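A toy cost model makes the “fewer GPUs is better” argument concrete. Everything here is a labeled assumption: the 20 ms of work per token and the 1.5 ms of communication overhead per extra GPU are made-up numbers, and real overheads depend on the parallelism scheme and the interconnect. The point is the shape of the curve, not the values.

```python
def gpu_ms_per_token(n_gpus: int, work_ms: float = 20.0, comm_ms_per_peer: float = 1.5) -> float:
    """Toy model: GPU-milliseconds spent per generated token when a model is split across n GPUs.

    work_ms: hypothetical time one GPU would need alone (split evenly across GPUs).
    comm_ms_per_peer: hypothetical synchronization cost added per additional GPU.
    All n GPUs are occupied for the whole step, so total cost = n * step latency.
    """
    step_latency = work_ms / n_gpus + comm_ms_per_peer * (n_gpus - 1)
    return n_gpus * step_latency

for n in (1, 2, 4, 8):
    print(f"{n} GPU(s): {gpu_ms_per_token(n):6.1f} GPU-ms per token")
```

Splitting the model cuts per-token latency at first, but the total GPU time paid per token (a proxy for serving cost) only rises as GPUs are added, which is why a model that fits on one GPU is the cheapest to serve.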
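One simple way to trade extra inference compute for accuracy is to sample several answers and take a majority vote (often called self-consistency). This is not necessarily the method Hugging Face used; the sketch below also makes the unrealistic assumptions that samples are independent and that each answer is simply right or wrong, just to show how accuracy can grow as more tokens are spent.

```python
from math import comb

def majority_vote_accuracy(p: float, k: int) -> float:
    """Probability that more than half of k independent samples are correct,
    when each sample is correct with probability p (k must be odd to avoid ties)."""
    if k % 2 == 0:
        raise ValueError("use an odd number of samples to avoid ties")
    return sum(comb(k, i) * p**i * (1 - p) ** (k - i) for i in range(k // 2 + 1, k + 1))

# Hypothetical small model that is right 60% of the time on a single attempt.
for k in (1, 5, 15, 41):
    print(f"{k:>2} samples: {majority_vote_accuracy(0.6, k):.1%} accuracy")
```

Each extra sample costs another full generation, so the accuracy gain is paid for entirely in inference compute, exactly the kind of workload that favors cheap, memory-rich single GPUs.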

It certainly can, but let’s not forget that one of the metrics sustaining NVIDIA’s valuation is its huge gross margins (upward of 70%), and the company was already heavily scrutinized in last week’s earnings call for gross margins dropping from 75%. Thus, if NVIDIA did both, you would be looking at a considerable squeeze, well under 70% gross margins, which I’m not sure NVIDIA is willing to accept.

Furthermore, I don’t think their shareholders are looking forward to that scenario right now (or expect it). On the other hand, AMD’s shareholders will happily accept lower gross margins if that means an explosion in top-line revenue.
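Why both moves hurt at once is easy to see with the gross-margin formula. The numbers below are hypothetical and normalized (not NVIDIA’s actual prices or costs); they only show how a modest price cut combined with a higher bill of materials pushes a ~75% margin well below 70%.

```python
def gross_margin(price: float, unit_cost: float) -> float:
    """Gross margin = (price - cost of goods sold) / price."""
    return (price - unit_cost) / price

# Hypothetical, normalized numbers: a GPU sold at 100 that costs 25 to make.
baseline = gross_margin(100, 25)
# Cut the price by 10% and add 5 of extra HBM cost per GPU (both hypothetical).
squeezed = gross_margin(90, 30)

print(f"baseline: {baseline:.1%}, after price cut + more HBM: {squeezed:.1%}")
```

A 10% price cut plus a few points of extra memory cost takes this hypothetical margin from 75% to about 67%, because the cut comes straight out of profit while the cost increase stacks on top.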

That said, not everything is champagne and cocaine for AMD.

Disruptors and the Bearish Case

While AMD may have an advantage over NVIDIA in inference (NVIDIA might soon erase it, but the point is that the valuation gap is unjustified regardless, which is the whole premise of my investment thesis), both are behind Cerebras and Groq, specs-wise, for inference workloads.

But is that enough for both to be eaten alive by the disruptors? No.

The biggest issue with these up-and-comers is that they have gained their inference hardware advantage over NVIDIA and AMD, with much faster per-sequence speeds, at the expense of a much worse Total Cost of Ownership (TCO).

Let me explain.

Again, It’s All A Memory Game

As we have seen today, GPUs use a memory hierarchy. This allows each GPU to have large amounts of high-bandwidth memory, but it comes at the expense of processor idle time (time that the processors aren’t processing).

What makes these disruptors’ value proposition special is that Groq, with its LPUs (Language Processing Units), and Cerebras, with its wafer-scale hardware, eliminate the memory hierarchy, so all memory in their clusters is ‘on-chip’ (memory that sits right next to the compute), offering almost instant read/write access (it’s also mostly SRAM, the fastest type available).

The issue with these companies is that the TCO doesn’t quite add up. In some cases, the initial investment may be up to 18 times higher than going the GPU route, while offering less versatility.

For a more detailed TCO comparison between GPU and non-GPU providers, read Notion article {💶 Disruptors and TCO}.

Therefore, unless the use case demands extreme speed, the business case clearly favors GPUs, at least until these companies find ways to decrease capital costs (currently the main driver of AI hardware TCO).
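One driver of that gap is sheer chip count: SRAM is fast but small, so holding a model entirely on-chip takes many chips. A rough weights-only sketch, assuming publicly stated on-chip SRAM capacities of about 230 MB per Groq LPU and about 44 GB per Cerebras WSE-3, versus 288 GB of HBM on one MI355X, for a hypothetical 70B-parameter model at 16-bit precision (140 GB of weights). It ignores the KV cache, activations and replication, so real deployments need more.

```python
import math

GB = 10**9
MODEL_BYTES = 70 * 2 * GB  # hypothetical 70B-parameter model at 2 bytes per parameter

# Approximate per-chip memory that can hold the weights (assumed figures, see text).
memory_per_chip = {
    "Groq LPU (SRAM)": 230 * 10**6,
    "Cerebras WSE-3 (SRAM)": 44 * GB,
    "AMD MI355X (HBM)": 288 * GB,
}

for chip, capacity in memory_per_chip.items():
    print(f"{chip:>22}: {math.ceil(MODEL_BYTES / capacity):>4} chip(s) to hold the weights")
```

Hundreds of LPUs, or several wafer-scale systems, versus a single GPU: that difference in hardware footprint is a large part of why the capital cost side of the TCO comparison looks so different.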

Ok, and what about the bearish case for AMD? There must be something that could go wrong, right?

The ‘What Ifs’

Every investment entails risk. My biggest concern is that my entire investment thesis is based on the idea that TinyCorp and AMD’s partnership delivers an excellent software stack for AI practitioners to train and run AI models on AMD hardware.

Another limitation that could hinder AMD’s growth is being supply-constrained: even if demand arrives quickly, AMD may struggle to produce enough GPUs to meet it.

NVIDIA has been supply-constrained for years, and AMD, with much less negotiating power than NVIDIA while sharing key suppliers like TSMC and the HBM makers (which are even more constrained), could have trouble meeting exploding demand.

Furthermore, with Blackwell, NVIDIA is closing the gap on AMD's hardware advantage in inference.

The B300, the next generation of Blackwell GPUs and most likely to be announced tomorrow by Jensen Huang, is rumored to have the same memory per GPU as the MI355X.

If that’s the case, and assuming AMD delivers on software, we would be witnessing what’s basically the commoditization of the GPU (albeit between only two players).

While AMD has more justification for lowering prices as the challenger, NVIDIA has much more room than them to squeeze margins. This could quickly become a race to the bottom, so as an investor in both (AMD directly and NVIDIA through S&P500 index funds), I hope they both raise prices instead (and I’m pretty sure they will if demand continues to be limitless).

However, I don’t think they’ll be able to raise prices enough to offset the increase in HBM costs per GPU (~$110/GB, thousands of dollars per GPU), which makes me believe that both will soon see their gross margins decrease further as they are pressured to add more HBM.

Unless model compression speeds up (models getting smaller faster), AMD and NVIDIA could soon find themselves in a very tough spot, especially AMD, which has lower gross margins.

Finally, we must acknowledge that both depend on TSMC to fabricate their chips. If a China-Taiwan war occurs, TSMC's manufacturing of advanced nodes could drop to basically zero, which translates to NVIDIA/AMD’s supply dropping to zero, too (interestingly, Groq doesn’t have that geopolitical concern as their chips are built in the US).

Closing Thoughts

If you’ve reached these lines, I hope you appreciate the huge effort I’ve put into assembling this analysis of AMD.

In summary:

AMD is hugely competitive on the hardware level with NVIDIA, but its software stack sucks. Luckily, recent partnerships with TinyCorp and SemiAnalysis could awaken the sleeping giant.

Model compression (thanks to reasoning models) and the balance of compute shifting toward inference will be great demand boosters for AMD.

AMD still has the advantage in HPC workloads, which should grow in importance as AI accelerates scientific discovery.

Disruptors (Groq, Cerebras) have a much bigger role to play in an inference-focused world, but their TCO could hold them back against GPUs in a moment when clients are being pressured to control CAPEX.

Thanks for reading, and see you again soon!

THEWHITEBOX

Premium

If you like this content, you are going to love the Premium subscription.

By joining, you will receive four times as much content weekly without saturating your inbox. You can even ask the questions you need answered for a more personalized experience.

It’s also my main source of income, so even the slightest gesture is welcome so that I can continue providing you with this content.

For business inquiries, reach out to me at [email protected]