THEWHITEBOX

TLDR;

Welcome back! In this issue, we cover AI’s leap into genuine scientific discovery, with GPT-5.2 Pro solving historic math problems and Stanford’s SleepFM predicting diseases from brain waves.

We also track the massive flow of capital into hardware and infrastructure, highlighted by TSMC’s 35% profit surge and Anthropic’s $350 billion target valuation. Finally, we analyze Google’s aggressive "Gemini everywhere" strategy, including its deep integration into Gmail and a landmark deal to power Apple’s Siri, among many other relevant news items you can’t miss.

This is a long but very interesting one. Enjoy!

HEALTHCARE

Using Sleep to Identify Illnesses

Researchers from Stanford University have published a study in Nature Medicine describing SleepFM, a multimodal foundation model trained on polysomnography (PSG) data.

The model was developed using 585,000 hours of sleep recordings from approximately 65,000 participants across multiple cohorts. PSG captures brain waves (EEG), heart activity (ECG), breathing, muscle tone, eye movements, and other signals during a full night's sleep.

On standard sleep tasks (e.g., sleep staging and apnea detection), it performs competitively with or better than specialized models, and when linked to participants' electronic health records (with follow-up up to 25 years and predictions made only for future diagnoses), SleepFM estimated risk for over 130 diseases.

These predictions outperform models based only on demographics (age, sex, etc.) for many outcomes. The authors note that PSG populations differ from the general public, and the model identifies associations rather than causation. It remains a research tool, with potential future adaptation to simpler devices, such as wearables.

TheWhiteBox’s takeaway:

This work is very interesting because it reminds us, once again, that not everything has to be about Large Language Model (LLM) chatbots and that simple applications of AI can already offer substantial benefits to society.

Interestingly, the underlying mechanism is similar to that of LLMs, as SleepFM also relies on the attention mechanism to process and find patterns in data. Just like ChatGPT uses attention to capture meaning in words and sentences, SleepFM uses it to capture hidden patterns in sensory data.
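
To make the attention idea concrete, here is a minimal sketch of scaled dot-product self-attention over a toy sequence of "signal windows". This is not SleepFM's actual architecture (all we know from the above is that it relies on attention); the function names, the toy vectors, and the omission of learned projection matrices are simplifying assumptions for illustration only.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Scaled dot-product self-attention without learned projections.

    Each vector acts as its own query, key, and value (a simplification;
    real models learn separate projection matrices for each role).
    """
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Similarity of this position to every position in the sequence.
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
                  for key in vectors]
        weights = softmax(scores)
        # The output is a weighted average of all value vectors.
        mixed = [sum(w * v[i] for w, v in zip(weights, vectors))
                 for i in range(dim)]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Toy "signal windows": positions 0 and 2 are similar, position 1 is not.
windows = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1]]
outputs, weights = self_attention(windows)
```

In this toy case, the first window attends more strongly to the third (similar) window than to the second (dissimilar) one, which is the "pattern finding" intuition in miniature.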

Also, it reminds us that one of the best opportunities in AI is pattern-matching across vast amounts of data and modalities, which is particularly valuable for healthcare use cases. While we don’t know if we will build a machine as smart as a human, this pattern-matching ability is already life-saving and worth investing in.

THEWHITEBOX

Google Launches UCP

Google has announced the release of the Universal Commerce Protocol (UCP), an open standard meant to give “agentic commerce” a common language, so AI agents and commerce systems can work together across the full shopping flow (discovery, buying, and post-purchase support) without every retailer needing a custom integration for each agent.

It has been developed by Google in partnership with prominent retail companies such as Walmart, Shopify, Etsy, Target, and a long tail of others, ensuring immediate integration.

Moreover, they have released Business Agent, a shopper-facing conversational layer that Google is adding to Search: shoppers can chat with a brand in its voice, like a virtual associate. This way, besides enabling communication between the user’s AI and the different e-commerce platforms, users can now also “talk to the retailer”, similar to how you can talk to Rufus on Amazon’s platform.

TheWhiteBox’s takeaway:

I’ve always seen AI-led shopping as inevitable. And while it still feels that way, OpenAI’s similar shopping feature changed absolutely zero things about my buying workflow. I’ve used it literally once.

I still believe this is a matter of time. Many investors expect immediate adoption, but if we’ve learned anything from the past three years in AI, it’s that some things take time.

This can be best summarised by OpenAI’s own experience: less than 10% of its users have tried (yes, tried) reasoning models. And when they rolled them out to free users to incentivize usage, thinking it would boost adoption, they had to roll them back because people hated them for being “too slow.”

If this sounds shocking to you, let this be a reminder that, in AI, the greatest gap isn’t economic; it’s a gap in human skill and agency.

AI empowers humans, but only those who want to be empowered.

DISCOVERY

Is GPT-5.2 Pro Actually Good at Mathematics?

In our previous Leaders post, tackling my 2026 predictions, I also covered my 2025 predictions, including one that was correct: I explained how 2025 had yielded some of AI’s first real discoveries.

And now, this seems to be accelerating, as GPT-5.2 Pro has been particularly successful in tackling what are known as Erdős problems.

These are a collection of over 1,500 challenging math questions posed by Paul Erdős, a famous Hungarian mathematician who lived from 1913 to 1996. He offered cash prizes for solving them, and many remain unsolved because they require creative thinking rather than just calculations.

GPT-5.2 Pro has solved several of these, often working alone or with minimal human guidance, and the solutions have been checked and accepted by top experts like Terence Tao, a renowned mathematician considered by many to be the smartest human alive.

Here's a breakdown of some of the standout discoveries, explained step by step without getting too technical:

Erdős Problem #729 (Posed in 1968): This puzzle is about how numbers multiply together in a special way using something called factorials—basically, the result of multiplying a number by all the smaller whole numbers before it (like 5! = 5 × 4 × 3 × 2 × 1 = 120). The question asks for the smallest number where certain divisions work out neatly, with a twist involving logarithms to account for growth rates. GPT-5.2 Pro came up with a complete solution on its own, modifying older ideas in a fresh way. Experts are still double-checking it against existing books and papers, but early signs show it's a genuine new answer.

Erdős Problem #728: This one deals with sets of whole numbers and patterns called arithmetic progressions—think of sequences like 2, 4, 6, where each step adds the same amount. The problem explores conditions under which these sets avoid certain long patterns while remaining "dense" (meaning they include many numbers). GPT-5.2 Pro solved it mostly by itself, with some back-and-forth prompting from users, and even turned parts of the explanation into a format that computers can verify automatically. Terence Tao confirmed this as a milestone, noting the solution wasn't copied from anywhere else.

Erdős Problem #397: Similar to the others, this puzzle involves finding patterns or limits in numbers that grow in specific ways. GPT-5.2 Pro generated a “proof” (i.e., a step-by-step logical explanation showing why the answer is correct), and it was formalized (made super-precise) using another tool called Harmonic. This proof was submitted and accepted by Terence Tao.

Erdős Problem #379: This is another in the series, focusing on divisibility rules in large numbers. GPT-5.2 Pro cracked it quickly, and again, Terence Tao gave it the thumbs up. Posts from users like Dr. Singularity emphasize that this shows AI solving things humans couldn't, paving the way for faster progress in other fields.

All these discoveries happened within just a few days, over the last week or so.

Beyond the Erdős problems, GPT-5.2 Pro has made other notable contributions.

TheWhiteBox’s takeaway:

It’s hard not to feel excited by some of these discoveries; they truly feel like something is changing.

However, people often forget that these achievements are also to the user’s credit: someone typed just the right prompt for the right task. You won’t see this type of discovery coming from laypeople, even when you read claims that GPT-5.2 required minimal or zero help.

What I mean by this is that humans are still required and, more importantly, humans still require expertise to achieve these results. AIs augment humans, but only those that know what needs to be augmented.

Would I, a non-mathematician, solve an Erdős problem with GPT-5.2 Pro? Extremely unlikely unless I went deep into the problem for weeks or months.

This is why I insisted earlier that the biggest “gap” I’m seeing has nothing to do with AI and a lot to do with us. The clear separation is between builders and those who lack the bias for action to “do stuff.”

WORLD MODELS

1X’s “Weird” World Model

1X, a humanoid robotics company, has released its first-ever ‘world model’, the 1XVM. This is a very interesting AI model because it’s “different” from how we normally approach controlling a humanoid’s actions.

The process works by sending textual or voice instructions to the robot. The robot then processes the prompt and, using what it sees through its cameras, generates one or more video rollouts that “imagine” how the action would be executed.

Next, the robot “reverse engineers” the actions it needs to perform based on the generated video, by predicting the actions it needs to take to go from one frame to the next.
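
The two-stage loop described above (imagine a rollout, then recover the actions between consecutive frames, a step often called inverse dynamics) can be sketched with a toy example. Everything here is hypothetical: "frames" are just 2D positions, the "video model" is a linear interpolation, and the function names are made up for illustration. 1X's real system works on camera video with learned models.

```python
def imagine_rollout(start, goal, steps):
    """Stand-in for the video model: 'imagines' evenly spaced frames
    from the current observation to the goal (hypothetical toy logic)."""
    return [
        tuple(s + (g - s) * t / steps for s, g in zip(start, goal))
        for t in range(steps + 1)
    ]

def inverse_dynamics(frame, next_frame):
    """Stand-in for the 'reverse engineering' step: infer the action that
    moves the system from one frame to the next (here, a displacement)."""
    return tuple(n - f for f, n in zip(frame, next_frame))

def plan_actions(start, goal, steps=4):
    """Imagine first, then recover one action per pair of adjacent frames."""
    frames = imagine_rollout(start, goal, steps)
    return [inverse_dynamics(a, b) for a, b in zip(frames, frames[1:])]

def execute(start, actions):
    """Apply the recovered actions to check they reproduce the rollout."""
    position = start
    for action in actions:
        position = tuple(p + a for p, a in zip(position, action))
    return position

actions = plan_actions(start=(0.0, 0.0), goal=(2.0, 1.0))
final = execute((0.0, 0.0), actions)
```

The design point the sketch tries to capture is that the action predictor never sees the instruction directly; it only has to explain the difference between two frames, which is a narrower problem than choosing actions from text and video in one shot.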

On data and training, 1X says the backbone is a 14B generative video model, adapted via mid-training on 900 hours of egocentric human video and fine-tuning on 70 hours of NEO robot logs, with “caption upsampling” to improve prompt adherence.

TheWhiteBox’s takeaway:

This is a very interesting release because it’s a genuinely unique way of building a world model. Most world models directly predict actions from text and video inputs, meaning the model chooses the actions to execute at runtime.

Here, the system first “imagines” the action and then reverse engineers it. But wouldn’t the model being small hurt?

That’s the trade-off: the ‘imagine + reverse engineer’ combo seems to make for a simpler prediction task, which allows the model to be smaller, but it also introduces an obvious latency penalty, which in turn forces the model to stay small so it can run fast.

Is this the right approach? Time will tell, but one thing is certain: it’s innovative.

If you’re interested, I’ve written about it in greater detail here, providing more intuition for why this works and how it works.

CHIPS

TSMC’s Profits Rise a Staggering 35%

Taiwan Semiconductor Manufacturing Co. (TSMC) reported a record fourth quarter for 2025, with consolidated revenue of NT$1,046.09 billion (US$33.73 billion) and net income of NT$505.74 billion, translating to diluted EPS of NT$19.50 (US$3.14 per ADR). Revenue rose 20.5% year over year, while net income and EPS rose 35.0%, with gross margin at 62.3% and operating margin at 54.0%.

Under the hood, the quarter showed continuing migration to leading-edge nodes. Management said 3nm represented 28% of wafer revenue in 4Q25, 5nm represented 35%, and 7nm represented 14%, meaning 7nm-and-below technologies accounted for 77% of total wafer revenue.
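
As a quick sanity check, the quarter's headline ratios can be recomputed from the figures quoted above. The year-ago values below are derived from the stated growth rates rather than taken from TSMC's report, so treat them as approximations; the variable names are purely illustrative.

```python
# Figures quoted above for 4Q25 (NT$ billions and percentages).
revenue = 1046.09
net_income = 505.74
node_share = {"3nm": 28, "5nm": 35, "7nm": 14}  # % of wafer revenue

advanced_share = sum(node_share.values())       # 7nm-and-below share
net_margin = net_income / revenue * 100         # net income as % of revenue

# Year-ago figures implied by the stated growth rates (derived, not reported).
implied_prior_revenue = revenue / 1.205
implied_prior_net_income = net_income / 1.350

print(f"7nm-and-below share of wafer revenue: {advanced_share}%")
print(f"Net margin: {net_margin:.1f}%")
print(f"Implied year-ago revenue: NT${implied_prior_revenue:.0f}B")
print(f"Implied year-ago net income: NT${implied_prior_net_income:.0f}B")
```

Net income growing faster than revenue (35.0% versus 20.5%) is what pushes the net margin to nearly half of every dollar of sales.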

In layman’s terms, TSMC’s revenues are more reliant than ever on advanced chips (chips at or below the 7-nanometer mark), the type of chips used for things like AI or smartphones.

But the other big number besides the huge profit growth was TSMC’s guidance and spending plans.

For 1Q26, the company guided revenue to US$34.6–$35.8 billion, with gross margin expected at 63%–65% and operating margin at 54%–56%. For 2026 capex, management set a notably high capital budget range of US$52–$56 billion, beating analyst spending expectations, despite TSMC's famously cautious approach to spending. This suggests that TSMC sees “huge demand” for its chips, and executives clarified that the supply shortage (supply being unable to meet demand) will last through 2028-2029.

TheWhiteBox’s takeaway:

Banger earnings for a company that, despite its huge size (almost $2 trillion in market capitalization), is still managing to increase net income 35%, signaling they hold tremendous—and growing—pricing power (this is not surprising considering its extremely dominant market share for advanced chip manufacturing and packaging).

A higher CapEx also implies more optimistic expectations for companies down the line, such as ASML, the manufacturer of EUV lithography tools that TSMC uses in its fabs. Sure enough, ASML is up 6% today on the back of the TSMC earnings.

I’ve said it many times, and I’ll say it again: the world is way too overexposed to the AI train to allow it to stop. Not only is the US heavily leveraged to the AI trade, but so are Taiwan, Korea, Japan, and China. It is in nobody’s interest to let the train stop, no matter how much of its fuel is still mostly vaporware.

And when you factor in the huge government deficits all over the first world (debt that is always refinanced and will never actually be repaid), fiat currencies will continue to lose value as long as the money printer goes “brr” to fund expanding deficits and military expansion by the great powers. Anyone with a decent notion of economics will stay invested in assets no matter what.

This is the primary reason I believe that, while the AI bubble may deflate, the result will be a rotation into other stocks, not people actually leaving the market.

At least this is how I approach investing these days; it’s less about which asset you want to be in (leaning too heavily on AI could definitely backfire, it’s always better to mostly diversify via ETFs/indexes and across several asset classes) and more about making sure you’re invested in assets, not cash.

MEMORY

SK Hynix's Huge Investment To Maintain its Lead

SK Hynix says it will invest about $12.9 billion to build a new advanced-packaging factory in Cheongju, South Korea, aiming to expand capacity as demand for AI-focused memory chips accelerates.

Construction is slated to begin in April, with completion targeted for the end of 2027, leveraging the company’s existing manufacturing footprint in the region.

To remind ourselves what advanced packaging is, it’s a step in the process of manufacturing a chip or a system in which the dies are packaged before being placed on the board. Originally mostly about protection and heat dissipation, it has become a key part of the process because it’s now used for two other things:

Connecting compute and memory chips, a type of packaging done by players like TSMC, Samsung, or Intel

Vertical stacking, where several chips are stacked one on top of the other using Through-Silicon Vias (TSVs) to increase capacity without increasing surface area, while also reducing overall power consumption. This is what HBM companies do to fit more memory capacity into a package.

TheWhiteBox’s takeaway:

AI is slowly but steadily transitioning to a memory-centric paradigm. Memory is the biggest limiting factor for most progress these days, so I believe memory companies could actually displace NVIDIA and compute companies from the spotlight.

In fact, TrendForce expects average DRAM prices, including HBM, to rise 50–55% this quarter compared with Q4 2025.

The memory bottleneck is only going to get worse, which is the reason I’m so bullish on DRAM/NAND companies like SK Hynix.

VENTURE CAPITAL

Anthropic To Raise at $350 Billion

The Wall Street Journal reported that Anthropic (maker of the Claude chatbot) is preparing a new fundraising round to raise about $10 billion at a roughly $350 billion valuation, with Singapore’s sovereign wealth fund GIC and Coatue Management expected to lead. Reuters, citing sources, said the round could close within weeks, though the size and terms could still change.

If completed, it would nearly double Anthropic’s valuation from its September 2025 Series F, when the company said it raised $13 billion at a $183 billion post-money valuation.

TheWhiteBox’s takeaway:

In just a few days, we’ve seen up to $30 billion in new venture capital for xAI and Anthropic, signaling that there’s still appetite for AI Labs shares in 2026 (we can’t say the same for China, as we recently saw).

That said, I already predicted in late 2025 that the roles of IPOs and debt would be much larger in 2026, the former for AI Labs and the latter for Hyperscalers that, at the end of the day, are silently footing the bill to a large extent. Even the New York Times is echoing the narrative that big IPOs are coming in 2026.

WEIRD M&A

OpenAI Acqui-hires Convogo

OpenAI is acquiring the team behind Convogo, an executive-coaching and leadership-assessment software startup, in what TechCrunch describes as an “acqui-hire.” OpenAI says it is not buying Convogo’s IP or technology; instead, it is bringing the team in to work on its “AI cloud efforts,” with the transaction described by a source as an all-stock deal. As part of the move, Convogo’s product will be wound down.

Convogo built tools used by executive coaches, consultants, HR, and talent teams to automate leadership assessments and feedback reporting. Convogo said it worked with “thousands” of coaches and partnered with top leadership-development firms over the past two years.

TheWhiteBox’s takeaway:

The reason I’m echoing this news is that the pattern is just too clear to ignore. If you look at most software acquisitions today, what do they all leave out? The software.

I’ve written a lot about software’s value dropping to zero. With AI, people can build competitive solutions to your product in weeks.

And the worst thing is that not only can smaller teams release products similar to yours, but you’ll now see competition coming from your own customers through what I call ‘good-enough software’: software your customers build not to compete with you, but to stop using your product and save on license costs. It might have 10% of the features yours does, but those are the 10% they actually use.

In a nutshell, software is commoditizing way faster than even AI incumbents predicted.

Instead, what is really lacking in today’s world is AI knowledge, taste, and agency: the capacity to build software that is actually useful beyond the AI itself. Most software today is either a non-AI product that will soon see AI competitors or just an extension of AI (adding a few glorified prompts on top of an OpenAI API). That isn’t good software, it’s dead software.

HARDWARE

China Orders 2 Million H200s

According to sources, Chinese companies have ordered around 2 million H200 GPUs from NVIDIA. However, other sources claim that, despite strong demand, China is pressuring its companies to pause H200 purchases.

Quartz reports that China has told some tech companies to pause plans to buy Nvidia’s H200 AI chips, even as Nvidia says demand in China is “quite high” and it’s ramping supply.

The pause, reported by The Information, appears aimed at discouraging a last-minute buying rush while Chinese officials decide whether to allow H200 imports and, if so, under what conditions, potentially including requirements to buy domestic AI chips. NVIDIA has told clients it hopes to ship before the Lunar New Year in mid-February 2026 if approvals come through.

TheWhiteBox’s takeaway:

2 million H200 GPUs are a lot of GPUs. Measured at around 1,200W per GPU once we have them inside servers, and accounting for cooling and networking power draws, these represent around 2.4 GW of critical IT load that China gets to deploy using US hardware.

Compute-wise, this is around 3,341×10^18 FLOPs at FP8 precision, or 3.3×10^21 FLOPs, which is about 3.3 ZettaFLOPs (roughly 3,300 ExaFLOPs) of FP8 compute, well more than what China produced domestically in 2025 (~0.6 ExaFLOPs).
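
The back-of-the-envelope numbers above can be reproduced in a few lines, using only the figures quoted in the text (2 million GPUs, roughly 1,200 W each all-in, and 3,341×10^18 FLOPs of FP8 compute in total). The per-GPU throughput is simply what those totals imply, not a vendor specification, and it is worth keeping the SI prefixes straight: 10^18 is exa and 10^21 is zetta.

```python
gpus = 2_000_000
watts_per_gpu = 1_200      # all-in per-GPU draw assumed in the text
total_flops = 3_341e18     # aggregate FP8 figure quoted in the text

# Total power draw in gigawatts.
total_gw = gpus * watts_per_gpu / 1e9

# Per-GPU FP8 throughput implied by the aggregate figure (in TFLOPs).
implied_per_gpu_tflops = total_flops / gpus / 1e12

# The same aggregate expressed with SI prefixes.
exaflops = total_flops / 1e18    # exa   = 10^18
zettaflops = total_flops / 1e21  # zetta = 10^21

print(f"Power: {total_gw:.1f} GW")
print(f"Implied per-GPU FP8 throughput: {implied_per_gpu_tflops:.1f} TFLOPs")
print(f"Aggregate: {exaflops:,.0f} ExaFLOPs = {zettaflops:.2f} ZettaFLOPs")
```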

If compute is the main moat the US has, is it really a good idea to allow this, considering that China’s own domestic efforts are ramping up tremendously?

But even more intriguing is the CCP’s position in all this. If computing is so important (it is), why are they so reticent to allow this free flow that the US has finally unlocked?

We have studied China’s deep ambitions to develop its own chip ecosystem, but we also know they are well behind, not so much on the technological or energy levels, but on the production level; they have the technology, they are just struggling to manufacture it.

IPOs

Lambda, Another AI IPO for 2026?

Lambda, an AI infrastructure provider backed by NVIDIA (its largest client), is in talks to raise $350 million ahead of a potential IPO in 2026, sources have told The Information. If it goes public in 2026, it would join the likes of CoreWeave as another flashy AI infrastructure company finally accessible to the public markets.

TheWhiteBox’s takeaway:

I believe 2026 is the year private-market liquidity officially dries up and more AI companies hit the public markets in hopes of accessing liquidity.

This company allegedly had more than $500 million in sales in 2025, with a total loss of a little under $200 million.

The problem? Feels like a lot of its revenue is self-generated by its largest investor, NVIDIA, which adds to the obvious concentration risks, given its customer pool is likely small.

INFRASTRUCTURE

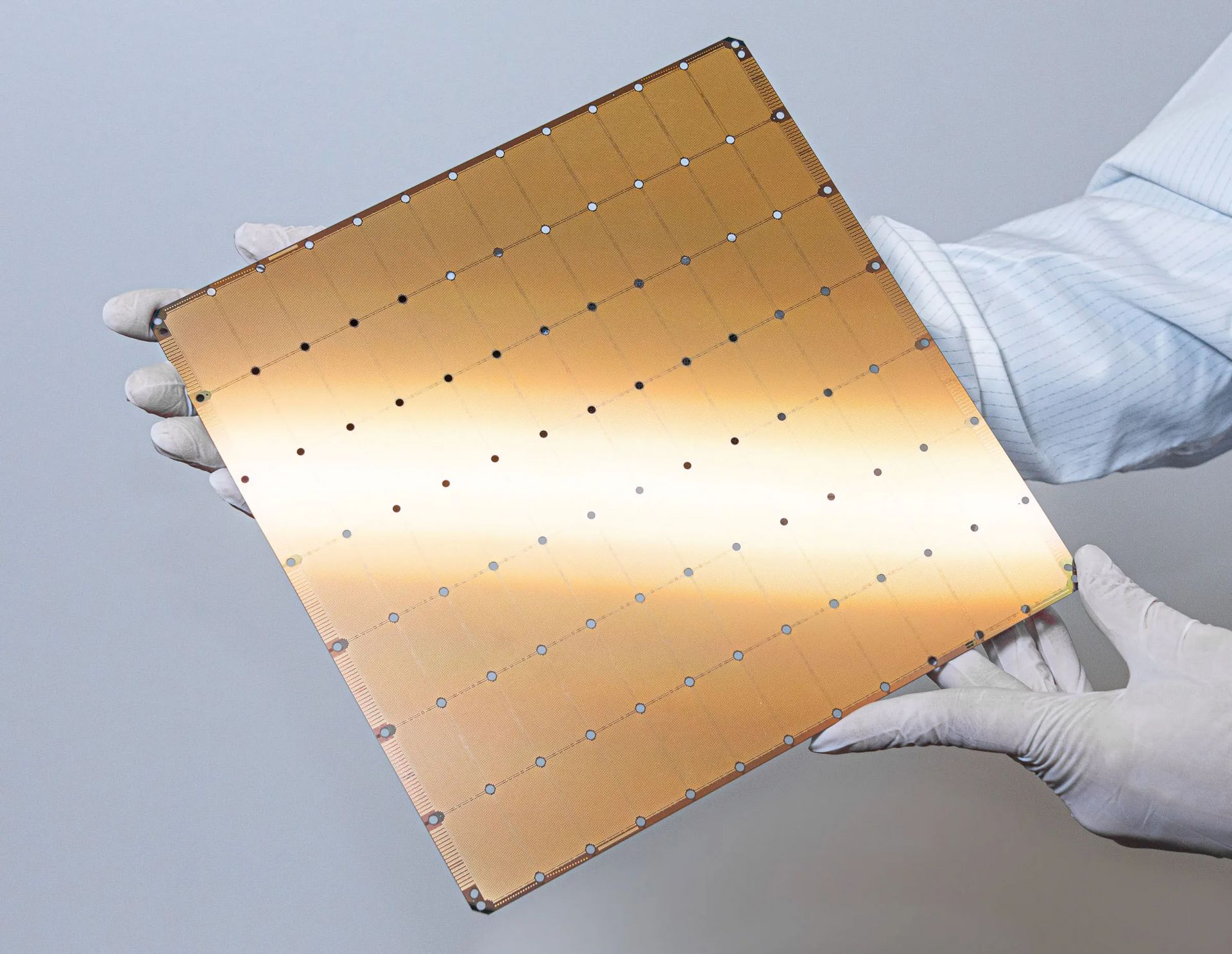

Cerebras $10 Billion OpenAI Contract

Cerebras says it has signed a multi-year deal with OpenAI to deploy 750 megawatts of Cerebras wafer-scale systems to serve OpenAI customers, rolling out in stages from 2026 through 2028, which it calls the largest high-speed AI inference deployment in the world.

In total, it represents a potential $10 billion contract for the infrastructure company that might IPO in 2026.

The post argues that faster inference is key to broader AI adoption and claims Cerebras can deliver responses up to 15× faster than GPU-based systems for low-latency use cases like coding agents and voice chat. OpenAI is quoted as describing Cerebras as a dedicated, low-latency inference option within its compute portfolio, enabling faster, more natural real-time interactions at scale.

TheWhiteBox’s takeaway:

I’ve spoken about Cerebras multiple times, but I’ll quickly summarise the key point of using Cerebras’s products. Cerebras doesn’t produce single or dual-die products like NVIDIA’s GPUs. These dies are sliced and diced from a silicon wafer.

Instead, Cerebras buys the entire wafer and uses it to run workloads. The point is that they get a lot of chips per wafer (humongous amounts of compute) and also a large amount of SRAM memory, so they don’t require off-chip memory (DRAM) the way NVIDIA’s or AMD’s GPUs do, similar to Groq’s LPUs (although these scale differently).

Because on-chip memory access in SRAM is extremely fast, these products generally run inference workloads much faster than GPUs.

On the flip side, they lack enough memory to sustain training workloads, in which you have to keep two or more versions of your model, plus activations, gradients, and optimizer states, in memory.

Inference is mostly bound by memory bandwidth, whereas training is much more heavily constrained by memory size.
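
A rough sketch of why the two workloads stress memory differently. The training estimate uses the common rule of thumb for mixed-precision Adam (16-bit weights and gradients plus 32-bit master weights and two optimizer moments, about 16 bytes per parameter, before activations). The decode estimate assumes every generated token requires reading all weights once. The 70B model size and both bandwidth values are hypothetical numbers chosen only to show the scaling, not specs for any real chip.

```python
def training_bytes_per_param(weight_bytes=2, grad_bytes=2, optimizer_bytes=12):
    """Rough per-parameter footprint for mixed-precision Adam training:
    16-bit weights and gradients, plus 32-bit master weights and two
    32-bit Adam moments (4 + 4 + 4 = 12 bytes). Activations are extra."""
    return weight_bytes + grad_bytes + optimizer_bytes

def inference_bytes_per_param(weight_bytes=2):
    """Inference only needs the weights (the KV cache is ignored here)."""
    return weight_bytes

def decode_tokens_per_second(params, bandwidth_bytes_per_s, weight_bytes=2):
    """Upper bound for single-stream decoding when every generated token
    requires reading all weights from memory once."""
    return bandwidth_bytes_per_s / (params * weight_bytes)

params = 70e9  # hypothetical 70B-parameter model

# Memory size: what has to fit.
train_gb = params * training_bytes_per_param() / 1e9
infer_gb = params * inference_bytes_per_param() / 1e9

# Memory bandwidth: how fast tokens can come out (hypothetical bandwidths).
slow = decode_tokens_per_second(params, bandwidth_bytes_per_s=3e12)
fast = decode_tokens_per_second(params, bandwidth_bytes_per_s=30e12)

print(f"Training footprint: ~{train_gb:.0f} GB vs inference: ~{infer_gb:.0f} GB")
print(f"Decode upper bound: ~{slow:.0f} tok/s vs ~{fast:.0f} tok/s")
```

Under these assumptions, training needs about eight times more memory than inference for the same model, while decode speed scales linearly with memory bandwidth, which is where on-chip SRAM has the edge.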

Another reason OpenAI might want this is to diversify away from NVIDIA/AMD, somewhat reducing NVIDIA’s pricing power over them (probably not much, to be honest). But what Cerebras has going for them is that you can use them to diversify yourself out of the heavily supply-constrained DRAM market, something we might see more and more of over the next few years.

This makes Cerebras a very appealing potential investment if it eventually IPOs, as the stars are aligned for it: the growing importance of inference and a way out of DRAM. Of course, one problem remains: who will manufacture its chips? You know the answer: TSMC, so they are still running into the same bottleneck everyone else is.

The caveat is that they use the 7nm process, so they might not be fighting the same battles NVIDIA, AMD, Google, or Apple are fighting.

PERSONALIZATION

Google’s Personal Intelligence

Google has introduced a new Gemini feature called “Personal Intelligence,” designed to make the assistant more personalized by letting it draw on information from a user’s Google apps and activity (such as Gmail, Google Photos, Search, and YouTube) and “reason” across those sources to answer questions with more context.

The rollout is described as an opt-in beta that starts in the US for paid Gemini subscribers, with availability limited to personal Google accounts (not Workspace/enterprise).

Google is also emphasizing privacy and control: the feature is off by default, users can choose which apps to connect, and there are safeguards around sensitive inferences (with Gemini generally not making proactive assumptions about certain personal topics unless asked).

TheWhiteBox’s takeaway:

There’s hardly a debate that Google wants to eat OpenAI’s lunch for good. Every move is a direct copy of previous OpenAI moves, but leverages Google’s amazing distribution (the capacity to reach potential users) through their application and search ecosystem.

Google is also positioning this as a competitive advantage versus rivals (and as a direct challenge to Apple’s “Apple Intelligence” direction).

Might the release of this feature have prompted Apple to rely on Google to power Siri, incentivizing Google to ensure Apple Intelligence is also a good product?

VIRTUAL ASSISTANTS

OpenAI Announces ChatGPT Health

Fidji Simo, OpenAI’s CEO of applications, announced via her Substack the release of ChatGPT Health, a dedicated Health space inside ChatGPT for health and wellness conversations.

It’s designed to let people ask health questions with more personal context by securely connecting their medical records and selected wellness apps (for example, Apple Health, Function, MyFitnessPal, and others), so it can help with things like understanding test results, preparing for doctor appointments, and getting diet/workout guidance. OpenAI says it is meant to support—not replace—clinical care, and is not intended for diagnosis or treatment.

Access began via a waitlist and a staged rollout: a small group of early users first, with expansion planned “in the coming weeks” for web and iOS; Android is “coming soon.”

Eligibility includes ChatGPT Free, Go, Plus, and Pro in supported countries, excluding the EEA, Switzerland, and the UK at launch; the optional medical record (EHR) connection is US-only and requires users to be adults. OpenAI also says Health runs as a separate, compartmentalized space with extra protections (including added encryption/isolation), and that Health chats/files/memories are not used to train its foundation models.

TheWhiteBox’s takeaway:

This is an interesting release because it means OpenAI is now pretty comfortable with the hallucination rates in ChatGPT. Otherwise, it would be quite nonsensical to release this, considering the obvious risks a wrong diagnosis could represent to the user.

My other big concern is apprehension: if we have a “personal doctor” within reach 24/7, there’s a non-zero chance people will become obsessed with health. This sounds like a good thing, but it could seriously affect users negatively.

For several years, I used wearables such as Whoop to track my sleep and daily routines. Eventually, I decided to stop using them because these wearables are not only very inaccurate in some measures, but they can actually make you feel different from how you actually feel; at times, I woke up feeling ‘alright’ only to see my wearable telling me my sleep was terrible and that I should be feeling horrible. Other times, I felt bad, and my Whoop score was amazing.

Of course, having “wearable dependence” is precisely what OpenAI needs to make money, and, yes, ChatGPT has already saved lives in some cases, but we must also consider the possibility that these Health buddies can backfire.

VIDEO MODELS

Google Updates Veo 3.1

Google has rolled out an update to Veo 3.1’s “Ingredients to Video” feature to make image-referenced video generation more controllable and consistent.

The upgrade improves how Veo uses reference images so characters, objects, backgrounds, and textures stay visually coherent across scenes, while also producing more expressive clips with less prompting.

The release also adds native vertical (9:16) output for mobile-first content and expands resolution options via upscaling to 1080p and 4K.

Google says the updated Veo 3.1 experience is available across the Gemini app, YouTube (including Shorts and the YouTube Create app), Flow, Google Vids, the Gemini API, and Vertex AI, with SynthID watermarking used to help identify AI-generated video.

TheWhiteBox’s takeaway:

Not much to say here; for me, video models are mostly irrelevant to my day-to-day. Rather, they represent a more important endgame: the door to world models (or the closest thing we have to such a thing).

EMAIL

Gemini Comes to Gmail

Google is positioning itself to insert Gemini into everyone’s lives in 2026 (whether we like it or not).

The headline addition is AI Overviews inside Gmail. For long email conversations, Gmail can synthesize a thread into a concise summary of key points, reducing the need to scan dozens of replies.

Gmail will also let users ask natural-language questions about their inbox—turning the request into an AI Overview that returns an answer drawn from relevant emails (for example, asking who provided a renovation quote last year). Google says conversation summaries are rolling out broadly at no cost, while the “ask your inbox” Q&A capability is reserved for Google AI Pro and Ultra subscribers.

Google is also expanding AI-assisted writing. “Help Me Write” is becoming available to everyone to draft messages from scratch or polish existing drafts, and “Suggested Replies” is described as a context-aware evolution of Smart Replies that aims to produce one-click responses aligned with your tone and style.

A separate “Proofread” feature adds more advanced grammar, tone, and style checks, but Google positions it as a subscriber feature for Google AI Pro and Ultra. Google also says it plans to improve personalization for Help Me Write by incorporating context from other Google apps in the following month.

Finally, and probably the most exciting feature, is that Gmail is testing an “AI Inbox” intended to reduce clutter by highlighting what matters most—surfacing to-dos and prioritizing important items based on signals like frequent correspondents, contacts, and inferred relationships from message content.

Google says the feature is first going to trusted testers, with broader availability planned in the coming months, and emphasizes that analysis is designed to be secure with privacy protections and user control. We’ll see.

TheWhiteBox’s takeaway:

When I wrote my last deep dive into Google, I described it as a company that was transitioning into a “Gemini for everything” company. I envisioned them being the first big company to build its entire business around Generative AI models.

And that seems to be the case: they are betting it all on AI. The same can’t be said of other Big Tech companies, which are all investing heavily in AI but whose main businesses, with the possible exception of Meta, are not fully immersive AI experiences.

Amazon is still a lot about getting boxes into people’s doors and letting organizations rent computers,

Microsoft is still an OS company with a half-assed, failed chatbot on the side that nobody likes,

Tesla is still mostly about non-autonomous cars despite what Elon might want to tell you,

And NVIDIA provides the shovels to find gold, but sells literally zero “gold”.

By contrast, Google seems to be the closest to closing the circle and making its entire business about AI.

The upside is that, if this pans out, we are talking about the future most valuable company on the planet, as I predicted in my last newsletter.

But if AI doesn’t live up to expectations, where does that leave Google in the eyes of investors? In a pretty terrible position, I believe.

SMARTPHONES

Gemini, Siri’s Brain

Apple has signed a multi-year deal to integrate Google's Gemini models as the foundation for its next-generation Apple Foundation Models, powering major upgrades to Apple Intelligence features.

The collaboration will bring a significantly more capable and personalized Siri later this year, while maintaining Apple's strong emphasis on on-device processing and privacy.

The agreement positions Google ahead of competitors (including OpenAI) as Apple's primary generative AI partner following extensive internal evaluations.

Another report from The Information provides more detail: the Gemini models will run either locally on the device or in Apple’s Private Cloud Compute system, and Apple will have permission to fine-tune the models to its liking.

TheWhiteBox’s takeaway:

In the end, Google won this battle over OpenAI, the other major AI lab fighting for this contract.

The most probable reason is that Google has a proven track record in the SLM (Small Language Model) arena, with examples such as the Gemma models and the more recent FunctionGemma. This tiny model proved capable of interpreting user requests and executing tools very effectively despite being only a few hundred megabytes in size, which makes it ideal for storing on the smartphone itself, a crucial property given security and latency constraints. They even built a “Siri demo” as part of the release.

But one can’t help but notice that Apple has completely failed to deploy its own models, even in the simplest cases.

Nobody asked Apple to compete at the frontier; it was totally fine if they sat that one out. But the fact that they did not manage to compete at all, when they should at least be a “leader” in the SLM space, and will now have to pay a license fee to Google to run models on its own products, is a total failure.

And this inability to solve a clear problem that any small AI lab would have solved in months makes me wonder how on Earth a company like this is trading at a growth-level stock multiple of 35x PE despite behaving (and growing) like a mature, 10x PE company.

For how long, Western man?

Closing Thoughts

So, what does this chaotic mix of earnings reports, math discoveries, and hardware shortages actually tell us about where we are heading?

First, the era of the passive chatbot is dying. AI products are being woven into the fabric of most of the tools we use in order to gather more context about us. Soon enough, they will be in charge of buying our groceries, monitoring the web for those sneakers you’ve been hunting for months, or even monitoring our sleep for disease with SleepFM. Historically, AI’s biggest use cases have been invisible: recommendation engines or ad targeting. It may be just the same for Generative AI.

Second, as we’ve been saying for years now, software is commoditizing, but AI hardware is doing quite the opposite. AI infrastructure is just a bunch of oligopolies, one placed on top of the other, each with huge pricing power over the layer above.

The staggering profits at TSMC and the huge price increases in DRAM by SK Hynix et al. prove that while code might be infinite, technology expertise isn’t.

This puts AI software companies, the AI Labs, in a very, very bad position: they are paying more for every electron and every chip, while fierce competition in software forces them to drop prices far more than they should. IPOs are inevitable.

AI is also powering discoveries, especially in maths. With GPT-5.2 solving Erdős problems as the perfect example, who knows what may be discovered thanks to AI in 2026?

But, as I’ve been ranting about a lot lately, I fear the huge divide opening up between the "builders," who are using these slow, complex reasoning models to augment their expertise, and the "consumers," who are rejecting them as too difficult or too slow.

The greatest gap in 2026 and beyond won't be about who has access to AI, but about who has the agency to do something with this incredible tool we’ve received.

The tools are finally ready. The question is: are you?

For business inquiries, reach out to me at [email protected]