
FUTURE

Companies to Watch in 2025

2025 is going to be another amazing year for AI.

But while 2022-2024 was primarily focused on building the foundation models that will power the new paradigm of declarative software (perfectly embodied by AI's trillion-dollar use case, the Large Language Model (LLM) Operating System we have already discussed), 2025 will be the year transformative products are actually delivered into our hands.

If you have mostly been an observer of the industry until now, you will now become a power user (sorry, using ChatGPT to summarize a PDF doesn't count).

Over the next few weeks, we will examine in detail the known and unknown companies that will run the show in 2025 across several categories, such as hardware, intelligence, product, discovery, and industry-specific companies. We will go AI layer by AI layer, from the more predictable bets to those that fly under most people's radar… but not ours.

Today, you’ll learn about many companies that could soon run the show, from:

upcoming stars attacking NVIDIA’s biggest flaws,

the big plans of several star researchers,

an over-the-top moonshot,

and even companies that are calling bullshit on everything and everyone.

Let’s dive in!

Hardware

2024 has been yet another massive year for AI accelerator companies, the companies creating the hardware used to train and run AI models.

The Best-Performing Class

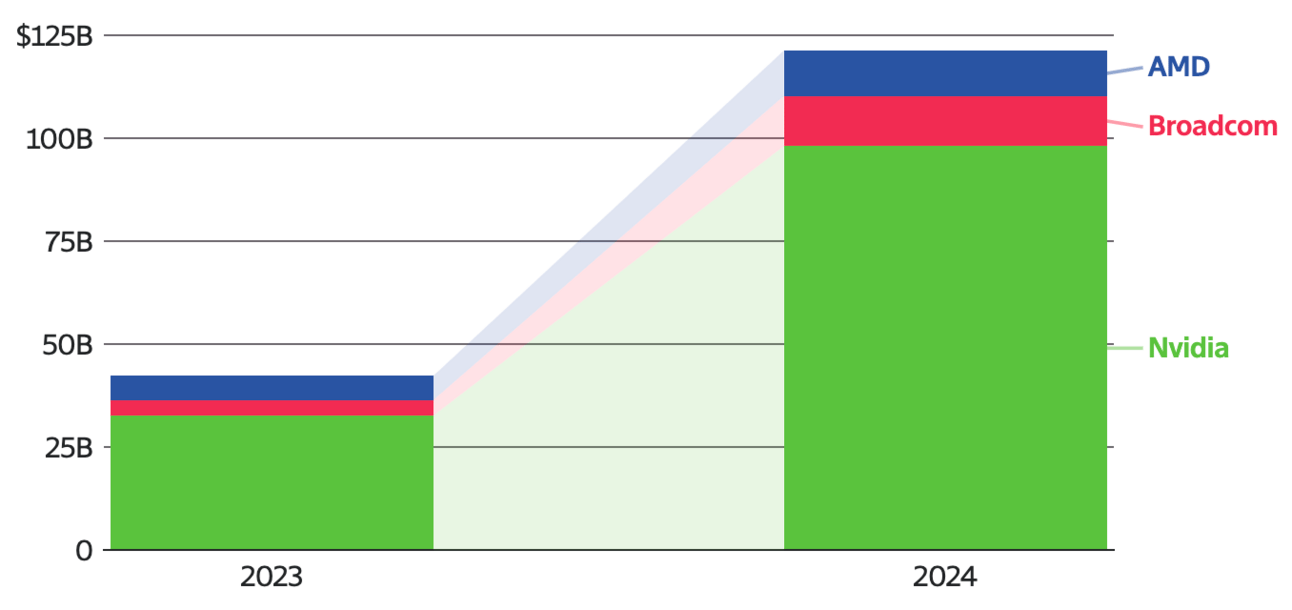

In particular, NVIDIA has tripled its revenue to almost $100 billion (in the AI business alone), while others like AMD and Broadcom have also seen strong traction.

It’s safe to say that hardware companies are the only tangible winners in AI so far (alongside some application-layer enterprises), as model developers are drowning in losses and can only survive through billion-dollar investment rounds.

2025 will be no different (NVIDIA's Blackwell chips, the upcoming generation, are already sold out for 2025), but the year will test whether the challengers are up to the job.

While everyone assumes 'AI hardware = GPUs,' that view is wrong and too narrow, because it reduces your set of companies to GPU designers like NVIDIA, AMD, Intel, and, if you're Chinese, Huawei.

Chip manufacturers like TSMC, Micron, or SMIC have also been great winners, but they can also manufacture other chip types.

However, 2024 saw the emergence of new hardware with unique traits that could seriously challenge GPUs. To understand why, we must recognize that we are transitioning into an era in which inference dominates compute, which significantly impacts hardware companies, especially GPU makers.

In layman's terms, while until now most compute was dedicated to training AI models, the balance will shift toward running them (inference), especially due to the emergence of Large Reasoner Models (LRMs), a topic I've covered extensively and summarized in my in-depth review of OpenAI's ground-breaking o3 model.

And that is a problem for NVIDIA and an opportunity for others. But why?

The Balance of AI Workload Changes

Unbeknownst to many, GPUs aren't AI-specific hardware. While newer generations like Hopper or Blackwell are mainly focused on AI workloads, the overall GPU architecture isn't ideal for AI inference.

But why?

GPUs were initially conceived for rendering graphics on a screen. Video games are displayed on a flat screen (two dimensions), but most of them depict three-dimensional worlds, so to display them we must perform a mathematical projection, which basically involves many matrix multiplications.

In simple terms, we need to take the 3D scene at one precise point and project it into a 2D space using simple-but-many matrix multiplications, updating the color of every single pixel on the screen to showcase the scene.
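To make the projection step concrete, here is a minimal sketch, not taken from any real engine. It assumes a simple pinhole camera at the origin looking down the negative z axis with focal length `f`, and the helper names are hypothetical. Each 3D point is multiplied by a 4x4 matrix in homogeneous coordinates and then divided by its `w` component (the perspective divide):

```python
# Minimal perspective-projection sketch (hypothetical helpers, illustrative camera).
# Assumption: pinhole camera at the origin looking down -z with focal length f.

def matvec(m, v):
    """Multiply a 4x4 matrix (list of rows) by a 4-vector."""
    return [sum(m[i][j] * v[j] for j in range(4)) for i in range(4)]

def projection_matrix(f):
    # Maps (x, y, z, 1) to homogeneous coordinates whose w component equals -z,
    # so dividing by w shrinks far-away points (the perspective divide).
    return [
        [f, 0, 0, 0],
        [0, f, 0, 0],
        [0, 0, 1, 0],
        [0, 0, -1, 0],
    ]

def project(points, f=1.0):
    """Project 3D points onto 2D screen coordinates."""
    m = projection_matrix(f)
    screen = []
    for x, y, z in points:
        px, py, _, w = matvec(m, [x, y, z, 1.0])
        screen.append((px / w, py / w))
    return screen

# Two corners of an object 5 units in front of the camera.
print(project([(1.0, 1.0, -5.0), (-1.0, 1.0, -5.0)]))  # [(0.2, 0.2), (-0.2, 0.2)]
```

A real scene applies the same matrix to millions of vertices every frame; stacking those vertices into one matrix turns the work into a single large matrix multiplication, which is exactly the kind of simple, massively parallel arithmetic GPUs were built for.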

To make the game playable, every pixel has to be updated dozens or even hundreds of times per second, which requires thousands of GPU cores (the processing units; a powerful GPU has thousands of them) working in parallel to update pixels millions of times per second.
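For a sense of scale, here is a back-of-the-envelope count. It assumes a 1920x1080 display refreshed at 60 or 144 frames per second (illustrative figures, not from the original), and counts only one update per pixel per frame:

```python
# Back-of-the-envelope count of pixel updates per second.
# Assumptions (illustrative): a 1920x1080 display, one update per pixel per frame.

def pixel_updates_per_second(width, height, fps):
    """Pixels on screen times frames per second."""
    return width * height * fps

for fps in (60, 144):
    rate = pixel_updates_per_second(1920, 1080, fps)
    print(f"1080p @ {fps} fps: {rate:,} pixel updates/s")
```

Even at 60 fps this comes to roughly 124 million updates per second, before any lighting or shading work, which is why the job is spread over thousands of simple cores instead of a few powerful ones.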

This very particular use case justified the creation of the GPU, a calculator on steroids that performs elementary calculations but can perform millions of them in parallel.

But what does this have to do with AI?

Simple. Our most sophisticated AI models run on, quite frankly, trivial algebra. LLMs and their newer versions, the LRMs, are literally matrix multiplications on steroids. It’s the same mathematical calculation as rendering pixels on a screen but used to perform AI predictions.

But there’s a catch. These models behave as a concatenation of matrix multiplications during training… but not during inference. And that, my dear reader, is a problem (for NVIDIA).

Frontier AI Model Inference 101

In this newsletter, we have often discussed how these models behave during inference. Due to their static computational graph (a sequence is always processed in exactly the same way, although the Meta BLT and COCONUT models from our two previous reviews aim to change this), they perform a considerable amount of redundant computation.

For a sequence of words, each word 'talks' to all previous ones through the attention mechanism, which you can read about in detail here. This exchange of information between words builds an understanding of the sequence.

Then the model predicts the next word, and this happens sequentially: each new word is predicted from the initial input plus the previous predictions. All in all, the attention mechanism is one giant matrix multiplication split across the model's many attention layers.
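A minimal sketch of causal (masked) attention helps show why it boils down to matrix multiplications. It is a simplification under stated assumptions: a single attention head, with the query, key, and value vectors (`Q`, `K`, `V`, one row per word) given directly instead of being produced by learned projection weights. All function names are hypothetical and use plain Python lists:

```python
import math

def transpose(M):
    return [list(col) for col in zip(*M)]

def matmul(A, B):
    """Plain matrix multiplication: (n x k) @ (k x m) -> (n x m)."""
    Bt = transpose(B)
    return [[sum(a * b for a, b in zip(row, col)) for col in Bt] for row in A]

def softmax(row):
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def causal_attention(Q, K, V):
    """Q, K, V: one row per word. Returns one updated vector per word."""
    d = len(Q[0])
    # Every word-to-word interaction at once: a single (n x d) @ (d x n) matmul.
    scores = matmul(Q, transpose(K))
    n = len(scores)
    for i in range(n):
        for j in range(n):
            # Causal mask: word i may only 'talk' to itself and earlier words.
            scores[i][j] = scores[i][j] / math.sqrt(d) if j <= i else float("-inf")
    weights = [softmax(row) for row in scores]
    # Mix the value vectors according to the attention weights: another matmul.
    return matmul(weights, V)

Q = K = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
V = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
print(causal_attention(Q, K, V))
```

The first word can only attend to itself, so its output equals its own value vector; later words blend the values of everything before them. Real models repeat this across many heads and layers, but the core work stays the same two matrix multiplications.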

But with each new prediction, the process repeats for the already-processed words. In other words, if word 5 already talked to word 4 in the previous prediction, it would have to do so again in every future prediction, even though the outcome of that interaction is identical every time. That is the definition of redundancy, so we cache (temporarily store) these calculations, a technique known as the KV cache.

Therefore, in practice, apart from the initial prediction (the first predicted word), during which the model builds the cache (which explains why that first token takes longer, as measured by the time-to-first-token metric you see with every model release), every subsequent prediction performs attention only between the last predicted word (which, having just been predicted, hasn't yet 'talked' to the rest) and the rest of the sequence. The interactions among the earlier words are stored and reused in real time.
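The sketch below contrasts decoding with and without a cache. It rests on simplifying assumptions: a single attention layer, with each word's query, key, and value vectors given directly (in a real model they come from learned projections and are cached per layer and per head). The function names are hypothetical. Both strategies produce identical outputs, but the cached version only makes the newest word attend to the stored keys and values:

```python
import math

def attend(q, keys, values):
    """Attention for ONE query vector over the given keys/values (matrix-vector work)."""
    d = len(q)
    scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys]
    m = max(scores)
    w = [math.exp(s - m) for s in scores]
    total = sum(w)
    return [sum(wi * v[c] for wi, v in zip(w, values)) / total
            for c in range(len(values[0]))]

def decode_with_cache(tokens):
    """tokens: list of (query, key, value) triples, one per word, in order."""
    keys, values, outputs, dots = [], [], [], 0
    for q, k, v in tokens:
        keys.append(k)      # store the new word's key/value once...
        values.append(v)    # ...and reuse all earlier ones untouched
        outputs.append(attend(q, keys, values))
        dots += len(keys)   # only the newest word talks to the sequence
    return outputs, dots

def decode_without_cache(tokens):
    """Re-run attention for every word at every step, as a cache-less model would."""
    outputs, dots = [], 0
    for t in range(1, len(tokens) + 1):
        for i in range(t):  # recompute rows that were already computed before
            out = attend(tokens[i][0],
                         [k for _, k, _ in tokens[:i + 1]],
                         [v for _, _, v in tokens[:i + 1]])
            dots += i + 1
        outputs.append(out)  # the last row is the newest word's output
    return outputs, dots

tokens = [([1.0, 0.0], [1.0, 0.0], [1.0, 2.0]),
          ([0.0, 1.0], [0.0, 1.0], [3.0, 4.0]),
          ([1.0, 1.0], [1.0, 1.0], [5.0, 6.0]),
          ([0.5, 0.5], [0.5, 0.5], [7.0, 8.0])]
cached, n_cached = decode_with_cache(tokens)
naive, n_naive = decode_without_cache(tokens)
print(cached == naive, n_cached, n_naive)
```

For four words, the cache cuts the query-key dot products from 20 to 10. The gap keeps widening, since the cache-less cost grows roughly with the cube of the sequence length and the cached cost only with its square.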

This not only speeds up predictions because the amount of computation drops dramatically (this is why ChatGPT seems slow at the beginning but then becomes very fast), but, more relevant to today's discussion, it means that the dominant mathematical operation in inference is matrix-vector multiplication, which is NOT the operation GPUs thrive on.
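One way to quantify the difference is arithmetic intensity: how many floating-point operations a kernel performs per byte it moves from memory. The sketch below uses an idealized model in which each operand is read once and the result is written once, with FP16 (2-byte) elements. The hidden size of 4096 and the accelerator figures (about 1,000 TFLOP/s of compute and 3.35 TB/s of memory bandwidth, roughly in the range of a current high-end datacenter GPU) are illustrative assumptions, not vendor specifications:

```python
def arithmetic_intensity(m, k, n, bytes_per_elem=2):
    """FLOPs per byte for an (m x k) @ (k x n) product, assuming each operand is
    read once and the result written once (an idealized lower bound on traffic)."""
    flops = 2 * m * k * n  # one multiply and one add per term
    bytes_moved = bytes_per_elem * (m * k + k * n + m * n)
    return flops / bytes_moved

d = 4096  # hypothetical hidden size
matmul_ai = arithmetic_intensity(d, d, d)  # e.g., processing 4096 tokens at once
matvec_ai = arithmetic_intensity(d, d, 1)  # decoding a single new token

# Assumed accelerator: FLOPs per byte needed to keep the math units busy.
ridge = 1000e12 / 3.35e12

print(f"matmul: {matmul_ai:.0f} FLOP/byte, matvec: {matvec_ai:.2f} FLOP/byte, "
      f"needed to saturate compute: {ridge:.0f}")
```

Under these assumptions, matmul does over a thousand operations per byte while matvec does about one, far below the roughly 300 needed to keep the chip's math units busy. During single-sequence decoding, the chip therefore mostly waits on memory rather than on math. Batching many users' requests together raises the intensity, which is one reason serving providers batch aggressively.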

NVIDIA knows this, so newer GPUs dedicate a considerable portion of the die to inference workloads (matvec rather than matmul, in AI parlance). Still, NVIDIA also knows that it is basically unchallenged in AI training, so it has incentives to stay the course.

And the fact that no single piece of hardware is best-in-class for every AI workload opens the door for challengers to take a piece of the pie.

Hyperscalers

First, we have Hyperscalers like Google and Amazon, which have done their homework and, by the time you read this, will be running a sizable portion of their internal AI workloads, and those of their cloud clients, on their own chips (Google's TPUs and Amazon's Trainium).

Unlike some of the companies listed below, Amazon has no hardware performance advantage over NVIDIA (quite the opposite), but it has optimized its chips for power efficiency. By Amazon's own claims, its chips can be up to 70% cheaper to run.

Nonetheless, frontier models will continue to be trained on NVIDIA chips (with the exception of Anthropic, due to its deal with Amazon). Still, the inference prices offered by Hyperscalers, who also have the capital to subsidize client costs if needed to win AI customers, will be hard to compete with, even for NVIDIA.

Although not covered directly today, NVIDIA's goal for 2025 is to become a Hyperscaler itself, offering AI cloud services to customers, so keep an eye on how this new business develops.

However, while Hyperscalers are still building GPU-like hardware, some companies are changing the paradigm completely.

Groq, the Creators of the LPU

Jonathan Ross, the CEO of Groq, created the TPU, or Tensor Processing Unit, while working at Google. But seeing the emergence of these AI models, he decided to create a new type of hardware solely focused on LLMs, the Language Processing Unit, or LPU.

Unlike GPUs, this hardware has no external high-bandwidth memory (memory is the main issue in GPU-land besides math operations). Instead, it runs only on on-chip memory (SRAM). This requires many more chips to run AI workloads, but communication between the compute units and memory is almost instantaneous, making the LPU dramatically faster than GPUs for AI inference.
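The trade-off can be sketched with simple arithmetic. The figures here are assumptions for illustration only: a 70-billion-parameter model, about 230 MB of SRAM per chip, and, for the GPU side, about 3.35 TB/s of external memory bandwidth. The first function counts how many SRAM-only chips are needed just to hold the weights. The second gives a rough ceiling on single-stream decoding speed when every generated token requires reading all the weights from external memory once:

```python
import math

def chips_needed(params, bytes_per_param, sram_bytes_per_chip):
    """Minimum chips to hold all weights on-chip (ignores activations and KV cache)."""
    return math.ceil(params * bytes_per_param / sram_bytes_per_chip)

def decode_ceiling_tokens_per_s(params, bytes_per_param, mem_bandwidth_bytes_per_s):
    """Rough upper bound for batch-1 decoding when each token reads every weight once."""
    return mem_bandwidth_bytes_per_s / (params * bytes_per_param)

# Illustrative assumptions: 70B parameters, ~230 MB SRAM per chip, ~3.35 TB/s HBM.
print(chips_needed(70e9, 2, 230e6))  # FP16 weights
print(chips_needed(70e9, 1, 230e6))  # 8-bit weights
print(f"{decode_ceiling_tokens_per_s(70e9, 2, 3.35e12):.0f} tokens/s on one GPU")
```

Hundreds of chips for a single model is the price of the SRAM-only design; in exchange, the weights never have to travel over a comparatively slow external memory bus, which is the bottleneck the second function captures.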

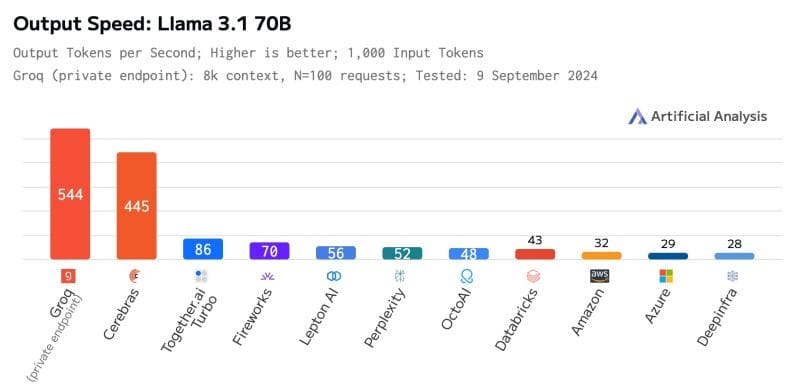

Although you can try it for yourself here, this graph is self-explanatory.

Importantly, this company also has a geopolitical advantage: despite being insanely fast, its chips are built on an older manufacturing process than state-of-the-art GPUs, so they can be produced inside the US without the cutting-edge manufacturing capabilities offered only in Taiwan.

This eliminates its exposure to TSMC, the global leader in chip manufacturing, and the potential war in the Taiwan Strait if China invades Formosa.

If Groq continues to work on its software stack to make its hardware attractive to developers, the reasons not to embrace its out-of-this-world token throughput are basically zero.

Etched, the Great Challenger

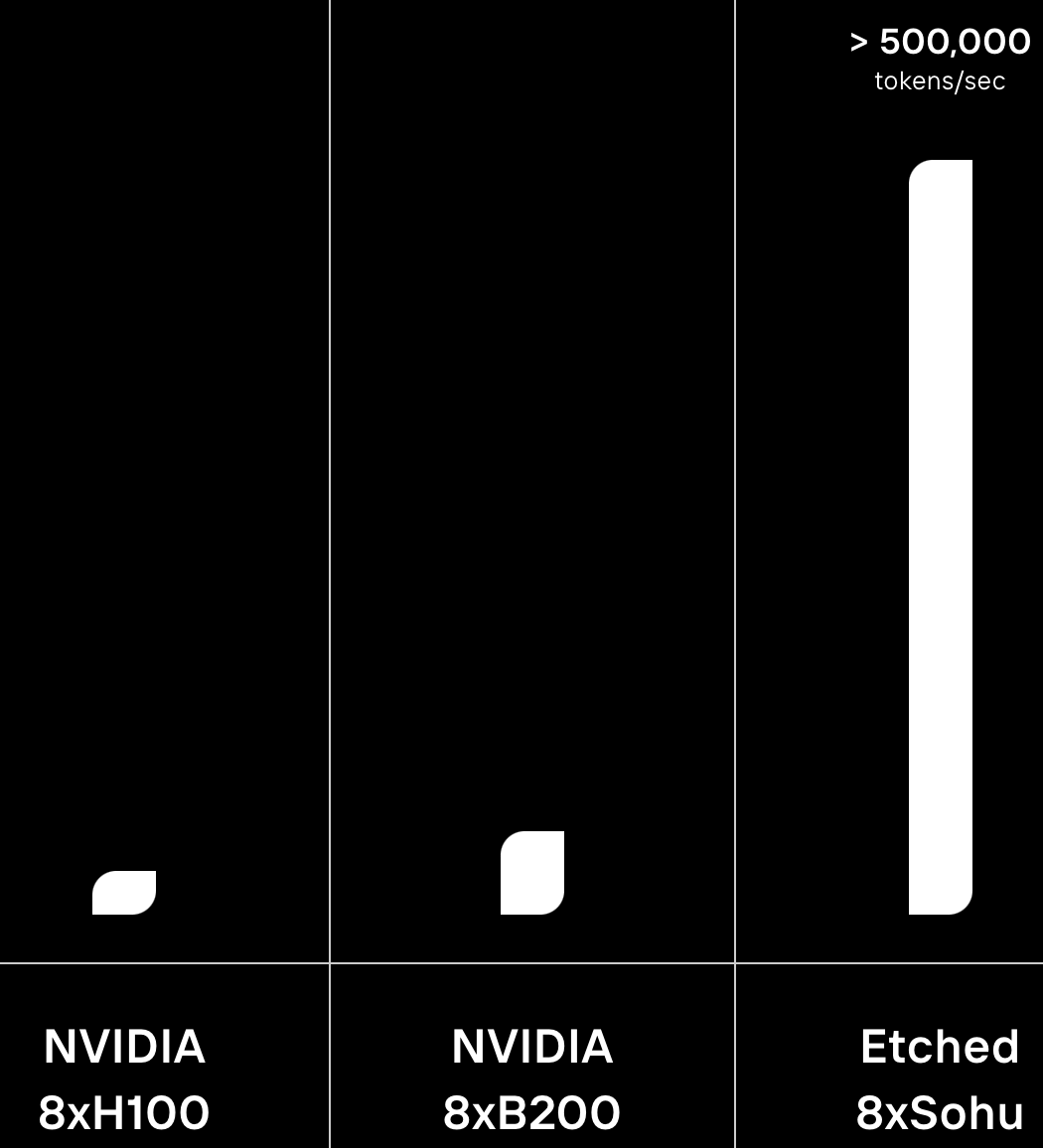

Moving on, we have the great disruptor of AI hardware, Etched. This start-up, which has raised over $100 million, is creating the Sohu chip, the first Transformer ASIC.

An ASIC (Application-Specific Integrated Circuit) is a chip designed for one very specific compute workload. Outside that workload it is useless, but it performs extremely well on that particular task.

In particular, this chip is designed exclusively for the Transformer architecture, which underpins all frontier models today. This is a risky move, as Transformers have notorious deficiencies. However, the truth is that they are still used by virtually everyone, and the upside of building an ASIC is unmatched performance.

If the company's claims are even remotely true, this chip offers inference throughput that no other hardware can match, not even Groq's LPUs.

However, while they are already using the chip internally with partners like Decart, they still need to prove they can walk the walk. Also, if the architectural paradigm changes and Transformers stop being used, Sohu becomes a $100 million broken product. But the promises are many, and if they are right, the reward is literally a multi-billion-dollar company in the making.

With hardware out of the way, we now move into the next layer: intelligence, a layer with well-known companies but also a set of upcoming players with star experience and much to prove.

The Intelligence Layer

While the progress in state-of-the-art model development is laid out for you in this article, there are many things to cover here that I'm very excited about.

As 2024 ends, we've seen the phase in which models accrue the most value shift from self-supervised learning (the pre-training phase that ingests the whole Internet into the model) to post-training, a further refinement of these models that has taken them to new heights (think of models like o3, Gemini Thinking, or Qwen QwQ).

And while adding search on top of LLMs is undoubtedly the main trend (again, see the previous link), how to do it right is largely left unexplained by mainstream media and AI influencers, because it's hard and, quite frankly, too complex for them to digest for you.

And that is what we are solving today by focusing on the real stars of the show, which aren't the foundation models themselves, as so-called influencers will tell you because they know no better.

The Year of Reward Engineering

A saying in the industry goes: “Your model is as intelligent as its verifier.” But what does that even mean?
