

The AI Trillion-Dollar Product

In a very recent interview, Satya Nadella, Microsoft’s CEO, claimed that current business applications will “collapse in the agent era.” Notably, he is referring to the very same apps his company currently sells. Thus, he is predicting the death of his company’s own business model in favor of AI agents.

But this vision implies a much more powerful change that Satya is less keen on mentioning because it directly threatens Microsoft’s raison d’être: the introduction of AI as a structural part of general-purpose computing. This is the end game of ChatGPT: the LLM Operating System, or LLM OS.

This vision is so powerful that it is unequivocally OpenAI’s grand plan. Today, we are distilling their vision into simple words. I believe this is one of my most didactic articles on the future of AI.

In it, you will:

Learn how modern computers work and how this is about to change completely,

Learn what the word ‘agents’ really means in full detail,

Learn about their most powerful use case, the LLM OS, which will serve as the platform on which most future software will be built, and look at an actual implementation of this idea,

Understand Meta’s strategy for continuing to open-source Llama models (and let me tell you, Zuck is two steps ahead of everyone else),

Finally, learn how dramatically this paradigm will change what computers are and, importantly, how humans work.

It’s not benchmarks that lured investors into assigning companies like OpenAI, Anthropic, or xAI the insane market values they enjoy today ($250 billion combined); it’s this precise trillion-dollar use case.

Let’s dive in.

Changing The Way You Do Everything

To understand how game-changing the idea of an LLM Operating System (LLM OS) is, we first need to take a few boring-but-short seconds to talk about classical computers.

A 10-second intro to computers

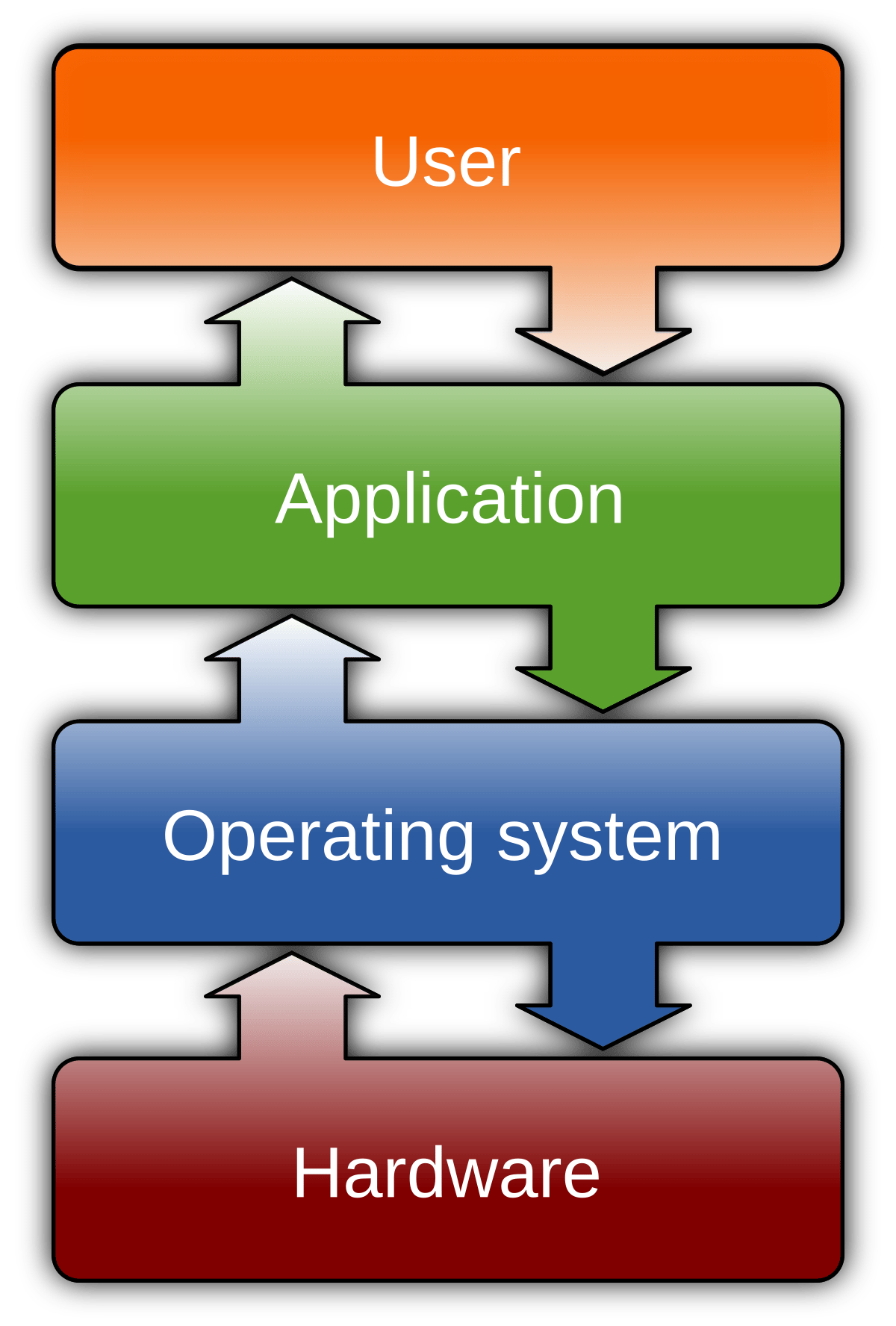

A computer combines hardware, an operating system, and software applications to perform tasks.

Hardware is the physical machinery, including the central processing unit (CPU), memory (RAM and storage), the Graphics Processing Unit (GPU), input devices like keyboards, and output devices like screens. The CPU is the “brain” that processes instructions, while memory stores and retrieves data as needed. The GPU handles the workloads the CPU offloads to it, mainly those that can be parallelized, like rendering pixels on a screen.

The operating system (OS) is the middle layer—like a bridge—between the hardware and the software applications the user interacts with. It manages hardware resources, runs background services, and provides a user-friendly interface for controlling the computer. The OS ensures that applications can run smoothly by managing files, memory, and tasks, making the hardware accessible without the user needing to understand its complexities.

Software applications are what users actually interact with to perform specific tasks, such as browsing the Internet, editing documents, or playing games.

In a nutshell, the hardware provides the foundation, the operating system coordinates everything, and software applications enable the user to accomplish specific tasks.

This is how all current classical computers behave. Importantly, in this paradigm, the human is in charge of actually doing what he/she wants, and the computer is designed to allow him/her to do so.

But what if LLMs (and, above all, Large Reasoning Models, or LRMs) were about to change that?

The Declarative Paradigm

Deep down, the biggest change LLMs have introduced is the idea that machines can now communicate with us—and with other machines—through natural language.

That means we can order them to do stuff in plain English (or any other language the LLM understands). From here, envisioning declarative software is trivial:

Declarative software is a new paradigm for technology and business in which humans declare what they want, and the AIs execute.

Of course, this means that AIs become agents. And, crucially, this idea of agents has its most powerful use case in the form of the LLM OS.

This must not be confused with reinforcement learning (RL) agents, the traditional definition of agents: AIs that interact with an environment (usually a physical one).

However, to understand the LLM OS, we first need to clarify some often misunderstood elements of agents.

The No-Hype Guide to Agents

So, what really is an AI agent? This term is everywhere these days. It’s the centerpiece of the SaaS market’s attempt to reinvent (and save) itself, and it is Level 3 of OpenAI’s roadmap to AGI.

But why is this idea so powerful? To understand it, we need to understand the different components of an AI agent: the AI, memory, and tools.

Words In, Words Out

Whenever we think about LLMs, we need to understand the interaction dynamics.

As we mentioned, they are models that can process your natural language input and provide a natural language response back. But in reality, what they are doing is completing the sequence.

Most Generative AI models today are sequence-to-sequence models; you give them a sequence of words/pixels/video… and they give you back another sequence.

Focusing on LLMs, you can think of these models as a queryable natural language database. The models are exposed to the entire open Internet and to proprietary datasets, and that knowledge is compressed into a digital file (where the model’s weights are) that users can query.

To abstract the complexity of performing such interaction, both closed and open labs build APIs (Application Programming Interfaces). You send your text to the API’s endpoint (the URL), and the API “magically” returns the response.
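To make the “send your text to the endpoint” step concrete, here is a minimal Python sketch that packages a prompt as an HTTP request to OpenAI’s Chat Completions endpoint without actually sending it. The URL and payload shape follow OpenAI’s public API; the model name is only an example assumption, and other vendors use different URLs and fields.

```python
import json
import urllib.request

# Assumption: OpenAI's public Chat Completions endpoint; other vendors differ.
API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(prompt: str, api_key: str, model: str = "gpt-4o-mini") -> urllib.request.Request:
    """Package a user prompt as an HTTP POST to the LLM's API endpoint."""
    payload = {
        "model": model,  # assumption: any chat model your account can access
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


req = build_chat_request("What is the capital of France?", api_key="sk-...")
print(req.get_method(), req.full_url)
```

Passing `req` to `urllib.request.urlopen` with a valid key would return the model’s JSON response; SDKs such as OpenAI’s official Python library wrap this same kind of request.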

There’s a common misconception that APIs are only used by labs like OpenAI or Anthropic, which don’t allow you to access the model directly. But this abstraction is also common in open source: the providers of the GPUs you rent serve the models through APIs too, even if you store the weights in your private cloud and have direct access to the model.

In Generative AI, everything revolves around APIs.

The issue with LLMs is that the model’s knowledge is compressed into a file that doesn’t change unless you actively retrain the model. However, we can add LLMs’ most powerful feature to the mix to solve this.

In-context Memory

LLMs are referred to as ‘foundation models’ because they can use new data they have not seen before. As long as that data is ‘in distribution’ (somewhat familiar relative to what they already know), they can use this newly acquired knowledge in real time.

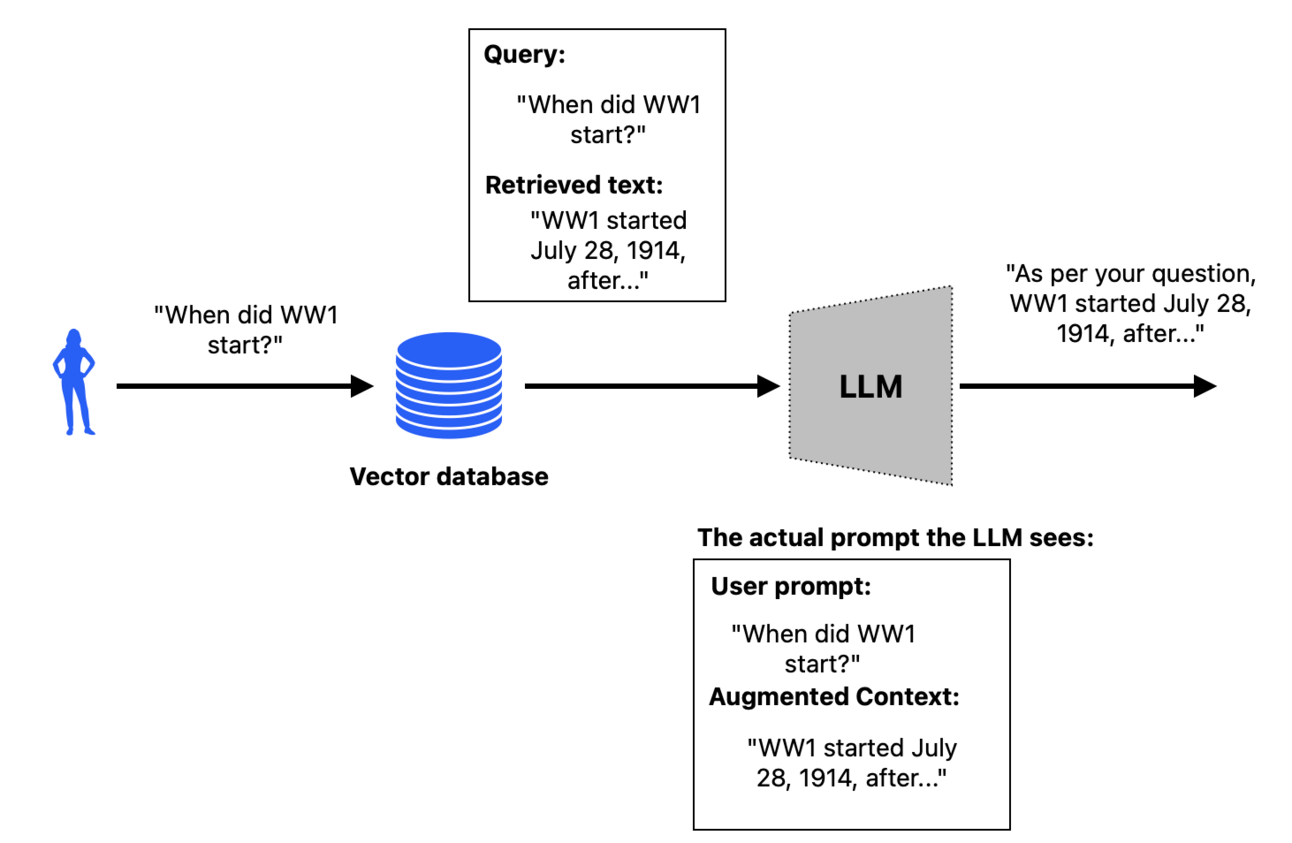

In other words, we can enrich the context we provide to the model in real-time so that it can better answer. This introduces the idea of Retrieval-Augmented Generation, or RAG. Before actually querying the model, we enrich the context through a memory module, which can be a vector database, a knowledge graph, a database, or a browser API.

Using the user’s input as a query to these modules, we extract meaningful context that may be valuable to the model. Then, we send all the data to the LLM’s API, which responds.
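The retrieve-then-generate flow above can be sketched in a few lines of Python. This is a toy under loud assumptions: the “memory module” is an in-memory list of three made-up documents, and bag-of-words cosine similarity stands in for the learned embeddings a real vector database would use. The output is the enriched prompt you would then send to the LLM’s API.

```python
import math
import re
from collections import Counter

# Toy memory module: a real system would use a vector database with embeddings.
DOCUMENTS = [
    "Llama models are released with open weights by Meta.",
    "The CPU processes instructions while RAM stores working data.",
    "RAG retrieves relevant context before querying the language model.",
]


def vectorize(text: str) -> Counter:
    """Bag-of-words vector: lowercase word counts (stand-in for an embedding)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, k: int = 1) -> list[str]:
    """Use the user's input as a query and return the k most similar documents."""
    q = vectorize(query)
    ranked = sorted(DOCUMENTS, key=lambda d: cosine(q, vectorize(d)), reverse=True)
    return ranked[:k]


def build_augmented_prompt(query: str, k: int = 1) -> str:
    """Prepend the retrieved context to the question before calling the LLM."""
    context = "\n".join(retrieve(query, k))
    return f"Context:\n{context}\n\nQuestion: {query}"


print(build_augmented_prompt("Who releases the Llama models?"))
```

Swapping the word counts for real embeddings and the list for a vector database changes the retrieval quality, not the overall shape of the pipeline.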

Moving on, we arrive at the third component, probably the first thing people associate agents with: function-calling. You’ve probably heard of this term, but it’s generally extremely poorly explained.

Let’s fix this.

The Power of Tools

When you understand LLMs are just sequence-to-sequence models, you quickly realize their limitations. In most cases, they are quite literally memorizing the exact sequence, as we saw with Apple’s painful-to-read research where they destroyed this notion that LLMs ‘reason.’

While this works fine with text, other areas, like math, are another story. The model may have seen the sequence ‘1+1=2’ several times, but not the sequence ‘1-7+8,’ which gives the exact same answer. This leads them to make silly mistakes in remarkably simple situations.

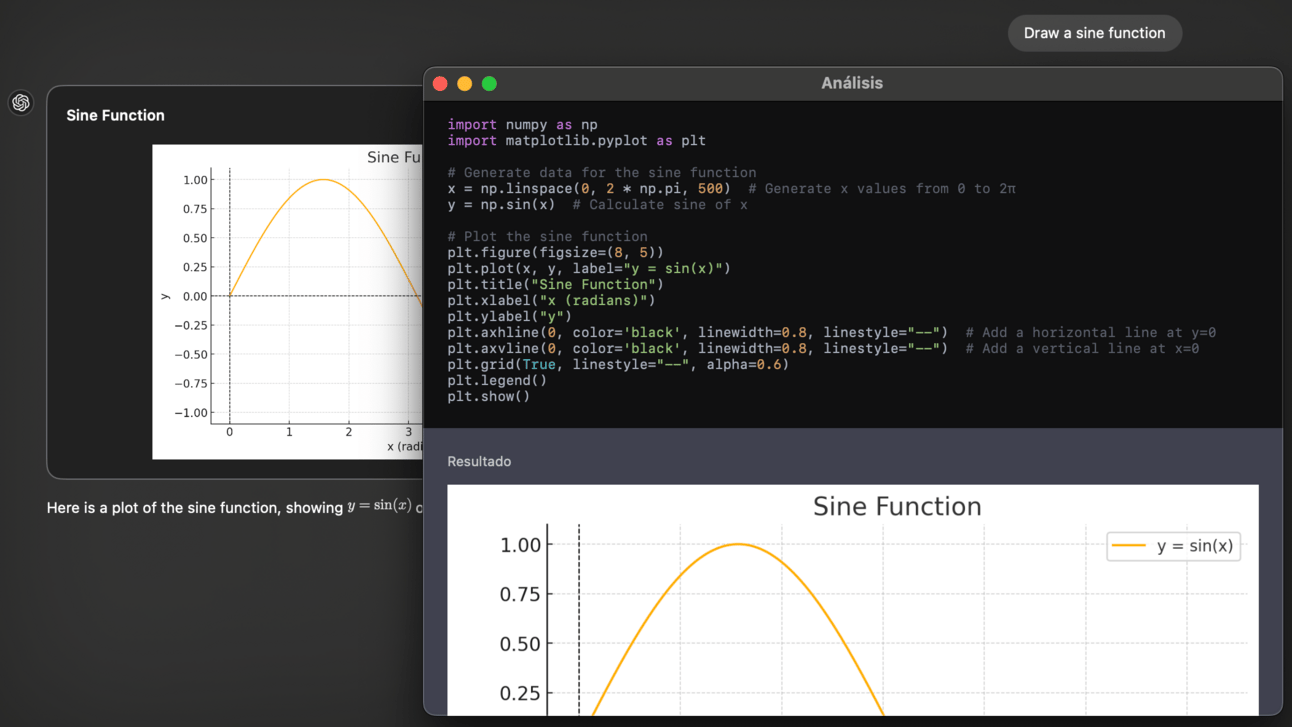

Therefore, companies like OpenAI increasingly rely on symbolic tools to improve ChatGPT’s performance. For instance, when you give ChatGPT a math problem, it will often autonomously open a coding environment, write code, run it, and return the results, ensuring that the calculations are done correctly.
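As a rough illustration of why a symbolic tool fixes the ‘1-7+8’ problem, the hypothetical `calculator` below parses the expression and computes it exactly instead of predicting the answer token by token. It is a minimal stand-in for a sandboxed coding environment, not how ChatGPT actually implements it; it only accepts basic arithmetic and rejects everything else.

```python
import ast
import operator

# Whitelisted operators: this tool only does arithmetic, nothing else.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.Pow: operator.pow,
    ast.USub: operator.neg,
}


def calculator(expression: str) -> float:
    """Evaluate an arithmetic expression exactly by walking its syntax tree."""
    def _eval(node: ast.AST):
        if isinstance(node, ast.Expression):
            return _eval(node.body)
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.left), _eval(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.operand))
        # Function calls, names, attributes, etc. are refused.
        raise ValueError(f"Unsupported expression: {expression!r}")

    return _eval(ast.parse(expression, mode="eval"))


print(calculator("1-7+8"))  # 2
```

The model no longer needs to have seen ‘1-7+8’ before; it only needs to predict that a calculation is required and hand the expression to the tool.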

But have you ever wondered how this interaction works under the hood? How does the LLM know when to use these tools?

And the key to answering those questions is that these models have been trained with this precise API interaction in mind from the very beginning. Specifically, as part of their vocabulary, they have special tokens, like <BoS> (Beginning of Sequence) and <EoS> (End of Sequence).

But they also have special tokens that the model predicts when it decides that the next word in the sequence to predict isn’t actually a word… but a tool.

Just like they can predict that, for the sequence “The capital of France is…” the next token is “Paris,” they can also predict that for the sequence “Please give me the latest news on AI,” which isn’t something they know because it’s very recent, the next token is <Browser>. Simply put, the model has just predicted that it needs to use a browser API to search for the answer.

This isn’t something you see when interacting with ChatGPT’s web interface, because these special tokens are obviously hidden from you.

After the LLM predicts that special token, its surrounding runtime (its coding environment) executes a function call to the search API, retrieves the information, and parses it. The result is text that contextualizes the question, which is then fed back to the LLM so it can respond based on this enriched context. Of course, this also applies to any other tool, like ChatGPT’s Code Interpreter.
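The loop below is a toy simulation of that flow, under stated assumptions: `fake_model` is a hypothetical stand-in for an LLM’s next prediction, `search_tool` is a hypothetical browser API, and `<Browser>` is the article’s illustrative token (real models use their own vendor-specific tokens). When the model emits a tool token, the runtime calls the matching function, appends the result to the context, and asks the model again.

```python
# Hypothetical special token from the article's example; real models use
# their own vendor-specific tokens, which chat interfaces hide from users.
BROWSER_TOKEN = "<Browser>"


def search_tool(query: str) -> str:
    """Hypothetical browser API; a real one would call a search service."""
    return f"[search results] top headlines for: {query}"


# Maps each special tool token to the function the runtime should execute.
TOOL_TOKENS = {BROWSER_TOKEN: search_tool}


def fake_model(context: str) -> str:
    """Stand-in for an LLM's next prediction given the current context."""
    if "[search results]" in context:
        # Context has been enriched: answer using the last retrieved line.
        return "Answer based on: " + context.splitlines()[-1]
    if "latest news" in context:
        # Too recent for the frozen weights: predict the tool token, not a word.
        return BROWSER_TOKEN
    return "Paris"  # plain completion, e.g. for "The capital of France is"


def run(user_input: str, model=fake_model, max_steps: int = 3) -> str:
    context = user_input
    for _ in range(max_steps):
        output = model(context)
        if output not in TOOL_TOKENS:
            return output  # ordinary text: this is the answer
        # The runtime, not the model, executes the tool and feeds the result back.
        context += "\n" + TOOL_TOKENS[output](user_input)
    raise RuntimeError("Tool loop did not finish within max_steps")


print(run("The capital of France is"))
print(run("Please give me the latest news on AI"))
```

The `max_steps` cap is a design choice of this sketch: it stops a model that keeps requesting tools from looping forever.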

ChatGPT has a code interpreter to generate code to support its responses. Source: Author

So, what is function calling?

When you interact with OpenAI’s APIs (or any vendor API with function-calling support), you can provide a list of tools to the model to make it “aware” it has access to them. Then, when the LLM discerns it needs to use a tool, the API response signals this instead of returning a final answer: OpenAI’s API sets ‘finish_reason’ to ‘tool_calls’, while Anthropic’s sets ‘stop_reason’ to ‘tool_use’, and the response includes the name of the tool to call along with its arguments.
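A minimal sketch of the developer’s side of this exchange, assuming OpenAI’s Chat Completions format: `TOOLS_SCHEMA` is what you would pass in the request’s `tools` parameter, `get_weather` is a hypothetical function, and `mock_response` is a hand-written dictionary in the documented response shape, not real API output. Note that the API only suggests the call; your code has to run it.

```python
import json

# Tool list in the shape OpenAI's Chat Completions API accepts via `tools`.
# `get_weather` is a hypothetical example function.
TOOLS_SCHEMA = [
    {
        "type": "function",
        "function": {
            "name": "get_weather",
            "description": "Get the current weather for a city.",
            "parameters": {
                "type": "object",
                "properties": {"city": {"type": "string"}},
                "required": ["city"],
            },
        },
    }
]


def get_weather(city: str) -> str:
    """Hypothetical local implementation; the API never runs this for you."""
    return f"Sunny in {city}"


LOCAL_FUNCTIONS = {"get_weather": get_weather}


def handle_response(response: dict) -> list[dict]:
    """If the model requested tools, run them and build the 'tool' reply messages."""
    choice = response["choices"][0]
    if choice["finish_reason"] != "tool_calls":
        return []
    messages = []
    for call in choice["message"]["tool_calls"]:
        fn = LOCAL_FUNCTIONS[call["function"]["name"]]
        args = json.loads(call["function"]["arguments"])  # arguments arrive as a JSON string
        messages.append({"role": "tool", "tool_call_id": call["id"], "content": fn(**args)})
    return messages


# Hand-written response in the documented shape (not real API output).
mock_response = {
    "choices": [{
        "finish_reason": "tool_calls",
        "message": {
            "role": "assistant",
            "content": None,
            "tool_calls": [{
                "id": "call_1",
                "type": "function",
                "function": {"name": "get_weather", "arguments": "{\"city\": \"Paris\"}"},
            }],
        },
    }]
}

print(handle_response(mock_response))
```

In a real exchange, you would append the assistant message containing `tool_calls`, followed by these tool messages, to the conversation and send a second request so the model can write its final answer.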

While the browser versions of these models, like ChatGPT or Claude, perform this abstraction for you, if you’re interacting directly with the API, your script must include a function that actually executes the tool, unless native support is provided (like OpenAI’s API, which supports native RAG and will support browsing without you having to configure either).

Based on all this, we can now more appropriately define an LLM agent: an LLM that can leverage external memory and tool modules to perform actions on behalf of its users.

Now that we truly understand what on Earth is going on under the ‘LLM Agent’ idea, we can finally discuss the most important value proposition emerging from AI:

The LLM operating system that will enable this declarative paradigm to become a reality and forever transform how we interact with our computers and the digital world in general.

Enter The LLM OS

As we discussed earlier, computers are hardware devices that can run software applications through a bridge known as the operating system.

But now, with LLMs/LRMs (from now on referred to collectively as ‘LLMs’), we can transform our computers into devices that follow and act on our instructions instead of simply allowing us to interact with software. The way to get there is to turn the inner workings of our computers into, literally, a conversation between autonomous agents.

Yes, you read that right. But what does that even mean?
