THEWHITEBOX

Why Optics is the New Memory

In AI markets, memory chip companies, and the ‘memory trade’ in general, have absorbed most of the attention as the world crowns memory the mother of all bottlenecks.

Well… not quite.

Instead, the real answer will eventually be networking, which explains why optics is the next big thing in AI. And to put my money where my mouth is, I’m announcing my next stock investment today.

By the end of this article, you’ll understand the instrumental value of optics for AI: the ‘ins and outs’ of AI hardware scaling, the crucial role optics plays in it, why it will displace copper, and the intricacies of the technology (switches, CPO, etc.). Together, they will give you a nearly unparalleled intuition of what to expect from AI over the next decade.

In the age of AI, everyone is talking about compute while everyone should be talking about networking.

Let’s uncover why.

This is not investment advice. I’m just sharing my investments in the spirit of transparency and because many of you have shown great interest in knowing what I invest in. If anything, this is an explanation with as much value for those who want to understand the technology as for those who want to make better investment decisions.

However, I can’t guarantee profits. Please do your own research before making any decisions.

Stop Looking at Compute, Look at Networking!

Kim Jeung-ho is the father of High Bandwidth Memory, or HBM, the memory technology used in AI data centers today and the most coveted product on the entire planet right now, and I’m not even kidding.

And while today’s article is about optics, the point I’m trying to make is that we are in the midst of a profound narrative shift regarding ‘what matters in AI’.

And what will soon matter most won’t be compute, but two other things: first memory, then optics.

The Importance of Communication

I wouldn’t blame you for thinking that, whenever you interact with an AI model, what’s “on the other side” is a GPU that stores the model, runs your query, and responds.

But that couldn’t be further from the truth.

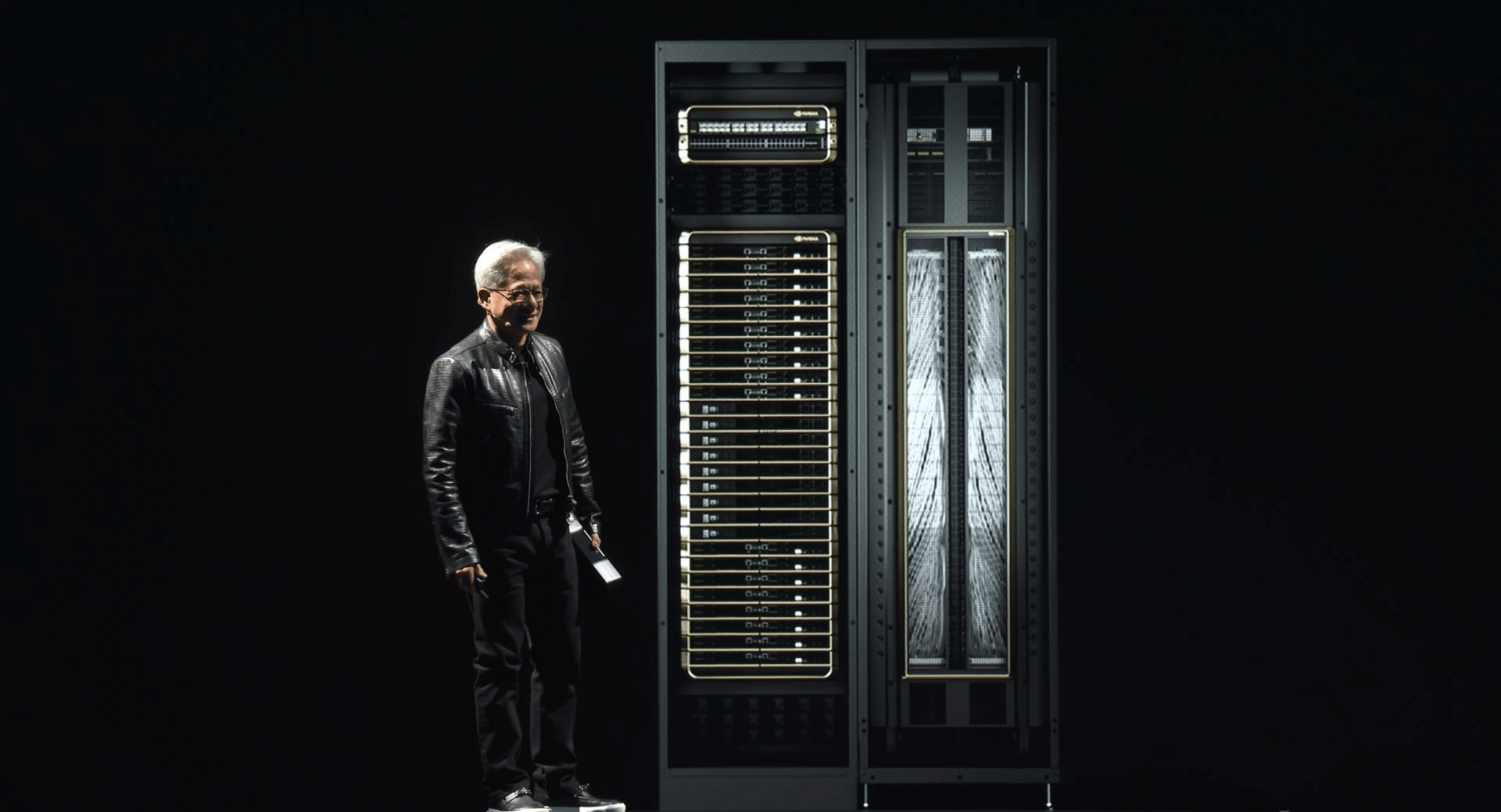

On the other side, you have an entire server worth millions of dollars, housing 72 NVIDIA Graphics Processing Units (GPUs) tightly connected to one another, responding to you.

NVIDIA’s CEO, next to one of these racks

This means the actual AI is distributed across several accelerators, communicating over kilometers (about 3.4 km, or ~2 miles) of copper. This wiring, central to today’s discussion, is called ‘scale-up’ networking gear.

Ok, so? Simple:

When a computation workload is distributed, that usually means that the real bottleneck is not how powerful your computer is, but how fast your distributed hardware communicates.

For example, if your GPU can process 100 operations per second but, due to communication constraints, you can only feed it 10, it will process those 10 very quickly, but the limitation is palpable: you are leaving 90% of its performance on the table, which translates into heavy economic losses, both in opportunity cost and in energy consumption (the GPU isn’t profitable).

It’s like making an Olympic swimmer race kids; they still win, but you’re still paying them as if they were racing other Olympic swimmers.
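The swimmer problem boils down to a one-line rule: a GPU can’t compute faster than data reaches it. Here is a minimal Python sketch of that rule using the illustrative 100-vs-10 numbers above. It is a deliberately simplified, roofline-style model (the function names are hypothetical) that ignores the caching, batching, and overlap tricks real systems use to hide communication.

```python
def effective_throughput(peak_ops_per_s: float, fed_ops_per_s: float) -> float:
    """The GPU works no faster than its peak, and no faster than data arrives."""
    return min(peak_ops_per_s, fed_ops_per_s)


def utilization(peak_ops_per_s: float, fed_ops_per_s: float) -> float:
    """Share of the GPU's (paid-for) capacity that does useful work."""
    return effective_throughput(peak_ops_per_s, fed_ops_per_s) / peak_ops_per_s


peak, fed = 100.0, 10.0  # illustrative numbers from the text
print(f"effective: {effective_throughput(peak, fed):.0f} ops/s")  # effective: 10 ops/s
print(f"utilization: {utilization(peak, fed):.0%}")               # utilization: 10%
```

The other 90% of the capacity is still paid for, in hardware and in power, which is exactly the economic loss described above.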

In practice, especially among investors who always desire simplified narratives they can understand, this is usually referred to as the ‘memory bottleneck’, because the most obvious limiting factor is how much data you can feed to your compute cores.

But, my dear reader, that’s only half the picture.

Scaling up

Say you can run an AI model on just two GPUs. Inside each, you have the compute chips (where the magic happens, and what Jensen Huang, somewhat misleadingly for laypeople, calls a ‘GPU’) and the memory chips.

Between them, you have a data highway they both use to communicate. In AI, that highway is very wide, a hallmark of HBM, which makes this communication insanely fast.

HBM is not about making the lanes super fast, but having a lot of lanes. Source: Author
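To make the ‘more lanes, not faster lanes’ idea concrete: peak memory bandwidth is roughly the number of lanes (bus width) times the per-lane data rate. The sketch below assumes a 1,024-bit interface per HBM stack (the width used by HBM generations through HBM3E) and an illustrative 8 Gbit/s per pin. The narrow bus and the eight-stack count are hypothetical comparison points, not the specs of any particular product.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, gbit_per_pin: float) -> float:
    """Peak bandwidth = number of lanes x per-lane rate, converted from bits to bytes."""
    return bus_width_bits * gbit_per_pin / 8


# Assumed, illustrative figures (not vendor specs):
hbm_stack = peak_bandwidth_gb_s(bus_width_bits=1024, gbit_per_pin=8.0)   # very wide, moderate speed
narrow_fast = peak_bandwidth_gb_s(bus_width_bits=64, gbit_per_pin=16.0)  # narrow, 2x faster per lane

print(f"one HBM-like stack: {hbm_stack:.0f} GB/s")              # 1024 GB/s
print(f"eight stacks:       {8 * hbm_stack / 1000:.1f} TB/s")   # 8.2 TB/s
print(f"narrow, fast bus:   {narrow_fast:.0f} GB/s")            # 128 GB/s
```

Even with each lane running at half the speed, the wide interface moves eight times more data, which is the whole point of HBM.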

However, what happens when you have to make this GPU communicate with another?

At this point, you not only have to consider your memory bandwidth, but you also need to account for the pace at which memory receives data from other pieces of hardware through wires connecting the GPUs.

Here, data is no longer just traveling back and forth between the memory and compute chips inside a GPU; it actually leaves the GPU package and travels a distance that must be shorter than two meters (more on why later) through copper wires to what we call a ‘scale-up electrical switch’, a piece of hardware that connects all GPUs inside the server in an ‘all-to-all’ topology and that will become very important later in this article.

But what’s the point of a ‘switch’? In short, switches allow two GPUs to communicate directly without being wired together.

The reason is simple: wiring every GPU directly to every other GPU quickly gets out of hand. With 3 GPUs, we need just three cables. Great. However, with 4 GPUs, you need 6 connections; with 5 GPUs, 10; with 6 GPUs, 15, and so on. As you can see, the number of connections grows superlinearly (quadratically, in fact), i.e., faster than the number of GPUs.

If you do the math, the actual formula for calculating connections is (n(n-1))/2, where ‘n’ is the number of GPUs. That means we would need 2,556 connections for 72 GPUs. With a switch, we just need, theoretically speaking, 72.
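The counts above follow directly from the formula. A quick Python check, where `direct_links` and `switched_links` are illustrative helper names and the switched case assumes one idealized switch with one cable per GPU:

```python
def direct_links(n: int) -> int:
    """Cables needed to wire every GPU directly to every other GPU: n(n-1)/2."""
    return n * (n - 1) // 2


def switched_links(n: int) -> int:
    """Idealized single switch: one cable per GPU."""
    return n


for n in (3, 4, 5, 6, 72):
    print(n, direct_links(n), switched_links(n))
# 3 3 3
# 4 6 4
# 5 10 5
# 6 15 6
# 72 2556 72
```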

In practice, we still use many more connections in AI servers. In NVIDIA’s 72-GPU servers, that’s 1,296 to be exact, across 18 switches.

The reason is that a single switch cannot provide sufficient bandwidth for all 72 GPUs. Each copper cable is currently limited to 800 Gbits/second (100 GB/s), and each GPU has 18 connections, giving a total GPU-to-GPU bandwidth of 1.8 TB/s, which is the number quoted by NVIDIA in NVLink 5, their current scale-up technology.
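The NVL72 numbers hang together with simple multiplication. This sketch uses only the figures quoted above (72 GPUs, 18 links per GPU, 800 Gbit/s per link, 18 switches) and assumes the links are spread evenly across the switches, which is how a 72-ports-per-switch figure falls out:

```python
GPUS = 72
LINKS_PER_GPU = 18
GBIT_PER_LINK = 800
SWITCHES = 18

gb_s_per_link = GBIT_PER_LINK / 8                     # 800 Gbit/s -> 100 GB/s
total_links = GPUS * LINKS_PER_GPU                    # GPU-to-switch connections
ports_per_switch = total_links // SWITCHES            # assumes an even spread
per_gpu_tb_s = LINKS_PER_GPU * gb_s_per_link / 1000   # aggregate per-GPU bandwidth

print(total_links, ports_per_switch, per_gpu_tb_s)    # 1296 72 1.8
```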

Don’t worry too much about the math, but focus more on what the takeaway here is.

Besides the fact that switches are very important in AI (and we’ll later see why they are crucial for our investment thesis), two crucial points must be very clear when we think about AI workloads:

Communication, not compute, sits at the center of “what’s important”, and

We can’t think about communication as just memory: the interconnections between GPUs in a single server (and beyond, as we’ll now see) are just as important. Actually, even more so, because they are a bigger bottleneck than memory itself.

But before we focus on optics, we need to clarify that AI infrastructure scales way beyond a single server.

Scaling out

In training clusters, the AI data centers where the models are trained (created), a single server doesn’t even get you started. In fact, even the most advanced servers aren’t enough; you need many.

A simple calculation tells us why. The most powerful AI server today, NVIDIA’s GB300 NVL72 (known as Blackwell Ultra), would take months to train GPT-4, despite this model being ancient by AI standards, trained even before the first ChatGPT came into our lives in November 2022.

GPT-4 required ~$1\times10^{25}$ computations to be trained. A single Ultra server outputs 720 PetaFLOPs, or 720,000 trillion operations per second (in practice, it’s way less), or $720\times10^{15}$. A simple calculation shows that the server would take $\frac{1\times10^{25}}{720\times10^{15}} \approx 1.39\times10^{7}$ seconds to train that model, a little under 6 months.
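The same back-of-the-envelope calculation in Python, using the article’s figures. The `utilization` parameter is an added assumption to reflect the ‘in practice, it’s way less’ caveat; the 40% value is purely hypothetical, not a measured number for this hardware.

```python
SECONDS_PER_DAY = 86_400
DAYS_PER_MONTH = 30.44  # average month length


def training_days(total_flop: float, peak_flop_per_s: float, utilization: float = 1.0) -> float:
    """Days to train, given total operations, peak throughput, and the fraction of peak achieved."""
    return total_flop / (peak_flop_per_s * utilization) / SECONDS_PER_DAY


GPT4_FLOP = 1e25        # the article's estimate for GPT-4
SERVER_FLOP_S = 720e15  # one Blackwell Ultra server at peak, per the article

ideal = training_days(GPT4_FLOP, SERVER_FLOP_S)
print(f"at peak: {ideal:.0f} days (~{ideal / DAYS_PER_MONTH:.1f} months)")  # at peak: 161 days (~5.3 months)

realistic = training_days(GPT4_FLOP, SERVER_FLOP_S, utilization=0.4)  # hypothetical 40% of peak
print(f"at 40% of peak: {realistic:.0f} days")  # at 40% of peak: 402 days
```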

Therefore, in training, we may need thousands of interconnected servers inside the same building, an approach known as ‘scale-out’, to train models faster.

This is why we are talking about gigawatt-scale data centers: without them, there’s no ‘next frontier model’, considering that training budgets today are on the order of 100 to 1,000 times bigger than GPT-4’s, budgets a single Blackwell Ultra server would take decades or even centuries to get through.
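Extending that arithmetic shows why scale-out is unavoidable. This sketch reuses the article’s GPT-4 estimate (1e25 operations) and 720 PFLOP/s server figure, takes the 100x and 1,000x multipliers from the text, and assumes a hypothetical 90-day training window with perfect linear scaling at peak throughput. Both assumptions are optimistic, so real clusters need more servers than it reports.

```python
import math

GPT4_FLOP = 1e25
SERVER_FLOP_S = 720e15
SECONDS_PER_DAY = 86_400
SECONDS_PER_YEAR = 365.25 * SECONDS_PER_DAY


def years_on_one_server(multiplier: float) -> float:
    """Years a single server would need for a budget `multiplier` times GPT-4's."""
    return multiplier * GPT4_FLOP / SERVER_FLOP_S / SECONDS_PER_YEAR


def servers_needed(multiplier: float, target_days: float) -> int:
    """Servers needed to finish within target_days, assuming perfect linear scaling at peak."""
    total_seconds = multiplier * GPT4_FLOP / SERVER_FLOP_S
    return math.ceil(total_seconds / (target_days * SECONDS_PER_DAY))


for m in (100, 1000):
    print(f"{m}x GPT-4: ~{years_on_one_server(m):.0f} years on one server, "
          f"{servers_needed(m, 90)} servers for a 90-day run")
# 100x GPT-4: ~44 years on one server, 179 servers for a 90-day run
# 1000x GPT-4: ~440 years on one server, 1787 servers for a 90-day run
```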

Crucially, training budgets are so massive that, for today’s most advanced AIs, a single data center doesn’t cut it; you need many, leading to multi-datacenter training, known as ‘scale-across’.

So, in the overall picture, one realization emerges: networking is as vital as it is ignored by markets.

But for how long? Well, not long. In fact, some investors are already noticing.

The optics thesis: the all-optical vision, NVIDIA’s end goal.

Unbeknownst to many, optical hardware already plays a very important role in AI, serving as the main gear for scaling out and scaling across.

As we saw in our China analysis a few weeks ago, Chinese servers already use optical technology for scaling up, too (interconnections inside servers are also optical), which is the future of US servers and one of the crucial takeaways of today’s newsletter.

But the reason is not that “China is living in the future and the US in the past”. They were simply forced to do it.

Here’s why.

Inference and the role of scale-up

While copper can be, in itself, a great investment due to the massive shortages we’re going to see throughout the next 15 years (more on this in a future issue), when it comes to AI networking, it’s actually on its way out.

As we’ve learned, AIs are extremely dependent on high-bandwidth communication between accelerators (e.g., GPUs).

That is, it’s useless to have very powerful GPUs computationally speaking if data from other GPUs/servers takes forever to reach them.

And when it comes to bandwidth, networking is an even larger bottleneck than memory.

Simple maths shows us why. GPU compute chips receive data from three different places (ignoring scale-across, which is quite niche):

In-package: From their own memory chips inside the actual GPU package, at around 8 TB/s today, up to 13 TB/s with the upcoming NVIDIA Rubin chips.

Scale-up: From other GPUs in the same server, at a pace of 1.8 TB/s in Blackwell Ultra.

Scale-out: From other servers, the number drops to a staggering 100 GB/s, 18 times slower.
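To see what this bandwidth hierarchy means in practice, here is how long moving the same data would take over each tier, at the rates listed above. The 10 GB payload is a hypothetical size chosen for illustration, and the sketch ignores latency, protocol overhead, and congestion, so real transfers would be slower.

```python
# Peak rates from the text, in GB/s
TIERS_GB_S = {
    "in-package (HBM)": 8_000,   # ~8 TB/s today
    "scale-up (NVLink)": 1_800,  # 1.8 TB/s in Blackwell Ultra
    "scale-out": 100,            # 100 GB/s between servers
}


def transfer_ms(payload_gb: float, rate_gb_s: float) -> float:
    """Idealized transfer time in milliseconds: payload / bandwidth, nothing else."""
    return payload_gb / rate_gb_s * 1_000


PAYLOAD_GB = 10  # hypothetical payload
for tier, rate in TIERS_GB_S.items():
    print(f"{tier:<18} {transfer_ms(PAYLOAD_GB, rate):7.2f} ms")

print(TIERS_GB_S["scale-up (NVLink)"] / TIERS_GB_S["scale-out"])  # 18.0
```

The same payload that crosses HBM in about a millisecond takes around a tenth of a second over scale-out, which is why latency-sensitive work stays inside the server.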

Currently, the optics thesis is that optics is already very important for scale-out, making it vital for scaling AI training and for making gigawatt-scale data centers a reality. That’s a lot of fiber-optic cable and already places optics top-of-mind for NVIDIA et al., but here’s the thing: it’s somewhat priced in already, and we need to think bigger.

The truth is, scaling out has a somewhat limited market opportunity; the real business comes with scale-up, because the wiring density is on another level (miles of cable in a single server, remember!).

Crucially, the importance of scale-up stems from inference, which is already the predominant AI workload.

The point is that inference avoids scale-out: the severe bandwidth drop would translate into unbearable latency for the end user. This is not a problem in training, which is more about processing as much data per second as possible, but it’s a deal-breaker for inference.

In a nutshell, today, inference doesn’t care about scale-out and thus optics at all (except for Chinese servers).

Therefore, considering inference’s top-of-mind position in the industry, scale-up has become the biggest bottleneck and thus the most important thing, to the point that many people sum it up as “scale-up is all that matters”.

But then, is optics becoming irrelevant? No, quite the contrary, because it’s the future of scale-up.

The end of copper.

Let’s understand why optics is going to displace copper as the communication medium in scale-up. We have already internalized that networking is what determines how performant your AI workloads are in inference. This means we need to make things much faster.

Much, much faster.

One option is to increase each connection’s data rate from today’s limit of 100 GB/s to 200 GB/s, which is exactly what the industry is in the process of doing. We can do this with copper as long as each cable is shorter than 2 meters (~6.5 feet), but it results in much higher power requirements and greater losses.

Put simply, if you want to double the amount of data a copper connection can transmit, you will start losing significant signal in the process. This increases the need for electronic devices like DSPs (Digital Signal Processors) to recover the signal, which in turn sends power consumption skyrocketing.

Worse, networking gear in its current state already accounts for a percentage of a data center’s total energy consumption well into the double digits. Coupled with the fact that, unless you’re living under a rock, you know we are severely power-constrained, this is anything but good news; it may simply be prohibitive.

Another option is to double the connections to each GPU. Problem solved, right? It would be, if this were Alice in Wonderland; sadly, it isn’t, and things take up space.

Recall that we already have 1,296 GPU-to-switch connections; miles of cabling. Doubling them in such a constrained space (recall the server image with Jensen) is like trying to pack twice as many clothes into a suitcase that is already full.
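Both options so far double per-GPU scale-up bandwidth; they differ in where the cost lands. A minimal sketch using the NVL72-style figures from earlier (72 GPUs, 18 links per GPU at 100 GB/s), where the `scale_up` helper is hypothetical and only counts bandwidth and cables, not power or signal loss:

```python
GPUS = 72


def scale_up(links_per_gpu: int, gb_s_per_link: float) -> tuple[float, int]:
    """Return (per-GPU bandwidth in TB/s, total GPU-to-switch links in the server)."""
    return links_per_gpu * gb_s_per_link / 1000, GPUS * links_per_gpu


print("today:        ", scale_up(18, 100))  # (1.8, 1296)
print("faster links: ", scale_up(18, 200))  # (3.6, 1296)
print("more links:   ", scale_up(36, 100))  # (3.6, 2592)
```

Faster links keep the cable count but push each copper link harder (more loss, more DSP power); more links keep the per-link rate but double the cables crammed into the same rack.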

So let’s double the server size then!

Well, here we run into the third issue: as we mentioned, copper is not viable beyond 2 meters. In layman’s terms, once copper wires are longer than that, the high data rates entail too much loss, which explains why scale-out gear is already all-optical.

This also explains why Chinese servers like Huawei’s CloudMatrix are all-optical from the get-go: their chips are less powerful, so they need many more of them, which makes servers physically enormous and forces every connection to be optical.

A Huawei CloudMatrix server, 16 times larger than NVIDIA’s biggest server.

Sadly for copper enthusiasts, all roads lead to Rome, and Rome was not built with copper.

Instead, it’s going to be built with optical fiber.

And this leads to one of the most transformational changes in AI this decade, which I have already mentioned: the arrival of optical scale-up, a very large market with huge annual growth potential that will soon be all-optical.

Unbeknownst to most investors, optics represents the solution to many of AI’s biggest scaling problems.

And there’s no one better positioned to ride this huge market opening up to optics than one of the lesser-known companies already in bed with key players like NVIDIA and Google: the company I’ve invested in and that I want to talk to you about today.
