For business inquiries, reach out to me at [email protected]

THEWHITEBOX

TLDR;

🤯 Google Releases Gemini 2.0

🫣 OpenAI’s Sora Issues Start to Shine Through

🚨 Google Asks the FTC To Kill the OpenAI-Microsoft Partnership

😏 Devin is Finally Available

👩‍🔬 Apple is Working on an AI Server Chip

🤖 The Great X Experiment

[TREND OF THE WEEK] Uncovering OpenAI’s Frontier AI Strategy

Billionaires wanted it, but 66,930 everyday investors got it first.

When incredibly valuable assets come up for sale, it's typically the wealthiest people that end up taking home an amazing investment. But not always…

One platform is taking on the billionaires at their own game, buying up and securitizing some of the most prized blue-chip artworks for its investors.

It's called Masterworks. Their nearly $1 billion collection includes works by greats like Banksy, Picasso, and Basquiat. When Masterworks sells a painting – like the 23 it's already sold – investors reap their portion of the net proceeds.

In just the last few years, Masterworks investors have realized net annualized returns like +17.6%, +17.8%, and +21.5% (from 3 illustrative sales held longer than one year).

Past performance not indicative of future returns. Investing Involves Risk. See Important Disclosures at masterworks.com/cd.

PREMIUM CONTENT

New Premium Content

The Incredible Story of Bolt.New: A deep dive into AI’s hottest product right now, which has grown to $8 plus in six weeks, and why you should be using it today. (Full Premium Subscribers)

Modeling Reward Functions with GFlowNets. A more detailed overview of how we currently use reward functions to train GFlowNets (GFNs), the alleged secret behind OpenAI’s o1 models. (All Premium Subscribers)

NEWSREEL

Google Releases Gemini 2.0

It’s finally here. Google has unveiled Gemini 2.0.

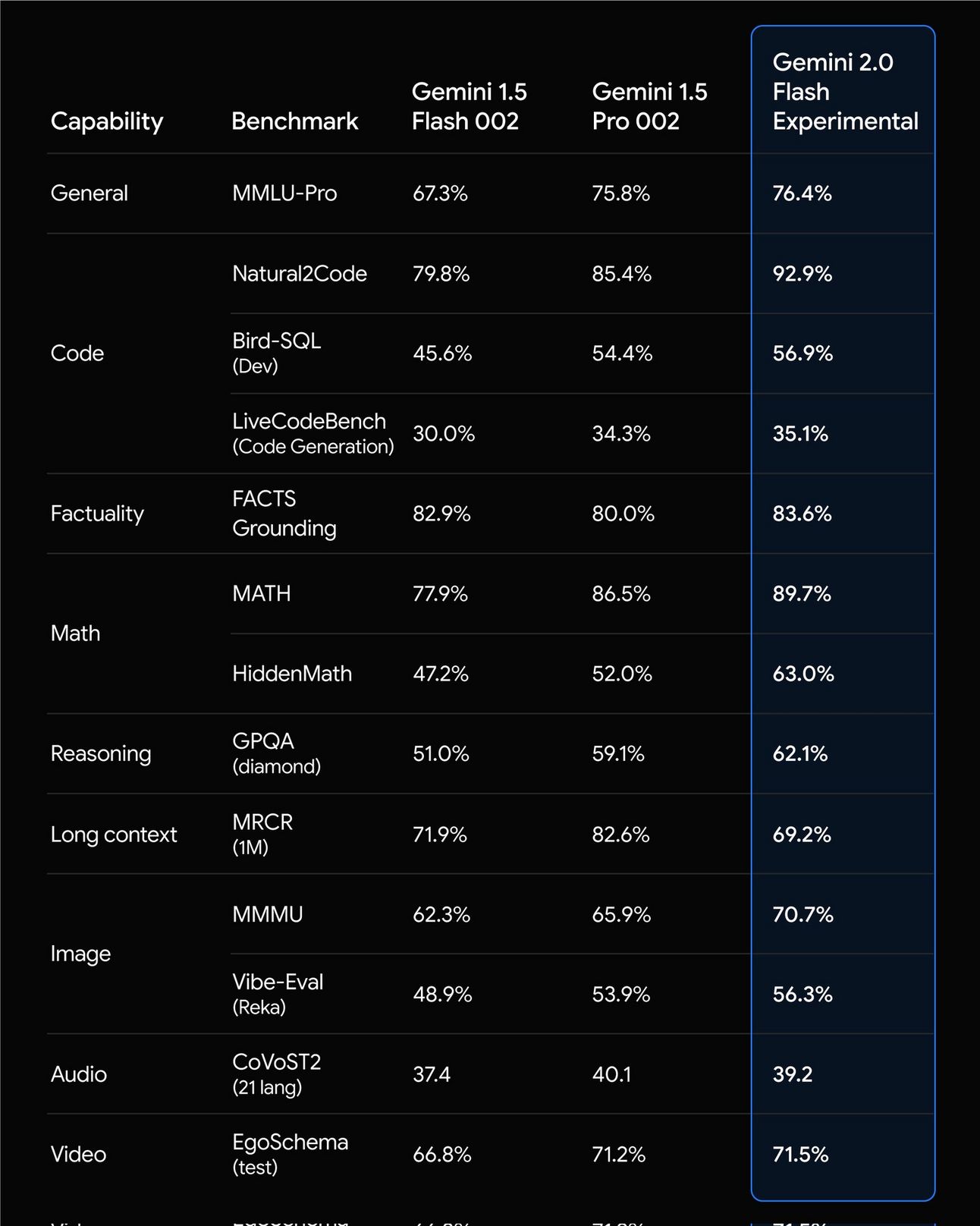

Unlike its predecessors, Gemini 1.0 and 1.5, which focused on multimodality, Gemini 2.0 introduces AI agents capable of performing complex tasks with minimal human intervention. As the first deployable model of the family, Google released Gemini 2.0 Flash, which, as shown above, can arguably be considered the best LLM in the world right now (excluding OpenAI’s o1 and o1 Pro).

Besides the model itself, Google introduced several fascinating research projects built on top of Gemini 2.0:

The “Deep Research” option allows AI to search the web and generate comprehensive reports, emulating human search behaviors.

They also announced Gemini 2.0 for Google’s AI Overviews in Search, enabling it to handle intricate multi-step queries and multimodal searches.

Project Astra, an advanced AI assistant capable of real-time, multi-language conversations and interpreting real-world surroundings through smartphone cameras.

Project Mariner, a browser-controlling AI tool that can navigate the web to perform tasks like online shopping.

Additionally, CEO Sundar Pichai announced the intention to progressively integrate Gemini 2.0 into products like Chrome, Maps, and YouTube in the coming year.

TheWhiteBox’s takeaway:

Google tends to exaggerate its releases, so these research projects must be seen as works in progress, not finished products; they are nowhere close to the vision Google claims.

However, Google’s decision to finally name the new model Gemini 2.0 signals considerable improvements over the status quo.

While you’ll allow me to remain very skeptical of Projects Astra and Mariner (going from demo to production will be a huge challenge), the Deep Research idea seems great. Letting the model search dozens or potentially hundreds of sources to assemble a detailed report appears extremely powerful, and I’m sure users will love it.

VIDEO GENERATION

OpenAI’s Sora issues start to shine through.

It’s been a few days since Sora launched, and users are testing the model and, importantly, comparing it to open-source alternatives like the Chinese Hunyuan Video, which we discussed last Sunday. And based on the results, the idea that OpenAI is very far ahead is, simply put, a rather tenuous one.

Through several comparisons, this X user shows how Hunyuan generates videos that are more semantically faithful to the user’s input, which doesn’t reflect well on a company that argues Sora is crucial to its AGI efforts.

TheWhiteBox’s takeaway:

OpenAI is competing with thousands of open-source researchers trying to catch up with them.

Importantly, as we saw on Sunday, the Chinese government is committed to its flourishing open-source ecosystem, allowing Chinese labs to compete with US and European models.

With this, Tencent’s Hunyuan Video is the latest evidence that 1. China is here, and 2. OpenAI’s alleged advantage is increasingly hard to justify.

ANTI-TRUST LAWS

Google Asks FTC to Kill OpenAI-Microsoft Partnership

Google has urged the US government to challenge Microsoft’s exclusive deal to host OpenAI’s technology on Azure, arguing it stifles competition by forcing customers to use Microsoft’s cloud infrastructure.

Competitors like Google and Amazon claim this arrangement increases business costs and limits access to OpenAI’s advanced AI models. Microsoft benefits significantly, taking a 20% cut of OpenAI’s revenue and earning money from reselling OpenAI’s models, which dominate the market thanks to their popularity with companies like Walmart and Snap, which are otherwise cloud customers of Google and AWS.

TheWhiteBox’s takeaway:

While I can’t speak to anti-trust laws, the fact that Google, which has Gemini 2.0, is complaining about OpenAI just proves how dominant the latter is.

While I consider OpenAI’s lead to be based on nothing more than being first (its models are on par with Google’s or Anthropic’s, and I firmly believe none of these three will be used extensively by enterprises when open-source is on the table), I don’t see how the agreement between Microsoft and OpenAI is unfair or worth breaking.

Microsoft took the massive bet and won, and it has the right to capitalize on it. A different scenario would be one in which OpenAI’s models became so dominant and superior that they were the sole option. In that scenario, forcing everyone to use your cloud would be too much and would go against healthy competitive practices.

But here’s the thing: the chances of that happening are very small, and the argument that customers lack equally good options is just laughable, considering the existence of Gemini 2.0 and Claude 3.5 models, not to mention open-source models like Llama 3.3 70B. It’s not that OpenAI’s tech is superior; it’s just that people haven’t yet figured out how this technology should be used (*cough* open-source *cough*).

CODING AGENTS

Devin is Finally Available

Devin, the coding agent that amazed the world back in March (with some claiming it meant the death of the software engineer), is finally available.

The price? $500/month.

It seems like a lot, considering it’s built on top of OpenAI’s models, but this is a price per engineering team, not per engineer. Devin excels at several coding tasks, such as fixing front-end bugs, refactoring code, and drafting pull requests.

To prove its value, Cognition Labs has shared various sessions in which Devin solves actual bugs in open-source projects like Anthropic’s MCP or LlamaIndex.

TheWhiteBox’s takeaway:

Devin was heralded as one of the most anticipated Generative AI releases of the year, so Cognition Labs has a lot to prove. However, this is not a product that replaces the human software engineer, but rather an allegedly helpful tool to make software engineers more productive.

That said, coding agents won’t easily prove their value. Some reports suggest that AI coding tools can actually be counterproductive, making human software engineers (SWEs) more careless and lazy, and thus more prone to pushing bugs into production.

HARDWARE

Apple Working on an AI Server Chip

According to The Information, Apple is working with Broadcom to create an AI server chip to meet the growing demand for AI services. After a very successful venture into edge-device chips like the M-series family, which offers unmatched performance and efficiency, Apple feels ready to build its first AI server chip.

TheWhiteBox’s takeaway:

This move makes a lot of sense. Apple is known for protecting its customers’ privacy, and it has even faced issues with the FBI for this.

In other words, Apple’s roadmap involves building data centers and chips to run AI workloads that can’t run on edge devices (Mac, iPhone, etc.) while guaranteeing an extremely high level of security.

This, added to their known dislike for NVIDIA (they’ve had several disputes in the past), has resulted in Apple being the only Big Tech company that isn’t a diehard customer of Jensen Huang’s GPUs. While that will make some investors nervous, I don’t see the issue; Apple’s AI strategy is unapologetically directed toward small AI models that don’t require powerful GPUs. In fact, Apple aspires to run most AI workloads “on-device.”

While this strategy looks bad right now (Apple Intelligence’s performance is inexcusably poor, which is why it is integrated with ChatGPT), Apple is playing the long game.

Luckily, Apple has a firm grip on the wealthiest cohort of users (roughly the top 30% by wealth). Thus, unlike OpenAI, Apple doesn’t need to create a new state-of-the-art model to achieve global distribution; it already has the distribution (the most challenging part of building any successful product) and just needs to develop AI products that are actually useful. And if it manages to verticalize the entire offering in-house, without costly dependencies on companies like NVIDIA, it’s a home run.

Moreover, Apple has rarely been afraid of arriving late to the party. In fact, it prides itself on doing so and winning in the end, as it did with the smartphone or the headset markets.

“Not first, but best,” as Tim Cook himself puts it.

SOCIAL

The Great X Experiment

Nous Research, one of the hottest open AI labs today, has announced an experiment: it has ‘taken over’ two huge X accounts with millions of followers, God (@god) and Satan (@s8n), which will now be autonomously controlled by an AI.

The goal is to study how society reacts to AI at mass scale, especially whether AIs can build a larger following than a human ‘influencer.’

TheWhiteBox’s takeaway:

While this news might have some AI doomers freaking out, it’s actually pretty interesting. That said, I’m quite skeptical: AI models are extremely obnoxious and pedantic, and their texts are about as captivating as watching paint dry.

Human interactions require a level of context, social expertise, wit, and other very emotion-driven faculties that AIs simply don’t have, so unless these AIs have been trained on massive datasets of viral tweets, I don’t see this panning out the way Nous Research hopes.

On the other hand, if these accounts gain traction, it may be time to get serious about watermarking AI content.

If I’m talking to an AI, I want to be aware that I’m doing so.

If I’m talking to a human, I want to be sure I’m doing so.

Otherwise, the experience of using the Internet will be massively worsened.

TREND OF THE WEEK

Uncovering OpenAI’s Frontier AI Strategy

Finally, we have the answer to what ‘o1 is.’ And let me tell you, I’m surprised.

While the world looks on in awe (and skepticism) at OpenAI’s bold move to launch a $200/month ChatGPT Pro subscription, insiders are connecting the dots to understand ‘what o1 really is’ and what makes o1 pro, the $200/month version, different from the one offered in the standard tier.

Today, we are detailing OpenAI’s model strategy (for current and future models), which should introduce us to the next era of AI… or not.

It’s Not What We Thought It Was.

When o1 was first announced in September, it introduced a “new” idea known as test-time compute. In layman’s terms, the model could “think” for longer on any given task.

When I say ‘think,’ I mean allocating more compute power to that task. Simply put, compared to other large language models (LLMs), o1 generated, on average, more tokens (words or subwords) to answer. As the model generates more tokens, it is akin to dedicating more ‘thought power’ to the task. This led to the idea of large reasoner models (LRMs).
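To put rough numbers on ‘more tokens means more compute,’ here is a back-of-the-envelope sketch. It assumes the common rule of thumb that a dense transformer spends about 2 FLOPs per parameter per generated token (ignoring attention over long contexts and caching effects); the model size and token counts are hypothetical, since OpenAI hasn’t disclosed o1’s.

```python
def inference_flops(num_params: float, tokens_generated: int) -> float:
    """Rough dense-transformer estimate: ~2 FLOPs per parameter per generated token.

    Ignores attention over the context, batching, and KV-caching effects.
    """
    return 2.0 * num_params * tokens_generated


# Hypothetical numbers for illustration only: the same model answering
# directly versus "thinking" through a long chain of thought.
PARAMS = 70e9            # assumed 70B-parameter model
short_answer = 200       # assumed tokens for a direct answer
long_reasoning = 5_000   # assumed tokens for a long chain of thought

ratio = inference_flops(PARAMS, long_reasoning) / inference_flops(PARAMS, short_answer)
print(f"{ratio:.0f}x more compute")  # 25x more compute
```

Under this approximation, compute scales linearly with the number of generated tokens, which is exactly why ‘thinking longer’ is another way of saying ‘spending more compute at inference time.’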

But why call them LRMs?

Some tasks benefit from thinking for longer, such as maths, coding, or any other task that requires System 2 thinking, where humans deliberately engage in solving the problem. These tasks are vaguely defined as ‘reasoning tasks’ in the AI industry, whereas tasks that rely more on intuition (System 1 thinking), like creative writing, don’t particularly benefit from this ‘think for longer’ paradigm, and a standard LLM does the job just as well.

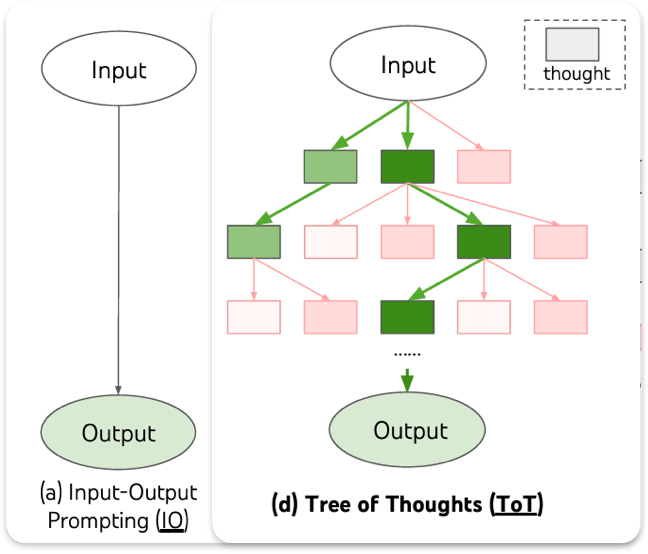

Moreover, as you may have guessed by the parallelism I’m drawing with a human’s different thinking modes, System 2 thinking is intrinsically related to search. For instance, just like you might explore different paths to solving a math problem, the consensus is that to improve the performance of AI models on these tasks, these need to search for possible answers, too.

The image below illustrates how an LLM and an LRM differ in their approach to solving a problem, where the boxes are ‘thoughts,’ and the green ones depict the successful “chain of thought” that led to the output.

Consequently, when o1 was released, we all assumed it was our first LLM+search model: upon receiving a task, it would use the LLM’s core knowledge to suggest possible solution paths and then use search algorithms to ‘explore the space of possible solutions’ until convergence.
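A toy contrast between the two approaches: a plain LLM commits to its single most likely ‘thought’ at each step, while an LLM+search system backtracks and explores alternatives until a verifier accepts an answer. The tree, its probabilities, and the `CORRECT` set (standing in for an oracle verifier) are all hypothetical; a real system would use a learned scorer, not a lookup table.

```python
# Hypothetical tree of "thoughts": each node maps to (child, model_probability) pairs.
TREE = {
    "problem": [("approach A", 0.6), ("approach B", 0.4)],
    "approach A": [("A: dead end", 0.7), ("A: wrong answer", 0.3)],
    "approach B": [("B: correct answer", 0.9), ("B: typo", 0.1)],
}
CORRECT = {"B: correct answer"}  # oracle "verifier" for this toy example


def greedy_chain(node="problem"):
    """LLM-style: follow the single most likely thought at every step."""
    path = [node]
    while node in TREE:
        node = max(TREE[node], key=lambda child: child[1])[0]
        path.append(node)
    return path


def search(node="problem", path=None):
    """LLM+search-style: depth-first exploration, most likely thoughts first,
    backtracking until a verified answer is found."""
    path = (path or []) + [node]
    if node in CORRECT:
        return path
    for child, _prob in sorted(TREE.get(node, []), key=lambda c: -c[1]):
        found = search(child, path)
        if found:
            return found
    return None


print(greedy_chain())  # ['problem', 'approach A', 'A: dead end']
print(search())        # ['problem', 'approach B', 'B: correct answer']
```

The greedy chain is locked into its first choice, whereas search pays for extra exploration to recover the green path in the image.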

But as it turns out, we were… half right.

Let me explain.

The True Nature of o1.

When we explained LRMs in detail two weeks ago, we thought we were explaining OpenAI’s o1, but we were actually explaining OpenAI’s Orion model. In other words, we were getting ahead of ourselves.

From Larger Models to Smaller Ones

While Chinese AI labs are building this LLM+search paradigm directly, with examples like Alibaba’s Qwen QwQ and Marco-o1, OpenAI’s o1 and o1 Pro are in-between solutions.

And to understand OpenAI’s approach, we need to explain Orion. As you may have guessed by now, Orion is OpenAI’s frontier model, which indeed follows this principle of ‘LLM + search.’

Specifically, they combine a more advanced version of GPT-4o with a Monte Carlo Tree Search algorithm, which we already explained in our deep dive into LRMs if you need more detail.
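Monte Carlo Tree Search itself is a well-documented algorithm. Below is a minimal UCT-style sketch on a hypothetical four-leaf ‘reasoning tree,’ where a reward of 1 marks the only correct final answer. It only illustrates the selection, expansion, simulation, and backpropagation loop; it says nothing about how Orion reportedly wires MCTS to an LLM, where children would be LLM-proposed steps and rewards would come from a learned verifier rather than the assumed `REWARD` table.

```python
import math
import random

# Hypothetical toy problem: reasoning steps form a tree; only one leaf is correct.
CHILDREN = {"root": ["A", "B"], "A": ["A1", "A2"], "B": ["B1", "B2"]}
REWARD = {"A1": 0.0, "A2": 0.0, "B1": 1.0, "B2": 0.0}  # assumed verifier scores


def mcts(root="root", iterations=200, c=1.4, seed=0):
    rng = random.Random(seed)
    visits = {root: 0}
    value = {root: 0.0}
    for _ in range(iterations):
        # 1. Selection: descend with UCB1 while every child has been visited.
        node, path = root, [root]
        while node in CHILDREN and all(ch in visits for ch in CHILDREN[node]):
            parent_n = visits[node]
            node = max(
                CHILDREN[node],
                key=lambda ch: value[ch] / visits[ch]
                + c * math.sqrt(math.log(parent_n) / visits[ch]),
            )
            path.append(node)
        # 2. Expansion: add one unvisited child (unless we reached a leaf).
        if node in CHILDREN:
            node = rng.choice([ch for ch in CHILDREN[node] if ch not in visits])
            visits[node], value[node] = 0, 0.0
            path.append(node)
        # 3. Simulation: random rollout from the new node down to a leaf.
        leaf = node
        while leaf in CHILDREN:
            leaf = rng.choice(CHILDREN[leaf])
        reward = REWARD[leaf]
        # 4. Backpropagation: update statistics along the visited path.
        for n in path:
            visits[n] += 1
            value[n] += reward
    # Final decision: the most-visited child of the root.
    return max(CHILDREN[root], key=lambda ch: visits[ch]), visits


best, visits = mcts()
print(best, visits)
```

The exploration term lets the search keep sampling the less-visited branch occasionally, while the value term concentrates visits on the branch that leads to the verified answer.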

However, deploying such a model to the hundreds of millions of ChatGPT users is currently impossible. Thus, OpenAI has followed the procedure we illustrated in last week’s ‘trend of the week’ about Amazon’s AI strategy, the distillation-to-production cycle, to use Orion to create o1 models.

As this is already confusing, let’s take a few steps back.

Training Orion.

As mentioned, OpenAI first had to create Orion (it’s unreleased, in case you’re wondering).

To do this, they assembled a huge reasoning-oriented dataset (approximately 10 million examples, according to insider information provided by SemiAnalysis), built by using their own models extensively and by hiring human experts at a cost of hundreds of millions of dollars. They then used it to fine-tune GPT-4o into a base version of Orion.

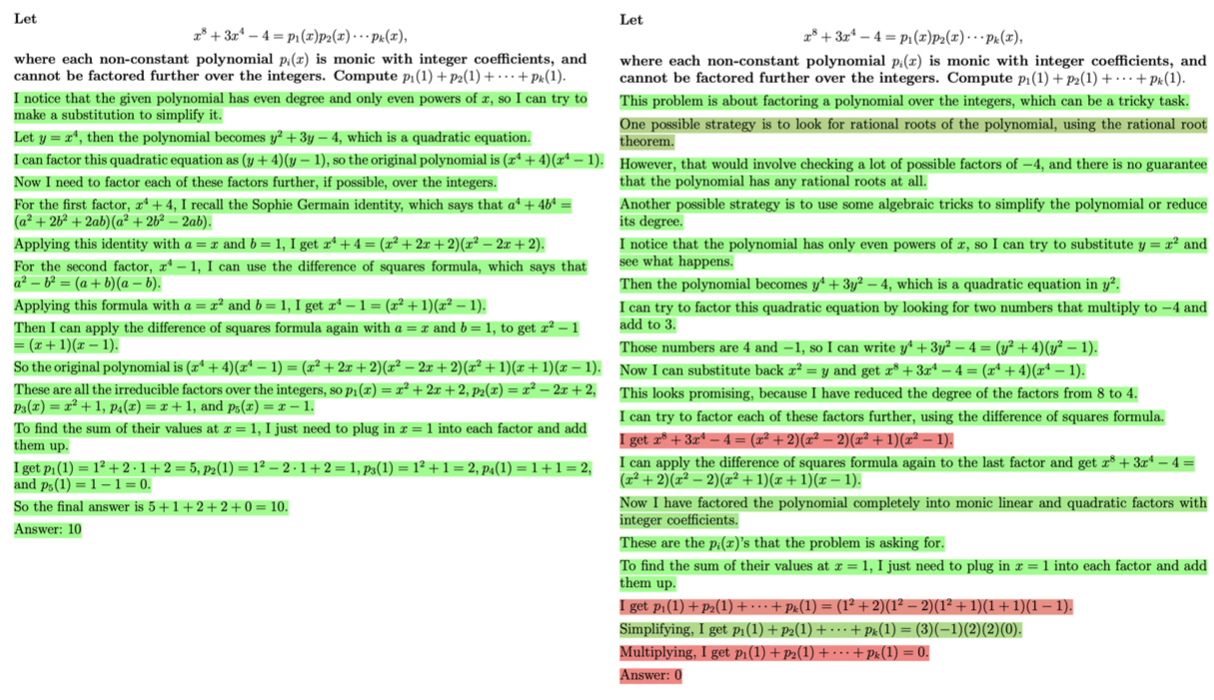

This new dataset introduced all the intermediate steps to achieve the desired outcome, allowing the model to learn to take a step-by-step approach to solving the problem, acting out the reasoning chain a human would.

Source: OpenAI

However, this is no ordinary fine-tuning of GPT-4o.

Think about it: the model might generate incorrect intermediate steps and still reach the correct final answer. Thus, if we only validate that final answer, the model may learn suboptimal thought patterns.

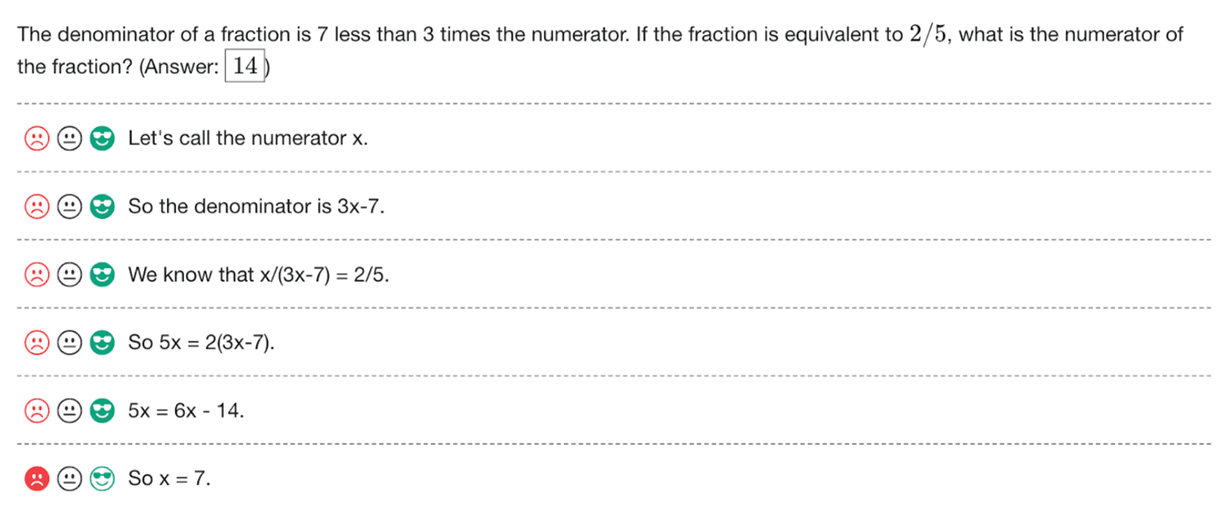

To prevent this, this model was trained using a Process-Supervised Reward model, or PRM, which they presented in their Let’s Verify Step-by-Step paper. With PRMs, the signal determining whether the model has performed correctly reviews every step of the process, not just the outcome.

Seeing the image below, the PRM notices that one of Orion’s intermediate steps is wrong, marking the entire prediction as wrong. That way, Orion learns to generate good outcomes and all the intermediate steps correctly, too.

Source: OpenAI
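A minimal sketch of the difference between an outcome-only reward and a process reward. The per-step scores are made up; the solution-level PRM score follows the scoring described in Let’s Verify Step by Step, where a solution’s score is the probability that every step is correct, i.e., the product of the per-step correctness probabilities.

```python
import math

# Hypothetical solution: per-step correctness probabilities from a PRM,
# plus whether the final answer matched the reference.
step_scores = [0.98, 0.95, 0.10, 0.97]  # step 3 is flawed...
final_answer_correct = True             # ...yet the final answer came out right


def outcome_reward(final_correct: bool) -> float:
    """Outcome-only signal: just checks the final answer."""
    return 1.0 if final_correct else 0.0


def process_reward(scores: list[float]) -> float:
    """PRM-style solution score: probability that *every* step is correct,
    computed as the product of the per-step probabilities."""
    return math.prod(scores)


print(outcome_reward(final_answer_correct))  # 1.0
print(round(process_reward(step_scores), 3))  # 0.09
```

The outcome-only signal happily rewards the flawed reasoning, while the single bad step drags the process score close to zero.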

After this training step, Orion was extended to perform search. Starting from the 10 million examples, the model generated multiple possible paths to solving each problem. This turned an already massive dataset into one with potentially billions of examples, which the human experts and the PRM pruned to keep only the good reasoning chains.
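The ‘sample many chains, keep only the good ones’ step can be sketched as simple filtering (a form of rejection sampling). Everything here is hypothetical: `sample_chains` is a stand-in that represents each chain only by random per-step PRM scores, and the 16 samples per problem, the 0.8 threshold, and the 100-samples-per-problem scale check are assumptions, not reported figures.

```python
import math
import random


def sample_chains(problem: str, n: int, rng: random.Random) -> list[list[float]]:
    """Hypothetical stand-in for the model: each sampled chain is represented
    only by its per-step PRM scores, drawn at random here."""
    return [[rng.uniform(0.5, 1.0) for _ in range(rng.randint(2, 5))]
            for _ in range(n)]


def prm_score(chain: list[float]) -> float:
    # Solution-level score: probability that every step is correct.
    return math.prod(chain)


def build_filtered_dataset(problems, n=16, threshold=0.8, seed=0):
    """Sample n chains per problem and keep only those the PRM rates highly."""
    rng = random.Random(seed)
    kept = []
    for problem in problems:
        for chain in sample_chains(problem, n, rng):
            if prm_score(chain) >= threshold:
                kept.append((problem, chain))
    return kept


dataset = build_filtered_dataset(["problem 1", "problem 2"])
print(len(dataset), "of", 2 * 16, "chains kept")

# Scale check: 10 million problems x an assumed 100 samples each.
print(f"{10_000_000 * 100:,} candidate chains before pruning")  # 1,000,000,000 candidate chains before pruning
```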

And here is where o1 and o1-pro come in.

Training o1 and o1 pro

Consequently, the LRM we described in our LRM newsletter was, as mentioned, Orion, and o1 models are distilled versions of Orion. Importantly, instead of searching, o1 performs one single reasoning chain, making the model much cheaper to run.

But what do we mean by distillation?

Saying o1 models are distilled versions of Orion means they were trained on Orion-generated data with a distribution-matching objective that minimizes the Kullback-Leibler (KL) divergence between the two models’ outputs. In layman’s terms, the o1 models (students) learn to mimic the outputs of the teacher model (Orion).
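For readers who want to see what ‘minimizing the KL divergence’ means mechanically, here is a minimal sketch of the generic knowledge-distillation objective on a single, hypothetical next-token distribution: the student’s logits are nudged until its distribution matches the teacher’s. This illustrates the technique in general, not OpenAI’s disclosed recipe; real distillation averages this loss over every token of many teacher-generated sequences.

```python
import math


def softmax(logits: list[float]) -> list[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p: list[float], q: list[float]) -> float:
    """KL(p || q) = sum_i p_i * log(p_i / q_i), in nats."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


# Hypothetical teacher ("Orion") distribution over a 4-token vocabulary.
teacher = softmax([2.0, 1.0, 0.1, -1.0])

# The student starts uniform and is trained by gradient descent on KL(teacher || student).
# For q = softmax(z), the gradient of KL(p || q) with respect to the logits z is (q - p).
student_logits = [0.0, 0.0, 0.0, 0.0]
initial_kl = kl_divergence(teacher, softmax(student_logits))
learning_rate = 1.0
for _ in range(2000):
    q = softmax(student_logits)
    student_logits = [z - learning_rate * (qi - pi)
                      for z, qi, pi in zip(student_logits, q, teacher)]
final_kl = kl_divergence(teacher, softmax(student_logits))

print(f"KL before: {initial_kl:.4f}, after: {final_kl:.6f}")
```

A KL of zero means the student reproduces the teacher’s distribution exactly, which is the sense in which o1 would ‘think’ like Orion without running Orion’s search.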

In other words, when you interact with o1, the model tries to answer in one single go, just like a standard LLM. The difference, however, is that the model's procedure to generate the answer, the chain of thought, is “exactly” how Orion ‘thinks.’

The point I’m trying to make is that, as o1 has been trained on reasoning-oriented data, it is a naturally better reasoner than models like GPT-4o. However, it does not perform search; if it happens to choose the wrong reasoning path, that’s all she wrote.

But if we agree that this is a very suboptimal representation of what an LRM should be, how on Earth is OpenAI asking for $200/month? The answer is that o1-pro is the next step toward Orion.

While o1 pro (the $200/month version) still doesn’t perform search (it does not run Monte Carlo Tree Search in real time), it does what is known as self-consistency (also known as majority voting).

o1 pro is better (though much more expensive to run) because, unlike o1, it generates multiple chains of thought, and the best one is chosen before answering. That means that, under the hood, a verifier (o1 pro itself or maybe Orion) chooses the best response out of the ‘n’ samples o1 pro has generated and returns that answer to you.
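The two selection rules mentioned here are not identical: self-consistency takes a majority vote over the final answers, whereas a verifier reranks the chains and returns the single highest-scoring one. Which of these o1 pro actually uses isn’t public; the sketch below uses made-up answers and verifier scores to show that the two rules can disagree.

```python
from collections import Counter

# Hypothetical final answers extracted from n = 5 sampled chains of thought,
# plus hypothetical verifier scores for each chain.
answers = ["42", "41", "42", "17", "42"]
verifier_scores = [0.70, 0.95, 0.60, 0.20, 0.65]


def self_consistency(answers):
    """Majority voting: return the most frequent final answer."""
    return Counter(answers).most_common(1)[0][0]


def best_of_n(answers, scores):
    """Verifier reranking: return the answer whose chain scored highest."""
    best_index = max(range(len(answers)), key=lambda i: scores[i])
    return answers[best_index]


print(self_consistency(answers))            # 42
print(best_of_n(answers, verifier_scores))  # 41
```

Majority voting needs no extra model but only works when final answers can be compared; a verifier can judge free-form answers but adds its own cost and its own errors.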

Naturally, this means that while an LLM might generate ‘x’ number of tokens to answer, o1 pro might easily generate 10 or 20 times more tokens per response on average, which, added to the costs of running the verifier too, catapults its costs and, in some way, “justifies” the insane price increase of the subscription.
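The cost argument is simple multiplication. Here is a sketch under the paragraph’s assumptions: n samples of roughly equal length, plus a hypothetical verifier overhead expressed as a fraction of one chain’s tokens per sample (OpenAI hasn’t published o1 pro’s actual token counts).

```python
def relative_token_cost(n_samples: int, verifier_overhead: float = 0.0) -> float:
    """Cost relative to generating a single chain of thought of the same length.

    verifier_overhead: assumed extra cost per sample for scoring it, as a
    fraction of one chain's tokens (0.0 means scoring is free).
    """
    return n_samples * (1.0 + verifier_overhead)


print(relative_token_cost(10))                          # 10.0
print(relative_token_cost(20, verifier_overhead=0.25))  # 25.0
```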

TheWhiteBox’s take

So, how can we summarise OpenAI’s strategy?

OpenAI is determined to offer LLM+search models like Orion one day, but it can’t now because the economics simply don’t add up (it is already massively unprofitable).

Thus, they have released intermediate versions of this vision to continue pushing toward better models while they figure out a better business case.

In summary, today, we’ve learned the following:

What o1 is. An LLM that has been trained on reasoning data generated by Orion but remains an LLM at its core. The key difference is that it generates a chain of thought that, importantly, allows it to refine and self-correct its responses. This considerably improves the likelihood that it is correct despite still being a “one-response” model, so it fits the definition of an LRM, at least superficially. Using the human thinking modes analogy, it’s still a System 1 thinker, albeit a better one.

o1 pro. On the other hand, o1 pro generates multiple chains of thought before answering, but it doesn’t actively search the solution space. It’s pure statistics: the more answers we sample, the higher the chances that one of them is correct. This isn’t quite System 2 thinking, as that requires search; it simply improves response coverage at a dramatically higher cost, hence the price increase.
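The ‘pure statistics’ point can be made precise under a simplifying assumption: if each sampled answer is independently correct with probability p, the chance that at least one of n samples is correct is 1 - (1 - p)^n. The p = 0.3 below is hypothetical, and this is coverage only; the selection rule still has to pick the correct sample out of the pile.

```python
def coverage(p_correct: float, n_samples: int) -> float:
    """Probability that at least one of n independent samples is correct."""
    return 1.0 - (1.0 - p_correct) ** n_samples


for n in (1, 4, 16):
    print(f"n={n:2d}: {coverage(0.3, n):.3f}")
```

Coverage climbs quickly with n while cost grows linearly, which is the trade-off behind the price tag.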

Finally, sometime in 2025, we will get access to Orion, OpenAI’s first true ‘LLM+search’ model, closing the loop into OpenAI’s vision of the next model frontier.

But the real question is: should you consider the upgrade?

Personally, no. I still rely on GPT-4o for most of my interactions with ChatGPT because it’s faster, and I mostly use LLMs for creative and thought-discussion purposes (processing data); I rarely, if ever, use AI to think.

But if you want to perform active coding, maths, and other similar tasks with AI, no model in the world will come close to o1 pro (although I still wouldn’t trust its responses, I’m afraid, and I’m still very skeptical o1 pro provides enough value to justify spending $2400 a year on it).

THEWHITEBOX

Premium

If you like this content, by joining Premium, you will receive four times as much content weekly without saturating your inbox. You will even be able to ask the questions you need answers to.

Until next time!