TECHNOLOGY

Forget LLMs. LRMs Are All The Rage Now

It was inevitable. The AI industry has shifted its focus to post-training techniques, and the ubiquitous term ‘LLM’ in everyone’s mouth is giving way to ‘LRM’ (Large Reasoner Model), which is now all the rage.

Even Microsoft’s CEO is now pumping his chest with the new law in town, the “inference-time compute” law, indirectly acknowledging the pre-training plateau we discussed last week and welcoming the ‘LRM era.’

Over the last few months, and especially the last two weeks, there has been an explosion of new releases from OpenAI, Alibaba, DeepSeek, Nous Research, MIT, Writer, Fireworks, and others. All offer the same ‘new thing’: they are all LRMs.

Today, we are deep-diving into these models and explaining the different ways they are being built, from inference-time scaling to inference-time retrieval updates, test-time training, and even GFlowNets, which seem to be the secret sauce OpenAI is fiercely trying to protect.

By the end of this article, you will understand:

The current limits of AI that incumbents and the media don’t want you to know about,

Why LLMs are no longer the name of the game,

2025’s Post-Training Framework: the three ways AI labs are creating these exciting new AI models,

The emergence of a new training paradigm, GFlowNets, that will force you to reframe your understanding of AI completely,

And finally, the perfect model to build in 2025 based on all the techniques described today, i.e., what o2 or Claude 4 might look like.

Let’s dive in.

LLMs Are No Longer ‘It’

As we saw last week, scaling laws are plateauing. Blindly increasing model and dataset sizes no longer yields the outsized improvements it once did.

The Bitter Lesson

It’s quite funny when you look back, but not long ago, it seemed like the ‘AGI problem’ was solved, and the only thing we had to do was to unlock more compute.

AI conversations were barely about algorithms and technology and all about building gigawatt data centers and buying as many GPUs as one possibly could. AI was less about science and more about religious belief. Life was simple.

But that’s no more.

Now, AI labs are desperate to find new ways to develop next-generation models. Most efforts involve taking the pre-trained LLM and applying a series of methods that improve its performance by changing its natural behavior.

The Importance of Fine-tuning

Developing a frontier AI model involves more than pressing the training button and releasing the model once it’s finished. After pre-training, the resulting model has compressed the required knowledge but doesn’t know how to elicit it.

Thus, it undergoes two more significant steps:

Supervised Fine-tuning (SFT): A phase where we train the model on a carefully curated dataset that teaches it to be a good conversationalist that answers the questions it is asked, aka ‘be useful.’ After this phase, we usually call the model {LLM_Name_Instruct}.

Preference Fine-tuning: A “final” phase where we train the model to remain useful while adhering to a set of human values expressed via human preferences. In other words, being useful is not enough; the model must also give answers that don’t break a set of guidelines. We teach it to make better decisions about what to answer and, crucially, when not to answer. After this phase, we say the model is ‘aligned’ and it can finally be released.
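To make the two phases more concrete, here is a minimal sketch of the losses they commonly optimize, computed from precomputed log-probabilities rather than a real model. The SFT loss is the average negative log-likelihood over the response tokens only (prompt tokens are masked out). For preference fine-tuning, the sketch uses Direct Preference Optimization (DPO), one popular method among several (RLHF with a separate reward model is another); the function names and the `beta=0.1` default are illustrative assumptions, not any particular lab's pipeline.

```python
import math
from typing import Sequence


def sft_loss(token_logprobs: Sequence[float], is_response: Sequence[bool]) -> float:
    """Average negative log-likelihood over the response tokens only.

    Prompt tokens are masked out, so the model learns to produce the curated
    answer rather than to reproduce the user's question.
    """
    picked = [lp for lp, resp in zip(token_logprobs, is_response) if resp]
    if not picked:
        raise ValueError("no response tokens to train on")
    return -sum(picked) / len(picked)


def dpo_loss(
    policy_chosen: float,
    policy_rejected: float,
    ref_chosen: float,
    ref_rejected: float,
    beta: float = 0.1,
) -> float:
    """DPO loss for one preference pair, given summed sequence log-probs.

    The policy is rewarded for raising the likelihood of the human-preferred
    ('chosen') answer relative to a frozen reference model, and for lowering
    that of the 'rejected' answer.
    """
    margin = beta * ((policy_chosen - ref_chosen) - (policy_rejected - ref_rejected))
    # -log(sigmoid(margin)), written in a numerically stable way
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))
```

When the policy and reference log-probs are identical, the margin is zero and the DPO loss equals log 2 (about 0.693); it drops below that once the policy favors the chosen answer more than the reference model does.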

This is the pipeline for creating an LLM. However, in 2025, the training pipeline will add new steps to transform an LLM into an LRM, all of which we are covering in detail today.

2025’s Post-Training Framework

Before we dive in, why is everyone panicking? Well, because the delusion is finally over.

The Blurry Limits Became Visible

AI’s limits have been known for decades, and the current frontier still fails to overcome the same hurdles as its predecessors, despite what you might have been told.

But what are these limits?

They can’t learn while they ‘think.’ Imagine you went to school for the first 20 years of your life and, afterward, had to perform every action and thought based on those learnings but couldn’t learn anything new, even if the world changed. That’s AI’s reality today.

They only know what they know. Or, conversely, they don’t know what they don’t know, aka they only work well if they have seen the data or task before, and many times. In AI parlance, their generalization capabilities are limited to data that falls ‘in distribution’: as long as the data is somewhat similar to their knowledge, there’s a chance. Otherwise, they are doomed to fail.

These two limits are apparently unbreakable chains tied to AI’s heels that prevent it from unleashing its real power. So, what do all these revolutionary techniques bring to the table to finally change this?

A Fundamental Compute Shift

If there’s one thing all the techniques we will see today have in common, it’s that they shift compute from training to inference, i.e., they all increase the compute allocated to inference (when the model makes predictions).

This transition differentiates an LRM from the previous generation of LLMs.

In layman’s terms, they all increase the compute the model uses to solve a problem, i.e., the model has “more time to think.” In AI terminology, this new paradigm is called “inference-time compute,” as Satya referred to it in the first link in this piece.
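A back-of-the-envelope calculation shows why “more time to think” translates directly into more inference compute. The sketch below uses the common rule of thumb that a dense transformer's forward pass costs roughly 2 FLOPs per parameter per generated token (ignoring attention over the context and other overheads); the 70B-parameter model and the token counts are hypothetical numbers chosen for illustration.

```python
def inference_flops(num_params: float, tokens_generated: int) -> float:
    """Approximate forward-pass FLOPs needed to generate `tokens_generated` tokens.

    Rule of thumb: ~2 FLOPs per parameter per token for a dense transformer;
    attention cost over long contexts and other overheads are ignored.
    """
    return 2 * num_params * tokens_generated


# Hypothetical 70B-parameter model: a direct answer vs. a long reasoning trace.
direct_answer = inference_flops(70e9, 500)
reasoning_trace = inference_flops(70e9, 10_000)

print(f"direct answer:   {direct_answer:.1e} FLOPs")
print(f"reasoning trace: {reasoning_trace:.1e} FLOPs")
print(f"ratio: {reasoning_trace / direct_answer:.0f}x")
```

Under this approximation, compute grows linearly with the number of generated tokens, so a model that “thinks” 20 times longer costs roughly 20 times more per query, before counting the extra attention cost of the longer context.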

But while all of them imply more compute during inference, the following methods use this compute in at least one of three ways:

Update the model’s memory: We use the new data to update the memory pool the model has access to so that it remembers the input the next time it sees it.

Perform search: The model is given time to explore different solutions to the problem and provide the best one.

Actively learn: The LRM updates its parameters (what it intrinsically knows) to learn this new skill, fact, or task.
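Of the three, search is the easiest to sketch. Below is a minimal best-of-N loop, one of the simplest forms of inference-time search: sample N candidate answers, score each with a verifier, and keep the best. The “model” is a hypothetical stand-in that guesses integers at random, and the verifier scores how close a guess comes to solving x^2 - 5x + 6 = 0; a real LRM would sample full reasoning chains and might use a learned reward model or a more structured search.

```python
import random


def sample_candidate(rng: random.Random) -> int:
    """Hypothetical stand-in for an LLM sampling one candidate answer."""
    return rng.randint(-10, 10)


def verifier_score(x: int) -> float:
    """Stand-in verifier: 0 means x solves x^2 - 5x + 6 = 0 exactly."""
    return -abs(x * x - 5 * x + 6)


def best_of_n(n: int, seed: int = 0) -> int:
    """Spend more inference compute (larger n) to explore more solutions."""
    rng = random.Random(seed)
    candidates = [sample_candidate(rng) for _ in range(n)]
    return max(candidates, key=verifier_score)


for n in (1, 4, 64):
    answer = best_of_n(n)
    print(f"n={n:>2}: answer={answer}, score={verifier_score(answer)}")
```

Because every call reuses the same seed, a larger budget sees a superset of a smaller budget's samples, so the best score can only stay the same or improve as n grows: more inference compute in exchange for better answers.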

These three approaches are orthogonal, so they can be combined (more on that at the end of this piece). In the meantime, let’s review each one in detail.

Updating Model Memory

In this case, the idea is pretty straightforward. If there’s one thing LLMs have proven, it’s that they are great at retrieving knowledge from their experience (and that’s pretty much it). Therefore, the idea here is to make this memory adaptable: upon seeing a new input the model does not know, it internalizes that content for future retrieval.

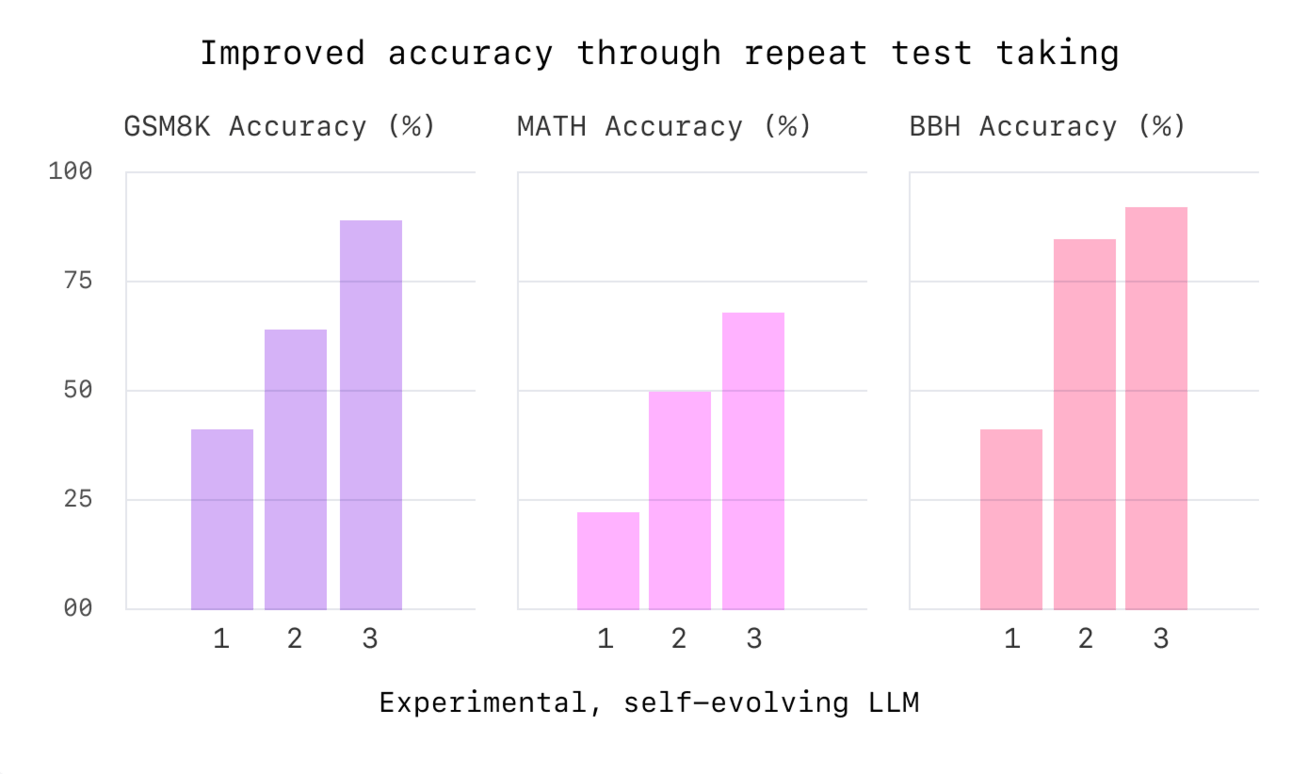

For instance, we have Writer’s proposal, which allows real-time updates of the model’s knowledge. As the figure shows, the model’s performance improves on subsequent retries of every benchmark, as it has previously memorized how to solve the task.
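The general idea (not Writer's actual implementation, which isn't detailed here) can be sketched as a frozen model wrapped in an updatable memory pool: on a miss, the system falls back to the model; once it receives the correct solution, it stores it so the next retry is answered from memory. `MemoryAugmentedModel`, the toy `base_model`, and the exact-match lookup are all hypothetical; a production system would more likely retrieve by embedding similarity.

```python
from typing import Callable, Dict, Optional


class MemoryAugmentedModel:
    """Hypothetical wrapper: a frozen model plus a memory pool updated at inference time."""

    def __init__(self, base_model: Callable[[str], Optional[str]]) -> None:
        self.base_model = base_model
        self.memory: Dict[str, str] = {}

    @staticmethod
    def _key(task: str) -> str:
        # Normalize case and whitespace so trivially rephrased retries still match.
        return " ".join(task.lower().split())

    def answer(self, task: str) -> Optional[str]:
        key = self._key(task)
        if key in self.memory:          # memorized on an earlier attempt
            return self.memory[key]
        return self.base_model(task)    # fall back to frozen parametric knowledge

    def update_memory(self, task: str, solution: str) -> None:
        # Internalize the new content for future retrieval; weights stay untouched.
        self.memory[self._key(task)] = solution


def base_model(task: str) -> Optional[str]:
    """Toy frozen model that only knows one fact."""
    return "Paris" if "france" in task.lower() else None


model = MemoryAugmentedModel(base_model)
task = "What is the capital of Australia?"
print(model.answer(task))                                   # first attempt
model.update_memory(task, "Canberra")                       # feedback arrives
print(model.answer("what is the capital of  AUSTRALIA?"))   # retry
```

Nothing in this loop reasons: the retry succeeds purely because the answer was stored, which is exactly the limitation raised about this approach.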

This is a very down-to-earth approach to improving LLMs' reasoning capabilities. If we know they excel at memorization, we allow them to update their memory. It is simple yet effective.

On the other hand, it doesn’t look like real reasoning, as memorization is not reasoning; we are just expanding the set of tasks on which the model can mimic reasoning through memorization.

However, our next “context enhancement” technique is another story.

Graph-Based Reasoning for Richer Context

I won’t go into as much detail as we did on Thursday, but this new method (the most recent of all the ones we are reviewing today) also increases the reasoning capabilities of LLMs (so they become LRMs).

In this case, we expose the model to data (via some sort of memory pool like before), but this data is exposed in graph form.

Presenting content to an LLM in graph form is already a known, effective way to induce better reasoning, as graphs abstract the key knowledge from the text. On top of that, MIT researcher Markus Buehler introduces the use of isomorphisms: common structures across seemingly unrelated topics. They allow the LLM to reason over data that appears new to the model but shares an underlying structure with other epistemic sources the model already knows.
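To make “isomorphism” concrete, here is a toy check (not Buehler's method, which isn't detailed here): two small knowledge graphs stored as (head, relation, tail) triples are isomorphic if some one-to-one renaming of nodes turns one graph's edges into exactly the other's. The solar-system/atom pair is the classic textbook analogy, used purely as an illustration, and the brute-force search over permutations only scales to tiny graphs.

```python
from itertools import permutations
from typing import Dict, List, Optional, Tuple

Triple = Tuple[str, str, str]  # (head, relation, tail)


def nodes(graph: List[Triple]) -> List[str]:
    """Nodes in order of first appearance."""
    seen: List[str] = []
    for head, _, tail in graph:
        for node in (head, tail):
            if node not in seen:
                seen.append(node)
    return seen


def find_isomorphism(g1: List[Triple], g2: List[Triple]) -> Optional[Dict[str, str]]:
    """Return a relation-preserving node mapping g1 -> g2, or None if none exists."""
    n1, n2 = nodes(g1), nodes(g2)
    if len(n1) != len(n2):
        return None
    target = set(g2)
    for perm in permutations(n2):  # brute force: fine for toy graphs only
        mapping = dict(zip(n1, perm))
        if {(mapping[h], r, mapping[t]) for h, r, t in g1} == target:
            return mapping
    return None


solar_system = [
    ("sun", "attracts", "planet"),
    ("planet", "orbits", "sun"),
    ("sun", "more_massive_than", "planet"),
]
atom = [
    ("nucleus", "attracts", "electron"),
    ("electron", "orbits", "nucleus"),
    ("nucleus", "more_massive_than", "electron"),
]

print(find_isomorphism(solar_system, atom))
# {'sun': 'nucleus', 'planet': 'electron'}
```

Once such a mapping exists, whatever is known about one domain (planets) can be carried over to the other (electrons), which is the intuition behind using shared structure to generalize.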

As Murray Gell-Mann once said,

“The complicated behavior of the world is merely surface complexity arising out of deep simplicity.”

In short, the world is ruled by simple laws that are common across most things. Therefore, picking up these common structures across data (isomorphisms) makes unknown data approachable to AI and appears crucial to helping models generalize.

However, graph-based reasoning is still just a way to enrich the content provided to the AI to make it more ‘reasonable.’ Moving on, there are more advanced techniques, the ones frontier AI labs are actually studying heavily, that take us a step further.

Verifiable Rewards and TTT

Next, we move on to Tülu 3, another very recent release by the Allen Institute for AI, this time fully open-source.

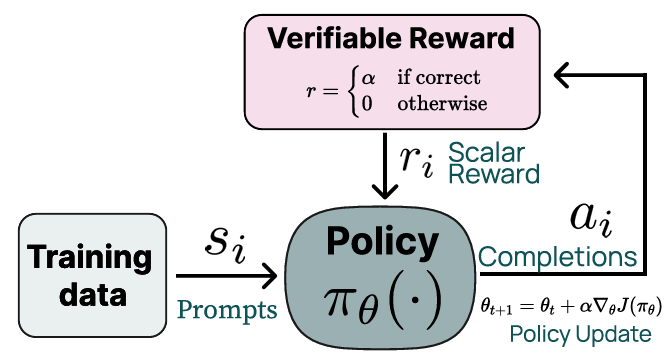

While the paper extensively details various techniques to improve the SFT and preference-tuning stages we saw earlier, the post-training technique we are discussing today is Reinforcement Learning from Verifiable Rewards (RLVR).

The idea is straightforward. In areas where we can automatically verify whether a response is correct, we connect an automatic verifier to the LLM and use its verdict as the reward signal to train the model with reinforcement learning. This greatly improves its math, coding, and other verifiable capabilities, turning it into an LRM (a better reasoner).
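The loop can be sketched with a toy example. A rule-based verifier returns 1 when the answer matches the ground truth and 0 otherwise, and that reward drives a REINFORCE-style policy-gradient update on a tiny “policy” that is just a softmax over three candidate answers. This illustrates the principle only; Tülu 3 applies RLVR to a full LLM, and the candidate answers, learning rate, and moving-average baseline here are illustrative assumptions.

```python
import math
import random
from typing import List, Sequence


def verifier(answer: str, ground_truth: str) -> float:
    """Verifiable reward: 1.0 if the final answer is exactly right, else 0.0."""
    return 1.0 if answer.strip() == ground_truth.strip() else 0.0


def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def train_rlvr(candidates: List[str], ground_truth: str,
               steps: int = 500, lr: float = 0.5, seed: int = 0) -> List[float]:
    """REINFORCE on a toy policy (a softmax over candidate answers)."""
    rng = random.Random(seed)
    logits = [0.0] * len(candidates)
    baseline = 0.0  # running average reward, reduces gradient variance
    for _ in range(steps):
        probs = softmax(logits)
        i = rng.choices(range(len(candidates)), weights=probs)[0]  # sample an answer
        reward = verifier(candidates[i], ground_truth)             # automatic check
        advantage = reward - baseline
        baseline = 0.9 * baseline + 0.1 * reward
        for j in range(len(logits)):
            # gradient of log softmax(logits)[i] with respect to logits[j]
            grad_log_prob = (1.0 if j == i else 0.0) - probs[j]
            logits[j] += lr * advantage * grad_log_prob
    return softmax(logits)


# "What is 17 * 3?" The policy starts out considering three answers equally likely.
final_probs = train_rlvr(["51", "54", "48"], ground_truth=str(17 * 3))
print([round(p, 3) for p in final_probs])
```

No human labels the answers during training: the verifier alone supplies the reward, and probability mass shifts toward the verifiably correct answer.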

Through this method, AIs improve over time as they receive a clear, concise, and accurate reward indicating their performance.

But while RLVR does improve performance, it doesn’t unlock the capacity to actively learn the way Test-Time Training (TTT) does. I covered TTT in detail last week, so I won’t repeat myself; in short, many labs are studying how to allow models to learn during inference, a capability known as active learning.

As our environment is always changing, it is crucial to have models that can learn to adapt to that change. If they can’t, they are stuck in the past and cannot adapt to the variations that will inevitably occur in their surroundings (be that in the digital world or physically through AI robots).

However, performing this active training is tremendously complex because capturing the learning signals (a way to give the model feedback so that it can improve) is hard (as we just mentioned) and very expensive. Performing learning updates on models so large that they fill entire GPU servers is prohibitive.
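A quick estimate shows why weight updates at inference time are so expensive. A widely cited rule of thumb for full fine-tuning with mixed-precision Adam is about 16 bytes per parameter (fp16 weights and gradients, plus fp32 master weights and two fp32 optimizer moments), before counting activations; the 70B-parameter model and the 8 × 80 GB server below are hypothetical reference points.

```python
def full_finetune_memory_gb(num_params: float) -> float:
    """Approximate training-state memory for full fine-tuning with mixed-precision Adam."""
    bytes_per_param = (
        2    # fp16 weights
        + 2  # fp16 gradients
        + 4  # fp32 master copy of the weights
        + 4  # fp32 Adam first moment
        + 4  # fp32 Adam second moment
    )  # = 16 bytes; activations are excluded
    return num_params * bytes_per_param / 1e9


def inference_memory_gb(num_params: float) -> float:
    """fp16 weights only (ignores the KV cache)."""
    return num_params * 2 / 1e9


params = 70e9        # hypothetical 70B-parameter model
server_gb = 8 * 80   # hypothetical server: 8 GPUs with 80 GB each

print(f"inference weights: {inference_memory_gb(params):.0f} GB")
print(f"full fine-tuning:  {full_finetune_memory_gb(params):.0f} GB "
      f"({full_finetune_memory_gb(params) / server_gb:.2f} servers)")
```

Under these assumptions, a single update needs roughly eight times the memory of simply serving the model, more than one whole server, which is why cheaper schemes for test-time learning matter.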

Thus, several techniques for cost-effectively addressing these issues have emerged, with MIT’s approach, explained in the previous link, stealing most of the spotlight.

In short, the LRM generates a small dataset of variations of the task at test time, trains itself on them, and then makes the prediction, much like a basketball player who takes a few practice shots to fix their technique before attempting the real one.

But while all these techniques are interesting and somewhat novel, none compare to the promise and excitement surrounding a technique that, despite being three years old, is only now gaining real traction.

And, as always, it’s OpenAI leading the way.

Considered a new learning algorithm, GFlowNets might hold the secret to genuine AI reasoning through an extremely innovative sampling method that has some of the most brilliant minds of our time very excited.

In fact, these are the words of legendary AI researcher and Turing Award recipient (the Nobel Prize of computer science) Yoshua Bengio about it:

“I have rarely been as enthusiastic about a new research direction.”

Notably, GFlowNets force us to rethink what and how we teach AIs. Thus, brace yourself because we are about to rewrite your entire understanding of AI; I guarantee you will never see it the same way again.

Along the way, you will learn what OpenAI’s biggest secret is.
