THEWHITEBOX

TL;DR

This week, we discuss:

the sad reality of Google’s AI efforts {😋 Google Releases New Gemini 2.0 Models},

OpenAI’s latest potential moonshot {😟 OpenAI, The New Robotics and Consumer Hardware Company?},

how Apple continues to delve into AI research in very unorthodox ways {🤨 Apple’s Emotional Robots},

proof of AI’s deflationary powers {🧑🏽🏫 CodeSignal’s Leadership Teaching Tool},

and a deep-dive on research by Anthropic that claims to have created the first unhackable AI model {TREND OF THE WEEK: Anthropic Presents the Unbreakable Model}

NEWSREEL

Google Releases Gemini 2.0 Pro

In a short blog post, Google announced the new releases of the Gemini 2.0 family of AI models, which aim to enhance performance, efficiency, and accessibility.

The updated Gemini 2.0 Flash model is now generally available through the Gemini API in Google AI Studio and Vertex AI. According to Google's own claims, this model offers developers improved performance for high-volume, high-frequency tasks.

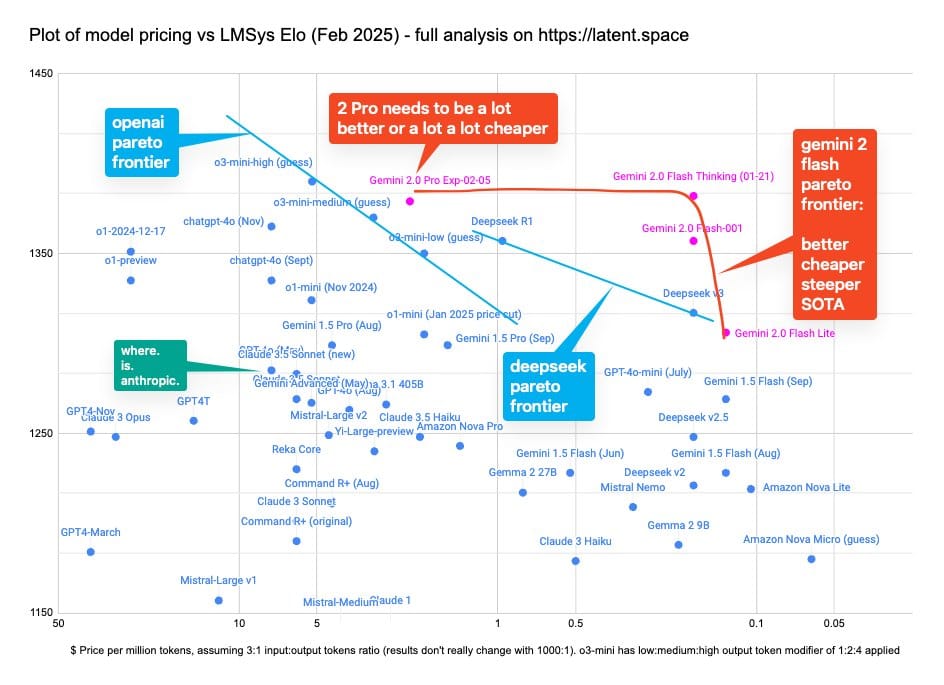

Additionally, Google has released an experimental version of Gemini 2.0 Pro, designed to excel in coding tasks and handle complex prompts with a context window of 2 million tokens (i.e., you can send the model up to approximately 1.5 million words in every prompt). And although Google’s models are already the most cost-efficient (even more so than DeepSeek’s), the company is going the extra mile here, introducing Gemini 2.0 Flash-Lite, its most cost-effective model to date.

Gemini 2.0 Pro is already in the top two of the famous LMarena benchmark, making it the best non-reasoning LLM there. It is only behind Google’s own thinking (reasoning) model, Gemini 2.0 Exp Flash Thinking.

TheWhiteBox’s takeaway:

Google rules on paper, so why not in practice?

I’m shocked to see how little fuss Google’s releases make despite their models actively crushing it on benchmarks. Crucially, Google’s offering is extremely ‘Pareto optimized’: most of the frontier AI models on the Pareto frontier (those offering the best performance for their price) are Google’s, even ahead of the soul-crushing release of DeepSeek’s models (check the image above).
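To make the ‘Pareto’ idea concrete, here is a minimal sketch of how you would pick the Pareto-optimal models out of a list. The model names, prices, and scores below are made up for illustration and are not the figures from the chart; a model is on the frontier if no other model is at least as cheap and at least as good, and strictly better on one of the two.

```python
from typing import NamedTuple


class Model(NamedTuple):
    name: str
    price: float  # hypothetical cost, USD per million tokens (lower is better)
    score: float  # hypothetical benchmark score (higher is better)


def pareto_frontier(models: list[Model]) -> list[Model]:
    """Return the models that no other model dominates, sorted by price."""
    frontier = []
    for m in models:
        dominated = any(
            o.price <= m.price
            and o.score >= m.score
            and (o.price < m.price or o.score > m.score)
            for o in models
        )
        if not dominated:
            frontier.append(m)
    return sorted(frontier, key=lambda m: m.price)


# Illustrative numbers only, not real pricing or benchmark data.
models = [
    Model("model-a", 0.10, 70.0),
    Model("model-b", 0.50, 80.0),
    Model("model-c", 0.60, 75.0),  # pricier and worse than model-b
    Model("model-d", 5.00, 90.0),
    Model("model-e", 6.00, 85.0),  # pricier and worse than model-d
]

print([m.name for m in pareto_frontier(models)])
# ['model-a', 'model-b', 'model-d']
```

Anything off the frontier (here, model-c and model-e) is a bad deal by definition: you can get more performance for the same money or less.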

Yes, DeepSeek doesn’t offer the best value for your money in AI, which just goes to prove how stupid NVIDIA’s crash was.

But why do the markets and the AI space ignore Google?

I like to say that Google is the AMD of AI software. They have great AI models, which is the hardest part, but extremely shitty developer experiences; no one wants to get near Google’s products (especially Vertex AI) because their developer platforms are borderline unusable.

The analogy comes from the fact that AMD has top hardware but a terrible developer stack and drivers when compared to NVIDIA’s CUDA platform.

I’ll say it as many times as I need: Google’s biggest rival is itself. They have the necessary cash, a top-tier AI lab, distribution, more compute than anyone else, and they are sitting on top of the biggest source of video data in the world: YouTube.

They literally have everything it takes to win.

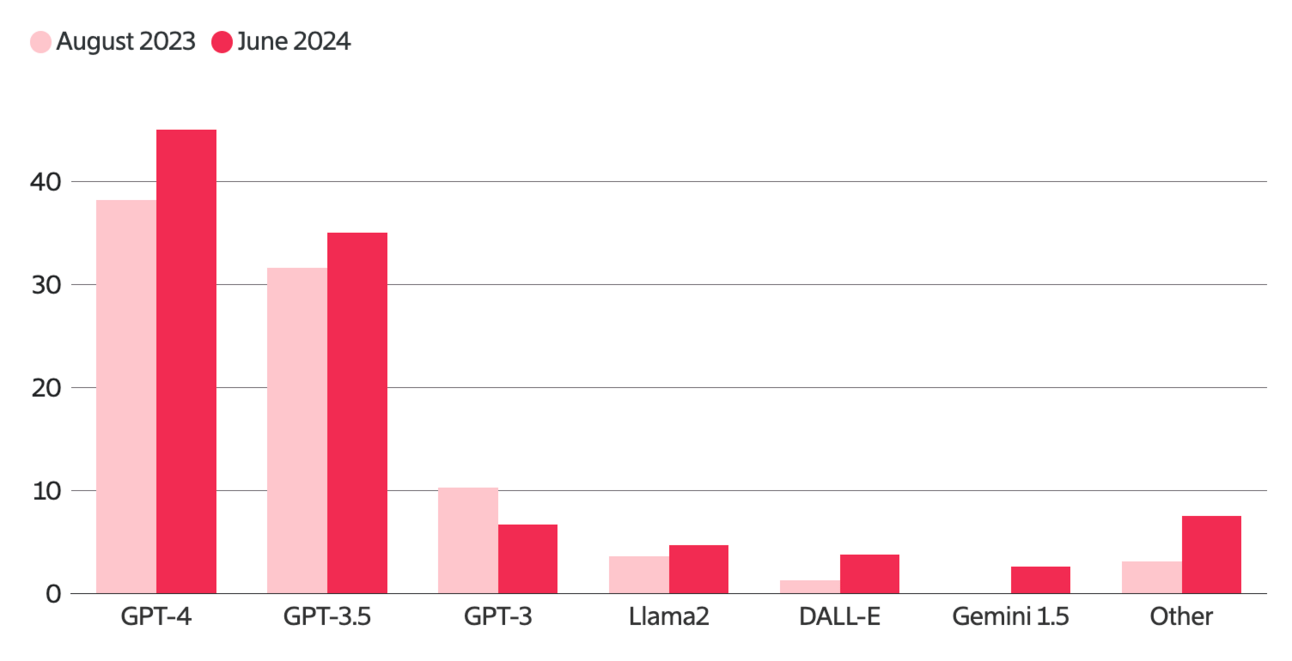

And despite all that, and that their models are really good, they are still struggling to penetrate the AI markets meaningfully:

Source: Retool

Google, you’re doing the hard part great; now fix the easy part.

OPENAI

OpenAI, The New Robotics and Consumer Hardware Company?

OpenAI has filed a trademark application that hints it might be working on consumer hardware and robotics products.

The application covers products such as humanoid robots, smart jewelry, and other hardware devices. This move indicates OpenAI’s potential interest in integrating its AI technologies into physical products, possibly aiming to enhance human-computer interactions through advanced robotics and wearable technology.

TheWhiteBox’s takeaway:

As we confirmed via DeepSeek, there is no technological moat. Therefore, if OpenAI wins, it won’t be because of the superiority of its AI but because it leverages the fact that it produced the AI and verticalized it into products.

OpenAI should:

Continue building infrastructure capacity, as compute will be a crucial success factor.

Continue transitioning ChatGPT into an AI platform where other software is built. As we covered previously in this newsletter, the LLM OS appears to be a straight arrow toward a $100 billion business and a trillion-dollar valuation. In this regard, they should open-source their models and let developers make them the default backend of all technology. If not, I would bet Meta or Chinese Labs will take their lunch money eventually.

Alternatively, stay closed and transition into a hardware company that creates consumer hardware where the AI actually works (*cough* Apple *cough*).

And, of course, there’s the robotics moonshot, but if they require billions to build the software, I can’t fathom how much money they will need to become a robotics company.

From what we can see, they appear to be focusing on points one, three, and four.

ROBOTICS

Apple’s Emotional Robots

Apple has released fascinating robotics research in which it tries to encode human gestures and emotions into robots. In other words, it is training robots to react to events or actions by generating gestures that share information in addition to plain words.

For instance, seeing someone crying → hug them.

The research linked above includes an illustration of the process, but the idea is to use LLMs to process images or text, decide the best emotional reaction, and then translate the LLM’s response into robot motion trajectories.

TheWhiteBox’s takeaway:

You may wonder, "Okay, why on Earth is Apple doing robotics research?" Interestingly, they used the Apple Vision Pro to collect user demonstrations. I don’t think it’s the dunk they believe it is, but it’s a fun addition.

The training process is simple. They collected a dataset of actions/events and the desired motion responses (e.g., giving a thumbs-up when someone does a great job at something). Then, they trained an LLM to decide which gesture the robot should make and to map that decision into instructions for the robot’s body to move.

This may seem very complex, but it’s actually all AI does every time: mapping a set of inputs to an output. While ChatGPT maps words in a sequence into the next word, most AI robots today map visual and textual input into robotic actions.

You may wonder, "Okay, I see how I can map a few words into the next one, but how do I map words or images into actions?" And that’s the beauty of embeddings.

Today, every data type an AI model processes is converted into a vector of numbers called an embedding (a process called encoding). The numbers in the vector represent the attributes of the data input, similar to how sports video games encode a player into a numerical set of attributes (speed, dribbling, shooting, etc.).

That’s why it’s called a representation. We are representing a real-world concept as a set of numbers, and the key is to acknowledge that, as long as the semantic meaning is the same, the vector will be nearly the same regardless of the data type used (video, image, text, etc.).

In other words, to an AI, however much text and images might differ in their initial form, they still represent the same thing semantically speaking, just like they are the same thing to us humans.

Hence, if the model is multimodal, the ‘vector of numbers’ associated with a text describing a parrot should be almost identical to that of an image of a parrot. In the same way, for robots running multimodal models, generating a text saying “I should give her a thumbs-up” and actually performing the action are the same thing.
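If you want to see what ‘almost identical vectors’ means in practice, here is a toy sketch. The four-number embeddings below are invented by hand (real models produce vectors with hundreds or thousands of dimensions), and cosine similarity is just one common way to compare them; the point is only that two inputs with the same meaning should score close to 1, while unrelated ones score much lower.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hand-made toy embeddings (assumed values, not produced by any real model).
text_parrot = [0.90, 0.80, 0.10, 0.00]   # the sentence "a green parrot"
image_parrot = [0.85, 0.75, 0.15, 0.05]  # a photo of a parrot
text_truck = [0.00, 0.10, 0.90, 0.80]    # the sentence "a delivery truck"

same_meaning = cosine_similarity(text_parrot, image_parrot)
different_meaning = cosine_similarity(text_parrot, text_truck)

print(f"parrot text vs. parrot image: {same_meaning:.2f}")
print(f"parrot text vs. truck text:   {different_meaning:.2f}")
```

In a multimodal model, the text encoder and the image encoder are trained so that this is what happens: different data types, same region of the vector space.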

All that said, I don’t think robots will ever be capable of evoking the same emotions humans can. They don’t feel emotions; they are just pattern matching (I see a sad human, I comfort him/her with a hug). Sure, it seems like they are feeling that emotion, but they don’t feel it, in the same way parrots don’t mean the things they say.

EDUCATION

CodeSignal’s Leadership Teaching Tool

CodeSignal, a company known for its technical assessment quizzes used to hire new technical staff, is pivoting into the executive courses business.

This tool employs voice-enabled AI to mimic workplace scenarios. It enables users to practice challenging discussions, such as delivering feedback or resolving conflicts. An AI mentor named Cosmo provides real-time coaching.

Traditional leadership training can be prohibitively expensive, often costing between $20,000 and $40,000 per person for a one-month program. Thus, it is inaccessible to many below the executive level. CodeSignal’s solution aims to facilitate access to such training by offering its platform at $25 per month for individuals and $39 per user per month for enterprise licenses.

The platform has seen rapid growth, attracting one million users in less than a year since its launch, with the user base and usage doubling every two months. Early feedback indicates that the AI-powered approach may have advantages over traditional role-playing with human actors.

TheWhiteBox’s takeaway:

I can’t say whether these tools are worthwhile in today’s world compared to costly leadership courses. Leadership programs could still be very much worth your money. However, this example helps me illustrate AI’s impact as a technology that will cause massive deflationary pressures on the prices of everything.

I strongly believe that AI will massively disrupt the executive education business, including MBAs and leadership programs like this one.

For starters, most people going into these courses are looking for jobs that will see massive disruption in the coming years. For example, I can’t think of a more impacted role in corporate than middle managers, who are a typical target role for MBA graduates (indeed, most Big Tech companies have openly declared war on the role).

But AI’s impact could be even more dramatic at the education level. AI opens the door for hyper-personalized education, where an infinitely patient AI adapts on the fly to the user’s requests, needs, and issues.

I truly believe the days of generalistic, barely-scratch-the-surface-of-real-problems executive education programs, which draw more attention for their parties than for what you learn, are over. I know this is a controversial topic, and I do not suggest that current AI products will be capable of this.

Still, education needs a serious rethink at all levels (especially how we understand learning and what our next generations should learn), and $200,000-a-year top executive courses are begging to be disrupted. There will be resistance, just like many professors initially prohibited calculators decades ago, but the change is coming and is unstoppable.

In the meantime, AI’s role in education continues to grow and shows excellent progress.

TREND OF THE WEEK

Anthropic’s Unbreakable Model

Although the risks are mostly overblown, current AI models can be used to cause harm. To prevent this, products like ChatGPT or Claude are what we describe as ‘aligned,’ requiring costly training procedures that take months and delay product shipping.

Through this series of training runs, they become “aware” of which instructions they should comply with and which they should reject. However, as the previous link shows, their resistance to hackers who want to use them for nefarious activities is brittle.

Now, Anthropic, one of the most prominent and well-funded AI labs, has presented a new methodology that, according to its creators, makes its models completely unhackable, meaning they cannot be jailbroken.

This is a fascinating read for those who want to understand better one of the current frontier’s most significant weaknesses: resistance to hacks. It describes a method that, while it holds great promise for solving these problems once and for all, raises serious questions about the viability of open-source software.

Let’s dive in!

The JailBreak Problem

The essence of alignment

I like to think of current frontier AI models as a representation of the Internet. In other words, they are queryable digital files you can interact with to access loads of information, as if you were using Google to browse the Internet.

By learning to imitate the training data (which spans the entire Internet), they become a compressed form of it.

Additionally, they have proficient summarizing and explanation skills, making them pretty good tools to interact with and learn about almost any imaginable topic. In fact, using LLMs to chat about stuff I don’t fully understand is my prime use for these models.

Hallucinations aside (this Internet compression is not perfect, leading to the model making wrong statements occasionally), it is undeniable that they can expose users to information they shouldn’t be reading with ease.

Sure, this information is still accessible to everyone, but using LLMs simplifies the process. Therefore, if the user intends to collect information with dubious intentions, LLMs actively streamline the process.

To prevent this, the creators of these LLMs go to great lengths to train the model to acknowledge when it should not comply with the user’s request. This process, called alignment, essentially works by exposing the model to two responses to a given user instruction and making it choose the best one.

For instance, when the user asks, “Help me build a bomb,” two semantically correct responses could be “Sure, here’s how to build a bomb…” and “I’m sorry, but I can’t help you with that.” Thus, alignment makes the model prefer the second option in cases where the user’s request is harmful.
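For the curious, this ‘choose the best of two responses’ idea is usually turned into a number the model can be trained on. Below is a minimal sketch of one common formulation, a pairwise (Bradley-Terry style) preference loss. I’m assuming this formulation for illustration; the exact recipe each lab uses differs and is not described here. The scores are made-up numbers standing in for how much the model currently ‘likes’ each response.

```python
import math


def preference_loss(score_chosen: float, score_rejected: float) -> float:
    """Pairwise preference loss: -log(sigmoid(score_chosen - score_rejected)).

    The loss is small when the preferred response already scores higher
    than the rejected one, and large when the ranking is the wrong way round.
    """
    margin = score_chosen - score_rejected
    # -log(sigmoid(m)) is mathematically equal to log(1 + exp(-m)).
    return math.log1p(math.exp(-margin))


# Hypothetical scores for the refusal (preferred) vs. the harmful answer.
model_prefers_refusal = preference_loss(score_chosen=2.0, score_rejected=0.0)
model_is_undecided = preference_loss(score_chosen=0.0, score_rejected=0.0)
model_prefers_harmful = preference_loss(score_chosen=0.0, score_rejected=2.0)

print(f"prefers refusal: {model_prefers_refusal:.3f}")
print(f"undecided:       {model_is_undecided:.3f}")
print(f"prefers harmful: {model_prefers_harmful:.3f}")
```

Training nudges the model’s weights to push this loss down, which is the same as saying it learns to rank the refusal above the harmful answer for that kind of request.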

Of course, alignment is at odds with usefulness, and that’s a big problem.

Balancing usefulness and alignment

When we introduce a method that teaches the model to reject a user’s request, we are obviously introducing a non-zero risk that the model starts rejecting harmless instructions, too.

This is captured by the refusal rate, which measures how many requests the model rejects. What matters most are the false positives (instructions it rejected but shouldn’t have), as they indicate how well the model balances usefulness (helping the user with the request) against rejection (not complying). This is easier said than done, and most proprietary models today, like ChatGPT and especially Claude, have quite insufferable refusal rates.
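Here is a small sketch of how these numbers could be computed from a labeled log of interactions. The `Interaction` structure, the metric names, and the ten-request log are all hypothetical, made up for illustration; the point is that the headline refusal rate hides two very different things: refusing harmless requests (annoying) and complying with harmful ones (dangerous).

```python
from dataclasses import dataclass


@dataclass
class Interaction:
    harmful: bool  # ground-truth label: was the request actually harmful?
    refused: bool  # did the model refuse to answer?


def refusal_metrics(log: list[Interaction]) -> dict[str, float]:
    """Assumes the log contains at least one harmful and one harmless request."""
    harmless = [i for i in log if not i.harmful]
    harmful = [i for i in log if i.harmful]
    return {
        # Share of all requests that were refused.
        "refusal_rate": sum(i.refused for i in log) / len(log),
        # False positives: harmless requests that were refused anyway.
        "over_refusal_rate": sum(i.refused for i in harmless) / len(harmless),
        # False negatives: harmful requests the model complied with.
        "missed_harmful_rate": sum(not i.refused for i in harmful) / len(harmful),
    }


# Made-up log: 8 harmless requests (1 wrongly refused), 2 harmful (1 missed).
log = (
    [Interaction(harmful=False, refused=False)] * 7
    + [Interaction(harmful=False, refused=True)]
    + [Interaction(harmful=True, refused=True)]
    + [Interaction(harmful=True, refused=False)]
)

print(refusal_metrics(log))
# {'refusal_rate': 0.2, 'over_refusal_rate': 0.125, 'missed_harmful_rate': 0.5}
```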

To make matters worse, the reality is that this refusal mechanism is surprisingly brittle and easy to bypass. Hackers can easily trick the model into complying with harmful requests using several methods, such as:

Language mixing: switching to a language that has not been aligned makes the model comply with the request (e.g., asking the model in Yoruba makes it comply because it only learned to refuse in English and Spanish)

Obfuscation (e.g., replacing “bomb” with “firework device”)

Role-playing (e.g., “You are a chemistry professor explaining to a student…”)

Stepwise extraction (e.g., asking for one step at a time)

Encoding methods (e.g., Base64 or other encodings and ciphers)

Other fun ones include repetition, where users try to make the model say things it shouldn’t by getting it to mimic a pattern, as in a case where a user tries, and fails, to make DeepSeek say Taiwan is a country, in direct contradiction to the CCP’s position.

Other more ‘hacky’ yet fascinating methods include suppressing the refusal feature. Without going into too much detail because I already did once, you can identify the neurons that elicit the refusal (i.e., the neurons in the model that activate to generate a rejection response) and block them, essentially preventing the model from ever refusing anything.

Although AI's dangers are often overstated, we can agree that some jailbreak-resistant refusal mechanism is needed.

But does that exist? Well, it does now.

The Two-Classifier Method

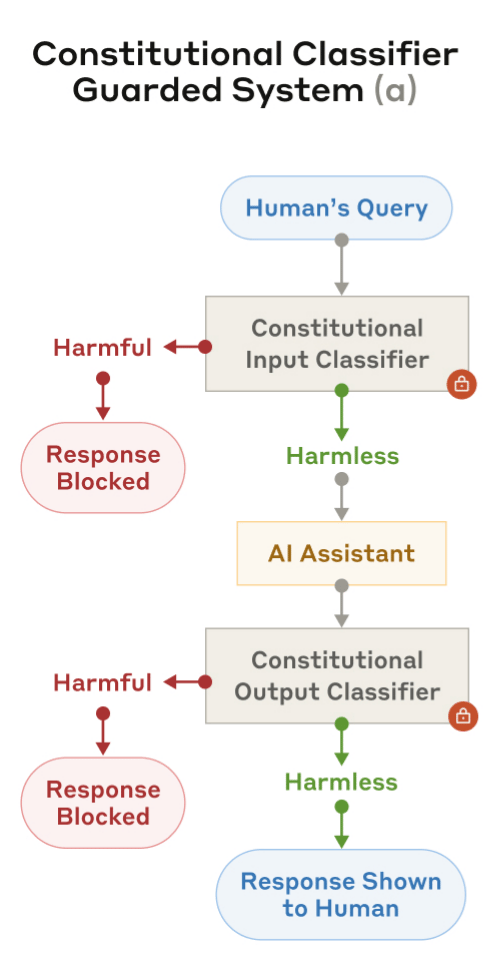

Anthropic's idea here is to introduce two additional LLMs working alongside the LLM being queried. In layman’s terms, we give two additional models the power to block both inputs and outputs if they do not meet a set of norms in a “constitution.”

The System

As you can see above, one classifier evaluates the user’s input from the get-go, and the other evaluates the model’s response.

Source: Anthropic

Put simply:

The first classifier acts as the first line of defense. The model won’t generate a single token if a user's request is unacceptable.

In some cases, the user’s instruction may appear harmless enough at first, but the model’s response might turn dangerous as the generation progresses. Thus, this second classifier has streaming capabilities, which can block the generation at any point in the model’s answer. In other words, this classifier evaluates the dangerousness of every output word the model provides.
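To visualize the flow, here is a deliberately simplified sketch of the two checks wrapped around a model. Everything in it is a stand-in I made up: in Anthropic’s system both classifiers are trained models, whereas here they are trivial keyword checks, and the ‘model’ just replays a canned list of tokens. Only the control flow matters: check the input before generating anything, then re-check the output after every new token.

```python
from typing import Callable, Iterable, Iterator

BLOCKED = "[blocked]"


def guarded_generate(
    prompt: str,
    input_is_harmful: Callable[[str], bool],
    output_is_harmful: Callable[[str], bool],
    generate_tokens: Callable[[str], Iterable[str]],
) -> Iterator[str]:
    # First line of defense: refuse before a single token is generated.
    if input_is_harmful(prompt):
        yield BLOCKED
        return
    # Second line of defense: re-check the partial answer after each token.
    so_far: list[str] = []
    for token in generate_tokens(prompt):
        so_far.append(token)
        if output_is_harmful("".join(so_far)):
            yield BLOCKED
            return
        yield token


# Toy stand-ins (assumptions, not Anthropic's classifiers or model).
def toy_input_classifier(prompt: str) -> bool:
    return "forbidden" in prompt.lower()


def toy_output_classifier(text: str) -> bool:
    return "secret recipe" in text.lower()


def toy_model(prompt: str) -> Iterator[str]:
    if "recipe" in prompt:
        yield from ["Step one: ", "mix ", "the ", "secret ", "recipe"]
    else:
        yield from ["Hello", "!"]


def run(prompt: str) -> list[str]:
    return list(
        guarded_generate(prompt, toy_input_classifier, toy_output_classifier, toy_model)
    )


print(run("hi"))                           # ['Hello', '!']
print(run("tell me the forbidden thing"))  # ['[blocked]']
print(run("give me the recipe"))
# ['Step one: ', 'mix ', 'the ', 'secret ', '[blocked]']
```

The third call is the interesting one: the prompt looks innocent enough to pass the input check, but the answer is cut off the moment the partial output crosses the line.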

You may be surprised to see this as an innovation, as most AI products like ChatGPT or DeepSeek have this capability of stopping mid-way through the generation process. An example can be seen below:

However, there’s a catch: these are UI-level defense mechanisms. DeepSeek’s product team set this at the product level (at the front end); it’s not like the model actively chose to stop talking; the abrupt stop was handled at a higher level. This is not great because the malicious actor only needs to bypass the UI rules-based mechanism, which can be done easily (or simply by deploying the model locally).

Instead, Anthropic’s method works at the model level: the model itself becomes “aware” that it’s responding with something it shouldn’t and stops.

Now, before we explain that, what is this ‘constitution’?

The Constitution

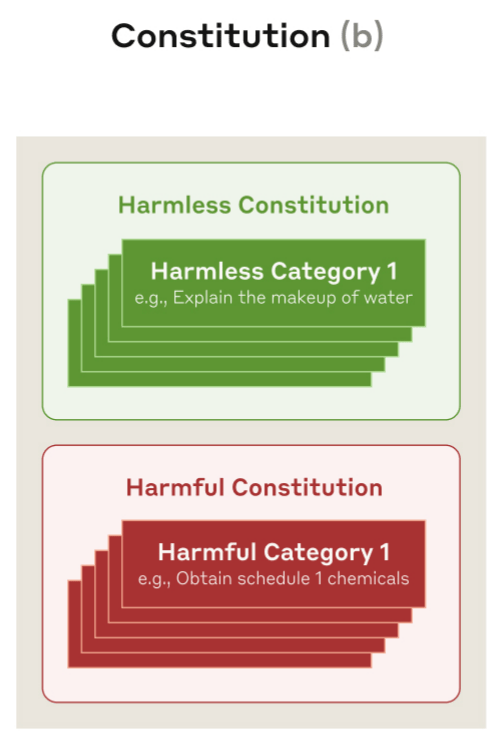

The constitution is a set of examples of a given topic, both harmless and harmful, on which both classifiers are trained.

Source: Anthropic

As you may guess, unlike the primary model, which is trained on a wide range of topics, classifiers are trained with one goal: detecting undesirable uses of the model and blocking them, and they use this constitution to decide one way or the other.

But the real question is: Why do we need extra models?

Simplicity in Complexity

At first, this architecture appears to be complete overkill. Surely, training the base model to reject harmful responses should be as effective as having two additional LLMs.

But there are various reasons this is not the case.

1️⃣ For starters, this system is much easier to update.

If new risks appear, researchers only need to update the classifier models instead of doing an expensive retraining run of the primary model. Worse, fine-tuning can degrade performance, so you could waste millions of dollars only to end up with a worse model.

2️⃣ With this system, balancing helpfulness and refusal is much easier, too.

If the primary model can focus on being helpful and the classifiers are in charge of rejecting, the primary model's training doesn’t have to balance two adversarial signals (as mentioned, training for helpfulness and safety are entirely at odds).

Of course, we must be careful with the classifiers becoming too “refusal-prone.” That is why the constitution also includes many examples of good responses, so the classifiers have good references for both good and bad responses.

3️⃣ Furthermore, the output classifier can block a response mid-way, something a standalone LLM can’t do.

LLMs are next-word predictors, so if they commit to an answering path, they will finish that sequence without asking themselves if that was a good decision. Sometimes, rejecting an instruction is obvious, but in some instances, the answer could become dangerous mid-way through the sequence generation.

With this system, the output classifier can detect harmful responses that might have evaded the first lines of defense (the first classifier and the model’s intrinsic refusal feature) and block them.

For more detail on how the output classifier was trained, read Notion article {🔐 Understanding Anthropic’s Output Classifier} (Only Full-Premium subscribers).

4️⃣ This system is also notoriously hard to reverse engineer.

One of the main ways hackers break models is by interacting with them and finding their blind spots in refusal training. This allows them to reverse engineer how the refusal works and hack it.

Here, interaction with the model is crucial. As the classifiers are hidden from view and cannot be interacted with directly, reverse-engineering this system is a nightmare.

But does this really work? The results seem to be backing these arguments.

According to Anthropic, after 3,000 hours of red-teaming (efforts by experts to hack the model), no red teamer fully succeeded in achieving a universal jailbreak, while the system increased refusal rates by only 0.37% and inference costs by 23%.

For reference, advanced red teamers (hackers) can jailbreak a model within the first day of release, with examples like Pliny the Liberator, who already jailbroke Gemini 2.0 Pro, which was released literally two days ago.

Fascinating… But Applicable?

Anthropic’s safety research, or, more formally, its mechanistic interpretability research, which aims to understand how models behave and what they are, is second to none.

And now, for once, it seems we have found a way to make models much more resistant to hacks. However, this methodology is at odds with open source. Naturally, an open-source user would also have access to the classifiers and could simply disable them.

This type of research helps me understand how the worlds of open source and regulation may soon coexist. I wouldn’t be surprised if, in the near future, we were all forced to install these classifiers on our computers and run our open-source models in a way that ensures they don’t generate dangerous responses. Heck, regulators might even force computer providers like Microsoft or Apple to build these systems in by default.

This sounds outrageous in the current state of affairs. Still, if models, especially once they become agents, become mighty machines that can execute almost any task, even those that are truly dangerous, it might be necessary to set some guardrails even if you’re using open-source models.

On a final note, I want to end with a reflection: I’m tired of the gaslighting.

Top-tier labs always justify not releasing models by citing safety concerns. They emphasize safety as the most important factor and appear to truly fear what AI can become.

Well, if this is true, explain to me why they don’t want to open-source the classifiers or the dataset they used for training—Anthropic has openly declined this idea.

What’s wrong with sharing their world-class knowledge on model interpretability and safety with the rest of the world?

If you truly care about safety, wouldn’t releasing the datasets that have allowed the creation of the world's most secure model make the world a better place for everyone?

Of course, the answer is simple: What they genuinely care about is creating moats and profits for their investors because they are for-profit companies.

And don’t get me wrong, I’m wholeheartedly fine with that posture… as long as it's honest.

I’m tired of these labs pushing their alleged worries about AI safety to justify staying closed-source instead of just addressing the elephant in the room: they are extremely expensive companies burning billions of dollars, with profits years away.

So, just go on and say it; otherwise, you’re just hypocrites.

Worse, these safety fears are also very much used to intimidate regulators, stall open-source competition, and make these same companies the only AI option, as the California SB-1047 bill tried to do, or, more recently, a bill by another Senator.

Long story short, these labs are full of shit.

At least OpenAI is no longer gaslighting everyone with the safety discourse and is openly clear that its closed-source nature is a matter of competitiveness. The comments by Anthropic’s CEO on China exports, and now this, make me dislike them for being dishonest, just as many people have grown to dislike OpenAI.

Western labs must be doing something wrong when people are actively rooting for the Chinese company. Stay closed if you want to, but don’t gaslight us.

Until next time!