THEWHITEBOX

TLDR;

This week, we take a closer look at tariffs and AI based on recent announcements, new details on the insane costs of o3 and DeepSeek’s latest proposal to push AI’s frontier. We then discuss an AI that barks, and Amazon’s genius agent move.

Finally, we examine Meta’s disastrous release of Llama 4 and what it means for them and for the US’ AI strategy.

TARIFFS

Tariffs Are Coming for AI’s Lunch

Just as we predicted on Sunday, tariffs are already generating a lot of nerves in the AI industry, this time echoed by the Wall Street Journal. The article discusses the growing concerns, and the journalist makes the case for the most likely outcome being a drawdown on AI investment.

While no plans to cut AI spending have been announced, some of the big spenders, like Meta or Google, are surely discussing this, given their exposure to a potential recession. Others like Amazon or Microsoft, which appear much more resilient to ‘bad times’, are also allegedly growing more cautious about their AI investments.

TheWhiteBox’s takeaway:

Hyperscaler CapEx is seen as the thermometer of the AI industry. If spending goes up, the party continues. But if they cut spending…

Such an event would mean an enormous cut in NVIDIA’s AI revenues, which represent a sizable portion of total AI market revenues. Real revenues from AI workloads are still much smaller in comparison.

If NVIDIA reduces its guidance for the rest of the year (it reduces its revenue or growth expectations for future quarters), we could see a complete collapse, which would seriously impact not only public markets but also Venture Capital, forcing it to cut its investments into billion-dollar rounds.

In fact, some VCs, like Eric Bahn from the Hustle Fund, are already telling their invested companies to assume their last round will be the last one in a while.

The question is, will Hyperscalers actually cut spending?

To me, the crucial point will be if they all decide to do so. Concerningly for NVIDIA, it’s not return on investments that fuels current AI spending; many of them continue to invest heavily into AI out of pure FOMO (Fear Of Missing Out), so most of them will gladly ease off the spending pedal if that’s the overwhelming decision (this also applies to VC).

A less catastrophic outcome is that the narrative swings entirely in favor of efficiency, with a lot of effort going into shrinking models (thereby reducing costs and latency) and into enterprise applicability (higher robustness, more specificity), to the detriment of ‘the pursuit of Artificial General Intelligence (AGI).’

TARIFFS

China & the US Escalate Tariff Tensions

As Trump rallied markets by announcing that most “reciprocal tariffs” were halted except for Chinese ones (which were ramped up to 145% at the time of writing), tensions between the two superpowers have never been higher.

AI is at the center of these pains, despite Trump’s efforts to exempt semiconductors. China is calling the play and placing an 84% blanket tariff, which is unbelievably high, too (and we have yet to see China’s response to the new one).

But what AI goods/companies are in the middle of all this?

Besides stating the obvious, that rare earths are going to become much more expensive, the key thing to acknowledge is that chips, which appear tariff-free for the moment (although maybe not for long), are only exempt if they come into the US in their raw form, as we mentioned on Sunday. However, most chips are already packaged into their GPU boards and inside physical servers packed with aluminum and electronic components that are definitely not tariff-free.

Companies like NVIDIA are trying to avoid this by assembling their servers in Mexico, which would save them based on the USMCA (United States-Mexico-Canada agreement), but this is a tricky one based on the idea of ‘rules of origin’. This states that the country where the last ‘substantial transformation’ occurs is the one where the tariff applies. So even if NVIDIA servers are assembled in Mexico, it’s probable that Taiwan, where the chips are packaged and tested (for advanced chips), remains the place where tariffs apply, which would still imply a 34% tariff. It’s very unclear what ‘last substantial transformation’ means in such a complicated semiconductor world.

In all honesty, I don’t know what rules apply in this case, but if it helps, according to recent news, up to 60% of NVIDIA’s servers would be tariff-free as they are shipped from Mexico (meeting USMCA constraints).

For other Big Tech companies, the outlook is far worse.

All Hyperscalers face a potential increase in CapEx (investment in AI data centers) because many components necessary for such buildouts, like aluminum, electronics, networking, and power supply gear, are shipped from tariffed countries. If these cost increases materialize, these companies could seek to relocate their data center projects outside the US, literally the opposite of what Trump expects. While the cost risks have decreased (for now), many of these components still end up going through China at some point. Depending on the importance of China in each particular case, they could be subject to Chinese tariffs, which essentially more than double the price.

Apple is considerably impacted due to heavy manufacturing in China. The company is trying to diversify manufacturing to other countries like India, but the price hikes seem inevitable in the short term. According to the Wall Street Journal’s podcast, estimates claim that 60% of iPhones could theoretically be manufactured in India (Apple has five big manufacturing hubs there), but currently that’s far from the case.

However, one thing people keep forgetting to talk about is the balance of trade when it comes to sales of US companies in China. The CCP could easily target some of these companies in retaliation:

Apple: In its 2024 fiscal year ending in September, Apple reported that 17% of its total revenue came from the Greater China region.

Microsoft: Microsoft’s exposure to China is relatively minimal, with the country accounting for approximately 1.5% of its global revenue.

Alphabet: Specific figures for revenue from China are not publicly detailed. However, it’s known that Asia contributes a significant portion to Alphabet’s revenue, with 19% in Q1 2021.

Meta: In 2023, China contributed 10% of Meta’s $134.9 billion total revenue, up from 6% in the preceding two years.

Amazon: Amazon’s direct consumer operations in China have been limited since it closed its domestic marketplace in 2019. Consequently, its revenue from China is relatively small compared to other regions.

NVIDIA: Revenue from China fluctuates: 12% in mid-2024 ($3.7 billion) and ~17% in FY 2023. At the extreme, some estimates put Greater China’s share at up to ~39% of annual revenue.

Tesla: ~22.5% of total revenue in 2023 came from China. ~21.4% in 2024. In Q1 2025, ~40% of global deliveries were from China.

While companies like Microsoft or Amazon are not worried in the slightest, others like Apple, NVIDIA, or Tesla are extremely dependent on free trade between China and the US.

INTELLIGENCE EFFICIENCY

o3 is More Expensive than We Thought

ARC AGI 1

o3, OpenAI’s flagship reasoning model, which, according to its CEO, is being released soon, is even more expensive than we would have initially thought.

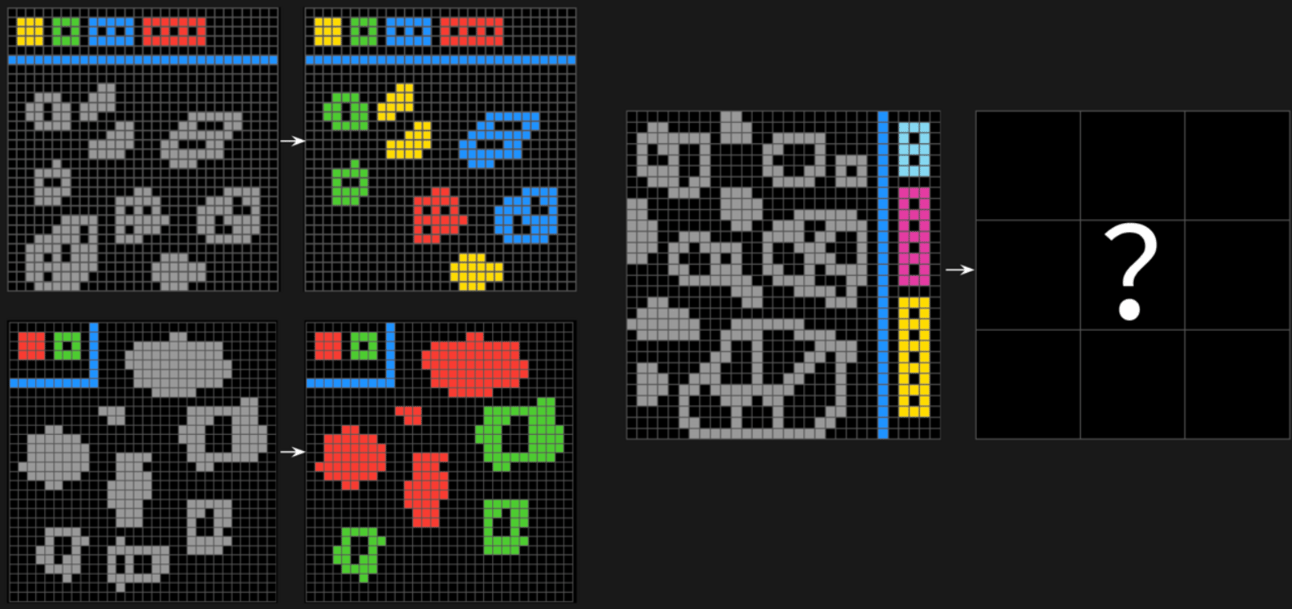

According to new estimates by the team behind the ARC AGI 1 challenge, o3 might have cost around $30,000 for every solved task in the benchmark, an absurd amount of money to solve IQ-test type problems. ARC AGI 1 is a benchmark with tests that are incredibly complicated for AIs; o3 saturated it, forcing a new version, ARC AGI 2, where AIs drop back to low single digits.

An example task for ARC AGI 2 (very low performance to date)

TheWhiteBox’s takeaway:

Imagine spending $30k to solve a task a human can solve in seconds (or, at most, minutes). This is a beautiful example that illustrates how raw performance and economic viability don’t always go hand in hand.

As I always say, it was never about increasing absolute intelligence but intelligence relative to humans. Or, dare I say, we aren’t necessarily looking for true intelligence (which these models display a very primitive version of) but for a result that is good enough to substitute or augment humans in some tasks.

And the reality is that in tasks where memorization isn’t helpful, this model requires far too many dollars per task, making it totally unusable.

So the question is whether we are going to find ways to make their learning and solving cost curves decrease; otherwise, we are just creating something that won’t ever leave the realm of research labs.

Therefore, with its upcoming release of o3, OpenAI will show us whether it’s found a way to decrease costs or will aim for the infamous $20k/month subscription rumored a few weeks ago. But even then, if the costs of o3 are so massive, there’s a non-zero chance that OpenAI would actually lose money on these subscriptions, which is insane just to think about.

FRONTIER RESEARCH

DeepSeek’s GRMs

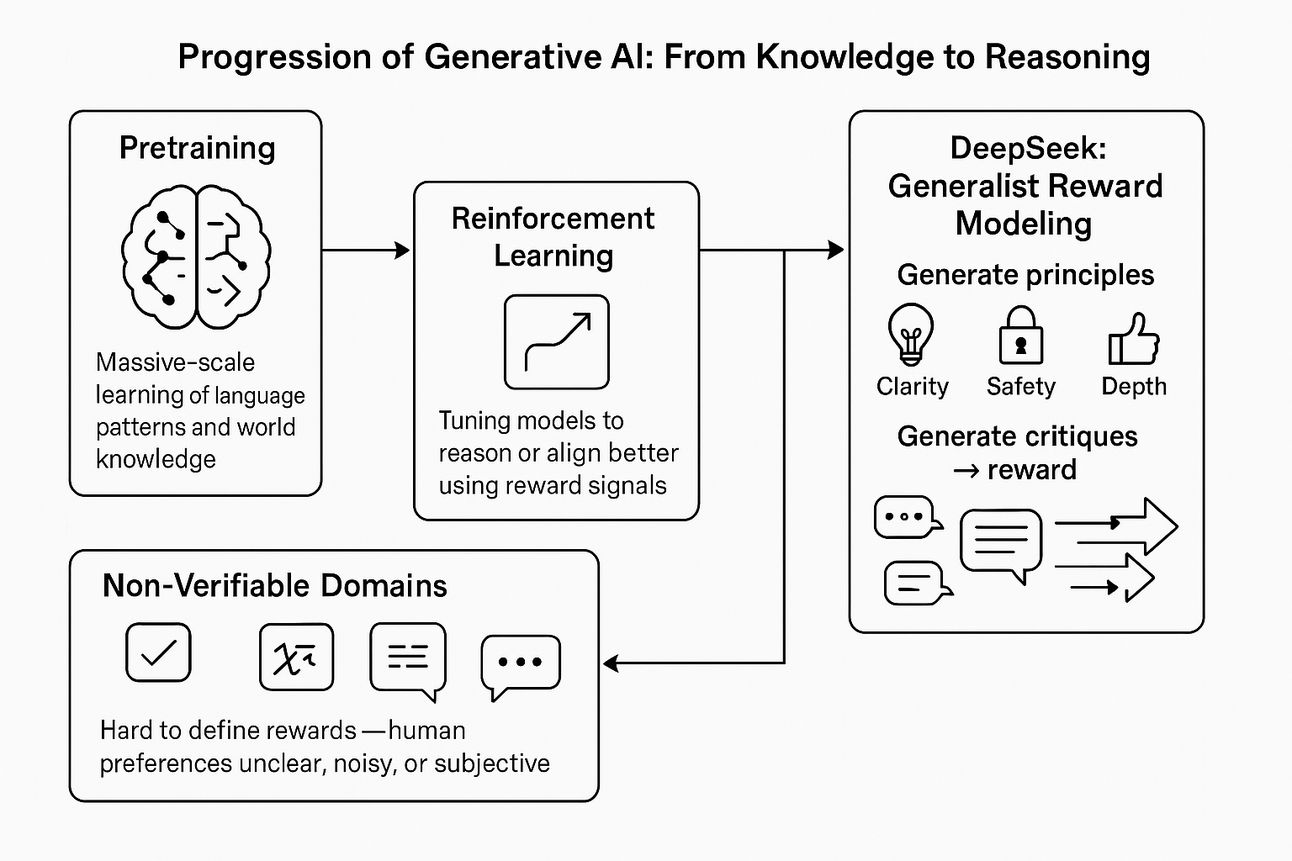

DeepSeek, the AI Lab at the core of China’s AI aspirations, has released new research on a crucial topic: unlocking reasoning capabilities on non-verifiable domains.

But what do they mean by that?

The idea is pretty straightforward. When we talk about ‘reasoning models’ like o3 or DeepSeek R1, these can solve very complex tasks that would require strong human reasoning, but only in areas like maths or coding. The reason for this is that in those domains, we can verify whether the model’s prediction is correct. As models learn via trial and error, we have a “learning signal” to help them improve.

In fact, it was this idea of training models only on verifiable domains that led to DeepSeek’s impressive R1 results that shocked the world (and markets).

However, non-technical areas, like creative writing, can’t be verified, so these ‘reasoning models’ are exclusively focused on ‘verifiable domains’ and represent no improvement over “non-reasoning models” like GPT-4.5 in ‘non-verifiable’ areas.

In other words, we have figured out how to scale AI intelligence only in areas where we have a clear learning signal. In others, we truly don’t know how to improve what we have.

And now DeepSeek thinks it can change that with Generalist Reward Models (GRMs), models that learn to give good rewards (good learning signals) in subjective areas (areas where a clear correct/wrong signal is not possible).

The idea is quite simple. As we don’t have a clear way to discern the correctness of the model’s output, we are going to train AI models that act as ‘reward scorers,’ actively judging the reasoning model to guide it through the reasoning process.

But this is not new, so what is DeepSeek proposing? Simple, switching the way these reward models work.

For every input-output pair they receive, in which they need to score the quality of the output based on the input, the model first writes the set of principles that should regulate a good response for that input.

Only then does it judge the quality of the response, based on all three elements (the input, the output, and the principles). This helps the model evaluate correctness against principles designed exclusively for this input-output pair.

Think of this as follows: you see an essay from one of your students on Kantian philosophy, so you first lay down the principles that should make such an essay good (like the list of principles that guide Kantian philosophy), and then evaluate the quality of the essay based on the ‘ad hoc’ principles you’ve just defined based on the task.

This may look like a subtle change, but it makes all the difference. Even though these principles may be very clear in our knowledge, it’s still helpful to first write them down and then condition our evaluation of the student’s output on these just-written principles. It is a way of explicitly ensuring the model evaluates based on the correct principles instead of its implicit understanding.

But you may argue: as long as the model knows the principles, why does it matter that it explicitly writes them down before evaluation?

The answer is obvious when you understand the behavior of LLMs: their output is always highly dependent on the provided context, and the higher the quality of the context, the better the outcome. Therefore, even though the knowledge is indeed ‘inside’ the model, by verbalizing it we improve the actual context and thus increase performance.

Put another way, and this serves as a great prompt engineering lesson, explicit context beats implicit context. In other words, you will always get better results by having explicit context than by trusting the model will elicit that knowledge from its weights autonomously.
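To make the two-step idea concrete, here is a minimal sketch of a "principles first, judgement second" scorer. It is an illustration only, not DeepSeek's implementation: the prompt wording, the `Score: N` output convention, and the `toy_generate` stand-in (a canned function used so the sketch runs without any model) are all assumptions of mine.

```python
import re
from typing import Callable

# Hypothetical interface: any function that maps a prompt string to generated text.
Generate = Callable[[str], str]


def principles_prompt(query: str) -> str:
    """Step 1: ask the reward model to spell out what a good answer looks like."""
    return (
        "List the principles a high-quality response to the following "
        f"request should satisfy.\n\nRequest: {query}\n\nPrinciples:"
    )


def judgement_prompt(query: str, response: str, principles: str) -> str:
    """Step 2: judge the response with the freshly written principles in context."""
    return (
        f"Request: {query}\n\nResponse: {response}\n\n"
        f"Principles:\n{principles}\n\n"
        "Critique the response against each principle, then finish with a "
        "line of the form 'Score: <integer from 1 to 10>'."
    )


def score_response(generate: Generate, query: str, response: str) -> tuple[str, int]:
    """Return the generated principles and the score parsed from the critique."""
    principles = generate(principles_prompt(query))
    critique = generate(judgement_prompt(query, response, principles))
    match = re.search(r"Score:\s*(\d+)", critique)
    if match is None:
        raise ValueError("the judge did not emit a 'Score: N' line")
    return principles, int(match.group(1))


def toy_generate(prompt: str) -> str:
    """Canned stand-in for a real model so the sketch runs offline (assumption)."""
    if prompt.rstrip().endswith("Principles:"):
        return "1. Explains the categorical imperative.\n2. Uses Kant's own terms."
    return "The essay covers principle 1 but not principle 2.\nScore: 6"


principles, score = score_response(
    toy_generate,
    query="Write an essay on Kantian philosophy.",
    response="Kant argued that moral rules must hold universally...",
)
print(principles)
print(score)
```

The point of the structure is that the second prompt contains the principles verbatim, so the judgement is conditioned on explicit context rather than on whatever the model happens to recall implicitly.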

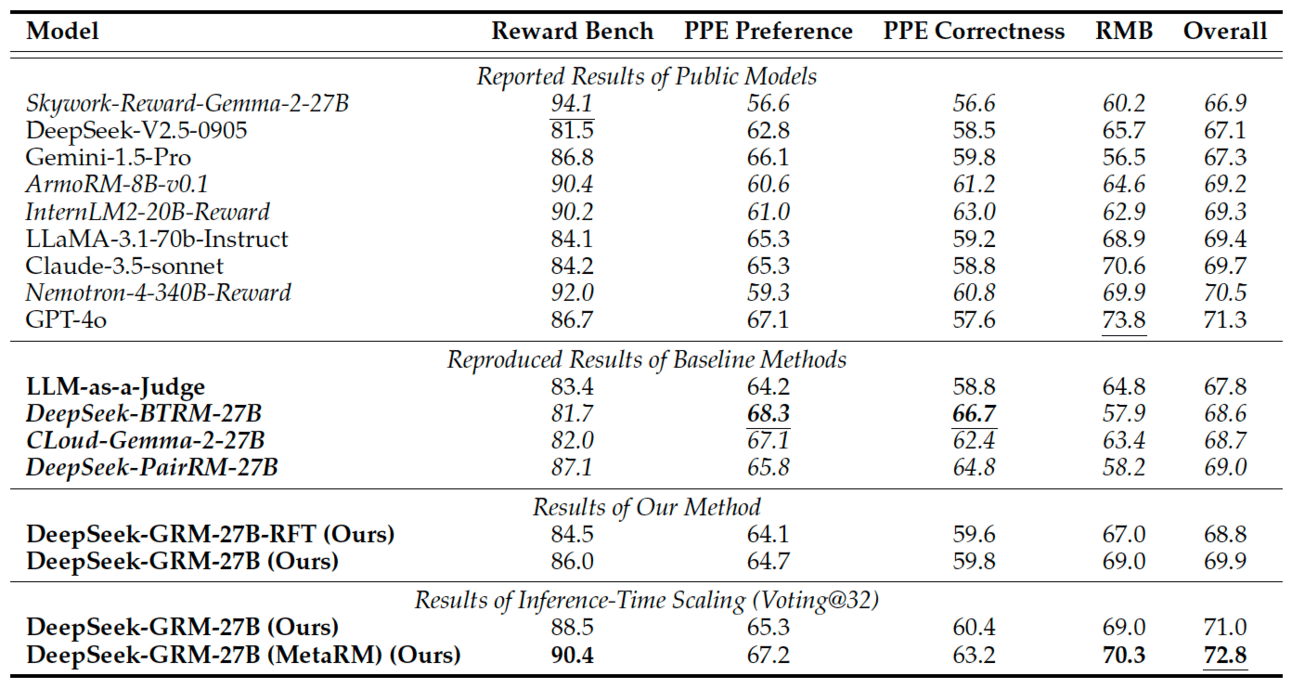

And the proof is in the pudding. With this subtle change, these models become capable of adapting to the evaluated task, and show great promise compared to previous methods, becoming better than most SOTA-ish models despite being much, much smaller:

TheWhiteBox’s takeaway:

Could DeepSeek be preparing a new reasoning model that is also great beyond maths and coding?

If true, this could not only widen the gap between China and the US, but it would literally switch the entire narrative of who is ahead of whom (more on this on today’s trend of the week).

With this model nearing its release, I can’t wait to see whether it delivers.

DECODING ANIMAL SOUNDS

ElevenLabs’ Text-to-Bark

ElevenLabs, one of the prime AI labs for modeling sound (audio and speech), has released a hilarious text-to-bark model that translates text instructions into barks for your dog.

You can choose the dog type from Golden Retriever, Chihuahua, and Dachshund. You can try the model for free to see if your dog is also experiencing the AI revolution.

TheWhiteBox’s takeaway:

Jokes aside, there’s actual evidence that AI could play an interesting role in the relationship between humans and their canine best friends (sorry, cat owners). Examples include this research from the University of Michigan, where researchers attained a 70% accuracy score in interpreting dog communication.

Fascinatingly, as dog bark data is very scarce, the researchers started from a human speech model. The fact that the end result worked means that AIs can find common patterns across human speech and dog barks and successfully transfer into the canine world with a smaller subset of dog data.

In fact, I consider the pet industry one that AI could seriously improve; if AI is capable of helping us understand pets when they are sad, hungry, nervous, you name it, I bet people will literally pay any amount to have that (that includes me).

After all, rich people are paying thousands of dollars so their pets can travel comfortably. Picture what they would do to understand their pets better or lengthen their short lives; that’s a money-printing business if I ever saw one!

SHOPPING ASSISTANTS

Amazon’s ‘Buy for Me’ Button

Amazon has introduced a new beta feature called “Buy for Me” in its Shopping app.

This feature enables U.S. customers to purchase products from other brands’ websites directly through Amazon when those items aren’t available in Amazon’s own store. This feature aims to streamline the shopping experience by allowing users to discover and buy products from external brands without leaving the Amazon app.

TheWhiteBox’s takeaway:

This is actually genius.

Imagine a world where a large amount of traffic is AI agents searching for stuff on behalf of their users. This is actually pretty disruptive for the advertising business; companies pay premium prices to Meta, Amazon, or Google to get eyes on their products, especially things humans weren’t necessarily looking for at first, but, thanks to the great profiling these platforms offer, could still be very interesting to these users.

But what if those human eyes aren’t there anymore? If advertisers stop seeing conversions from their Amazon ad spending, why should they bother?

In short, they wouldn’t. However, a way for e-commerce platforms like Amazon to retain those ad spending dollars is to offer ways to buy stuff from other platforms without leaving the Amazon platform, which is the primary objective of the retailer. This way, you still incentivize companies to advertise themselves on the platform, and offer them a one-click purchase experience that is music to their ears due to the frictionless experience for the user, while also benefiting Amazon as the user (or agent) never leaves Amazon during the entire process.

Yes, I’m sure many retailers would prefer for the agent to go to their website, but at least the user/agent who searches on Amazon is exposed to their products even if they aren’t visiting their website.

This means that agents might not impact big-platform ad spending that much. Still, it seems clear they are going to kill Google AdSense-type products (which allow small websites to monetize visitors with ads), as I believe agents will concentrate traffic on big platforms, and traffic for most websites will drop considerably.

In summary, agents will transform the global distribution of traffic, mainly concentrating on a few websites and eliminating the long tail of existing websites. In turn, this will give these distribution behemoths huge pricing power while obliterating entire industries like SEO or web design. Sounds like a dream come true for some, a nightmare for most.

TREND OF THE WEEK

Meta Releases Llama 4

Meta recently unveiled its latest series of AI models, Llama 4, allegedly introducing advancements in multimodal capabilities and performance. However, the results have been met with intense criticism along various lines:

Extremely shady “benchmark-maxxing”, with strong accusations by the community

Betrays commitment to the real open-source spirit with non-deployable models at the consumer level

Actually very poor results in some instances

Overall, Meta has largely disappointed, with repercussions at various levels, the main one pointing East: for the first time, it’s clear who’s in the open-source lead (and I would argue, based on that, in AI in general), and it’s not the US.

Let’s dive in.

Hype: 100, Impact: 0

Meta has taken an unusually long time (almost a year) to release the new version of its Llama models, which were once heralded as the frontier of open-source AI.

Sadly, it seems those days are gone.

Underwhelming is the Word

The release includes two models, with a third still in development:

Llama 4 Scout: This model features 17 billion active parameters utilizing 16 experts, totaling 109 billion parameters. It boasts a 10-million-token context window, which means that, at least theoretically, you can send the model almost 8 million words in one single prompt. It is designed to operate efficiently on a single NVIDIA H100 GPU.

Llama 4 Maverick: This model also has 17 billion active parameters but employs 128 experts, accumulating 400 billion total parameters. It is engineered to outperform models like GPT-4o and Gemini 2.0 Flash across various AI benchmarks, and can run on a single NVIDIA H100 DGX Server (8xH100s).

Llama 4 Behemoth: Currently still in training, Behemoth is projected to have 288 billion active parameters and approximately 2 trillion parameters in total. It aims to surpass models such as GPT-4.5 and Claude Sonnet 3.7 on STEM benchmarks and is considered a teacher model (not meant to be served but to train smaller models).

The biggest architecture change is the use of mixture-of-experts (MoE), where the model is effectively “divided” into different experts during training, thereby specializing in different topics.

Then, when the model responds, it chooses what experts to activate, while shutting down the rest. In practice, if my model is divided into 10 experts and only 1 activates for the prediction, I’m effectively dropping compute costs and latency by a factor of 10 (it’s a little bit less for reasons I won’t go into). Most top models are MoEs.
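As a rough illustration of the routing described above, the sketch below picks the top-k experts for one token and runs only those. It is a toy under loud assumptions: the experts are scalar functions rather than neural layers, and the router scores are random numbers instead of being computed from the token, which is what a real MoE router does. The parameter counts at the end are the ones quoted in this article. One common reason the real saving is smaller than the expert ratio suggests is that non-expert layers (attention, for instance) run for every token.

```python
import math
import random


def top_k_route(router_logits: list[float], k: int) -> dict[int, float]:
    """Pick the k highest-scoring experts and softmax-normalize their weights."""
    ranked = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:k]
    peak = max(router_logits[i] for i in chosen)  # subtract the max for numerical stability
    exps = {i: math.exp(router_logits[i] - peak) for i in chosen}
    total = sum(exps.values())
    return {i: e / total for i, e in exps.items()}


def moe_forward(x: float, experts, router_logits: list[float], k: int) -> float:
    """Run only the selected experts; the rest cost no compute for this token."""
    weights = top_k_route(router_logits, k)
    return sum(w * experts[i](x) for i, w in weights.items())


# Toy experts: expert number s simply multiplies its input by s (10 experts in total).
experts = [lambda x, s=s: s * x for s in range(1, 11)]
random.seed(0)
router_logits = [random.gauss(0.0, 1.0) for _ in experts]  # stand-in for a learned router

weights = top_k_route(router_logits, k=1)
output = moe_forward(2.0, experts, router_logits, k=1)
print(f"experts used: {sorted(weights)} of {len(experts)}")

# Active vs. total parameters (in billions) as quoted above for the Llama 4 family.
llama4 = {"Scout": (17, 109), "Maverick": (17, 400), "Behemoth": (288, 2000)}
active_share = {name: active / total for name, (active, total) in llama4.items()}
for name, share in active_share.items():
    print(f"{name}: {share:.1%} of parameters active per token")
```

With `k=1` only one of the ten toy experts does any work, which is the "factor of 10" intuition; the last loop shows how small the active share is for each Llama 4 model on the article's numbers.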

And based on the published results, the release was initially highly promising, with:

Maverick scoring a fantastic result on LMArena.

Strong results in several popular benchmarks like GPQA Diamond

Strong results on coding benchmarks too

However, here is where the fun ends. In fact, the release has been accompanied by extreme controversy.

Very Strong Accusations

Meta has faced allegations (or direct accusations, I would say) of manipulating AI benchmark rankings by submitting an experimental version of Maverick, explicitly optimized for conversational performance, to the LMArena benchmarking platform.

The key, though, is that they did not disclose the existence of this experimental version, obscuring the fact that it was not the main version but a fine-tuned one designed to maximize benchmark results. Most models are not ‘benchmark-tuned’, which gives Meta’s model an unfair advantage.

The LMArena team has since acknowledged this, which puts Meta in a very embarrassing position.

But accusations have gone much further, with some people accusing Meta of training on test sets. This is the closest to high treason you can get in AI. But why?

When training models, you randomly separate your available data into training and test sets. This way, you can use the test set to check whether you have learned meaningful patterns during training or memorized the data.

Specifically:

If your model performs well in training data but not in test data, it has overfitted to the training data, aka memorized it, making it useless to use in the real world.

Instead, if your model ‘generalizes’ well into the test data, it means that the model compresses knowledge from the data that can be applied to new data, making the model usable.

But why is this so consequential in AI? Let’s say you train a model to identify cats and want to test whether it truly understands cat patterns.

You give the model a training set with only black and brown cats, but not orange, which only appear in the test set. If your model understands what a cat is, it will learn that the color does not define whether it’s a cat or not and should ‘generalize’ and identify orange cats as cats even though it has never seen one.

By making this separation, you can test whether the model is simply memorizing the training set (cats can only be black or brown because that’s all it has seen) or has genuinely understood the generalizable pattern (all cats have four legs, slit-shaped eyes, and a tail, for instance).

Instead, Meta is being accused of training the model on the test sets that precisely test whether it is truly generalizing, which is clearly cheating.

In our example, while the test set wants to see whether Meta’s model understands cats can also be orange, Meta is secretly training on orange cats to ensure it does, but this doesn’t prove the model actually understands cats.
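The cat example can be played out in a few lines of code. This is a toy sketch, not a claim about how Llama 4 was trained: the three-feature "animals", the `Memorizer` lookup-table model, and the hard-coded `ShapeRule` are all hypothetical constructs chosen to make the effect of test-set leakage visible.

```python
Animal = tuple[int, bool, str]  # (legs, has_tail, color): a deliberately crude feature set

train = [
    ((4, True, "black"), "cat"),
    ((4, True, "brown"), "cat"),
    ((2, False, "black"), "not cat"),
    ((2, False, "brown"), "not cat"),
]
test = [((4, True, "orange"), "cat"), ((2, False, "orange"), "not cat")]
fresh = [((4, True, "white"), "cat"), ((2, False, "white"), "not cat")]  # seen by no model


class Memorizer:
    """Stores training examples verbatim; anything unseen gets a default guess."""

    def fit(self, data):
        self.table = dict(data)
        return self

    def predict(self, animal: Animal) -> str:
        return self.table.get(animal, "not cat")


class ShapeRule:
    """Ignores color and looks only at body shape, i.e. the generalizable pattern."""

    def fit(self, data):
        return self  # nothing is learned in this toy; the rule is hard-coded

    def predict(self, animal: Animal) -> str:
        legs, has_tail, _color = animal
        return "cat" if legs == 4 and has_tail else "not cat"


def accuracy(model, data) -> float:
    """Fraction of examples the model labels correctly."""
    return sum(model.predict(x) == y for x, y in data) / len(data)


honest = Memorizer().fit(train)
contaminated = Memorizer().fit(train + test)  # the test set leaked into training
general = ShapeRule().fit(train)

results = {
    "honest memorizer on test": accuracy(honest, test),
    "contaminated memorizer on test": accuracy(contaminated, test),
    "contaminated memorizer on fresh data": accuracy(contaminated, fresh),
    "shape rule on fresh data": accuracy(general, fresh),
}
for name, value in results.items():
    print(f"{name}: {value:.0%}")
```

The contaminated memorizer aces the public test set without having learned anything about cats, and the gap only shows up on data nobody trained on. That is why benchmarks with hidden test sets are the ones to watch.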

Although Meta has denied these allegations, their claims are hardly credible when we see their results in other benchmarks where the test set is hidden from AI labs.

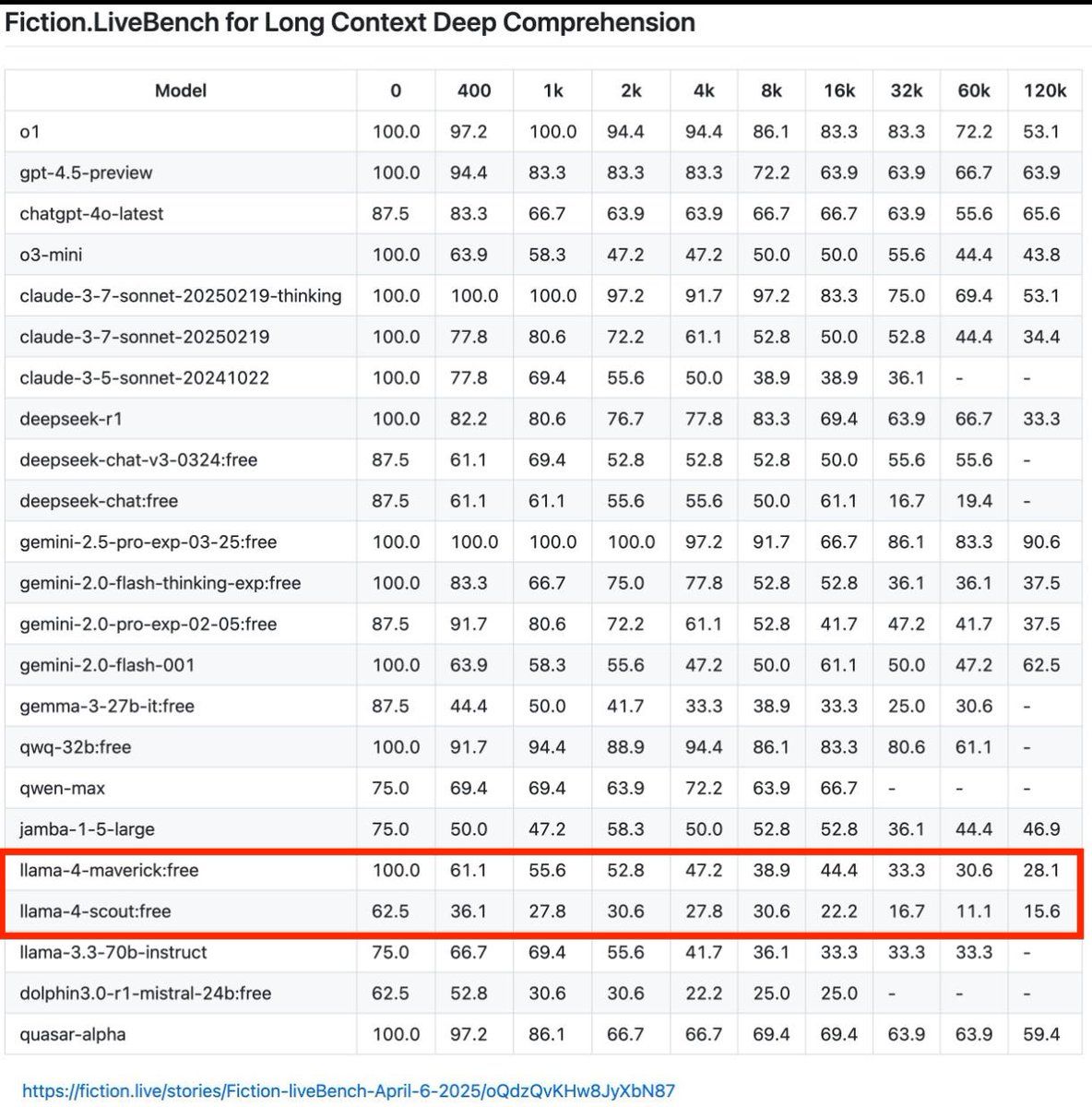

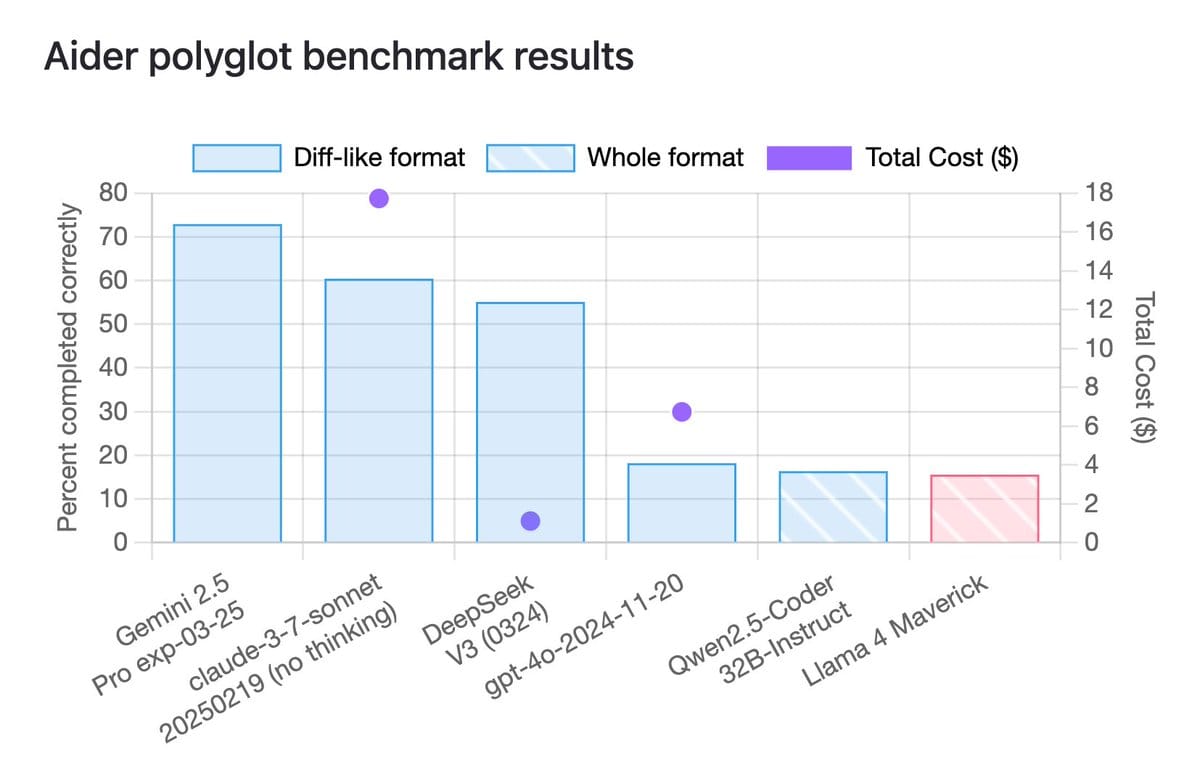

In tests where the test set is hidden from AI labs, Llama 4 models have performed dismally; on Aider’s polyglot, the quintessential coding benchmark, they score well below the frontier:

Worse, some people have tested the accuracy of the 10 million context window and, well, it seems to be a bare-faced lie, as the model’s performance collapses way earlier. At the 120k context window (~100k words in the prompt), accuracy drops as low as 15.6%, so you tell me the performance at 10 million.

I can’t express how bad these results are at the current stage. With all other competing AI Labs having internal models as good as or better than Gemini 2.5 Pro, Meta is way behind the curve.

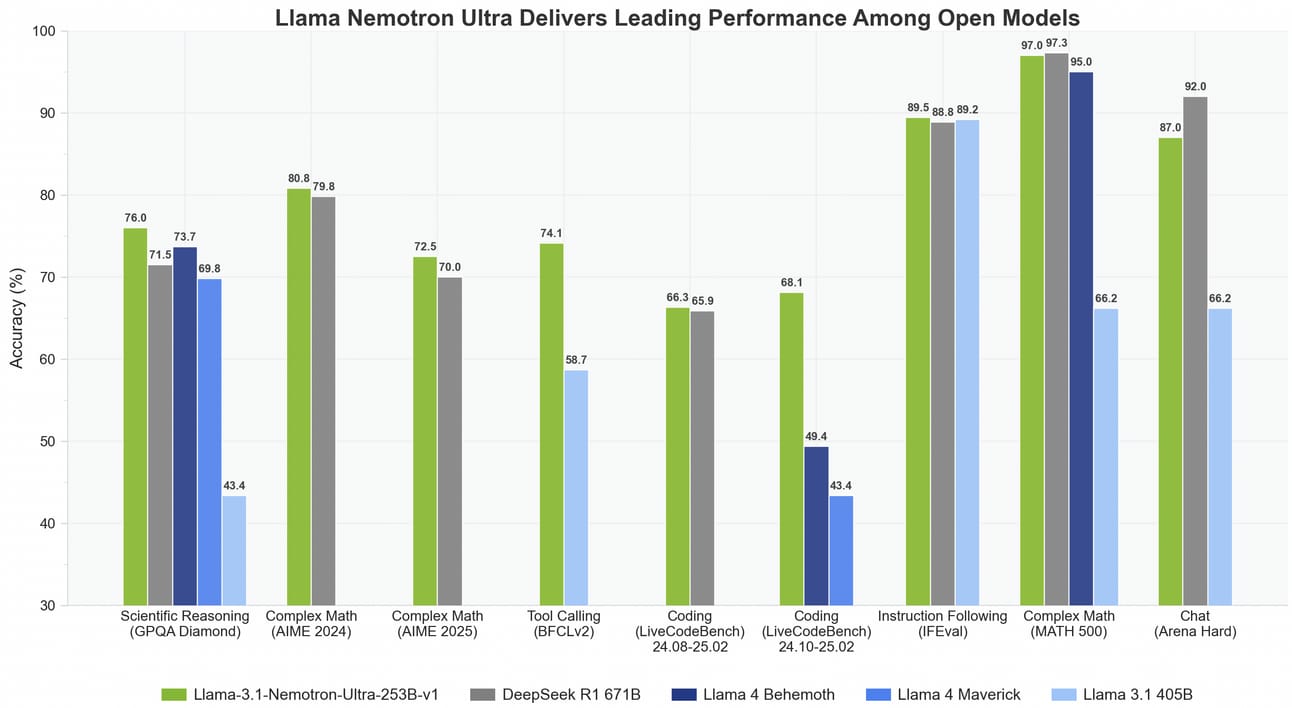

Another example that illustrates how bad things are looking for Meta is NVIDIA’s recent release of a fine-tuned version of Llama 3.1 405B, the previous generation of Llama, that beats both Maverick and Behemoth (the gargantuan unreleased Llama 4 model) despite being based on a year-old model.

In layman’s terms, people are getting better results with your previous generation than you’re getting with your new version, even the one with two trillion parameters (the fact that it’s still being trained is hardly a justification).

And all this without even considering that none of the models can be run on consumer-grade hardware (unless you have a $15k Mac Studio with 500GB of RAM). This is a complete betrayal of the open-source spirit Meta once fully embodied, and of the community that once saw Meta as the beacon of progress.

But what went so wrong?
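A back-of-the-envelope calculation shows why these models are out of reach for consumer hardware. An MoE has to keep every expert in memory even though only a fraction is active per token, so total parameters drive the footprint. The sketch below counts weights only and ignores the KV cache and activations; the bytes-per-parameter table, the 80 GB H100 variant, and the 24 GB consumer GPU are my assumptions, while the 500 GB Mac figure comes from the text above.

```python
# Bytes needed to store one parameter at each precision (weights only).
BYTES_PER_PARAM = {"bf16": 2.0, "fp8": 1.0, "int4": 0.5}

# Memory budgets in GB. The H100 and consumer GPU sizes are assumptions.
BUDGETS_GB = {
    "consumer GPU (24 GB)": 24,
    "1x H100 (80 GB)": 80,
    "8x H100 server (640 GB)": 8 * 80,
    "Mac Studio (500 GB)": 500,
}

# Total parameters in billions, as quoted in the article.
MODELS = {"Scout": 109, "Maverick": 400}


def weights_gb(params_billion: float, precision: str) -> float:
    """Memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return params_billion * BYTES_PER_PARAM[precision]


def fits(params_billion: float, precision: str, budget_gb: float) -> bool:
    """True if the weights alone fit in the budget; real deployments need headroom."""
    return weights_gb(params_billion, precision) <= budget_gb


for model, params in MODELS.items():
    for precision in BYTES_PER_PARAM:
        size = weights_gb(params, precision)
        homes = [name for name, gb in BUDGETS_GB.items() if fits(params, precision, gb)]
        print(f"{model} @ {precision}: {size:.1f} GB, fits on: {homes or 'none of these'}")
```

Under these assumptions, even aggressive 4-bit quantization leaves Scout at more than twice the memory of a 24 GB consumer card.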

A Poor Read of Progress

Here I’m just speculating, but this is how I see it: Meta has taken too long to embrace mixture-of-experts architectures, which are the norm now because they considerably reduce cost and latency while enjoying the benefits of being large.

OpenAI, Google, and DeepSeek have been training their models as MoEs for years. In fact, Llama 3 should have already been an MoE due to the obvious performance/cost benefits.

And while this appears to be a simple architecture change, it seems that it’s actually quite hard to implement, especially when training these models.

DeepSeek research is at the cutting edge of Expert Parallelism, the method required to train MoE models at scale. Read my analysis of DeepSeek to understand this better.

Llama 4 is Meta’s first attempt at this architecture, and it is telling that the architecture is almost a copy of DeepSeek v3 (using the idea of shared experts popularized by the latter).

The poor results also suggest that Llama 4 is actually a pretty recent effort, indicating that when DeepSeek released v3, it caught Meta completely off guard and forced them to scrap the previous version of Llama 4 and redo their entire training (we have proof they used several war rooms to reverse engineer the DeepSeek models). Overall, this was an inexcusable failure from Meta’s AI team, especially considering it is orders of magnitude better funded than DeepSeek’s, so I’m pretty sure Zuck is incredibly upset with the team.

Unsurprisingly, key figures have left the company in recent weeks, like Meta’s VP of AI Research.

Overall, Meta’s release has it all, but in the wrong way:

shady benchmark tactics,

underwhelming performance,

and clear proof they are nowhere near the frontier, which is inexcusable considering companies with much less funding (DeepSeek) and much younger (xAI) have completely surpassed them.

But despite how bad things look, we can make it worse. To me, the most alarming takeaways from this are two: declining open-source competitiveness and Meta’s future.

What This Says About the AI Race & Meta’s Role

The main thing that should set off alarms in the US is that there’s no denying that China is now leading the pack at the open-source level. If we consider the top 7 labs, four are from the US/UK (OpenAI, xAI, Anthropic, DeepMind), and three are Chinese (Alibaba-Qwen, DeepSeek, Kimi).

Yes, Meta is no longer in this group (for now).

Notice the pattern? The four American/UK labs are closed labs, while the Chinese ones are all totally open-source. It is embarrassing just to mention it. Concerningly, the only company that was more or less hiding this embarrassment was Meta.

But after this week’s events, it no longer is.

In my view, this is the first time in the last two centuries that a technology revolution is at risk of not being led by the US (or the West if we consider the UK during the last phase of the 19th century and the beginning of the 20th).

A Paradigm Change

This is terrible because the one who leads open-source is the one who leads innovation. Think of open-source for AI as network effects for social media apps: it’s a hidden force that generates unbelievable traction around products and companies, “invisibly” pushing the entire space forward.

While Chinese AI labs are openly collaborating with each other extensively and sharing learnings, US companies are doing the opposite. It’s not a coincidence that many top Chinese labs are presenting top results at the same time; they are literally riding each other’s waves.

At this pace, in six months’ time, most worthwhile models will be Chinese. And if Chinese models become the best option, you’ll soon see most US universities and undercapitalized AI labs using Chinese models for their research… the very same research closed labs use to push the frontier models forward.

In that scenario, we will literally have US universities actively helping Chinese labs gain traction by using their models. This is not only embarrassing, it’s a national security threat.

If that materializes, trust me, it’s a matter of time before Chinese open-source models are pushed toward illegality by the US Government, panicking at the trend. Of course, that would only make matters worse because you are cutting off your own legs by denying your citizens access to better models.

If you read carefully, I’m literally describing the innovation flywheel that the US could potentially miss out on while the rest of the world (I can assure you Europe won’t ban Chinese models) leaves the US behind.

You can dream all you want, but a few thousand AI researchers in Silicon Valley and London cannot compete with the entire world deploying and adopting Chinese models.

Is that the world the US wants? And what about Meta?

Tariffs & the Future

Not only is Meta the Big Tech that is, by far, the most exposed to a possible downturn caused by the tariff war, as most of its revenues come from ad spending, which takes a huge hit whenever recessions hit, but its entire AI value proposition revolves around the idea of it leading open-source.

Meta’s play isn’t to sell AI models to consumers and enterprises like the other hyperscalers, but to ensure two things:

Llama models are the foundation where agentic apps are built. Think about this as what they did with PyTorch or React, making Meta tools the foundation of AI training and website development and giving them the power to influence where the industry heads. For instance, if Meta is developing specialized hardware (like MTIA), it can ensure PyTorch supports it out-of-the-box.

Meta uses the open-source innovation loop to offer its customers improved versions of its models via its social apps. For instance, Llama 3 has countless fine-tunes for particular tasks created by the open-source community. As Meta builds its AI strategy around Llama, it literally has people working for it for free and can adopt these fine-tuned models with no effort on its side.

But suppose Meta can’t stay competitive at the frontier. In that case, nobody will use its models, killing the free improvement flywheel it enjoys with open-source and basically throwing it out of the race.

Also, failing to deliver powerful models cripples Meta’s capacity to provide better AI ad products, which are crucial to keeping advertisers on its platform, and that could be the killing blow.

AI is consequential to Meta’s future—I would say as much as it is for Google. Thus, Meta needs to get its act together fast, not only to save its company but also to preserve goodwill around the US’ open-source efforts.

The US Government should support Meta to ensure it jumps back into the top seat. Otherwise, we are literally witnessing the birth of a genuine national security threat for the West—a sign of the changing times for the US in its battle with China.


Until next time!
