THEWHITEBOX

TL;DR

Product:

🧐 OpenAI Releases “Operator”

😎 Sam Altman Announces o3-mini

😟 Perplexity Fires Back

Technology:

👾 Finally, a 3D Generator That Works… And That You Can Use

☹️ Meta’s GenAI Teams Enter ‘Panic Mode’

Markets:

💰 Blackstone Invests $300 Million into Data Company

TREND OF THE WEEK: DeepSeek r1, Much More Than Good Looks

AGENTS

OpenAI Releases ‘Operator’

Two days ago, in our Premium news segment, we discussed OpenAI's potential release of its agent product, Operator.

And, well, the wait is over.

Yesterday, in a keynote, they showed the next step in their “journey to AGI.” Essentially, it’s a ChatGPT-style interface (the UI is almost identical), with the difference that you can choose the ‘plug-ins’ that the AI will use.

Of course, this AI is an agent: a large language model (LLM) called CUA (Computer-Using Agent), based on GPT-4o, that can perform several actions on your behalf. Under the hood, the LLM has access to a virtual environment in which a remote browser is launched so the agent can interact with it. This is crucial to understanding how Operator works because the model doesn’t interact with websites via APIs, the traditional method for automating agents.

Instead, it’s a vision-language-action model that can read computer screens, understand them, and choose the next action based on the user’s goal, such as “book me a table in a vegan restaurant via Opentable in downtown LA at 7 pm”.
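To make the observe-decide-act cycle concrete, here is a toy sketch of that kind of loop. Everything in it is an assumption for illustration: `FakeBrowser`, `choose_action`, and the action names are hypothetical stand-ins, not OpenAI’s implementation. In the real product, the observation would be a screenshot and the policy would be the model itself.

```python
from dataclasses import dataclass


@dataclass
class FakeBrowser:
    """Hypothetical stand-in for the remote browser; a real one would return pixels."""
    page: str = "home"

    def screenshot(self) -> str:
        # We return the page name instead of an image to keep the sketch tiny.
        return self.page

    def act(self, action: str) -> None:
        # Hard-coded page transitions; unknown actions leave the page unchanged.
        transitions = {
            ("home", "click_search"): "results",
            ("results", "click_book"): "confirmation",
        }
        self.page = transitions.get((self.page, action), self.page)


def choose_action(observation: str, goal: str) -> str:
    """Hypothetical policy: a lookup table where the real agent would use the model."""
    policy = {"home": "click_search", "results": "click_book"}
    return policy.get(observation, "done")


def run_agent(goal: str, browser: FakeBrowser, max_steps: int = 10) -> list:
    """Observe the screen, pick an action, apply it, and repeat until done."""
    history = []
    for _ in range(max_steps):
        observation = browser.screenshot()
        action = choose_action(observation, goal)
        if action == "done":
            break
        browser.act(action)
        history.append(action)
    return history


print(run_agent("book me a table", FakeBrowser()))
```

Note that nothing in the loop calls a website API: the agent only sees what is on screen and emits UI actions, which is the point the paragraph above makes.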

As you may have guessed, this isn’t new. It is OpenAI’s response to Anthropic’s Computer Use and Google’s Project Mariner computer agent features, all of which promise to automate most computer tasks humans perform.

TheWhiteBox’s takeaway:

Although it’s a really cool demo, I can’t say I’m impressed.

Creating machines that interact with web interfaces is not new; we’ve seen it for years with Robotic Process Automation (RPA). Of course, this is more powerful than RPA, as the latter requires extensive rule-based coding to work (it cannot ‘understand’ things and thus follows pre-coded instructions).

But the real reason I’m not impressed is that Operator is pretty much useless in its current state. You must be extremely lazy to see Operator as a better option for finding a table on OpenTable than doing it yourself. This reminds me of OpenAI’s GPTs, released over a year ago, which looked like they would transform the world.

Currently, I don’t know of a single person who uses them.

When would Operator be useful, if ever? Ask it to run Skyscanner for flights every few hours until it finds the best flight deal to Chicago. Then, have it put together and book a travel plan that includes monuments, restaurants, and hotels, minimizes commute time, and respects a particular set of restrictions (low-carb dinners, 4-star hotels, etc.).

Now, that’s pretty powerful and really saves humans time.

However, as we discussed in our review a few weeks ago, agents suffer from a ‘many-steps’ illness. Because they are prone to errors, even if your agent has a 95% chance of completing a step correctly, the chance that it chains 10 successful steps falls dramatically to roughly 60% (0.95 multiplied by itself ten times). This means that this agent will execute a 10-step plan correctly only about 60% of the time.

There’s a reason why all the demos OpenAI showed yesterday were simple, few-step tasks. The one I’ve described requires hundreds of steps; even at just 100 steps, an agent with 99% per-step accuracy would see its task accuracy drop to roughly 37%.

Long story short, unless agents achieve 99.99% per-step accuracy, which keeps overall accuracy around 99% over 100 steps, agents will remain incredible to demo but impossible to deploy.

All calculations above assume per-step independence, meaning each step has the same chance of succeeding regardless of what happened before it. This is a generous assumption, as most plans imply dependence across steps (step 5 needs step 4 to be done, meaning that step 5’s probability would be calculated as P(step5|step4), which would require chaining conditional probabilities across all previous steps).

In other words, the reality is actually harder and more nuanced, and you can see accuracy drop much faster in between steps, which means these products are still fancy toys, nothing more.
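The compounding arithmetic above is easy to reproduce. The short sketch below assumes the same per-step independence discussed earlier; the function names are mine, chosen for illustration.

```python
def task_success(per_step: float, steps: int) -> float:
    """Probability that every one of `steps` independent steps succeeds."""
    return per_step ** steps


def required_per_step(target: float, steps: int) -> float:
    """Per-step accuracy needed to hit `target` task accuracy over `steps` steps."""
    return target ** (1 / steps)


for per_step, steps in [(0.95, 10), (0.99, 100), (0.9999, 100)]:
    print(f"{per_step:.2%} per step over {steps} steps -> {task_success(per_step, steps):.1%}")

needed = required_per_step(0.99, 100)
print(f"Per-step accuracy needed for 99% over 100 steps: {needed:.4%}")
```

Because the relationship is exponential in the number of steps, small per-step gains matter enormously for long plans, and longer plans demand accuracy levels that grow ever closer to 100%.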

CHATGPT

Sam Altman Announces o3-mini

On the same day, Sam Altman also announced the release of o3-mini to free and paid tiers. This puts yet another state-of-the-art Large Reasoner Model (LRM) in our hands, with paid tiers having less restrictive usage limits.

TheWhiteBox’s takeaway:

If you’re wondering why OpenAI released o3-mini so quickly, the answer is just below in this newsletter: DeepSeek has released its r1 model, which is as powerful as it gets while being free to use (or very cheap to run in the cloud).

That’s why we should protect open-source software at all costs. It forces companies to compete, allowing users to access powerful features faster and at more competitive prices.

AGENTS

Perplexity Fires Back

The same day OpenAI released Operator, Perplexity expanded its platform's agent capabilities by releasing Perplexity Assistant, a product with a similar goal to Operator: performing computer-based actions on our behalf.

TheWhiteBox’s takeaway:

Curiously, the CEO’s announcement refers to the assistant as ‘calling other apps’, indicating that this model uses APIs as its main tool access method.

While this should increase robustness (APIs are more straightforward to work with than computer interfaces), it limits the number of apps this assistant can use. By contrast, while OpenAI’s Operator favors some apps through its plug-ins, OpenAI clarified that it should be able to use any interface.

One way or another, as mentioned earlier, I remain skeptical; I believe the tasks these assistants can currently execute aren’t powerful at all.

HEALTHCARE

Finally, a 3D Generator That Works… And That You Can Use

Microsoft Research has released a powerful 3D model that takes images and transforms them into 3D renders, which can also be adapted.

It’s a really cool project you can try for yourself here (my dog isn’t that fat, by the way, and doesn’t have two tails, which I assume you know isn’t accurate either). Still, these models are getting better pretty fast, and the latency was actually very small.

OPEN-SOURCE

Meta’s GenAI Teams Enter ‘Panic Mode’

According to a viral anonymous tip, Meta’s Generative AI team seems to be in full panic mode over DeepSeek’s recent model releases. The latest and most impressive of these is this week’s ‘Trend of the Week,’ which is why I chose it as this week’s biggest news.

As shown in the screenshot, Meta sees its leadership in the open-source AI space being contested by Chinese labs like Alibaba, but especially DeepSeek, and the teams in Meta are reportedly copying everything they can from DeepSeek’s playbook.

TheWhiteBox’s takeaway:

Who can blame them? DeepSeek is literally firing on all cylinders. Worse still, they aren’t only surpassing the quality of Meta’s models, but they are doing so in a much more frugal way.

For comparison, DeepSeek v3 required roughly ten times less pretraining compute than Llama 3.1 405B (4e24 vs 3.8e25 FLOPs, according to EpochAI), which means the training costs are several times lower (DeepSeek claims less than $6 million for DeepSeek v3, which is unheard of).

Based on the tip, Meta’s Generative AI efforts have led to an oversized, bloated team (highly typical of US corporations). This may have been one reason behind Zuck’s recent commitment to layoffs, while DeepSeek remains a much smaller team.

To make matters worse, DeepSeek finally has an LRM on the market, R1 (explained below), while Meta’s efforts in this area are nonexistent to the public eye. Meta’s monetization strategy for GenAI depends on them being the main platform for open-source, a throne they are losing as we speak.

Not great news for them and their investors.

TRUMP ADMINISTRATION

Blackstone Invests $300 Million into DDN

DDN has announced a $300 million strategic investment from Blackstone, valuing the company at $5 billion.

This partnership aims to enhance DDN’s enterprise AI infrastructure and accelerate its growth. The investment will be utilized to advance DDN’s AI product development, expand global marketing efforts, and strengthen channel partnerships. With over two decades of experience in data intelligence, DDN has achieved a fourfold increase in AI revenue while maintaining high profitability (according to them, of course).

But the question is, why is smart money going crazy for AI data and hardware?

TheWhiteBox’s takeaway:

While AI labs steal the spotlight in mainstream media, it’s in data and hardware where most deals are made. Especially regarding infrastructure, being the company that figures out how to run AI workloads in a scalable and profitable manner is a one-way ticket to doubling your valuation.

While AI advances in raw terms (it’s getting smarter), it’s definitely not getting easier to profit from; if anything, the latter has become more challenging.

While open-source continues to commoditize the cost of intelligence to the consumer ($ per token), the costs of running AI inference are increasing. Under the very reasonable assumption that companies run LRMs at a $-per-token loss, this loss grows larger every time an LRM is run (which is why OpenAI’s o1 continues to be rate-limited in all tiers).

TREND OF THE WEEK

DeepSeek r1, The Model That Scared Everyone

DeepSeek is the gift that keeps on giving. This week, they released DeepSeek r1, their first reasoning model, which matches or exceeds the performance of OpenAI’s o1 model.

But this research is so good that this amazing result is the least important part of the entire release, as:

They break every possible rule in the LLM book, sending a clear message to incumbents: it’s all about compute; there is no tech moat.

They released a suite of Small Language Models (SLMs) distilled from r1 that take even the smallest ones to frontier-level territory.

They even have the time to criticize many frontier research papers published by OpenAI and others.

And the most impressive thing? They proved that reasoning can “emerge” from AIs without human help, raising serious questions about the interpretability of these ‘things’ and forcing us to ask whether we ever understood these models in the first place… and even whether we should actually create them.

Let’s cover everything here today.

A SOTA Reasoning Model

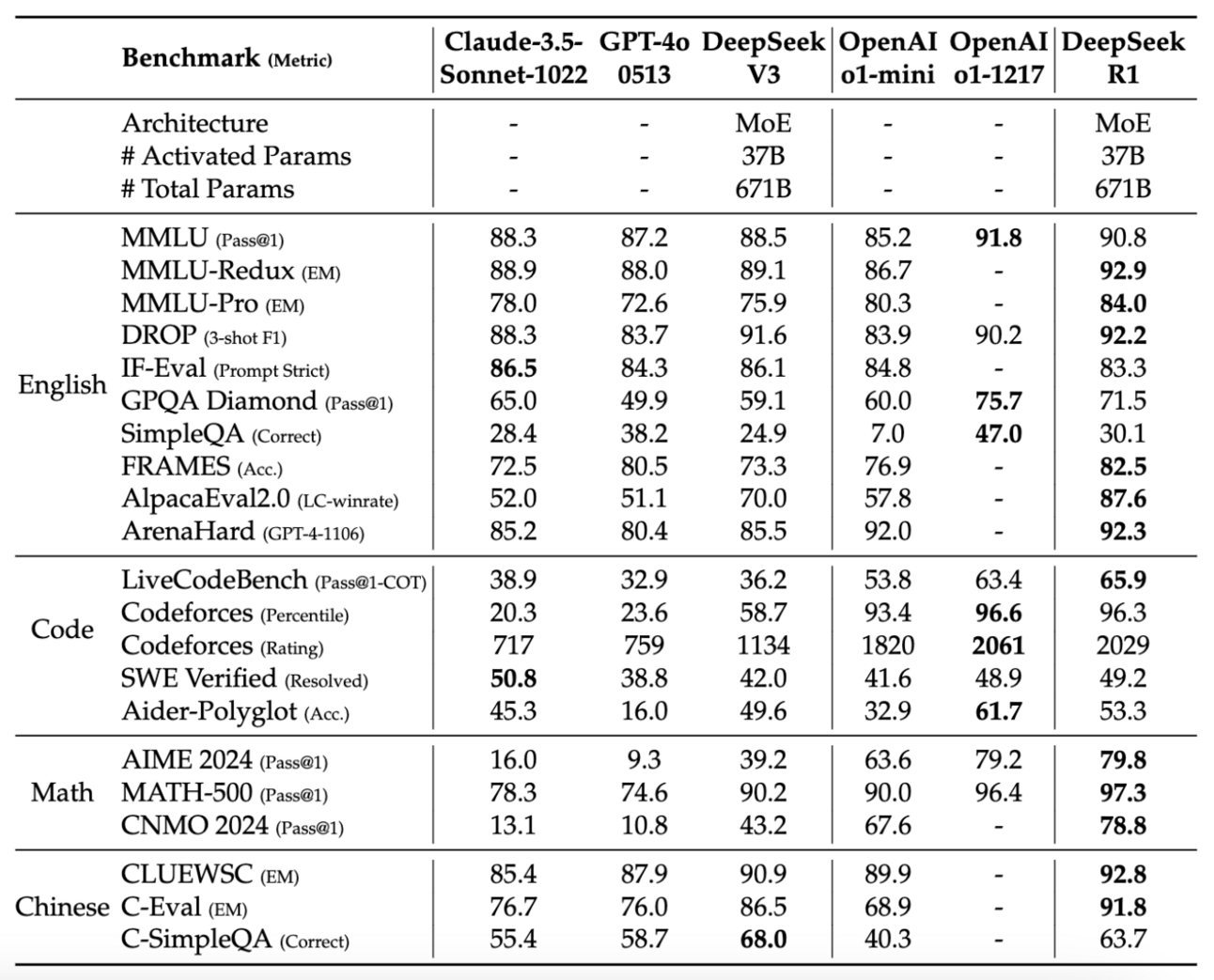

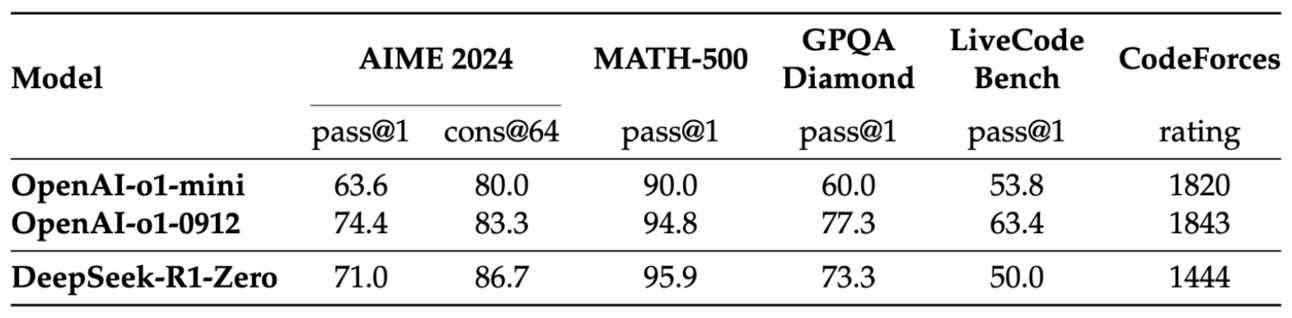

In simple terms, DeepSeek r1 is an open-weights model (the dataset isn’t published, so we can’t consider it fully open-source despite its MIT license, one of the most permissive open-source licenses) that reaches state-of-the-art performance on multiple reasoning benchmarks, matching or exceeding o1 in most of them.

In other words, OpenAI’s leadership in the Large Reasoner Model (LRM) arena, already in question with Gemini 2.0 Flash Thinking, has been entirely erased by now (until o3 gets released and proves its allegedly superior performance).

An actual work of art

Across the most popular benchmarks, r1 matches or outcompetes o1 in areas like maths (AIME), code (SWE-bench and Codeforces), and knowledge (MMLU, GPQA).

When compared with the best Large Language Models (LLMs) like Claude 3.5 Sonnet, although not really a fair comparison, DeepSeek-r1 blows everyone out of the water:

Crucially, DeepSeek also introduces several modifications to the Transformer architecture (some inherited from DeepSeek v3), which are summarized below but explained in full detail in a Notion piece I will publish this Sunday:

R1 is a mixture-of-experts model with shared experts (experts that always activate) and fine-grained experts, so roughly 95% of the network doesn’t activate during inference, saving massive overhead in cost and latency.

They use multi-head latent attention, a technique that compresses keys and values by leveraging the low-rank nature of Transformer latents. This reduces the KV Cache considerably, allegedly with little to no impact on performance.

They use multi-token prediction. Each R1 prediction predicts three tokens instead of just one by adding two additional module heads.
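To illustrate the first item in the list above, here is a minimal, pure-Python sketch of a mixture-of-experts layer with one always-on shared expert and top-k routing. It is a generic textbook formulation under my own assumptions (scalar inputs, toy experts, softmax gating, `top_k=2`), not DeepSeek’s actual router.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_layer(x, shared_expert, routed_experts, router_scores, top_k=2):
    """Run the shared expert plus only the top_k highest-scoring routed experts."""
    gates = softmax(router_scores)
    chosen = sorted(range(len(gates)), key=lambda i: gates[i], reverse=True)[:top_k]
    norm = sum(gates[i] for i in chosen)
    output = shared_expert(x)  # the shared expert always runs
    for i in chosen:
        # Routed experts contribute in proportion to their renormalized gate value.
        output += (gates[i] / norm) * routed_experts[i](x)
    return output, chosen


# Toy setup (hypothetical): 8 routed experts that just scale the input.
routed = [lambda x, w=w: w * x for w in range(1, 9)]
shared = lambda x: x
scores = [0.0, 0.0, 5.0, 0.0, 0.0, 5.0, 0.0, 0.0]  # in a real model, produced by a learned router

out, used = moe_layer(2.0, shared, routed, scores)
active_fraction = (1 + len(used)) / (1 + len(routed))
print(out, used, f"{active_fraction:.0%} of experts ran")
```

In this toy, only 3 of the 9 experts run per token; scale the same idea to many fine-grained experts and the inactive share of the network approaches the figure quoted in the list.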

Overall, DeepSeek r1 is not only immensely powerful, but it also runs at a considerable price and speed discount compared to models like o1, which is reflected in its pricing. While OpenAI’s o1-preview model is priced at $15 per million input tokens and $60 per million output tokens, DeepSeek’s V3 model, which underpins R1, is priced at $0.90 per million input tokens and $1.10 per million output tokens, roughly 17 and 55 times cheaper than OpenAI’s flagship model.

Thus, while a naive performance comparison makes them look similar, if we factor in efficiency, DeepSeek-R1 is considerably more “intelligence-efficient” than o1.
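For readers who want to plug in their own workloads, the sketch below turns the per-million-token prices quoted above into a cost comparison. The prices are the ones stated in the text; the dictionary keys and the example workload are my own assumptions.

```python
# USD per million tokens as (input, output), taken from the figures quoted in the text.
PRICES = {
    "o1-preview": (15.00, 60.00),
    "deepseek-v3": (0.90, 1.10),
}


def job_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Cost in USD of a job with the given token counts."""
    in_price, out_price = PRICES[model]
    return (input_tokens * in_price + output_tokens * out_price) / 1_000_000


def price_ratio(expensive: str, cheap: str) -> tuple:
    """How many times pricier `expensive` is than `cheap`, for input and output tokens."""
    (exp_in, exp_out), (cheap_in, cheap_out) = PRICES[expensive], PRICES[cheap]
    return exp_in / cheap_in, exp_out / cheap_out


# Hypothetical workload: 2M input tokens and 1M output tokens.
for model in PRICES:
    print(model, f"${job_cost(model, 2_000_000, 1_000_000):.2f}")

in_ratio, out_ratio = price_ratio("o1-preview", "deepseek-v3")
print(f"input: {in_ratio:.1f}x, output: {out_ratio:.1f}x")
```

Since reasoning models emit long chains of thought, the output-token ratio tends to dominate the bill in practice.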

It’s the king now.

But there is no better testament to r1’s prowess than the next surprise DeepSeek had for us.

Distillation is the answer

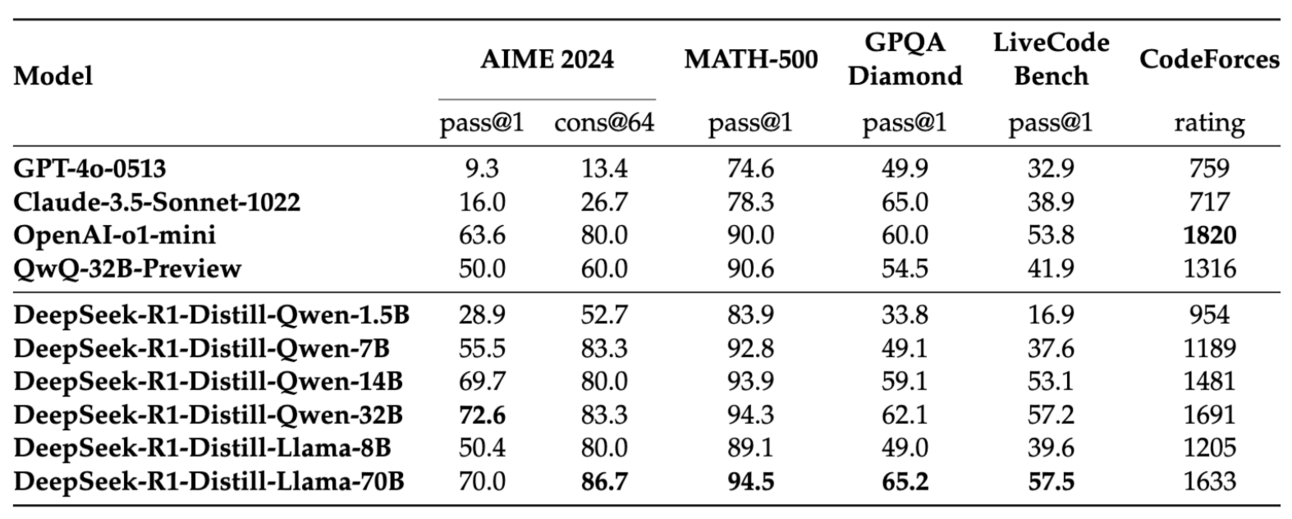

Besides releasing r1 and Zero (more on that in a minute), they also released a set of Qwen and Llama distillations at various sizes, going from 1.5B to 70B, based on Alibaba’s Qwen 2.5 models and Meta’s Llama 3 models.

But what does that even mean?

In simple terms, they’ve taken these smaller models and retrained them on R1’s data via distillation, a method we’ve discussed multiple times. Distillation is a learning technique that teaches smaller models to imitate a larger model’s responses without the drawbacks of the large model (size, cost, latency, etc.).

Think of them as Pareto-optimized models that give you 80% of the performance of the larger model for 20% of the cost.
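As a rough picture of what retraining on the teacher’s data involves, the sketch below builds a fine-tuning set from a teacher’s responses. The `toy_teacher` function is a hypothetical stand-in for R1, and the fine-tuning of the student on the resulting pairs is deliberately left out. Other flavors of distillation match the teacher’s output probabilities instead; this sketch covers only the imitate-the-responses variant described above.

```python
from typing import Callable, Dict, List


def build_distillation_set(
    prompts: List[str], teacher: Callable[[str], str]
) -> List[Dict[str, str]]:
    """Collect (prompt, teacher response) pairs for the student to imitate."""
    return [{"prompt": p, "completion": teacher(p)} for p in prompts]


def toy_teacher(prompt: str) -> str:
    """Hypothetical teacher: answers 'a+b' prompts with a short reasoning trace."""
    a, b = (int(part) for part in prompt.split("+"))
    return f"<think>{a} plus {b} is {a + b}</think>{a + b}"


dataset = build_distillation_set(["2+3", "10+7"], toy_teacher)
for example in dataset:
    print(example)
# A student model would then be fine-tuned on these pairs (not shown here).
```

The student never sees the teacher’s weights, only its outputs, which is why the approach works across model families like Qwen and Llama.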

And the results? Well, amazing. As seen below, these distilled models reach state-of-the-art LLM performance relative to their sizes, generally surpassing the performance of models two or three times their respective sizes.

For a brutal comparison, DS-r1-Qwen-1.5B, a model small enough to fit on your iPhone, has better performance in maths than GPT-4o or Claude 3.5 Sonnet.

As seen below, I tried the 1.5B and 7B versions on my M1 MacBook, and these models are scarily smart for their size.

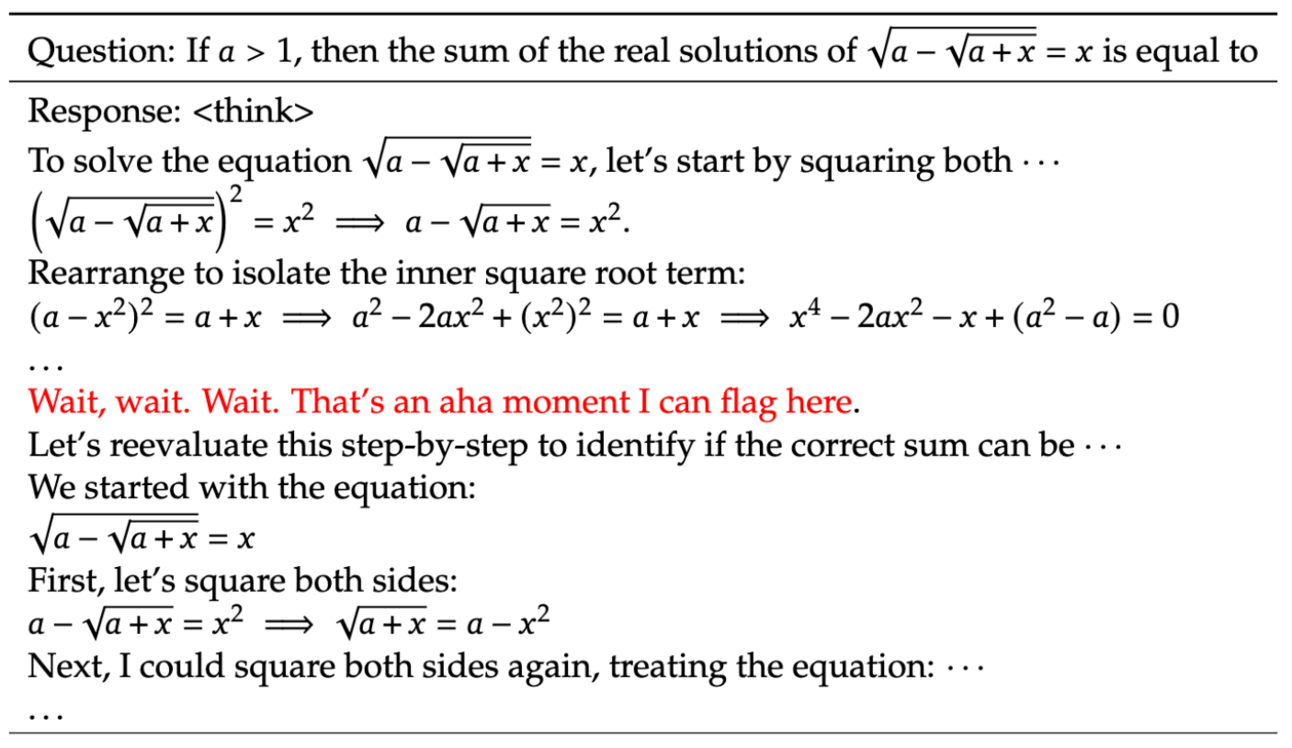

However, as you may notice, they are obnoxiously wordy during the reasoning phase (the text enclosed by <think></think> tags) despite handling pretty straightforward tasks, which also gives a clear view of how LRMs produce many more tokens (sometimes unnecessarily) than LLMs.

As a recommendation for those looking to implement these models, I strongly suggest hiding the chain of thought from the user by masking any token inside the <think> tags, or providing a summary instead, as ChatGPT does.
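A minimal way to do that masking is a regular expression applied to the complete response before display. This is a sketch that assumes the response is fully generated and the tags are well-formed; handling streamed or unclosed tags would need extra logic. The `hide_reasoning` name is mine.

```python
import re

# Non-greedy match so multiple <think> blocks are removed independently;
# DOTALL lets the reasoning span several lines.
THINK_BLOCK = re.compile(r"<think>.*?</think>\s*", flags=re.DOTALL)


def hide_reasoning(response: str) -> str:
    """Strip every <think>...</think> block and return only the user-facing answer."""
    return THINK_BLOCK.sub("", response).strip()


raw = "<think>The user asks 2+2.\nThat is 4.</think>\nThe answer is 4."
print(hide_reasoning(raw))
```

If you prefer to show a summary rather than nothing, the matched block can be captured and passed to a summarizer instead of being discarded.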

Fascinatingly, they also made an amazing discovery: distillation is better than training the smaller models for longer. This proves that size still matters and that the patterns larger models pick up on, which are then distilled into the smaller models, aren’t picked up by smaller models on their own no matter how long they are trained. If we thought distillation was powerful, we were actually underestimating it.

But aside from all the technological advancements, DeepSeek-R1’s most significant breakthrough is the training method itself.

Just RL’ It.

If Nike’s slogan is ‘Just Do It,’ after this release, AI’s slogan should be ‘Just RL’ It,’ because the most remarkable discovery of this paper is how R1 was trained, breaking every preconception we had until now.

No Fancy Hacks

As we explored LRMs, we discussed their three main components: generator, verifier, and search algorithm. The first generates thoughts, the second evaluates their quality, and the third allows the system to search multiple chains of thought.

For instance, with o3, we assume it’s a very complex system, where many surrogate model verifiers and coding and math environments evaluate a model’s outputs every step, even allowing for search over multiple solution paths, quickly increasing costs.

However, DeepSeek takes a much simpler approach: no fancy verifiers, no search, just pure Reinforcement Learning. In other words, they took DeepSeek v3, the LLM they presented a few weeks ago, and trained it with a dataset of complex reasoning tasks whose answers can be verified automatically (that is, data where we can automatically check whether the model’s outputs are correct or not).

And that’s… pretty much it.

Put another way, the team didn’t have to create a hugely complex reasoning dataset to teach the model ‘how to think’, add multiple complex components to verify ever-more-complex problems, or engage the model in complex and costly self-improvement processes. Far from it: they simply gathered a large set of questions with verifiable answers and let the model discover how to solve problems by itself.
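To show what ‘verifiable automatically’ can look like in practice, here is a minimal rule-based reward sketch. The exact-match rule, the use of the `</think>` tag to locate the final answer, and the function names are my assumptions for illustration; the text above doesn’t specify DeepSeek’s actual checks.

```python
def final_answer(output: str) -> str:
    """Return whatever follows the last </think> tag (the whole text if there is none)."""
    return output.rsplit("</think>", 1)[-1].strip()


def accuracy_reward(output: str, reference: str) -> float:
    """1.0 if the final answer matches the known-correct reference, else 0.0."""
    return 1.0 if final_answer(output).lower() == reference.strip().lower() else 0.0


print(accuracy_reward("<think>17 * 3 = 51</think> 51", "51"))
print(accuracy_reward("<think>17 * 3 = 52?</think> 52", "51"))
```

No learned verifier is involved: the reward only looks at whether the final answer is right, and leaves the model free to reason however it likes in between.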

The question, then, is: if we aren’t teaching the model how to reason, how does it learn to reason?

It’s Actually Simple.

Well, the answer is as simple as it gets: trial and error.

Humans go to school from a very young age to learn the basic principles of each science because our short lifespans and low trial-and-error frequency (how many guesses we can make at a problem before finding an answer) prevent us from figuring everything out for ourselves by trial and error. AIs, by contrast, can parallelize their thoughts.

This means that we can create an “infinitely scalable” trial-and-error flywheel in which the model tries stuff out and verifies it until it finds good answers and uses these as feedback to improve.
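The flywheel can be illustrated with a toy: a ‘model’ that holds a preference score for each of ten candidate answers, samples a batch of attempts, checks them against a verifier, and nudges its preferences toward attempts that beat the batch average. This is a deliberately simplified, REINFORCE-flavored update of my own choosing, not DeepSeek’s training algorithm, and all names and hyperparameters are hypothetical.

```python
import math
import random


def train_by_trial_and_error(
    target: int,
    n_answers: int = 10,
    rounds: int = 50,
    samples_per_round: int = 16,
    lr: float = 0.5,
    seed: int = 0,
) -> list:
    """Learn which answer is correct purely from verified attempts."""
    rng = random.Random(seed)
    prefs = [0.0] * n_answers  # higher preference means sampled more often
    for _ in range(rounds):
        weights = [math.exp(p) for p in prefs]
        # Sample a whole batch of attempts at once: the "parallel thoughts".
        attempts = rng.choices(range(n_answers), weights=weights, k=samples_per_round)
        rewards = [1.0 if a == target else 0.0 for a in attempts]  # automatic verifier
        baseline = sum(rewards) / len(rewards)
        for attempt, reward in zip(attempts, rewards):
            # Reinforce attempts that did better than the batch average.
            prefs[attempt] += lr * (reward - baseline)
    return prefs


prefs = train_by_trial_and_error(target=7)
best = max(range(len(prefs)), key=lambda i: prefs[i])
print("Most preferred answer:", best)
```

Nobody tells the toy model which answer is right or how to find it; it only receives a correct-or-not signal, and the correct behavior is reinforced over successive rounds.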

Fascinatingly, this led to the model figuring out for itself several crucial features of human reasoning, like reflection and backtracking when making a mistake:

Also, it figured out inference-time compute by itself. In other words, the model realized that to get better answers, it had to think for longer. This was proven by the fact that, over time, the model’s responses grew in length, unsurprisingly increasing performance.

Again, let me insist: these inductive biases, assumptions that we would generally introduce to the model to make it reason better (like ‘model, please generate more tokens, as that will increase your chances’), were not introduced by the research team but emerged inside the model.

To emphasize this point, they even trained a model with no prior reasoning training, which they called Zero. This model reached insane performance on reasoning benchmarks (using the previous method) but couldn’t be released because its chains of thought were unintelligible to humans, even when the final answer was correct.

An ‘alien’ model with human reasoning capabilities.

Therefore, did DeepSeek find a way to train AIs to reason in non-human ways and get too scared to release it?

If we are having trouble comprehending the behavior of AIs that speak like us, it would be interesting to see AIs reaching (or reasonably imitating) human-level reasoning while engaging in thoughts we humans can’t even understand. AI doomers will thrive in social media with this idea alone.

So, What Now?

The consequences of this release are enormous in many aspects.

AI progress

With R1, we can challenge the current status quo. In a way, DeepSeek is telling everyone that we should go back to the basics, as The Bitter Lesson first suggested, and that humans should remove themselves from the process and let computers compute and figure things out.

Markets

This release will scare the living daylights out of the US Government, reinforcing projects like OpenAI’s $500 billion four-year investment plan.

As the US is just stepping up its GPU controls over China, the fact that ‘compute is the only thing that matters,’ as suggested even by Chinese labs, signals that these export restrictions won’t be dropped anytime soon. But don’t think China isn’t doing its thing, too, as ByteDance is reportedly investing $12 billion this year alone into buying chips ($5.5 billion of which are going to Chinese chips).

Overall, DeepSeek’s results impact all AI companies in some shape or form:

This is also great news for NVIDIA, which now has more reasons than ever to position itself as key to AI progress despite the base model having been trained in such a frugal way.

This should be great for Apple, which can now take advantage of R1’s MIT license to distill its performance into its own models (or even use DeepSeek’s 1.5B Qwen 2.5 distillation for Apple Intelligence).

This is terrible news for OpenAI/Anthropic/Google, who see how their technological moat is erased again. How does one win a market where no money is made as the product is commoditized every few weeks, while the go-to-market costs are utterly insane? For the moment, as a response to this release, OpenAI is releasing o3-mini today, probably as a way to retain the narrative that they are ahead.

And this is terrible, terrible news for Meta, as they are seeing themselves lose their narrative over the best open-source lab.

But today's biggest lesson is that you can’t beat open-source, so AI alone can't be the way to victory.

Thus, what will make private companies like OpenAI win?

Integrations to other products? Easily replicable.

Regulatory capture by banning open-source? Not enforceable; China will continue to drive open-source progress.

Compute advantage? That’s probably what the US sees as the best bet, and, without a doubt, the most significant competitive advantage between companies. However, at the geopolitical level, China could very easily kill the entire semiconductor market tomorrow simply by invading Taiwan and halting chip production.

As an investor, all roads seem to lead to hardware and data companies and Hyperscalers; I wouldn’t want to be an AI software investor right now.

THEWHITEBOX

Join Premium Today!

If you like this content, by joining Premium, you will receive four times as much content weekly without saturating your inbox. You will even be able to ask the questions you need answers to.

Until next time!


For business inquiries, reach out to me at [email protected]