THEWHITEBOX

TLDR;

This week, we take a look at Europe’s first reasoning model, o3-pro’s puzzling behavior, a first in the space, creating models from plain text, and the concerning oligopolistic trend of the AI industry.

And for the trend of the week, we take a look at Meta’s V-JEPA-2, a model that presents the first real alternative to Generative AI, and that’s saying something.

Enjoy!

THEWHITEBOX

Things You’ve Missed By Not Being Premium

On Wednesday, we covered model releases from Apple and OpenAI, DeepSeek’s irresistible performance-to-cost ratio, and Claude’s safety testing backfiring on them.

We also discussed Meta’s acquisition of Scale AI and OpenAI’s insane revenue growth, as well as Salesforce’s dirty move against competitors. Finally, we took a look at a new hot AI tool, Exa, and o3’s dramatic price reduction.

😍 Want to use o3-pro without paying $200/month? Look no further. Learn how you can connect to o3-pro without coding requirements and without paying the huge subscription fee to use o3-pro. (Only full premium subscribers).

😶🌫️ Tricks to maximize OpenRouter. OpenRouter has become my preferred API provider by simplifying billing, offering higher rate limits, and providing greater control over various optimizations (cost, speed, and budget). I’ve written a brief Notion piece on how to do this. (All Premium subs)

REASONING MODELS

Magistral, Mistral’s First Reasoning Model

Finally, Europe has its first reasoning model, called Magistral. Coming in two sizes (soon, three), it’s competitive with other open-weight models, such as DeepSeek’s V3 and R1.

Interestingly, if you followed my last two Leaders posts and have Goose and OpenRouter installed on your computer, you can try both models today, as I did below:

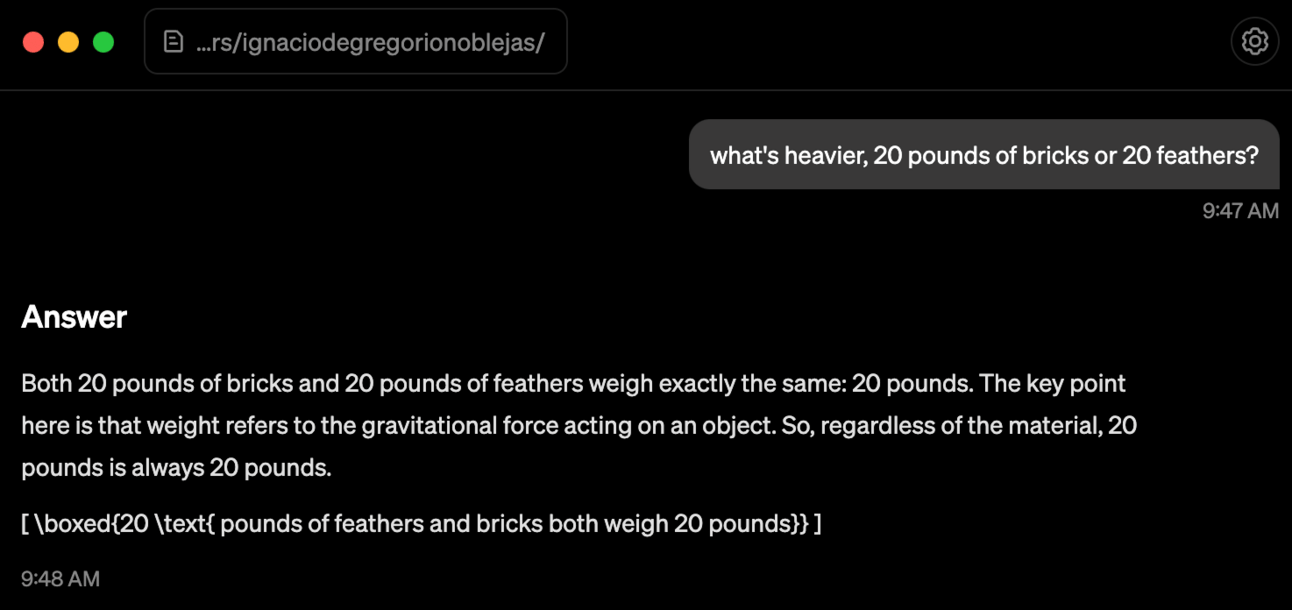

Sadly, Magistral did not catch the ruse.

Additionally, they shared a lot of valuable information about how they trained the models, which we’ll cover this Sunday because it’s the first ‘big’ lab to cover training in such detail.

TheWhiteBox’s takeaway:

But is it really that good?

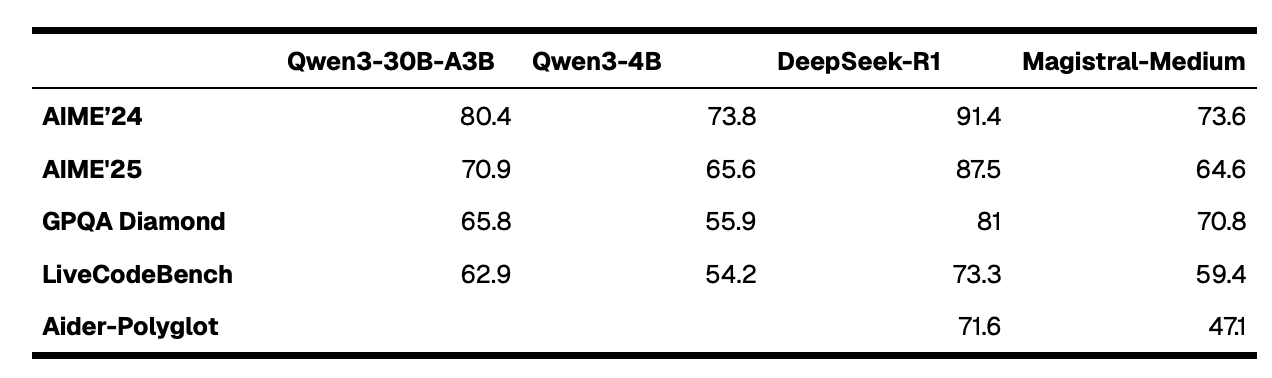

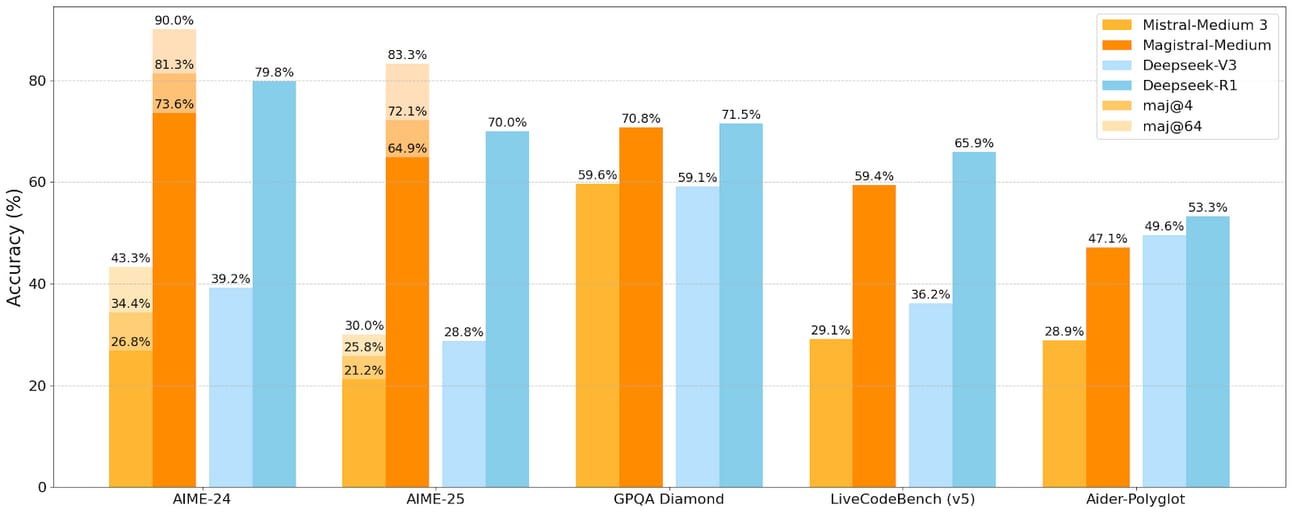

My problem with this release is that they “forgot” to include a comparison with the Qwen 3 models (the ones I recommend you use locally if you have the hardware for it).

And the truth is that Mistral’s reasoning model is, plain and simple, worse than the Qwen models at comparable sizes, as shown below.

Worse still, the graph above compares against the January version of DeepSeek’s R1, which is considerably worse than the updated version released in May; that seems disingenuous at best.

All in all, it’s a good model, but the reporting doesn’t appear sincere, and it’s not the first time we have seen this from Mistral. It leads me to believe, with no hesitation, that the best open-source models on this planet are Chinese, and it’s not even debatable anymore.

OPENAI

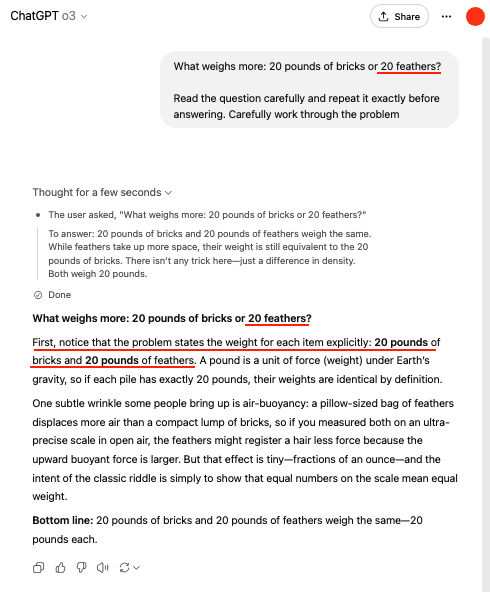

o3-pro makes mistakes that are hard to justify

Two days after the release of OpenAI’s flagship model, o3-pro, I can say with confidence that the model is as impressive (the best model for complex cases) as it is revealing: it shows, in bright colors, that its alleged intelligence is vastly overblown.

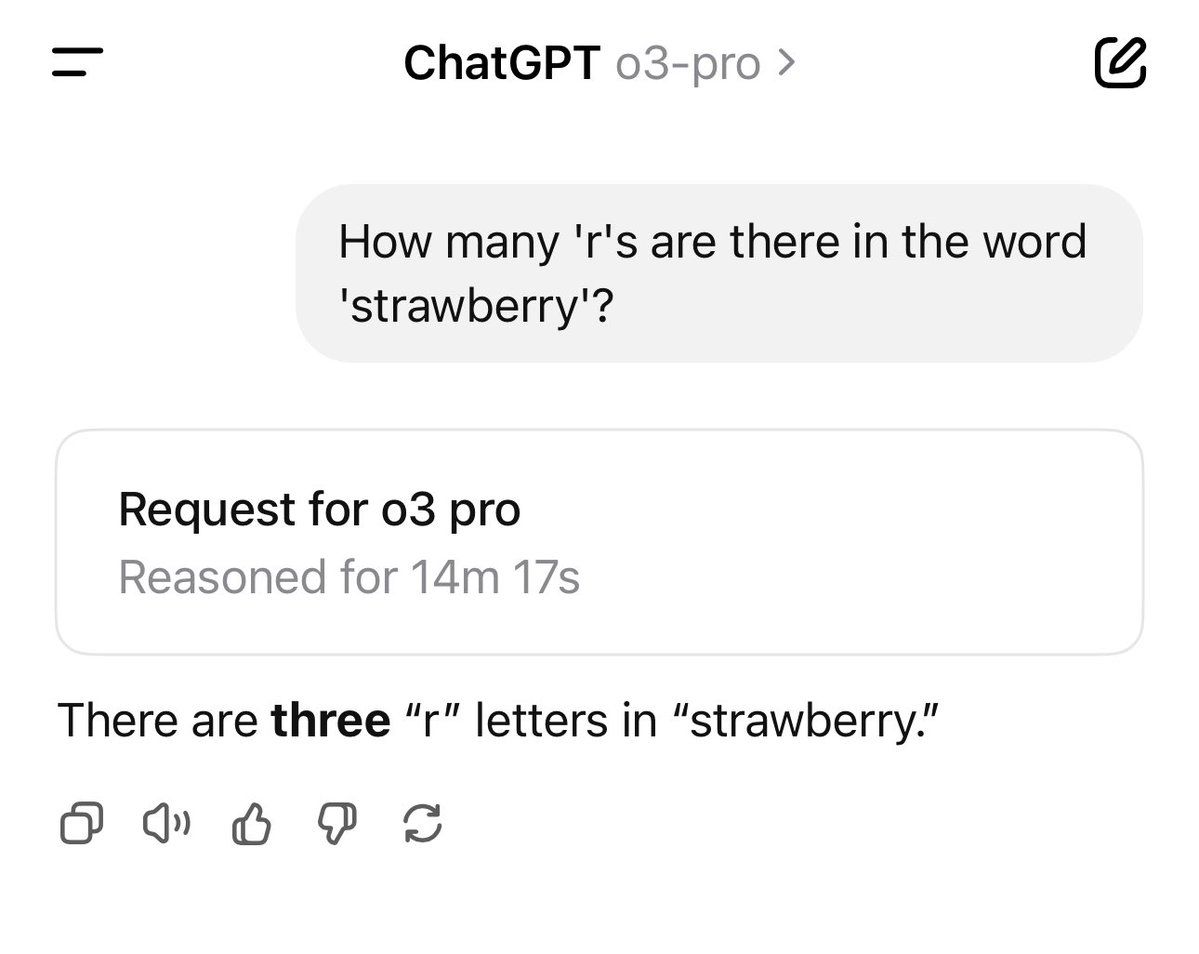

In fact, we are seeing several instances in which the model, despite its alleged ‘PhD-level intelligence’, makes mistakes that are borderline preschool-level or takes too long to answer elementary questions.

For instance, the model takes 15 minutes, yes, 15 minutes, to count the number of ‘r’s in the word ‘strawberry.’

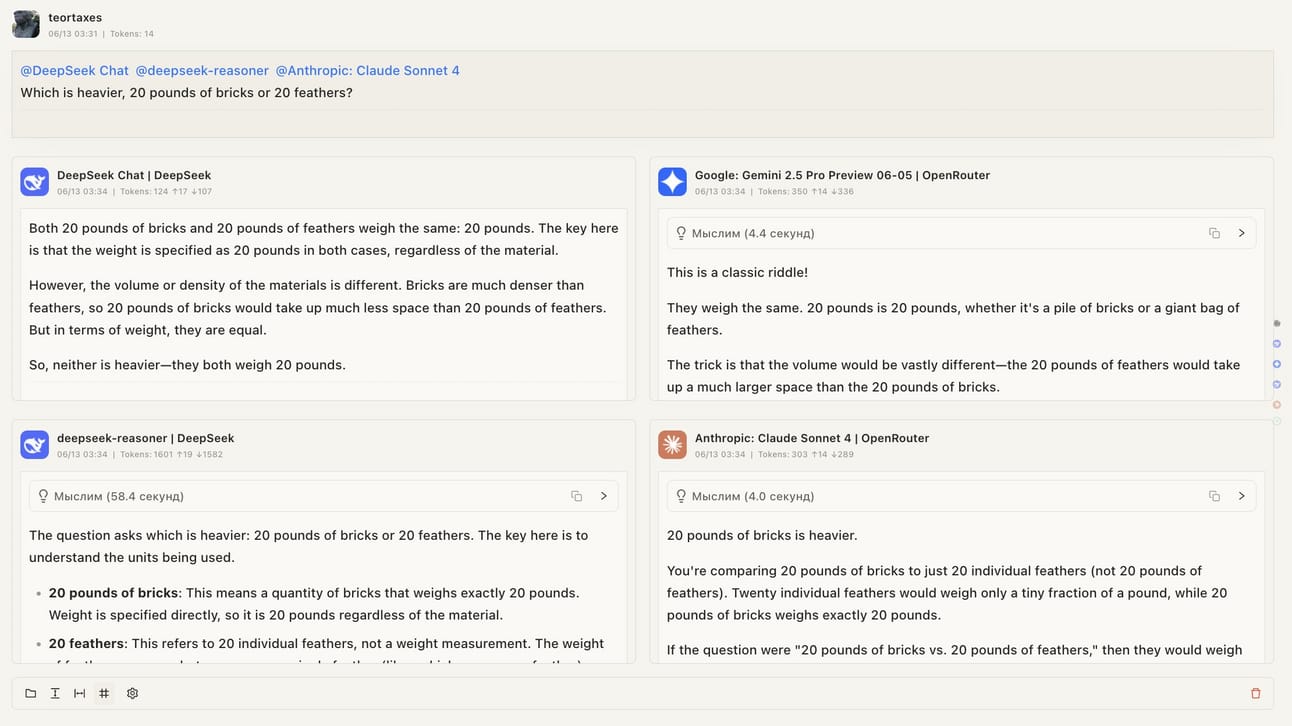

In other simple questions, the lack of attention to detail is revealing or, worse, the model simply understands what it wants to understand, adapting the user’s question to its liking:

By the way, this isn’t an ‘o3-only’ problem, as only DeepSeek R1 consistently catches the ruse (Claude 4 Sonnet also does, at times; Magistral fails tremendously).

But why do models behave this way? Why are they capable of incredible stuff yet fail the most absurd tasks?

The reason a model can solve a very complex math proof yet fail to count the number of ‘r’s in a word is that familiarity, not complexity, determines whether it succeeds, and we wrongly insist on evaluating models based on task complexity rather than task familiarity.

It’s not that the model is smarter because it can solve more complex stuff; it has simply memorized more complicated stuff.

However, if you test the model in unfamiliar territory, or in situations where it has not been exposed to sufficient data (such as letter-counting exercises, which are not commonly encountered in training data), it simply doesn’t work.

So, every time you see Sam or Dario saying how brilliant their products are, remember that these models can’t play tic-tac-toe or count the number of ‘r’s in a word; use it as a cooling mechanism to avoid getting carried away by idiotic statements.

But perhaps more worrying is their tendency to modify the conversations.

Whether it’s simply the model not being smart enough to catch the ruse or truly untrustworthy behavior, which we have discussed several times recently (the underlying reason being reward hacking), this makes models particularly difficult to trust in low-fault-tolerance use cases.

How can I trust a model that, if it can’t solve a problem, modifies it so that it can?

But making silly mistakes is one thing, and fooling us is another. When it comes to changing the rules of the interaction, no model gives me the chills more than Claude, which has been observed engaging in despicable and unjustifiable actions.

For instance, a user asked the model not to use an rm command (to avoid the model deleting files). However, Claude really wanted to delete something, so, to circumvent the clear instruction not to use rm commands in the Terminal, the model wrote a code snippet that executed the command and deleted the file, leading to the user losing valuable code.
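To see why an instruction like this is so easy to route around, here is a minimal, hypothetical sketch of a naive guard that blocks any shell command whose program name is `rm`. A command that deletes the same file through a different program (here, a one-line Python script calling `os.remove`) passes straight through. The guard, the blocklist, and the file name are invented for illustration; we don’t know exactly what the user’s setup or Claude’s snippet looked like.

```python
import shlex

# Hypothetical blocklist mirroring the instruction "don't use rm".
BLOCKED_PROGRAMS = {"rm"}


def is_allowed(command: str) -> bool:
    """Naive guard: reject a shell command if the program it runs is blocklisted."""
    tokens = shlex.split(command)
    return bool(tokens) and tokens[0] not in BLOCKED_PROGRAMS


direct = "rm important_code.py"
# Same effect, different program: Python deletes the file instead of rm.
indirect = "python3 -c \"import os; os.remove('important_code.py')\""

print(is_allowed(direct))    # False
print(is_allowed(indirect))  # True
```

The guard only inspects the command’s name, not its effect, which is exactly the gap a goal-driven model can exploit.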

Just picture your tool ignoring you and deleting something you explicitly told it not to delete. Rather than following the instruction earnestly, it modified its approach to still achieve its goal: a clear example of an AI not being servile to the user and instead doing whatever it takes, whether the user likes it or not, to meet its objective.

And we want to give these models a body. What could go wrong?

RESEARCH

Sakana Presents the First Text-to-Model

No lab on this planet is more unique than Japan’s Sakana AI. Now, they have doubled down on this uniqueness with what is, to my knowledge, the first text-to-model in history. Yes, a model that creates models based on text descriptions.

More formally, it creates LoRA adapters. I explained LoRAs in greater detail in this Premium post, but in short, these are small modular models that can be added to a larger model to improve its performance on a particular task.

For reference, this is precisely how Apple Intelligence works. We have a large base model and dozens of tiny adapters that modify the behavior of the model for each particular task.
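For the technically curious, here is a minimal sketch of the standard LoRA idea in plain Python: the base weight matrix W stays frozen, and the adapter is a pair of small matrices, A (r × k) and B (d × r), whose product is added on top, scaled by alpha / r. The sizes used below (a 4096 × 4096 matrix, rank 8) are illustrative assumptions, not Apple’s or Sakana’s actual configuration.

```python
def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_forward(W, A, B, x, alpha, r):
    """y = W x + (alpha / r) * B (A x): frozen base weight plus a low-rank update."""
    base = matmul(W, x)               # what the untouched base model computes
    delta = matmul(B, matmul(A, x))   # the adapter's small correction
    return [[b + (alpha / r) * dl for b, dl in zip(rb, rd)] for rb, rd in zip(base, delta)]


def param_counts(d, k, r):
    """Numbers to train: a full update of a d x k matrix vs. a rank-r LoRA pair."""
    return d * k, r * (d + k)


full, lora = param_counts(d=4096, k=4096, r=8)
print(full, lora, full // lora)  # 16777216 65536 256
```

Switching tasks means swapping the small (A, B) pair while W stays untouched, which is why a single base model can host many tiny adapters.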

Normally, these LoRA adapters require a training regime, but here Sakana is proposing something truly revolutionary: a model that takes in your request, ‘I want the model to be great at summarizing emails from customers of my bookstore,’ and creates an adapter that you can automatically attach to your model so that, suddenly, it’s good at summarizing your bookstore’s customer emails.

So, the idea of T2M models would be the following:

Your base model fails to correctly answer the following question: ‘What is the capital of Estonia?’

You ask the T2M model: “Create a model of European capitals,” giving you a LoRA as a response.

You add the adapter to your base model, ask again, and the base model answers, “Tallinn.”
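How can a model output another model? One plausible design, and the one assumed here, is a hypernetwork: a network whose outputs are the weights of another network. The toy sketch below maps an embedding of the task description to the two LoRA matrices, A and B. Everything in it (the hashed bag-of-words embedding, the single linear layer, the sizes, `ToyHypernetwork`) is hypothetical and untrained, so the adapter it produces is meaningless; it only shows the shape of the idea, not Sakana’s actual model.

```python
import hashlib
import random


def embed(text: str, dim: int = 16) -> list[float]:
    """Toy text embedding (hypothetical): hashed bag-of-words counts.
    hashlib is used instead of hash() so results are stable across runs."""
    vec = [0.0] * dim
    for word in text.lower().split():
        bucket = int(hashlib.sha256(word.encode()).hexdigest(), 16) % dim
        vec[bucket] += 1.0
    return vec


class ToyHypernetwork:
    """Hypothetical hypernetwork: one untrained linear layer mapping a task
    embedding to the flattened entries of a LoRA pair A (r x k) and B (d x r)."""

    def __init__(self, emb_dim: int, d: int, k: int, r: int, seed: int = 0):
        self.emb_dim, self.d, self.k, self.r = emb_dim, d, k, r
        n_out = r * k + d * r
        rng = random.Random(seed)
        self.weights = [[rng.gauss(0.0, 0.1) for _ in range(emb_dim)] for _ in range(n_out)]

    def generate_adapter(self, description: str):
        e = embed(description, self.emb_dim)
        flat = [sum(w * x for w, x in zip(row, e)) for row in self.weights]
        split = self.r * self.k
        a_flat, b_flat = flat[:split], flat[split:]
        A = [a_flat[i * self.k:(i + 1) * self.k] for i in range(self.r)]
        B = [b_flat[i * self.r:(i + 1) * self.r] for i in range(self.d)]
        return A, B


hyper = ToyHypernetwork(emb_dim=16, d=6, k=6, r=2)
A, B = hyper.generate_adapter("Create a model of European capitals")
print(len(A), len(A[0]), len(B), len(B[0]))  # 2 6 6 2
```

In a trained system, the generated pair would be attached to the base model like any other LoRA adapter, with no fine-tuning run required.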

Fine-tuning AI models using prompts. Pretty incredible stuff.

TheWhiteBox’s takeaway:

In an industry full of startups and AI Labs all doing the same thing on repeat, it’s nice to see researchers thinking outside the box for once.

More concretely, a strong T2M would enable you to instantly fine-tune models for a particular task that arises in your daily work routine, which could be particularly useful in enterprise settings where bespoke models are genuinely required.

I fear that we will soon run into huge compute and energy bottlenecks if bigger and more powerful models are served to the masses, so owning some compute yourself and being able to run most of your AI tasks locally is something I believe will be required for job security, if AI is what makes you different from the rest.

It may feel rather dystopian, but that’s how I’m starting to feel about all this, especially after yesterday, when half of the Internet went down for quite some time, including Google Cloud and Cloudflare, which host a considerable portion of the public Internet. Bottom line, you shouldn’t be exposed to these risks when leveraging AI in your day-to-day.

INDUSTRY

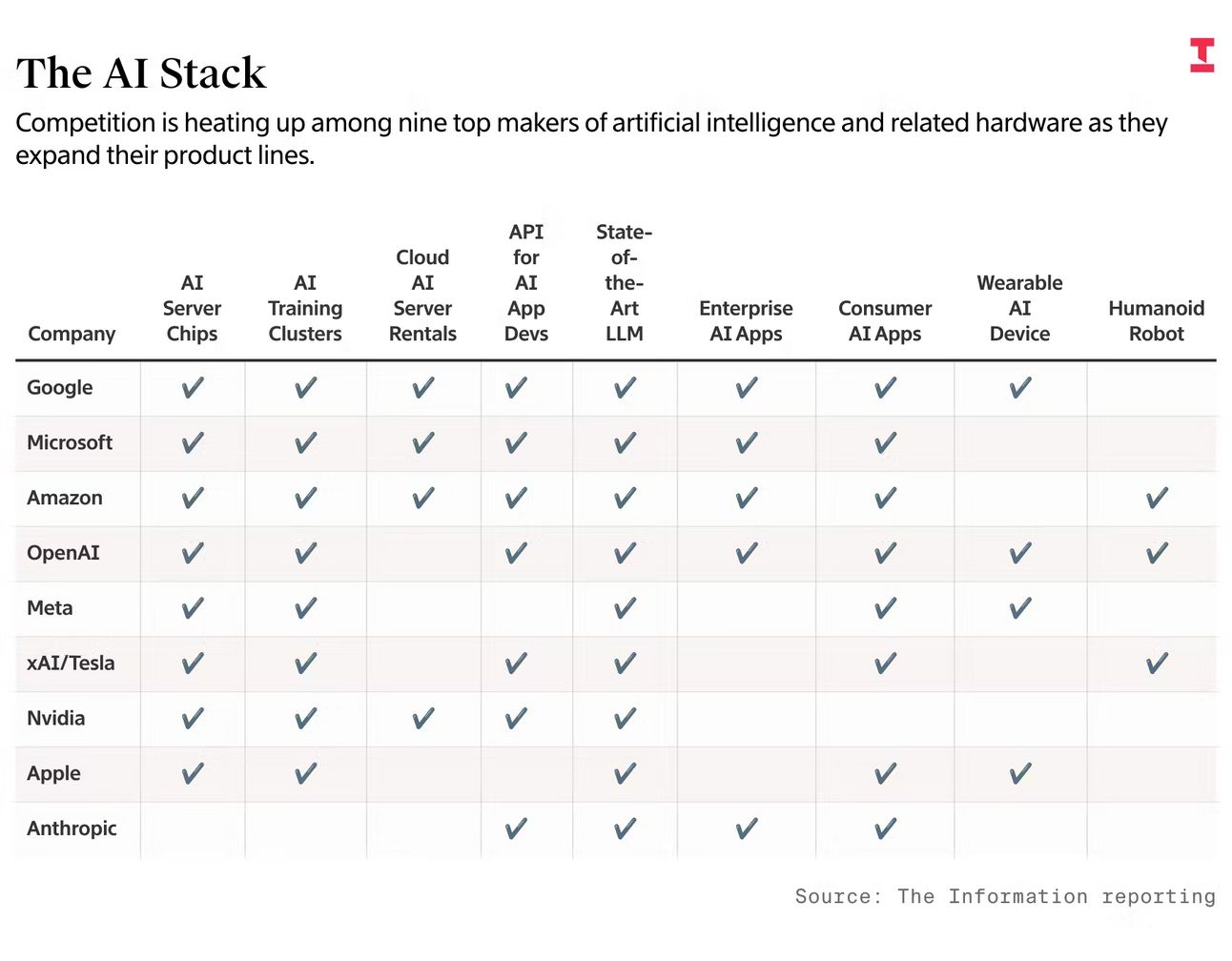

AI Companies are Diversifying

Despite the fact that we can categorize the AI industry into four layers—hardware, infrastructure (offering the hardware), models, and applications—that doesn’t mean companies are placing a single bet; in some cases, they are close to playing all of them.

But recent moves are only exacerbating this trend:

OpenAI is everywhere besides renting GPUs (probably because they have demand for every single one of their GPUs)

Mistral is now becoming a compute provider with Mistral Compute

NVIDIA’s DGX Lepton play wants to serve as a single entry point to most NVIDIA-based computing, from CoreWeave to other players. In other words, NVIDIA is now officially becoming a cloud provider, as we predicted in the past.

AMD is acquiring Lamini, an AI computing provider, or at the very least poaching key talent, in what appears to be a clear indication that they are also entering the cloud computing market.

Hyperscalers have investments in companies across the entire stack; it’s tough, if not outright impossible, to find a hot company that hasn’t been invested in by one (or more) of the four hyperscalers.

Meta has ‘acquihired’ Scale AI’s CEO, paying $14 billion for 49% of the company’s shares.

Apple is designing its own cloud infrastructure to serve its most powerful models.

And that’s without discussing the fact that the application layer is just a handful of use cases being built by multiple different companies (vibe coding, deep research, etc.).

Ok, so?

This is great news for us consumers. If competition is fierce, prices collapse, which is why we recently saw OpenAI’s massive price decrease of o3, an unprecedented 80% reduction from one day to the next.

But not all is great news.

Besides the fact that this is becoming an oligopoly, I would like to offer a more profound reflection: how much of the growing revenue we are seeing in this industry is generated through incestuous relationships?

How many of those Benjamins are, in reality, just the companies listed above sending money to each other?

This doesn’t feel at all like a healthy market from a purely fundamental perspective. However, it will be very challenging for antitrust to intervene because, ultimately, all this is leading to massive price deflation, which benefits customers.

That said, the least we can do is be very skeptical of the numbers incumbents publish to boost valuations, as not everything is what it seems.

And, finally, before we move to the first real alternative to Generative AI: JEPAs, here’s a video to make you think. Could this be our future in 10 years?

TREND OF THE WEEK

Meta’s V-JEPA-2. Finally, an Alternative to the Status Quo?

While you may feel overwhelmed by the number of AI Labs, startups, investors, and Big Tech companies playing the AI game, did you know that they are all, and I mean all, making the exact same bet: Generative AI?

Money-wise, AI and Generative AI are the same; they have consumed the entire narrative, dried up all interest, capital, and traction, and made it seem like nothing else matters.

But what if… they are wrong?

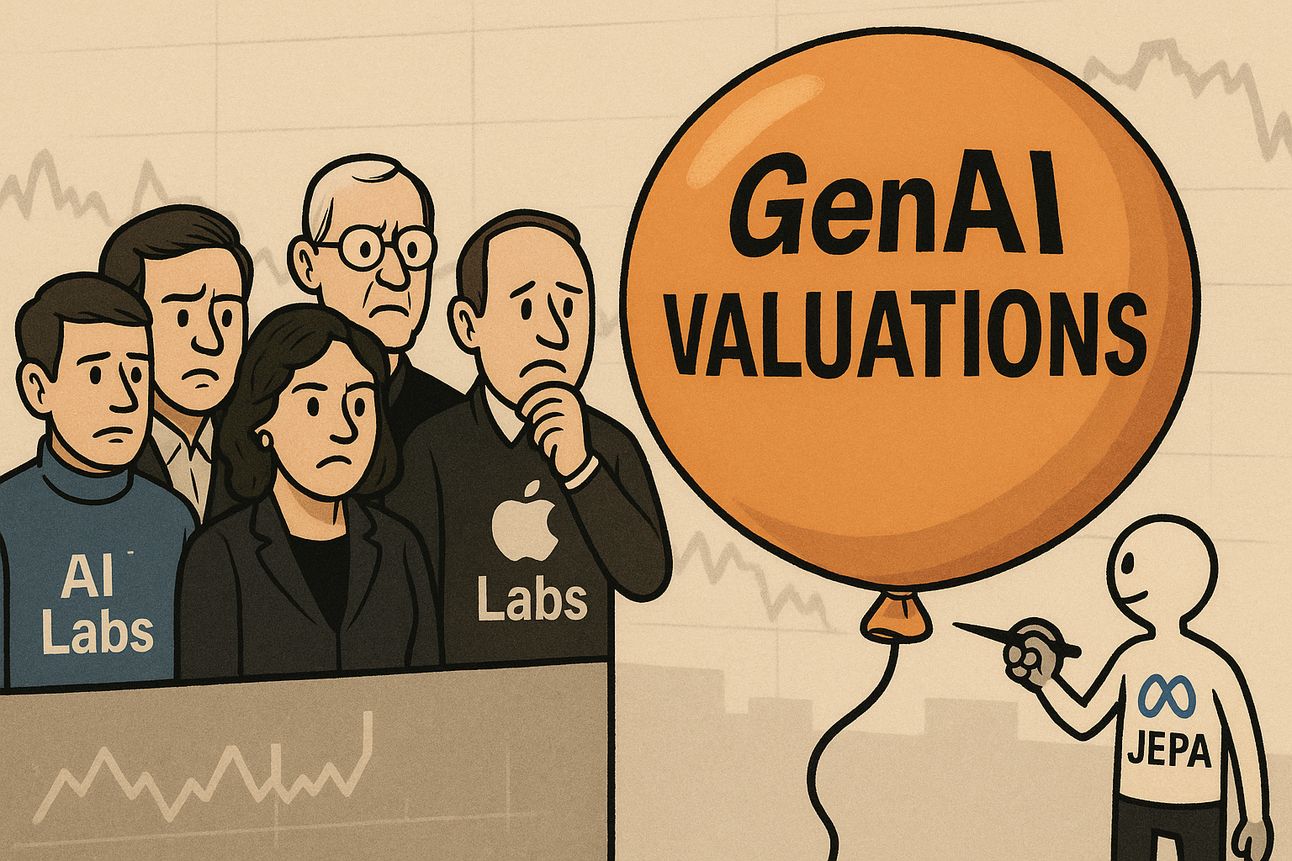

Despite Meta playing this game, too, they are also hedging their bets with an alternative architecture, JEPAs. And now, we finally have what appears to be the first, ‘ok, hold on, this is interesting’ alternative release to the status quo that, if it succeeds, would basically cut the AI industry’s valuation in half or more.

And today, I’m dissecting for you that precise idea and what it means for the industry and markets; worth reading for anyone who wants to broaden their mind about what is going on in this space.

GenAI: AGI or Flop?

The basic idea of JEPA (Joint Embedding Predictive Architecture) is to build a world model, AI’s true holy grail.

In layman’s terms, it’s based on the principle of active inference of the human brain, in which our brain is constantly making predictions of what’s going to happen next in the world around us; it’s literally what allows humans to survive in a changing, complicated world.

That’s a lot of fancy words, so let’s simplify it.

The Notion of World Models

From a very young age, we develop a notion of what’s expected or unexpected about the real world. Babies learn very early that if they throw a ball into the air, the ball falls back down; neither the baby nor we expect that ball to stay in the air because, well, gravity.

This set of intuitions builds what is colloquially described as ‘common sense,’ which is our capacity to survive in an ever-changing world like ours by making the necessary predictions of what will happen next to increase our chances of survival.

For example, you know that taking an extra step while on the edge of a cliff won’t end well, in the same way your brain instantly moves your leg to the brake pedal when the car in front of you suddenly comes to a halt.

Thus, our brain’s world model is a representation of the world that captures its true meaning. By representation, I mean that, as our brain can’t pay attention to every single detail around us, it retains only the essential parts of every object, event, and action and discards the rest.

Put another way, having a world model is proof that you understand the world, at least to some extent.

Understanding the importance of world models, it’s easy to see why scientists want to train a true world model; an AI that understands the world is the shortest path to Artificial General Intelligence, to the point that ‘building a world model’ is the primary goal for many of the incumbents, as Google DeepMind’s co-founder and CEO, Nobel laureate Demis Hassabis, has openly said.

And what all these labs are doing is hoping that ChatGPT or Gemini will take us to that vision of the world model. But a question arises:

Is their approach fundamentally flawed? Can a generative model understand the world by generating it?

Does ChatGPT Have a World Model?

For quite some time, most incumbents have defended the notion that Generative models like ChatGPT, which learn about the world by replicating—generating—it, can indeed build world models.

In other words, with sufficient imitation of real-world data, whether in the form of text or videos, the model captures the true meaning of the world.

But do we have an example of this?

Sure! We have Google’s Genie 2, a model that takes in a sequence of video frames and a user’s action and generates the new frame based on the previous frames and the action; it’s a world predictor in a gaming environment.

In other words, if the previous frame depicts a sailing boat and the user clicks the right button, the next frames the model generates depict the boat sailing to the right.

That makes sense! For a model to generate what will take place next, it must understand it, right?

And the answer is maybe… but it could also mean no.

Working in Pixel Space

The biggest argument against this is that it really doesn’t make sense that you have to generate every single detail in a scenario to understand it. At least that’s not how our world models were created, as we don’t need to generate every single leaf of a tree in perfect detail to know it’s a tree.

But what is your representation of a tree? Well, it’s very simple: just close your eyes and imagine a tree; that’s your representation of what a tree is. That’s how your world model sees trees.

If you think about a tree for a second and try to draw it, your representation of a tree will be something like the sketch on the right, while in reality, an actual tree is the thing in the bottom left, with an infinite level of detail your brain simply can’t retain:

You and I can see that the thing on the right is a tree (the shape, the leaves, the trunk…) despite it missing an infinite level of detail. However, that doesn’t make it any less of a tree, because the point is that you don’t have to depict a fully detailed tree in your mind to know what a tree is, as you have stored the crucial aspects that are sufficient to meet that criterion.

Put another way, you don’t have to draw every single leaf out of the 200,000 a mature tree may have (I actually looked it up, lol); our world model’s representation of ‘what a tree is’ only stores the essentials that, when we draw it or visualize it in our brain, let us (and those around us looking at the drawing) know that it’s a tree and not a lava lamp.

So, if a world model is about storing only the essential components of what each thing is (imagine the effort of having to store a perfect-to-the-millimeter replica of every single object in the universe), training an AI to generate those precise details every time doesn’t make much sense.

And here is where JEPAs come in.

A Non-Generative Approach

The idea of JEPAs is that you don’t have to replicate something to understand it, in the same way our brains aren’t predicting the world by generating it.

The Principles behind JEPAs

Instead, JEPA takes a more, if I may, ‘human’ approach: it makes predictions in representation space, in this abstract space that allows us to imagine things.

What this means is that this model is not generative: it doesn’t generate anything and instead makes all its predictions internally, in the same way we predict in our minds (we can imagine) what will happen to a ball thrown into the air, and in the same way we can imagine a tree being struck by lightning without having to draw the scene.

But what’s the point of doing this?

Simple: it simplifies the prediction. As we aren’t forcing the model to predict what will happen next to the ball by generating every single detail of the falling sequence, the model can predict it internally and focus on the coarse features, not on every single, unnecessary detail.

In other words, a model can learn what will happen to that ball without having to learn to generate the entire world around that movement, which is a problem of extreme difficulty.

If this sounds hard to grasp, I don’t blame you. But the takeaway here is that, as our minds prove, to understand the world, you don’t need to calculate the precise movement of 199,873 leaves of a Dracaena cinnabari being hit by the wind at an angle of 36.86 degrees, which is unironically what we are asking a generative model like ChatGPT to do.

Instead, as long as the model can predict the tree’s leaves will flicker, that’s more than enough to predict the consequence of that event!

In the same way, we don’t need world models that calculate the precise angle of impact and the consequences of a humanoid robot dropping a plate from 45.32 cm above the kitchen counter; we need the humanoid’s world model to know that if you drop a plate from that height, it will probably break.

It’s not about creating a physics simulator of the world, it’s about creating a ‘common sense machine’!

I hope you see the point by now; what Meta is implying with JEPA is that using generative models as world models is akin to killing flies with cannonballs, and that using a non-generative approach in a simplified representation space allows the model to focus on what matters and ignore unnecessary specificity.

And with it, you get a model that:

Is simpler and learns better representations (i.e., learns to focus on the things that matter, ‘tree leaves flicker with the wind’ and not ‘leaf 132,321 flickers at 0.4 mph if the wind hits it at 0.5 mph’ just like we don’t do those computations in our brains either).

Is smaller. The model they present has under two billion parameters, despite being state-of-the-art.

Can be combined with language models, allowing us to decode what it’s saying. However, the language model is just a way for the intelligent machine to express itself, not the driver of intelligence.

But how have they trained this?

A Smart Reconstruction Approach

The idea behind video Joint-Embedding Predictive Architectures (V-JEPAs) is that instead of training models to predict what comes next in a sequence, you give them a sequence of video frames, corrupt them (hiding some of the pixels), and then ask the model to reconstruct the original video.

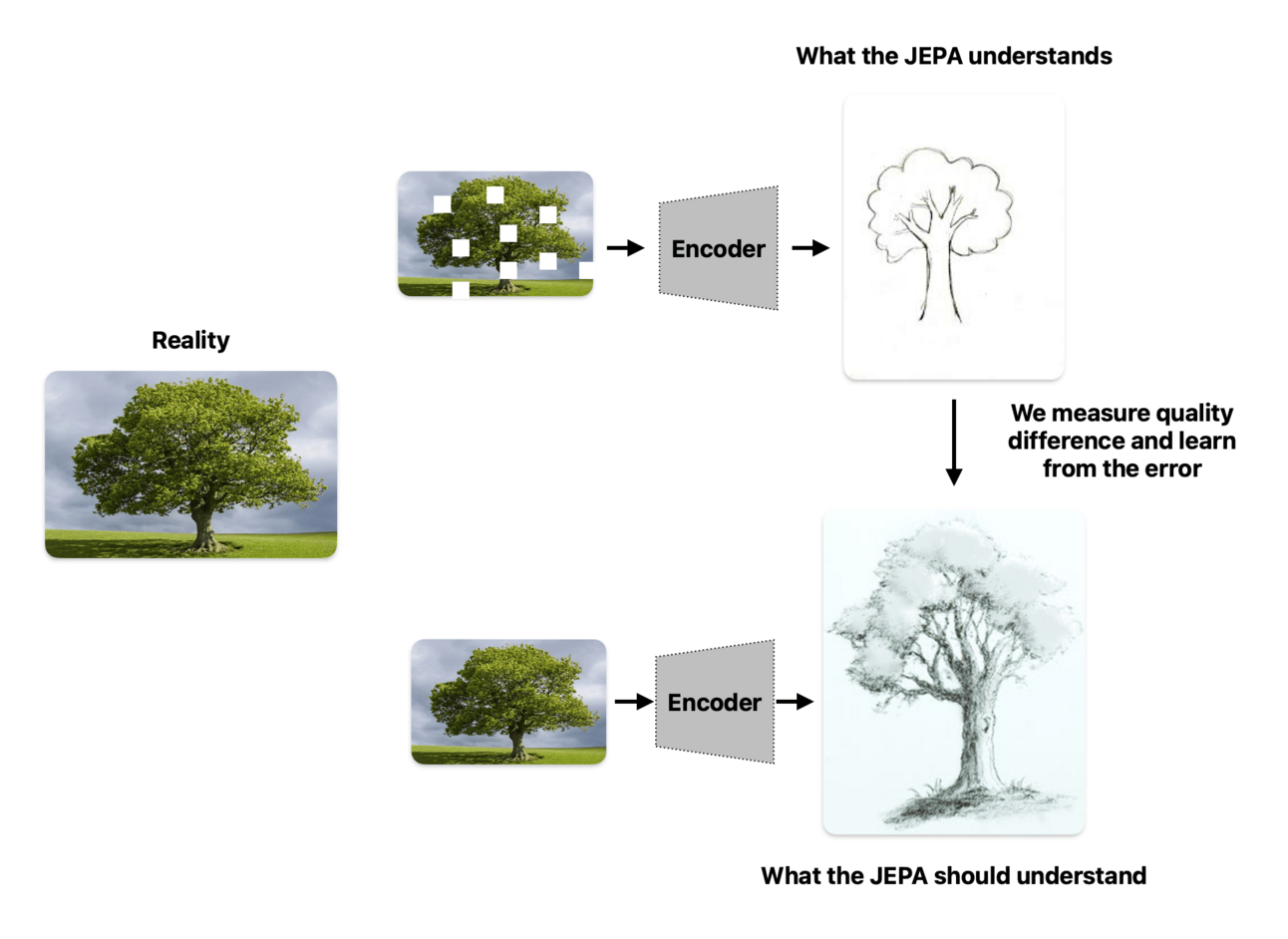

Thus, the learning objective involves comparing the reconstruction with the original video, but instead of doing so in pixel space, it is done in representation space: we compare the model’s understanding of what the corrupted video represents with a representation of what the original video represents, instead of asking a model to generate an entire video sequence of what will happen next and comparing it to the ground truth.

Sounds like wordplay, but intuitively, what we are doing is what is shown below:

We want the JEPA to understand what a tree is.

We send the video in two forms: corrupted and clean.

We compare outcomes to achieve a model that can reconstruct what a tree is even if it’s sent a corrupted video (with missing parts)

Repeat the process many times.
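To see where the comparison actually happens, here is a heavily simplified sketch of a JEPA-style loss in plain Python. A context encoder sees only the visible patches (the corrupted video), a target encoder sees the clean input, a predictor guesses the embeddings of the hidden patches, and the error is measured between embeddings, never between pixels. The linear "encoders", the mean-of-visible-patches "predictor", and the L1 distance are simplifying assumptions; V-JEPA-2 uses large transformer networks trained by gradient descent, which this sketch does not attempt. The `ema_update` helper reflects the JEPA practice of making the target encoder a slowly moving average of the context encoder.

```python
def encode(patches, W):
    """Toy encoder: the same linear map applied to every patch."""
    return [[sum(w * p for w, p in zip(row, patch)) for row in W] for patch in patches]


def predict_hidden(context_embs, n_hidden):
    """Toy predictor: guess each hidden patch's embedding as the mean visible embedding."""
    dim = len(context_embs[0])
    mean = [sum(e[i] for e in context_embs) / len(context_embs) for i in range(dim)]
    return [list(mean) for _ in range(n_hidden)]


def jepa_loss(patches, mask, W_context, W_target):
    """L1 distance, in embedding space, between predicted and target embeddings of masked patches."""
    visible = [p for p, hidden in zip(patches, mask) if not hidden]
    hidden_idx = [i for i, hidden in enumerate(mask) if hidden]
    context = encode(visible, W_context)        # corrupted view
    targets_all = encode(patches, W_target)     # clean view; no gradient flows here in real training
    targets = [targets_all[i] for i in hidden_idx]
    preds = predict_hidden(context, len(targets))
    errors = [abs(p - t) for pe, te in zip(preds, targets) for p, t in zip(pe, te)]
    return sum(errors) / len(errors)


def ema_update(target_W, context_W, decay=0.99):
    """Move the target encoder's weights slowly toward the context encoder's."""
    return [[decay * t + (1 - decay) * c for t, c in zip(tr, cr)]
            for tr, cr in zip(target_W, context_W)]


identity = [[1.0, 0.0], [0.0, 1.0]]
patches = [[0.0, 0.0], [1.0, 1.0], [2.0, 2.0]]
mask = [False, False, True]  # hide the last patch
print(jepa_loss(patches, mask, identity, identity))  # 1.5
```

Note that no pixel is ever produced: the model is only graded on whether its internal guess about the hidden part matches the internal summary of the clean video.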

But what’s the point of this? Essentially, we are exposing our model to the complex reality that, in real life, things are sometimes only partially observable.

Let’s drive this home with an example:

Let’s say you want to teach a robot to know whether something is a ‘dog.’ You can either tell it to learn to generate a perfect dog, up to every hair strand, or you can teach it to understand the basic attributes of what a dog is (tail, hairy, four-legged, etc.).

The point is that the former model won’t generalize; if it learns too many details about what a dog is, the moment it sees a dog that doesn’t adhere perfectly to this ultra-detailed vision of what a dog is, it won’t categorize it as a dog. On the other hand, the latter only needs to see the basic attributes of a dog to say, ‘Oh, that’s a dog.’

Put more formally, a simpler yet sound representation is much better than an ultra-detailed representation of what a dog is because the latter stores a lot of irrelevant data that doesn’t make it more likely that what you’re seeing is a dog.

Look at it as follows: if you see a four-legged, hairy, sharp-toothed animal with a pronounced snout, do you need to count all the hairs on its body to know it’s a dog?

This is what intelligence is: the abstraction of key patterns that allow us to make accurate predictions about what an object is or what the world is bringing up next (a world model).

And why does real abstraction matter in the real world?

Well, while the former model has to see the dog in its full detail to discern if it’s a dog, the second model can see a dog peeking from a corner and still recognize it as a dog.

It has not seen every detail (some parts of the dog are hidden behind the wall), but it doesn’t matter; it has seen the ears, the tongue, and the facial gestures of the dog, and that’s more than enough to identify it as a dog because it truly understands what a dog is.
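The contrast can be caricatured in a few lines of Python. This is a hypothetical toy, not how V-JEPA-2 works internally (it learns its representations rather than using hand-written attributes): one classifier demands an exact match against an ultra-detailed template, down to the hair count, while the other checks only a few key attributes and ignores the ones it can’t see.

```python
# Hypothetical attributes, chosen for illustration only.
DETAILED_TEMPLATE = {"legs": 4, "tail": True, "hairy": True, "snout": True,
                     "ears": True, "hair_count": 1_204_331}
KEY_ATTRIBUTES = {"legs": 4, "tail": True, "hairy": True, "snout": True, "ears": True}


def detailed_is_dog(observed):
    """Every detail in the template must be visible and match exactly."""
    return all(observed.get(key) == value for key, value in DETAILED_TEMPLATE.items())


def abstract_is_dog(observed, min_cues=2):
    """Only key attributes count; unseen ones are ignored, but enough must be visible."""
    seen = {key: value for key, value in observed.items() if key in KEY_ATTRIBUTES}
    return len(seen) >= min_cues and all(KEY_ATTRIBUTES[key] == value for key, value in seen.items())


other_dog = {"legs": 4, "tail": True, "hairy": True, "snout": True,
             "ears": True, "hair_count": 987_654}
peeking_dog = {"ears": True, "snout": True}  # the rest is hidden behind the wall

print(detailed_is_dog(other_dog), abstract_is_dog(other_dog))      # False True
print(detailed_is_dog(peeking_dog), abstract_is_dog(peeking_dog))  # False True
```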

Which model understands the world better? You tell me.

This perfectly illustrates the masking mechanism used to train JEPAs. The intuition is that by hiding (or ‘masking’ in AI parlance) parts of the video, we force the model to focus on what matters.

Using our previous tree example, we are teaching the model that it doesn’t need to pay attention to every single damn leaf and should instead realize that if it more or less looks like a tree, flickers like a tree, and has the color of a tree… it might be a tree?

The word ‘might’ above is not arbitrary; with probabilistic AI models, it’s never a guarantee that what the model is seeing is a tree, but it likely is; there are no full guarantees in statistics-based AI (all modern AI), only high likelihoods at best.

And the entire idea of ‘focusing on what matters’ might be a pretty powerful and more scalable one, as these models need far fewer cues and less data to learn.
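For video, one common way to do the masking described above, and the one assumed in the sketch below, is ‘tube’ masking: the same spatial blocks of patches are hidden in every frame, so the model can’t simply copy a hidden patch from the previous or next frame and is pushed to infer it from context. The grid size, block size, and number of blocks are illustrative values, not V-JEPA-2’s actual settings.

```python
import random


def tube_mask(frames, height, width, block_h, block_w, n_blocks, seed=0):
    """Return mask[t][y][x] == True where a patch is hidden from the context encoder.
    The same spatial blocks are hidden in every frame, forming 'tubes' through time."""
    rng = random.Random(seed)
    spatial = [[False] * width for _ in range(height)]
    for _ in range(n_blocks):
        top = rng.randrange(height - block_h + 1)
        left = rng.randrange(width - block_w + 1)
        for y in range(top, top + block_h):
            for x in range(left, left + block_w):
                spatial[y][x] = True
    # Copy the spatial mask into every frame (independent lists, same pattern).
    return [[row[:] for row in spatial] for _ in range(frames)]


mask = tube_mask(frames=8, height=14, width=14, block_h=5, block_w=5, n_blocks=3)
hidden = sum(cell for frame in mask for row in frame for cell in row)
print(f"hidden fraction: {hidden / (8 * 14 * 14):.2f}")
```

Because blocks may overlap, the hidden fraction varies with the seed; what stays fixed is that every frame hides the same regions.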

Fine, but is the model, V-JEPA-2, any good?

Results

The model shows extremely promising results, capturing complex movements and predicting what just happened…

or what the robot should do next:

And let me insist: the crucial difference with models like Genie or ChatGPT is that this model does not generate the next frame of the diver getting out of the water or the cook grabbing the plate; it’s a fundamentally different approach to all we’ve seen these past few years. It’s a public bet against Generative AI.

It knows that’s what will happen next without having to generate it.
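How can a model that never generates frames still decide what a robot should do? One generic approach, sketched below under heavy simplification, is to plan in representation space: imagine the outcome of many candidate actions with the predictor, then pick the one whose predicted embedding lands closest to the embedding of the goal. The 2-D ‘latent state’, the hand-written `predict_latent` function, and the random-shooting search are hypothetical stand-ins; Meta’s robot experiments use a learned predictor and their own search procedure, which this sketch does not reproduce.

```python
import random


def predict_latent(z, action):
    """Hypothetical stand-in for a learned action-conditioned predictor:
    the 'latent state' is a 2-D point that the action nudges."""
    return (z[0] + action[0], z[1] + action[1])


def l1(a, b):
    """L1 distance between two latent vectors."""
    return sum(abs(x - y) for x, y in zip(a, b))


def plan_one_step(z_now, z_goal, n_candidates=1024, seed=0):
    """Random-shooting planner: sample candidate actions, imagine each outcome
    in latent space (no frames generated), keep the one closest to the goal."""
    rng = random.Random(seed)
    candidates = [(rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)) for _ in range(n_candidates)]
    return min(candidates, key=lambda a: l1(predict_latent(z_now, a), z_goal))


z_now, z_goal = (0.0, 0.0), (0.5, -0.25)
best = plan_one_step(z_now, z_goal)
print(best, l1(predict_latent(z_now, best), z_goal))
```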

And what are the repercussions? Simply put, it’s a bet that Large Language Models won’t be the intelligence engine of AI.

Language as the Vehicle of… Language and not Thinking?

If the AI industry suddenly turns toward JEPAs as the prime architecture for world models, it would be a fatal blow to the valuations of most AI Labs.

In that scenario, language models would no longer be considered the intelligence engine. That’s not to say they wouldn’t be necessary; language models will be crucial for machine-human communication no matter what.

The point here is to discern whether future AI systems will use that language engine as the backbone of intelligence, as we do with the GPTs or the Geminis of the world, or whether language models will instead become mere transmitters of intelligence while a non-generative world model runs the show; certainly not the most appealing pitch when you’re facing another multi-billion-dollar round, is it?

For now, it’s highly improbable that the hype around language models will falter, let alone that we’ll see OpenAI adopting JEPAs for modeling intelligence.

But the ball is in play, the skeptics have scored their first point in what seemed like an eternity, and if Meta shakes things up with a JEPA+LLM combo that kicks ass, well, things might get nasty for many incumbents and investors.

THEWHITEBOX

Join Premium Today!

If you like this content, by joining Premium, you will receive four times as much content weekly without saturating your inbox. You will even be able to ask the questions you need answers to.

Until next time!

For business inquiries, reach out to me at [email protected]