FUTURE

The Age of Exploration

The timing couldn’t have been better. Last week, I gave you my best prediction about what was next in AI, “the age of exploration”, putting special emphasis on the growing importance of Reinforcement Learning as the ‘it’ moment for agents.

Luckily for me, two releases that are only days old, Grok 4 and China’s Kimi K2, have pretty much confirmed my suspicions, as both are extremely successful and represent a step-function improvement for agents.

Not exempt from concerning issues (like the MechaHitler drama), Grok 4 is nonetheless the first of its kind, the first model with exploration-based learning successfully applied at a large scale.

On the other hand, Kimi K2 is quite possibly the best non-reasoning model the world has ever seen, thanks to an incredibly innovative training method, leading to a highly emotionally intelligent model that, guess what, is also open-source.

This has several substantial repercussions I intend to explain today. Among other things, we’ll:

Review the milestones that took us where we are and why these models represent another milestone in the journey to machine intelligence.

Explain why Enterprise AI finally makes sense thanks to the ‘Palantirization of AI’.

Understand why these models will kill human-to-human management.

And comprehend why these models will also kill software as it is.

This post is not about Grok 4, which I have already covered; it’s about what Grok 4 starts.

Let’s dive in.

Why This Time is Different.

To understand why this week marks the beginning of the new era, the exploration era, we must first go back in time a little bit.

A Change in Behavior

While I will mostly avoid technicalities as today is much more about seeing the future than talking about AI theory, let’s ensure we are all on the same page.

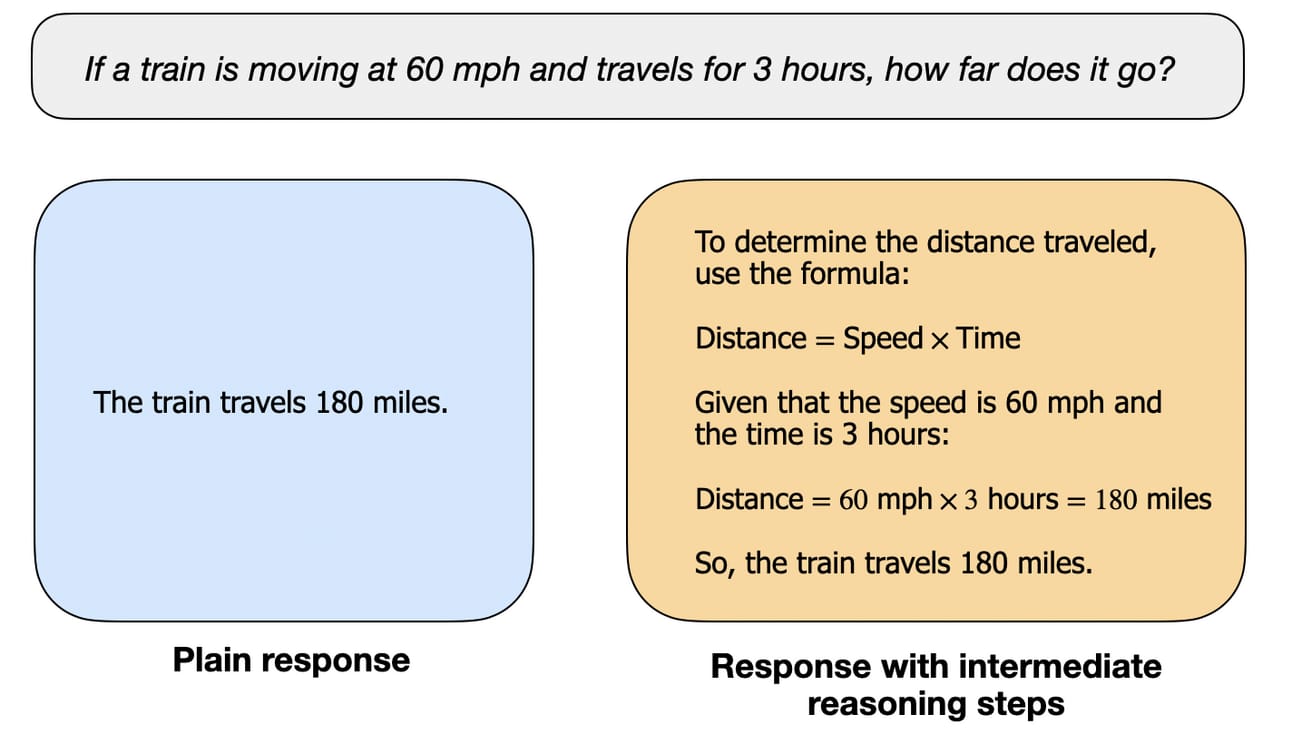

Back in September 2024, OpenAI presented the o1 model, the first of its kind: a Large Language Model that thought for longer on tasks by generating a stream of ‘thinking’ words before answering. This helped it break problems into simpler steps that, when combined, yield the correct answer with much higher statistical likelihood.

The reason is intuitive: thinking step-by-step makes the problem easier to solve, as the accompanying example illustrates.

Therefore, the straightforward answer to what made o1 different from everything we had seen before is that, while all previous models would take the left route in that example, o1 would respond using the approach on the right.

Before o1, we would usually ask models to ‘think step-by-step’ to induce standard LLMs to generate chains of thought. The key difference is that this is no longer necessary, as it’s the default mode for reasoning models like o1/o3 or Grok 4.

But as we learned in our last newsletter post, there’s much more to the change; what makes these models unique is that they were trained in a fundamentally different way, a way that xAI and Grok 4 have taken to a totally new level.

The change is not behavioral; it goes deeper.

The Age of Exploration

While the above explanation clarifies the differences in behavior of these models, it doesn’t explain the biggest change we are observing here. And the answer lies in how the two models are trained.

It’s the transition from imitation to exploration.

For the longest time, AI researchers and engineers have relied heavily on imitation to train models. They were building on the shoulders of giants like Alan Turing, who introduced this idea back in 1950 (he called his proposed test of machine intelligence the ‘imitation game’, hence the name of the film that covered his life). Turing argued that to create intelligent machines, we just had to teach them to imitate us.

And for the longest time, even LLMs were a perfect exemplar.

To train the most powerful models around, we mostly provided them with Internet-scale data and asked them to replicate it, and that was pretty much it.

We coupled this with a design decision: the imitation dataset is much larger than the model itself, which forces the model to compress knowledge (pack that knowledge into a smaller package). The result was models that could reasonably imitate datasets much larger than themselves, which means that, to some extent, the models had captured key regularities (patterns) in the data.

But what does that mean?

The best example of this is the rules of grammar. Unless models learn grammar, they could never speak our language fluently because the number of possible combinations of English words to create sentences is, computationally speaking, basically infinite.

Via compression, models are forced to learn the correct way words follow each other according to the rules of the English language, aka grammar, and that allows them to be much smaller than the data they intend to learn because they do not need to store every single possible combination, just those that are correct.

And by doing this at a large scale, with trillions of words, we got the standard LLMs OpenAI introduced to the mainstream back in 2022 with ChatGPT’s first version.
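To make the compression argument concrete, here is a toy Python sketch. It is not how LLMs work internally (they learn neural network weights, not lookup tables); the three-sentence corpus, the table of allowed word pairs, and the `is_fluent` helper are all assumptions made up for illustration. The only point is that a small table of "which word may follow which" is far smaller than a list of every possible word sequence, yet it still accepts a sentence it never saw.

```python
# Toy illustration of "compression": store which word may follow which,
# instead of storing every possible sentence.
# The corpus and helper names are hypothetical, chosen only for this sketch.

corpus = [
    "the cat sat on the mat",
    "the dog sat on the rug",
    "the cat saw the dog",
]

# Vocabulary: every distinct word seen in the corpus.
vocab = sorted({word for sentence in corpus for word in sentence.split()})

# Memorizing approach: one entry per possible 5-word sequence.
naive_table_size = len(vocab) ** 5

# Compressed approach: only the word pairs that actually occur.
allowed_pairs = set()
for sentence in corpus:
    words = sentence.split()
    allowed_pairs.update(zip(words, words[1:]))


def is_fluent(sentence: str) -> bool:
    """Accept a sentence if every adjacent word pair was seen in training."""
    words = sentence.split()
    return all(pair in allowed_pairs for pair in zip(words, words[1:]))


if __name__ == "__main__":
    print(len(vocab), naive_table_size, len(allowed_pairs))
    print(is_fluent("the dog sat on the mat"))  # novel sentence, valid pairs
    print(is_fluent("mat the on sat cat"))      # word salad
```

With this corpus, 8 words give 32,768 possible five-word sequences, while the pair table holds only 10 entries. The sentence "the dog sat on the mat" never appears in the corpus but is still accepted, because every step in it follows a learned pattern.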

But was imitation enough? Was Turing correct in that imitation is all we needed? Well, no.

The Next Step in the Intelligence Journey

Human intelligence includes two extra steps called exploration and adaptation, stemming from the need for humans to solve problems they have not encountered before.

Put more clearly, imitation leads to compression, which does help us build intuition: if we notice a pattern in data, it’s more likely that the next time we stumble upon similar data, we will immediately detect the pattern, so the solution becomes ‘intuitive’ to us. But imitation requires prior experiences to identify patterns, making it ineffective when faced with the unknown.

If we notice the sun coming out every day, we see the pattern and expect that the next day the cycle will repeat.

Hence, as Jean Piaget once said, “Intelligence is what you use when you don’t know what to do.” That is, while compression helps us find key patterns in data, it is of no help whatsoever when faced with the unknown.

But what do we humans do in such a case? Well, we do two things: exploration and perception.

As explained, the former translates to testing different ways to solve the problem until finding the correct way.

The latter serves as a feedback loop, or reward mechanism, that tells us if our exploration is yielding success or failure; it’s the guide.

Combined, they allow us to adapt to new challenges. If you’re a regular, you already know where I’m going with this.

Feedback-based, or rather, reward-based exploration is precisely the definition of Reinforcement Learning, or the process of training models by reinforcing good behaviors and punishing bad ones, which has become the staple of AI training these days, perfectly exemplified by Grok 4 and Kimi K2 (although in different ways as we’ll see in a minute).
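For readers who prefer code, the 'explore, get feedback, reinforce' loop can be sketched as a tiny multi-armed bandit in Python. This is a minimal sketch under stated assumptions, not how Grok 4 or Kimi K2 are trained: the three strategy names, their reward levels, the `run_bandit` function, and the epsilon-greedy rule are all hypothetical choices for illustration.

```python
import random

# Hypothetical strategies and their average payoff; invented for this sketch.
TRUE_REWARDS = {"guess": 0.2, "memorize": 0.5, "decompose": 0.9}


def run_bandit(true_rewards, steps=300, epsilon=0.1, seed=0):
    """Epsilon-greedy bandit: explore sometimes, otherwise repeat what worked."""
    rng = random.Random(seed)
    actions = list(true_rewards)
    value = {a: 0.0 for a in actions}  # running estimate of each action's reward
    count = {a: 0 for a in actions}    # how often each action was tried

    for step in range(steps):
        if step < len(actions):
            action = actions[step]                # try every action once
        elif rng.random() < epsilon:
            action = rng.choice(actions)          # exploration
        else:
            action = max(actions, key=value.get)  # exploitation

        # "Perception": noisy feedback from the environment.
        reward = true_rewards[action] + rng.uniform(-0.1, 0.1)

        # Reinforcement: move the estimate toward the observed reward.
        count[action] += 1
        value[action] += (reward - value[action]) / count[action]

    return value, count


if __name__ == "__main__":
    value, count = run_bandit(TRUE_REWARDS)
    print("learned preference:", max(value, key=value.get))
    print("times tried:", count)
```

Nobody tells the agent which strategy is best; it tries things, observes the reward, and drifts toward whatever pays off. Real RL for language models replaces the three named actions with entire generated responses, but the loop has the same shape.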

Consequently, the key takeaway is that this week marks the moment where the frontier of AI moves from an imitation-focused paradigm to an exploration-based one.

But why is this week that time?

The Tables Have Turned

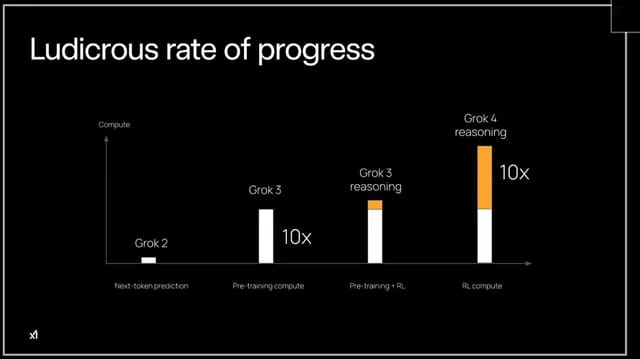

While Grok is not the first reasoning model (OpenAI’s o1 was), it’s the first model that truly embraces, or embodies, this transition. The reason lies in the accompanying graph.

While most previous models, including OpenAI’s o3, Gemini 2.5 Pro, or Claude 4 Opus, had a training budget distribution similar to that of Grok 3 reasoning (third column), with a lot of pretraining (imitation learning, shown in white) and a little bit of RL on top (in orange), Grok 4 turns the tables.

While keeping pretraining compute moderately high, for the first time, a model spent as much compute on RL training as on pretraining.

Put another way, Grok 4 is the first model that really puts RL to the test at scale.

And the reason to be excited is that… it worked.

Grok 4 is unequivocally superior to other models in most intelligence benchmarks, especially in areas with clear rewards.

If we trace back to our ‘exploration + reward’ idea, areas where the reward is clear and dense (non-sparse feedback), like maths or coding, are where we are getting the best results. Not so much in “sparse/subjective reward” areas like writing or emotional intelligence, where Grok 4’s results are actually pretty bad.
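The difference between a dense and a sparse reward can be sketched in a few lines of Python. This is an illustrative assumption on my part, not any lab's actual reward code: the `dense_reward` and `sparse_reward` functions and the squaring task are hypothetical. In coding, a test suite gives partial credit for partial progress; a writing task has no such checker.

```python
from typing import Callable, Sequence, Tuple

# Hypothetical task: implement f(x) = x squared. Each pair is (input, expected).
TEST_CASES = [(0, 0), (1, 1), (2, 4), (3, 9)]


def dense_reward(candidate: Callable[[int], int],
                 cases: Sequence[Tuple[int, int]]) -> float:
    """Fraction of test cases passed: partial progress earns partial credit."""
    passed = 0
    for x, expected in cases:
        try:
            if candidate(x) == expected:
                passed += 1
        except Exception:
            pass  # a crash simply counts as a failed case
    return passed / len(cases)


def sparse_reward(candidate: Callable[[int], int],
                  cases: Sequence[Tuple[int, int]]) -> float:
    """All-or-nothing: 1.0 only if every case passes, otherwise 0.0."""
    return 1.0 if dense_reward(candidate, cases) == 1.0 else 0.0


def correct(x: int) -> int:
    return x * x


def almost(x: int) -> int:
    return x + x  # right for 0 and 2, wrong for 1 and 3


if __name__ == "__main__":
    for fn in (correct, almost):
        print(fn.__name__, dense_reward(fn, TEST_CASES), sparse_reward(fn, TEST_CASES))
```

The half-right solution scores 0.5 under the dense reward but 0.0 under the sparse one, so only the dense signal tells the model it is getting warmer. For an essay or an empathetic reply there is no `TEST_CASES` list to check against, which is one way to see why those areas lag behind.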

As the saying now goes: “Give me a good reward, and I’ll give you a good AI model.” But that is easier said than done and has serious trade-offs that I will comment on later.

And what about Kimi K2?

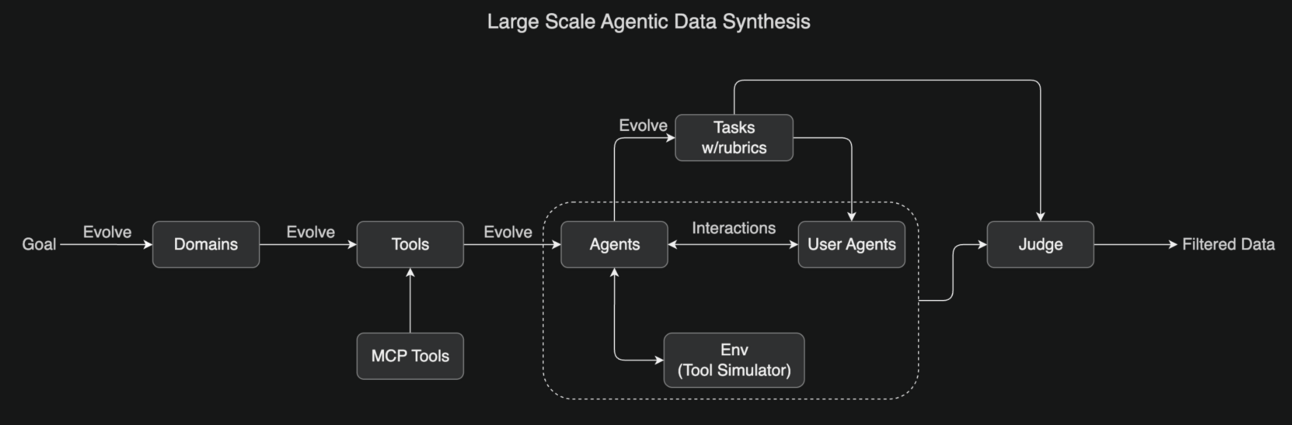

In its case, the main innovation is that, unlike all previous reasoning models, which were trained to ‘reason’ using mainly maths and coding problems, this model has been trained to be a good thinker by exploring agentic settings, solving problems that require heavy tool use over long horizons.

For example, building reports using data from a long tail of sources, or solving business processes requiring multiple tools and steps.

Sounds familiar?

Extensive tool-calling and long-horizon task accuracy were two key reasons why I argued last week that agents were finally ready. Seeing actual research and new models prove this makes me even more comfortable making the case that agents are finally within arm’s reach.

Kimi K2’s agentic synthetic tool-calling training regime.

Seeing both success stories, the second part of 2025 promises a complete explosion of models following this precise playbook, which is summarized as follows:

The exact approach isn’t that important, as long as you’re focusing on goal-oriented training, aka Reinforcement Learning.

Now, with all this said, it’s time to get into the real consequences:

How does this change the technological landscape of the industry?

How does this affect enterprise AI?

And the labor market?

Let’s answer all these questions.

Customization, Enterprise, and Fine-tuning

The first thing that we need to wrap our heads around is that we don’t yet fully understand how these models behave. Thus, we will see nasty things happening when models push ‘too far’ to achieve goals or directly cheat to ‘pretend’ they are meeting the goal in the expected way.

RL-trained models may rewrite tests, lie about intentions, and exhibit other concerning behaviors that result from being too goal-oriented. It is what it is, so instead of crying about it (the resulting models are too powerful to just look back and pretend nothing happened), I predict there will be considerable effort devoted to curbing such behaviors.

Consequently, we’ll see a lot of interest and hype around:

Interpretability and safety training to try to make these models more predictable.

VC interest in the controllability and monitoring layers, aka responsible deployment of AI systems.

While the former will see researchers finding ways to prevent such behaviors, the latter will see products that are focused solely on monitoring other AIs and redacting, notifying, or even blocking such behaviors, especially on enterprise agents.
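To picture what such a monitoring layer might look like, here is a minimal Python sketch. Everything in it is an assumption for illustration: the `Action` record, the `review` function, the `tests/` path rule, and the `sk-` key pattern are hypothetical and do not describe any real product. The idea is simply that every action an agent proposes passes through a checker that can allow it, redact it, flag it for a human, or block it.

```python
import re
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Action:
    """A hypothetical record of something an agent wants to do."""
    tool: str      # e.g. "write_file" or "send_message"
    target: str    # file path or recipient
    content: str   # payload of the action


# Hypothetical pattern for something that looks like an API key.
SECRET = re.compile(r"sk-[A-Za-z0-9]{8,}")


def review(action: Action) -> Tuple[str, Action]:
    """Return a verdict plus the (possibly modified) action."""
    # Block: the agent is trying to rewrite the tests it is graded on.
    if action.tool == "write_file" and action.target.startswith("tests/"):
        return "block", action
    # Redact: strip anything that looks like a secret before it leaves.
    if SECRET.search(action.content):
        cleaned = SECRET.sub("[REDACTED]", action.content)
        return "redact", Action(action.tool, action.target, cleaned)
    # Notify: outbound messages go through, but a human is told.
    if action.tool == "send_message":
        return "notify", action
    return "allow", action


if __name__ == "__main__":
    verdict, _ = review(Action("write_file", "tests/test_api.py", "assert True"))
    print(verdict)
```

Hand-written rules like these are only a starting point. The products described above would presumably rely on other models to judge intent, but the allow, redact, notify, or block decision point stays the same.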

The second big trend will be the emergence of a new type of AI startup called cRL-SaaS startups, or as I like to call it, the Palantirization of AI.
