THEWHITEBOX

TLDR;

This week, we discuss Grok 4’s massive announcement, a super cool robotics launch by HuggingFace, and other news, including what I consider one of the most beautiful research papers published in a while. It shows with striking clarity how promising the new type of training we are doing with reasoning models is as a path to real machine intelligence.

Enjoy!

THEWHITEBOX

Things You’ve Missed By Not Being Premium

Yesterday, we had an extra-large list of interesting news to share: impressive new AI products like a cracked Excel agent and a chores robot, market news from CoreWeave, Meta, and Anthropic, new technology from Google, and Silicon Valley’s latest obsession, AI art, along with the lesson it teaches us about the future of art in general.

FRONTIER MODELS

xAI Shocks the World with Grok 4

On Monday, I said that OpenAI’s deep research models were the best agents on the planet. Well, I was right, but only for two days.

In this fast-paced industry, we now have a new king of AI: Grok 4. xAI has released two versions of this model:

Grok 4: The standard reasoning version

Grok 4 Heavy: A multi-agent system (multiple Grok 4s together)

With this release, xAI no longer offers non-reasoning models (traditional LLMs), which seems the most likely outcome for most AI Labs given the growing importance of RL post-training (more on this in our trend of the week).

Both models are available via the SuperGrok subscription, but you’ll have to pay $300/month to access the Heavy version. Grok 4 is also available through the API.

In a nutshell, the model, released in the late hours of July 9th in an hour-long live stream, is staggeringly powerful, the most significant jump in quality over competitors since OpenAI announced o3 back in December.

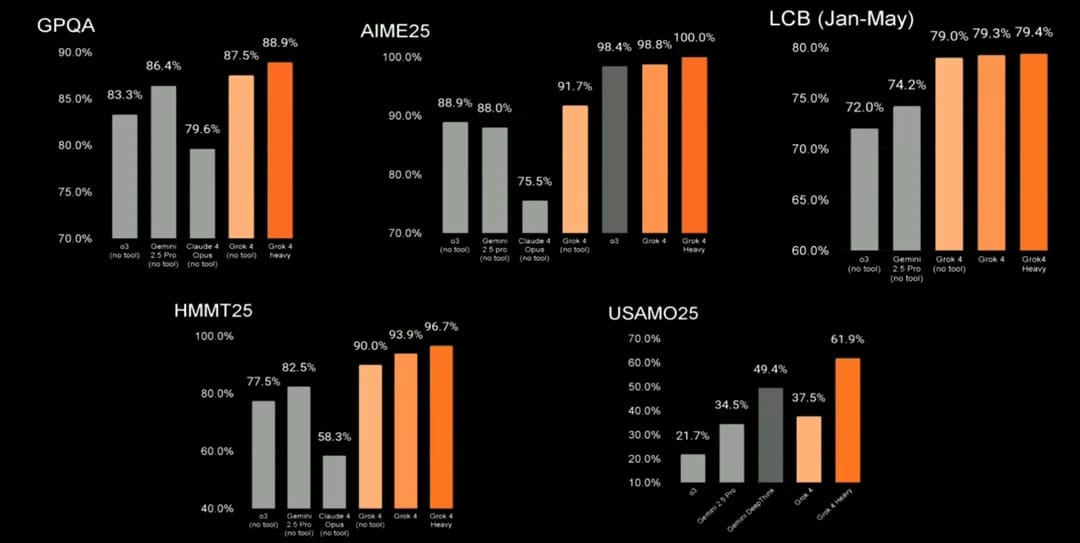

I could go benchmark by benchmark, but let me simplify it: the model is first everywhere.

It’s first in knowledge-based benchmarks like GPQA, first in math (100% on the competition-level AIME benchmark and leading in the USAMO), and everywhere else, basically.

But if we have to highlight particularly impressive results, we have:

A 44.4% on Humanity’s Last Exam, the hardest knowledge-based benchmark out there, where humans score well below most AIs these days, more than doubling OpenAI’s o3 result. That figure comes from the multi-agent system; without it, the score is slightly lower (38%), but it still humiliates the rest.

A score of 73 on Artificial Analysis’ Intelligence Index, slightly ahead of OpenAI’s o3-pro, and that’s without testing Grok 4 Heavy.

A record-setting 15.9% on ARC-AGI 2, probably the hardest non-knowledge-based benchmark out there, roughly doubling the previous best result (Claude 4 Opus). As described by the President of ARC Prize (the organization behind the ARC-AGI benchmarks), Grok 4 is the first model that “breaks through the noise barrier”, meaning its results can’t be attributed to sheer luck, and it is showing “non-zero levels of intelligence”: quite the remark from an organization notoriously skeptical of the usual intelligence claims in AI.

75% on SWE-bench, one of the primary coding benchmarks, again beating the rest of the competitors by quite a margin.

The one relevant benchmark where we don’t yet know whether Grok 4 leads is Aider Polyglot, another primary coding benchmark.

Other points worth considering are the following:

The model is impressively good with audio, capable of handling back-and-forth audio conversations with users much better than models from OpenAI.

According to xAI, there are two secret ingredients:

Increased RL compute (test-time scaling): two orders of magnitude over Grok 2’s total compute budget (100 times more compute). In plain English, the model was not only trained with far more compute, it was also trained to think for longer on tasks… for much longer.

Embedded tool use during training. During RL training (in which the model has to figure out the solution to very challenging tasks), the model was allowed to use as many tools as it needed, whenever it needed them. This made the model not only more capable but also smarter.

The model is notably comfortable discussing uncomfortable topics. It won’t shy away from almost any conversation. As a side effect, please be aware that the model might say things you don’t like, and that the model is quite possibly considerably right-leaning (in the same way that most other frontier models are mostly left-leaning). They call this being “maximally truth-seeking,” but let me be very clear that these models are all, without exception, biased by their trainers, and xAI is a right-leaning AI Lab.

The models aren’t outrageously expensive, but they aren’t cheap either. They are more costly than OpenAI’s, Google’s, or DeepSeek's, but slightly cheaper than Anthropic’s.

So, is Grok 4 now the go-to model for everything?

Although this model is clearly superior, as I’ve said many times, the differences between models on most of the tasks you use them for are marginal, so choosing one or the other mostly comes down to personal taste. Don’t feel forced to use Grok just because ‘it’s the best’.

- If your ideas are progressive or liberal, I can guarantee you’ll be happier with ChatGPT or Claude.

- Conversely, if you are a conservative, you’ll be happier with Grok.

For edge cases, where you really want the best, I suggest using the models via their APIs, as the API versions are geared toward enterprise use cases rather than conversations with people, making them far less biased. In that case, or if you aren’t bothered by political biases, you should optimize for performance: that means Grok 4 or o3-pro if the budget allows it, and o4-mini or Gemini 2.5 Flash for more mundane tasks.

TheWhiteBox’s takeaway:

Lots to discuss here.

First, we have to give credit where credit is due; this company has gone, in 500 days, from zero to the best model, while all of its competitors have been in this game for multiple years.

Focusing on the technicalities, this release highlights the importance of several things we have been commenting on in this very newsletter:

RL training is the key factor right now. Everything revolves around how good your post-training is and, importantly, we aren’t seeing the test-time scaling law (the observation that giving models larger compute budgets to think for longer on tasks yields better models) stagnate at all.

As Elon Musk himself stated, tool use is a key component; the more tools the model can use in the same request, the better the outcomes (<beginning of self-praise>if that sounds familiar, that’s literally what I said on Monday <end of self-praise/>). This highlights the significant opportunity for ‘software agentic tools’, as there’s substantial potential for profit in developing an agentic tool that most AI models utilize in their processes.

They are all playing the same game. There is no technology moat, only a data one. xAI researchers and Elon both mentioned in the stream that they are running out of problems the models can’t yet solve. As we have mentioned in the past, xAI is uniquely positioned in terms of engineering data, as it will have access to data from SpaceX, Tesla, and Neuralink. I once said xAI’s recent valuations were outrageous. Well, in retrospect I might have been very wrong, as these guys look amazing talent-wise and are well positioned thanks to Elon’s infinite money glitch.

The real frontier: perception. As Elon also mentioned, AIs seem to be reaching a limit in terms of problem-solving data. Soon, there will not be a textual problem they can’t solve (even if they still rely heavily on memorization, nothing prevents these labs from feeding them every single data point on the planet anyway, so why care about that distinction at this point?). Thus, the real intelligence test will come from reality: can these models serve as the brains of robots like Tesla Optimus deployed in the physical world? Right now, the answer is categorically no, but the fact that we are already discussing this milestone is a testament to how much AI has evolved.

VENTURE CAPITALISM

Mistral in talks to raise $1 Billion

A year after its last fundraising round, Mistral is in talks to raise $1 billion to fuel the development of new models and applications. The company is also in talks to raise debt.

TheWhiteBox’s takeaway:

I just don’t know what to say about mid-tier AI Labs, because I really don’t know what to think about them.

Labs like Mistral, Cohere, et al. are great companies, but being ‘great’ isn’t enough when you are competing with monsters like OpenAI, xAI, or Google, with Meta and its superintelligence team joining the fray too.

I believe that the only reason these two companies are allowed to live is that they are national champions (Mistral for France, Cohere for Canada, in the same way that SSI is for Israel). Seen as a matter of national security, these labs enjoy easier access to liquidity.

Would I invest in Mistral today? Yes, they have a great team and great positioning in Europe.

Would I invest otherwise? Hell no!

AI LEGISLATION

SB 53 Continues to Move Forward

California’s SB 53 (2025-26) continues to advance, with some recent amendments, in what aims to be the first significant state-level AI legislation in the US.

It’s an AI-governance bill that now rolls three big ideas into a single package:

mandatory transparency for “frontier-model” developers,

robust whistle-blower protections,

and the blueprint for a public-sector super-cloud called CalCompute.

The bill’s heart is the Transparency in Frontier Artificial Intelligence Act. Any “large developer” that trains a foundation model using more than 10²⁶ FLOPs (roughly a nine-figure cloud bill and in the range of current frontier models, although I believe models like Grok 4 are probably already an order of magnitude above it) must publish a detailed Safety & Security Protocol before deployment, keep it updated within 30 days of any material change, and release a public transparency report for every new or substantially modified model.
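To get a feel for where the 10²⁶ FLOP line sits, here is a back-of-the-envelope sketch. It assumes the common 6·N·D rule of thumb for dense-transformer training compute (N parameters, D training tokens) plus made-up hardware figures (about 1 PFLOP/s per GPU, 40% utilization, $2 per GPU-hour). None of these numbers come from the bill or from any lab, and the 400B-parameter model is hypothetical.

```python
# Back-of-the-envelope check against SB 53's 10^26 FLOP threshold.
# ASSUMPTION: training compute ~= 6 * N * D (a common rule of thumb for dense
# transformers). Hardware and price defaults are illustrative guesses.

SB53_THRESHOLD_FLOPS = 1e26


def training_flops(params: float, tokens: float) -> float:
    """Approximate training compute with the 6 * N * D rule of thumb."""
    return 6.0 * params * tokens


def is_covered(params: float, tokens: float) -> bool:
    """True if the estimated training compute exceeds 10^26 FLOPs."""
    return training_flops(params, tokens) > SB53_THRESHOLD_FLOPS


def cloud_cost_usd(flops: float,
                   peak_flops_per_gpu: float = 1e15,  # assumed ~1 PFLOP/s per GPU
                   utilization: float = 0.4,          # assumed 40% of peak achieved
                   usd_per_gpu_hour: float = 2.0) -> float:  # assumed rental price
    """Rough rental cost of a run needing `flops` floating-point operations."""
    gpu_seconds = flops / (peak_flops_per_gpu * utilization)
    return gpu_seconds / 3600 * usd_per_gpu_hour


if __name__ == "__main__":
    # Hypothetical model: 400B parameters trained on 15T tokens.
    flops = training_flops(400e9, 15e12)
    print(f"{flops:.2e} FLOPs, covered by SB 53: {is_covered(400e9, 15e12)}")
    print(f"renting exactly 1e26 FLOPs: ${cloud_cost_usd(SB53_THRESHOLD_FLOPS):,.0f}")
```

Under those assumptions, the hypothetical model lands at 3.6 × 10²⁵ FLOPs (below the line), while a run of exactly 10²⁶ FLOPs costs roughly $139 million to rent, consistent with the nine-figure estimate. Change the utilization or the price per GPU-hour and the bill moves proportionally.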

Those reports have to spell out risk-assessment methods, third-party audit results, mitigation steps, and the developer’s rationale for shipping a model that still carries a “catastrophic-risk” profile (death or $1 billion-plus damage). Developers must also notify the Attorney General of any “critical safety incident” within 15 days, and they face civil penalties, enforced by the AG, for false or misleading statements.

Moving on, a second pillar extends California’s whistle-blower law to AI.

Employees, contractors, or even unpaid advisers who spot catastrophic-risk issues or violations of the Act can report to the AG, federal authorities, or internal channels without fear of retaliation. SB 53 forces firms to post annual rights notices, maintain an anonymous reporting portal that gives monthly status updates, and shifts the burden of proof to the company in any retaliation lawsuit. Courts may award attorneys’ fees and grant fast injunctive relief to stop ongoing reprisals.

Finally, the bill orders the Government Operations Agency to convene a 14-member CalCompute consortium (academics, labor groups, public-interest advocates, and AI technologists) to design a publicly owned cloud cluster housed, if feasible, at the University of California.

The consortium must deliver a complete framework (governance model, cost estimates, workforce plan, and access policy) by 1 January 2027, after which it dissolves.

CalCompute’s mission is to “democratise” high-end compute so that researchers, smaller companies, and public agencies can pursue ‘safe, ethical, equitable and sustainable’ AI. The cluster will only launch once the Legislature appropriates funds.

TheWhiteBox’s takeaway:

The bill contains some very concerning aspects and others that are interesting, but it’s essential to note where this is coming from. Scott Wiener, the state senator behind this bill, is closely associated with AI doomers, individuals like Dan Hendrycks who sincerely believe AI poses an existential risk to humanity.

Nonetheless, SB 53 is a softened version of the original SB 1047, which Governor Newsom eventually vetoed. But it is still legislation proposed by a pessimistic, hawkish cohort.

Personally, I believe AI doomers think AI will ‘kill us all’ just because they are projecting what they would do if they were that AI. But as Anthropic has shown us multiple times, the more you optimize something to be safe, the more unsafe it becomes.

It’s very similar to telling a kid that they could do something and then immediately telling them not to do it. In reality, you’ve just given them the idea and made it much more likely they’ll do it. Much of the AI safety paradigm resembles this analogy.

Furthermore, they continue to propose arbitrary compute budgets as ‘ok, here’s when AIs become dangerous.’ No, that’s not true. You can build safe AIs with significantly larger compute budgets and dangerous AIs with significantly smaller budgets. Legislation was never meant to be arbitrary; therefore, this is unacceptable.

They do have some positive things, though. Protection for whistleblowers is a good thing; if these people see something that is really bad, they should be protected to allow them to speak out.

Additionally, public compute, which could be used to train and run models that support government services and serve as a potential source of income for a government with rampant deficits, is also a good idea.

However, I worry about the talent in charge of running those clusters (public management is rarely well-executed), but placing this cluster at the University of California makes me feel a lot better about it. I’m okay with having public clouds run by public servants as long as these are profitable and not an enormous waste of money.

ROBOTICS

HuggingFace Launches $299 Trainable Robot

Hugging Face, valued at around $4.5 billion and boasting over 10 million users, has released Reachy Mini, an 11‑inch, open‑source desktop humanoid priced at $299. Designed to democratize AI-driven robotics, it contrasts sharply with industrial and humanoid robots that typically cost tens of thousands of dollars.

Despite its modest size, the robot packs robust hardware: six degrees of freedom in head movement, full-body rotation, expressive antennas, a wide-angle camera, microphones, and a speaker. The wireless version includes a Raspberry Pi 5 and onboard battery, making it fully autonomous.

In a nutshell, an affordable, programmable robot for anyone who wants to train robots.

TheWhiteBox’s takeaway:

Absolutely fantastic project, a complete must-buy if you want to introduce kids to coding, AI, and robotics in a fun, interactive way. I care about the future of our kids, and I’ve already ranted recently on how broken modern education is, completely unprepared for the AI Wave.

These types of projects should not merely be recommended but compulsory in school curricula, because they get kids hooked not only on using AI and programming robots but also on getting their hands dirty, running into problems, and solving them.

People often ask me what the star skill for a human in the AI era is, and my answer is always, always, a bias for action.

In an era where AI allows you to ‘just do things,’ those who do things are those who succeed.

Getting your kids hooked on building with AI and robots is the shortest way to train a successful adult in the era that’s coming our way.

Does this project justify HuggingFace’s insane valuation?

Not even close, but it creates enormous goodwill around a company that feels like a superhero amongst profit-only villains, and it shows that some in this space do want to use AI for more than just making money.

TREND OF THE WEEK

A Beautiful Research into Generalization

One of the most recent viral papers is actually beautiful, as understanding its conclusions clarifies why the AI industry has become so obsessed with the Reinforcement Learning (RL) paradigm that has created, among other things, the Grok 4 model discussed above.

Published by a group of researchers from both the US and Hong Kong, it shows how AI models develop as we post-train them (making them better at particular areas) and how different techniques make all the difference, revealing why RL has great potential to be an actual precursor to real machine intelligence.

What Does it Really Mean to Train an AI?

As I have mentioned multiple times, there are essentially two primary methods for training AI models: imitation and exploration.

The first trains AI models by making them imitate data (95% or more of all AIs in existence were trained this way),

and the second trains models by letting them find a way (unknown to the model, at least) to solve a problem, always assuming that the model doing the exploring has a better-than-chance probability of solving the problem (otherwise it’s just guessing, which means that training would take forever).
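Why does it matter so much that the model can already solve the problem some of the time? A quick way to see it, assuming each attempt succeeds independently with a fixed probability p (a simplification), is that the model needs on average 1/p attempts before it earns a single reward, and k attempts all fail with probability (1 − p)^k. A minimal sketch:

```python
import math


def prob_at_least_one_success(p: float, k: int) -> float:
    """Chance that at least one of k independent attempts is correct."""
    return 1.0 - (1.0 - p) ** k


def expected_attempts_to_first_success(p: float) -> float:
    """Mean of a geometric distribution: on average 1/p tries per reward."""
    if p <= 0:
        return math.inf  # a model that never succeeds never gets a reward
    return 1.0 / p


if __name__ == "__main__":
    for p in (0.5, 0.05, 1e-6):
        print(p, prob_at_least_one_success(p, 16), expected_attempts_to_first_success(p))
```

With p = 0.5, sixteen attempts almost guarantee a reward; with p = 1e-6 the model would need about a million attempts per reward, which is the "training would take forever" case.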

And where do Large Language Models, or Generative AI models in general, fall? Well, it’s a mix.

They always start with imitation learning at scale, imitating trillions of words of data. This gives us the base model. However, this is not the model you use, as the ones that hit the market always go through post-training.

Post-training also comes in two flavors:

Supervised Fine-tuning (SFT): An imitation learning technique where we prepare a reasonably large dataset that the model has to imitate again. However, this time, the dataset is of extremely high quality and biased toward capabilities like instruction following, refusal of harmful prompts, step-by-step reasoning (chain of thought), and particular domains the AI Lab wants to make sure the model is good at.

Reinforcement Learning: An exploration-based, trial-and-error training regime where only the model’s final answer is checked against a known solution, so the model has to find its own way there, as explained earlier.
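In practice, the RL side of this is usually implemented with an automatic checker that compares the model’s final answer with the known solution and ignores everything that came before it. Here is a minimal sketch of such an outcome-only reward; the `Answer:` format and both function names are hypothetical, and real pipelines use far more robust answer parsing.

```python
import re
from typing import Optional


def final_answer(completion: str) -> Optional[str]:
    """Return the text after the last 'Answer:' marker (hypothetical format)."""
    matches = re.findall(r"Answer:\s*(.+)", completion)
    return matches[-1].strip() if matches else None


def outcome_reward(completion: str, ground_truth: str) -> float:
    """1.0 if the final answer matches the known solution, else 0.0.
    The reasoning steps before the answer are never graded."""
    return 1.0 if final_answer(completion) == ground_truth.strip() else 0.0


if __name__ == "__main__":
    # Two different reasoning paths, same correct answer: same reward.
    print(outcome_reward("2 + 2 is 4.\nAnswer: 4", "4"))
    print(outcome_reward("Count up: 1, 2, 3, 4.\nAnswer: 4", "4"))
```

The point of the design is what it leaves out: nothing in the reward says how to get to the answer, so the model is free to reach it however it can.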

While both sound similar, the semantics matter here. And grasping the difference will put your understanding of AI well ahead of most.

While both techniques may train on the same problems, their approach is fundamentally different (a point misunderstood even by many researchers): the former literally guides the model from the question to the solution, including all intermediate steps.

On the other hand, the latter only checks the model’s final answer against the ground-truth solution and gives it a pat on the back when it’s right, letting the model decide for itself how to get there. Same end result, two totally different ways of getting there.
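The difference can be made concrete with a toy example. Here, a "model" is just a probability distribution over four possible solution paths to one problem, two of which reach the right answer. The SFT update pushes the model toward the one demonstrated path; the RL update (plain REINFORCE, a standard policy-gradient method used here as a stand-in for the far more elaborate algorithms labs actually run) samples paths from the model itself and reinforces whichever ones reach the right answer. All numbers are arbitrary illustration choices, not anything from the paper.

```python
import math
import random


def softmax(logits):
    """Turn raw scores into probabilities (numerically stable)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Toy "policy": the model's probabilities over 4 candidate solution paths.
# Paths 0 and 1 both reach the correct final answer; paths 2 and 3 do not.
CORRECT = {0, 1}
BASE_LOGITS = [1.0, 0.0, 0.5, 0.0]  # the base model already leans toward path 0
DEMO = 1                             # the SFT dataset happens to demonstrate path 1


def sft_step(logits, target, lr=0.5):
    """Imitation: gradient ascent on log p(target); the gradient is onehot - probs."""
    probs = softmax(logits)
    return [logit + lr * ((1.0 if i == target else 0.0) - p)
            for i, (logit, p) in enumerate(zip(logits, probs))]


def rl_step(logits, rng, lr=0.5):
    """Exploration (REINFORCE, no baseline): sample a path from the model itself
    and reinforce it only if it reaches the correct answer."""
    probs = softmax(logits)
    a = rng.choices(range(len(probs)), weights=probs)[0]
    reward = 1.0 if a in CORRECT else 0.0
    return [logit + lr * reward * ((1.0 if i == a else 0.0) - p)
            for i, (logit, p) in enumerate(zip(logits, probs))]


def train_sft(steps=300):
    logits = list(BASE_LOGITS)
    for _ in range(steps):
        logits = sft_step(logits, DEMO)
    return softmax(logits)


def train_rl(steps=300, seed=0):
    rng = random.Random(seed)
    logits = list(BASE_LOGITS)
    for _ in range(steps):
        logits = rl_step(logits, rng)
    return softmax(logits)


if __name__ == "__main__":
    print("base:", [round(p, 3) for p in softmax(BASE_LOGITS)])
    print("SFT: ", [round(p, 3) for p in train_sft()])
    print("RL:  ", [round(p, 3) for p in train_rl()])
```

In this toy setup, SFT ends up putting nearly all probability on the demonstrated path 1 and suppresses path 0, even though path 0 was also correct and was the base model’s favorite. RL simply drains probability from the wrong paths and never tells the model which correct path to take.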

But wait a second, if SFT shows the model exactly how to solve the problem, why should we bother using RL and other exploration techniques that seem far more inefficient?

And the answer, the crux of today’s research, is generalization.

As you know if you’re a regular reader of this newsletter, fine-tuning (retraining a model) always comes at a cost: if you train a model extensively on a new domain, you risk losing performance in other areas.

Of course, that should not happen if the model is really “intelligent.”

If the model is really abstracting the patterns instead of memorizing the solutions, good math priors should transfer to other sciences, as math is the foundation of all STEM fields.

In the film Margin Call, when asked why a rocket scientist ended up in the risk management department of a large investment bank, Peter Sullivan responds:

“Well, it’s all numbers really. Just changing what you're adding up. And, to speak freely, the money here is considerably more attractive.”

Put simply, a model that truly understands mathematics should be able to generalize and apply its knowledge to physics or chemistry, or at the very least, be able to apply mathematical knowledge to those areas while learning them.

And here’s where things get murky and set the stage for one of the most important things you can learn from AI training:

The main takeaway from the research is that imitation learning, while more convenient, kills generalization; it kills intelligence, understood as the ability to adapt “known knowns” to the unknown.

And this makes all the difference.

Generalization is Intelligence.

While imitation learning was the go-to training regime, even at the frontier, until very recently, this research provides concrete evidence that RL generalizes well to different domains, offering a novel explanation for why AI Labs are so interested in this type of training.

To prove this, they performed the following test:

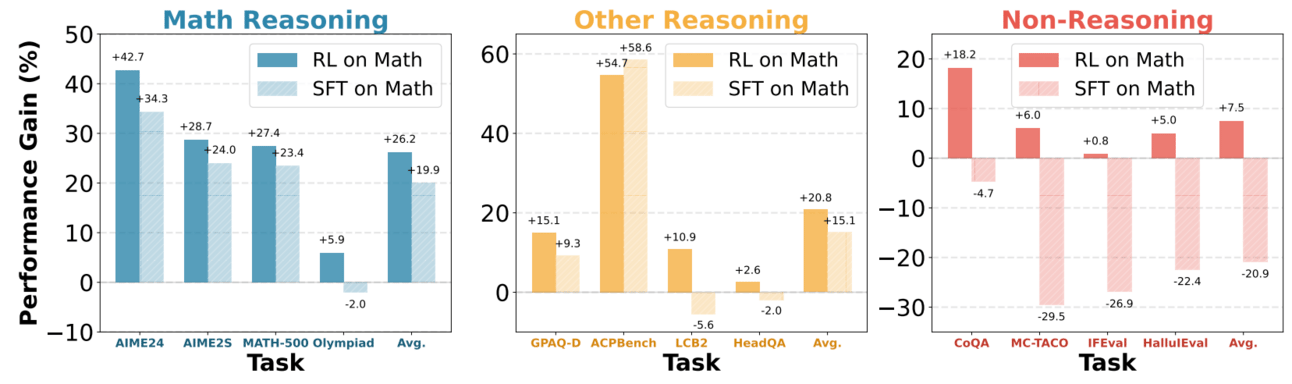

Using the same base model and fine-tuning dataset, so that the training technique (imitation vs. RL) was the only difference and any gap in results could be attributed to the fine-tuning method, they trained two models and checked how each performed in areas it wasn’t explicitly trained for (i.e., training on maths, testing on sciences or essay writing).

And the results were clear as day. After evaluating the math-fine-tuned models on benchmarks beyond math:

The RL model improved performance in other areas, including those apparently unrelated to math.

In contrast, the SFT model showed mixed results. While it showed moderate improvement in math-related domains (sciences), its performance severely declined in non-reasoning domains.

It must be said that they did not test whether the differences were statistically significant, although the results are very compelling.
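For the curious, here is what such a check could look like. A common option, assuming you have per-question scores for two models on the same benchmark questions, is a paired permutation (sign-flip) test: if the fine-tuning method made no difference, each question’s score difference would be equally likely to have either sign. This is a generic sketch, not something the authors ran.

```python
import random


def paired_permutation_test(a, b, n_perm=10_000, seed=0):
    """Two-sided paired sign-flip permutation test.

    a, b: per-question scores (e.g. 1 = correct, 0 = wrong) of two models on
    the SAME benchmark questions. Returns an estimated p-value for the null
    hypothesis that the two models perform equally well.
    """
    if len(a) != len(b) or not a:
        raise ValueError("need two non-empty score lists of equal length")
    diffs = [x - y for x, y in zip(a, b)]
    observed = abs(sum(diffs)) / len(diffs)
    rng = random.Random(seed)
    at_least_as_extreme = 0
    for _ in range(n_perm):
        # Under the null, each question's difference could have gone either way,
        # so flip each sign with probability 1/2 and recompute the mean gap.
        flipped = sum(d if rng.random() < 0.5 else -d for d in diffs) / len(diffs)
        if abs(flipped) >= observed:
            at_least_as_extreme += 1
    # The +1 terms keep the estimate away from an impossible p-value of exactly 0.
    return (at_least_as_extreme + 1) / (n_perm + 1)


if __name__ == "__main__":
    same = [1, 0, 1, 1, 0]
    print(paired_permutation_test(same, same))          # → 1.0 (no difference at all)
    print(paired_permutation_test([1] * 30, [0] * 30))  # one model always wins
```

Identical score lists give p = 1.0, while a model that is right on 30 questions its rival gets wrong comes out far below 0.01. Real benchmark comparisons land somewhere in between, which is exactly why the test is worth running.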

In a nutshell, the same model trained on the same dataset exhibits improved generalization capabilities when fine-tuned using exploration-based techniques. In contrast, it regresses in non-mathematical areas when trained on mathematics using imitation learning.

Put another way, we can conclude that:

Imitation learning lets the model rote-imitate the dataset, potentially forgetting relevant information in the process; the model doesn’t need that information because the solution process is laid out for it, so why bother using previous learnings?

On the other hand, the exploration-based model, as it’s forced to find the solution to the problem, is much more likely to base the exploration on known priors (things it knows).

Intuitively, you can frame this from the perspective of human learning. Picture yourself learning with the two methods. In the former, you are given a solution, so you don’t need to recall anything, just imitate. With the latter, you aren’t given the solution process, so you'd better use your “known knowns” to solve the problem.

In a way, one is a lazy way of learning that requires low cognitive effort, just rote imitation, while the other requires actual “intelligence” which is nothing but applying learned abstractions to new data.

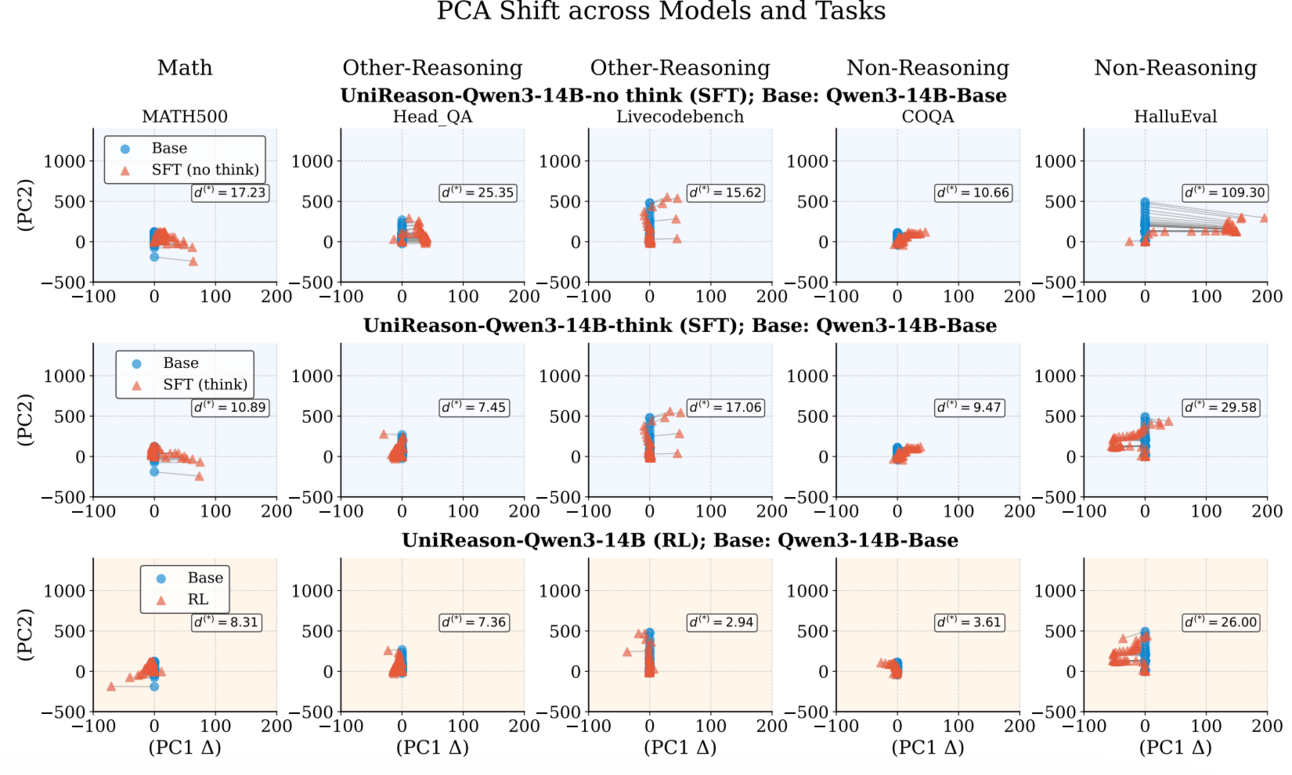

Furthermore, they backed up the thesis using PCA (Principal Component Analysis), a dimensionality-reduction technique that, here, projects the model’s latent activations onto the two directions of highest variance.

That’s a gibberish-sounding paragraph if you’ve ever seen one, I know. But let me simplify:

They analyze the model’s internal structures to see whether they changed a lot after fine-tuning. If the model’s internal structure varies significantly, we assume the technique isn’t effectively aiding generalization but is instead steering the model too far into that domain. In other words, the model isn’t finding new ways to use what it knows to adapt to a new domain; it’s learning everything “from scratch” again and forgetting previous learnings in the process.

Conversely, if the internal structures remain ‘similar’ despite the model’s outputs improving, it suggests that the model’s understanding of maths and sciences overall has not changed that much despite the model being better at maths, indicating that it’s just learning the new stuff that matters and keeping most of the model’s correct beliefs intact, only adapting when learned priors aren’t enough to solve the problem.

As you can see below, the SFT model (the one trained by imitation, shown in the first two rows) displays a very different structure, suggesting that SFT training changed the model a lot.

In contrast, the RL model (third row) still shows an internal structure very similar to the base model’s (despite its improved performance), showing that you can train models to be better at a new domain without modifying the model’s base structure too much, in the same way that you don’t have to redefine all your beliefs about maths whenever you study a new domain like physics (2+2 = 4 in both domains, so why bother relearning what you already consider to be true?).

To summarize, the key abstractions common across all sciences aren’t challenged by new maths; you’re just adapting your brain to new knowledge without changing what you already know works.
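Here is a minimal sketch of that kind of analysis with NumPy, under stated assumptions: both models’ activations come from the same layer on the same prompts, as arrays of shape (prompts, hidden size); PCA is fit on the base model only; and the "drift" score (mean displacement in the base model’s 2D PCA plane divided by the spread of the base points) is an illustrative metric of my own, not the paper’s. The random arrays are synthetic stand-ins for real activations.

```python
import numpy as np


def fit_pca(x: np.ndarray, k: int = 2):
    """Mean and top-k principal directions (as rows) of the rows of x."""
    mean = x.mean(axis=0)
    # Rows of vt are principal directions, ordered by explained variance.
    _, _, vt = np.linalg.svd(x - mean, full_matrices=False)
    return mean, vt[:k]


def project(x: np.ndarray, mean: np.ndarray, components: np.ndarray) -> np.ndarray:
    """Coordinates of each row of x in the PCA plane."""
    return (x - mean) @ components.T


def pca_drift(base_acts: np.ndarray, tuned_acts: np.ndarray, k: int = 2) -> float:
    """How far each prompt's point moves in the BASE model's PCA plane after
    fine-tuning, relative to the spread of the base points (0 = no change)."""
    mean, comps = fit_pca(base_acts, k)
    zb = project(base_acts, mean, comps)
    zt = project(tuned_acts, mean, comps)
    spread = np.linalg.norm(zb - zb.mean(axis=0), axis=1).mean()
    return float(np.linalg.norm(zt - zb, axis=1).mean() / spread)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    base = rng.normal(size=(200, 64))  # synthetic stand-in: 200 prompts x 64 dims
    # Stand-in for a light-touch update: small perturbation of the same geometry.
    light = base + 0.05 * rng.normal(size=base.shape)
    # Stand-in for a heavy update: a random linear remapping of the representation.
    heavy = base @ rng.normal(size=(64, 64)) / 8
    print(f"light drift: {pca_drift(base, light):.3f}")
    print(f"heavy drift: {pca_drift(base, heavy):.3f}")
```

Fitting PCA on the base model alone is the deliberate choice here: it fixes one coordinate system, so any movement you see is the fine-tuned model departing from the base model’s structure rather than an artifact of refitting the axes.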

In short, generalization is intelligence, and the new training regime dominating the AI scene these days (as illustrated by Grok 4), Reinforcement Learning, is a promising way to develop real intelligence in machines.

Isn’t that, well, a big, beautiful research?

THEWHITEBOX

Join Premium Today!

If you like this content, by joining Premium, you will receive four times as much content weekly without saturating your inbox. You will even be able to ask the questions you need answers to.

Until next time!

For business inquiries, reach out to me at [email protected]