THEWHITEBOX

TLDR;

Welcome back! This week, we have another long tail of news to talk about: powerful new models, both large and small; a shocking announcement from Micron, a key AI player; Google’s release of Gemini 3 Pro Deep Think, allegedly the most powerful model on the planet, which I’ve compared to GPT-5.1 Pro to test that claim; analysis-heavy insights on advanced packaging, the GPU trade, and Intel; and Anthropic’s pack of new features for agents.

Enjoy!

THEWHITEBOX

Things You’re Missing Out On

On Wednesday, we reviewed Amazon’s new flashy AI servers, an essay against space data centers, products from Runway and anti-slop prompts by Cursor, releases by Mistral and DeepSeek with very different outcomes, as well as news in markets, including a potential Big Lab IPO.

FRONTIER RESEARCH

DeepSeek v3.2 Speciale, Dirt-cheap frontier performance

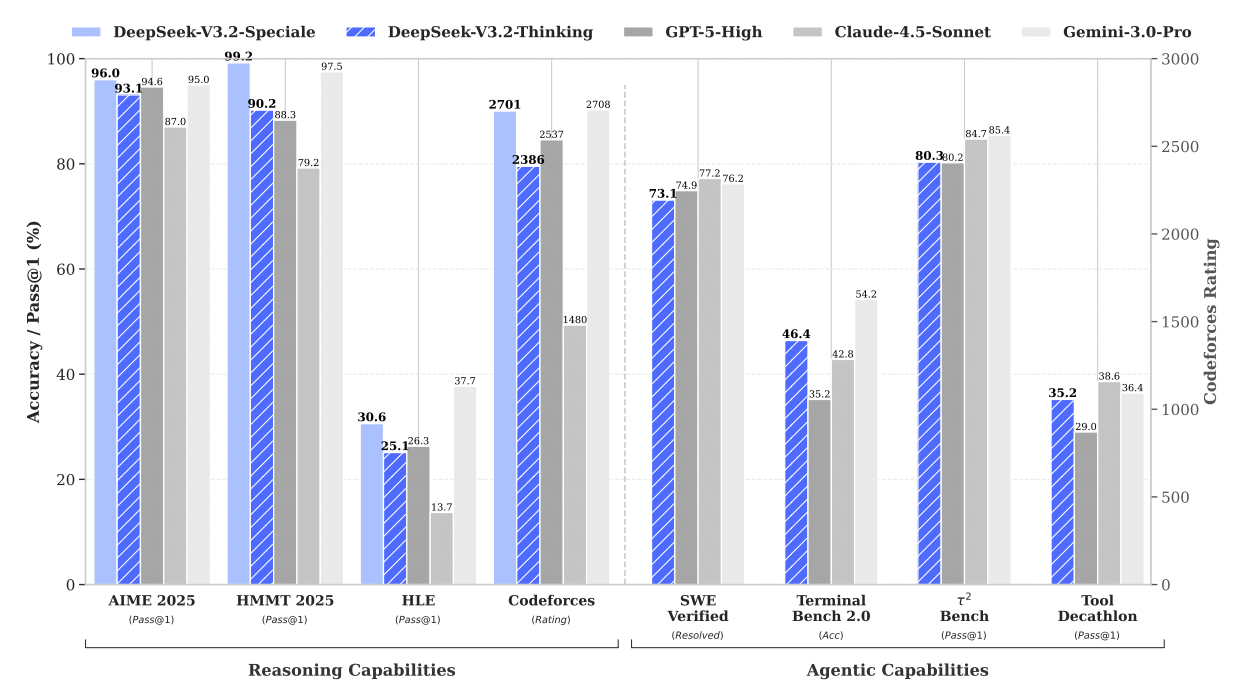

DeepSeek is back. They have released two new models, DeepSeek v3.2 Thinking and DeepSeek v3.2 Speciale (an even more ramped-up, almost experimental version), and they are not only frontier-level in performance but also an order of magnitude cheaper than Western models.

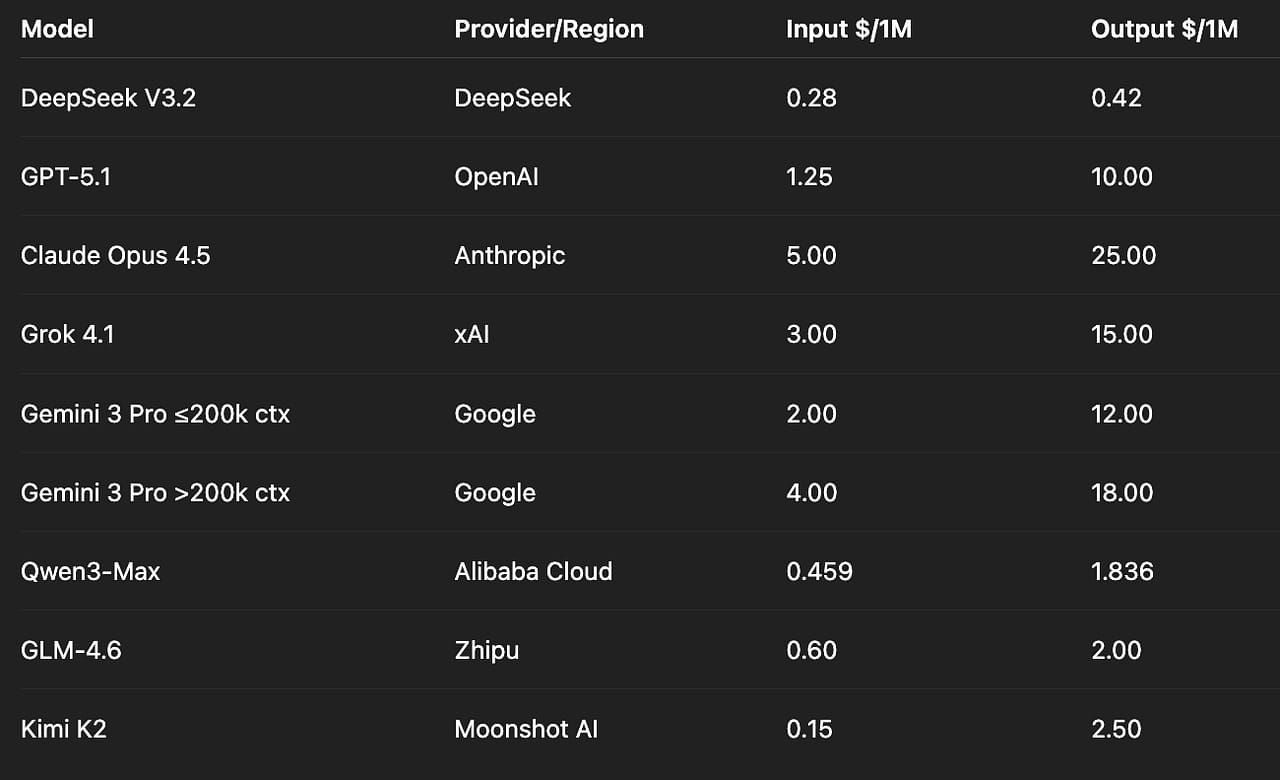

Price comparison.

The secret is a technique called DeepSeek Sparse Attention, which I believe will soon be adopted by most Labs due to its clear cost savings. But how does it work?

Well, I get into much greater detail on Medium, which you can read for free here, but the point is that it taps into one of a Large Language Model’s most inefficient behaviors: global attention.

It’s like a blessing and a curse. Underneath your favorite model lies an “attention mechanism”. This computational process makes words “talk to each other” and update themselves with that contextual information (e.g., in “The Black Pirate”, ‘pirate’ attends to ‘black’ and thus absorbs the attribute of being black).

The problem is that models aren’t opinionated as to what to pay attention to, meaning each word has to pay attention to every single other preceding word in the sequence, which causes computational requirements to explode as the length of the input sequence to the model (what you give to ChatGPT) grows.

To solve this, DeepSeek has introduced a token selector that pre-emptively selects good word candidates and performs attention only on them, dramatically reducing costs (e.g., for the sequence “The Black Pirate was, uhm, funny”, the token selector may identify ‘uhm’ as irrelevant, so the attention mechanism ignores it). And it works.
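To make the idea concrete, here is a minimal pure-Python sketch of "select first, attend second" for a single query token. It is an illustration under loud assumptions, not DeepSeek's implementation: the selector scores are hand-picked numbers, the vectors are toy values, and the names `attend`, `sparse_attend`, and `attention_pairs` are made up for this example.

```python
import math

def softmax(scores):
    # Numerically stable softmax over a list of floats.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def attend(query, keys, values):
    # Ordinary attention: score every key, then take a weighted average of the values.
    weights = softmax([dot(query, k) / math.sqrt(len(query)) for k in keys])
    return [sum(w * v[d] for w, v in zip(weights, values)) for d in range(len(values[0]))]

def sparse_attend(query, keys, values, selector_scores, top_k):
    # Hypothetical token selector: keep the top_k tokens by a cheap score,
    # then run ordinary attention on that subset only.
    ranked = sorted(range(len(keys)), key=lambda i: selector_scores[i], reverse=True)
    keep = sorted(ranked[:top_k])
    return attend(query, [keys[i] for i in keep], [values[i] for i in keep]), keep

def attention_pairs(n_tokens, top_k=None):
    # Count query-key pairs under causal attention; with top_k, each query sees at most top_k keys.
    if top_k is None:
        return n_tokens * (n_tokens + 1) // 2
    return sum(min(i + 1, top_k) for i in range(n_tokens))

tokens = ["The", "Black", "Pirate", "was", "uhm", "funny"]
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, 0.5], [0.0, 0.0], [1.0, 0.5]]  # toy vectors
values = keys
query = [1.0, 1.0]
selector_scores = [0.2, 0.9, 0.8, 0.4, 0.05, 0.7]  # made-up relevance scores; 'uhm' ranks last

out, kept = sparse_attend(query, keys, values, selector_scores, top_k=5)
print([tokens[i] for i in kept])  # the tokens that survive selection
print(attention_pairs(100_000), attention_pairs(100_000, top_k=2048))
```

The last line is the part that matters for cost: the dense count grows with the square of the sequence length, while the sparse count grows roughly linearly once the sequence is longer than `top_k`.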

TheWhiteBox’s takeaway:

The most significant consequence of DSA is that it may initiate a major token price deflationary event globally, because it really represents a dramatic cost reduction.

Western AI labs, which are currently operating on models that are dramatically less efficient and burning massive amounts of venture capital, will be compelled—forced—to lower their token prices to remain competitive. This threatens to expose the underlying unsustainability of their high-cost business models.

Strategically, as I always mention, Chinese open-source efforts are an obvious counter to US GPU export controls. Since Chinese companies face limits on acquiring the most powerful hardware, they are instead innovating at the software and algorithmic levels, not only to build better models but also to make Western Labs’ lives much harder by making the current high-cost model of Western AI financially untenable.

With token prices getting ever cheaper, and with the capital side (building the data centers) not only failing to become more affordable but actually getting more expensive on a nominal basis, the gap between capital expenditures and real revenues will only widen (i.e., it will be harder for Hyperscalers like Microsoft or Labs like OpenAI to turn a profit on these investments), placing additional pressure on them.

At this point, most investors are already uncomfortable with pricier financial rounds, given that OpenAI and Anthropic are valued at $500 billion and $300 billion respectively, so an even less likely route to profitability may push US AI firms faster toward relying heavily on high-risk debt financing (corporate bonds) as early as 2026.

Some Hyperscalers like Meta, Oracle, and Google have started issuing corporate bonds, but more due to favorable economics than because of necessity (except for Oracle, which is free-cash-flow negative). 2026 might make it a necessity.

Of course, we both know what this all really means: 2026 is the year of AI Lab IPOs.

CODING AGENTS

First Top SLM Trained on AMD’s GPUs?

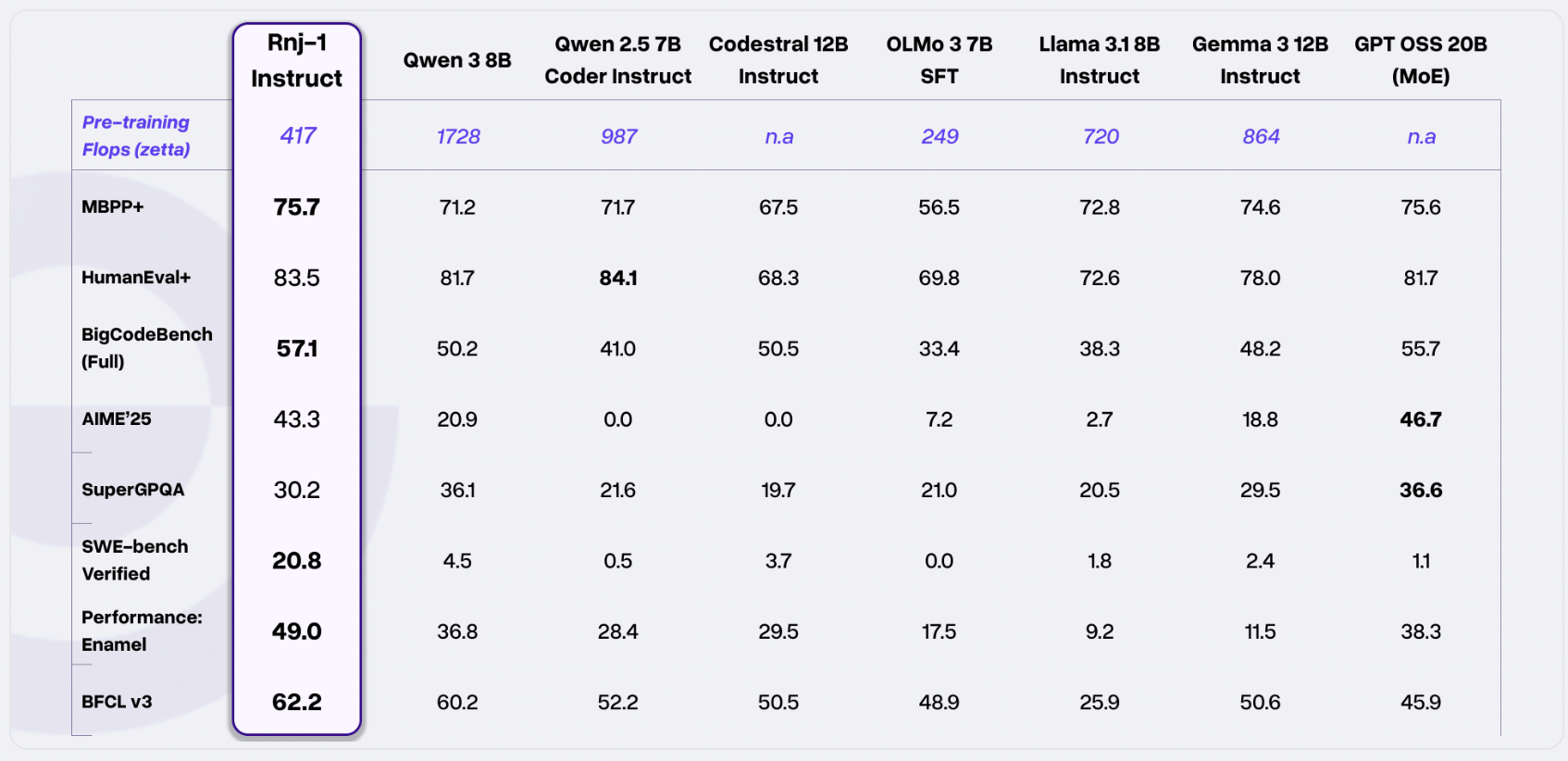

We have a new best-in-class small model for coding and agentic tasks. Rnj-1 (pronounced Range-1, and named in honor of legendary mathematician Ramanujan) is an open-weight 8B language model pair (Base and Instruct) whose architecture roughly follows the design of Google’s Gemma 3.

It is positioned as a strong open model for code, math, scientific reasoning, and tool use, matching or beating similarly sized models and sometimes larger ones on those tasks.

It seems to be very maths/coding/agents-focused, so on knowledge tasks it’s probably inferior to Mistral’s Ministral models, which we covered in the Premium news post.

Their results in coding, especially on the SWE-bench Verified benchmark, the most often cited benchmark by AI Labs to showcase coding capabilities, are pretty impressive, showing more than an order-of-magnitude improvement over similar-sized models.

But the most impressive thing about this model isn’t the model itself.

TheWhiteBox’s takeaway:

Two things caught my eye. First, the amount of training it required was way less than that of other models, despite being pretrained from scratch. They quote 417 zettaFLOPs of training, meaning the model required 417×10^21 floating point operations (i.e., math operations) to train, or 417 sextillion operations.

That sounds like a lot, right? It’s a lot of zeros. Well, it’s not. For starters, it is more than two times less than the average cost to train similar-sized models, so this model was already cost-efficient at its level.

But with frontier models having crossed the 10^26 FLOP mark with Grok 4 and the 10^27 FLOP mark with examples like Gemini 3 Pro, this model required roughly 240 times less compute than the former threshold and roughly 2,400 times less than the latter. Therefore, despite its strong performance, it’s actually minute as things stand today.

In fact, in a perfect world (a world where GPUs in a training cluster run at 100% Model FLOP Utilization, or MFU, which, as we saw on Monday, is not possible), this model would have been trained by xAI’s 200,000-H100-equivalents-strong Colossus 2 data center in Memphis in… 1,054 seconds.

Calculation: Each H100 offers 1,979 TeraFLOPs of theoretical peak compute at BF16 precision. 200k of them give you 3.958×10^20 FLOPs per second of compute power. We need 417×10^21 training FLOP, so if we divide the latter by the former, we get 1,054 seconds, or ~18 minutes.

But even if we assumed 30% MFU, a much more reasonable number for your average AI training workload, it would still require only about an hour to train on such a cluster. And if we take their own quoted MFU of 50%, Elon Musk’s cluster would have taken around 35 minutes to get through this model’s training.
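For readers who want to rerun the back-of-the-envelope math, here is a small sketch. The three constants are the figures quoted in the text; the function name `training_seconds` is just an illustrative choice.

```python
TRAINING_FLOP = 417e21      # 417 zettaFLOP of training compute, as quoted in the text
H100_PEAK_FLOPS = 1979e12   # per-GPU BF16 peak figure used in the text, in FLOP per second
N_GPUS = 200_000            # H100-equivalents assumed for the cluster

def training_seconds(mfu):
    # Wall-clock seconds = total work / (cluster peak throughput * utilization).
    cluster_flops = N_GPUS * H100_PEAK_FLOPS
    return TRAINING_FLOP / (cluster_flops * mfu)

for mfu in (1.0, 0.5, 0.3):
    secs = training_seconds(mfu)
    print(f"MFU {mfu:.0%}: {secs:,.0f} s (~{secs / 60:.0f} min)")
```

Halving the utilization simply doubles the time, so the whole argument boils down to one division.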

But perhaps even more interestingly, they trained this model using a combination of AMD GPUs and Google TPUs (they didn’t go all the way with AMD because JAX support for AMD GPUs was poor when they did this training). No NVIDIA GPU was “harmed” in this process.

This is quite relevant because it shows adoption for AMD and proves that a world without NVIDIA GPUs actually exists. But as we’ll see in the markets section below… maybe this won’t matter after all.

MEMORY HARDWARE

And Jensen Then Said… “Buy them all.”

While most analysts like me are making elaborate analyses comparing NVIDIA GPUs to the rest of the world, maybe it won’t matter after all.

The reason is that strong rumours are circulating that NVIDIA has pre-bought all CoWoS capacity from TSMC to manufacture its chips.

This comes after Morgan Stanley predicted that TSMC would manufacture 4 million TPUs for Google in 2026 and 5 million in 2027… only to see Taiwanese Fubon Research release a much more conservative estimate of just over 3 million TPUs, as NVIDIA seems to have absorbed most of the wafer capacity.

NVIDIA and Google design chips, but these are manufactured by TSMC, which first manufactures a chip wafer (a circular silicon piece full of GPU/TPU dies) and then uses an advanced packaging system called CoWoS to mount the dies onto a silicon interposer connected to the memory chips, giving you the actual piece of equipment that gets mounted onto the PCB (printed circuit board) and into the AI server rack.

In other words, most of the capacity from TSMC, the manufacturer of basically all advanced chips on the planet (NVIDIA, AMD, Google, Microsoft, Qualcomm, Broadcom, Amazon, or Apple are ALL TSMC customers) has been purchased by a combination of both NVIDIA (for 4nm process node) and Apple (3 nanometers and below, mainly used for smartphones and less relevant to AI besides Amazon’s Trainium 3 chip).

This is no joke, as people tend to conflate willingness with feasibility. Google could want to manufacture 10 million TPUs, but there’s simply no capacity for that. TSMC is an “order first, build later” company, meaning it always requires purchase guarantees before building anything. Thus, it seems that Jensen’s deep Taiwanese relationships are a key factor in determining who wins and who loses in AI today.

Therefore, even if competitors may be catching up technologically speaking, the Jensen moat might be more important than the technology itself, actually.

But what if… this is an opportunity for another company?

TheWhiteBox’s takeaway:

If you’re a paid subscriber, you already know we’ve been feeling pretty bullish about Intel lately, mainly due to its national security importance to the US.

And with TSMC potentially becoming a bigger bottleneck than ever, Intel’s EMIB packaging system (a cheaper alternative to TSMC’s CoWoS) may be a solution for fabless companies that have been “outsupplied” by NVIDIA.

There’s literally zero evidence this has begun to happen, but that’s the point of being early to parties as an investor; it has some risk, but the reward may be higher.

MEMORY HARDWARE

Micron Exits Memory Consumer Market. This is a big deal

Micron, a key player in the AI space as one of the only three suppliers of High Bandwidth Memory, or HBM, the memory used in most AI accelerators (GPUs, TPUs, ASICs like Trainium, etc.), has announced it is exiting the consumer market.

In other words, it will no longer provide memory chips for laptops, tablets, or smartphones through its subsidiary Crucial. Instead, it will focus solely on delivering memory to AI server companies.

TheWhiteBox’s takeaway:

The reason this is extraordinary is that this company is literally shutting down a business for not being profitable enough.

Yes, this segment was considered by some reports as the lowest margin amongst Micron’s revenue streams, especially when compared to the AI segment, but nobody was expecting them to shut it down.

Nevertheless, besides being a profitable business, it was set to see an explosion in margins due to the tight supply constraints we are seeing in memory markets in general. These are mostly in RAM, but they are spilling over into SSDs and HDDs (non-volatile storage, the kind that keeps your data when you turn off your computer), because the AI industry also uses both heavily in its workloads (especially SSDs).

For all those reasons, the takeaways are pretty significant in this one:

They are definitely seeing significant growth in the AI business (they recently also won the contract for Amazon’s new Trn3 chips). This is not only positive for them, but it also gives us confidence that Hyperscalers aren’t slowing expenditures.

As I continue to believe Hyperscaler CapEx guidance is the thermometer for this entire industry, I don’t think a significant, Big-Tech correction is coming unless this slows (could definitely be wrong, though).

This seems to confirm what we already predicted in this newsletter a month ago, when we started discussing the memory supercycle we could see in 2026: memory seems poised to stop being a cyclical business and instead become one with predictable demand. And you know what happens in those situations, right? PE ratios skyrocket.

The memory crunch has one unexpected winner, Apple, which enjoys contracted prices and thus is oblivious to price hikes in memory. 2026 stands to be a good year for them (we’ll talk about this in the near future).

I recently invested in one of the three big memory companies (SK Hynix, Micron, Samsung), which I believe is the one best positioned for a stock surge in 2026. I disclosed it here for Full Premium Subscribers.

NEOCLOUDS

Google partner Fluidstack to raise $700 Million

Fluidstack, a fast-growing London-based AI cloud provider, is in talks to raise over $700 million in new funding.

Fluidstack expects over $400 million in sales this year, up from $65 million last year, and has secured more than $10 billion in chip-backed credit (loans where the chips are used as collateral), but remains much smaller than rival CoreWeave and faces sector-wide questions about the profitability and debt load of AI-focused cloud providers (to be fair, that question applies to the entire data center sector).

TheWhiteBox’s takeaway:

The importance of this neocloud is probably not obvious, but we’re talking about Google’s leading partner for its TPU expansion.

For instance, Fluidstack is the data center operator that will manage Anthropic’s TPU cluster. Anthropic plans to use up to 1 million TPUs through this setup (400k purchased, 600k rented through Google’s Cloud Platform).

The common pattern once again is the heavy use of debt to keep the train going. In fact, in the Anthropic deal, Google committed a $3.2 billion backstop in case Fluidstack stops paying the data center builder, TeraWulf. As I always say, this is not a sign of a healthy industry, period.

MODELS

Google Releases Gemini 3 Deep Think

Google has finally released Gemini 3 Deep Think, the ramped-up version of Gemini 3 Pro that set several state-of-the-art results across most top benchmarks. The new “model” (it’s more than a model, more on this in a second) is now available through the Gemini App, but only for Gemini Ultra subscribers ($200/month subscription).

Just like GPT-5.1 Pro for OpenAI or DeepSeek v3.2 Speciale for DeepSeek, Gemini 3 Deep Think is an offering by Google in which the model is pushed to the max in terms of test-time compute. In layman’s terms, it’s allowed to think for much longer on any given task, generally leading to better results if the task benefits from it.

To put into perspective what this reasoning feature implies, here are comparative scores between Gemini 3 Pro and its Deep Think version:

ARC-AGI-2: Gemini 3 Pro scores around 31.1%, while Gemini 3 Deep Think hits 45.1%, which is a massive jump from Gemini 2.5 Pro’s 4.9% and roughly 2.5× GPT-5.1’s 17.6%. It’s currently the top-reported score on ARC-AGI-2.

Humanity’s Last Exam: Gemini 3 Deep Think gets 41.0% without tools, beating the already strong Gemini 3 Pro baseline (Google calls this “industry-leading” on that benchmark).

GPQA Diamond: Gemini 3 Pro is at 91.9%; Deep Think mode pushes that to 93.8%, giving it a slight but notable lead over GPT-5.1 at 88.1% on advanced scientific QA.

Of course, this feature makes sense only when there’s a benefit to deploying more compute.

For example, giving the user the capital of France does not benefit too much from the model thinking for ten minutes straight on it (either it knows or can search for it, longer thinking times will be irrelevant), but it does provide much better results in things like solving a complex math problem, reviewing the quality of an article, or planning your trip to Japan with greater detail.

We don’t quite know how these systems work under the hood, but we can make very sensible inferences:

It most likely uses verifiers, surrogate models that routinely analyze the generator model’s thoughts and help it navigate more complex questions.

It also most likely uses best-of-N sampling, meaning the model may try your task several times and keep the best one.

It probably performs more varied, computationally intensive Internet searches to gain additional context.

It probably has a considerably larger context window (more working memory, as explained in the next news item below).

They may even be using several other surrogate models, such as sub-agents, that handle tasks like Internet search, planning, or, of course, verification to support the larger model.
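Two of the inferences in the list above, verifiers and best-of-N sampling, can be sketched together in a few lines. This is a toy under stated assumptions, not how Google or OpenAI actually build these systems: `generate_candidate` and `verifier_score` are hypothetical stand-ins for a sampled model answer and a verifier model, and the "task" is simply computing 17 × 23.

```python
import random

TARGET = 17 * 23  # the toy task: the correct answer is 391

def generate_candidate(prompt, rng):
    # Stand-in for one sampled model answer (hypothetical): sometimes right, sometimes slightly off.
    return TARGET + rng.choice([-2, -1, 0, 0, 1])

def verifier_score(prompt, answer):
    # Stand-in verifier (hypothetical): higher is better, 0 means exactly right.
    return -abs(answer - TARGET)

def best_of_n(prompt, n, seed=0):
    # Sample n candidates and keep the one the verifier likes most.
    rng = random.Random(seed)
    candidates = [generate_candidate(prompt, rng) for _ in range(n)]
    return max(candidates, key=lambda answer: verifier_score(prompt, answer))

print(best_of_n("What is 17 * 23?", n=1))
print(best_of_n("What is 17 * 23?", n=16))
```

The trade-off is visible in the signature: quality can only go up with `n`, but so does the compute bill, which is why this style of inference sits behind the most expensive subscription tiers.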

But is Gemini 3 Pro Deep Think worth it? How does it compare to GPT-5.1 Pro?

TheWhiteBox’s takeaway:

I’ve long felt that no model on the planet has come close to what OpenAI did with their Pro models, to the point that I’m a happy ChatGPT Pro paying subscriber (accessing it through the API is absurdly expensive and not worth it, same with the Deep Research API).

So, when Gemini 3 Pro Deep Think came out yesterday, I had to test it, so I became a Gemini Ultra subscriber too, and I’ve been comparing the two on similar prompts to see which one I prefer.

First, it’s important to clarify that I have not yet been rate-limited in ChatGPT Pro. Not once.

On the other hand, I’m continually running into high-congestion, temporary rate limits in Gemini Ultra, which represents a clear point in favor of ChatGPT.

Putting that aside, I tested both on the same prompt, and the results were pretty much night and day. GPT-5.1 Pro blows Gemini 3 Pro Deep Think out of the water. And when I say that, I mean it as an understatement of the difference in quality.

But why?

GPT-5.1 Pro came back with a long, thorough, and detailed explanation of everything I asked, citing research papers and relevant news articles, covering every single part of the prompt in enough detail, and taking a far more opinionated stance on the topic. Deep Think’s result, by contrast, was mediocre at best.

But then, how is Deep Think superior benchmark-wise? Well, the answer is quite simple:

Gemini 3 Pro Deep Think might be more benchmaxxed (explicitly trained to do better at benchmarks)

The amount of inference-time compute might be much smaller in practice, meaning they have deployed “longer thinkers” to perform on the benchmarks and score the marketing points, but the models you actually get are a tiny version of that.

Further proving the point, although a higher word count does not imply a better answer, it does suggest higher amounts of inference-time compute, and GPT-5.1 Pro’s analysis had 3,709 words, while Deep Think’s response (again, for the same prompt) had 870, more than four times fewer words.

I know, word count isn’t everything, but how much smarter would Gemini 3 Pro have to be to offer the same detailedness with 4 times fewer words?

And even if one can make the argument that Gemini 3 Pro might be more to the point, fine, but that’s not the point of using the Pro models. If I want a concise summary, I can use the standard models and save $180/month.

Overall, it’s not even a close call: for the same price, ChatGPT Pro is far better value if you’re focusing on model superiority. Gemini Ultra does include way more features (Google Labs projects like Whisk or Flow, tighter Workspace integration, Veo 3.1, and more), but none of these are relevant to my day-to-day, so as far as how I use AI goes, my decision is pretty clear.

What I will say is that this is clearly an inference-time compute issue (OpenAI is deploying way more compute to the task than Google), palpable not only in the word count but also in how often I get rate-limited in Gemini Ultra. Considering how new everything is, we can give Google the benefit of the doubt, and compute levels should improve considerably over the coming weeks (in fact, they’ve just announced higher limits for their coding tool Antigravity).

But for now, the decision isn’t even close.

And this is coming from someone with a decent portion of their net worth in Google stock. But I must give credit where credit is due, and I maintain my firm conviction that GPT-5.1 Pro is the best AI model/system on the planet right now, and by a wide margin.

AGENTS

Anthropic’s New, More Efficient Agent Tooling

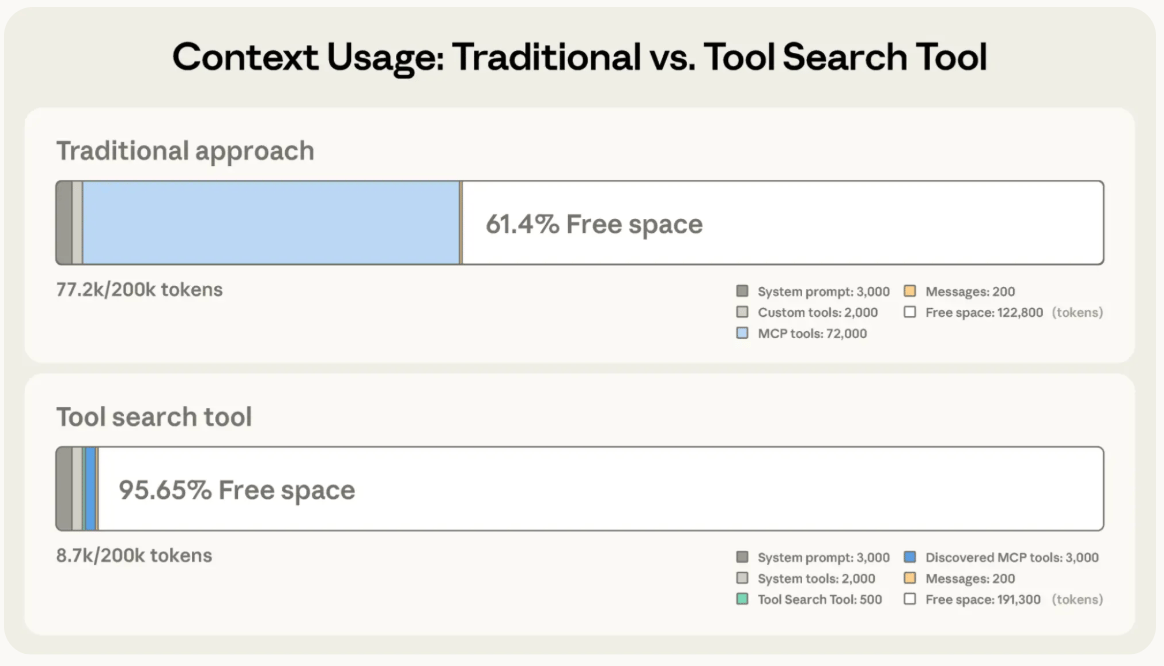

Modern generative AI models have a context window, a limit on the amount of context they can handle for each prediction. All Large Language Models (LLMs) are sequence-to-sequence models, which means they do the following ‘map’: [user input + context] → output.

The total size of this ‘map’, that is, user input, context, and output, cannot be longer than a model-specific limit we know as the context window, in the same way that, in computers, the working memory has a specific size and curtails how many things in parallel you can do in that computer. Context windows are, without a doubt, one of the most limiting factors when working with frontier AIs.

And now, Anthropic has introduced three new beta features for the Claude Developer Platform designed to improve how AIs discover, learn, and execute tools.

The first one is the Tool Search Tool. This feature allows Claude to discover tools dynamically rather than loading all definitions upfront. The challenge is that loading definitions for large tool libraries (e.g., across GitHub, Slack, and Jira) can consume tens of thousands of tokens before a conversation begins.

For instance, just looking up the tool definitions for GitHub, Sentry, Slack, Grafana, and Splunk MCPs gives you a total of 55,000 tokens (or ~41k words) packed into your model’s context window, not only rapidly inundating it, but also making it much more likely the model messes up.

The solution is that developers can now mark tools for "deferred loading." Claude starts with only a search tool and critical functions. When a specific capability is needed, the model searches for it, and only the relevant tool definition is loaded into the context. In Anthropic's testing, this approach reduced context token usage by approximately 85% while maintaining access to full tool libraries.
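The saving from deferred loading is easy to see in a toy simulation. Everything below is hypothetical: the tool names, descriptions, and token counts are invented for illustration, and the keyword match merely stands in for whatever retrieval the platform actually uses. It does not call Anthropic's API.

```python
# Hypothetical tool catalog; the token counts are made-up illustration values.
TOOLS = {
    "github.create_issue": {"description": "Open a new issue in a GitHub repository", "tokens": 900},
    "slack.post_message": {"description": "Post a message to a Slack channel", "tokens": 700},
    "jira.create_ticket": {"description": "Create a ticket in a Jira project", "tokens": 1100},
    "search_tools": {"description": "Find tools by keyword", "tokens": 150},
}
ALWAYS_LOADED = {"search_tools"}  # the only definition present when the conversation starts

def search_tools(query):
    # Naive keyword match over the deferred tools' descriptions.
    words = query.lower().split()
    return [
        name
        for name, tool in TOOLS.items()
        if name not in ALWAYS_LOADED
        and any(word in tool["description"].lower() for word in words)
    ]

def context_cost(loaded):
    # Tokens consumed by the tool definitions currently in the context window.
    return sum(TOOLS[name]["tokens"] for name in loaded)

upfront = context_cost(TOOLS)  # every definition loaded before the chat starts
deferred = context_cost(ALWAYS_LOADED | set(search_tools("slack")))
print(upfront, deferred)
```

With only four tools the difference is modest; with hundreds of definitions, the always-loaded set stays tiny while the upfront cost keeps growing.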

But wait, isn’t this obvious, and how things should have looked from the very beginning? Well, yes, so I hope this serves as a reminder of how early (and inefficient) things are in AI today.

Perhaps even more interesting is the next feature, called Programmatic Tool Calling. This capability enables Claude to orchestrate tool usage via code execution rather than sequential API round-trips.

Traditional tool calling (when AI agents call tools to execute actions, like calling Salesforce to create a new lead) requires a complete inference pass for every action, and intermediate data often floods the context window even if only a summary is needed.

For example, say the model has to execute five tools to load a new lead into Salesforce. The model will perform at least one entire inference pass for every tool call (i.e., reason what tool to call → call it → review result → reason what tool to call next).

Instead, Anthropic tells models (aka Claude) first to plan which set of tools they are calling, and write a small script that executes the entire process in one go, so the model only receives the output—again, an obvious solution, yet ironically not how most AI agents today work.

This method not only reduces latency by eliminating multiple inference steps but also minimizes token consumption by keeping raw intermediate data out of the context window.
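The difference between the two styles can be sketched by counting inference passes and the number of items that land in the context window. The tools `fetch_leads` and `create_lead` are hypothetical stand-ins, and the "model" is simulated by plain Python, so treat this as an illustration of the bookkeeping rather than of Anthropic's implementation.

```python
def fetch_leads():
    # Hypothetical tool: returns raw records that would be costly to put in context.
    return [{"name": f"lead-{i}", "score": i % 10} for i in range(1000)]

def create_lead(record):
    # Hypothetical tool: pretend to write one record to a CRM.
    return {"ok": True, "name": record["name"]}

def sequential_agent():
    # Traditional tool calling: one inference pass per call, every raw result enters the context.
    passes, context_items = 0, 0
    leads = fetch_leads()
    passes += 1
    context_items += len(leads)
    hot = [lead for lead in leads if lead["score"] == 9][:4]
    for lead in hot:
        create_lead(lead)
        passes += 1
        context_items += 1
    return passes, context_items

def programmatic_agent():
    # Programmatic style: one pass writes a script; the script runs outside the model
    # and only its short summary comes back into the context.
    def script():
        hot = [lead for lead in fetch_leads() if lead["score"] == 9][:4]
        return {"created": [create_lead(lead)["name"] for lead in hot]}

    summary = script()
    return 1, len(summary["created"])

print(sequential_agent(), programmatic_agent())
```

Both agents do exactly the same work against the tools; the only thing that changes is how much of it the model has to read and how many times it has to be invoked.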

And finally, they introduce Tool Use Examples, a new standard for providing few-shot examples directly within tool definitions. Developers can now explicitly provide examples of correct tool usage alongside the schema (the tool’s definition). This helps the model understand the nuances of a tool's API, reducing errors in parameter selection and invocation.
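As a rough picture of what "examples alongside the schema" could look like, here is a hypothetical tool definition written as a Python dictionary. The `name`, `description`, and `input_schema` keys follow the usual JSON Schema style of tool definitions; the `input_examples` key is an assumption on my part about how the examples are attached, so check the platform documentation before relying on it. The helper `example_is_valid` is purely illustrative.

```python
create_lead_tool = {
    "name": "create_lead",
    "description": "Create a new sales lead in the CRM.",
    "input_schema": {
        "type": "object",
        "properties": {
            "email": {"type": "string"},
            "priority": {"type": "string", "enum": ["low", "medium", "high"]},
        },
        "required": ["email"],
    },
    # Assumed field name for the few-shot examples; not confirmed by the text.
    "input_examples": [
        {"email": "ada@example.com", "priority": "high"},
        {"email": "bob@example.com"},
    ],
}

def example_is_valid(tool, example):
    # Minimal sanity check: required keys present, no unknown keys, enum values respected.
    schema = tool["input_schema"]
    has_required = all(key in example for key in schema["required"])
    known_keys = all(key in schema["properties"] for key in example)
    enums_ok = all(
        example[key] in spec["enum"]
        for key, spec in schema["properties"].items()
        if "enum" in spec and key in example
    )
    return has_required and known_keys and enums_ok

print(all(example_is_valid(create_lead_tool, ex) for ex in create_lead_tool["input_examples"]))
```

Checking your own examples against the schema before shipping them is cheap insurance: a wrong example teaches the model the wrong call.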

TheWhiteBox’s takeaway:

I want to point out several things here. First, models are becoming extremely neurosymbolic, meaning they rely more and more on non-neural tricks to solve problems.

That is, instead of counting how many balls are in an image, they can use the neural network to extract the balls, place them in a spreadsheet table, and then use an Excel COUNT function to count them automatically. The reason is that models seem to struggle with some pretty basic stuff, and we are using code to hide those limitations, which explains why the agent paradigm is so focused on tooling these days.

And while the effect is invisible to the user, it really puts into perspective the glaring limitations of neural networks when it comes to performing high-level actions.

Also, given how much work Anthropic and other Labs put into model harnesses (code you put on top of AI models to make them better), I can’t help but wonder how AI startups building on third-party models (companies valued at billions in some cases by building a set of prompts on top of an OpenAI model) will compete with the AI Labs that own the actual models.

Which is to say, in AI, money will be made by building tools, not logic.

There’s a very decent chance AIs will never be good enough for many tasks, and will instead rely on tools for them. So, you can either choose to compete with the same companies that own the models and can bury you in cash just with their brownie budget, or you can build precisely what they are screaming at all of us that they desperately need: good tools.

Closing Thoughts

It seems Christmas has come early to AI, and all major labs are releasing frontier models, to the point they are basically leap-frogging each other by the week (xAI’s Grok 4.2 seems to be around the corner, too).

As we close out 2025, one can’t help but wonder: what will 2026 look like? We’ll, of course, have our 2026 predictions Leaders segment, but here are things that might become spicy news stories over the next year:

AI supply chain bottlenecks in advanced packaging, memory, and optics,

The potential emergence (or downfall) of the ASICs trade,

The growing importance of debt to keep the party going,

And a prediction from Nobel Prize-winner Demis Hassabis as to how models will look next year.

And, finally, software-dictated hardware

And on our next Leaders segment, we’ll take a look at one of these in more detail. Stay tuned!
