
FUTURE

Is AI Really Slowing Down?

The entire industry seems to have entered an ‘acknowledgment phase’, admitting that AI progress is slowing. But is it, though?

On one hand, some keep making pompous claims, fearing that investors might call it a day. On the other, LLM skeptics are taking self-awarded victory laps, boasting that they were right all along.

But who is actually correct?

The key to answering it is the emergence of a new paradigm, one that not even OpenAI predicted or has dared to implement yet, and that has seen an explosion of interest and hype over the last few months. The success or failure of this new approach feels like AI’s very last bullet to keep the hype around it from crumbling.

Today, we will get acquainted with the most significant shift AI has made in years, describing this ‘next big thing’ with a level of detail and intuition you will hardly find elsewhere.

Damning Evidence

First and foremost, it’s not only AI that seems to be in a state of poor progress; it’s the scientific community overall.

A Lot of Research Is Bullshit

As illustrated by Sabine Hossenfelder, most academic papers today are bullshit.

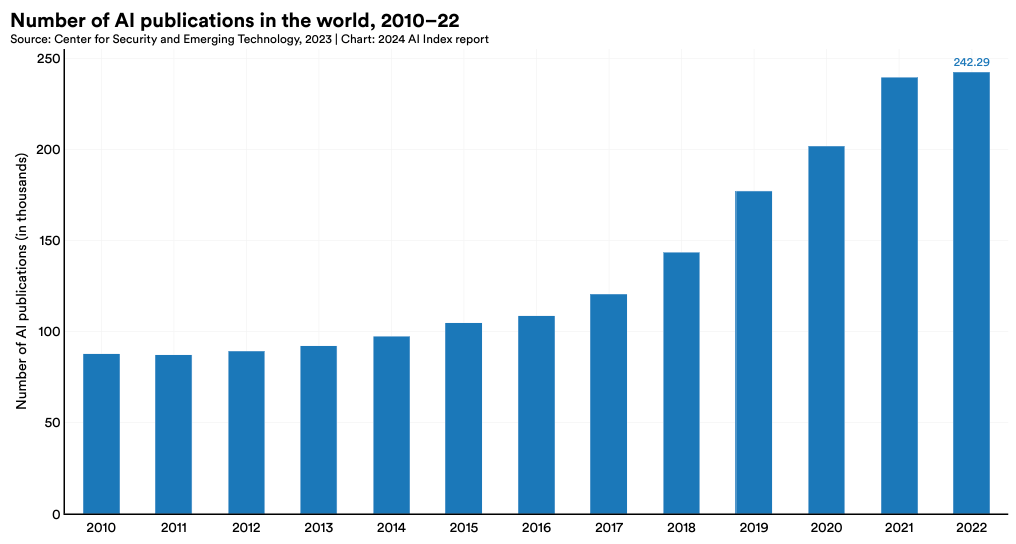

This is extremely weird, considering that being a researcher has never been more glamorous. And in AI, the number of published papers is growing faster than anywhere else.

But is this exponential increase in research publications being met by an exponential increase in breakthroughs?

It’s not even close. Most of this new research does not produce breakthroughs, only marginal improvements over decades-old discoveries. In fact, most of the research behind our frontier AIs is old.

For instance, ChatGPT’s prowess is based on the following:

Multilayer perceptrons, built on the perceptron, invented back in 1958

The attention mechanism, first introduced back in 2014

Residual connections, another crucial element, first presented in 2015

AdamW, the optimization algorithm used to update the model’s weights during training, which builds on Adam, published in 2014 by Durk Kingma (now an Anthropic researcher) and Jimmy Ba

And the Transformer, the 2017 Google paper that pieced it all together, published seven years ago.

See what I mean? We have been riding a wave of breakthroughs that are, for the most part, ten years old or more and, in some cases, older than humankind’s first moon landing.

For instance, the architecture of the Llama 3.1 models is almost identical to the one Google published in 2017, apart from minor adaptations.
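To see how little is new, here is a minimal sketch of a single Transformer block wired from the ingredients listed above: scaled dot-product self-attention, a two-layer perceptron, and residual connections. It is written in plain Python for readability and uses toy dimensions and random weights (all names and sizes are our own illustrative assumptions). It omits layer normalization, multiple heads, and causal masking, and it is not Llama’s or Google’s actual code.

```python
import math
import random

D_MODEL, D_HIDDEN = 4, 8  # toy sizes (assumption); real models use thousands of dimensions


def matmul(a, b):
    """Multiply an n x k matrix by a k x m matrix (lists of lists)."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m):
    return [list(row) for row in zip(*m)]


def add(a, b):
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def softmax(row):
    peak = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x, wq, wk, wv):
    """Single-head scaled dot-product self-attention over all tokens in x."""
    q, k, v = matmul(x, wq), matmul(x, wk), matmul(x, wv)
    scale = math.sqrt(len(wq[0]))
    weights = [softmax([s / scale for s in row]) for row in matmul(q, transpose(k))]
    return matmul(weights, v)


def mlp(x, w1, w2):
    """Two-layer perceptron with ReLU, applied to each token independently."""
    hidden = [[max(0.0, h) for h in row] for row in matmul(x, w1)]
    return matmul(hidden, w2)


def transformer_block(x, p):
    # Residual connections: each sublayer's output is added back to its input.
    x = add(x, self_attention(x, p["wq"], p["wk"], p["wv"]))
    return add(x, mlp(x, p["w1"], p["w2"]))


def random_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
params = {name: random_matrix(D_MODEL, D_MODEL, rng) for name in ("wq", "wk", "wv")}
params["w1"] = random_matrix(D_MODEL, D_HIDDEN, rng)
params["w2"] = random_matrix(D_HIDDEN, D_MODEL, rng)
tokens = random_matrix(3, D_MODEL, rng)  # three hypothetical token embeddings
out = transformer_block(tokens, params)
print(len(out), len(out[0]))
```

Setting every weight to zero makes both sublayers output zeros, so the block returns its input unchanged; that identity path is exactly what residual connections provide.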

But if you think OpenAI’s o1 models are any different, think again, as the pattern continues.

Monte Carlo Tree Search (MCTS), a piece many suspect is behind the o1 models and a fundamental component of AlphaGo and AlphaZero, two of the most important AI models in history, builds on the Monte Carlo method, conceived in 1946 at Los Alamos by Stanislaw Ulam during work on the hydrogen bomb. The MCTS method we still use today was introduced in 2006, already 18 years ago, and was only combined with neural networks a decade later in AlphaGo.

In other words, despite the boom in interest in AI, no meaningful breakthroughs have been made in at least seven years.
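The two layers described above, random Monte Carlo simulation and the tree search built on top of it, fit in a short program. The sketch below is a plain UCT-style MCTS with random playouts (no neural networks, as in the 2006-era methods) playing a toy take-away game. The game, the exploration constant `c=1.4`, and all names are illustrative assumptions, and it makes no claim about how o1, AlphaGo, or AlphaZero are implemented (the latter two replace random playouts with neural-network evaluations).

```python
import math
import random

MOVES = (1, 2, 3)  # toy game (assumption): remove 1-3 stones; whoever takes the last stone wins


class Node:
    def __init__(self, stones, parent=None, move=None):
        self.stones = stones      # stones left after `move` was played
        self.parent = parent
        self.move = move
        self.children = []
        self.untried = [m for m in MOVES if m <= stones]
        self.visits = 0
        self.wins = 0.0           # wins for the player who played `move`

    def best_uct_child(self, c=1.4):
        # UCT: average win rate plus an exploration bonus for rarely tried moves.
        return max(self.children, key=lambda ch: ch.wins / ch.visits
                   + c * math.sqrt(math.log(self.visits) / ch.visits))


def random_playout(stones, rng):
    """The Monte Carlo part: finish the game with uniformly random moves.
    Returns True if the player to move at `stones` ends up taking the last stone."""
    turn = 0
    while stones > 0:
        stones -= rng.choice([m for m in MOVES if m <= stones])
        turn ^= 1
    return turn == 1  # turn == 1 means the starting player made the final move


def mcts(stones, iterations, rng):
    root = Node(stones)
    for _ in range(iterations):
        node = root
        # 1. Selection: descend through fully expanded nodes using UCT.
        while not node.untried and node.children:
            node = node.best_uct_child()
        # 2. Expansion: add one untried move as a new child.
        if node.untried:
            move = node.untried.pop(rng.randrange(len(node.untried)))
            child = Node(node.stones - move, parent=node, move=move)
            node.children.append(child)
            node = child
        # 3. Simulation: random playout from the new node.
        mover_won = not random_playout(node.stones, rng)
        # 4. Backpropagation: credit alternates between the two players.
        while node is not None:
            node.visits += 1
            node.wins += mover_won
            mover_won = not mover_won
            node = node.parent
    return root


rng = random.Random(42)
root = mcts(10, 20_000, rng)
best = max(root.children, key=lambda ch: ch.visits)
print("stones to take:", best.move)
```

Taking 2 stones from 10 leaves the opponent a multiple of 4, a losing position under optimal play, so as iterations grow the search should concentrate its visits on that move.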

The reason? Well, because we—arrogantly—stopped caring. Today, research isn’t about producing new paradigms; it’s about improving results on an already-saturated AI benchmark by 2%, or tweaking a decades-old algorithm so that it runs faster on your GPU.

But why? Well, there’s a thing called capital that has completely altered research incentives, moving the community from pushing civilization forward to trying to get filthy rich. Today, publishing new research on LLMs catches the eye of deep-pocketed AI labs that can turn your poor academic salary into a $500k compensation package and earn you the world’s respect.

I do not blame researchers who take this route, but the effects on research are palpable. Is current research actually research, or just non-reproducible, easy-to-publish papers aimed at landing a dream job?

I don’t know. But what we do know is that this has led to a point where AIs are showing diminishing returns.

From Believers to Doubters

A few years ago, all the evidence I just provided wouldn’t have mattered, for a simple reason: research breakthroughs were considered no longer necessary.

As beautifully explained in this blog post, and echoing what we have discussed several times, many in Silicon Valley believed that the only thing that mattered was unlocking more compute (an idea popularized by Rich Sutton’s essay The Bitter Lesson). They believed the breakthroughs mentioned in the previous section were ‘enough’ to absorb ‘infinite’ increases in compute and data, and that this was all we needed to reach AGI.

Scaling is all you need, they said. But as it turns out, they were wrong.

A recent piece by The Information explained how OpenAI was struggling to show significant improvements with its next-generation model. A few days later, the same publication followed up with a similar piece about Google.

Nevertheless, Google released Gemini-Exp-1121 a few days ago, and it became the best LLM overall (even above large reasoning models, or LRMs, like the o1 models). However, its naming was unconventional, to put it mildly, so it doesn’t take much to speculate that this model is, in fact, Gemini 2.0, and that its improvements were too marginal to justify the new branding.

Scale AI’s CEO (the company is one of the main providers of human contractors to labs like OpenAI or Anthropic) recently stated that AI pretraining has “genuinely hit a wall”. And according to Reuters, Ilya Sutskever, one of the brains behind the original GPT models and one of the most (if not the most) influential figures behind the scaling mantra, recently acknowledged that pretraining scaling was plateauing.

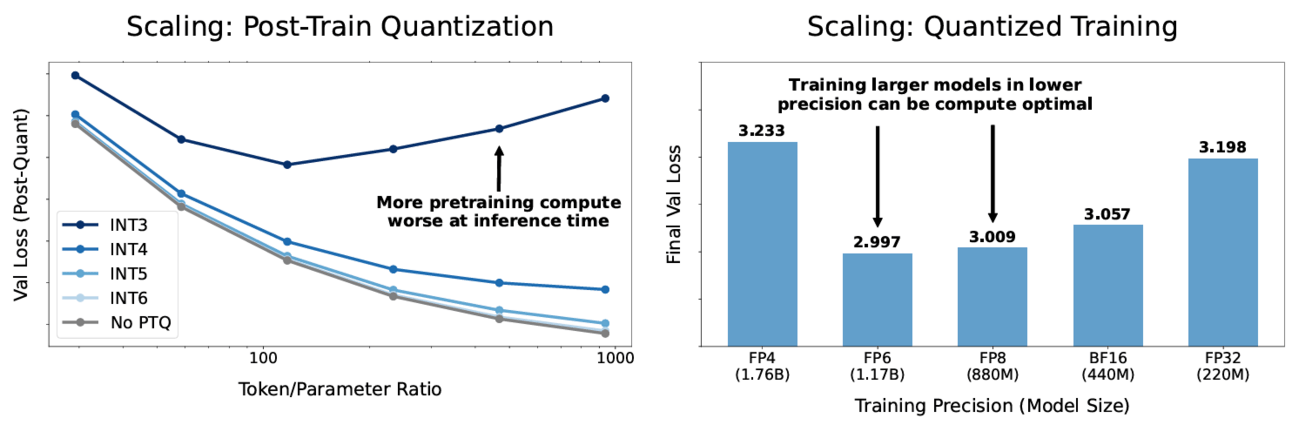

To make matters worse, a very recent Harvard/Stanford/MIT/Databricks study, which famous AI researcher Tim Dettmers called “the most important AI paper in a long time,” introduces scaling laws for quantization for the first time, and it’s not good news.

Think Before You Scale

One of the most recent trends in AI is increasing model size while gradually decreasing parameter precision (the number of bits used to store each weight’s value) to ease memory constraints, the main engineering bottleneck for LLMs.

For instance, if you train a model in INT8 (1 byte per parameter) instead of the standard FP16 (2 bytes), you cut your memory requirements by 50%, which is a lot for models whose weights take up hundreds of gigabytes or even terabytes.
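The 50% figure is simple arithmetic, and the most basic way to squeeze already-trained weights into 8 bits is not much harder. The sketch below assumes a hypothetical model the size of the largest Llama 3.1 model (405 billion parameters), counts only the weights (not activations, optimizer state, or caches), and uses symmetric ‘absmax’ post-training quantization, one common scheme among many; it is not the method of any particular lab or of the study mentioned above.

```python
BYTES_PER_PARAM = {"FP16": 2, "INT8": 1}


def weight_memory_gb(num_params, precision):
    """Memory needed just to store the weights, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def quantize_int8(weights):
    """Symmetric 'absmax' quantization: map floats to integers in [-127, 127]."""
    scale = max(abs(w) for w in weights) / 127 or 1.0  # avoid a zero scale
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    return [v * scale for v in q]


num_params = 405_000_000_000  # assumption: a model the size of the largest Llama 3.1
for precision in ("FP16", "INT8"):
    print(precision, weight_memory_gb(num_params, precision), "GB")

weights = [0.5, -1.27, 0.003, 0.9]  # hypothetical weight values
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_err = max(abs(a - b) for a, b in zip(weights, restored))
print(q, max_err)
```

Note that the tiny weight 0.003 is rounded to zero: all four values share one scale, so precision is lost wherever weights are small relative to the largest one.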

The issue?

It turns out there’s a limit to how much you can reduce precision. If you use too much pretraining data (pretraining being the very training phase that is reportedly plateauing) and then quantize the model, performance decreases.

In other words, increasing the training scale can become damaging if you intend to quantize later! This is a tragedy because large frontier AI models are utterly unusable unless tricks like quantization are applied.

It’s quite possible that most of these labs are experimenting with lower precisions to train larger models. Maybe this quantization law is precisely why recent training runs have appeared underwhelming.

In a nutshell, our hopes and dreams of ‘AGI by 2027’ seem to be crumbling before our very eyes, right?

Well, not quite.

In September, OpenAI released the o1 family, a set of fundamentally different models (OpenAI even dropped the GPT naming), probably a hint that we were indeed hitting a wall with the previous generation. In the process, it proposed a self-proclaimed new paradigm: test-time scaling.

In simple terms, test-time scaling refers to increasing the compute the model uses to respond to a given query. You can think of it as ‘giving models time to think,’ just like you would take longer to solve a complex task.
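OpenAI has not disclosed how o1 spends its extra compute, so the sketch below illustrates the general idea with a different, publicly documented form of test-time scaling: sample several answers to the same query and return the majority vote (often called self-consistency). `sample_answer` is a hypothetical stand-in for a model that is right 60% of the time, and all numbers are assumptions; the point is only that accuracy can rise as more compute is spent per query.

```python
import random
from collections import Counter

CORRECT = 42
WRONG_ANSWERS = [7, 13, 99, 1000]  # hypothetical plausible-but-wrong answers


def sample_answer(rng, p_correct=0.6):
    """Hypothetical stand-in for one sampled model response."""
    return CORRECT if rng.random() < p_correct else rng.choice(WRONG_ANSWERS)


def answer_with_budget(rng, n_samples):
    """Spend more compute per query: sample n answers, return the most common one."""
    votes = Counter(sample_answer(rng) for _ in range(n_samples))
    return votes.most_common(1)[0][0]


def accuracy(n_samples, trials=2000, seed=0):
    rng = random.Random(seed)
    hits = sum(answer_with_budget(rng, n_samples) == CORRECT for _ in range(trials))
    return hits / trials


for n in (1, 5, 15):
    print(f"{n:>2} samples per query -> accuracy {accuracy(n):.2f}")
```

Majority voting only helps here because the wrong answers are scattered while the correct one is consistent; a model that is confidently wrong would not benefit the same way.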

But as it turns out, the truly groundbreaking paradigm seems to be a different one, and today you will learn everything there is to know about it.

The Active Learning Era

If you look closely at recent AI research, you will notice an increase in the novelty of the proposed approaches. Notably, some new ideas are finally being published.

But what is this new era all about?

Learning like humans do… kind of.

A long-standing problem with current AI models is that they can’t keep learning forever. Their learning is confined to a particular training phase; from then on, they can only make predictions and cannot learn about new developments in the open world.

We humans don’t work that way. We are on a conscious and, most often, unconscious never-ending learning spree, continuously updating our beliefs about the world, which allows us to adapt to ever-changing environments. However, this human feature has remained out of reach for AIs since the industry’s inception.

But almost 80 years after Alan Turing kickstarted the AI era, that’s no longer the case.
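The contrast between a model whose learning ends with training and one that keeps updating can be made concrete with a toy example. This is a minimal sketch, assuming a one-parameter linear model and a hypothetical world whose true rule changes halfway through; it uses plain online stochastic gradient descent and is not meant to represent the specific technique behind the paradigm this article is about.

```python
import random


def make_stream(rng, n=2000):
    """Hypothetical drifting world: the true slope flips from 2.0 to -1.0 halfway."""
    stream = []
    for t in range(n):
        true_w = 2.0 if t < n // 2 else -1.0
        x = rng.uniform(-1, 1)
        stream.append((x, true_w * x + rng.gauss(0, 0.05)))
    return stream


def sgd_step(w, x, y, lr=0.1):
    # Gradient of 0.5 * (w*x - y)^2 with respect to w is (w*x - y) * x.
    return w - lr * (w * x - y) * x


rng = random.Random(0)
stream = make_stream(rng)
half = len(stream) // 2

# "Frozen" model: learns only during a training phase, then stops updating.
w_frozen = 0.0
for x, y in stream[:half]:
    w_frozen = sgd_step(w_frozen, x, y)

# "Continual" model: starts from the same weights but keeps learning from every observation.
w_online = w_frozen
frozen_sq_err = online_sq_err = 0.0
for x, y in stream[half:]:
    frozen_sq_err += (w_frozen * x - y) ** 2
    online_sq_err += (w_online * x - y) ** 2  # predict first, then learn
    w_online = sgd_step(w_online, x, y)

print(f"frozen MSE: {frozen_sq_err / half:.3f}, continual MSE: {online_sq_err / half:.3f}")
```

Both models see the same data; the only difference is whether updates stop after the training phase, which is why the frozen model keeps applying a rule the world has abandoned.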
