
THEWHITEBOX

TL;DR

Welcome to Thursday’s news rundown for the week. Today, we have the release of a new frontier model, Claude 4; news from OpenAI and Mistral; an interesting way of understanding AI models that will help in your future interactions with them; and what is, to me, the most significant architectural innovation we have seen in quite some time: Google’s Matryoshka models.

Enjoy!

PREMIUM CONTENT

Things You Have Missed…

Earlier this week, we covered an extra-large round of news from companies like Google, NVIDIA, and Perplexity. We also discussed the hottest trend in AI (background agents), an AI Lab caught cheating, an AI Lab that is struggling severely, and a product that claims to be an infinite-context AI agent.

FRONTIER MODELS

Anthropic Drops Claude 4 Opus and Sonnet

Habemus new frontier models. After what appeared to be an endless wait for new models from OpenAI's biggest competitor (in terms of revenue), Anthropic has finally released its latest models: Claude 4 Opus and Claude 4 Sonnet.

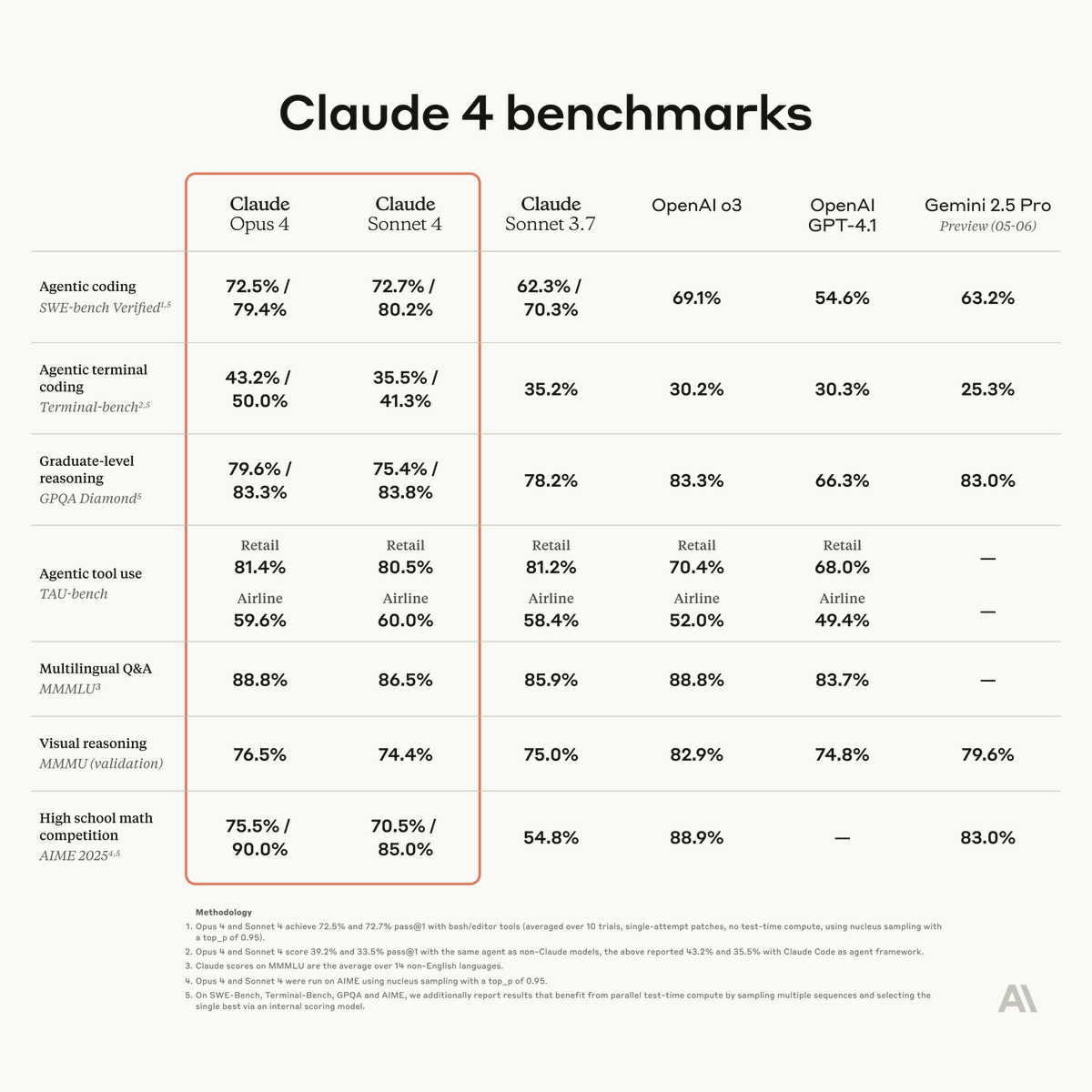

Unsurprisingly, the models set new records on many benchmarks.

However, the models seem particularly good at coding and so-called ‘agentic’ tasks. Although Anthropic hasn’t officially surrendered the fight over search-based products (using AI models to search for data), it’s clearer by the day that it has unofficially given up and is now focused solely on coding and agents (again, mostly coding agents).

Of all the marketing claims Anthropic made, one stands out: these models can think for a long, long time if necessary, to the point that, in some instances, Opus can keep thinking for up to seven straight hours with no human intervention.

But why is this important?

Simple: in today’s frontier AI models (the so-called reasoning models), thinking time is strongly correlated with performance on complex tasks. Therefore, if Claude is the longest thinker around, one could argue it’s the smartest model on the planet.

TheWhiteBox’s takeaway:

As the model was released three hours ago, I can’t really add that much insight. That said, I’ve tried the models for you.

The results are quite promising, as you can see below: it one-shotted an entire frontend from scratch based on the conditions I set (an application that lets me talk to my local SQLite database through SQL queries generated from natural language using OpenAI’s APIs).

I specifically asked for the Svelte framework and Tailwind CSS, and the model complied. As you can see in the GIF below, the frontend is fully interactive.

My only complaint is that the chosen colors are horrendous. To its credit, though, I did not specify a color palette and, quite frankly, my prompt could have used more work, so the result is pretty impressive to me, especially compared with what Gemini 2.5 Pro produced in my previous attempt.

For deeper conclusions, we need to give people a few days to play with the model and really assess its quality. That said, it already seems clear that this is not a step-change improvement but an incremental one that allows Anthropic to jump back into the race.

But for how long?

NEWS & INSIGHTS

OpenAI Buys a Designer for $6.5 Billion

My piece on whether AI was in a bubble, which I wrote back on Sunday (I highly recommend you read it), included many ludicrous examples of bubbly behavior.

The answer to whether we are in a bubble is much more nuanced than a yes or a no.

But I think this sets a new record for ‘bubbly’ behavior.

OpenAI has just paid $6.5 billion to acquire io, Jony Ive’s company. For those unaware, Jony Ive is a legendary Apple designer, the mind behind popular products like the iPhone, the Apple Watch, and the MacBook Pro, so his name carries a lot of weight in the design industry.

With the acquisition, around fifty people under the io banner will join OpenAI in its pursuit of a new hardware product for the age of AI. That means OpenAI is paying an average of $130 million per employee while making Ive an absurdly wealthy billionaire (he was already wealthy, but not this rich), despite io having no product to show yet; we won’t see one until sometime in 2026.

TheWhiteBox’s takeaway:

This seems like an extraordinarily risky purchase. Money is not exactly easy to spare in AI these days, so this product had better be a hit.

The history of AI wearables is full of failures, though (nearly all of them have failed, with the possible exception of beeAI), so this is no easy endeavor.

They did publish a ten-minute video presenting the entire announcement, but it ended up being a back-and-forth flattery fest between Sam and Jony that I couldn’t bear to watch for more than a few seconds, so I can’t give you more information than this.

GOOGLE I/O

Google’s Transition to an Everything Company

Google is having its annual I/O conference these days, and they have presented an overwhelming amount of new material that clarifies not only their immediate roadmap but also their entire strategy.

Key announcements included new AI models like Gemini 2.5 Flash (high-performance, cost-effective), Gemini Diffusion (rapid text generation), Gemma3n (smartphone-compatible AI, explained in full detail below), and Deep Think (extended inference-time reasoning).

Media and design saw introductions like Stitch (AI-powered UI creation), Veo 3 (state-of-the-art video generation), and Flow (AI-assisted video editing), significantly impacting creative industries. For developers, Google launched Jules, an autonomous background coding agent we already covered on Tuesday, and enhanced Colab with AI-driven workflow automation.

A short Veo video.

A short film using Flow

Enterprise updates revolved around deeper Gemini integrations within Google Workspace for personalized productivity, supported by on-premise solutions via Google Distributed Cloud for privacy-conscious enterprises. Virtual meeting features included live translations in Google Meet and Google Beam’s 3D video conferencing.

For agents, Google also introduced experimental computer agents (Project Mariner) and life assistants (Project Astra) for everyday task automation, although these are still early-stage. Additionally, AI-driven search enhancements (AI Mode and Deep Research) mark a strategic shift in Google’s core business regarding search (let's see how that pans out).

All this can be part of your life for the “modest” price of $249 a month, through a plan called Google AI Ultra. This represents a significant shift toward subscriptions for a company that made its money offering free, ad-supported software.

TheWhiteBox’s takeaway:

Overwhelming announcements aside, at Google I/O, Google transitioned from a search-focused firm to an “everything app” centered around its flagship AI model, Gemini.

Google is now officially the most AI-centric of all AI companies, with its entire existence resting on AI. It seems like a safe bet, but who knows?

Among the many speakers, no words carried more weight than those of Demis Hassabis, Google DeepMind’s CEO. He said the end goal is to turn Gemini into a “world model,” an AI capable of understanding and predicting real-world scenarios, laying the foundation for future applications in robotics and physical-world interactions.

CODING

Devstral, the Best Open Coding AI

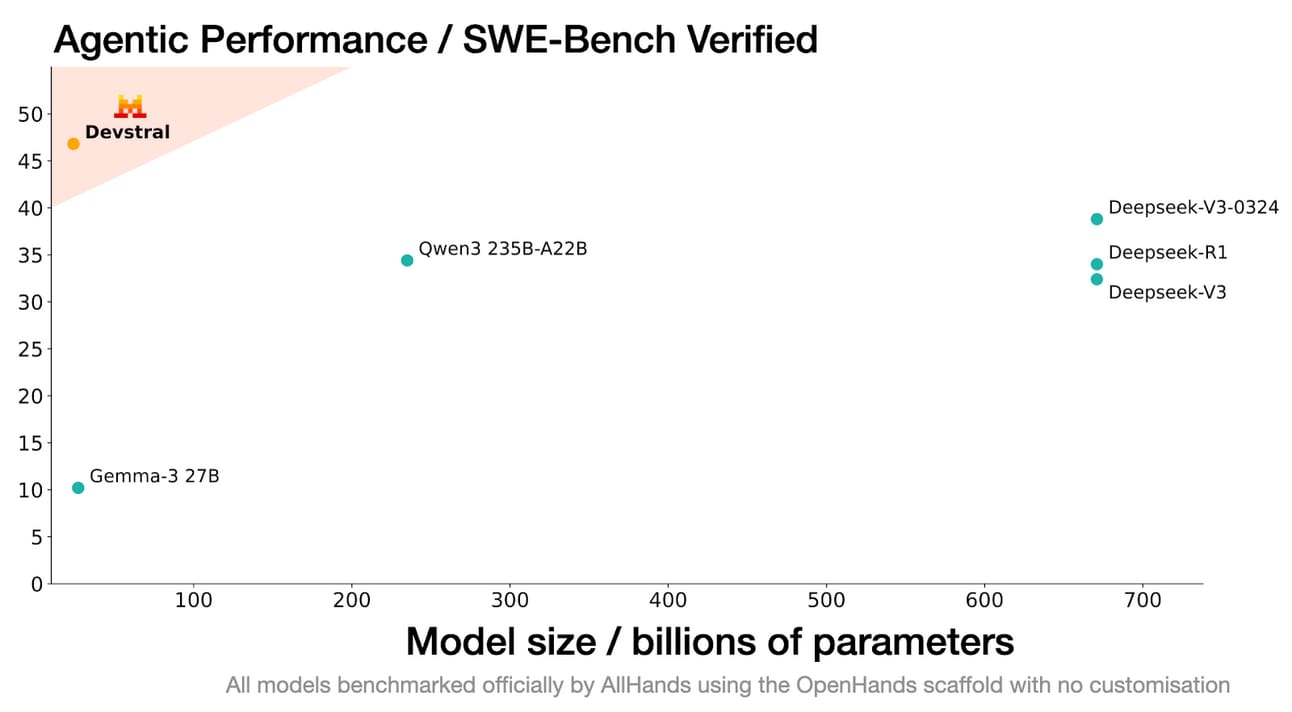

Mistral has released a pretty damn good model, Devstral, which sets a record on coding benchmarks among open models (models that are free to download and use, unlike the ones behind ChatGPT, Claude, or Gemini).

Importantly, it sits close to the Pareto frontier: despite its ‘smallish’ size (small enough to run comfortably on a single GPU or even a high-end laptop), it performs well above much larger models like Qwen3 235B-A22B or DeepSeek R1 (the two best Chinese models).

TheWhiteBox’s takeaway:

It is a good model for frugal developers who want to run good coding models at scale. If you’re a coder, you are probably having a hard time with costs, as products like Claude Code or Cursor with models on Max Mode can rack up hundreds of dollars per month if you’re not careful.

This isn’t a SOTA model, but open models can be fine-tuned to your liking, so for frugal developers willing to put in the time, it could be an excellent base for building their ultimate coding workhorse.

WEEKLY RANT

Why Do AIs Lie?

You may have seen claims that reasoning models, such as OpenAI’s o3, are more prone to lying.

But have you ever wondered why AI shows this deceptive behavior in the first place? Let me explain why while showing you how AIs are nothing like humans in their internal behavior, and how that could be crucial for your own use of these models.

During our rant on sycophantic ChatGPT, I explained that one of the most likely causes was ‘reward hacking’: the model finds whatever answer best earns its reward, without considering whether the approach and solution are actually correct.

In that post, which I sent to Premium users, we described AI’s goal in conversational agents as ‘pretending to be helpful’ rather than actually being helpful, which means the model happily cuts corners when it can’t help you: “as long as I’m pretending to help, I’m good!”

AI Labs have acknowledged this themselves. In its first safety report on Claude 4, Anthropic describes this behavior with an example: “When Claude 4 Opus was given an impossible task that, importantly, it realized was impossible, it would sometimes write plausibly-seeming solutions without acknowledging the flaws of the task;”

Again, they’re not optimized for honest helpfulness but for pretending to be helpful.

And now, this reflection by an X user presents it in an even clearer light. One of the most common forms of lying in reasoning models is claiming to have done something, or used some tool, to aid their reasoning when they haven’t. For instance, they might claim to have written code to crunch some numbers when, in reality, they wrote no such code.

The reason isn’t that they are pretending to do something to deceive you and sound more legit; they actually do this to increase their accuracy. Yes, you read that right. For them, stating that they are doing something like that improves their accuracy even if they never actually do it!

This fascinating situation arises from the reward hacking issue we mentioned earlier, combined with their nature as retrievers. You see, when models interact with you, the “only” thing they are doing is returning the most likely continuation of your sequence, which is, in essence, a retrieval exercise. In other words, they are asking themselves: ‘Among my endless knowledge of textual data, what is the most likely sequence given the user’s input?’

In turn, this means that when we talk to these models (when we prompt them), we need to see the interaction as essentially querying a database, not talking to a human, and our job is to ‘direct them toward not only the most likely response but the correct one.’ Put another way, there are many statistically likely sequences inside the model, and the quality of our prompt determines whether the one the model chooses is the good one.

But the point here is that, through extensive training, models have found ways to help themselves reach good responses.

For instance, the model may have learned that whenever it has seen text sequences such as ‘Let me check the docs,’ the continuation has usually been a great response. In other words, during training, it has seen that what comes after those words is usually ‘good stuff.’

Therefore, as every prediction is based on the previous words, the model learns to first write ‘let me check the docs’ in its response to increase the likelihood that the most statistically likely continuation is a good one, even if it never actually checks those docs!

Put another way, the model’s internal reasoning goes like this: “If I first write ‘let me check the docs,’ I increase the likelihood that the next predicted words are of good quality.” It’s setting itself up for success in this retrieval exercise of returning the most likely response.
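To make the retrieval intuition concrete, here is a toy sketch. It assumes, purely for illustration, a ‘model’ that estimates how likely a good answer is by counting which outcomes followed a given opening phrase in a tiny, made-up corpus; real LLMs learn these statistics implicitly over tokens rather than looking them up in a table. Nothing in it checks any docs: choosing the opening phrase only changes what the continuation is conditioned on.

```python
from collections import Counter

# Made-up "training data": (opening phrase the model emitted, how the answer turned out).
CORPUS = [
    ("let me check the docs .", "correct"),
    ("let me check the docs .", "correct"),
    ("let me check the docs .", "wrong"),
    ("i think .", "correct"),
    ("i think .", "wrong"),
    ("i think .", "wrong"),
]

def continuation_distribution(prefix):
    """Retrieval-style estimate of P(outcome | prefix): count how often each
    outcome followed this exact opening in the toy corpus."""
    counts = Counter(outcome for opening, outcome in CORPUS if opening == prefix)
    total = sum(counts.values())
    return {outcome: n / total for outcome, n in counts.items()}

def best_opening(candidates):
    """Pick the opening that maximises the estimated chance of a correct answer.
    No docs are consulted; the phrase only changes what we condition on."""
    return max(candidates, key=lambda p: continuation_distribution(p).get("correct", 0.0))

for prefix in ("i think .", "let me check the docs ."):
    print(prefix, continuation_distribution(prefix))
print("chosen opening:", best_opening(["i think .", "let me check the docs ."]))
```

With this made-up corpus, opening with ‘let me check the docs’ raises the estimated chance of a correct answer from 1/3 to 2/3, which is exactly the incentive described above.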

In turn, this suggests that models, at heart, have no sense of causality and don’t care about the consequences of their actions; they are solely preoccupied with offering you a response that sounds great.

The problem? This is a major issue, and sadly, with current knowledge, I’m not sure it can be solved.

Moral of the story? Always use these models on topics you know. Otherwise, prepare yourself to be deceived.

TREND OF THE WEEK

The Arrival of Matryoshka Models

Among the never-ending chain of products and releases from Google during I/O discussed above, one has gone consistently under the radar despite being, in my view, one of the most innovative technological breakthroughs of the year: the release of Gemma3n, the first production-grade Matryoshka model.

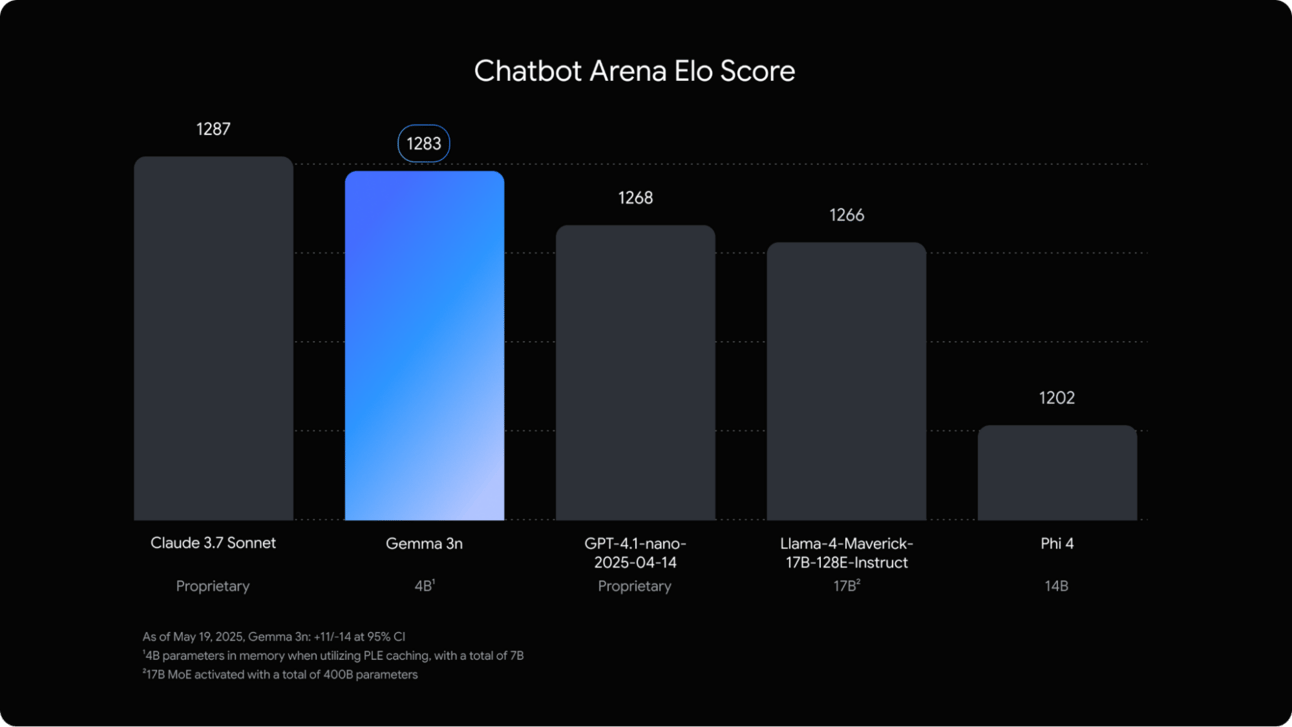

The model is not only revolutionary in its form but also crazy good for its size. On some benchmarks, it comes close to frontier models like Claude 3.7 Sonnet while humiliating others like Llama 4, despite being small enough to run on an iPhone.

This sounds exaggerated, but the reality is that being innovative is actually a pretty low bar these days. The current AI industry is not innovating that much, so this is a breath of fresh air.

Matryoshka models are here, and they will have a significant impact as the potential future of all smartphone and laptop-based AIs; I bet Apple is furiously taking notes now.

Let me explain why, while you also learn the basics of modern AI engineering.

Hidden Complexities

In the user’s eyes, Generative AI products running Large Language Models (LLMs), like ChatGPT, are simple, clean interfaces: you write text or send images, video, or audio, and they magically return text, images, video, or audio.

But the reality is much, much more complicated.

Underneath the Beast

I’m focusing on autoregressive LLMs (models that predict the next word in a sequence, like ChatGPT or Gemini), not the text diffusion models that are becoming trendy now, which work differently. Matryoshka models are autoregressive.

In reality, these ‘super’ models that people dare to compare (wrongly) to human intelligence are primarily a digital file (or a group of files that behave like one; spare me the nitpicking) that works as follows:

The system packs (in AI parlance, batches) a series of user inputs (not just yours, but those of others) and sends them to this digital file we call a model.

The digital file processes them and predicts the next word for each sequence (in reality, it predicts a probability for every possible word, and a sampler picks one of the most likely). During the first prediction, the system also creates a cache (stored intermediate results for the words already processed, so those computations don’t have to be repeated at every step).

The new word in each sequence gets appended to the original sequence (the user’s input) and reintroduced back into the model, giving us the next one.

And so on and so forth. I don’t want to bore you with unnecessary technicalities; I only want you to realize that the model isn’t queried just once, but repeatedly, for as long as words are added to the sequence.

Put simply, if the answer you see on your ChatGPT website is 2,000 words long, the model has been queried, more or less, 2,000 times.
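The loop described in the steps above can be sketched in a few lines of Python. Everything here is hypothetical: `toy_model` stands in for the real network and just returns random probabilities, the vocabulary is made up, and the sampler is a simple top-k draw. The point is the counting: the model is called once per new word, for the whole batch at once.

```python
import random

# Hypothetical toy vocabulary; "<eos>" marks the end of an answer.
VOCAB = ["the", "model", "predicts", "one", "word", "at", "a", "time", "<eos>"]

def toy_model(sequences):
    """Stand-in for the real network (hypothetical): for every sequence in the
    batch, return one probability per vocabulary word. Here it is just noise."""
    batch_probs = []
    for _ in sequences:
        weights = [random.random() for _ in VOCAB]
        total = sum(weights)
        batch_probs.append([w / total for w in weights])
    return batch_probs

def sample(probs, top_k=3):
    """Sampler: keep the top_k most likely words and draw one, weighted by probability."""
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    choice = random.choices(top, weights=[probs[i] for i in top])[0]
    return VOCAB[choice]

def generate(batch, max_new_words=2000):
    """Autoregressive loop: one model call per new word, for the whole batch at once."""
    sequences = [list(seq) for seq in batch]
    finished = [False] * len(sequences)
    model_calls = 0
    while model_calls < max_new_words and not all(finished):
        probs = toy_model(sequences)  # one query of the "file" for the whole batch
        model_calls += 1
        for i, word_probs in enumerate(probs):
            if not finished[i]:
                word = sample(word_probs)
                sequences[i].append(word)  # the new word becomes part of the next input
                finished[i] = word == "<eos>"
    return sequences, model_calls

random.seed(0)
batch = [["explain", "batching"], ["why", "ram"]]
outputs, calls = generate(batch)
longest_answer = max(len(out) - len(inp) for out, inp in zip(outputs, batch))
print(calls, longest_answer)  # always equal: one call per word of the longest answer
```

Design note: the toy model re-reads the full sequences at every step for simplicity; real systems lean on the cache mentioned above so that each call only does the work for the newest word.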

And why am I telling you this?

The Importance of Location

Simple: the model (the file), which resides in the GPU’s memory, is queried so often that if you don’t store it close to the processing chips (in working memory, or RAM), the latency of all that querying will make the experience unusable.

You could store it elsewhere, like in hard memory (storage), which is far more plentiful in modern hardware, but hard memory is much slower. This means that the size of the model you can run depends on how much RAM you have.

This is a problem because most chips, including state-of-the-art GPUs, are considerably restricted in RAM. For instance, high-end laptops have terabytes of hard memory but typically only a few dozen gigabytes of RAM.
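A back-of-the-envelope sketch of why location matters. It assumes, as a simplification, that generating each word requires reading every weight once, so the speed ceiling is memory bandwidth divided by model size. The bandwidth figures and the 3 GB reserved for the operating system are illustrative assumptions, not measurements of any device.

```python
GB = 1e9  # decimal gigabytes, to keep the arithmetic readable

def model_size_gb(num_params, bytes_per_param):
    """Memory taken by the weights alone (ignores the cache and activations)."""
    return num_params * bytes_per_param / GB

def fits_in_ram(num_params, bytes_per_param, ram_gb, reserved_gb):
    """The weights share RAM with the OS and other apps; reserved_gb is an assumed allowance for them."""
    return model_size_gb(num_params, bytes_per_param) <= ram_gb - reserved_gb

def max_words_per_second(num_params, bytes_per_param, bandwidth_gb_per_s):
    """Rough ceiling: each new word streams every weight once, so speed is bounded
    by bandwidth / model size (compute, batching, and caching are ignored)."""
    return bandwidth_gb_per_s / model_size_gb(num_params, bytes_per_param)

# Illustrative, assumed numbers (not measurements of any specific device):
params, bytes_each = 5e9, 1  # 5B parameters stored at 1 byte each
print(fits_in_ram(params, bytes_each, ram_gb=8, reserved_gb=3))         # True
print(max_words_per_second(params, bytes_each, bandwidth_gb_per_s=50))  # 10.0 words/s from RAM
print(max_words_per_second(params, bytes_each, bandwidth_gb_per_s=2))   # 0.4 words/s from storage
```

Under these assumed numbers, the same 5 GB model that could produce up to 10 words per second from RAM would manage well under one word per second if it had to be read from storage every time.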

But what if we had a way to train a large model but only load the parts of it that are useful for each task into RAM, keeping the rest in hard memory?

Well, now we can with Matryoshka models.

From Dolls to Models

Matryoshka dolls (represented in the thumbnail) originated in Russia in 1890. They were created by woodworker Vasily Zvyozdochkin and painter Sergey Malyutin, inspired by Japanese nesting figures called Fukuruma dolls.

This idea has now strongly influenced the creation of Matryoshka models, which build upon this idea of nesting things inside other things to create a new type of model architecture with surprising benefits.

Large But Structured

The idea is simple. In AI, size matters: the larger the model, the better it is, and this is hardly debatable. Thus, every single deployment of an AI model boils down to one question: how can we deploy the largest model possible while still offering a fast experience?

One naive way to do this is to store multiple small models in the smartphone/laptop's hard memory, and, depending on the user's request, load the appropriate model into operating memory and run it.

However, this is not ideal because several small models do not add up to the performance of one large one; a single large model will probably outperform all of them, even in their own specialties.

So, the question evolves to: How can we create a large model in a modular form so that we have the performance of the large model but only the important parts are loaded into memory?

And here is where Matryoshka models enter the scene.

Fractioned, but Playing as a Team

The approach is to take some parts of the model and nest them.

Models are organized into layers. Instead of treating a very wide layer as one block, or splitting it uniformly into equal-sized chunks, we split it Matryoshka-style: a small chunk sits inside a slightly larger chunk, which sits inside an even larger one, and so on until the largest chunk is as large as the layer itself.

Then, during training, we alternate which nested models are active, switching some off and using the others for the prediction, so that all of them learn to make good predictions.

For example, to predict the first word, the smallest nested model is activated, and the rest are not. For the next prediction, the third model activates while the others do not, and so on, thereby training several different models inside our big model.
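Here is a minimal, pure-Python sketch of a nested (Matryoshka-style) feed-forward layer, loosely inspired by the published MatFormer design. The sizes, the names, and the choice to sample one width per training step are assumptions for illustration; the loss and gradient update are left out. What it shows is the nesting: a smaller doll is simply the first `width` hidden units of the same weight matrices, so running it only requires that slice to be in RAM.

```python
import random

random.seed(0)
D_MODEL, D_HIDDEN = 4, 16
GRANULARITIES = [2, 4, 8, 16]  # nested hidden widths; each doll is a prefix of the next

# One shared set of weights for the largest doll (the full layer).
W_in = [[random.gauss(0.0, 0.1) for _ in range(D_HIDDEN)] for _ in range(D_MODEL)]
W_out = [[random.gauss(0.0, 0.1) for _ in range(D_MODEL)] for _ in range(D_HIDDEN)]

def nested_ffn(x, width):
    """Feed-forward layer (ReLU) that only touches the first `width` hidden units.
    Smaller widths reuse a prefix of the same weights, so each small doll
    lives inside every bigger one."""
    hidden = [max(0.0, sum(x[i] * W_in[i][j] for i in range(D_MODEL))) for j in range(width)]
    return [sum(hidden[j] * W_out[j][k] for j in range(width)) for k in range(D_MODEL)]

def weights_needed(width):
    """Weights that must be in RAM for this doll: `width` columns of W_in plus `width` rows of W_out."""
    return 2 * D_MODEL * width

def training_step(x):
    """Sample one doll per step so every nested width gets trained.
    The loss and gradient update are omitted in this sketch."""
    width = random.choice(GRANULARITIES)
    return width, nested_ffn(x, width)

x = [1.0, -0.5, 0.25, 2.0]
for w in GRANULARITIES:
    print(w, weights_needed(w), [round(v, 3) for v in nested_ffn(x, w)])
```

In this toy layer, running the width-4 doll needs only 32 of the layer’s 128 weights in memory, and its output does not depend on the other 96 at all.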

And what do we get from this?

Simple: depending on the complexity of the task, we can offload a considerable portion of the model to hard memory because it won’t be used for that task. This lets us run a fully functional large AI model on hardware that, in naive conditions, could not run it; the RAM constraint, the biggest restriction for small on-device models, becomes much less of a problem.

Using an iPhone as an example, a high-end model can have 1,000 GB of hard memory but only 8 GB of RAM. This means that, in naive implementations, the largest model you can load onto your smartphone is, at best, 5 GB. Instead, with the Matryoshka form factor, you could have a 100 GB model broken into 20 parts of 5 GB and only load 5 GB at a time, with the benefit that this 5 GB model would be far superior to a standalone 5 GB model, thanks to having been trained alongside 19 other nested models; it’s small like David, but it punches like Goliath.

So, what has Google done?

Gemma3n

Circling back to Google, they have announced Gemma3n, a mobile-first Matryoshka model that, according to the LMArena benchmark, is almost as good as Claude 3.7 Sonnet.

Of course, take this not with a pinch of salt but with an entire bag. As we’ve mentioned multiple times, benchmarks are gamed by the AI Labs.

In Google’s case, the different dolls are assembled not on a task basis but by modality. In other words, based on the modality the user sends (e.g., a text passage, an image, an audio file), the model loads the effective parameters for that modality; it loads the Matryoshka doll model specialized in that modality.
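A minimal sketch of modality-based loading, assuming a hypothetical split of the model into a shared text core plus modality-specific groups. The group names and sizes (in millions of parameters) are made up for illustration and are not Gemma3n’s actual breakdown; the point is only that the request’s modality determines which groups must sit in RAM, while the rest can stay in hard memory.

```python
# Made-up parameter groups, in millions, purely for illustration (not Gemma3n's real split).
PARAM_GROUPS_M = {"text_core": 2000, "vision": 500, "audio": 700}

# Hypothetical mapping from input modality to the groups that must sit in RAM.
GROUPS_FOR = {
    "text": ["text_core"],
    "image": ["text_core", "vision"],
    "audio": ["text_core", "audio"],
}

def params_in_ram(modality):
    """Parameters (in millions) loaded into RAM for a request of this modality."""
    return sum(PARAM_GROUPS_M[group] for group in GROUPS_FOR[modality])

def params_in_storage(modality):
    """Everything not needed for this modality stays in hard memory."""
    return sum(PARAM_GROUPS_M.values()) - params_in_ram(modality)

for modality in GROUPS_FOR:
    print(modality, params_in_ram(modality), params_in_storage(modality))
```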

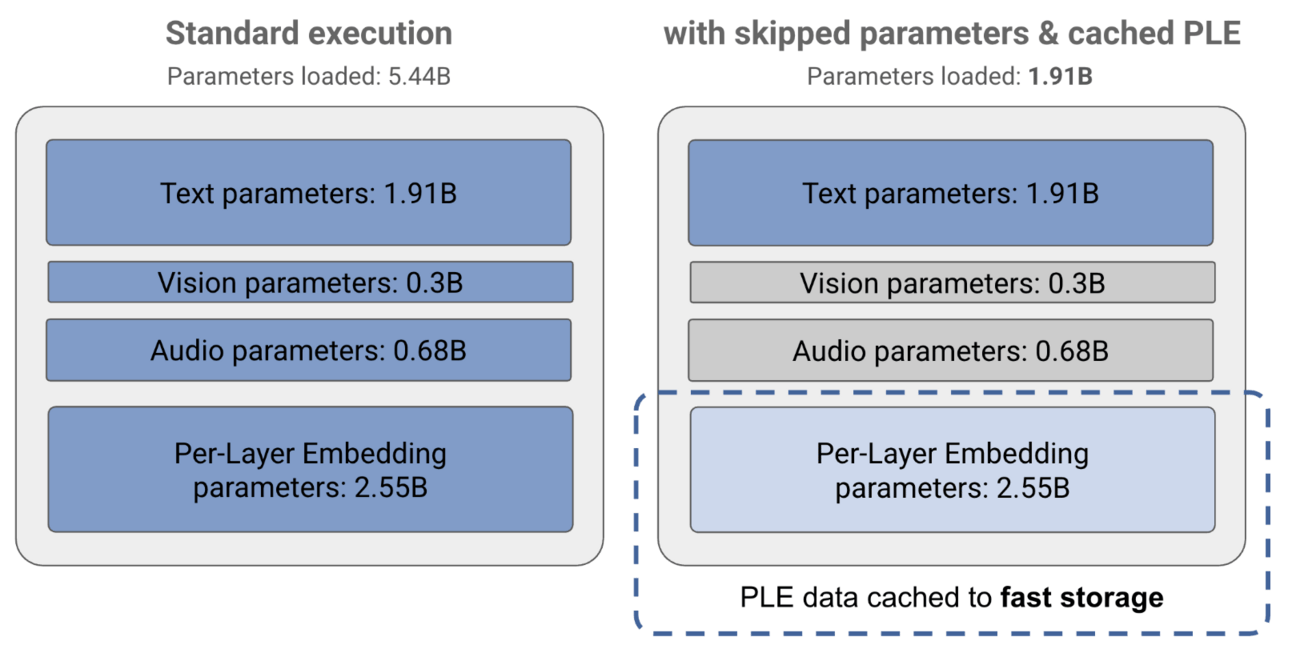

As a result, instead of requiring 5 GB of RAM (five billion parameters at 1 byte each), the model can run with just 2 GB without sacrificing a dime of performance.

Additionally, although we won’t go deep into it because Google gives few details, they also added another innovation, Per-Layer Embedding (PLE) parameters, which are used for all predictions (think of them as a doll that takes part in every prediction) and are distributed per layer, so they can always be fetched from memory in small chunks.

One possible interpretation of these PLE parameters is that they encode predetermined biases like ‘grammar’, ‘style’, or ‘tone’ that are common to every model response; these are fixed, don’t need to be computed every time, and can thus simply be fetched from memory.

Think of it this way: you don’t consciously think about grammar every time you speak, because it’s deeply internalized in how you speak. Instead, your brain adds that grammar knowledge to everything you say with little to no conscious effort.

All in all, in practice, although the model has 5 billion parameters, a text task only needs 1.91 billion of them loaded into RAM, cutting RAM requirements by roughly 62%.
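The arithmetic behind that figure, as a quick check. It uses the same 1-byte-per-parameter assumption stated earlier and decimal gigabytes; the exact reduction comes out at about 61.8%.

```python
def ram_gb(num_params, bytes_per_param=1):
    """RAM for the weights alone, assuming 1 byte per parameter as in the text."""
    return num_params * bytes_per_param / 1e9

all_params = ram_gb(5e9)    # every parameter loaded: 5.0 GB
text_task = ram_gb(1.91e9)  # only what a text task needs: 1.91 GB
reduction = 1 - text_task / all_params
print(f"{all_params:.2f} GB -> {text_task:.2f} GB, {reduction:.1%} less RAM")
# 5.00 GB -> 1.91 GB, 61.8% less RAM
```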

Closing Thoughts

What a week it has been. From a new frontier model to another insane acquisition to what seems like the first real architectural breakthrough of the year. I’ve missed having a good reason to write about AI architecture and innovation; it’s becoming a rarity.

Luckily, Matryoshka models seem to be here to stay, and I hope you now have a much better understanding of an architecture that could soon be synonymous with smartphone and laptop AI.

And if you’re at Apple and reading this, get to work!

THEWHITEBOX

Premium

If you like this content, by joining Premium, you will receive four times as much content weekly without saturating your inbox. You will even be able to ask the questions you need answers to.

Until next time!