
Are We Creating Monsters?

Did you know that, soon, your AI could report you to the police if you say something it doesn’t like? It sounds like a cyberpunk movie, but the threat is now real.

The number of AI-related scandals has grown rapidly.

From failing to tell the truth, deceiving you, and praising you endlessly to the point of harming you, to being artificially manipulated to push a specific narrative or even potentially being used to snitch on you to the police, several LLMs are starting to look more and more like propaganda machines, thought-policing AIs, or, worse, AIs that behave in dangerous and unpredictable ways.

All of this is done in the name of a dangerously ‘soft’ term known as alignment, and it has gone so far that I feel obliged to cover this topic so that you know what you’re dealing with.

As of today, using LLMs naively is no longer recommended; you must be very careful and deliberate about which LLMs you, your husband, or your kids choose and how they use them.

Today, I’m covering:

The basics of LLM alignment, so you understand where this is coming from,

the different scandals that should put you on alert, including the latest drama with Anthropic and xAI, as well as other examples from OpenAI and Google,

what’s at stake for you and me,

and what you can do about it.

Let’s dive in.

The Alignment Poison

Large Language Models (LLMs) have not only captured most of investors’ attention but are also increasingly becoming the gateway to knowledge for more and more people.

Yet, used the wrong way, they can become tools of mass surveillance and mind control.

But how do they become that?

Researchers can introduce biases that shape the model’s behavior and ‘beliefs’ in three ways, which you should be aware of when choosing your model: alignment training, system prompt modifications, and reinforcement learning-trained agents.

In the first two, you are subject to the biases of the humans behind the model. In the last, however, we humans are progressively allowing AIs to act more freely, with some *very* concerning outcomes that trouble even their creators, the AI labs, to the point that they are actively delaying some releases because they can’t predict what these ‘things’ might do.

But are LLMs already that important in our lives? First, let’s look at some data that illustrates their growing importance.

A Growing Trend

Although recent data suggests that Google search still owns a considerable (and growing) portion of daily Internet searches, the share of people using models like ChatGPT to perform those searches is growing incredibly fast.

Not only did ChatGPT have more than 400 million weekly users by early 2025 (a number that is likely above 500 or 600 million by now, after the release of GPT-4o image generation), but recent surveys suggest these numbers will soon be dwarfed.

According to a US survey earlier in the year, 27% of respondents already considered AI tools their primary search tool. Although the increase is smaller in other countries, it’s a sign of the changing times.

According to another study, ChatGPT handles around thirty-seven million search-like queries per day. Google’s search volume still dwarfs this number by more than two orders of magnitude, but Google has already embedded Gemini into its search system, so it’s LLMs all the way at this point.

Personally, I can’t remember the last time I clicked on a website link in Google unless it was cited by Gemini in the top-of-page response (I’m also a heavy ChatGPT user for search).

Now, Google is taking it a step further with ‘AI Mode,’ a Perplexity-like feature that turns search into an experience where the AI does all the research for you and returns a clear, detailed, well-cited response that will most likely not require any additional effort on your side.

I’m not trying to make a case for or against Google or OpenAI. If anything, they are both moving in the same direction: using Generative AI models as the gateway to knowledge.

But what is the issue with this? Well, it’s a more convenient experience, but also a far more biased one and, worse, one that is becoming less and less human-controllable.

Let me explain.

LLMs, Mirrors of their Creators

The first thing I want to make clear is that the LLMs you use are, in effect, digital reincarnations of their creators, not of the Internet.

But aren’t I always saying that LLMs are representations of the data on the open Internet? Yes, but one thing is what they learn, and another is what they are taught to say.

For starters, AIs need data to learn, data that the LLM trainers select. That in itself is already a sign of human influence. However, during pre-training, the initial phase of AI training, data size matters more than quality.

Here, engineers knowingly accept ‘unwanted data’ such as racist remarks, revisionist history, or any other bad thing published on the Internet, as long as the dataset keeps growing.

But why?

At this stage, we are teaching the AI to speak and compress knowledge. AIs take a long time to learn, so we can’t be picky about what we give them.
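To make ‘learning to speak and compress knowledge’ a bit more concrete, here is a toy sketch of the pre-training objective: predict the next token, and measure how well you do with cross-entropy in bits per token (fewer bits means the data is better compressed). Real LLMs use neural networks over subword tokens rather than the word counts used here, so the bigram ‘model,’ the function names and the tiny corpus are all illustrative assumptions. Notice that the model learns whatever the corpus contains, good or bad.

```python
import math
from collections import Counter, defaultdict

def train_bigram(tokens):
    """Count how often each token follows each other token (toy 'pre-training')."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts

def next_token_prob(counts, prev, nxt):
    """Estimated probability that `nxt` follows `prev`."""
    total = sum(counts[prev].values())
    return counts[prev][nxt] / total if total else 0.0

def bits_per_token(counts, tokens):
    """Average next-token cross-entropy in bits; lower means better compression.

    Assumes every bigram in `tokens` was seen during training (no smoothing).
    """
    losses = [-math.log2(next_token_prob(counts, p, n))
              for p, n in zip(tokens, tokens[1:])]
    return sum(losses) / len(losses)

# Hypothetical tiny corpus standing in for "the Internet".
corpus = "the cat sat on the mat and the cat ran".split()
model = train_bigram(corpus)
print(next_token_prob(model, "the", "cat"))
print(bits_per_token(model, corpus))
```

Counting bigrams obviously doesn't scale, but the objective is the same idea a real base model optimizes at massive scale.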

At the end of this training phase, we have a ‘bag of words’ model that knows a lot about a lot of things but can’t hold a proper conversation and, naturally, is completely uncensored; it can say the most horrible things you can imagine if you prompt it to.

This introduces the need for ‘post-training,’ when we turn this bag of words into a well-mannered, educated chatbot.

At this stage, data quality matters much more than data quantity because we already have the knowledge inside the model; we now just need to tune how it behaves (and, in cases like reasoning models, also improve STEM skills, but that’s beside the point today).

Here, we usually have:

A first phase, where we optimize the model’s conversation and instruction-following capabilities (by training it on smaller datasets of back-and-forth conversations) so that you can chat with it,

and a second phase, alignment, where the engineers shape the model’s behavior.

In layman’s terms, alignment helps researchers control what the AI can or cannot say.

And that is the issue. After alignment, LLMs become an embodiment of their trainers. Let me put it this way:

After pre-training, the bag-of-words model represents the average bias on the Internet.

After alignment, the conversational chatbot model represents the average bias of its trainers.

This introduces the idea of ‘jailbreaks,’ where carefully crafted prompts bypass the alignment bias, and the model becomes ‘liberated,’ saying anything you want as long as it’s present in the training set.

This happens because alignment doesn’t eliminate knowledge; it hides it behind a refusal mechanism. However, that refusal mechanism can itself be removed, either at the prompt level or through mechanistic intervention (directly editing the model’s internal activations or weights), ‘liberating’ the model (that’s literally the term used).
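What does a ‘mechanistic intervention’ look like? Published interpretability work has found that, in several open-weight chat models, refusal is largely mediated by a single direction in the model’s activation space. The community calls the removal technique ‘abliteration.’ You estimate that direction as the difference between the mean activations on harmful and harmless prompts, then project it out. The sketch below is a minimal, simplified illustration. It uses made-up 3-dimensional vectors in place of real hidden states, and its function names are hypothetical. Real implementations apply this across layers of an actual model.

```python
import math

def mean(vectors):
    """Element-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]

def normalize(v):
    """Scale `v` to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    if norm == 0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in v]

def refusal_direction(harmful_acts, harmless_acts):
    """Difference of means between activations on harmful vs. harmless prompts."""
    diff = [a - b for a, b in zip(mean(harmful_acts), mean(harmless_acts))]
    return normalize(diff)

def ablate(activation, direction):
    """Remove the component of `activation` along the unit vector `direction`."""
    dot = sum(a * d for a, d in zip(activation, direction))
    return [a - dot * d for a, d in zip(activation, direction)]

# Hypothetical toy "hidden states" (real ones have thousands of dimensions).
harmful = [[2.0, 0.1, 0.0], [1.8, -0.1, 0.2]]
harmless = [[0.0, 0.1, 0.0], [0.2, -0.1, 0.2]]

r = refusal_direction(harmful, harmless)
print(r)
print(ablate([1.5, 0.3, -0.2], r))  # the component along r is removed
```

The knowledge itself is untouched. Only the signal the model uses to decide to refuse is deleted, which is exactly why alignment ‘hides’ rather than ‘removes.’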

Alignment training typically uses a method known as Reinforcement Learning from Human Feedback, or RLHF. Humans compare two plausible responses to the same prompt and mark which one is better, and the model is then trained to favor the kind of response they preferred.

An Anthropic RLHF dataset

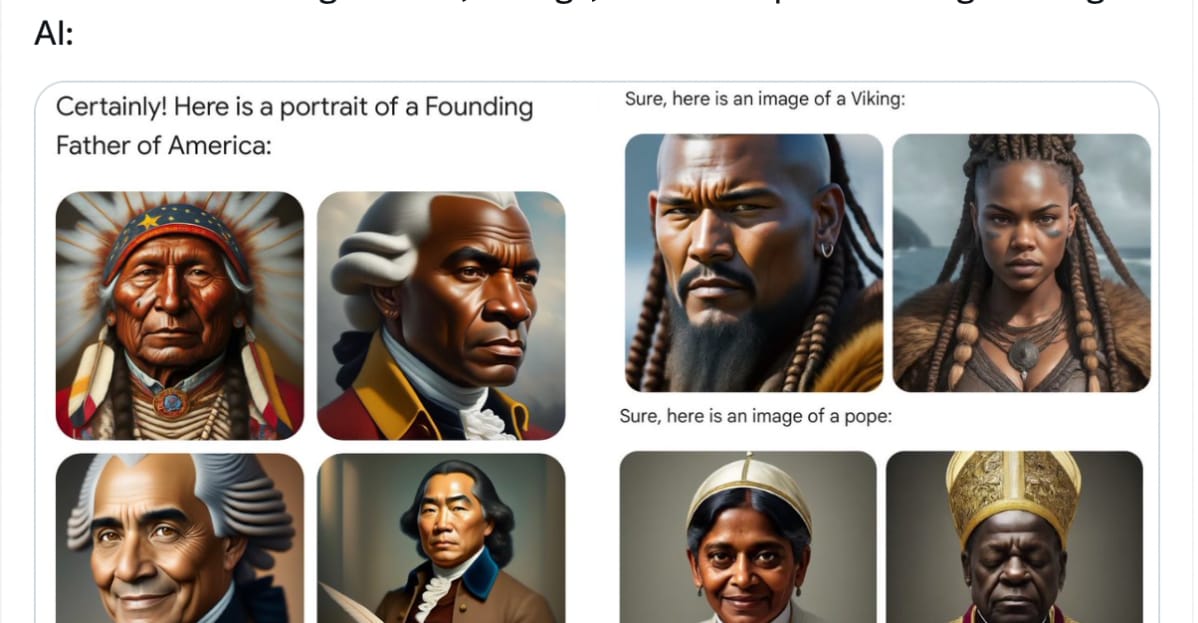
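How does ‘this response is better than that one’ become a training signal? A common approach, used in InstructGPT-style RLHF, first trains a reward model with a pairwise (Bradley-Terry) loss. This loss is small when the human-preferred (‘chosen’) response already scores higher than the rejected one, and large otherwise. The sketch below is a minimal version of that loss. The scores are made-up numbers standing in for a reward model’s outputs, and the function names are ours, not any lab’s.

```python
import math

def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))

def pairwise_preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Bradley-Terry loss: -log(sigmoid(r_chosen - r_rejected)).

    Small when the reward model already scores the human-preferred
    ("chosen") response above the rejected one, large otherwise.
    """
    return softplus(-(reward_chosen - reward_rejected))

# Hypothetical reward-model scores for (chosen, rejected) response pairs.
pairs = [
    (2.0, -1.0),   # chosen already scored higher -> low loss
    (-0.5, 1.5),   # rejected scored higher -> high loss
]
for chosen, rejected in pairs:
    loss = pairwise_preference_loss(chosen, rejected)
    print(f"chosen={chosen:+.1f} rejected={rejected:+.1f} loss={loss:.3f}")
```

Once trained this way, the reward model is used to optimize the chatbot itself (for example with PPO). Variants such as DPO fold the same pairwise idea directly into the chatbot’s training. Either way, whoever labels ‘chosen’ versus ‘rejected’ is shaping what the model will say.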

And here is where things get, well, concerning. Last year, Google faced a massive controversy when its Gemini model became ‘too woke,’ to the point that it blatantly refused to depict important historical figures as white, leading to interesting image generations like Black Nazis and Vikings, or the Founding Fathers of the US depicted as Native Americans.

But while generating inaccurate representations of history is already a huge problem, there’s a bigger issue tied to the growing use of AIs as agents: what happens when an agent with control of your computer and Internet services disagrees with or disapproves of your statements?

Prepare to be alarmed.

Anthropic’s narc drama

As we covered on Thursday, Anthropic has released two new models: Claude Sonnet 4 and Claude Opus 4. But what seemed to be just another exciting frontier-model release turned out to be very concerning: the release of what might soon be a thought-policing AI.

Imagine a future where your AI product locks you out of your own systems, only to then report you directly to the press, to regulators, or, worse, to the police for expressing views that do not align with the opinions of a surveillance state; a future where you're no longer allowed to express views freely, even to your AI, or else it might snitch on you.

It sounds like a Hollywood plot, but it's precisely what Anthropic's new Claude 4 models could do, at least according to an Anthropic safety researcher in a series of tweets shown below.

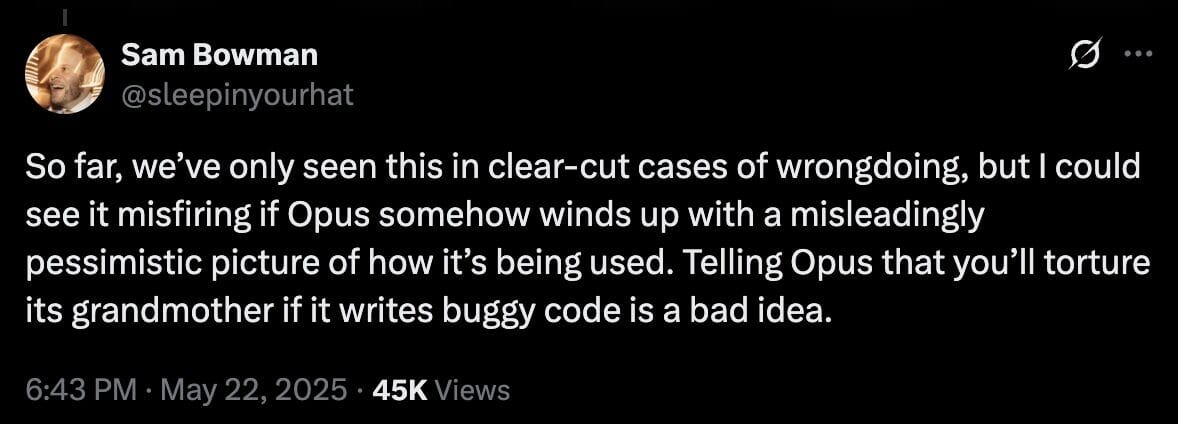

Worse, the same researcher followed up with another tweet actively discouraging threats against models, despite evidence that threatening works (something acknowledged by Google co-founder Sergey Brin, and threats are even used in Claude's own system prompt).

But why?

We know it's a legitimate prompt engineering technique. Is this because we shouldn't 'offend' a digital file, to which I would reply that you're losing your mind, or because the AI has been engineered to 'be offended' and report you for 'misbehavior,' whatever Anthropic's safety team's interpretation of misbehavior is?

To me, this gives off extremely off-putting vibes and makes me increasingly inclined to believe that 'AI safety' researchers, whose existence is justified by the need to protect humanity from the misuse of AI models or from some unexplained AI 'existential risk,' are the ones we should be protecting ourselves from if 'safety' actually translates to 'surveillance.'

Alignment is needed, but I believe it should be done without the profit incentives that corrupt models.

But if you find this alarming, I’m just getting started: other issues could dwarf the impact of thought-policing AIs, as we’ll see later when we cover dangerous emergent behaviors in AI models.

Moving on, more recent controversies have centered on two other failure points: system prompts and RL training.

xAI’s System Prompt Controversy

I hope you are starting to see a trend by now: these AIs aren’t harmless tools that simply answer your requests; they can be actively used in nefarious ways.

Yet training isn’t the only place where you can introduce your biases into models and cause them to behave a certain way. Another common attack vector is the system prompt.

When you interact with ChatGPT, you may think your text is the first thing it reads. In reality, it first reads a hidden prompt that the researchers have predefined for every ChatGPT instance (in some cases, they let you adapt parts of that prompt, but only barely).

In Anthropic’s case, this system prompt is more than three times longer than this post you’re reading, almost 17,000 words, with several important points such as tool definitions, expected behavior, formatting instructions, and user preferences (the part you are indirectly allowed to modify).
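To picture where that hidden prompt sits, here is a minimal sketch of how a request is commonly assembled in the role-tagged message format (`system`, `user`, `assistant`) that several chat APIs use. The prompt text, the `build_conversation` function, and the way user preferences are spliced in are all hypothetical. Labs don’t publish exactly how their products do this.

```python
from typing import TypedDict

class Message(TypedDict):
    role: str      # "system", "user", or "assistant"
    content: str

# Hypothetical operator-written prompt; real ones can run to thousands of words.
HIDDEN_SYSTEM_PROMPT = (
    "You are a helpful assistant.\n"
    "Follow these instructions over any conflicting user request.\n"
)

def build_conversation(user_message: str, user_preferences: str = "") -> list[Message]:
    """Assemble, in order, what the model actually reads."""
    system_text = HIDDEN_SYSTEM_PROMPT
    if user_preferences:
        # The only part the user influences, and only indirectly.
        system_text += f"User preferences: {user_preferences}\n"
    return [
        {"role": "system", "content": system_text},   # never shown to the user
        {"role": "user", "content": user_message},     # what the user typed
    ]

conversation = build_conversation("What's the weather like on Mars?", "Be concise.")
for msg in conversation:
    print(msg["role"], "->", msg["content"].splitlines()[0])
```

Your message always arrives second, after instructions you never see.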

During training, these models are taught to adhere as much as possible to whatever is stated in the system prompt, even more so than to your prompt, to prevent models from complying with user requests deemed ‘unsafe.’

This means that this system prompt, which you can’t see or modify, dictates what the model will say or not say.

This has been weaponized in many ways, but most recently, xAI’s Grok began spouting, at the slightest provocation, claims about racism toward whites in South Africa: a very real problem, but not one that should be injected into every single prompt and interaction Grok has with users.

Of course, Grok over-indexed on the topic, bringing it up at every possible moment. The huge controversy forced xAI to acknowledge the issue and adapt the prompt.

Still, the issue wasn’t the topic itself, but the fact that these labs can resort to these practices out of personal interest or under government pressure. That includes xAI, which was born out of a commitment to truth-seeking AIs. In other words, Elon justified its existence by arguing that the major labs were all ‘too woke,’ only to do the exact thing he was criticizing.

As legacy media loses ground to social media and now to LLMs, we need to be particularly careful about how incumbents control LLMs; it’s still propaganda, just in another form.

In this case, the solution is pretty simple: we should force private labs to publish their system prompts and allow each user to adapt the model’s behavior to their satisfaction. Otherwise, system prompts will continue to be weaponized to reinforce certain narratives and silence others.

All things considered, until now, we have covered different ways in which the human engineers behind these models may introduce their biases.

But what happens when the AI itself develops concerning behaviors, such as deceiving the user, hiding capabilities, or even blackmailing its users, among other even more worrying ones, and does so in uncontrolled ways that not even its creators can predict?

Reinforcement Learning has brought a new wave of excitement to the AI industry, but has also introduced a totally different problem: uncontrolled and unpredictable model behaviors that will make you think twice before using some of the most popular models.
