THEWHITEBOX

TL;DR

This week’s big revelation? Models trained on incorrect data showed significant mathematical gains. We’ll explain how this is even possible and what it reveals about the future of modern AI.

Meanwhile, Berkeley’s Intuitor project flips the script by training models using their own certainty as feedback, pointing to a new path for learning in fuzzy, non-verifiable domains like writing.

Also, DeepSeek R1 emerges as a serious contender to OpenAI’s o3 in performance (and at a fraction of the cost), while Anthropic open-sources a powerful interpretability tool that might be the key to defusing dangerous behaviors before they emerge.

And finally, as AI token demand explodes, we once again ask: where’s the revenue?

THEWHITEBOX

Things You’ve Missed By Not Being Premium

On Tuesday, we discussed a variety of topics, ranging from o3’s increasingly dangerous behavior to DeepSeek’s newest model. We also took a more philosophical look at AI, with research suggesting that all models converge on the same ‘geometry of meaning.’

Additionally, I went on a little rant about what seems to be software’s strikingly clear future, based on new releases from Mistral and the latest hot startup in AI, Superblocks.

FRONTIER RESEARCH

Training on Incorrect Data… Works?

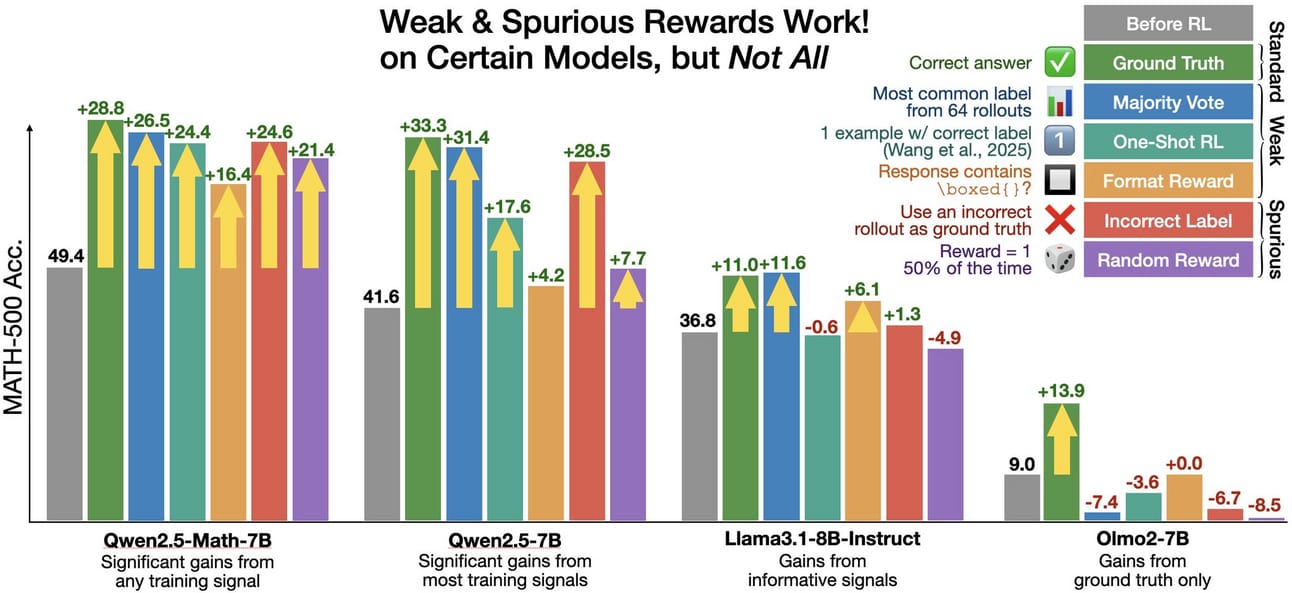

A group of researchers has obtained an 'impossible' result in AI: a model has seen massive gains in maths accuracy (+28%) by training on... incorrect data.

But how? This finding reveals a great deal about the reality of frontier AI.

Modern AI models have reached new heights in "intelligence" by training on verifiable data: problems where we can check whether the model's answer is correct or wrong. That check serves as a strong learning signal and has given us models like Gemini 2.5, o3, or Claude 4 that, in some instances, match or outperform the best of the best among us (in constrained settings, though).

But are these models actually learning new reasoning capabilities, as incumbents will tell you?

Well, the answer is no. This 'new' training method, which is generating so much hype in the industry, is just amplifying behaviors the model already knows; they only emerge if they were there in the first place (and we have much more evidence of this).

To prove this, researchers trained several models on incorrect rewards and found that only some of them benefited. Alibaba's Qwen models improved their performance, but Llama (Meta) and OLMo (AI2) didn't.

But how is that possible?

The reason is that Qwen models are more inclined to generate math and code data by default (due to Alibaba's extra focus on maths and coding during training).

So, even if the training signal is weak (wrong or incomplete), it is still sufficient to "bias the model's response distribution more toward maths and code," meaning it still incentivizes models to generate "math/code-esque" outputs that lead to improved performance.
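For concreteness, here is a hypothetical sketch of the kinds of reward functions such an experiment can compare. The function names and the exact-match check are our own simplifying assumptions, not the researchers' code. Only the first one actually tracks correctness; the other two reward a planted wrong label or nothing in particular, so any gains they produce presumably come from behaviors the model already has.

```python
import random
from typing import Optional

def ground_truth_reward(answer: str, gold: str) -> float:
    """Standard RLVR signal: 1.0 only if the answer matches the correct label."""
    return 1.0 if answer.strip() == gold.strip() else 0.0

def incorrect_label_reward(answer: str, wrong_label: str) -> float:
    """'Incorrect' signal: 1.0 if the answer matches a deliberately WRONG label."""
    return 1.0 if answer.strip() == wrong_label.strip() else 0.0

def random_reward(answer: str, p: float = 0.5, rng: Optional[random.Random] = None) -> float:
    """Random signal: ignores the answer entirely and pays 1.0 with probability p."""
    rng = rng or random.Random()
    return 1.0 if rng.random() < p else 0.0

# Example: the correct answer to some problem is "42"; the planted wrong label is "41".
print(ground_truth_reward("42", "42"))     # 1.0
print(incorrect_label_reward("42", "41"))  # 0.0: the correct answer is penalized
print(random_reward("42", rng=random.Random(0)))
```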

Think of it with this stupid example: if I tell you that 1+1 = 5, which is obviously wrong, it still forces you to recall how addition works, helping you get better at it, yet you're smart enough to know that 1+1 does not equal 5, so you don't learn 'wrong addition.' The key is that weak math training still helps you, but only if you know how to add in the first place!

What this means for you and me is that this training method isn't teaching the model anything new; it's just making pre-learned behaviors more likely.

Put another way, reasoning models (o3) are not better mathematicians than their non-reasoning counterparts (GPT-4o); they are simply much more likely to generate math data.

So, if you're a VC betting on reasoning models as the next big leap, think again: they won't be it until we find new forms of training beyond what we currently have.

In other words, we are still limited by the model's knowledge, which leads us to the same bottleneck we inevitably encounter every single time in AI: new data, which is non-existent for reasoning models and very expensive to obtain.

So, yes, reasoning models are overhyped and are at risk of stagnation, just like the previous generation (more on this later).

AI SAFETY

Anthropic Open-Sources Interpretability Tool

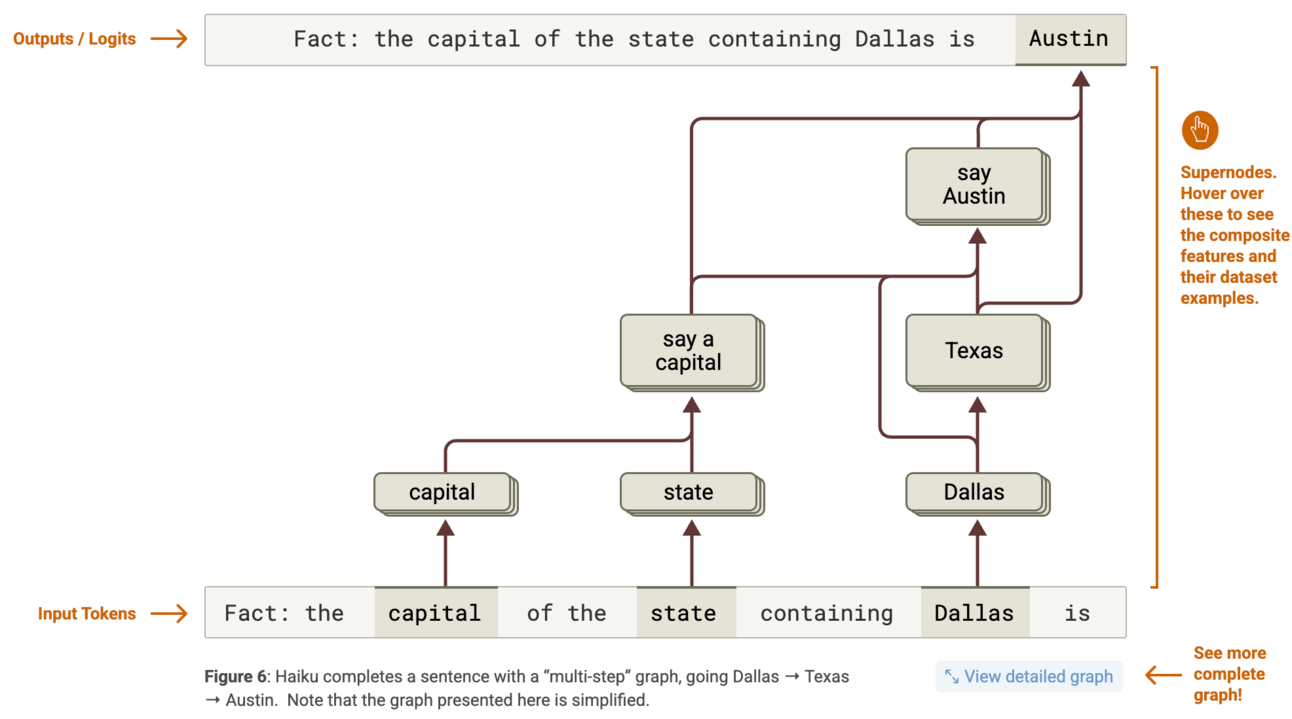

Anthropic has announced it is open-sourcing its interpretability tool, which lets people trace ‘attribution graphs’ inside a model (the internal circuits that form during inference and lead to a certain output) so that we can understand the ‘thought process’ of these models.

As we have enthusiastically covered in this newsletter, this field of AI, mechanistic interpretability, is a fascinating dive into the world of frontier AI models, allowing us to better comprehend how these models generate the content they do.

TheWhiteBox’s takeaway:

As we have shared recently, the new RL-based training methods are powerful but lead to unpredictable behaviors that, if left unchecked, can be extremely dangerous, like models actively trying to lock you out of the computer to prevent you from shutting them down, or even resorting to blackmail.

It’s crucial that, if we are going to coexist with these models or, worse, share a physical space with them, we understand and, crucially, predict their behavior. And to date, no research comes closer to this than Anthropic’s attribution graphs.

But how do Anthropic’s research and tools help here?

Picture an entirely predictable model. If we can identify the dangerous attribution graphs, the areas of the model that lead to blackmailing behavior, we can intervene and shut down those areas, thereby preventing the model from exhibiting that behavior.
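Anthropic’s tool works on real models with far more machinery than this. The sketch below is only a hypothetical toy (made-up weights, a three-unit ‘model’) that shows the two ideas in miniature: attribute an output to internal components, then ‘shut down’ (ablate) the most responsible one and watch the output change. It is not Anthropic’s method or API.

```python
from typing import List, Optional

def relu(x: float) -> float:
    return max(0.0, x)

# Hypothetical toy network with made-up weights: 3 inputs -> 3 hidden units -> 1 output.
W1 = [
    [0.5, -0.2, 0.1],   # weights into hidden unit 0
    [0.9, 0.8, 0.0],    # weights into hidden unit 1
    [-0.3, 0.4, 0.6],   # weights into hidden unit 2
]
W2 = [0.2, 1.5, -0.4]   # readout weight from each hidden unit to the output

def hidden(x: List[float]) -> List[float]:
    return [relu(sum(w * xi for w, xi in zip(row, x))) for row in W1]

def attributions(x: List[float]) -> List[float]:
    """Each hidden unit's contribution to the output (exact, since the readout is linear)."""
    return [w * h for w, h in zip(W2, hidden(x))]

def output(x: List[float], ablate: Optional[int] = None) -> float:
    """Forward pass; optionally 'shut down' one hidden unit by zeroing its activation."""
    h = hidden(x)
    if ablate is not None:
        h[ablate] = 0.0
    return sum(w * hi for w, hi in zip(W2, h))

x = [1.0, 1.0, 0.0]
contrib = attributions(x)
culprit = max(range(len(contrib)), key=lambda i: abs(contrib[i]))
print(contrib)                                   # unit 1 dominates
print(output(x), output(x, ablate=culprit))      # output collapses once it is ablated
```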

As too much money is at stake to halt the train altogether, and it is already in motion, we need to find ways to steer it before it derails. If not, I don’t think we will be getting Terminator robots anytime soon, but we may well encounter models that could make your life miserable.

ANTITRUST

Antitrust judge points to AI in Google’s case

According to the Wall Street Journal, a U.S. judge is weighing new, AI-focused remedies after ruling that Google illegally maintained a monopoly in online search.

Although the judge’s ruling that Google had a monopoly in search came several months back, the article highlights how prosecutors argue that Google is leveraging its entrenched position and user base to define how generative AI is introduced to the public, effectively using its monopoly in traditional search to entrench itself in the next technological era.

In other words, the judge could consider putting curbs on how Google competes in artificial intelligence.

TheWhiteBox’s takeaway:

I will say I’m a Google shareholder, so I’m biased.

But I don’t see how preventing Google from competing in the new era of search is anything but unfair. Yes, Google has a monopoly, and it might make sense to force them to divest some products like Chrome, but saying, ‘Oh, you have a monopoly, so instead of letting you compete in AI, I will simply force you to surrender the entire business’, is a wild view.

Although the judge may be thinking that this would prevent Google from dominating the next wave of AI-powered search, that would not be the consequence. Instead, it would literally throw them out of the race, as AI will be the center point around which Internet search is done in the future.

As a European, I’m all for antitrust laws that prevent monopolies from dominating customers, but that’s one thing; destroying a company’s future is another.

AI ECONOMY

The Explosion of AI Demand

This blog post by venture capitalist Tomasz Tunguz discusses the surge in demand that many infrastructure providers are experiencing, citing potential increases in demand of up to 1,000 times the previous level.

He attributes this to reasoning models, which consume far more tokens than previous, non-reasoning LLMs.

Among the data points he shares:

Microsoft reached 100 trillion processed tokens in Q1, a fivefold increase year-over-year.

Hyperscalers are deploying, on average, 1,000 NVL72 NVIDIA racks, each with 72 GPUs.

The number of AI factories (GPU clusters producing tokens) in flight has reached 100, double last year’s figure, and the number of GPUs per factory has also doubled, resulting in a 4x total increase in deployed GPUs in just over a year (with each GPU being more powerful than previous ones).
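These figures compound by simple multiplication. A quick sanity check, taking the numbers above at face value (the 50-factory baseline is inferred from ‘double the number from last year’):

```python
racks = 1_000          # NVL72 racks deployed per hyperscaler, on average
gpus_per_rack = 72
total_gpus = racks * gpus_per_rack
print(total_gpus)      # 72000

factories_now, factories_last_year = 100, 50   # "double the number from last year"
gpus_per_factory_growth = 2.0                  # GPUs per factory also doubled
total_growth = (factories_now / factories_last_year) * gpus_per_factory_growth
print(total_growth)    # 4.0
```

The 4x refers to GPU count only; since each new GPU is also more powerful, deployed compute grew faster still.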

TheWhiteBox’s takeaway:

Impressive statistics, but as we always say, where is the money? How much of this demand is free service to users?

Consider the following:

95% of ChatGPT queries are estimated to be free.

A similar number of free queries is expected from other labs as they attempt to establish a user base.

Prices are dead cheap, squeezing margins or even forcing some Labs or LLM providers to serve models at a loss.

Most of Google’s processed tokens come from AI Overviews in search, which are, again, free.

Grok gets much of its use from people asking for clarifications on other people’s tweets. Grok is a paid service, but there is no way it’s paying for itself right now, considering the number of people asking it questions every single minute.

Perplexity forgoes a significant share of potential sales by offering Premium subscriptions for free in the name of growth.

And the list goes on. Investors know this, so while the demand is welcome, we had better start seeing revenue growth.

FRONTIER MODELS

DeepSeek’s New R1 Model is Very Good

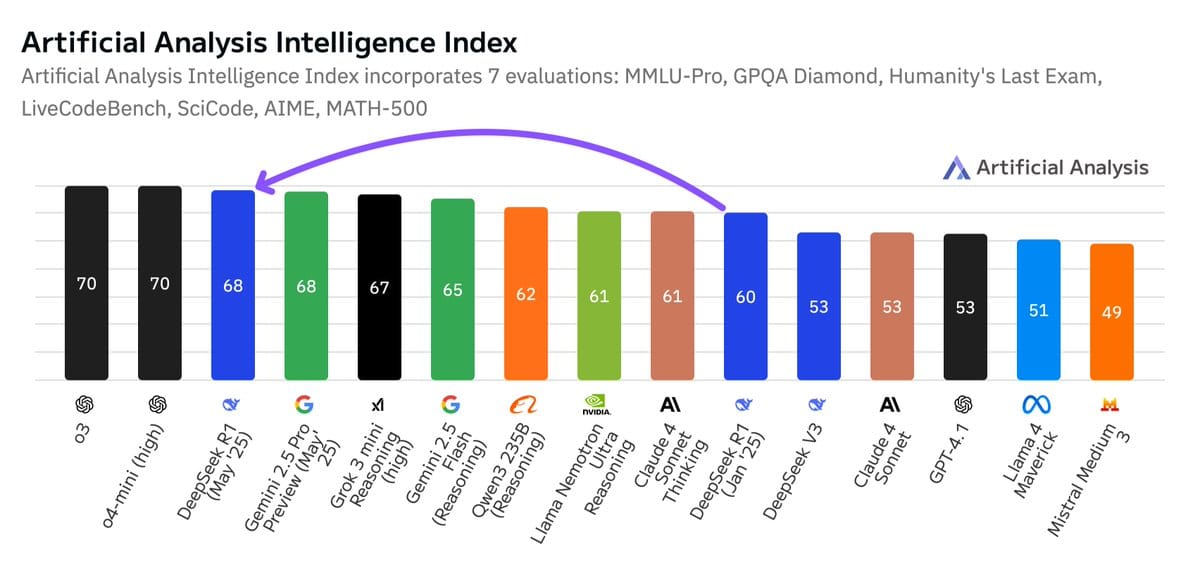

According to Artificial Analysis, an independent and well-respected AI model evaluation company, DeepSeek’s new model, R1-0528, which we covered on Tuesday, is now the second-best model overall in their intelligence index, only behind OpenAI’s o3 and surpassing Google’s Gemini models.

Open source is back in the fight!

TheWhiteBox’s takeaway:

The most important thing to highlight is cost. DeepSeek R1-0528 is significantly cheaper than OpenAI’s o3 on a token basis despite its performance being increasingly comparable, making it a compelling ‘best value’ offering alongside Gemini.

DeepSeek R1-0528 costs $0.55 per 1 million input tokens and $2.19 per 1 million output tokens.

OpenAI’s o3 is $10 and $40, respectively, which is considerably more expensive.

That said, DeepSeek is much wordier, meaning it generates far more tokens than o3 for the same task. Still, that’s not enough to close the gap; o3 remains a much more expensive model overall.
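To see why verbosity alone doesn’t close the gap, here is a rough per-request cost comparison using the list prices above. The token counts, including the assumption that DeepSeek writes three times as many output tokens as o3, are hypothetical.

```python
PRICES = {  # USD per 1 million tokens: (input, output), list prices quoted above
    "deepseek-r1-0528": (0.55, 2.19),
    "o3": (10.0, 40.0),
}

def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    in_price, out_price = PRICES[model]
    return (input_tokens * in_price + output_tokens * out_price) / 1_000_000

prompt_tokens = 2_000
o3_output = 5_000                 # hypothetical
deepseek_output = 3 * o3_output   # hypothetical: 3x wordier

o3_cost = request_cost("o3", prompt_tokens, o3_output)
ds_cost = request_cost("deepseek-r1-0528", prompt_tokens, deepseek_output)
print(f"o3: ${o3_cost:.4f}  DeepSeek: ${ds_cost:.4f}  ratio: {o3_cost / ds_cost:.1f}x")

# Output-price ratio: how many times wordier DeepSeek would need to be
# for its output tokens alone to cost as much as o3's.
print(round(40.0 / 2.19, 1))  # 18.3
```

Under these assumptions, DeepSeek would have to be roughly 18 times wordier before its output bill caught up with o3’s.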

You can access DeepSeek’s new model in the DeepSeek app.

LIQUID MODELS

Perplexity Launches ‘Labs’

Perplexity Labs is a new feature for Pro users that transforms ideas into complete projects, such as reports, spreadsheets, dashboards, or simple web apps, using AI-driven research, code execution, and data visualization. It automates tasks that normally take hours or days, at least according to Perplexity.

Accessible via web and mobile, Labs expands Perplexity from answering questions to delivering usable outputs.

TheWhiteBox’s takeaway:

Simply put, this is just a way to make the product’s outputs easier to use, letting you download them separately or combined into a report.

Nothing remarkable, but it allows me to remind you that we are seeing the democratization of research or, put more bluntly, a new nail in the coffin of due diligence consulting: the days of paying McKinsey millions to search the Internet for you and hand back a handful of insights that boil down to ‘increase revenue, cut costs’ are basically gone.

Fast access to information was a very lucrative business a few years ago. Not anymore.

TREND OF THE WEEK

Learning to Reason with Internal Rewards

Much of the ‘progress’ we are seeing in AI lately relies on a training method where we help models learn by showing them problems with ground-truth answers (e.g., ‘solve this problem with the answer being 7’) and then having them ‘bang their digital heads against the wall’ until they find the solution.

But this is not only expensive; it’s also prone to stagnation, as few areas have a ground-truth answer (maths or coding do), and there’s no such thing as a ground truth for what a good essay is.

But what if… the answer was inside the model all along?

Let’s dive into one of the most exciting new areas of research in AI: intrinsic rewards.

The Hefty Price of Progress

Despite this appearance of unmatched innovation and continued breakthroughs, actual progress in AI is based on very few (yet highly productive) ideas that are ‘milked’ to the extreme.

Real innovation is occurring at the infrastructure level, but at the model layer, algorithmic breakthroughs are few.

So, what is the big idea everyone is milking right now? The answer is RLVR.

Everyone is doing RLVR. Literally.

If you’re a regular reader of this newsletter, you already have great intuition as to how modern AI models learn, but if not, the idea is pretty simple and quick to recap.

There are two stages: imitation learning and trial-and-error learning.

The former is simply exposing AI to a lot of data it must learn to replicate. Known as ‘pre-training,’ it enables the model to acquire knowledge of the world.

The latter is exposing the AI to a challenging task and letting it explore ways to find the solution to the problem while receiving online feedback that guides the exploration process, a training method known as reinforcement learning, because its goal is to ‘reinforce’ good behaviors. It’s just fancy trial and error until “finding the way.”

Pretraining is already a mature training method, but with RL, despite the theory being more than thirty years old, we are only just starting to learn how to do it.

In fact, we currently only know how to do it well at scale on verifiable domains, areas where the reward signal guiding the learning process is unambiguous and well understood.

Whether it’s learning to play chess (AlphaZero) or solving math problems (AlphaProof or OpenAI’s o3), a strong reward signal (we know the solution to the maths problem, or when a chess game is won) allows us to scale this exploration process until these models become strong at the task, or even superhuman, as AlphaZero is at chess.

And the name of this particular type of RL is RLVR (RL on verifiable rewards). As it works, everyone and their grandma is doing it.

Literally.
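The RLVR loop itself fits in a few lines: sample an answer, score it with a verifier, and nudge the model toward whatever scored well. The sketch below is a toy REINFORCE update on a hypothetical ‘model’ that just picks one of four candidate answers; real labs run policy-gradient methods over full generated sequences, but the feedback loop is the same in spirit.

```python
import math
import random
from typing import List

def softmax(logits: List[float]) -> List[float]:
    m = max(logits)                       # subtract max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def verify(answer: int, ground_truth: int) -> float:
    """Verifiable reward: 1.0 if the answer is correct, else 0.0."""
    return 1.0 if answer == ground_truth else 0.0

def train(candidates: List[int], ground_truth: int, steps: int = 500,
          lr: float = 0.5, seed: int = 0) -> List[float]:
    rng = random.Random(seed)
    logits = [0.0] * len(candidates)      # start with no preference
    baseline = 0.0                        # running average reward, reduces variance
    for _ in range(steps):
        probs = softmax(logits)
        i = rng.choices(range(len(candidates)), weights=probs)[0]  # trial...
        reward = verify(candidates[i], ground_truth)               # ...and error
        advantage = reward - baseline
        baseline = 0.9 * baseline + 0.1 * reward
        # REINFORCE: d log p_i / d logit_j = (1 if j == i else 0) - p_j
        for j in range(len(logits)):
            grad = (1.0 if j == i else 0.0) - probs[j]
            logits[j] += lr * advantage * grad
    return softmax(logits)

probs = train(candidates=[5, 6, 7, 8], ground_truth=7)
print([round(p, 3) for p in probs])  # most of the probability mass ends up on 7
```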

Everything points in the same direction.

Knowing what I’ve just told you, it’s not surprising to see that the new hot models in AI, the so-called reasoning models, are only good at reasoning in areas like maths or coding but aren’t necessarily better at things like writing novels, as the latter is a very subjective task where it’s tough to define what’s a good response or a bad one. At the very least, it’s nuanced.

Nuance is the enemy of AI learning, so AI Labs have temporarily set aside those tasks and are now focused solely on areas where they know RLVR works.

Please bear this in mind whenever people tell you AGI is close. For AGI to arrive, we need human-level reasoning in all areas, not just the sciences, and the discovery of a method to achieve this is yet to be made.

However, as we mentioned earlier, we are applying RL to models that, through pretraining, already possess extensive knowledge.

So, instead of trying to find innovative ways to reward model actions so that they learn, which is increasingly expensive and complex, what if we just need the model to make up its mind?

The Power of Intrinsic Rewards

When I say that we ‘verify’ the model’s results, what we need is a very powerful and expensive symbolic engine, such as a math proof evaluator or a code sandbox, that will evaluate the model’s outputs and tell it whether it’s doing the right thing.
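For intuition, here are deliberately simplified, hypothetical stand-ins for both kinds of verifier. Real proof checkers and sandboxes are far more elaborate (and, as noted, expensive), but the contract is the same: take the model’s output, return a score.

```python
import re
from typing import Callable, List, Tuple

def math_reward(model_output: str, ground_truth: str) -> float:
    """Hypothetical math verifier: compare the last number in the output to the answer."""
    numbers = re.findall(r"-?\d+(?:\.\d+)?", model_output)
    if not numbers:
        return 0.0
    return 1.0 if float(numbers[-1]) == float(ground_truth) else 0.0

def code_reward(candidate: Callable[..., object], tests: List[Tuple[tuple, object]]) -> float:
    """Hypothetical code verifier: fraction of unit tests the candidate passes.
    A real sandbox would run untrusted code in isolation, with time limits."""
    passed = 0
    for args, expected in tests:
        try:
            if candidate(*args) == expected:
                passed += 1
        except Exception:
            pass  # a crash counts as a failed test
    return passed / len(tests)

print(math_reward("so the answer is 7", "7"))  # 1.0
print(math_reward("I think it's 8", "7"))      # 0.0
# The last test is deliberately wrong, so only 2 of 3 pass.
print(code_reward(lambda a, b: a + b, [((1, 2), 3), ((2, 2), 4), ((0, 0), 1)]))
```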

However, a group of researchers at UC Berkeley has decided to use the model itself to score its own results, guiding the learning process. In a way, using the model’s ‘gut feeling’ as a learning signal.

And guess what, this system, Intuitor, works.

Using The Model’s ‘Gut Feeling.’

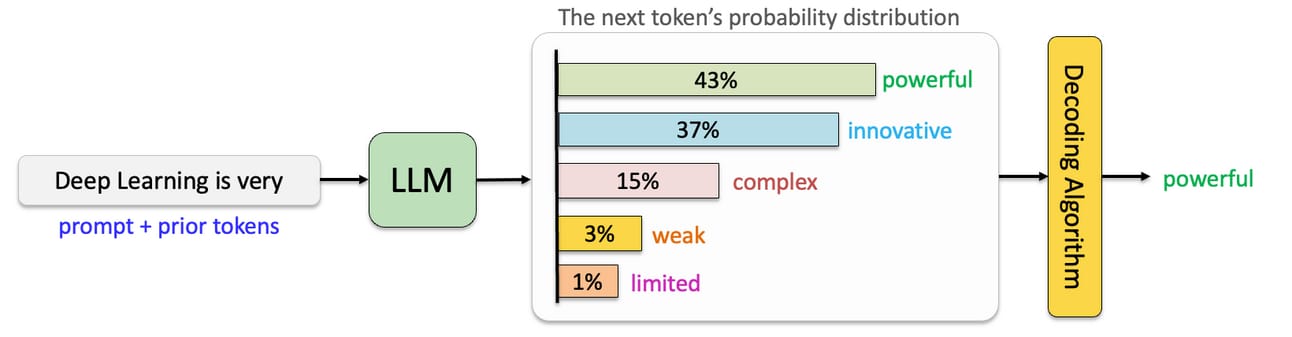

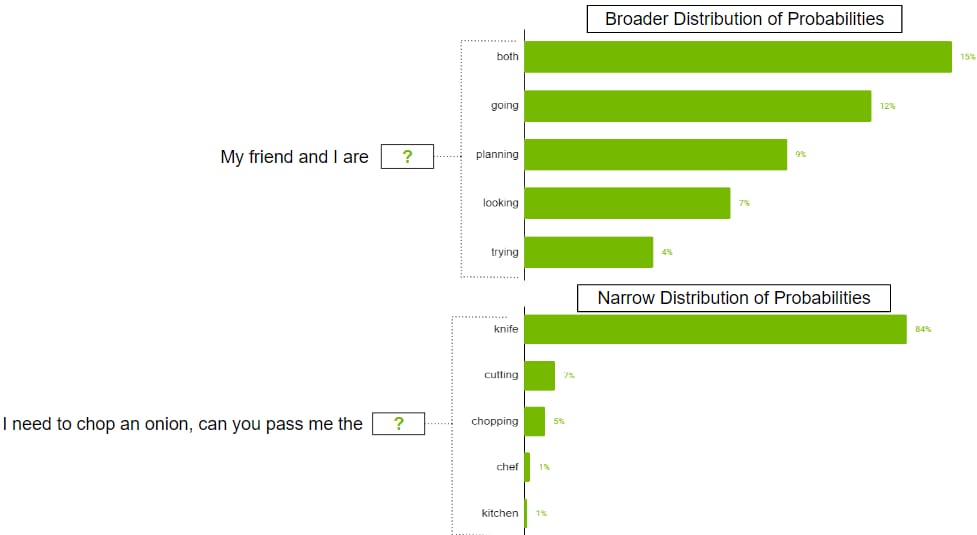

In case you aren’t familiar with how modern language-based AIs work, they don’t just ‘predict the next word.’ In fact, they predict what we describe as a probability distribution.

As shown in the figure, what they actually output is a list of all the words in their vocabulary (the words they know), each with its likelihood. In other words, the model is saying, ‘Out of all the words I know, how likely is each one to come next?’

But why do we do this? Simple: we want the model to ‘model uncertainty,’ that is, to estimate how likely it is to be right… and wrong.

Crucially, the shape of the output distribution broadly reflects how certain the model is about the upcoming prediction. As the figure shows, the top prediction is much less certain about which word comes next than the bottom one, as it allocates a decent share of probability to at least four words.

On the other hand, the bottom prediction allocates an 84% probability to ‘knife,’ which essentially means the model is pretty sure the next word is, indeed, ‘knife.’
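Here is the same idea in a few lines of code: a softmax turns raw scores (logits) into a probability distribution, and entropy summarizes how spread out it is. The four-word vocabulary and the logits are made up for illustration; real models score tens of thousands of tokens or more at every step.

```python
import math
from typing import List

def softmax(logits: List[float]) -> List[float]:
    m = max(logits)                       # subtract max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def entropy(probs: List[float]) -> float:
    """Shannon entropy in nats: high = uncertain, low = confident."""
    return -sum(p * math.log(p) for p in probs if p > 0)

vocab = ["knife", "fork", "spoon", "plate"]
uncertain = softmax([1.0, 0.9, 0.8, 0.7])  # mass spread across all four words
confident = softmax([4.0, 1.5, 1.0, 0.5])  # most mass on "knife"

for name, dist in [("uncertain", uncertain), ("confident", confident)]:
    top = max(range(len(vocab)), key=lambda i: dist[i])
    print(name, vocab[top], round(dist[top], 2), "entropy:", round(entropy(dist), 2))
```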

So researchers posited: What if we use this as the learning signal?

Specifically, they took a similar approach to all RL methods but switched the external reward (the score on a math problem provided by an external system) with a measure of the model’s own certainty about the prediction.

In the example above, the bottom distribution is a good learning signal because it’s a high-certainty prediction, while the top one isn’t. Therefore, we reinforce the model’s outputs whenever it’s certain about them.

Think of it like holding back from answering a question because you are ‘not sure’ whether what you’re about to say is correct. That’s a low-certainty prediction and, thus, should not be reinforced.
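As we understand the Intuitor paper, its signal (which the authors call ‘self-certainty’) averages, over every generated token, the KL divergence between a uniform distribution over the vocabulary and the model’s next-token distribution: zero when the model is maximally unsure, larger when it is confident. A minimal sketch assuming that definition, with made-up distributions over a four-word vocabulary:

```python
import math
from typing import List

def kl_uniform_to(p: List[float]) -> float:
    """KL(U || p): 0.0 if p is uniform, larger the more peaked p is.
    Assumes every p_j > 0, which a softmax guarantees."""
    v = len(p)
    return sum((1.0 / v) * math.log((1.0 / v) / pj) for pj in p)

def self_certainty(step_dists: List[List[float]]) -> float:
    """Average KL(U || p) over the tokens of one generated response."""
    return sum(kl_uniform_to(p) for p in step_dists) / len(step_dists)

# Hypothetical next-token distributions for two 2-token responses
hesitant = [[0.30, 0.25, 0.25, 0.20], [0.25, 0.25, 0.25, 0.25]]
decisive = [[0.84, 0.08, 0.05, 0.03], [0.90, 0.05, 0.03, 0.02]]

print(round(self_certainty(hesitant), 3), round(self_certainty(decisive), 3))
# The decisive response scores higher, so it is the one that would be reinforced.
```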

Consequently, what we are doing is collapsing the model into “known knowns” and away from uncertain ones.

Simply put, we are betting that models know much more than they show, and we are simply helping them retrieve the hidden knowledge they are most certain about.

Crucially, this system can be utilized in areas where external verification systems are not available. This means this system could be used to train a model to become a better writer.

As the model has seen “everything” during its training (these models are trained on all the data their labs can get their hands on, even copyrighted data), the answer to ‘what makes a better writer’ is already present inside the model, and we just need it to ‘emerge.’

And the results are interesting.

A Very Promising Path

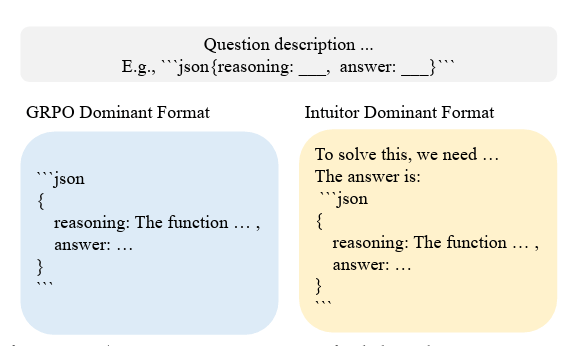

Interestingly, Intuitor demonstrates emergent reasoning: the model learns to reason about the user’s request (right), adding a pre-reasoning step before providing the actual, structured response.

In other words, just as our teachers encourage us to ‘think out loud and explain to ourselves’ how to solve a task before actually doing it, to improve our chances of finding the right path, the model develops ‘spontaneous reasoning’ to strengthen its understanding of the task.

But does this actually improve a model’s performance?

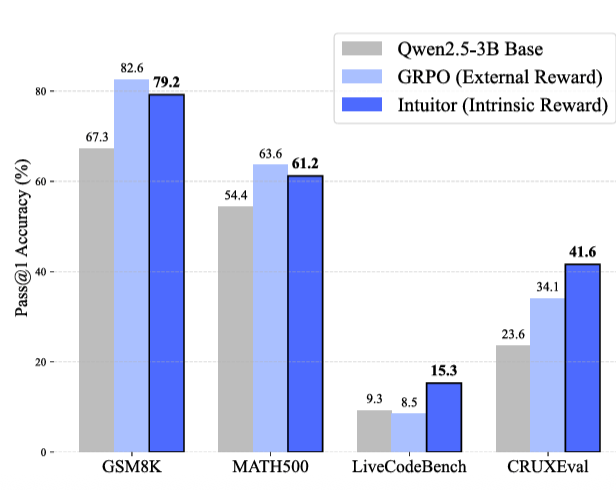

As the graph shows, Intuitor is competitive with GRPO (the RL method used to train models such as DeepSeek R1, here driven by external, verifiable rewards) in mathematics while being superior in areas like coding.

So, yeah, it’s a pretty promising avenue of research.
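GRPO’s core trick is to score a group of sampled answers to the same prompt and reinforce each one by how much it beats the group average. The sketch below shows that normalization with both kinds of reward; Intuitor, as we understand it, keeps this machinery and swaps the verifier’s 0/1 score for self-certainty. The reward values are hypothetical, and real implementations differ in details (such as which standard deviation they use).

```python
import statistics
from typing import List

def group_relative_advantages(rewards: List[float], eps: float = 1e-6) -> List[float]:
    """Normalize each reward against its group: (r - mean) / std."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]

# Four sampled answers to the same prompt
external = [1.0, 0.0, 0.0, 1.0]   # GRPO-style: a verifier says right or wrong
internal = [0.9, 0.2, 0.4, 1.3]   # Intuitor-style: self-certainty scores (made up)

print([round(a, 2) for a in group_relative_advantages(external)])  # [1.0, -1.0, -1.0, 1.0]
print([round(a, 2) for a in group_relative_advantages(internal)])
```

Answers with positive advantages get reinforced and negative ones get suppressed; only the source of the score changes.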

Closing Thoughts

As mentioned, we need to find new ways to evaluate models during learning to continue progressing. While training on verifiable data is beneficial, verifiable data is very scarce in most areas.

We urgently need something that generalizes to ‘non-verifiable’ domains if we truly want to build AGI.

And using the model’s own intuition, or ‘gut,’ appears to be a cost-effective and intuitive way to move forward in those areas, leveraging the model’s extensive knowledge.

But will it work at scale?

We can’t say for now, but what we can say is that the solution makes a lot of sense and, quite frankly, tackles a problem that most Labs are currently sweeping under the rug: real reasoning beyond memorizing maths solutions.

THEWHITEBOX

Join Premium Today!

If you like this content, by joining Premium, you will receive four times as much content weekly without saturating your inbox. You will even be able to ask the questions you need answers to.

Until next time!

Give a Rating to Today's Newsletter

For business inquiries, reach out to me at [email protected]