FUTURE

AI's Darkest Hour

TheWhiteBox is an AI optimist newsletter, one that believes AI is truly transformative and a net good to society. However, AI does have a dark side, pretty dark actually, and we are exploring it in depth today.

Using the latest research from places like Harvard, Stanford, MIT, and OpenAI, as well as soul-crushing real-life stories about things going very wrong with AIs like ChatGPT, even deadly, we will examine the risks that AI poses in areas such as job displacement, loneliness, and even more concerning issues like psychosis, and how AI could even threaten our very existence as a species.

And no, I’m not talking about Terminator AIs killing us all, but a more real threat: fertility rate collapse.

Today, we are examining several real-world problems in our society through the lens of AI. But you’ll learn stories and statistics that go beyond AI, enriching your overall understanding of the current state of affairs.

🚨 Readers be warned: this isn’t going to make you feel any better about the future. You will be blown away by some of the stories and statistics we are discussing today.

It’s pretty interesting, but also pretty sad.

Let’s dive in.

First Hard Data on Job Displacement

We have all been hearing these predictions about AI and job displacement, some quite well-thought-out, while others are quite simply outrageous, making it hard to separate signal from noise.

However, a team of Stanford University researchers has presented the first hard evidence (statistically significant) on AI’s impact on jobs.

And it’s not good.

Asymmetric collapse

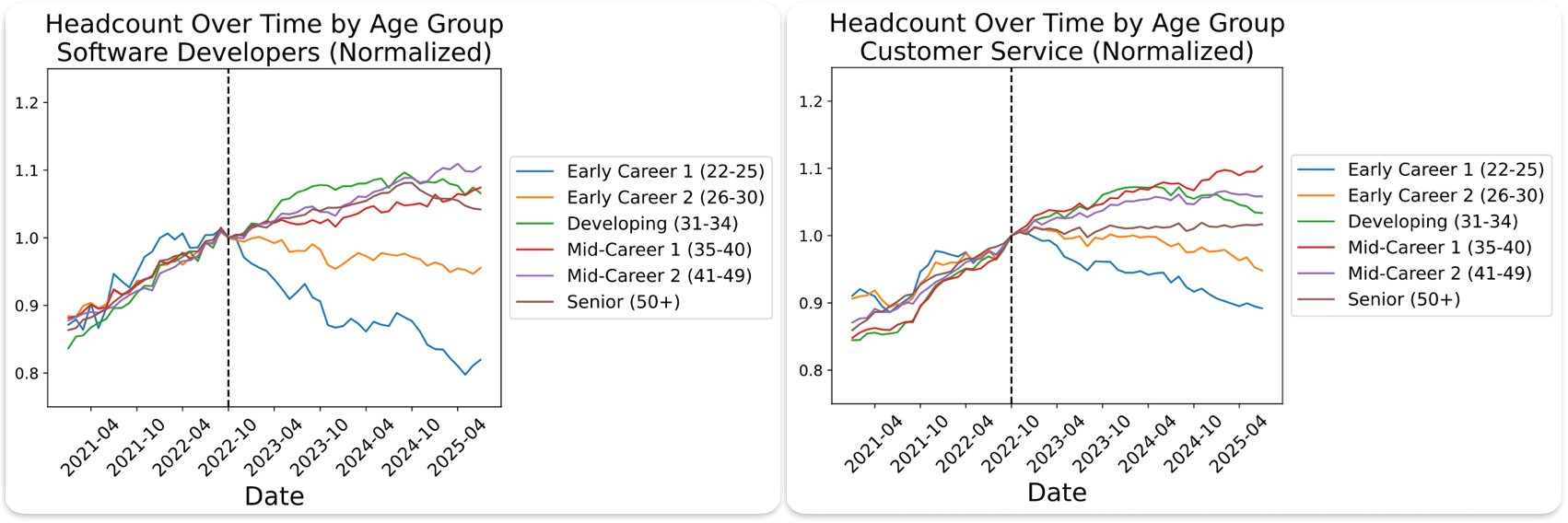

This very recent study analyzes how different jobs, categorized by “AI exposure,” have fared across all age groups since the release of ChatGPT in November 2022.

They find that young workers (ages 22–25) in the most AI-exposed occupations, jobs such as software developers and customer service reps, experienced a 12% relative decline in employment, while employment in less-exposed fields (e.g., nursing aides, stock clerks) grew or remained steady.

This 12% relative decline is measured against the least AI-exposed group, which acts as the control group. The comparison makes it possible to test whether the gap is statistically significant (it is), meaning that a difference this large would be very unlikely to arise by chance alone. That gives a statistically sound basis for the claim that AI is playing a negative role in job prospects for those workers.
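To make the idea of a "relative" decline concrete, here is a minimal Python sketch. It assumes a simple ratio-of-growth comparison between an exposed group and a control group; the study's actual estimation method may well differ, and the function name and headcounts below are hypothetical, chosen only so the result lands near 12%.

```python
def relative_change(exposed_before, exposed_after, control_before, control_after):
    """Growth of the exposed group relative to the control group, as a fraction.

    A negative value means the exposed group grew less (or shrank more)
    than the control group over the same period.
    """
    exposed_growth = exposed_after / exposed_before
    control_growth = control_after / control_before
    return exposed_growth / control_growth - 1.0


# Hypothetical headcounts indexed to 100 at the start of the period.
# These are NOT figures from the study; they only illustrate the arithmetic.
change = relative_change(
    exposed_before=100.0, exposed_after=95.0,   # exposed group shrinks 5%
    control_before=100.0, control_after=108.0,  # control group grows 8%
)
print(f"Relative change vs. control: {change:.1%}")
```

Note that in this made-up example the exposed group only shrinks by 5% in absolute terms. The relative decline is larger because the control group kept growing over the same period.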

Importantly, older workers in exposed jobs did not see comparable declines, illustrating that this overall framing of AI as an “entry-level” worker is, in fact, true.

As you can see below, in the fifth quintile of exposure (jobs amongst the 20% most exposed to AI), there’s a clear divergence in headcounts for different age groups, with headcount for software developers falling by up to 12%:

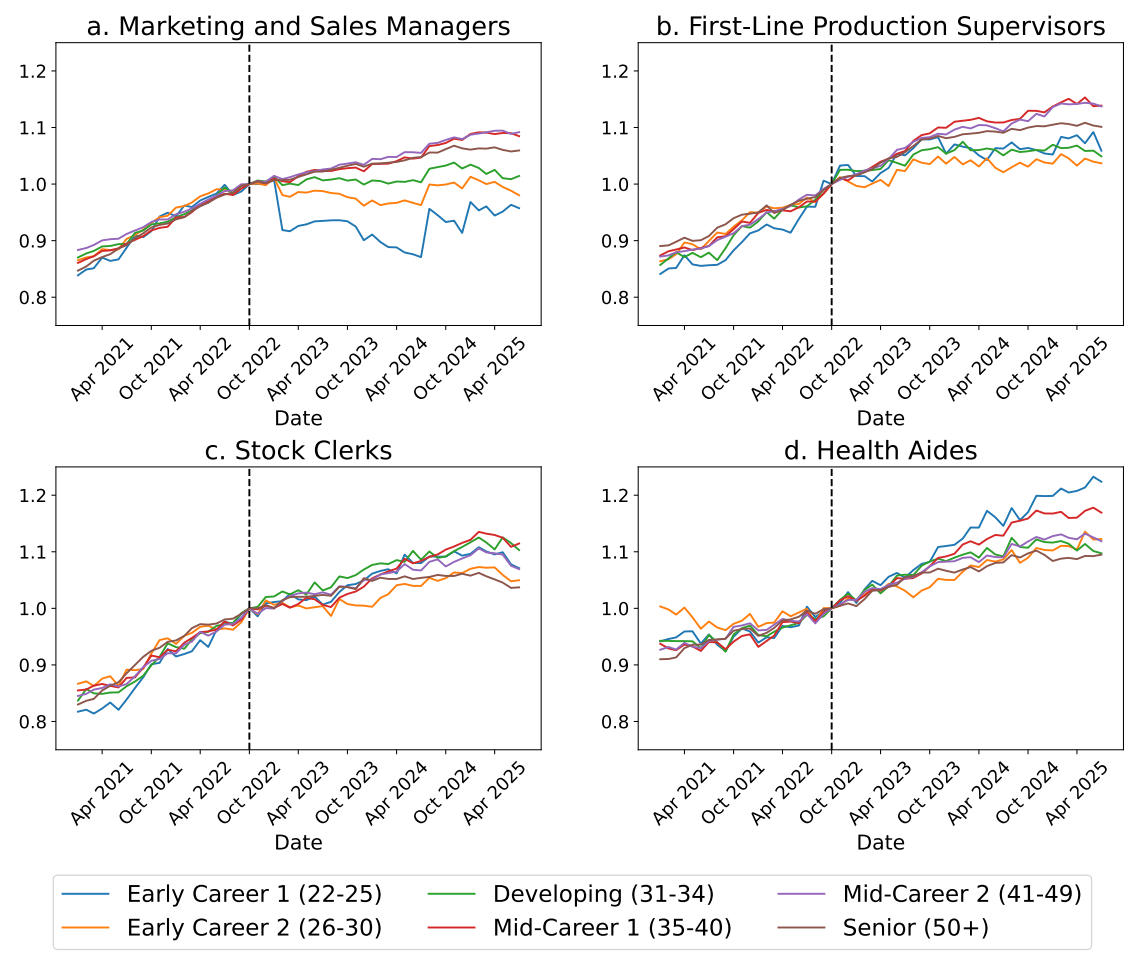

When looking from a broader perspective at less-exposed occupations, such divergence is not seen or is not statistically meaningful:

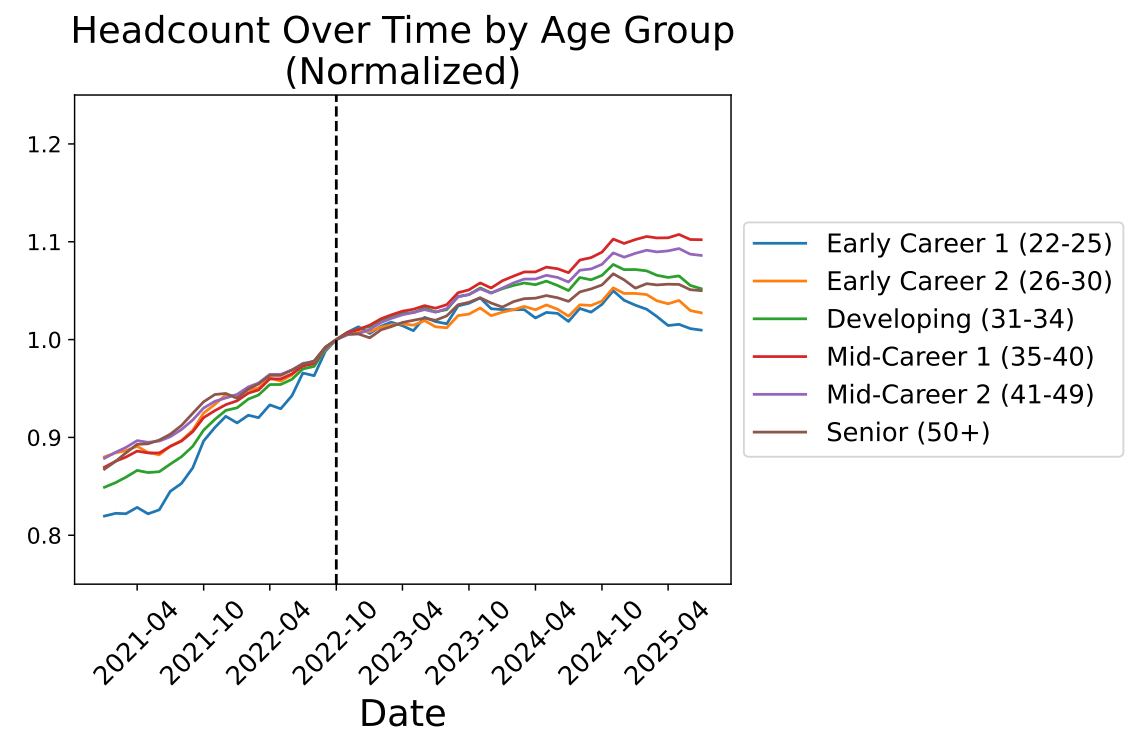

And from an overall market perspective, while most age groups continue to experience job growth, younger age groups are seeing stagnant or even declining overall job growth (although the extent to which this slowdown is attributed to AI is more difficult to justify when considering all jobs). In this case, the takeaway is that, beyond AI, job growth for young people is stagnating.

But moving beyond research and statistics, we also have actual “role models” that are showing the way toward AI-led job displacement: Big Tech.

The Great Cutting

If there’s a place where Big Tech companies are walking the talk regarding using AI to be more efficient, it’s job cutting.

Andy Jassy, Amazon’s CEO: Under his leadership, Amazon targeted a 15% increase in the ratio of individual contributors to managers by the end of Q1 2025. He described the revised structure as giving employees more autonomy and reducing bureaucracy.

Sundar Pichai, Google CEO: As we discussed on Thursday, in a recent company-wide meeting, he emphasized that “these changes [reorg/soft layoffs] are intended to cut internal barriers and speed up decision‑making, rather than simply reduce headcount.” He stressed that Google must “be more efficient as we scale up so we don’t solve everything with headcount” while streamlining the organizational structure.

Mark Zuckerberg, Meta CEO: He discussed a “mathematical approach” to management ratios, noting that manager-to-report ratios of three to four were unsustainable in a slower hiring environment. He aimed to increase that to around seven or eight people per manager to remove layers and accelerate the decision-making process. He also expressed a dislike for “managers managing managers” and emphasized flattening the organizational structure as a way to empower individuals.
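The arithmetic behind these ratio targets can be sketched in a few lines of Python. This is a rough illustration under strong assumptions: a hypothetical company of 1,000 individual contributors organized as a perfectly uniform tree, where every manager has the same number of direct reports. The function name and the numbers are mine, not anything taken from these companies.

```python
import math


def managers_needed(contributors: int, span: int) -> tuple[int, int]:
    """Return (total managers, management layers) for an idealized org tree.

    Each layer of managers supervises the layer below it, with at most
    `span` direct reports per manager, until a single person sits at the top.
    """
    managers = 0
    layers = 0
    group = contributors
    while group > 1:
        group = math.ceil(group / span)  # managers required for this layer
        managers += group
        layers += 1
    return managers, layers


# Hypothetical company with 1,000 individual contributors.
for span in (4, 8):
    total, layers = managers_needed(1000, span)
    print(f"span {span}: {total} managers across {layers} layers")
```

In this toy setup, doubling the span from four to eight reports per manager cuts the number of managers from 334 to 144 and removes one full layer of management, which is the kind of flattening the quotes above describe.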

There’s no easy way of saying this, but we are clearly moving to a world where, instead of having humans managing humans, we’ll have humans managing AIs. And in that paradigm, the human who was originally managed by other humans takes over the manager role, only now managing AIs rather than other humans, which displaces the “manager of managers” job altogether.

Of course, executive leadership, in charge of vision and long-term strategy, is also a group of “managers of managers”, but it’s not they who become dispensable. It’s the middlemen who currently sit between them and the actual bread-makers.

Young and Middle, Out

All things considered, here’s how research and evidence suggest job displacement will most likely occur:

The young pay the price. On the one hand, it’s much easier to simply not hire someone than to fire them. It’s a much less PR-taxing measure, and let’s be honest, much less stressful for the decision-makers. This means companies will prefer to keep things as they are and simply stop hiring, rather than hiring and then firing.

The middle managers are first in line. The exception to the norm is corporate bloat. Assuming price deflation of products and services (especially the latter) and tightening margins, those workers who are not perceived as ‘bread-makers’ (whose work can’t be measured directly in terms of productive outcomes) will most likely be displaced. How fast this happens will largely define the suffering of this (to me, inevitable) transition.

But even though job displacement is already a concerning thing, I believe it is the least important of the four topics we are discussing today, starting with the next: loneliness.

The Loneliness Epidemic

In 2023, the then US Surgeon General, Vivek Murthy, published an eye-opening report on loneliness, arguing that the US had a loneliness epidemic and that loneliness kills. It drew a really concerning comparison: with regard to survival prospects, loneliness (or ‘social disconnection’, as the report refers to it) is as bad as smoking 15 cigarettes a day.

There’s really no way to sugarcoat such a claim. At that time, it was too early for AI, so much of the blame was attributed to the dangerous tendencies that social media was creating (although they did briefly yet explicitly mention that AI is one of the technologies that would contribute to social disconnection).

Two years later, can we make claims about the potential impact of AI on this matter?

Luckily or unluckily, we now have more solid data, and the results are not any better than with jobs.

To the surprise of many, we actually have data that supports the use of AI as a tool to combat loneliness.

A Pleasant Surprise… at first

In a 2024 Harvard study, researchers found that AI can actually help against loneliness.

Using a no-AI control group, they analyzed the effect that AI companions (AI models optimized for companionship use cases) had on people suffering from loneliness, and found that the companions considerably reduced loneliness scores with high statistical confidence.

Even mere AI assistants (out-of-the-box ChatGPT) had a lowering effect, which is undeniably good news. Many AI enthusiasts even claim that these models are not only not damaging, but they will also be key tools in fighting the loneliness epidemic.

The limit of this study is that it did not evaluate power users and long-term “relationships” between the AIs and humans. But OpenAI did just that recently, and here’s where issues arise.

In a March 2025 study, a joint effort between MIT and OpenAI reached very different conclusions. Specifically:

“Results showed that while voice-based chatbots initially appeared beneficial in mitigating loneliness and dependence compared with text-based chatbots, these advantages diminished at high usage levels, especially with a neutral-voice chatbot.”

To which they conclude:

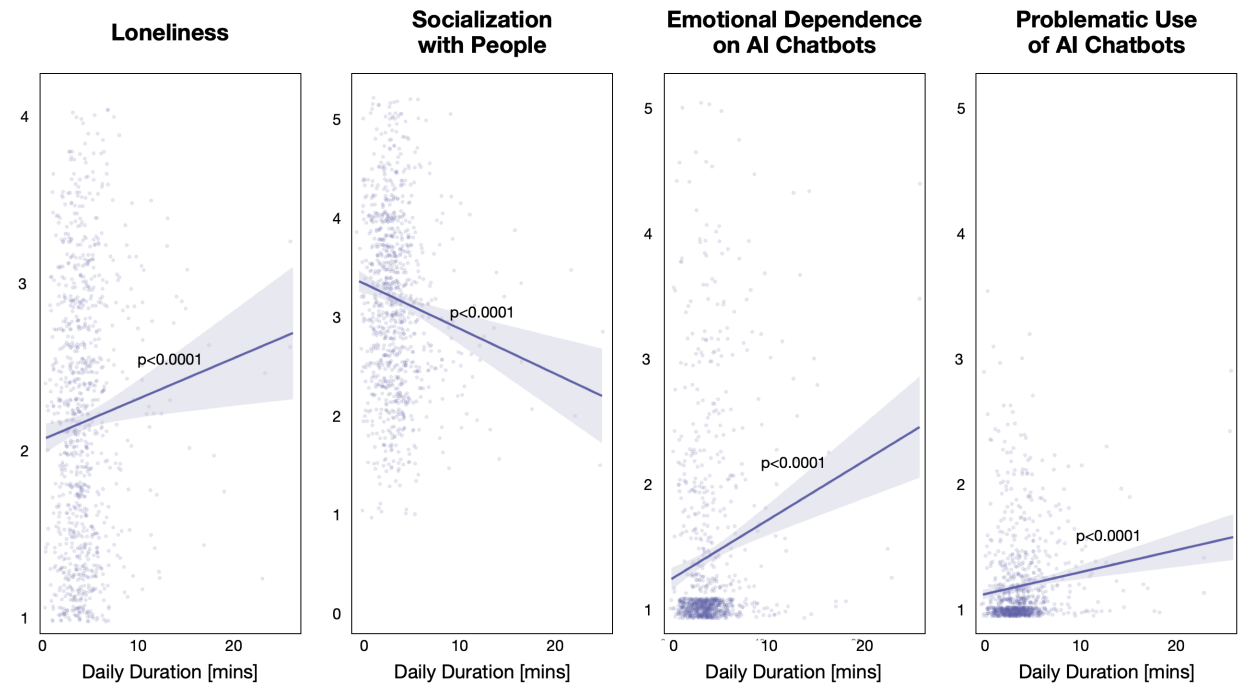

“Overall, higher daily usage—across all modalities and conversation types—correlated with higher loneliness, dependence, and problematic use, and lower socialization.”

Damn. But let’s dive deeper because this study has much more than meets the eye.

At first, this appears shocking, considering they conclude that, after four weeks, people were less lonely on average. However, there is a first catch. Loneliness fell, but socialization decreased, emotional dependence grew, and so did problematic use.

In the study, “problematic use” is defined as excessive and compulsive use of the chatbot in a way that creates negative consequences for physical, mental, or social well-being.

In other words, and this is very important to understand: perception of loneliness decreased, but at the expense of worsening the exact traits you would identify in a lonely person. It’s clearly a patch, not a solution!

But we don’t have to search for much longer to see this effect.

“Participants who spent more daily time were significantly lonelier and socialized significantly less with real people. They also exhibited significantly higher emotional dependence on AI chatbots and problematic usage of AI chatbots.”

Confirming AI’s patching effect, this perceived improvement in loneliness fades away with prolonged use; AI can only hide your miseries for a limited time. As expected, the graphs show a very different picture from the previous study for people engaging in more prolonged interactions.

Things are not looking great. However, the impact of these metrics is best appreciated when you examine real-life stories.

Before going into even darker areas (psychosis and fertility rates), let’s take a look at the deadly consequences of prolonged use of AI models in the hands of the wrong people, introducing a totally new type of threat for humans: getting too attached to a robot can end in death.
