THEWHITEBOX

TL;DR

Welcome back! My apologies for the late send this week. I’ve been advising JPMorgan on an upcoming report I can’t quite talk about yet, which means you’ll soon see ‘TheWhiteBox’ mentioned in a report read by millions!

However, in case you aren’t familiar with how investment bankers work, this has had me fighting for every inch of my schedule to write this newsletter.

This was an extraordinary situation, don’t worry, but one you’re benefiting from, as I’ll share the analysis with you guys in this newsletter shortly.

Beyond that, we cover several interesting topics, like Google’s impressive Gemini 3 Flash and FunctionGemma releases, Oracle’s recent struggles, Micron’s blowout earnings, OpenAI’s release of GPT-image-1.5, and more.

Enjoy!

How can AI power your income?

Ready to transform artificial intelligence from a buzzword into your personal revenue generator?

HubSpot’s groundbreaking guide "200+ AI-Powered Income Ideas" is your gateway to financial innovation in the digital age.

Inside you'll discover:

A curated collection of 200+ profitable opportunities spanning content creation, e-commerce, gaming, and emerging digital markets—each vetted for real-world potential

Step-by-step implementation guides designed for beginners, making AI accessible regardless of your technical background

Cutting-edge strategies aligned with current market trends, ensuring your ventures stay ahead of the curve

Download your guide today and unlock a future where artificial intelligence powers your success. Your next income stream is waiting.

MEMORY

Micron’s Insane Earnings Shock Everyone

Micron, one of three companies building high-bandwidth memory (HBM) chips, which have become crucial for AI accelerators like NVIDIA’s GPUs, held its earnings call for fiscal Q1 2026 (quarter ended November 27, 2025). It was a total success, to the point that Morgan Stanley called it “the best revenue/net income upside in the history of US semiconductors.”

The company posted record revenue of $13.64B (up from $11.32B in the prior quarter and $8.71B a year ago).

GAAP net income was $5.24B (GAAP EPS $4.60), and non-GAAP net income was $5.48B (non-GAAP EPS $4.78).

Non-GAAP gross margin expanded to 56.8% (56.0% GAAP), and operating cash flow was $8.41B; Micron highlighted “highest ever” free cash flow, with adjusted free cash flow of $3.9B and capex of $4.5B in the quarter.
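As a quick sanity check on those figures, the sketch below computes the sequential and year-over-year revenue growth and approximates free cash flow as operating cash flow minus capex. It uses only the numbers quoted above; Micron’s own “adjusted free cash flow” definition may net out items this simple subtraction ignores, so treat it as a rough cross-check rather than the company’s formula.

```python
# Figures as reported above (US$ billions, fiscal Q1 2026).
REVENUE_Q1_FY26 = 13.64
REVENUE_PRIOR_QUARTER = 11.32
REVENUE_YEAR_AGO = 8.71
OPERATING_CASH_FLOW = 8.41
CAPEX = 4.5


def pct_growth(current: float, previous: float) -> float:
    """Percentage change from `previous` to `current`."""
    return (current / previous - 1) * 100


qoq = pct_growth(REVENUE_Q1_FY26, REVENUE_PRIOR_QUARTER)
yoy = pct_growth(REVENUE_Q1_FY26, REVENUE_YEAR_AGO)
# Simplified free cash flow: cash generated by operations minus capital spending.
fcf_approx = OPERATING_CASH_FLOW - CAPEX

print(f"QoQ revenue growth: {qoq:.1f}%")
print(f"YoY revenue growth: {yoy:.1f}%")
print(f"OCF minus capex: ${fcf_approx:.2f}B")
```

Revenue grew roughly 20% sequentially and about 57% year over year, and $8.41B minus $4.5B lands almost exactly on the $3.9B adjusted free cash flow Micron reported.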

GAAP refers to Generally Accepted Accounting Principles, the standard framework of rules, standards, and procedures for financial reporting in the US. Whenever you see ‘non-GAAP something’ or ‘adjusted something’, like ‘adjusted free cash flow’, the company is reporting that metric not under GAAP but with its own adjustments, which it believes better reflect the underlying business.

In a nutshell, this company is printing money, and 2026, its potential breakout year, hasn’t even started.

Moreover, the guidance was perhaps even more impressive. Guidance for fiscal Q2 2026 was notably above typical Street expectations: revenue of $18.70 ± $0.40 billion, non-GAAP gross margin of 68.0% ± 1.0%, and non-GAAP EPS of $8.42 ± $0.20 (the analyst consensus was below $5, an absurd beat!).
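To put the guidance in perspective, here is a small sketch computing the sequential revenue growth implied by the low, mid, and high ends of the range, plus the minimum size of the EPS beat. The text only says consensus was “below $5”, so $5.00 is used as a ceiling for consensus, which makes the computed beat a lower bound.

```python
Q1_REVENUE = 13.64                   # fiscal Q1 2026 actual, US$ billions
GUIDE_MID, GUIDE_BAND = 18.70, 0.40  # fiscal Q2 2026 revenue guidance
EPS_GUIDE = 8.42                     # non-GAAP EPS guidance midpoint
CONSENSUS_CEILING = 5.00             # text only says consensus was "below $5"


def implied_growth(guided: float, base: float) -> float:
    """Sequential growth (in %) implied by a revenue guide."""
    return (guided / base - 1) * 100


ranges = (("low", GUIDE_MID - GUIDE_BAND), ("mid", GUIDE_MID), ("high", GUIDE_MID + GUIDE_BAND))
for label, value in ranges:
    print(f"{label}: ${value:.2f}B -> {implied_growth(value, Q1_REVENUE):+.1f}% QoQ")

# Because consensus sat below $5, the real beat is at least this large.
eps_beat_min = (EPS_GUIDE / CONSENSUS_CEILING - 1) * 100
print(f"EPS guide exceeds consensus by at least {eps_beat_min:.1f}%")
```

In other words, Micron is guiding for roughly 34% to 40% sequential revenue growth in a single quarter, with the EPS guide at least ~68% above consensus.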

TheWhiteBox’s takeaway:

When I say these results have “shocked everyone”, if you’re a regular of this newsletter, you shouldn’t be shocked at all.

We’ve been calling the arrival of the memory supercycle for months, and have even invested heavily in one of the top three DRAM companies (SK Hynix, Samsung, and Micron), predicting a great 2026 for them (a great year for all three, actually).

MONEY MARKETS

Oracle, AI’s Elephant in the room?

In the absence of publicly traded AI Labs, Oracle might be the biggest proxy for investor sentiment toward AI right now. Once you see the news coming out of Oracle, you will understand why.

But first, have you ever wondered why AI data centers are getting so large?

The massive expansion of AI data centers is driven by the specific hardware limitations of training modern neural networks.

AI models boil down to giant matrix multiplications that require parallel processing on accelerators like GPUs. However, these chips are often memory-bound when running AI models, meaning they struggle to move data in and out of memory fast enough to keep their computing cores busy.
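A common way to see what “memory-bound” means is a roofline-style estimate: compare the FLOPs a matrix multiplication performs per byte it moves against the ratio of the chip’s peak compute to its memory bandwidth. The sketch below assumes a hypothetical accelerator (1,000 TFLOP/s, 3.35 TB/s) and 16-bit values; the numbers are illustrative, not a specific product’s specs.

```python
# Hypothetical accelerator, for illustration only.
PEAK_FLOPS = 1_000e12  # 16-bit FLOP/s
MEM_BW = 3.35e12       # bytes/s
RIDGE = PEAK_FLOPS / MEM_BW  # ~300 FLOP/byte needed to keep the cores busy


def arithmetic_intensity(m: int, k: int, n: int, bytes_per_elem: int = 2) -> float:
    """FLOPs per byte for C[m,n] = A[m,k] @ B[k,n], assuming each matrix moves once."""
    flops = 2 * m * k * n  # one multiply and one add per (m, k, n) triple
    bytes_moved = (m * k + k * n + m * n) * bytes_per_elem
    return flops / bytes_moved


def is_memory_bound(m: int, k: int, n: int) -> bool:
    """Below the ridge point, memory bandwidth (not compute) limits throughput."""
    return arithmetic_intensity(m, k, n) < RIDGE


# Generating one token at a time through an 8192x8192 weight matrix...
decode = arithmetic_intensity(1, 8192, 8192)
# ...versus pushing a batch of 4,096 tokens through the same weights.
batched = arithmetic_intensity(4096, 8192, 8192)
print(f"decode: {decode:.2f} FLOP/byte, batched: {batched:.0f} FLOP/byte, ridge: {RIDGE:.0f}")
```

Token-by-token generation does about one FLOP per byte loaded, far below the ridge point, so the cores mostly wait on memory; large batches reuse each weight many times and become compute-bound.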

Frontier models have scaled to trillions of parameters, and training datasets are so vast that processing them on a single chip would take lifetimes, as we calculated ourselves in a recent Leaders post. Engineers must therefore combine thousands of chips into server-scale units that act as a single computer, which requires physical infrastructure capable of housing and powering these massive, interconnected clusters.
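The “lifetimes” claim can be reproduced with the widely used rule of thumb that training costs about 6 FLOPs per parameter per token. The model size, token count, chip speed, and 40% utilization below are hypothetical round numbers chosen for illustration, not figures from the Leaders post.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def training_flops(params: float, tokens: float) -> float:
    """Rule of thumb: ~6 FLOPs per parameter per training token (forward + backward)."""
    return 6 * params * tokens


def training_years(params: float, tokens: float, chip_flops: float,
                   utilization: float, num_chips: int = 1) -> float:
    """Wall-clock years to finish training at the given sustained throughput."""
    seconds = training_flops(params, tokens) / (chip_flops * utilization * num_chips)
    return seconds / SECONDS_PER_YEAR


# Hypothetical run: 1T parameters, 20T tokens, chips sustaining 40% of 1,000 TFLOP/s.
one_chip = training_years(1e12, 20e12, 1_000e12, 0.4)
cluster = training_years(1e12, 20e12, 1_000e12, 0.4, num_chips=100_000)
print(f"1 chip: {one_chip:,.0f} years; 100,000 chips: {cluster * 365.25:.0f} days")
```

Under these assumptions, a single chip would need roughly 9,500 years, while a 100,000-chip cluster finishes in about a month.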

More specifically, there are two ways AI infrastructure is scaling in response to AI software:

Training datasets are getting bigger, requiring more and more parallel processing to process the data fast enough, leading to huge data centers.

Models are also getting bigger, requiring larger individual servers so that a full model instance fits on a single server for inference, avoiding the hefty overhead of server-to-server communication. This leads to huge servers.
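To see why bigger models push toward bigger servers, this sketch estimates how many accelerators are needed just to hold a model’s weights. The 2-trillion-parameter model, 8-bit weights, 192 GB of memory per chip, and the 30% reserved for KV cache and activations are all hypothetical assumptions.

```python
import math


def weight_bytes(params: float, bytes_per_param: float) -> float:
    """Raw size of the model weights in bytes."""
    return params * bytes_per_param


def accelerators_needed(params: float, bytes_per_param: float,
                        hbm_per_chip_gb: float, headroom: float = 0.7) -> int:
    """Chips needed to hold the weights, keeping (1 - headroom) of memory for KV cache etc."""
    usable_bytes = hbm_per_chip_gb * 1e9 * headroom
    return math.ceil(weight_bytes(params, bytes_per_param) / usable_bytes)


# Hypothetical 2T-parameter model served in 8-bit on 192 GB chips.
frontier_chips = accelerators_needed(2e12, 1, 192)
# Hypothetical 7B-parameter model in 16-bit on an 80 GB chip.
small_chips = accelerators_needed(7e9, 2, 80)
print(frontier_chips, small_chips)
```

Under these assumptions the large model needs 15 chips, more than a classic 8-GPU server, which is why rack-scale systems that behave like one big server are appealing for inference.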

Thus, as a good rule of thumb, AI training drives larger data centers, inference drives larger singular servers.

This means that inference can be done in much smaller data centers, even for the most powerful models.

Either way, AI infrastructure will only get bigger and bigger. Okay, and how is Oracle dealing with the ever-growing capital expenditures? You know the answer, right?

Debt.

While Oracle boasts approximately $525 billion in future revenue commitments, primarily from OpenAI (~$300 billion of that), it lacks the immediate capital to build the expensive infrastructure required to fulfill these contracts.

Building gigawatt-scale data centers costs tens of billions of dollars, forcing Oracle to fund this expansion through aggressive borrowing. This has resulted in a debt spiral, with total debt reaching $108 billion, pushing the company's debt-to-equity ratio to alarming levels between 355% and 574%.

The disparity between those two numbers stems from whether you use direct debt in the numerator (~$109 billion in Oracle’s case) or total liabilities (~$173 billion), with equity value at ~$30.4 billion. All numbers can be found on page 1 of Oracle’s last 10-Q.

Oracle’s debt-to-equity ratio according to Bloomberg as of September 2025
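The two ratios are simple divisions, sketched below with the rounded figures quoted above. With these inputs the results land at roughly 355% (debt) and 569% (total liabilities); the small gap to the 574% upper bound comes from rounding of the inputs or a slightly different liabilities figure, which this sketch cannot settle.

```python
# Oracle figures as quoted in the text (US$ billions).
EQUITY = 30.4
NUMERATORS = {
    "total debt": 108.0,
    "direct debt (rounded)": 109.0,
    "total liabilities": 173.0,
}


def debt_to_equity(numerator: float, equity: float) -> float:
    """Debt-to-equity (or liabilities-to-equity) as a percentage."""
    return numerator / equity * 100


for name, value in NUMERATORS.items():
    print(f"{name}: {debt_to_equity(value, EQUITY):.0f}%")
```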

In response to the alarming rise in debt, Oracle’s credit default swap (CDS) spreads have widened significantly, indicating that the market is pricing Oracle's debt similarly to junk bonds amid fears of a potential default. The spread currently sits at 151 basis points, meaning buyers of protection pay 1.51% of the insured amount per year.
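In dollar terms, a CDS spread converts to an annual premium as notional times spread, with one basis point equal to 0.01%. The $10 million notional below is a hypothetical example, and the sketch ignores real-world conventions such as quarterly payments, standardized coupons, and upfront fees.

```python
def annual_cds_premium(notional: float, spread_bps: float) -> float:
    """Yearly cost of credit protection: notional x spread (1 bp = 0.01%)."""
    return notional * spread_bps / 10_000


# Hypothetical: insuring $10 million of Oracle bonds at the 151 bp spread quoted above.
premium = annual_cds_premium(10_000_000, 151)
print(f"${premium:,.0f} per year")  # prints $151,000 per year
```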

Obviously, this has spilled over into Oracle’s bond yields, which have since crossed into junk-bond territory, at almost 6%.

But what does it mean that the bond yields are rising? Aren’t bond interests fixed?

Sure, but there’s a difference between coupon and yield. Say the par value is $1,000, and the coupon (the interest Oracle pays on that debt) is 5%, or $50 per year. If investors sell their bonds, that puts downward pressure on the bond’s price, which might now trade at $950. In that case, the yield for an investor who buys at $950 is higher: about 5.26% ($50/$950), and that is before counting the extra $50 they collect when the bond is repaid at $1,000 at maturity.
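The example above is the bond’s current yield. Markets usually quote yield to maturity, which also credits the gain from buying below par. The sketch below computes both, assuming a hypothetical 10-year maturity with annual coupons, and solves for yield to maturity by bisection (bond prices fall as yields rise).

```python
def current_yield(annual_coupon: float, price: float) -> float:
    """Annual coupon divided by the price actually paid."""
    return annual_coupon / price


def bond_price(par: float, coupon_rate: float, ytm: float, years: int) -> float:
    """Present value of annual coupons plus the par value repaid at maturity."""
    coupon = par * coupon_rate
    pv_coupons = sum(coupon / (1 + ytm) ** t for t in range(1, years + 1))
    return pv_coupons + par / (1 + ytm) ** years


def yield_to_maturity(par: float, coupon_rate: float, price: float, years: int) -> float:
    """Find the yield at which bond_price equals the market price, via bisection."""
    lo, hi = 0.0, 1.0
    for _ in range(100):
        mid = (lo + hi) / 2
        if bond_price(par, coupon_rate, mid, years) > price:
            lo = mid  # model price still too high, so the yield must be higher
        else:
            hi = mid
    return (lo + hi) / 2


cy = current_yield(50, 950)
ytm = yield_to_maturity(1000, 0.05, 950, 10)  # hypothetical 10-year bond
print(f"current yield: {cy:.2%}, yield to maturity: {ytm:.2%}")
```

For a bond bought at $950, yield to maturity ends up above the current yield (around 5.7% here), because the buyer also pockets the $50 pull back to par.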

And with Blue Owl Capital backing out of Oracle's latest $10 billion effort to fund new data center expansions, one is forced to ask: Larry, are you sure you know what you’re doing?

TheWhiteBox's takeaway:

In my view, Oracle has effectively become a leveraged proxy for the market's confidence in OpenAI.

The company’s financial future is now a binary bet that hinges entirely on OpenAI's ability to raise the funds necessary to honor its massive purchase commitments.

If OpenAI succeeds and pays its bills, Oracle will emerge as a dominant AI hyperscaler with significant revenue.

However, if OpenAI fails to secure funding or cannot pay, Oracle will be left with an insurmountable debt load and expensive infrastructure it cannot monetize, placing the company in extreme financial peril.

Which way will it go?

VENTURE CAPITAL

OpenAI Strives for an $830 Billion Valuation

As reported by the Wall Street Journal, OpenAI is exploring a new fundraising round that could raise as much as $100 billion and, if it hits that target, value the company at up to $830 billion.

The alleged talks are still early, terms could change, and it’s unclear whether demand will be strong enough to reach the full amount. The company aims to complete the round no earlier than the end of the first quarter of 2026.

TheWhiteBox’s takeaway:

This round matters way more than we think.

I believe it could be the last funding round before an eventual IPO at $1 trillion or higher, so they need to raise capital without diluting existing investors too much, while also ensuring there’s upside for these very late entrants.
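How much dilution a $100 billion raise implies depends on whether the $830 billion figure is pre-money or post-money, which the report doesn’t specify. This sketch computes both cases, assuming the entire amount is new primary capital (no secondary share sales).

```python
def dilution(raise_amount: float, valuation: float, valuation_is_post_money: bool) -> float:
    """Fraction of the company sold to new investors in a primary round."""
    post_money = valuation if valuation_is_post_money else valuation + raise_amount
    return raise_amount / post_money


# US$ billions, from the WSJ figures quoted above.
if_post = dilution(100, 830, valuation_is_post_money=True)
if_pre = dilution(100, 830, valuation_is_post_money=False)
print(f"post-money reading: {if_post:.1%}, pre-money reading: {if_pre:.1%}")
```

Either way, existing shareholders would give up somewhere around 11% to 12% of the company in a single round.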

OpenAI has made up to $1.4 trillion in commitments, a number that a growing number of people believe will never fully materialize. Still, this round and an eventual IPO could each go well beyond the $100 billion mark, giving the company extra fuel for the substantial capital investments it will have to make throughout the decade.

On a personal level, I genuinely don’t know how on Earth the math will check out unless OpenAI is counting on a humongous amount of that capital coming from retail or, who knows… the Government?

I’ve mentioned several times that government involvement feels inevitable.

THEWHITEBOX

Unitree robots as professional dancers

A new viral video featuring Unitree robots is circulating online, in which artist Wang Leehom uses three robots as dancers on stage. The movements are so natural that even Elon Musk, who is building his own humanoid robot at Tesla, reacted to it.

TheWhiteBox’s takeaway:

We’ve covered Unitree as one of the most exciting Chinese companies and its potential as an investable company once it IPOs. The reason is that, at this point, it’s hard to fathom a single company on the planet coming close to Unitree when it comes to robot dexterity.

Of course, that’s only half of the picture, as you also have to account for the software side: the actual AI, or brain. Notably, these robots, as in most Unitree demonstrations, were choreographed, meaning they were executing predefined movements. In the AI robotics wars, you have to keep this in mind:

US Labs are prioritizing the brain, meaning US robots are much more autonomous but less agile.

Chinese Labs are prioritizing the body, making Chinese robots much more nimble and smooth, but mostly teleoperated for now.

Which way is the correct way? My impulse is to say China; getting hardware right is complicated. On the other hand, as we covered recently, robotics requires AI world models, by far the most complex software humanity has ever built, so it could very well be the opposite.

HARDWARE

China’s AI Manhattan Project: Stealing the Dutch

A Reuters report says China has assembled a prototype extreme ultraviolet (EUV) lithography tool in a high-security facility in Shenzhen and is now testing it, marking a notable step in the country’s push to break reliance on Western chipmaking equipment.

But what is a lithography tool? It’s a very, very expensive machine (ASML sells them for hundreds of millions of dollars per unit) that plays a crucial role in chip manufacturing by “printing” the chip’s circuit pattern onto a silicon wafer: light projects the pattern onto a light-sensitive resist coating the wafer. The wafer then goes through several more processes until you get the actual chip.
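Why EUV matters comes down to the Rayleigh criterion: the smallest printable feature scales with wavelength divided by the optics’ numerical aperture (NA). The sketch compares today’s immersion DUV (193 nm light, NA 1.35) with standard EUV (13.5 nm light, NA 0.33); the process factor k1 = 0.3 is a hypothetical round value, since real values depend on the process.

```python
def min_feature_nm(k1: float, wavelength_nm: float, numerical_aperture: float) -> float:
    """Rayleigh criterion: smallest printable half-pitch ~ k1 * wavelength / NA."""
    return k1 * wavelength_nm / numerical_aperture


K1 = 0.3  # hypothetical process factor, for illustration only

duv = min_feature_nm(K1, 193.0, 1.35)  # ArF immersion DUV
euv = min_feature_nm(K1, 13.5, 0.33)   # standard 0.33-NA EUV
print(f"DUV: ~{duv:.1f} nm, EUV: ~{euv:.1f} nm per exposure")
```

At the same k1, EUV prints features several times smaller in a single exposure, which is why building the light source and the optics is so strategically important.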

The prototype is described as becoming operational in early 2025, meaning it can generate EUV light, but it has not yet demonstrated the ability to manufacture usable chips.

The effort is portrayed as a covert, state-backed “Manhattan Project” that recruited former ASML engineers and relied on aggressive reverse-engineering, with Huawei reportedly helping coordinate parts of the program.

Despite the progress, the most complex pieces remain: high-precision optics and other ultra-tight-tolerance subsystems that, in commercial EUV tools, depend on a specialized supply chain led by companies like Zeiss, so don’t expect China to have mass production of these machines for several years.

Targets mentioned in the reporting point to 2028 for meaningful results, though some insiders suggest 2030 is more realistic given integration complexity and the leap from a working light source to a production-ready scanner with competitive yield, uptime, and throughput.

EDGE AI

Did Google Create… Siri?

Yes, my headline is sensationalist, but I couldn’t resist throwing shade at Apple. Google has released FunctionGemma, a tiny AI model (just 270 million parameters, weighing just over 300 MB) fine-tuned specifically for tool calling (also known as function calling).

But what is a tool-calling AI model? AI models that support tool calling can, well, call tools, allowing them to execute actions on behalf of the user (becoming an agent).

The thing is, inferring user intent is not easy, especially for smaller models with much more limited text-processing capabilities. At these sizes, models are usually designed for one thing and one thing only: either speaking back to the user moderately well or simply executing actions without any natural language capabilities. And the weird thing is that this model does both, meaning it can talk to humans and “talk” to machines.

All that said, the model is so tiny that Google insists it’s meant to be fine-tuned for your task; it’s not general enough to work with your tools out of the box.
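On the application side, tool calling usually works like this: the model emits a structured request naming a function and its arguments, and your code validates and executes it. The sketch assumes a hypothetical JSON format and hypothetical tool names; FunctionGemma’s actual output format is defined by its own chat template, not by this example.

```python
import json
from typing import Any, Callable, Dict


# Hypothetical on-device actions; names and arguments are illustrative only.
def create_calendar_event(title: str, date: str) -> str:
    return f"Created event '{title}' on {date}"


def add_contact(name: str, phone: str) -> str:
    return f"Saved contact {name} ({phone})"


TOOLS: Dict[str, Callable[..., str]] = {
    "create_calendar_event": create_calendar_event,
    "add_contact": add_contact,
}


def dispatch(model_output: str) -> str:
    """Parse a JSON tool call emitted by the model and run the matching function."""
    call: Dict[str, Any] = json.loads(model_output)
    name = call["name"]
    if name not in TOOLS:
        raise ValueError(f"Model requested unknown tool: {name}")
    return TOOLS[name](**call.get("arguments", {}))


# What a model might emit for "Add Ana to my contacts, her number is 555-0100".
result = dispatch('{"name": "add_contact", "arguments": {"name": "Ana", "phone": "555-0100"}}')
print(result)  # prints Saved contact Ana (555-0100)
```

Keeping an explicit allow-list of tools means the model can only trigger actions the app has deliberately exposed.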

TheWhiteBox’s takeaway:

At this point, I assume you are connecting the dots on what this model could be meant for: smartphones, or what Siri should have been by now.

To showcase this, Google built a Siri-like Android app, shown in the video, that lets users give natural-language voice instructions (a separate model handles the voice-to-text transcription). The model then executes the correct action, such as creating a calendar invite or registering a new contact.

Crucially, its small size is what makes this possible: smartphones these days have several gigabytes of working memory, so the model easily fits inside the working memory of edge devices like most smartphones and can execute actions on your behalf without saturating it (which would lead to slowness or crashes).

The sad part is that edge AI is among the hardest hit by the dramatic DRAM supply shortage, which has sent RAM prices skyrocketing. As a result, 4GB smartphones will become a thing again in 2026, after years in which 8GB was the norm and some high-end phones even reached 16GB.

Laptops are also going back to 8 GB of memory as the base option (and with a price rise).

Having said that, FunctionGemma is still small enough to run on these devices (AI models should not occupy more than 20% of your working memory), but this will inevitably curtail incentives for AI Labs to progress on edge inference models. Sad.
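The 20% rule of thumb above is easy to check. This sketch uses the “just over 300 MB” figure for FunctionGemma and, for contrast, a hypothetical 3-billion-parameter model quantized to 4 bits (0.5 bytes per parameter); it uses decimal units throughout for simplicity.

```python
def model_size_mb(params: float, bytes_per_param: float) -> float:
    """Approximate weight size in megabytes (decimal units)."""
    return params * bytes_per_param / 1e6


def fits_on_device(model_mb: float, ram_gb: float, max_share: float = 0.20) -> bool:
    """Rule of thumb from the text: a model should use at most ~20% of RAM."""
    return model_mb <= ram_gb * 1000 * max_share


FUNCTIONGEMMA_MB = 300                # "just over 300 MB", as quoted above
three_b_mb = model_size_mb(3e9, 0.5)  # hypothetical 3B model at 4 bits

for ram in (4, 8, 16):
    print(f"{ram} GB RAM: FunctionGemma fits={fits_on_device(FUNCTIONGEMMA_MB, ram)}, "
          f"3B@4-bit fits={fits_on_device(three_b_mb, ram)}")
```

FunctionGemma fits comfortably even on a 4GB phone, whereas a modestly larger model would already need 8GB, which is exactly the capability the RAM squeeze puts at risk.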


But even sadder is Apple’s AI strategy. The fact that Apple has yet to release anything remotely similar to what Google is releasing is outright pathetic. John Giannandrea, Apple’s top AI exec, took the hit, but time is running out for Apple, and it needs to deliver something good in 2026.

Ironically, 2026 might still be a good year for Apple, which could be saved by the aforementioned RAM shortage: it has most of its RAM allocations pre-bought at much lower contract prices, which could force competitors with less pre-bought inventory to raise prices and make Apple products look cheaper by comparison.

SEARCH

Exa’s Human Search Database

We now have a human database. Exa has introduced a new people-search system to make “finding the right person on the web” measurable and reproducible.

The company says it trained retrieval specifically for people queries and built an index of more than 1B people, backed by a pipeline designed to handle 50M+ profile updates per week to reflect how quickly roles and affiliations change in the real world. You can try it at exa.ai.

After clustering 10,000 historical people-related queries, Exa highlights three common intents:

role-based searches tied to a specific organization,

discovery queries constrained by role/skills and location,

and direct lookup by name (optionally with an affiliation).

For discovery queries, Exa uses a Large Language Model (Claude Opus 4.5, per the post) to generate a taxonomy spanning industries, roles, seniority levels, and geographies, plus filters such as skills and years of experience.

TheWhiteBox’s takeaway:

It’s a fun thing to test, and it digs surprisingly deep. Yet the vector database’s most significant issue is that retrieval is not very smart. I tested it on myself (if you’ll excuse my narcissistic tendencies), and some sources weren’t relevant at all, while others surfaced “ancient” facts and content even though much more recent material was available.

I assume the search returns better results the more famous the person is. I find this particularly useful for quickly searching people you may be about to meet, to get to know some facts about them (I found a client of mine loves fishing this way). Feels wrong, but at the end of the day, it’s all based on publicly available information, so you aren’t doing anything naughty.

CHINA

Another Great Chinese Model…

Xiaomi has introduced MiMo-V2-Flash, an open-source Mixture-of-Experts (MoE) language model with 309 billion total parameters and 15 billion active during inference.

Released under an MIT license, the model weights are available on Hugging Face and GitHub, with API access offered at $0.10 per million input tokens and $0.30 per million output tokens, dirt cheap compared to most Western models not named Gemini 3 Flash (more on this below).
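Two numbers explain why this is cheap to serve: only a small slice of the weights is used per token, and compute per token scales with active (not total) parameters, roughly 2 FLOPs per active parameter. The sketch computes that slice and the API bill for a hypothetical agent session (2M input tokens, 200k output tokens) at the quoted prices.

```python
TOTAL_PARAMS = 309e9
ACTIVE_PARAMS = 15e9
PRICE_IN, PRICE_OUT = 0.10, 0.30  # US$ per million tokens, as quoted above

active_share = ACTIVE_PARAMS / TOTAL_PARAMS  # fraction of weights used per token
flops_per_token = 2 * ACTIVE_PARAMS          # rough rule of thumb for a forward pass


def api_cost(input_tokens: int, output_tokens: int) -> float:
    """US$ cost of a workload at per-million-token prices."""
    return (input_tokens * PRICE_IN + output_tokens * PRICE_OUT) / 1e6


# Hypothetical agentic session: lots of re-sent context, modest output.
session_cost = api_cost(2_000_000, 200_000)
print(f"active share: {active_share:.1%}, session cost: ${session_cost:.2f}")
```

Under these assumptions, each token touches under 5% of the model, and a fairly heavy agent session costs about a quarter of a dollar.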

The model is designed for tasks requiring fast inference, such as agentic AI workflows involving tool use and multi-turn interactions. It supports up to 150 tokens per second on compatible hardware, aided by Multi-Token Prediction (each prediction step produces several tokens instead of just one) for faster decoding, and a hybrid attention mechanism that pairs five sliding-window attention layers (with a 128-token window) with every global-attention layer, a 5:1 ratio.
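Here is a minimal sketch of what a 5:1 hybrid attention layout means in practice. It assumes every sixth layer is global (the exact placement in MiMo-V2-Flash is an assumption here) and uses a toy 12-layer depth, not the model’s real layer count. Sliding-window layers only ever attend to the last 128 tokens, so they cache far fewer keys and values at long context.

```python
from typing import List, Optional


def layer_pattern(num_layers: int, sliding_per_global: int = 5) -> List[str]:
    """Every (sliding_per_global + 1)-th layer is global; the rest use sliding windows."""
    period = sliding_per_global + 1
    return ["global" if (i + 1) % period == 0 else "sliding" for i in range(num_layers)]


def causal_mask(seq_len: int, window: Optional[int] = None) -> List[List[bool]]:
    """mask[q][k] is True when query position q may attend to key position k."""
    return [
        [k <= q and (window is None or q - k < window) for k in range(seq_len)]
        for q in range(seq_len)
    ]


def kv_cache_entries(seq_len: int, pattern: List[str], window: int = 128) -> int:
    """Cached key/value positions summed over layers; sliding layers keep at most `window`."""
    return sum(min(seq_len, window) if kind == "sliding" else seq_len for kind in pattern)


pattern = layer_pattern(12)  # toy depth, for illustration
hybrid = kv_cache_entries(32_768, pattern)
full = kv_cache_entries(32_768, ["global"] * 12)
print(f"KV cache at 32k context: hybrid={hybrid:,} vs all-global={full:,} entries")
```

At a 32k-token context, this toy layout caches roughly six times fewer entries than all-global attention, which is a large part of why such designs decode quickly.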

TheWhiteBox’s takeaway:

The news here, beyond the fact that a “smallish” model by modern standards is performing at a near-frontier level, is that we are talking about Xiaomi, the same company that builds smartphones, house-cleaning robots, and, recently, even cars!

It’s not DeepSeek, MiniMax, or the other usual names; it’s a company you wouldn’t expect to release advanced research, proving that China’s AI ecosystem is as diverse as it can be, thanks to its open-source approach.

Yes, open-source is mostly a geopolitical tool to punish US Labs and force them to drop prices, but that doesn’t negate the fact that it’s also leading to a plethora of different competitors in a highly competitive local Chinese market. China gets it, and it really makes me lose my mind how the US doesn’t.

The Government must urgently, and I mean urgently, “recommend” Labs to start improving their open-source efforts. Yes, there are also “non-AI Lab” companies in the US doing great research, like ServiceNow or Salesforce, but they are tech companies at the end of the day; what you would not expect is to see Meituan, the equivalent of DoorDash, releasing frontier research.

This is a symptom that things are clicking in China. And if we want to see companies like Walmart release advanced research (and we should), we first need Big Tech, with the notable exception of NVIDIA, to get its act together and return to more open research, not 6-month embargoes, as Google DeepMind famously does.

THEWHITEBOX

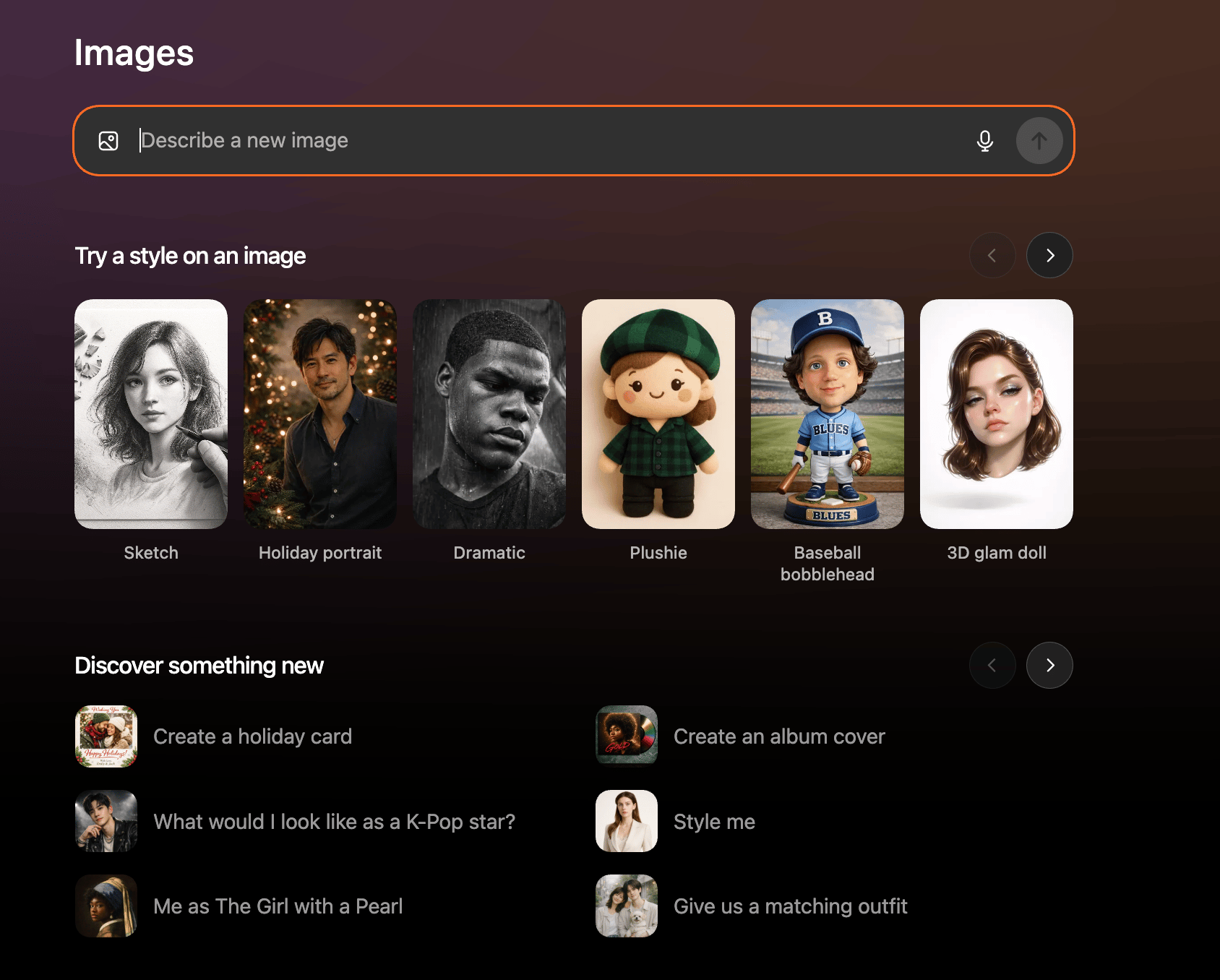

OpenAI Releases GPT-image-1.5

OpenAI released GPT Image 1.5 a few days ago, positioning it as the new flagship model behind a refreshed “ChatGPT Images” experience and as a standalone API model (“gpt-image-1.5”).

The headline promise is straightforward: images that better match what you asked for, whether you’re generating from scratch or editing an existing photo.

What stands out most in the release is the emphasis on controllable editing rather than just novel generation. OpenAI claims the model is more reliable about changing only what you specify while keeping key attributes stable across iterations, such as composition, lighting, and a person’s appearance, alongside generation that is up to 4× faster than the prior experience.

Speed was by far the worst thing about the previous ChatGPT image generation model.

Perhaps the most meaningful change, at least in terms of product, is that OpenAI is rolling out a dedicated Images space in the ChatGPT sidebar (thumbnail) with preset filters and trending prompts meant to speed up iteration.

In the API, gpt-image-1.5 is cheaper than the prior generation for image inputs and outputs (OpenAI cites 20% cheaper), and OpenAI calls out marketing and e-commerce catalog creation as core use cases where brand and logo consistency across edits is valuable.

Regarding the architectural details, we can’t say much. The last reference OpenAI made to this was way back in the addendum for GPT-4o’s image generation (gpt-image-1), which stated that “4o image generation is an autoregressive model natively embedded within ChatGPT.” This means these models behave similarly to text-based models by predicting the next token in a sequence, except that here the token represents a patch of the image rather than a word.
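To make “next token = next image patch” concrete, the toy sketch below splits a small grid into patches in raster order (left to right, top to bottom) and runs a generic autoregressive loop. This is a generic illustration only: OpenAI hasn’t disclosed its tokenizer, and real systems typically predict discrete codes from a learned image tokenizer rather than raw pixels. The `predict_next` callable is a hypothetical stand-in for the model.

```python
from typing import Callable, List

Grid = List[List[int]]


def patchify(image: Grid, patch: int) -> List[Grid]:
    """Split a 2D grid into patch x patch blocks, in raster order."""
    h, w = len(image), len(image[0])
    if h % patch or w % patch:
        raise ValueError("image dimensions must be divisible by the patch size")
    blocks = []
    for top in range(0, h, patch):
        for left in range(0, w, patch):
            blocks.append([row[left:left + patch] for row in image[top:top + patch]])
    return blocks


def generate(first_token: int, predict_next: Callable[[List[int]], int], length: int) -> List[int]:
    """Autoregressive loop: each new token is predicted from all tokens so far."""
    seq = [first_token]
    while len(seq) < length:
        seq.append(predict_next(seq))
    return seq


image = [[row * 4 + col for col in range(4)] for row in range(4)]  # toy 4x4 "image"
patches = patchify(image, 2)  # 4 patches, each a 2x2 block
tokens = generate(0, lambda seq: seq[-1] + 1, 4)  # dummy "model" for illustration
```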

TheWhiteBox’s takeaway:

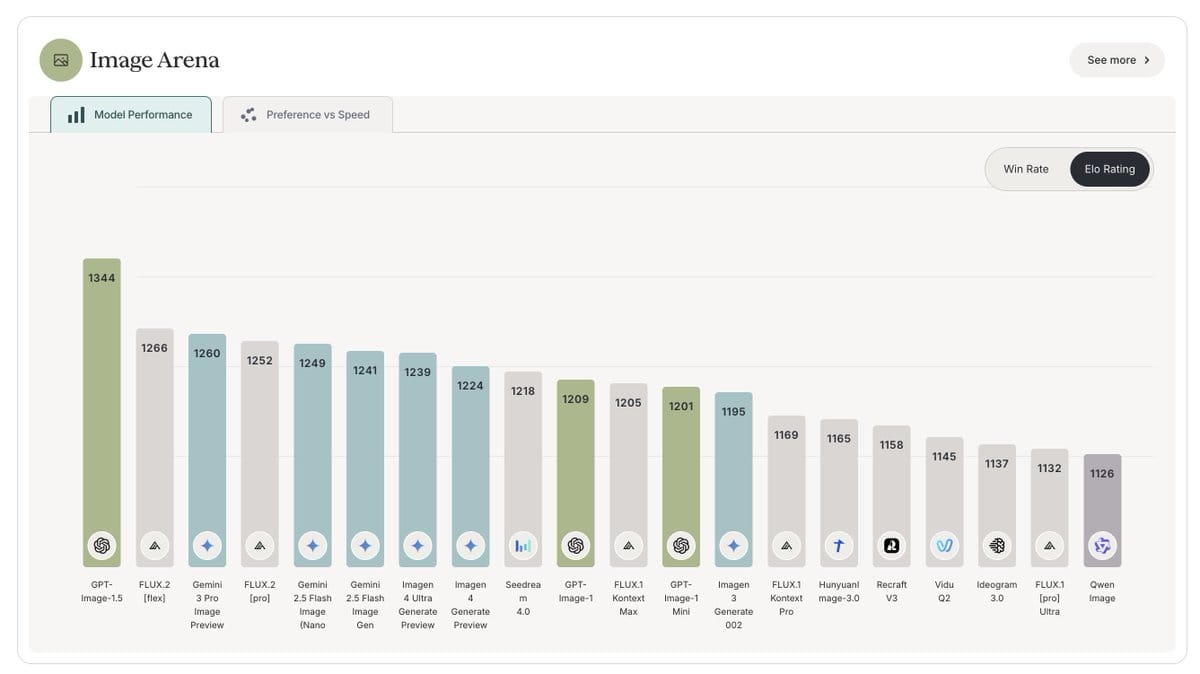

From my own personal experience, the results are way better than the previous generation, and, when it comes to pure image generation (just generating what’s asked, no intelligence required), it seems to be the new state of the art, as leaderboards like Image Arena suggest.

But for a while now, we have also had to judge image generation models on, for lack of a better term, “intelligence”: can these models actually do “intelligent” things, like solving a math equation within the image?

And in that regard, at least in my experience, it’s night and day: Google’s Nano Banana Pro is far superior.

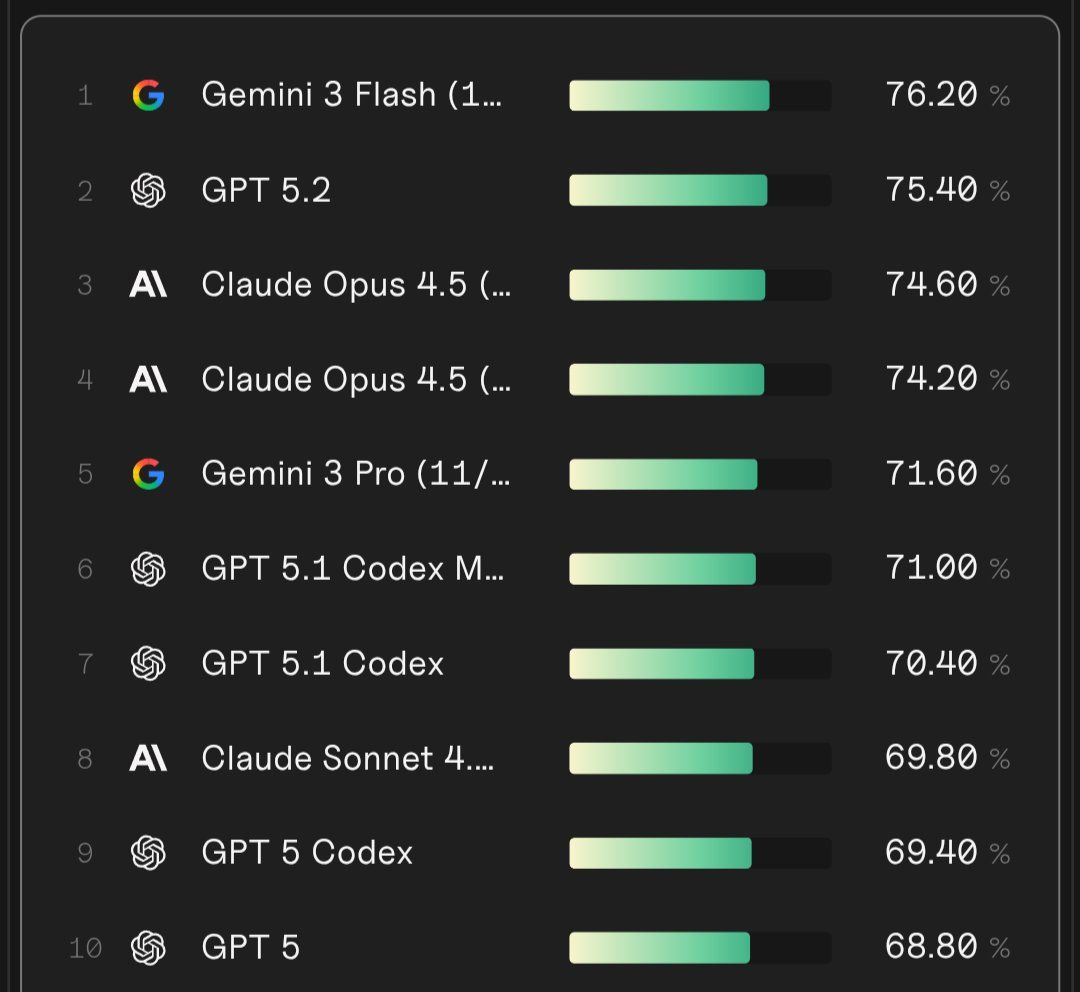

All things considered, if you ask me what the frontier of AI is, it is as follows:

For simple text queries, Gemini is the fastest, and performance is great.

For text-based reasoning work and research, ChatGPT’s GPT-5.2 Pro stands above all others.

For multimodal work, Gemini is in a league of its own.

For coding, Anthropic’s Claude Opus 4.5 has been a superb experience, though we’ll need to wait to see how GPT-5.2-Codex (see below) is received.

And if you’re optimizing for cost-effectiveness, Chinese models are the way to go, but they require powerful local hardware or setting up LLM inference provider endpoints, which can be a bit on the technical side for some.

CODING

OpenAI Drops GPT-5.2 Codex

In the last of several frontier model releases in just a few days, OpenAI has released GPT-5.2-Codex, a GPT-5.2 variant tuned for agentic coding and long, real-world software engineering work.

The model focuses on staying reliable over extended sessions through context compaction, handling significant code changes, performing better in Windows environments, and offering stronger vision for interpreting screenshots, diagrams, and UI surfaces during development. A striking example is the thumbnail, where a draft becomes a working prototype from a single image request.
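OpenAI hasn’t published how its context compaction works, but the general idea behind the term is simple: once a session’s history exceeds a token budget, older turns are replaced with a summary while recent turns are kept verbatim. The sketch below is a generic illustration of that idea, with a crude word-count tokenizer and a hypothetical `toy_summarize` standing in for a model-written summary.

```python
from typing import Callable, List


def count_tokens(text: str) -> int:
    """Crude stand-in for a real tokenizer: counts whitespace-separated words."""
    return len(text.split())


def compact(history: List[str], budget: int, keep_recent: int,
            summarize: Callable[[List[str]], str]) -> List[str]:
    """Replace older turns with a single summary once the history exceeds `budget` tokens."""
    if keep_recent < 1:
        raise ValueError("keep_recent must be at least 1")
    total = sum(count_tokens(turn) for turn in history)
    if total <= budget or len(history) <= keep_recent:
        return history  # nothing to compact
    older, recent = history[:-keep_recent], history[-keep_recent:]
    return [summarize(older)] + recent


def toy_summarize(turns: List[str]) -> str:
    """Hypothetical summarizer; a real agent would ask the model to write the summary."""
    return f"[summary of {len(turns)} earlier turns]"


history = ["read file a.py", "edit function foo", "run tests", "fix failing test", "run tests again"]
compacted = compact(history, budget=8, keep_recent=2, summarize=toy_summarize)
print(compacted)
```

The design trade-off is how much detail the summary preserves: too little and the agent forgets earlier decisions, too much and the context fills up again quickly.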

OpenAI says GPT-5.2-Codex reaches state-of-the-art results on SWE-Bench Pro and Terminal-Bench 2.0, and highlights a notable jump in cybersecurity capability compared with prior Codex models.

WORKHORSE MODELS

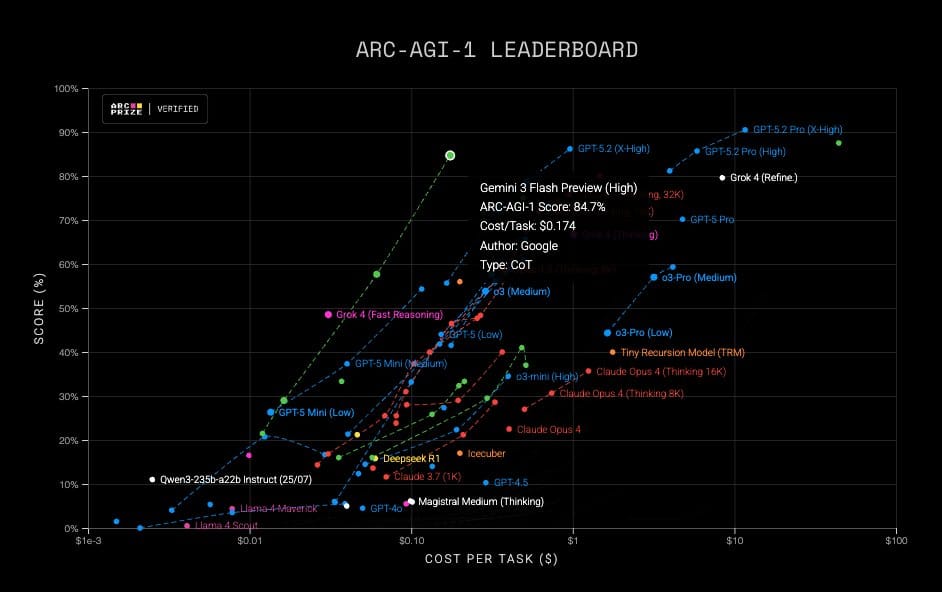

Google Shocks with Gemini 3 Flash

Google’s release of Gemini 3 Flash might be one of the most impressive in a while, considering how it presents itself as a borderline frontier model at the price of a non-frontier model.

The model puts Google at an unquestionable lead on the Pareto frontier, with performance that is simply outstanding on a performance-per-dollar basis.
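“Leading the Pareto frontier” means no other model is both cheaper and better. The sketch below finds the non-dominated models in a list of (name, cost per task, score) points; the data points are hypothetical placeholders, not real benchmark results.

```python
from typing import List, Tuple


def pareto_frontier(models: List[Tuple[str, float, float]]) -> List[str]:
    """Names of models not dominated on (cost: lower is better, score: higher is better)."""
    frontier = []
    for name, cost, score in models:
        dominated = any(
            c <= cost and s >= score and (c < cost or s > score)
            for _, c, s in models
        )
        if not dominated:
            frontier.append(name)
    return frontier


# Hypothetical (name, US$ per task, benchmark score) points, not real measurements.
points = [
    ("A", 0.20, 70.0),
    ("B", 1.00, 78.0),
    ("C", 1.50, 75.0),  # costs more than B and scores lower, so it is dominated
    ("D", 5.00, 80.0),
]
print(pareto_frontier(points))
```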

For instance, on SWE-Bench Verified, the most popular benchmark for measuring AI coding quality, Gemini 3 Flash scores higher than all the competition. Yes, all frontier models, including its larger sibling Gemini 3 Pro (we’ll see below how that’s possible).

Perhaps even more impressively, the model sets a new performance-per-dollar record on ARC-AGI, scoring 84.7% on ARC-AGI-1 and 33.6% on ARC-AGI-2.

A year has passed since the OpenAI o3-preview model shocked the world with an ~88% score at an average of $4,500 a task.

Now, Google gets a similar score while requiring, get this, 26,471 times less money per task. In one year.
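Working backward from the two quoted numbers gives the implied cost per task for Gemini 3 Flash:

```python
O3_PREVIEW_COST_PER_TASK = 4_500  # US$, as quoted above
COST_REDUCTION = 26_471           # "26,471 times less money per task"

implied_flash_cost = O3_PREVIEW_COST_PER_TASK / COST_REDUCTION
print(f"Implied Gemini 3 Flash cost: ${implied_flash_cost:.2f} per task")  # prints $0.17
```

That is roughly 17 cents per task, versus thousands of dollars a year earlier, for a score only a few points lower.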

TheWhiteBox’s takeaway:

If benchmarks have any signal, this is way more impressive than the Gemini 3 Pro release. But, of course, this is just a distilled, smaller version, meaning it’s a model trained to “imitate” Gemini 3 Pro’s responses while being smaller and cheaper to run.

Well, wrong. Or partially wrong. As a researcher at Google DeepMind himself explains, Gemini 3 Flash was not “just a distilled pro”, but includes a lot of “exciting research”, particularly new Reinforcement Learning techniques, that came too late for the Pro version but were applied to the Flash version and worked really well.

So, what to take from this?

As you know, I’m very bullish on Google as the potential “guy to beat” in the AI race, and this only makes me more convinced that I’m right. However, as I did when I compared ChatGPT Pro to Gemini Deep Think, I need to call balls and strikes.

And while Gemini is inherently superior to ChatGPT as an app for quick queries (faster and very, very good), the models, in my personal experience, hallucinate way more; they just do.

Being perfectly honest, hallucination rates for ChatGPT’s Thinking and Pro models are borderline zero; I can go days with hundreds of queries before I run into one. With Google’s models, however, I often have to correct the model on simple things that are obviously hallucinations, and it sometimes puts words in my mouth that I did not say. This is the only thing, besides the inference-time compute constraints for Gemini Deep Think I mentioned, that keeps me from paying for just one Pro subscription.

Closing Thoughts

A week packed with releases, but pretty standard in terms of what stories these releases tell, except for Gemini 3 Flash, which feels like something “different” due to the almost “obscene” performance-per-dollar this model has over the rest (at least benchmark-wise).

The market news seems much more noteworthy, especially the “two worlds” we are seeing:

Hardware companies like Micron or NVIDIA are rocking every earnings call

Infrastructure companies like Oracle are taking all the skepticism hits

I assume you can notice the disparity. People are incredibly excited about the hardware companies, but worry about the same companies that are footing the bill.

You know it takes two to dance a tango, right? How can markets be highly optimistic about one group and not about the group that is making the former group rich?

If infrastructure companies really catch a cold as markets are bracing for, hardware companies will get pneumonia, which markets aren’t bracing for.

But as we all know, who said markets were rational?


For business inquiries, reach out to me at [email protected]