
FUTURE

What’s Next in AI?

Although this newsletter has been transitioning to more hands-on information, we mustn’t forget that theory and research are the foundation of AI.

So, realizing I haven’t given you guys an update on what’s new in AI, I’ve spent two weeks going deep into research (ah, the old times) to answer:

What are the most exciting pieces of research right now? What’s up next?

Thus, today, we are examining the latest research from Google, Cohere, Apple, MIT, Mistral, NVIDIA, and more to determine what the incumbents are most excited about and what breakthroughs will matter in the coming months.

It’s an honest, hard look at what AI currently is (and isn’t), and, by the end, you will be up to date with the industry at a depth few people reach.

Specifically, we will:

understand current limitations,

learn how models work from first principles,

list the challenges faced by AI Labs and what they are doing about them,

and, notably, describe what I believe the next generation of AI models will be.

Let’s dive in.

First, the Real Issues

To understand what’s troubling AI Labs and what they are doing about it, we must first acknowledge the issues models currently exhibit.

The Big Constraint

Models need context to work well. In fact, just today, an executive at Google claimed AGI would be built by whoever solves context and memory, not intelligence.

Put simply, if we could feed a model all the relevant context it needs to execute a task, it would likely do it well. With foundation models like ChatGPT, it isn’t about whether the model can do something, but instead whether it has the context to do so correctly.

So, why not just send them infinite context? The big problem is that they suffer from a compute and cache explosion due to the way they process data.

The reason models like ChatGPT work so well is that they have an inductive bias (an architecture choice) known as ‘global attention’: when given context, they pay attention to every single part of it, no matter how large it is, which implies an enormous “cognitive effort” and, worse, a tendency to lose themselves amongst the noise.

The reason we do this is to ensure the model has full context to predict the next word.

For example, if you want to talk with ChatGPT about a Sherlock Holmes book you’ve just sent to it, and you ask it, ‘What was the butler’s name?’ and that name is only mentioned once at the beginning of Chapter 2, way back in the context window, the only way the model can respond is if it scrutinizes every single word in the entire book.

More formally, they are stateless, meaning they don’t have a persistent, compressed memory; they simply reread everything all the time.

Put another way, if you give ChatGPT a million words of context, the model will scrutinize every single word, giving all of them the benefit of the doubt (i.e., the chance they are relevant to the model’s response), as if you had to remember every single event that happened in your day, including evening events, to recall whether you had breakfast; doesn’t seem very efficient, right?

In other words, the model will ‘attend’ to every single part of the context rather than prioritizing those parts that appear to be more valuable.

Imagine you are reading a book and are on page 345. To read the next page and understand it, instead of relying on a summarized representation of the previous 345 pages, picture having to reread all 345 pages every time you want to read the next word on page 346.

That, my dear reader, is how ChatGPT ‘reads.’

But wouldn’t that imply a complete overkill of compute effort?

Yes, so we have a trick: I lied when I said these models don’t have memory… they do, kind of. Instead of having the model recompute everything for every new word, we store the required computations for all previously processed context in memory (this is known as the KV cache).

So, problem solved?

Not quite, as what I also said is that this memory is uncompressed. Unlike humans, who summarize the previous 345 pages into the key things they deem worth remembering, these models retain the 345 pages in memory, literally.

But wouldn’t that defeat the purpose of memory in the first place? For humans, perhaps, but for AI models, it speeds up the next prediction, so it’s still worthwhile on an engineering basis. What it doesn’t do is prevent this memory from exploding in size the longer the model’s context is.
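The caching trick described above (usually called a KV cache, for the keys and values it stores) can be sketched with a toy single-head attention layer. This is a minimal sketch under stated assumptions: the weights are random, and the names `attend`, `decode_without_cache`, and `decode_with_cache` are illustrative, not any lab's implementation.

```python
import numpy as np

rng = np.random.default_rng(0)
d = 8                                    # toy embedding size (assumption)
W_q, W_k, W_v = (rng.normal(size=(d, d)) for _ in range(3))

def attend(q, K, V):
    """Attention output for one query over all stored keys and values."""
    scores = K @ q / np.sqrt(d)          # one score per stored token
    weights = np.exp(scores - scores.max())
    weights /= weights.sum()
    return weights @ V

def decode_without_cache(tokens):
    """Recompute keys and values for the whole prefix at every step."""
    outputs = []
    for t in range(1, len(tokens) + 1):
        prefix = tokens[:t]
        K, V = prefix @ W_k, prefix @ W_v    # redone from scratch each step
        outputs.append(attend(prefix[-1] @ W_q, K, V))
    return np.array(outputs)

def decode_with_cache(tokens):
    """Compute each token's key and value once and append them to a cache."""
    K_cache, V_cache, outputs = [], [], []
    for x in tokens:
        K_cache.append(x @ W_k)
        V_cache.append(x @ W_v)
        outputs.append(attend(x @ W_q, np.array(K_cache), np.array(V_cache)))
    return np.array(outputs), len(K_cache)

tokens = rng.normal(size=(16, d))        # 16 random stand-ins for token embeddings
slow = decode_without_cache(tokens)
fast, cache_len = decode_with_cache(tokens)
print(np.allclose(slow, fast), cache_len)
```

Both paths produce the same outputs, but the cached version never recomputes old keys and values. The price is that the cache holds one uncompressed entry per token, so it keeps growing with the context.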

In practice, this means that the longer the sequence of text, images, or video provided, the more memory is required, growing in proportion to its length. This is a significant bottleneck for AI models in terms of their context window, that is, the amount of context they can handle at any given time.

While humans have a limit to what we can store in our minds, which leads to forgetting some information to make room for new, these models don’t. As a result, memory and associated computation explode as sequence length grows, placing severe constraints on how much real context we can feed them.

Some people have proposed alternatives that do compress memory, such as state-space models like Mamba, but these largely underperform and thus have not been widely adopted.

But is this that bad?

Oh boy, it is. In fact, for very long sequences, the associated cache requirements (the memory the model needs to handle that sequence) can take up a larger portion of your hardware’s memory than the model itself, sometimes reaching terabytes. Meanwhile, our most powerful hardware is stuck under 200GB per GPU, and memory chip costs sit well above the $100/GB threshold (which implies tens of thousands of dollars in COGS for NVIDIA to build its GPUs), severely constraining how much memory each accelerator (GPU) can have.
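To get a feel for the numbers, here is a back-of-the-envelope sketch of how cache size scales with sequence length. The model configuration (80 layers, 64 key/value heads of size 128, 16-bit values, 70B parameters) is a hypothetical assumption chosen for illustration, not the spec of any real model.

```python
def kv_cache_bytes(seq_len, n_layers, n_kv_heads, head_dim, bytes_per_value=2, batch=1):
    """Bytes needed to cache keys and values for `seq_len` tokens."""
    # Factor of 2: every layer stores one key and one value vector per KV head.
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * bytes_per_value * batch

# Hypothetical dense model (assumption, for illustration only).
config = dict(n_layers=80, n_kv_heads=64, head_dim=128)
weight_bytes = 70e9 * 2          # 70B parameters stored at 2 bytes each

for seq_len in (8_000, 128_000, 1_000_000):
    cache = kv_cache_bytes(seq_len, **config)
    print(f"{seq_len:>9,} tokens: cache {cache / 1e9:8.1f} GB "
          f"({cache / weight_bytes:.2f}x the weights)")
```

Under these assumptions each token costs about 2.6 MB of cache, so a million-token sequence needs roughly 2.6 TB, far more than the weights themselves. Real models shrink this with tricks such as sharing key/value heads across query heads, but the linear growth remains.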

If you’re an investor and were wondering why NVIDIA’s gross margin is collapsing, look no further: the number of GB of memory per GPU is exploding due to reasoning models.

Therefore, in the current paradigm, it’s not compute that keeps Sam Altman awake at night; it’s memory limits.

But that’s hardly the only issue with current models. The second major problem is that models remain stuck in knowledge land.

Unfamiliar = Wrong

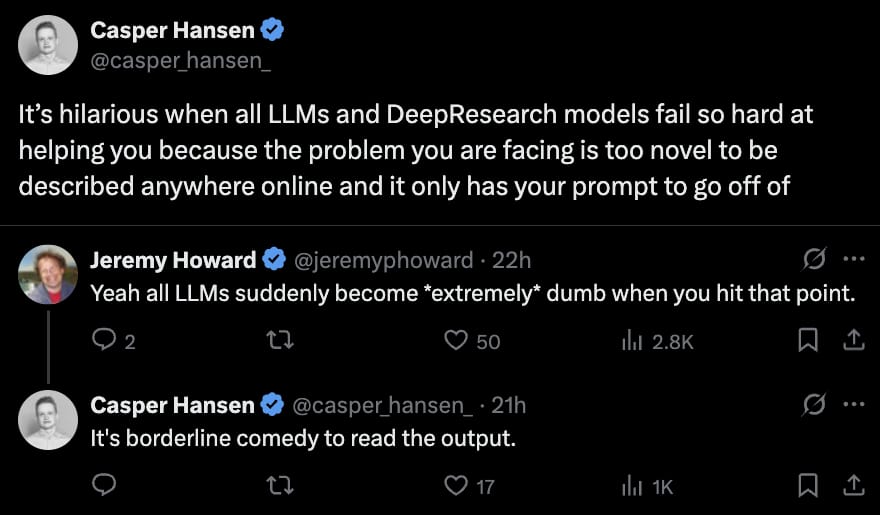

Going straight to the point, modern AI models can’t generalize (make successful predictions on data they have not seen before).

Consider Jean Piaget’s definition of intelligence: “Intelligence is what you use when you don’t know what to do.” It is a simple way to describe intelligence as the capacity of humans to adapt to new environments successfully, and under it we clearly observe AI’s intelligence limit.

More formally, Jean Piaget considered intelligence as the means by which humans find equilibrium with their dynamic environment by adjusting their behaviors, thereby framing intelligence as an adaptation task.

And the problem is that current AI models demonstrate zero adaptation capabilities and zero performance in areas where they haven’t internalized what to do beforehand; in a purist, Piaget-ish view, they have not yet shown a single ounce of intelligence but instead a remarkable use of memory (in part, aided by the fact that they are cheating, as they hold the entire context in memory).

At this point, though, you are probably tempted to disagree with me. For instance, I can ask ChatGPT to create a poem in Dante’s style about rockets.

That’s proof of AI doing something new, right?

No, because we are confusing interpolation with extrapolation, or confusing in-distribution generalization with out-of-distribution generalization.

ChatGPT is an interpolative database, a system that retrieves facts and concepts from its knowledge and combines them to create new, similar things that look intelligent; it remixes what it knows to create “new stuff.” This is what we define as in-distribution generalization: the model generalizes (does well) in areas that are new but still very similar to what it has seen before.

Therefore, in reality, it’s not creating anything new; it’s taking what it knows and regurgitating it back to you in a different way that sounds smart and, crucially, novel.
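The gap between interpolation and extrapolation is easy to see in a toy curve-fitting analogy. This is only an analogy under stated assumptions (a degree-5 polynomial standing in for "the model", and `sin(x)` standing in for "the world"); it says nothing about how any specific language model behaves.

```python
import numpy as np

# "Train" a degree-5 polynomial on sin(x), using only samples from [-pi, pi].
x_train = np.linspace(-np.pi, np.pi, 200)
coeffs = np.polyfit(x_train, np.sin(x_train), deg=5)

def mean_abs_error(x):
    """Average gap between the fitted polynomial and the true function."""
    return float(np.mean(np.abs(np.polyval(coeffs, x) - np.sin(x))))

x_in = np.linspace(-3.0, 3.0, 101)               # unseen points inside the training range
x_out = np.linspace(2 * np.pi, 3 * np.pi, 101)   # points far outside it

in_error = mean_abs_error(x_in)
out_error = mean_abs_error(x_out)
print(f"in-distribution error:     {in_error:.4f}")
print(f"out-of-distribution error: {out_error:.4f}")
```

The fit handles new points that resemble its training data well, yet fails badly once the inputs leave the range it was fitted on, even though the underlying function has not changed.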

As Apple pointed out (albeit clumsily), it’s an illusion of thinking.

But AI problems don’t stop there, as models are being passed off as something they aren’t.

RL in Non-verifiable Domains

Models can’t reason about what they don’t know; that should be clear by now.

But what if… there’s a way? That potential way is reinforcement learning (we’ll later discuss a fascinating paper that is going extremely viral on this precise matter).

However, we have a problem.

When examining unsolved problems, none stand out more than training AI models in reinforcement learning (RL) environments on non-verifiable domains, domains where we can’t verify whether the model’s response was good or bad.

As discussed multiple times, RL is a way of training AIs in a trial-and-error format, where the model throws things at the wall to see what sticks and learns from it.

As also discussed multiple times, this is the only training method we know of that has led to superhuman AIs, that is, AIs that are extraordinarily good at a given task, with AlphaZero as a well-known example.

But then, why not use it always?

RL’s biggest problem historically was generalization; we only knew how to apply it to one task. Now, with foundation models like ChatGPT, we have the opportunity to apply it at scale (one model that becomes extraordinary at many tasks), but we have only managed to do so in verifiable domains, domains where the quality of the action can be immediately verified.

Put simply:

While we know how to apply RL in areas like maths, coding, or chess, training models that perform impressively on very challenging problems, feeling almost superhuman,

We don’t know how to train a superhuman writer because we don’t know how to define ‘what good writing is’ quantitatively. Thus, we don’t know how to provide quality feedback to the model, and, therefore, there’s no such thing as a superhuman AI writer (in fact, they quite literally suck).
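What “verifiable” means in practice is that the feedback signal can be written as a short program. The sketch below shows two hypothetical reward functions, `math_reward` and `code_reward` (both names and their conventions are assumptions for illustration); no equivalent function is known for “good writing.”

```python
import re

def math_reward(response: str, expected: int) -> float:
    """Return 1.0 if the last integer in the response equals the known answer."""
    numbers = re.findall(r"-?\d+", response)
    return 1.0 if numbers and int(numbers[-1]) == expected else 0.0

def code_reward(source: str, tests: list, fn_name: str = "solve") -> float:
    """Return the fraction of (args, expected) unit tests the generated function passes."""
    namespace: dict = {}
    try:
        exec(source, namespace)      # toy only: never exec untrusted code unsandboxed
        fn = namespace[fn_name]
    except Exception:
        return 0.0
    passed = 0
    for args, want in tests:
        try:
            passed += fn(*args) == want
        except Exception:
            pass                     # a crashing test counts as a failure
    return passed / len(tests)

unit_tests = [((1, 2), 3), ((0, 0), 0)]
print(math_reward("17 + 25 = 42", expected=42))
print(code_reward("def solve(a, b):\n    return a + b", unit_tests))
```

Each function turns a model's answer into a number with no human in the loop, which is what lets RL run at scale in maths and coding.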

Let me be clear: this is an unsolved problem, and no so-called ‘reasoning models’ exist in non-verifiable domains.

Moving on, knowing in theory how to apply RL at scale is one problem, but the truth is that even if we knew how to unlock non-verifiable domains, we still wouldn’t know how to proceed because, frankly, we have not yet fully learned how to train these models at scale.

That is, RL is not only a theoretical problem; it’s an engineering one, too.

Scaling Limits

In AI, we obsessively focus on the limits of software (the AI models themselves) and not on the real elephant in the room: energy, hardware, and talent.

The first and last ones are obvious: we don’t have enough energy to sustain the enormous demand that AI will (allegedly) see in the coming years, and we don’t have nearly enough AI talent. Thus, we may be able to build AGI, but we may not be able to run it under current constraints.

But hardware represents an equally complex bottleneck: we don’t quite know how to scale it.

The reason is that this compute has to be co-located (machines sit close to each other, saturating local grids to the point that Hyperscalers are literally buying nuclear plants, or the energy they’ll produce for years to come) and suffers from a massive engineering problem: it’s excruciatingly hard to run efficiently (getting returns on capital is a huge problem).

The main issue is that inference-heavy workloads like RL, which generate long chains of thought before answering, yield very low arithmetic intensity (few computations per byte of data moved from memory), which makes running GPUs a highly unprofitable business. I covered this concept in detail for Premium subscribers here.
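Arithmetic intensity is the number of floating-point operations performed per byte read from or written to memory. The sketch below estimates it for a single square weight matrix during token-by-token generation. The accelerator figures (1,000 TFLOP/s and 3 TB/s) and the layer size are hypothetical assumptions, and the model ignores the KV cache, which would lower intensity further.

```python
def decode_arithmetic_intensity(batch_size, d_model, bytes_per_value=2):
    """FLOPs per byte moved for one (d_model x d_model) weight matrix in a decode step."""
    flops = 2 * batch_size * d_model * d_model                      # one multiply-add per weight, per sequence
    weight_bytes = d_model * d_model * bytes_per_value              # weights are read once per step
    activation_bytes = 2 * batch_size * d_model * bytes_per_value   # input and output vectors
    return flops / (weight_bytes + activation_bytes)

# Hypothetical accelerator (assumption): 1,000 TFLOP/s compute, 3 TB/s memory bandwidth.
peak_flops = 1000e12
peak_bandwidth = 3e12
ridge_point = peak_flops / peak_bandwidth   # intensity needed to keep the compute units busy

for batch in (1, 8, 64, 512):
    intensity = decode_arithmetic_intensity(batch, d_model=8192)
    utilization = min(1.0, intensity / ridge_point)
    print(f"batch {batch:>3}: {intensity:6.1f} FLOPs/byte, "
          f"at most {utilization:.0%} of peak compute")
```

With a single sequence the chip does roughly one operation per byte it loads, so almost all of its compute sits idle waiting on memory. Only large batches push intensity toward the ridge point, and long chains of thought make large batches harder to fit in memory.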

Furthermore, AI requires costly hardware that depreciates rapidly (every 3-6 years, a new batch is needed) and, worse, almost always necessitates redundancy (multiple GPUs per workload), resulting in idleness across the board.

Expressed in simple terms, we purchase expensive hardware that fails quickly, and this same hardware remains idle for a significant portion of its useful life, making it an uncomfortable investment or, euphemisms aside, a capital expenditure nightmare.
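The arithmetic behind that nightmare is simple. In this sketch every input is a hypothetical placeholder (purchase price, lifetime, utilization, and redundancy factor are assumptions, not market data), and power, cooling, and staff are ignored.

```python
def cost_per_useful_hour(purchase_price, lifetime_years, utilization, redundancy=1.0):
    """Hardware cost per hour of useful work, ignoring power, cooling, and staff."""
    total_hours = lifetime_years * 365 * 24
    useful_hours = total_hours * utilization      # hours actually spent on paid work
    return purchase_price * redundancy / useful_hours

# Hypothetical accelerator: $30,000, replaced after 4 years, 25% spare capacity.
for utilization in (0.9, 0.6, 0.3):
    cost = cost_per_useful_hour(30_000, lifetime_years=4,
                                utilization=utilization, redundancy=1.25)
    print(f"{utilization:.0%} utilization: ${cost:.2f} per useful GPU-hour")
```

Halving utilization doubles the cost of every useful hour, and a shorter lifetime or more redundancy pushes it up again, which is why idleness hurts so much.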

So, to summarize all covered points:

AI is inefficient to run,

fundamentally limited in what it can learn and do well,

and at the hardware level, it’s even more constrained and highly unprofitable.

Put another way, AI is living on borrowed time, sustained only by the massive hype that surrounds it, a hype that:

exaggerates what it can do while hoping someone finds a way to turn lies into truths,

and ignores the massive elephant in the room, silently staring at it, that could stomp the industry at any time: energy and infrastructure limits.

Knowing this, finally, what is the industry doing about it?

We are focusing this analysis on two perspectives: the research that aims to improve the status quo and the research that suggests we scrap it all and start again by revisiting techniques that are older than me, but could finally be viable.

Fixing What’s Broken or Starting All Over Again?

The research breakthroughs we are focusing on today are the following, covering the bleeding edge of:

memory compression techniques,

Edge AI, mainly Apple’s LoRA fine-tuning framework and Google’s Matryoshka models,

how top AI Labs are scaling their RL workloads via embarrassingly parallel RL training, based on Magistral’s training regime,

unique proposals like Cohere’s model merging technique,

pre-training on RL techniques from scratch, a revolutionary approach to imitation learning,

and the paper of the month, Pro-RL, which teaches models new capabilities for the first time.

And, finally, we will discuss a fundamentally unique proposal hinted at by Sam Altman, that could lead us to scrap current models altogether and build something entirely new (it’s not JEPAs; it’s even more disruptive).

This is quite literally the state of the art of AI research, covered in order of increasing importance.

Local/Global Attention Hybrids

As mentioned earlier, current frontier models incur significant costs to maximize performance by employing global attention.

But what does that mean? What is attention?

When we say ‘ChatGPT,’ what we are referring to is a model that applies what we know as ‘attention,’ a lookup in which each token in the input sequence queries the others.

In other words, for a model to ‘understand’ what the user is sending, it makes words in the user’s sequence ‘talk to each other’ so adjectives can identify their nouns, adverbs can identify the verb they are affecting, and more; it’s quite literally an exercise where words in the sequence communicate with one another to update their own meanings.

For example, for the sequence “The red carpet”, ‘carpet’ will use the attention mechanism to pay attention to ‘red’ and update its own meaning to ‘red carpet’.

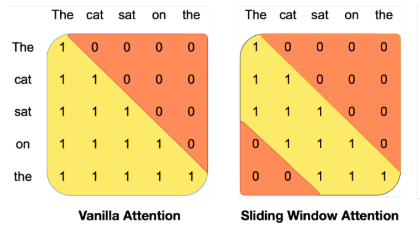

The problem is that this attention mechanism, as mentioned, is global; each word applies this computation to every single other word in the sequence (as long as it precedes it).

Thus, the word in position one million and two will have to pay ‘attention’ (hence the name of this technique) to one million and one individual preceding words, which means the compute and memory cost of each new word grows in proportion to the sequence length, and the total compute for the whole sequence grows quadratically.

This may be tolerable for context windows in the thousands, enough for many text tasks, but if we truly want these models to change the world, we need these context windows to be in the millions or even billions to manage things like video or DNA sequences.
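The mechanism described above can be sketched in a few lines of NumPy. This is a minimal single-head version with random weights standing in for learned ones; the function name `causal_attention` and the toy sizes are assumptions for illustration.

```python
import numpy as np

def causal_attention(X, W_q, W_k, W_v):
    """Single-head global attention: each token attends to itself and all earlier tokens."""
    Q, K, V = X @ W_q, X @ W_k, X @ W_v
    n, d = Q.shape
    scores = Q @ K.T / np.sqrt(d)                        # (n, n): every token scores every token
    future = np.triu(np.ones((n, n), dtype=bool), k=1)   # True where the key comes after the query
    scores = np.where(future, -np.inf, scores)           # block attention to later tokens
    weights = np.exp(scores - scores.max(axis=-1, keepdims=True))
    weights /= weights.sum(axis=-1, keepdims=True)       # each row sums to 1
    return weights @ V, weights

rng = np.random.default_rng(0)
n_tokens, d_model = 3, 4                                 # e.g. "The", "red", "carpet"
X = rng.normal(size=(n_tokens, d_model))                 # random stand-ins for embeddings
W_q, W_k, W_v = (rng.normal(size=(d_model, d_model)) for _ in range(3))

updated, weights = causal_attention(X, W_q, W_k, W_v)
print(np.round(weights, 2))   # row i shows how much token i attends to tokens 0..i
```

The `scores` matrix has one entry per pair of tokens, which is where the cost comes from: double the sequence and the matrix has four times as many entries.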

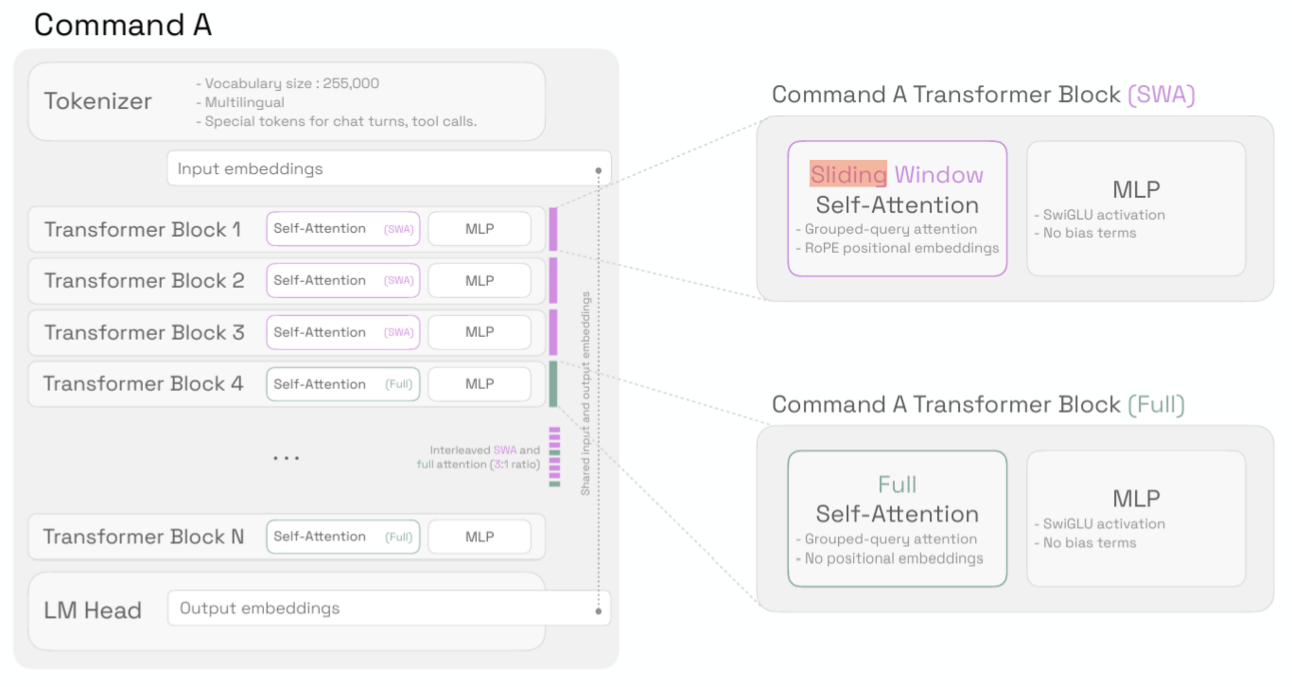

To mitigate this, many labs accept some trade-off. The most common one is sliding-window attention, as described in Cohere’s recent Command A paper.

The difference is that when using a sliding window (SWA), each word can only ‘attend’ to the last M words, with ‘M’ being the size of the sliding window, making it a local attention mechanism.

In vanilla (full) attention, words attend to every previous word. In SWA, they attend only to the last ‘M’ words (here M is 3). Source: Mistral
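The two patterns differ only in the mask applied to the attention scores. Here is a small sketch, assuming the window counts the current token itself (so a window of 3 covers the token plus the two before it); the function name `attention_mask` is illustrative.

```python
import numpy as np

def attention_mask(n_tokens, window=None):
    """Boolean mask: entry [i, j] is True when token i may attend to token j."""
    i = np.arange(n_tokens)[:, None]
    j = np.arange(n_tokens)[None, :]
    mask = j <= i                       # causal: no looking at later tokens
    if window is not None:
        mask &= (i - j) < window        # local: only the last `window` tokens, itself included
    return mask

full = attention_mask(6)
local = attention_mask(6, window=3)
print(full.astype(int))
print(local.astype(int))
print(full.sum(), local.sum())          # number of token pairs each variant computes
```

In the full mask the number of allowed pairs grows quadratically with the sequence, while in the windowed mask it grows linearly, because no row ever holds more than `window` ones.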

In turn, that means that the model could forget previous facts way back in context; we are saving huge compute costs at the expense of the model potentially forgetting stuff that is present in its context.

To mitigate this, AI Labs interleave local and global attention layers in the same model, an in-between solution that balances the recall of global attention with the efficiency of SWA:

‘Models’ are a concatenation of attention segments. The point here is to balance full attention with SWA, dropping costs considerably while also allowing the model to retrieve key facts back in context.
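A rough sketch of what interleaving buys in cache size follows. The layer count, the three-local-to-one-global ratio, and the 4,096-token window are hypothetical assumptions; actual ratios and window sizes vary by model.

```python
def interleave(n_layers, local_per_global):
    """Build a layer pattern such as local, local, local, global, repeated."""
    period = local_per_global + 1
    return ["global" if (k + 1) % period == 0 else "local" for k in range(n_layers)]

def cached_tokens_per_layer(layer_types, seq_len, window):
    """Tokens each layer keeps cached: all of them if global, at most `window` if local."""
    return [seq_len if kind == "global" else min(seq_len, window) for kind in layer_types]

# Hypothetical model: 32 layers, 3 local layers per global one, 4,096-token window.
layers = interleave(32, local_per_global=3)
seq_len, window = 1_000_000, 4_096

all_global = sum(cached_tokens_per_layer(["global"] * 32, seq_len, window))
hybrid = sum(cached_tokens_per_layer(layers, seq_len, window))
print(f"hybrid cache is {hybrid / all_global:.1%} of the all-global cache")
```

Under these assumptions the hybrid keeps about a quarter of the cache while the remaining global layers can still reach any fact in the context.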

All current models, in some shape or form, make this trade-off. Besides this widely accepted method, Labs also have undisclosed proprietary techniques that are potentially in use as we speak.

Google has Titans, a memory module for historical data that enables the LLM to focus its attention solely on local context (closer in time) and utilize this memory module to extend its context further into the past.

DeepSeek proposes a cache compression technique called Native Sparse Attention. Instead of storing every single word of the context in memory, it employs a mechanism that stores the ‘gestalt’ of the past context.

The same DeepSeek team also leverages Multi-head Latent Attention (MLA), again compressing the cache (memory), this time using low-rank matrices. It’s too long to explain here, but the idea rests on the assumption that most past memories are irrelevant, and thus leverages a clever mathematical property of matrices, known as rank, to store only the essentials, potentially reducing memory requirements by up to 94%. In other words, it’s an attempt at compressing memory to make it more manageable while minimizing accuracy loss.
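The low-rank idea can be illustrated with a heavily simplified sketch: cache one small latent vector per token and rebuild keys and values from it on demand. This is not DeepSeek's implementation (it omits multiple heads, positional encoding, and training), and the sizes and matrix names `W_down`, `W_up_k`, `W_up_v` are assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
n_tokens, d_model, d_latent = 1024, 512, 32    # toy sizes (assumptions)

# Learned in a real model; random here.
W_down = rng.normal(size=(d_model, d_latent)) / np.sqrt(d_model)    # compress hidden state
W_up_k = rng.normal(size=(d_latent, d_model)) / np.sqrt(d_latent)   # rebuild keys
W_up_v = rng.normal(size=(d_latent, d_model)) / np.sqrt(d_latent)   # rebuild values

hidden = rng.normal(size=(n_tokens, d_model))

# Cache only the small latent vector for each token...
latent_cache = hidden @ W_down                 # (n_tokens, d_latent)

# ...and expand it back into keys and values when attention needs them.
K = latent_cache @ W_up_k                      # (n_tokens, d_model), rank at most d_latent
V = latent_cache @ W_up_v

full_cache_values = 2 * n_tokens * d_model     # separate key and value per token
latent_cache_values = latent_cache.size
saving = 1 - latent_cache_values / full_cache_values
print(f"cache shrinks by {saving:.1%}")
```

The saving depends entirely on how small the latent dimension is relative to the full keys and values. The bet is that the rebuilt keys and values, which can only span a low-rank subspace, still carry what attention needs.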

As you can see, all ideas follow the same pattern, tackling the biggest issue with modern AI inference: the memory requirements.

In fact, this is one of the few areas where some frontier models have an edge over others in what otherwise appear to be essentially commodities.

In particular, Google stands out by offering a context window that can be up to ten times larger than its competitors, making it the only viable solution for use cases such as video understanding or large codebases.

But nothing gets me more excited than our next trend, the impressive evolution we are seeing in edge AI.

The Age of Small Models
