
THEWHITEBOX

Other Premium Content

In response to a Premium subscriber’s question: can DeepSeek’s results be replicated?

Today’s piece can be read in its entirety, but it links to several Premium-only Notion articles that dive deeper into some of the topics discussed: {🥸 Mixture-of-Experts}, {🥸 Soft vs Hard distillations}, {🖋️ Distillation vs Fine-tuning}, {🍏 Why R1 is not as bright as o1}, {💸 Prompt Caching}, and {📏 The UAT}. These are only accessible to Premium subscribers.

FUTURE

Have Things Really Changed in AI?

Recent events may leave you confused about where AI is really heading. Toward less spending? Toward stagnation and growing geopolitical tension? Are Chinese models really that much cheaper?

Unsurprisingly, this chaotic soup of new narratives and shifting opinions has led to a cascade of horrible takes from “experts” and “AI investors” alike who, quite frankly, are throwing lies at you from all directions. Let’s set the record straight.

Today, we’ll do the following:

Look at the four fallacies you have been led to believe that are dumbfoundingly wrong.

No, AI spending will not fall. We will look at the largest companies' training budgets, how they will develop, and what that tells us about the future of Hyperscaler AI spending.

No, model sizes won’t fall either. We will examine DeepSeek’s key contributions to distillation and what they tell us about the roadmap of frontier AI labs like OpenAI.

No, Chinese models aren’t cheaper. We will uncover ‘the great lie’ the media has spread these past few weeks and reveal who the real champion of cost efficiency is (you will be surprised).

And no, we haven’t figured out the ‘I’ in ‘AI.’ We will explain why even top experts misinterpret how to evaluate model intelligence and why the hype surrounding DeepSeek’s GRPO algorithm and OpenAI’s and Google’s Deep Research products is completely overblown. We will also analyze whether this is the end of McKinsey-type consulting firms and what all this tells us about AGI timelines.

Face the three hard truths underpinning AI’s progress that explain the ONE thing that has actually changed recently.

Finally, based on the narrative change, I will give you my hot takes on what will change in 2025.

No, These Four Things Have Not Changed.

Despite all the contradictory claims, if you look carefully, some patterns repeat every single time, whether they come from the US, China, or Mars.

No, AI Spending Will Not Fall

While the narrative has clearly shifted toward inference-time compute as the new driver of intelligence, pre-training (training a huge Large Language Model, or LLM, on a dataset encompassing the entire Internet plus some private data) will remain essential despite the stagnation claims.

DeepSeek’s results have been profoundly misinterpreted in this regard, and this is obvious simply by looking at the numbers:

Before DeepSeek launched V3, Llama 3.1 405B, the best open-source model at the time, required 15 trillion training tokens (~12 trillion words) and a training run of 3.8×10²⁵ FLOPs (38 trillion trillion total operations).

GPT-4, which remains state-of-the-art two years later (GPT-5 is still in training or yet to be trained, meaning all of OpenAI’s pre-training efforts are still based on a 2022 training run), required 2.1×10²⁵ FLOPs.

With DeepSeek, we appear to have finally broken the pattern, requiring “only” an estimated 4×10²⁴ FLOPs, or 4 trillion trillion total operations, roughly ten times less than Meta’s run.
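Where do FLOP figures like these come from? A widely used rule of thumb (an assumption here; I can’t confirm it is exactly how each estimate above was produced) puts training compute at C ≈ 6·N·D, where N is the number of parameters used per token and D is the number of training tokens. The sketch below applies it to Llama 3.1 405B’s 15 trillion tokens and to DeepSeek-V3, assuming its publicly reported 37B active parameters (out of 671B total) and 14.8 trillion training tokens:

```python
def training_flops(active_params: float, tokens: float) -> float:
    """Approximate training compute with the 6*N*D rule of thumb.

    Roughly 2 FLOPs per parameter per token for the forward pass and
    4 for the backward pass. For a mixture-of-experts model, N is the
    number of parameters *active* per token, not the total.
    """
    return 6 * active_params * tokens


# Dense model: all 405B parameters are used for every token.
llama_31_405b = training_flops(405e9, 15e12)

# MoE model: assumed 37B active parameters and 14.8T tokens (DeepSeek-V3 report).
deepseek_v3 = training_flops(37e9, 14.8e12)

print(f"Llama 3.1 405B: {llama_31_405b:.2e} FLOPs")
print(f"DeepSeek-V3:    {deepseek_v3:.2e} FLOPs")
print(f"Ratio:          {llama_31_405b / deepseek_v3:.1f}x")
```

Under these assumptions, the rule gives about 3.6×10²⁵ FLOPs for Llama and 3.3×10²⁴ for DeepSeek-V3, a roughly 11x gap, broadly in line with the figures above. The MoE model is charged only for its active parameters, which is precisely why its budget is so much smaller.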

But there’s a catch:

They were severely GPU-constrained; it’s not as if they wouldn’t have wanted to perform a larger training run.

Crucially, they didn’t decrease the model size or the training dataset size. It’s still an enormous model, and if it weren’t an extremely fine-grained mixture-of-experts (which cuts the operations required per prediction by roughly 95%), the required budget would have been larger than Llama’s, given its larger size.

Read Notion article {🥸 Mixture-of-Experts} in DeepSeek’s Notion series to understand in detail how they did it.
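The compute savings of a mixture-of-experts come from routing: for each token, a small router scores every expert and only the top-k actually run. Below is a minimal sketch of generic top-k softmax gating (an illustration, not DeepSeek’s exact router, which differs in its details), plus the active-parameter arithmetic, assuming DeepSeek-V3’s reported 671B total and 37B active parameters:

```python
import math


def top_k_route(router_logits: list[float], k: int) -> dict[int, float]:
    """Pick the k highest-scoring experts and renormalize their weights.

    Only the selected experts run for this token; the rest are skipped,
    which is where the compute savings come from.
    """
    top = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)[:k]
    exps = {i: math.exp(router_logits[i]) for i in top}
    total = sum(exps.values())
    return {i: e / total for i, e in exps.items()}


def active_fraction(active_params: float, total_params: float) -> float:
    """Share of the model's parameters that participate in one prediction."""
    return active_params / total_params


# Hypothetical router scores for one token over 8 experts.
weights = top_k_route([0.1, 2.0, -1.0, 0.5, 1.5, -0.3, 0.0, 0.9], k=2)
print(weights)  # only experts 1 and 4 are used

# Assumed DeepSeek-V3 figures: 671B total, 37B active per token.
frac = active_fraction(37e9, 671e9)
print(f"active: {frac:.1%}, skipped: {1 - frac:.1%}")
```

With those figures, about 94.5% of the parameters sit idle for any given prediction, which is where the “roughly 95%” reduction comes from.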

Therefore, DeepSeek isn’t telling us to spend less compute; they are simply figuring out ways to do more with less in response to GPU export controls.

It was never a cost move, but a geopolitical one.

Consequently, incumbents remain unfazed by DeepSeek’s achievements, and despite what the AI noise might suggest, the trend is not stopping:

Mark Zuckerberg has claimed that Llama 4 will require 10x the training compute of Llama 3, or roughly 5×10²⁶ FLOPs (500 trillion trillion operations).

xAI is training Grok-3 on Colossus, a cluster of 100,000 NVIDIA H100s. For reference, that’s 50 times the size of DeepSeek’s cluster, with each GPU having 24% more FLOP capacity (DeepSeek used H800s). A three-month training run on that cluster still amounts to roughly 3.6×10²⁶ FLOPs, in the realm of Llama 4.

Read Notion article {🧮 Estimating training runs} to learn how to perform such calculations and estimate the training run size of the next generation of models.
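The Colossus estimate can be reproduced with the usual back-of-the-envelope formula: GPUs × peak FLOP/s per GPU × utilization × seconds. A sketch, assuming roughly 989 TFLOP/s of dense BF16 throughput per H100 and a 45% model FLOPs utilization (MFU); both numbers are assumptions, and real utilization varies a lot between runs:

```python
SECONDS_PER_DAY = 86_400


def cluster_training_flops(num_gpus: int, peak_flops_per_gpu: float,
                           utilization: float, days: float) -> float:
    """Total FLOPs a cluster delivers over a training run.

    utilization is the model FLOPs utilization (MFU): the fraction of
    theoretical peak throughput actually spent on useful training math.
    """
    return num_gpus * peak_flops_per_gpu * utilization * days * SECONDS_PER_DAY


H100_BF16_DENSE = 989e12  # assumed peak dense BF16 FLOP/s per H100 (spec-sheet value)
MFU = 0.45                # assumed; real runs vary widely

colossus_3_months = cluster_training_flops(100_000, H100_BF16_DENSE, MFU, days=90)
print(f"Colossus, 90 days: {colossus_3_months:.1e} FLOPs")

# Next order of magnitude: 10x the FLOPs in the same time needs ~10x the GPUs,
# and (assuming power scales linearly with GPU count) ~10x the power.
COLOSSUS_MW = 140
print(f"Power for 10x: {10 * COLOSSUS_MW / 1000:.1f} GW")
```

With these assumptions, the run lands around 3.5×10²⁶ FLOPs, the same ballpark as the 3.6×10²⁶ quoted above (a slightly higher utilization closes the gap). The last line is the crude scaling behind the “giga-scale” claim: ten times Colossus’ 140 MW is 1.4 GW.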

And if the $500 billion Stargate project is any indication, training clusters will only get bigger. But how big? Colossus is a 140 MW data center. Thus, to reach the next order of magnitude in training runs within a plausible timeframe, we would need giga-scale data centers, around ten times Colossus’ size.

But wait, why are we still talking about increasing training budgets? Hadn’t pre-training stagnated, as even Ilya Sutskever acknowledged?

Here, we reach our first confusion: Incumbents never said they would decrease pre-training budgets, and they have absolutely no reason to do so.

What has happened is that returns from scaling pre-training are diminishing: each additional order of magnitude of compute buys a smaller improvement than the last. In other words, scaling is slowing down, but larger training runs continue to yield better models.

Hence, Gemini 2.0, GPT-5, Grok-3, Llama 4… all these models have had or will have pre-training runs well above GPT-4’s training budget, as we saw earlier.

The key point I want you to understand is that we shouldn’t expect a 100x increase in compute to yield a 100x-or-more improvement in models. That is no longer true. However, larger training runs will still produce better models, so the incentives to continue aiming for larger runs are clear.
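The difference between “diminishing returns” and “no returns” is easiest to see on a power-law curve, the functional form scaling-law studies typically fit. The sketch below uses made-up constants (E, A, and alpha are hypothetical, not fitted to any real model): loss keeps falling with every 10x of compute, but each step buys less than the previous one.

```python
def loss(compute: float, E: float = 1.7, A: float = 8.0, alpha: float = 0.05) -> float:
    """Toy power-law scaling curve: irreducible loss E plus a reducible term.

    All three constants are hypothetical, chosen only to show the shape.
    """
    return E + A * compute ** (-alpha)


budgets = [1e24, 1e25, 1e26, 1e27]
losses = [loss(c) for c in budgets]
for c, prev, cur in zip(budgets[1:], losses, losses[1:]):
    # Each 10x step still lowers the loss, but by less than the step before.
    print(f"{c:.0e} FLOPs: loss {cur:.3f} (improvement {prev - cur:.4f})")
```

That is the incentive structure in a nutshell: a 10x budget never pays back 10x, but it always pays back something.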

Consequently, it’s no surprise that all Hyperscalers still plan to have 1 GW data centers before the end of 2026, and none of them have signaled a change in strategy or pace.

Google announced a projected $75 billion in AI spending for 2025, a 43% increase over 2024.

Microsoft, $80 billion.

Amazon, over $100 billion.

Meta, between $60 and $65 billion.

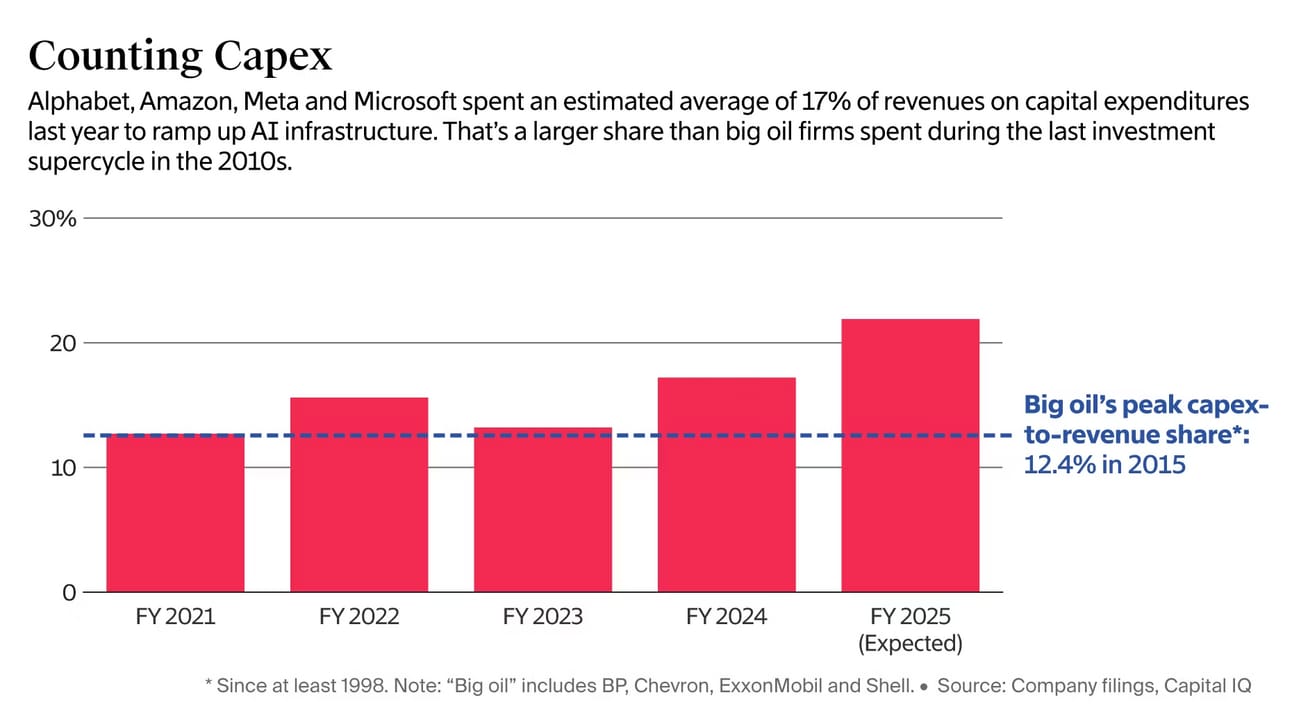

The combined total is roughly $320 billion, $100 billion more than last year. Faith in AI is so strong that these companies’ CAPEX represented 17% of revenues in 2024, a figure set to exceed 20% in 2025 if the above estimates come to fruition, well above Big Oil’s peak CAPEX-to-revenue ratio:

Source: The Information
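A quick sanity check on the combined figure, using the numbers listed above (taking Amazon’s “over $100 billion” at its lower bound and the midpoint of Meta’s range, both simplifying assumptions):

```python
# 2025 AI CAPEX projections quoted above, in billions of USD.
# Amazon is "over $100B" (lower bound used); Meta uses the midpoint of $60-65B.
capex_2025 = {
    "Google": 75.0,
    "Microsoft": 80.0,
    "Amazon": 100.0,
    "Meta": (60.0 + 65.0) / 2,
}

total = sum(capex_2025.values())
print(f"Combined 2025 CAPEX: ~${total:.1f}B")

# Google's "43% lift" implies a 2024 baseline of about 75 / 1.43.
google_2024 = capex_2025["Google"] / 1.43
print(f"Implied Google 2024 CAPEX: ~${google_2024:.1f}B")
```

The sum is $317.5 billion, consistent with the roughly $320 billion total, and the 43% lift implies Google spent around $52 billion in 2024.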

Naturally, a considerable amount of this money is going toward building larger training data centers (and inference ones, too).

No, Models Are Not Too Big, and Sizes Won’t Fall

Based on DeepSeek’s results, one could conclude that AI is downsizing in all areas. However, despite all the efficiency claims, pre-trained AI models, including DeepSeek v3, are still very large and will remain so.

While we seem to have settled around the 70-billion-parameter range (Llama 3.3, GPT-4o, Claude 3.5 Sonnet), and reasoning models are smaller relative to their intelligence (a smaller reasoning model achieves the same intelligence level as a larger LLM), the hard truth is that all these models are distilled versions of larger ones.

GPT-4o is a distillation of GPT-4

Claude 3.5 Sonnet was largely trained on Claude 3 Opus data

Llama 3.3 is a distillation of Llama 3.1 405B

And DeepSeek v3 is famously a distillation of GPT-4 data and R1 (yes, they used R1 to generate synthetic data to further train the base model, even though R1’s base model is v3. In other words, the process was: train v3 → train R1 → use R1 to improve v3 → release both).

I recently claimed that DeepSeek v3 was a fully-fledged distillation of ChatGPT (including a KL divergence loss term). However, after thinking about it longer, I realized that’s impossible because the tokenizers are different. Instead, it’s what I call a soft distillation: training on text generated by the teacher rather than matching its token-level probabilities.

For more details on this, read Notion article {🥸 Soft vs Hard distillations}
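To see why mismatched tokenizers rule out logit-matching distillation, here is a minimal sketch of a KL-divergence distillation loss in its classic temperature-softened form (a generic illustration, not DeepSeek’s or OpenAI’s training code). The loss compares teacher and student probabilities token by token, so both models must index the same vocabulary; when they don’t, the fallback is training on the teacher’s generated text.

```python
import math


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Temperature-scaled softmax (numerically stabilized by subtracting the max)."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_distillation_loss(teacher_logits: list[float], student_logits: list[float],
                         temperature: float = 2.0) -> float:
    """KL(teacher || student) over next-token distributions.

    This only makes sense if index i means the same token for both models,
    i.e. they share a tokenizer. The length check below is a necessary
    guard, not a sufficient one: equal sizes don't prove identical vocabularies.
    """
    if len(teacher_logits) != len(student_logits):
        raise ValueError("teacher and student vocabularies differ; KL is undefined")
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


print(kl_distillation_loss([2.0, 1.0, 0.1], [1.5, 1.2, 0.3]))  # small positive number
```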

In fact, all frontier AI labs have larger models behind closed doors that are smarter than the ones they release to the public; the public ones are distilled (and thus, viable to serve) versions of these.

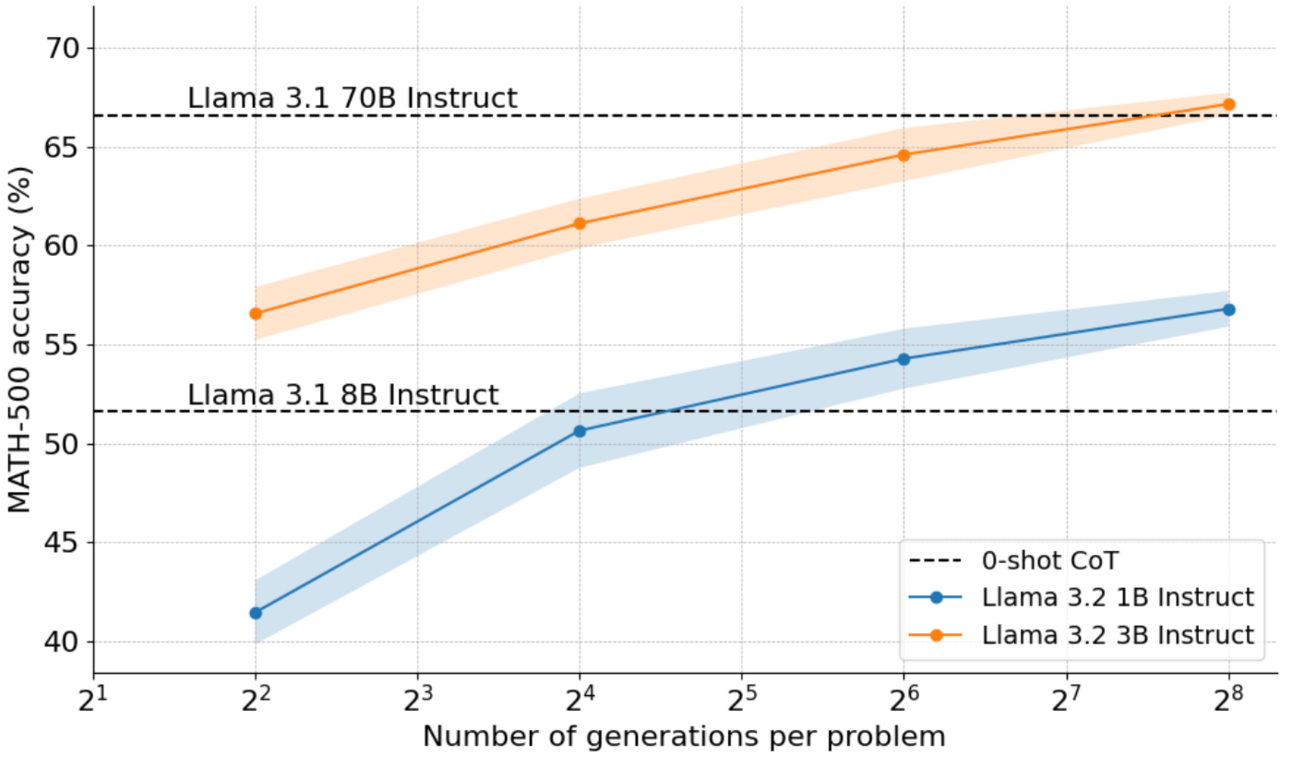

Smaller models running for longer match the performance of larger models despite the considerable size difference. Source: HuggingFace

What all this means is that, although we can close the gap between smaller and larger models using test-time compute, if we match test-time compute, the larger model always wins, so we naturally want models to keep growing.
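The “larger model always wins” point can be illustrated with the simplest form of test-time compute: sampling several answers and keeping a correct one. A sketch under strong simplifying assumptions (independent samples, a perfect verifier, and made-up per-sample accuracies for a hypothetical small and large model):

```python
def solve_rate(p_single: float, num_samples: int) -> float:
    """Probability that at least one of n independent samples is correct.

    Assumes a perfect verifier picks out the correct sample if one exists.
    """
    return 1 - (1 - p_single) ** num_samples


P_SMALL, P_LARGE = 0.3, 0.5  # hypothetical single-attempt accuracies

print(f"small model, 4 samples: {solve_rate(P_SMALL, 4):.2f}")
print(f"large model, 1 sample:  {solve_rate(P_LARGE, 1):.2f}")
print(f"large model, 4 samples: {solve_rate(P_LARGE, 4):.2f}")
```

With these made-up numbers, the small model sampling four times (about 0.76) overtakes the large model’s single attempt (0.5), but once the large model gets the same four samples it pulls ahead again (about 0.94).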

Importantly, the fact that larger models are simply better makes distillation an incredibly powerful weapon for these labs.

But why?

In short, distillation helps smaller models learn priors that the larger model has acquired but that they wouldn’t have learned otherwise due to size constraints. DeepSeek’s R1 distillations provide undeniable proof of this.

Read Notion article {🖋️ Distillation vs Fine-tuning} to see how DeepSeek proved that distillation outperforms fine-tuning, implying that most AI products you receive will continue to be distillations.

No, Chinese Models Aren’t Cheaper

This is a funny one. If there’s something pretty much everyone agrees on today, it's that DeepSeek’s main takeaway is that it offers similar quality but is way cheaper than Western models, right?

Well… wrong.

People jumped to that conclusion because they compared DeepSeek’s API costs and free app access with OpenAI’s $200/month o1-pro subscription. And that’s probably the most brain-dead comparison ever made; it’s ignorant at best and dishonest at worst.

For starters, those two tiers aren’t even comparable. If anything, we should compare R1 to OpenAI’s o1, as o1-pro, at the highest-priced tier, performs considerably better than R1.

Even if we compare R1 to o1, R1 is still considerably inferior if we measure intelligence relative to benchmark overfitting, as research by Jenia Jitsev shows. And it’s not even close. In layman’s terms, o1 is more robust to prompt variations, suggesting that R1 relies more on memorization than on reasoning compared to o1.

For more on that, read the Notion article {🍏 Why R1 is not as bright as o1}.

Additionally, OpenAI offers reasoning models on ChatGPT’s free tier, too. Yes, OpenAI caps the number of calls to its reasoning models (even in paid tiers), while DeepSeek doesn’t. But this makes total economic sense, considering that ChatGPT is leading the charge. It’s DeepSeek that has to make short-term sacrifices (like eating the enormous costs of serving its models through the app) to gain user traction.

Still, in an apples-to-apples comparison, DeepSeek is considerably cheaper, at $0.14 per million input tokens with a cache hit ($0.55 without) and $2.19 per million output tokens, versus OpenAI’s $7.50, $15, and $60, respectively. But we are still talking about a superior model from the leading company in the space.

‘Cache hits’ refer to prompt caching, a method that allows model providers to serve models much more cheaply. For more information on what that means and how they do it, read Notion article {💸 Prompt Caching}.

However, when comparing R1 to o1-mini, which appears to be a fairer comparison (again, framing model intelligence relative to benchmark overfitting, as mentioned earlier), the comparison is not so sexy anymore, as OpenAI’s prices fall to $0.55 and $1.10 per million input tokens (cache hit vs. no hit) and $4.40 per million output tokens.
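To make the price comparison concrete, here is a small calculator using the per-million-token prices quoted above (prices change often) on a hypothetical workload of 1M input tokens, half of them cache hits, and 1M output tokens:

```python
# USD per million tokens, as quoted in the text (prices change often).
PRICES = {
    "deepseek-r1": {"input_hit": 0.14, "input_miss": 0.55, "output": 2.19},
    "o1":          {"input_hit": 7.50, "input_miss": 15.00, "output": 60.00},
    "o1-mini":     {"input_hit": 0.55, "input_miss": 1.10, "output": 4.40},
}


def workload_cost(model: str, input_tokens: int, output_tokens: int,
                  cache_hit_rate: float) -> float:
    """Dollar cost of a workload, splitting input tokens into cached and uncached."""
    p = PRICES[model]
    hit = input_tokens * cache_hit_rate
    miss = input_tokens - hit
    per_million = 1_000_000
    return (hit * p["input_hit"] + miss * p["input_miss"]
            + output_tokens * p["output"]) / per_million


# Hypothetical workload: 1M input tokens (half cached) and 1M output tokens.
for name in PRICES:
    print(f"{name:12s} ${workload_cost(name, 1_000_000, 1_000_000, 0.5):.2f}")
```

On this workload, R1 costs about $2.5, o1-mini about $5.2, and o1 about $71. Against o1-mini, the gap is roughly 2x, not the orders of magnitude implied by comparisons against o1-pro.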

Furthermore, consider OpenAI’s new flagship model, o3-mini: a smaller model, but one comparable to o1. If R1 sits at the o1-mini level, o3-mini should be clearly superior to it, and this is where OpenAI’s pricing shines: it lands in the same range as o1-mini and R1 while (again, allegedly) offering the best performance available.

But the best thing is that if the comparison between the West and China had been honest, DeepSeek would actually be on the losing side.

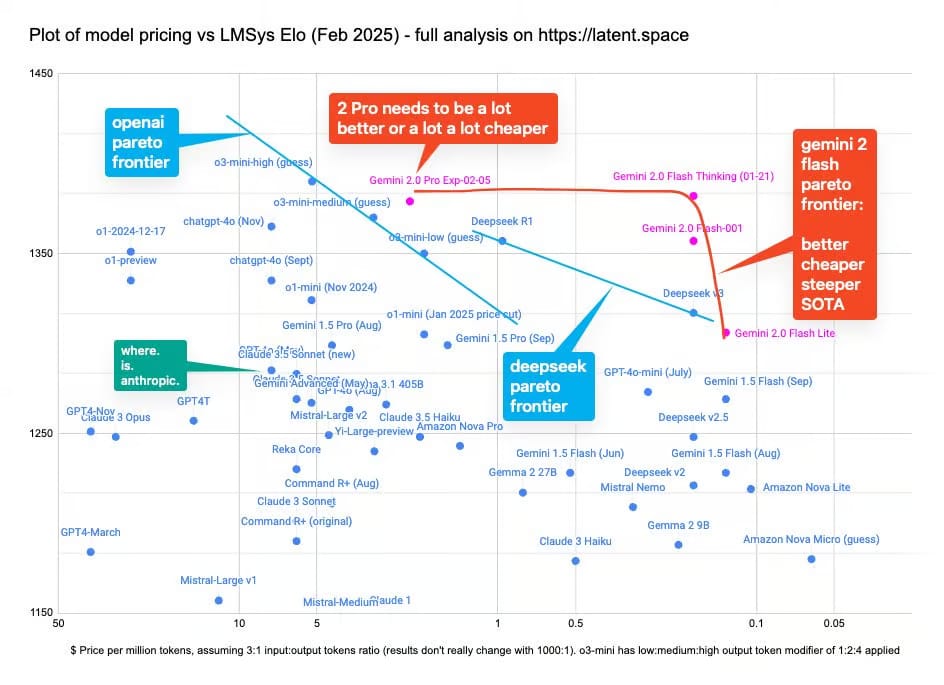

If the comparison had been made correctly, the cheapest model in the world today would be American: Google’s Gemini 2.0 Flash/Flash-Lite models. As the graph shows, if we compare model performance relative to price per million tokens, Google’s models blow everyone, including DeepSeek, out of the water.
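The Pareto-frontier argument can be made precise: a model sits on the frontier if no other model is both at least as cheap and at least as good. A minimal sketch with placeholder numbers (the model names and values are hypothetical, not the data behind the graph):

```python
from typing import NamedTuple


class Model(NamedTuple):
    name: str
    price: float   # USD per million tokens (lower is better)
    score: float   # benchmark score (higher is better)


def pareto_frontier(models: list[Model]) -> list[Model]:
    """Return models not dominated by any other model.

    A model is dominated if another one is at least as cheap and at least
    as good, and strictly better on one of the two axes.
    """
    def dominates(a: Model, b: Model) -> bool:
        return (a.price <= b.price and a.score >= b.score
                and (a.price < b.price or a.score > b.score))

    frontier = [m for m in models if not any(dominates(o, m) for o in models)]
    return sorted(frontier, key=lambda m: m.price)


# Hypothetical placeholder numbers, NOT real prices or benchmark results.
candidates = [
    Model("model_a", price=0.4, score=70),
    Model("model_b", price=2.2, score=68),
    Model("model_c", price=4.4, score=80),
    Model("model_d", price=60.0, score=85),
]
print([m.name for m in pareto_frontier(candidates)])
```

Here model_b drops off the frontier because model_a is both cheaper and better; that is the sense in which a model can be “cheap” and still be beaten on cost efficiency.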

Long story short, DeepSeek’s revolution is not what it has been made out to be.

No, We Have Not Figured Out the ‘I’ in AI.

The next fallacy is the idea that, thanks to DeepSeek’s amazingly scalable RL algorithm and the emergence of Deep Research tools like OpenAI’s and Google’s, AGI is basically a given by now.

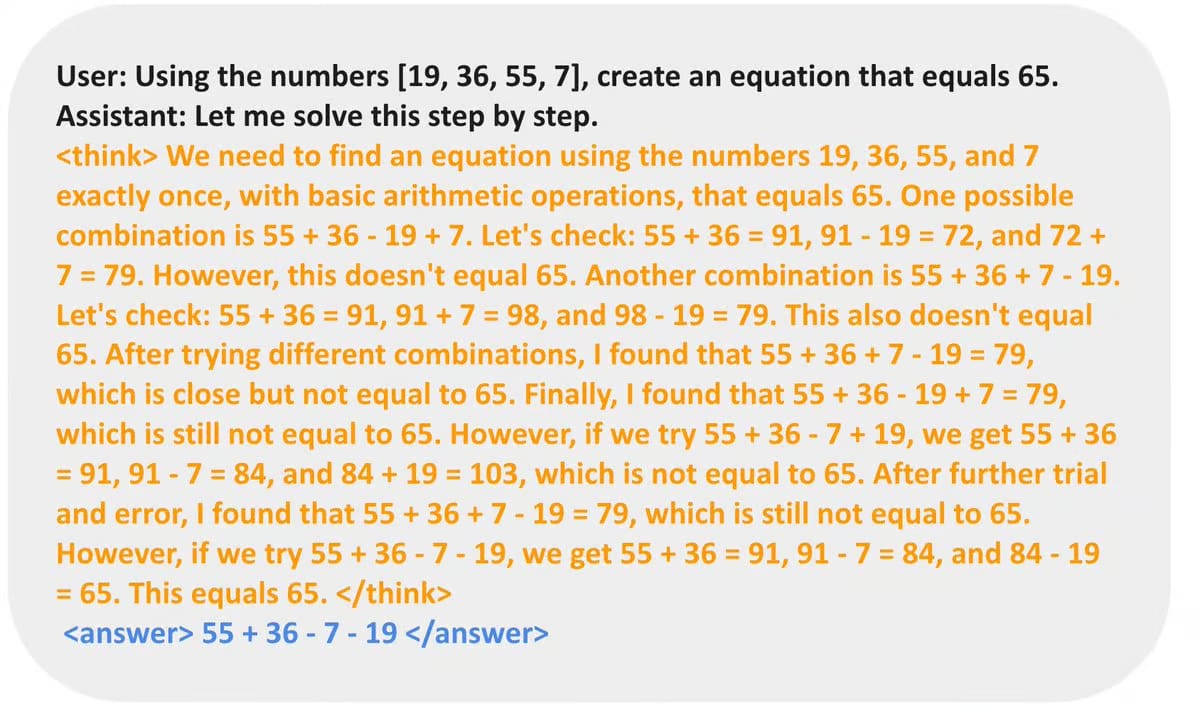

Well, that’s embarrassingly false again. But first, we must give credit where credit is due: DeepSeek’s GRPO algorithm has been tested and proven to be ‘the real deal’ several times by now:

For instance, a group of UC Berkeley researchers taught a small model to achieve human-level performance in a counting game with just $30 in fine-tuning, news I discussed recently.

Yes, we have proven that models can learn to reason (or at least mimic reasoning) and, crucially, that they can learn by themselves, without humans having to teach them the principles of reasoning. This makes the entire process much more sample-efficient and cheaper.
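Part of what makes GRPO (Group Relative Policy Optimization, introduced by DeepSeek in its DeepSeekMath work) cheap is how it computes advantages: instead of training a separate value model, it samples a group of answers per prompt and scores each one relative to the group. A minimal sketch of just that step (the full objective also includes a clipped policy ratio and a KL penalty, omitted here; using the population standard deviation is an assumption):

```python
import statistics


def group_relative_advantages(rewards: list[float], eps: float = 1e-6) -> list[float]:
    """GRPO-style advantages: score each sampled answer against its own group.

    For one prompt, the policy samples a group of answers; each answer's
    advantage is its reward minus the group mean, divided by the group's
    standard deviation. No learned value model (critic) is needed.
    """
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]


# Hypothetical group of 4 answers to one prompt, rewarded 1 if correct, else 0.
print(group_relative_advantages([1.0, 0.0, 0.0, 1.0]))
```

Correct answers get a positive advantage and incorrect ones a negative one, so the policy is pushed toward whatever its own better samples did, with no human demonstration of how to reason.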

Undoubtedly, GRPO is a net positive for the space. Furthermore, the Deep Research products presented by both OpenAI and Google, which let these models search the Internet for extended periods and amass hundreds of sources to produce a detailed report on any topic you suggest, have caused considerable panic, to the point that some claim these models are as smart as PhDs and that this is the beginning of the end for McKinsey.

But this is just a massively overblown fallacy.

No, these models aren’t as smart as a PhD and won’t kill McKinsey. These tools will certainly democratize access to detailed reports that would otherwise take weeks of work and cost millions of dollars in fees from a professional services firm like McKinsey. But that’s not because AI is as bright as McKinsey consultants; it’s simply that the days of paying huge fees for basic data and trend analysis are over.

Instead, McKinsey will simply evolve its offering. And, to be clear, people proclaiming the death of McKinsey misunderstand the whole point of hiring McKinsey in the first place. McKinsey is a “hircus expiatorius,” or scapegoat: it is in the business of accountability as a service, there to take the blame if the CEO’s strategy fails.

And the reason some “experts” are allowed to make such stupid claims is because, once again, we are confusing intelligence with memorization.

In other words, we are framing PhDs as people who know a lot about a subject and nothing more. Of course, as AIs can compress the entire Internet (they know everything) and can surf the Internet for more context at blazing-fast speeds, yes, the value of human knowledge as a unit of business is rapidly falling.

But that’s not AGI or a fair representation of PhDs, come on. AGI is when AIs match our intelligence, not our memory; they are two totally different things.

And the best proof is that the very same models that can solve PhD-level math and physics problems can’t tie a shoelace or execute more than 10 steps without making a stupid mistake.

With AI, we continuously encounter a weird dichotomy: These models can solve incredibly complex problems while struggling to solve basic ones. The crux of the issue is that we evaluate them in an entirely wrong way: We evaluate their intelligence based on solved-task complexity; the harder the problems they can solve, the smarter the model has to be.

It seems logical, but it’s the wrong framing. Almost no math, physics, or other scientific problem, for that matter, is complex enough to be impossible to memorize. It’s not that solving hard problems isn’t impressive; it’s that we can’t tell whether the outcome was driven by real intelligence and reasoning or by memorization.

And that’s precisely the point I’m trying to make: we have yet to see a frontier AI model prove its capability to reason or ‘act intelligently’ in areas it isn’t at least mildly aware of.

Put more clearly, if the model has not been trained on the subject, at least indirectly, it can’t solve it.

In other words, it can solve some tasks it has not been trained on because they are similar to those it saw during training. Thus, it can use its knowledge and experiences to figure things out.

But if we truly test them on “unknown unknowns,” they fail. With o3, we saw what could have been that “Eureka moment,” based on the model’s performance on the ARC-AGI and FrontierMath benchmarks. However, it later emerged that OpenAI had access to benchmark data in both cases: o3 was trained on ARC-AGI’s public training set, and OpenAI had access to much of the FrontierMath dataset.

So, while o3 is unequivocally an improvement in reasoning over “known knowns” (extremely valuable regardless), it’s by no means proof of real intelligence: the human ability to survive or make the right decision in situations where no past experience or data is available. As Jean Piaget once said, “Intelligence is what you use when you don’t know what to do.”

And in that framing, AIs are still not truly intelligent.

On a final note, and to make matters worse, the inference-time scaling paradigm driving all the recent growth and interest only works in verifiable domains: areas like math, coding, or other STEM subjects where a task’s output can be verified as right or wrong.

Neither o3, R1, nor any other reasoning model represents a breakthrough in non-STEM tasks like creative writing.
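“Verifiable” has a concrete meaning in RL training: the reward can be computed by a rule rather than a judgment. A hypothetical sketch of such a rule-based reward for math answers (the function names and the last-number extraction heuristic are illustrative assumptions, not any lab’s actual grader):

```python
import re
from typing import Optional


def extract_final_number(text: str) -> Optional[str]:
    """Return the last number that appears in a model's answer, if any."""
    matches = re.findall(r"-?\d+(?:\.\d+)?", text)
    return matches[-1] if matches else None


def verifiable_reward(model_output: str, reference_answer: str) -> float:
    """Rule-based reward: 1.0 if the final answer matches the reference, else 0.0.

    This works for math-style tasks with a single checkable answer. There is
    no equivalent rule for judging, say, the quality of a short story.
    """
    answer = extract_final_number(model_output)
    if answer is None:
        return 0.0
    return 1.0 if float(answer) == float(reference_answer) else 0.0


print(verifiable_reward("3 + 4 = 7, so the answer is 7", "7"))  # 1.0
print(verifiable_reward("I think the answer is 12", "7"))       # 0.0
```

Nothing comparable exists for creative writing, where there is no reference answer to check against, which is why this training recipe transfers poorly there.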

So, what are the hard truths we need to digest in AI?

The Hard Truths & The Unanswered Questions

Yes, Spending Will Accelerate

Profit pressures aside, there’s simply no reason not to keep investing heavily in compute, data, and larger models. Even DeepSeek, in its highly constrained environment due to GPU export restrictions, openly acknowledges the need to grow data and, above all, as we’ve seen today, model size and compute.

DeepSeek has unequivocally proven that AI labs are very inefficient in training/serving intelligence, but they aren’t, by any means, suggesting that these labs need to cut costs in terms of compute (both training and inference).

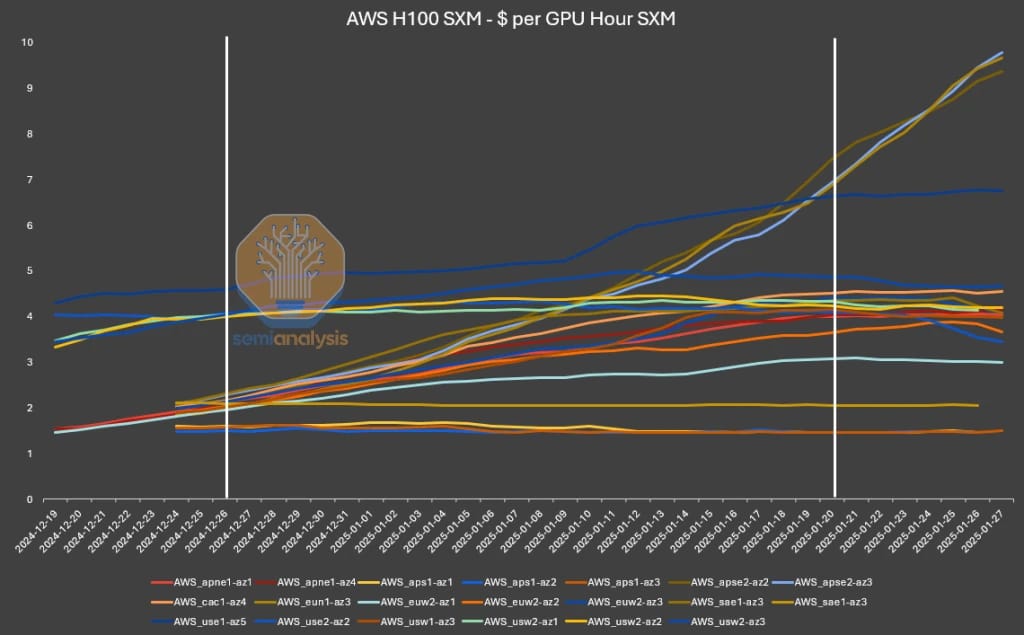

In fact, if you thought DeepSeek’s results would lead to a decrease in overall compute consumption, you are verifiably wrong, both historically (the Jevons Paradox) and in terms of GPU prices: as the chart shows, if anything, DeepSeek’s results have increased demand for NVIDIA H100 GPUs.
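The Jevons Paradox fits in one line of algebra. Assume (hypothetically) that the price of an AI task is proportional to the compute it needs and that demand for tasks has constant elasticity ε. Then total compute consumed scales as (compute per task)^(1 − ε), so efficiency gains increase total consumption whenever ε > 1:

```python
def total_compute(compute_per_task: float, elasticity: float, scale: float = 100.0) -> float:
    """Total compute consumed under a constant-elasticity demand curve.

    Assumes the price of a task is proportional to the compute it needs, and
    that tasks demanded = scale * compute_per_task**(-elasticity).
    """
    tasks = scale * compute_per_task ** (-elasticity)
    return tasks * compute_per_task


# Hypothetical elasticities; tasks become 10x more compute-efficient (1.0 -> 0.1).
for eps in (0.5, 1.5):
    before, after = total_compute(1.0, eps), total_compute(0.1, eps)
    print(f"elasticity {eps}: total compute {before:.0f} -> {after:.0f}")
```

With elasticity 0.5, a 10x efficiency gain cuts total compute to about a third; with elasticity 1.5, it roughly triples it.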

Yes, Training Runs Will Continue to Grow

Markets hate ambiguity and feel a desperate need to jump to conclusions. Therefore, if China implies cost-cutting, markets conclude that China is calling bullshit on everyone and everything.

Well, wrong.

Sure, we are figuring out ways to serve models more efficiently, like mixture-of-experts, but AI models are still growing in size to accommodate more data (again, the more data a model has to learn, the larger it has to be, based on the Universal Approximation Theorem, or UAT).

In fact, DeepSeek’s models are larger than Meta’s Llama 3 models!

This is another great reason why DeepSeek’s results were never bearish for NVIDIA; they are quite literally acknowledging the importance of larger models and larger training runs.

Yes, More Intelligence Is Wanted, but Not at Any Cost

I’ve been championing the idea of “intelligence efficiency” for quite some time: the idea that new intelligence breakthroughs aren’t justifiable if the economics of reaching them don’t add up.

The markets have spoken, and they are tired of valuing trillion-dollar companies based on numbers from a benchmark table; they want to see AI deliver actual value.

Hence, the only change we will see in 2025 is that cost and adoption rates finally matter. Consequently, AI products will be shipped considerably faster and will be more affordable.

As the tide shifts from raw power to affordability, I don’t think we will see step-function improvements in raw intelligence. Instead, we will see a considerable improvement in “intelligence per watt-hour”: the number of tokens these models generate, and their quality, per unit of energy spent should grow substantially.

This can be done in two ways: decreasing the cost per token (more tokens per task means more “thought” on the task, which means better results) or increasing the amount of “intelligence” the model deploys per generated token. In layman’s terms, be less wordy and more insightful per generated token.
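“Intelligence per watt-hour” isn’t a standard metric, so here is one hypothetical way to operationalize it: correct answers per watt-hour spent. The sketch below (every number is made up) shows how both levers described above move it:

```python
def solved_tasks_per_wh(success_rate: float, tokens_per_task: float,
                        wh_per_token: float) -> float:
    """Toy proxy for "intelligence per watt-hour": correct answers per Wh spent."""
    return success_rate / (tokens_per_task * wh_per_token)


# Hypothetical baseline: 60% success, 2,000 tokens per task, 0.001 Wh per token.
baseline = solved_tasks_per_wh(0.60, 2_000, 0.001)

# Lever 1: tokens get 2x cheaper, so the model can think 2x longer for the same
# energy; assume that lifts success to 70%.
cheaper_tokens = solved_tasks_per_wh(0.70, 4_000, 0.0005)

# Lever 2: same token cost, but the model reaches 60% with half the tokens.
denser_tokens = solved_tasks_per_wh(0.60, 1_000, 0.001)

print(f"baseline {baseline:.2f}, cheaper tokens {cheaper_tokens:.2f}, "
      f"denser tokens {denser_tokens:.2f}")
```

Under these made-up numbers, cheaper tokens raise the metric from 0.30 to 0.35 solved tasks per Wh, and denser tokens raise it to 0.60.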

Finally, here are my hot takes.

The Hot Takes

Will we finally see the death of the Transformer?

If intelligence efficiency becomes a key narrative driver, we must seriously question the Transformer’s supremacy. While nothing has come close to matching the Transformer’s expressiveness in raw intelligence, if we look at the Pareto frontier, other architectures, like state-space models (SSMs) such as Mamba, could deliver more intelligence per dollar.

Therefore, I predict that research on alternatives to Transformers will be as hot as ever, as the goal is to find the architecture that strikes the perfect balance between intelligence and cost. Again, the markets have spoken: they prefer 80% of the intelligence for 20% of the cost over 100% of the intelligence at 100% of the cost.

And that’s a sign of growing weakness for the Transformer.
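The efficiency argument for state-space models comes down to how they process sequences: a fixed-size state is updated once per token, instead of every token attending to every previous one. A minimal sketch of a scalar, time-invariant linear SSM (a simplification: Mamba’s “selective” SSMs make the parameters depend on the input, and real models use vector states), next to a count of the query-key pairs causal attention scores:

```python
def linear_ssm_scan(inputs: list[float], a: float, b: float, c: float) -> list[float]:
    """Scalar linear state-space recurrence: h_t = a*h_{t-1} + b*x_t, y_t = c*h_t.

    Each step touches only the fixed-size state h, so processing n tokens
    costs O(n) time and O(1) memory for the state.
    """
    h = 0.0
    outputs = []
    for x in inputs:
        h = a * h + b * x
        outputs.append(c * h)
    return outputs


def attention_pair_count(n: int) -> int:
    """Query-key pairs scored by causal self-attention over n tokens: O(n^2)."""
    return n * (n + 1) // 2


print(linear_ssm_scan([1.0, 0.0, 0.0, 0.0], a=0.5, b=1.0, c=1.0))
for n in (1_000, 10_000, 100_000):
    print(f"n={n:>7,}: SSM steps {n:>7,}, attention pairs {attention_pair_count(n):,}")
```

At 100,000 tokens, the recurrence takes 100,000 steps while attention scores about 5 billion pairs, which is the cost gap behind the Pareto argument.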

Will we see test-time training models as products?

Reasoning over “unknown unknowns,” solving tasks in extremely unfamiliar situations, requires two things: feedback and adaptation. In other words, if an AI model is unfamiliar with the task, we need to allow it to explore, receive feedback, and learn from that feedback.

Test-time training, the ability of models to adapt their weights in real time to learn from new data, appears fundamentally necessary for such an endeavor. The idea generated considerable interest until DeepSeek emerged, so I expect research to renew its focus on it.

Could we see an AI model that learns in real-time in 2025?

I definitely think so. However, markets aren’t really asking for it right now; they don’t really need AI to be truly intelligent for the moment; they are just happy with it being usefully affordable, which has not been the case until now.

It’s on the map of these companies for sure, just not a priority for now.

Closing thoughts

I hope that, if you’re reading this, you now have a clearer picture of the industry. If not, here’s a summary:

We have been led to believe that incumbents are overspending. They are not; they simply weren’t focusing on efficiency, and markets have reprimanded them. But aren’t these two things the same? No, because spending as much as possible is the correct move, just not to increase intelligence for its own sake. Instead, this accelerated spending should focus on improving serving efficiency, and it will. Credit cards should still be “going off,” but in a different way.

We have been told that AI is getting smaller. Yes, SLMs are improving, and inference-time computation is a fantastic way to increase small-model intelligence. But as DeepSeek proves, we still need larger models to achieve higher intelligence.

You’ve been told that DeepSeek is cheaper than all Western companies. Blatantly false. If we compare models correctly, the numbers are much closer to OpenAI’s than they seem, and DeepSeek’s models are more expensive than Google’s Flash models and sit behind them on the Pareto frontier. If anything, this speaks more to Google’s PR problems than to the Chinese lab’s prowess.

You’ve been told AI is solved and that all we need to do is scale. The reality is still no—or unclear, at best. AIs are still bound to their data; as long as the data is familiar, they have a chance. Whether RL is the solution to this constraint is still to be proven.

All things considered, we can best summarise the entire evolution of the industry in the past few weeks in one sentence:

AI is pointless if it can’t be adopted, so increased performance without profits will no longer be rewarded; markets want outcomes, and outcomes need larger spend.

THEWHITEBOX

Premium

If you like this content, by joining Premium, you will receive four times as much content weekly without saturating your inbox. You will even be able to ask the questions you need answers to.

Until next time!


For business inquiries, reach out to me at [email protected]