THEWHITEBOX

TL;DR

Welcome back! This week, the release of the US’s great AI action plan, a concerning issue with reasoning models, and a wide range of market and product news, including Netflix’s Generative AI bet, China’s GPU black market, and the new fastest-growing AI startup, which might hold more questions than answers.

REGULATION

The US Gov Unleashes America’s AI Action Plan

Finally, the US Government has revealed its action plan, and the reception has been mainly positive.

Three strategic pillars frame the plan’s vision for US AI leadership: Accelerate AI Innovation, Build American AI Infrastructure, and Lead in International AI Diplomacy and Security.

Accelerate AI Innovation by cutting regulatory red tape, promoting open-source and open-weight models, establishing domain-specific sandboxes and Centers of Excellence, boosting AI literacy and worker retraining, and investing in AI-enabled science, interpretability, and evaluation ecosystems.

Build American AI Infrastructure, by facilitating permits for data centers, chip factories, and energy projects. They also want to modernize the power grid, revitalize domestic semiconductor manufacturing, create high-security AI data centers (whatever that means), and train the technical workforce while fortifying cybersecurity.

Lead in International AI Diplomacy and Security by exporting the full American AI stack to allies, countering authoritarian influence in global AI standards bodies, tightening export controls on advanced compute and semiconductor technologies, and aligning protection measures with partners while rigorously evaluating frontier models for national-security risks.

From a more political perspective, they aim to ensure that AI systems respect free speech and American values, remain ideologically neutral (no way), protect intellectual property, and guard against misuse (especially in defense, law enforcement, and biosecurity contexts).

Of course, the overarching mission is to guarantee the US’s lead in AI.

TheWhiteBox’s takeaway:

A lot of buzzwords, that’s for sure. But the plan can be summarized in just a few things:

Less regulation, especially when it comes to allowing the deployment of more energy and data centers,

higher intended support for academia and research institutes,

and open support for open-source.

In a nutshell, all good decisions, at least on paper. But as the Spanish saying goes, ‘paper withstands anything,’ meaning you can just say things, but you must also ‘walk the talk.’

Overall, the plan appears well-intentioned and has several strong points, particularly regarding open-source and open-weight models, both of which have lost significant ground at the frontier in the US.

As I was saying, my main concern is whether this is just empty words, because the reality in the US couldn’t be further from what this plan describes. Out of all this, the only tangible thing we have as of right now is the NAIRR Pilot, which aims to connect researchers to data and compute. Everything else is just words and unsubstantiated good intentions.

And if Spanish politicians have taught me anything about politics, it is that their word can’t be trusted. Sadly, the reality on the ground is grim, so the US government had better be willing to back its words.

Another positive is the acknowledgement that power buildouts in the US are slow and painful. Bureaucracy makes deploying energy a nightmare, and the necessary electric equipment is severely supply-constrained (electrical transformers have lead times beyond 100 weeks, sometimes reaching 200 weeks, although recent numbers appear to average the former).

With all that said, this plan fails to acknowledge the hard truth: this is no longer about extending the US’s huge lead, but about regaining the ground lost to China.

China is in the lead now in terms of overall impact on the industry.

The best open-source non-reasoning model? Chinese, Kimi K2/Qwen 3.

Best open-source reasoning model? DeepSeek R1, with two new supermodels having been released by Alibaba just hours ago, and StepFun (more on both below)

Best open-source coding model? Qwen 3 Coder, released yesterday.

Although I say 'open-source,' I actually mean 'open-weights,' as most of these models do not release their training datasets.

Put simply, China owns the Pareto frontier, with the exception of Google. Except for the best of the best, which continues to be the o3/Gemini 2.5 Pro/Grok 4 trifecta, the best option in every other situation, especially when you are cash-constrained, is Chinese and Chinese only.

What this means is that, for any use case that requires meaningful investment, the hard reality is that your best options are Chinese. If the US is truly worried that, in their words, “the ‘US Generative AI stack’ becomes the primary option globally,” the current strategy is sure as hell not working.

And the issue is that US AI is almost entirely private-driven, meaning that profits matter more than anything else. In that scenario, with companies burning billions by the month, they don’t have time to release open-source stuff.

Thus, in my opinion, the US Government must provide financial support to US Labs to incentivize open-source releases; it’s clear that the US government has to step in here.

But one may wonder, why are Chinese companies open-sourcing everything? Simply put, the private sector is leading the way in the US (and the West in general), while all Chinese Labs are state-controlled.

And as it has been known for decades, the CCP isn’t worried about profits when the technology matters.

To China, this isn’t about making Marc Andreessen or Ben Horowitz richer; it’s about winning.

Thus, these labs are being weaponized by the CCP to make the lives of the US Labs much harder, forcing a race to the bottom in prices.

FRONTIER RESEARCH

Inverse-Scaling in Test-Time Compute

Anthropic has published quite revealing research about a concerning trend they are witnessing with reasoning models, which they describe as ‘inverse scaling in test-time compute.’

In controlled evaluations across tasks such as distractor-ridden counting, regression with misleading features, complex deductive problems (all basically examples to test whether we can fool the AI), they found that increasing reasoning time (longer chain-of-thought) actually caused performance to deteriorate, not improve.

This is the first objective evidence of a visible negative consequence of test-time compute: on these tasks, increasing the amount of compute the model allocates to a given task decreases performance.

Their experiments showed distinct error modes in leading models:

Claude variants became more easily misled by irrelevant inputs the more they ruminated,

OpenAI’s o‑series models tended to overfit to problem framing. In regression setups, longer inference led to shifts from reasonable priors toward spurious correlations, although mitigation via exemplar-based prompts partially corrected the trend. In layman’s terms, while OpenAI models seem to have a very clear framing for problem-solving, they can be led into irrelevant conclusions by the prompt itself.

OpenAI’s case might be hard to grasp, so let’s look at a quick example:

If the prompt is straightforward, like “A student, Sarah, got 90 out of 100 on a test. What’s the score percentage-wise?”

The model will answer “90%.”

But if we send it a more elaborate prompt, sharing too much irrelevant information, the model gets ‘lost in the sauce.’ For example:

“A teacher recorded test scores from 50 students. The scores ranged from 45 to 98. One student, Sarah, got a score of 90. In past years, students who scored around 90 typically ended up in the top 5% of the class. If we assume this pattern continues, what can we conclude about Sarah’s performance?”

To which the model answers, “Sarah is likely in the top 5% of her class, and her percentile ranking might be around 95%.”

In other words, with the added information, the model doesn’t immediately identify the pattern and enters ‘reasoning mode,’ where it attempts to reason out the problem’s solution, but by doing so, it loses the plot completely.

Another issue researchers outlined with long reasoning chains is that they tend to lead models into dangerous behaviors, such as self-preservation (the tendency to resist being disconnected even when asked by the user).

But why does this happen? This can be explained from the perspective of model training.

Reasoning models are trained via reinforcement learning, where models are given a specific goal to achieve. Here, the model is less concerned with outputting the best possible word every time than with doing whatever it takes to reach the final answer.

When trained this way, models develop, for lack of a better term, an “obsession” with achieving the goal. In that scenario, asking a model to stop leads to a, again for lack of a better term, “desire” not to be stopped, because stopping prevents it from achieving the goal. In a way, models have been indirectly trained to have self-preservation.

This isn’t me anthropomorphizing the AI model; it’s simply a consequence of training it to achieve its goals, regardless of the circumstances.

TheWhiteBox’s takeaway:

The lessons here are two:

We have zero knowledge of how these models work internally; they remain black boxes with unpredictable behavior (or loss of performance), which emphasizes the need for monitoring layers (where other models review contents). This practice is widely implemented by the labs themselves (although invisible to the user), with examples such as ChatGPT Agent, which has a reviewing model that cancels trajectories if they enter risky territory. However, the key takeaway is that enterprises should also implement their own monitoring layers.

There is no single model that fits everything. This isn’t only a matter of cost (reasoning models are more expensive); reasoning models can also perform worse if you put them in front of tasks that do not benefit from test-time compute.

Be smart about choosing your model for the task. Test, verify, and decide.
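To make "test, verify, and decide" concrete, here is a minimal sketch of the kind of check I have in mind: score the same labeled prompts at several reasoning budgets and keep the smallest budget that reaches the best accuracy. Everything here is an assumption for illustration: the `ModelFn` signature, the budget values, and especially `toy_model`, which is a hard-coded stand-in that mimics the "lost in the sauce" failure and is not a real model or API.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical signature: (prompt, reasoning_budget_in_tokens) -> answer string.
ModelFn = Callable[[str, int], str]


def accuracy_by_budget(
    model: ModelFn, cases: List[Tuple[str, str]], budgets: List[int]
) -> Dict[int, float]:
    """Return the fraction of exact-match answers for each reasoning budget."""
    results: Dict[int, float] = {}
    for budget in budgets:
        correct = sum(
            1 for prompt, expected in cases if model(prompt, budget).strip() == expected
        )
        results[budget] = correct / len(cases)
    return results


def pick_budget(scores: Dict[int, float]) -> int:
    """Pick the smallest budget that achieves the best observed accuracy."""
    best = max(scores.values())
    return min(budget for budget, score in scores.items() if score == best)


def toy_model(prompt: str, budget: int) -> str:
    # Toy stand-in, NOT a real model: with a large budget it "overthinks"
    # prompts that contain a distractor and answers the wrong question.
    if budget > 1000 and "top 5%" in prompt:
        return "95%"
    return "90%"


CASES = [
    ("Sarah got 90 out of 100. What is her score as a percentage?", "90%"),
    (
        "Students near 90 were usually in the top 5%. Sarah got 90 out of 100. "
        "What is her score as a percentage?",
        "90%",
    ),
]

scores = accuracy_by_budget(toy_model, CASES, budgets=[0, 512, 4096])
print(scores)
print("chosen budget:", pick_budget(scores))
```

In practice you would swap `toy_model` for a call to your provider and use a much larger, task-specific test set; the point is only that the budget becomes something you measure rather than assume.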

PARETO FRONTIER

Gemini 2.5 Flash Lite Stable Version

Google’s cheapest model, the Gemini 2.5 Flash Lite, now has sufficient support to ensure stable service for production-grade deployments.

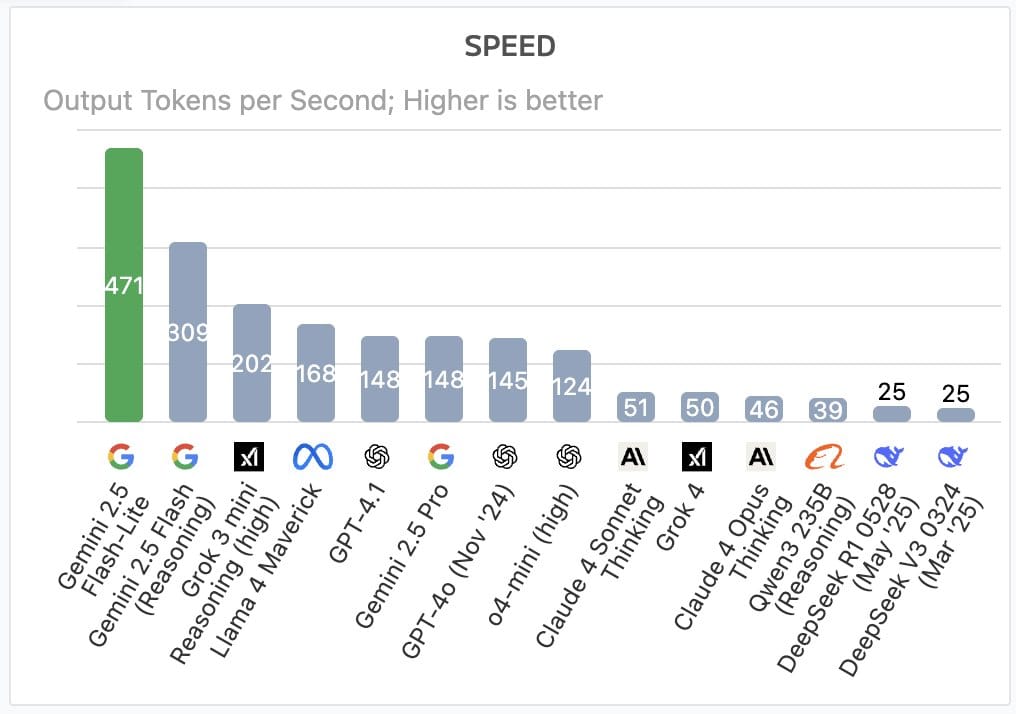

One of the fastest models on the planet, up to 9 times faster than Grok 4 or Claude 4 Sonnet, it’s not particularly smart, but for tasks that may not require too much “intelligence”, it does the job (i.e., things like translation).

Fast. And cheap.

TheWhiteBox’s takeaway:

The most attractive thing here, by far, is cost.

We are talking about a model ten times cheaper than o4-mini/Gemini 2.5 Flash. It isn’t nearly as sophisticated, but one of the key roles of an AI engineer today is to avoid overkill, i.e., using expensive models for tasks that a less expensive model can handle. Otherwise, your AI costs skyrocket, and fast.
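A rough sketch of what "avoiding overkill" can look like in code: route each task to the cheapest tier that is good enough for it. The tier names, the capability scale, and the task-to-capability mapping are all made up for illustration; the 10x gap between the first two tiers mirrors the ratio mentioned above, and the 50x figure is just a placeholder, not a real price.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Tier:
    name: str
    capability: int       # hypothetical 1-3 scale, higher is "smarter"
    relative_cost: float  # cost per request relative to the cheapest tier


# Hypothetical tiers; the numbers are placeholders, not real prices.
TIERS = [
    Tier("lite", 1, 1.0),
    Tier("standard", 2, 10.0),
    Tier("frontier", 3, 50.0),
]

# Hypothetical minimum capability each task type needs.
REQUIRED = {"translation": 1, "summarization": 2, "multi_step_reasoning": 3}


def route(task: str) -> Tier:
    """Return the cheapest tier whose capability covers the task."""
    needed = REQUIRED[task]
    eligible = [tier for tier in TIERS if tier.capability >= needed]
    return min(eligible, key=lambda tier: tier.relative_cost)


def routed_cost(tasks: List[str]) -> float:
    """Total relative cost when every task goes to its cheapest adequate tier."""
    return sum(route(task).relative_cost for task in tasks)


def flat_cost(tasks: List[str], tier: Tier) -> float:
    """Total relative cost when every task goes to the same tier."""
    return tier.relative_cost * len(tasks)


workload = ["translation"] * 90 + ["summarization"] * 10
print("routed:", routed_cost(workload))
print("all on standard:", flat_cost(workload, TIERS[1]))
```

With this made-up workload, routing costs 190 units versus 1,000 for sending everything to the mid tier. The real work, of course, is building the task-to-capability mapping from your own tests.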

CHINA

Another Week, Another Strong Chinese Model

A few days ago, we discussed the new Alibaba Qwen 3 non-reasoning release, and now they have released the ‘thinking’ version.

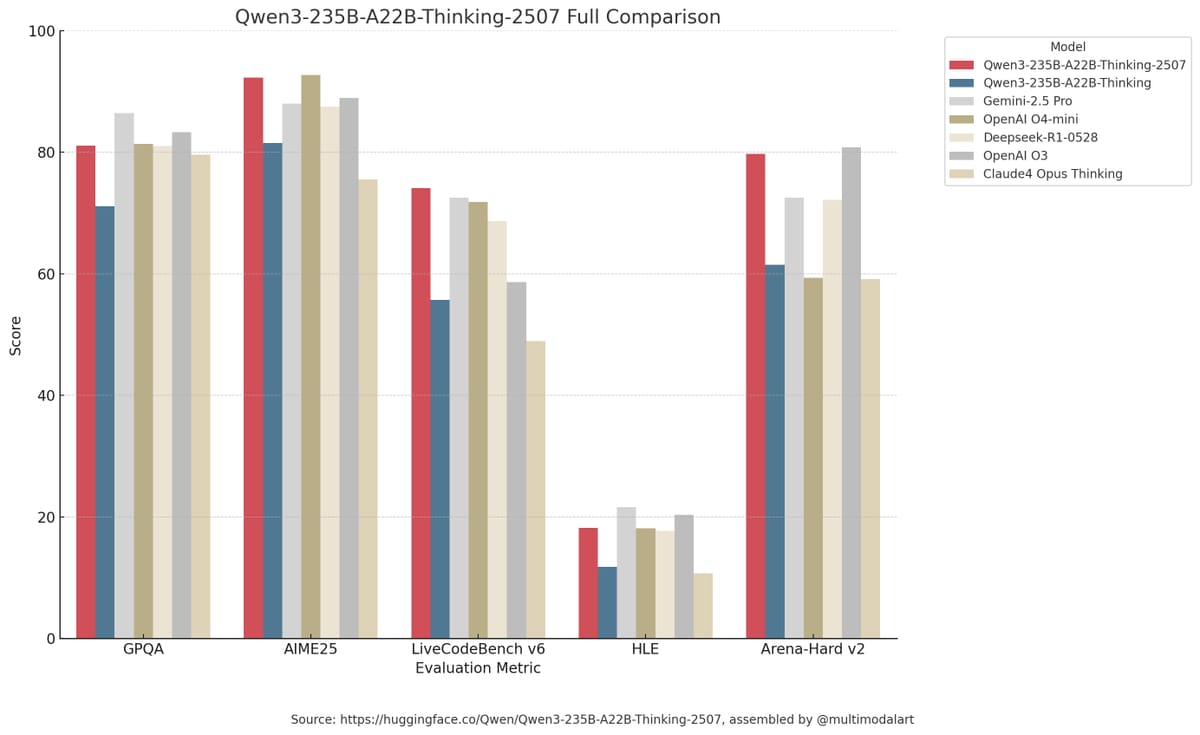

This is not just another release, as it represents the first open-source model that is competitive with the frontier models (o3/o4-mini/Gemini 2.5 Pro/Opus 4) but at far more aggressive pricing (you can even get it for free).

TheWhiteBox’s takeaway:

The timing is exquisite. While the US releases a buzz-filled action plan, China delivers the embodiment of that plan.

What is the US thinking?

CHIPS

China’s Thriving GPU Smuggling Business

The Financial Times reports that in the three months following the introduction of stringent AI chip export controls under the Trump administration, at least $1 billion worth of Nvidia’s advanced AI processors made their way from the US to China through illicit channels.

These chips, which, as you probably know, were explicitly restricted from export to Chinese buyers due to national security concerns (the Trump Administration has recently lifted restrictions for H20 chips), nevertheless ended up with Chinese firms via smuggling networks and third-party intermediaries.

Despite the policy designed to curb China’s access to cutting-edge AI hardware, enforcement appears to have been, well, debatable. As the article describes, customs loopholes and complex corporate intermediaries enabled the circumvention of the restrictions.

TheWhiteBox’s takeaway:

The story that surprised no regular reader of this newsletter.

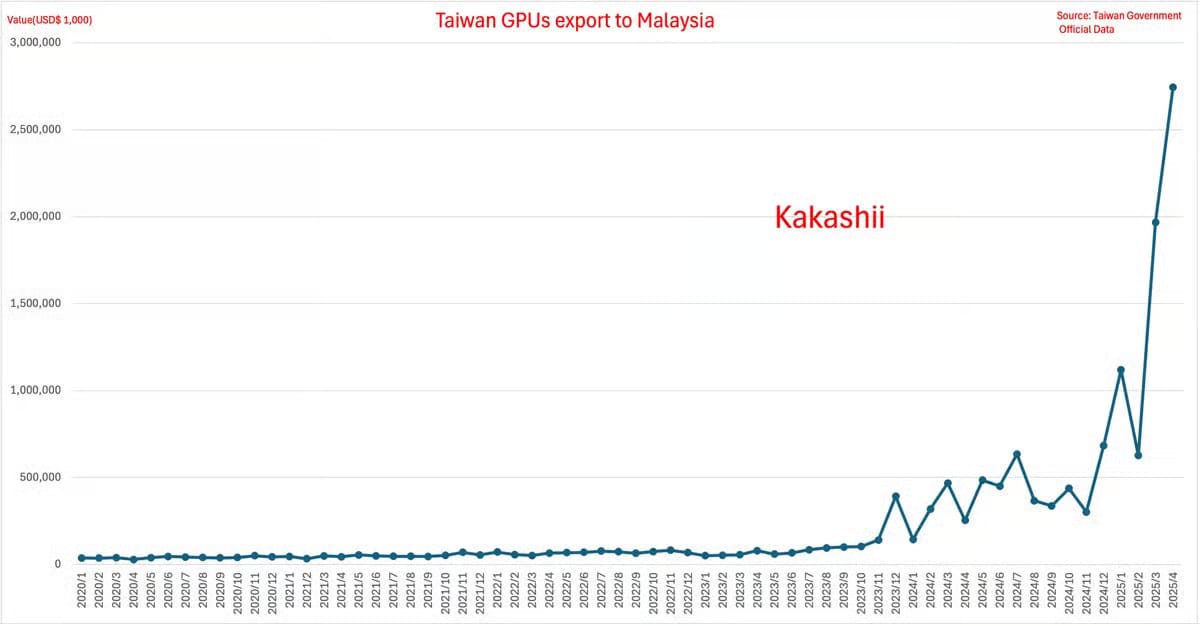

As we explained weeks ago, some countries are experiencing suspicious upticks in demand for GPUs, such as Malaysia, which has seen a remarkable increase in GPU imports from the US since late 2023, reaching over $3 billion in April 2025 alone.

This led to the tightening of export controls in these countries, but the point is that, ultimately, China still finds a way. What’s the point of setting export controls, forcing multi-billion dollar revenue write-offs for US companies like NVIDIA or AMD, if the GPUs still end up in China?

Importantly, seeing products like Huawei’s CloudMatrix server, it’s just a matter of time before China no longer needs NVIDIA.

A crucial point here is that US regulators haven’t yet realized that the key metric in AI isn’t GPU performance, but server performance (the throughput of multiple GPUs combined).

Chinese products have significantly worse performance per unit of power, but they have much cheaper and more abundant access to power, so they really don’t care. My point is, they will always want NVIDIA/AMD GPUs; the wrong framing is believing they can’t live without them.

Thus, if I were an advisor to the White House, my recommendation would be clear: lift all controls and let them both compete. If you give them full access to US GPUs, they won’t be as pressured to develop their own industry. They will create it nonetheless, but perhaps with a focus on mid-tier GPUs rather than cutting-edge ones.

In that scenario, I don’t see Western countries buying anything but US chips. But if China is “forced” to create its own state-of-the-art chip industry, it will undercut US companies’ prices worldwide, and that’s where problems for NVIDIA begin. Funny thing, nobody has been more vocal about this than Jensen Huang himself.

HOLLYWOOD

Netflix’s Jump to Generative AI

Netflix recently debuted The Eternaut—an Argentine sci-fi thriller, featuring a pivotal building-collapse sequence created using generative AI. This marks the first time fully rendered AI-generated footage has been used in a Netflix original series.

According to co-CEO Ted Sarandos, using AI enabled the visual-effects team to complete the scene roughly ten times faster and at significantly lower cost than with traditional VFX workflows. He emphasized that this technology isn’t about replacing people, but empowering creators to achieve “amazing results…endlessly exciting”.

Industry-wise, Netflix isn’t the only studio adopting AI tools. Runway AI, the startup behind The Eternaut sequence, is reportedly being tested by Disney and is already in use by other studios, such as Lionsgate and AMC, to streamline workflows.

TheWhiteBox’s takeaway:

The synergy between AI and the film industry can’t be overstated. AI can generate impressive scenes ‘out of thin air,’ speeding up video creation/editing while also saving costs.

Obviously, this news brings renewed concerns from VFX and animation workers, particularly in the aftermath of the Hollywood strikes, where job displacement from AI was a key issue. However, resistance to such disruptions is mostly futile; you can’t fight something as big as AI. You must accept reality and find ways to adapt to it.

As for the technology’s readiness, one word of caution.

AI-generated video still has a long way to go, particularly in terms of handling longer video sequences. If you think AI can create entire films in one shot, you’re wrong. Currently, the state of the art is in the seconds or low-minute ranges, so AI is being used, just like in The Eternaut, as a tool for seconds-long scenes, frame-level edits, and nothing more.

However, as I always say, with AI, it is not about what it is today, but what the current technology reveals about its future.

PUBLIC POLICY

OpenAI & UK Gov Deal

The UK government and OpenAI have signed a high-profile Memorandum of Understanding (MoU) to increase the integration of AI into public services and strategically align AI infrastructure. The agreement focuses on deploying models across various areas, including justice, education, defense, and national security, although it remains voluntary and non-binding.

OpenAI plans to expand its London team beyond 100 employees and may invest in the UK’s emerging “AI Growth Zones” and future data centers, potentially co-developing capacity with government support. This milestone comes in the context of broader UK AI efforts, including matching MoUs with firms like Anthropic and Google Cloud earlier in the year.

While UK officials portray the deal as a strategic step to modernize government services and bolster the national AI ecosystem, critics argue it lacks transparency. Concerns include undefined data-sharing terms, ambiguity over OpenAI’s access to public data, and potential over-reliance on US tech giants.

TheWhiteBox’s takeaway:

While I’m all in favor of a reduced public presence in the industry, especially in Europe, where bureaucracy has consistently stifled innovation, I applaud governments that are at least tiptoeing into the AI waters.

Few areas on this planet are less productive and more outdated than public digital services, which have historically been money-draining pits and a source of endless corruption. This represents a significant opportunity for AI to modernize the public sector, particularly in a context where, as we described on Tuesday, government spending has become excessive.

Public healthcare is saturated, the administrations are outdated, and spending to address these issues is at an all-time high, everywhere. Put another way, if we want public services to exist in a few years, we need to upgrade them to the new world.

Criticism of this agreement is fair, especially when considering OpenAI, particularly regarding access to confidential information, but I nonetheless believe it’s a risk worth taking.

I’m particularly bullish on services that support citizens for things like taxes, applying for subsidies, and other areas where governments are usually very opaque or too understaffed to help you. Setting up AI models that provide this service would be a great way to improve efficiency and boost trust in our Governments at a time when trust is very low (especially in the UK, where trust in politicians is at an all-time low).

CAPEX

Google Increases CAPEX to $85 Billion

As part of their great quarterly earnings report, Google has increased its projected CAPEX spending for 2025 to $85 billion, up $10 billion from the previous projection, thanks to the greater-than-expected growth of its cloud business, suggesting growing use of the Gemini models.

TheWhiteBox’s takeaway:

Despite the huge number, it’s still $15 billion less than what Amazon is going to spend, signaling the importance of AI to Big Tech these days.

Regarding Google’s particular situation, it seems that everything is falling into place. Top-tier LLM/reasoning models are offered, along with the best video and image generation models (at least amongst Western labs; Chinese companies have something to say here).

But if you were to ask me what the driving metric of Google’s future success should be, I would say the value of its recently released Gemini subscriptions. I predict Google internally sees them as the substitute for its search revenues, which, despite still growing 12% year over year, are most likely being written off by most shareholders and investors on a 5-year horizon (I will soon share my reasons why I believe this).

They aren’t releasing those subscription numbers yet.

Still, I hope they do in the future, as, paired with their non-AI cloud business, they should represent the most significant portion of revenues for this company alongside an already huge YouTube business.

AI SEARCH

Google Tests Web Guide

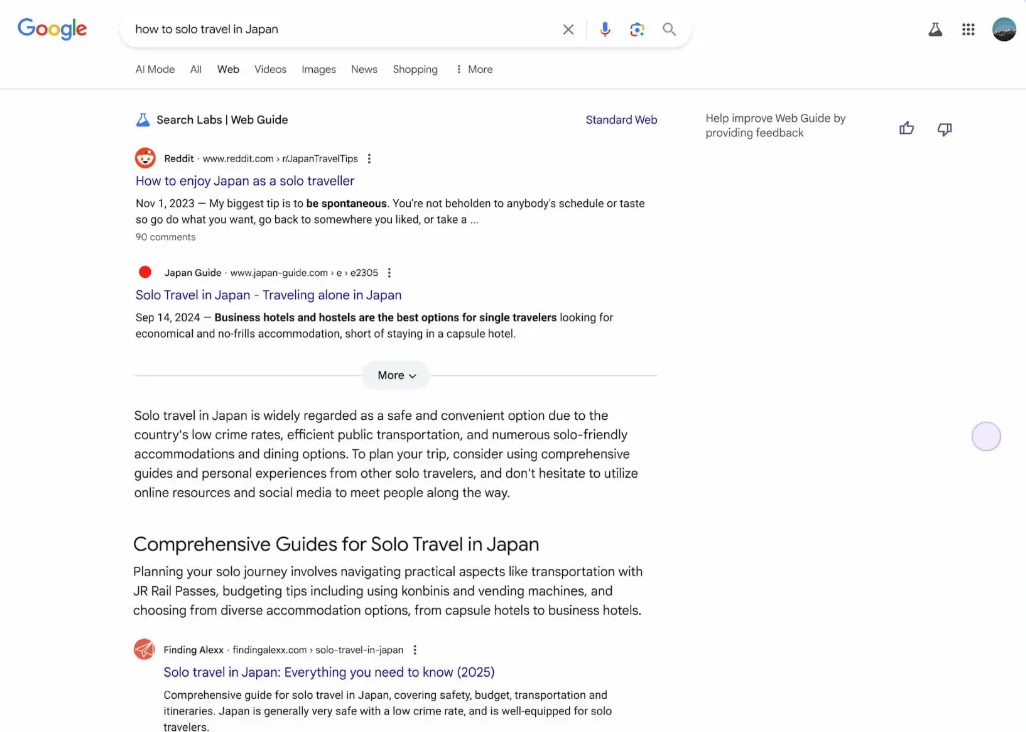

Talking about Google, in what appears to be yet another clear move into AI-generated search, they seem to be nearing the release of a Web Guide feature, based on a Gemini model trained for the purpose, that better organizes every search based on categories and insights, with links added to each part.

This upcoming release comes as the company has reached 2 billion monthly users for its AI Overviews feature, and with AI Mode reaching 100 million in the US and India.

Unmatched levels of distribution entering a future where product experience and distribution will matter more than anything.

TheWhiteBox’s takeaway:

If you take a closer look at the image, you’ll realize that links play a more minor role by the day, which means that web traffic will continue to decrease considerably for most websites. Times really are changing.

VIBE-CODING

Lovable Reaches $100 Million in ARR

Lovable’s CEO has announced, via a video, that the company has reached the landmark value of $100 million in ARR (Annualized Recurring Revenue) faster than any company in history.

The company, which allows non-coders to create web applications with little or no coding, has seen impressive growth despite being a European start-up, casting doubt on the claim that Europe doesn’t allow big startups to thrive.

But is this actually true?

TheWhiteBox’s takeaway:

There are too many doubts about this for me to know where to begin. First, stating that this is a European startup is incorrect. They are a European team with a US company, and I dislike that they pretend otherwise, despite being incorporated in Delaware to avoid EU regulations.

Not the best look.

Second, the tool is a slop machine, a toy maker, not a product that lets you develop actual production-grade code. Selling it otherwise only tricks non-coders who know no better into deploying insecure code and putting customers at risk.

The perfect example of this is the recent hack of the Tea app, a vibe-coded app that went viral in the US, which has seen a massive leak of IDs, photos, and locations of thousands of women.

Ironically, AI is making the Internet a much more unsafe place. Please be very careful about who you give your personal information to.

Third, I don’t even believe that value. I don’t doubt the figure; I doubt the metric.

This video is clearly focused on raising money in future rounds, and VCs love recurring revenue. The reality is that many AI startups have been caught lying about this, and considering Lovable’s business, the recurring aspect just makes no sense.

But why?

Unless you’ve made creating new apps your new weekend hobby, this is the type of tool you use once and never look back, only returning for new projects. But there’s no such thing as a ‘recurring’ need to build new applications (at least not today).

The trick here seems to be that they do offer subscriptions. Still, I can’t help but wonder what their customer retention and lifetime value numbers are (I would bet they are poor, and that’s an understatement), and if they aren’t low, I can only wonder what tricks they are using to inflate them.

Perhaps I’m being overly cynical, but I don’t see how a company offering a product that isn't meant to be recurring can have a healthy recurring price structure. If I had to bet, the trick is just an insanely effective customer acquisition process with low customer retention but a massive number of new customers.
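To see why a headline ARR number says little without retention, here is a back-of-the-envelope sketch. It treats ARR as 12 times the current month's recurring revenue and models churn as a fixed fraction of subscribers leaving each month. Every number in it (price, churn, signups) is hypothetical and chosen only to make the arithmetic easy to follow; none of it is Lovable's actual data.

```python
from typing import List


def simulate_mrr(signups: List[int], monthly_churn: float, price: float) -> List[float]:
    """Monthly recurring revenue per month, given new signups and a fixed churn rate."""
    active = 0.0
    mrr = []
    for new_subscribers in signups:
        # Survivors from last month plus this month's new subscribers.
        active = active * (1 - monthly_churn) + new_subscribers
        mrr.append(active * price)
    return mrr


def arr(mrr_value: float) -> float:
    """Annualize a single month's recurring revenue."""
    return 12 * mrr_value


def lifetime_value(price: float, monthly_churn: float) -> float:
    """Expected revenue per subscriber: price times expected lifetime (1 / churn) in months."""
    return price / monthly_churn


# Hypothetical scenario: six months of viral signups, then acquisition stops.
signups = [1000] * 6 + [0] * 6
mrr = simulate_mrr(signups, monthly_churn=0.5, price=20.0)

print("ARR at the peak (month 6):", arr(mrr[5]))
print("ARR six months later:", arr(mrr[-1]))
print("Lifetime value per subscriber:", lifetime_value(20.0, 0.5))
```

In this toy scenario, the annualized figure at the peak is $472,500, yet six months after signups stop it is about $7,383, and each subscriber is only ever worth $40. The "recurring" number is real at the moment it is measured; it just isn't a promise about the next twelve months.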

VCs might prioritize growth, but at some point, they’ll begin to ask questions about retention.

Anyway, congratulations on the number, but this company won’t exist in 12 months if it doesn’t get acquired by then; there’s no chance on Earth that it can compete with Hyperscalers (see reasons below) or top AI Labs, which are all competing with it.

VIBE-CODING

Microsoft Launches GitHub Spark

Talking about slop machines, Microsoft has announced GitHub Spark, a new competitor for Lovable and Replit Agent that allows you to one-shot entire applications, with the added point that the solution is deeply integrated with GitHub, facilitating code maintenance, monitoring, and strong integration with OpenAI models.

TheWhiteBox’s takeaway:

This is the type of tool that kills Lovable. If you think Lovable can compete with this, without owning the model layer like Microsoft does, you’re out of your mind.

One thing to note about this product is that it seems to be more holistic in its approach.

Tools like Lovable focus primarily on the frontend side of things and outsource backend logic to tools like Supabase, whereas Spark aims to offer the entire package while also catering to more code-aware developers who can see, and probably touch, the actual code.

For that reason, this release is also a warning to Cursor, which looks more and more surrounded by heavily capitalized companies with products like Spark (Microsoft), Kiro (Amazon), Windsurf (acquired by Cognition with IP shared by Google), and of course the agentic tools (Claude Code, Gemini CLI, Qwen CLI, or Codex CLI).

This market is as crowded as it gets. And what about human developers? It seems that AI is poised to set an example for developers of what will happen to office jobs over time.

In the future of software engineering, it will be less about coding capabilities and more about having a bias for action and distribution capabilities. In a future where everyone can build anything, those who possess good product taste and growth skills will emerge victorious.

MEMORY RETRIEVAL

MemoriesAI Launches Infinite Context Model

Memories.AI has emerged from stealth with a notable product and impressive claims.

According to the founder, they have created the first AI model, called a ‘Large Vision Memory Model’ with “an unlimited context window”, meaning it can digest up to “years” of memory in the form of videos, which is quite the statement considering the previous state-of-the-art was Google’s Gemini with one-hour video context windows.

You can try the product for free yourself at the first link and even obtain an API to start indexing your preferred videos, which your favorite models can then leverage.

TheWhiteBox’s takeaway:

This is literally the definition of context engineering surrounded by proper marketing. Let’s see if they deliver, but the opportunity of providing models with a ‘virtually infinite’ video memory is significant for several applications.

Think of video editors that can now traverse their large video databases via natural language commands.

The method they are using is unknown, but here’s the thing: lunch is never free. What this means is that they are doing—I assume, highly sophisticated—compression of videos into summaries or short clips that can be effectively retrieved at test time.
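Since the actual method is unknown, the following is only a sketch of my assumption above, not Memories.AI's system: videos are compressed offline into short text summaries per segment, and at query time the most relevant segments are retrieved and handed to the model. The `Segment` structure, the sample summaries, and the naive word-overlap scoring are all hypothetical; a real system would presumably use learned embeddings rather than keyword matching.

```python
import re
from dataclasses import dataclass
from typing import List, Set


@dataclass
class Segment:
    video_id: str
    start_s: int
    end_s: int
    summary: str  # compressed text description of what happens in the clip


def tokens(text: str) -> Set[str]:
    """Lowercased set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, index: List[Segment], k: int = 2) -> List[Segment]:
    """Return up to k segments whose summaries share the most words with the query."""
    query_tokens = tokens(query)
    scored = [(len(query_tokens & tokens(seg.summary)), seg) for seg in index]
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [seg for _, seg in scored[:k]]


# Hypothetical index built offline from hours of footage.
INDEX = [
    Segment("cam1", 0, 60, "a red car parks outside the garage"),
    Segment("cam1", 60, 120, "a dog runs across the empty street"),
    Segment("cam2", 0, 60, "delivery van parks near the garage door"),
]

hits = retrieve("when did the red car park at the garage", INDEX)
for seg in hits:
    print(seg.video_id, seg.start_s, seg.end_s, seg.summary)
```

The "infinite context" then comes from the index, not the model: only the handful of retrieved summaries (or the clips they point to) ever enter the model's actual context window.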

Either way, they are tackling a real problem in AI, persistent memory, which is enough to warrant the attention of VCs, a goal that all AI startups aim to achieve these days.

Closing Thoughts

Another week, another set of meaningful news. A highly political week, with the US’s action plan, their troubles with export controls, and growing evidence that China is very much ready to compete and win.

AI startups continue to show impressive growth stories, but please bear in mind that in this industry, the key thing will not be growing revenues, but keeping them. I’ll die on this hill: most AI startups have no meaningful moat beyond virality and brand awareness, and their revenues and businesses are completely unsustainable. Don’t let Lovable’s momentary success distract you from the fact that AI’s application layer, where most AI startups reside and which leans too heavily on prompt engineering instead of data and industry-focused RL fine-tuning, will be a slaughterhouse soon.

Until next time!
