THEWHITEBOX

TLDR;

Welcome back! First, I owe you an apology. Many of you have noticed the slower cadence these past two weeks. I’ve been mostly out of work due to personal reasons, but I can officially confirm I’m back.

There’s a lot to catch up on, so I’ll first send this email and another one on Saturday/Sunday based solely on news, so you can get back on track with AI. I promise you that, in two days, you’ll feel like I never left.

Let’s dive in.

FUNDING

Anthropic’s Margins Fall

Based on reports, Anthropic has quietly lowered its outlook for 2025 profitability, projecting a 40% gross margin after inference and selling costs, 10 percentage points (1,000 basis points) lower than the original expectation of 50%, because it is paying more than expected to run its models for customers on Google and Amazon infrastructure.

Internal projections showed inference costs coming in 23% above plan, dragging margins even as the company’s gross margin has rebounded sharply from a deeply negative level in 2024.
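To get a feel for how a cost overrun eats into margin, here is a back-of-the-envelope sketch. It assumes, purely for illustration, that all cost of revenue is inference; the `inference_share` parameter is my own knob, not a figure from the reports.

```python
def margin_after_overrun(planned_margin, overrun, inference_share=1.0):
    """Gross margin after inference costs come in `overrun` above plan.

    Everything is expressed as a fraction of revenue.
    `inference_share` (hypothetical) is the fraction of planned cost of
    revenue that is inference; 1.0 means "all of it".
    """
    planned_cost = 1.0 - planned_margin
    actual_cost = planned_cost * (1.0 + overrun * inference_share)
    return 1.0 - actual_cost


def to_basis_points(percentage_points):
    """One percentage point equals 100 basis points."""
    return percentage_points * 100


if __name__ == "__main__":
    revised = margin_after_overrun(planned_margin=0.50, overrun=0.23)
    print(f"Margin after a 23% overrun: {revised:.1%}")
    print(f"A 10-point drop is {to_basis_points(10)} basis points")
```

Under that simplifying assumption, a 23% overrun on a 50% planned margin lands at 38.5%, in the same ballpark as the revised 40% outlook.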

The revised outlook highlights how cloud dependence is squeezing leading AI developers. Despite what we thought until now, Anthropic appears less efficient than OpenAI, which has projected a roughly 46% gross margin in 2025, even after accounting for inference costs for free ChatGPT users.

Despite the margin reset, Anthropic is still forecasting explosive growth. The company expected $4.5 billion in 2025 revenue, nearly 12 times its 2024 total, driven primarily by API sales to businesses and developers, with subscriptions making up the remainder.

TheWhiteBox’s takeaway:

As we saw in the IPO prospectuses from Chinese Labs Zhipu and Minimax (and with OpenAI below), Labs continue to struggle to escape the high costs of progress (recall that Anthropic is talking about gross margins, meaning they aren’t considering operating costs—wages, R&D, and, of course, CapEx investments in hardware).

Thus, the profitability guidance remains, unsurprisingly, terrible. The problem? I believe it’s mostly out of their control. AI workloads are memory-bottlenecked, meaning the accelerators, priced at huge markups like NVIDIA’s 4x over production costs, are mostly underutilized; what could be theoretically done with two accelerators has to be done with four due to memory limitations.

Coupled with continuous pressure to lower prices because the underlying technology is mostly commoditized, this makes it literally the worst business possible: unprofitable, costs growing faster than revenues, and products with heavy competition and comparatively low differentiation.

The only reason to stay is that those who win will win big, but only after leaving an entire cemetery of companies behind.

With such a dark picture, it’s no wonder that Anthropic, like OpenAI, is leaning on massive fundraising: Anthropic is discussing a round above $10 billion at a reported $350 billion valuation, while OpenAI is in talks for financing that could reach $100 billion and value it around $750 billion.

And talking about OpenAI…

CAPITAL

OpenAI’s Desperate Capital Requirements

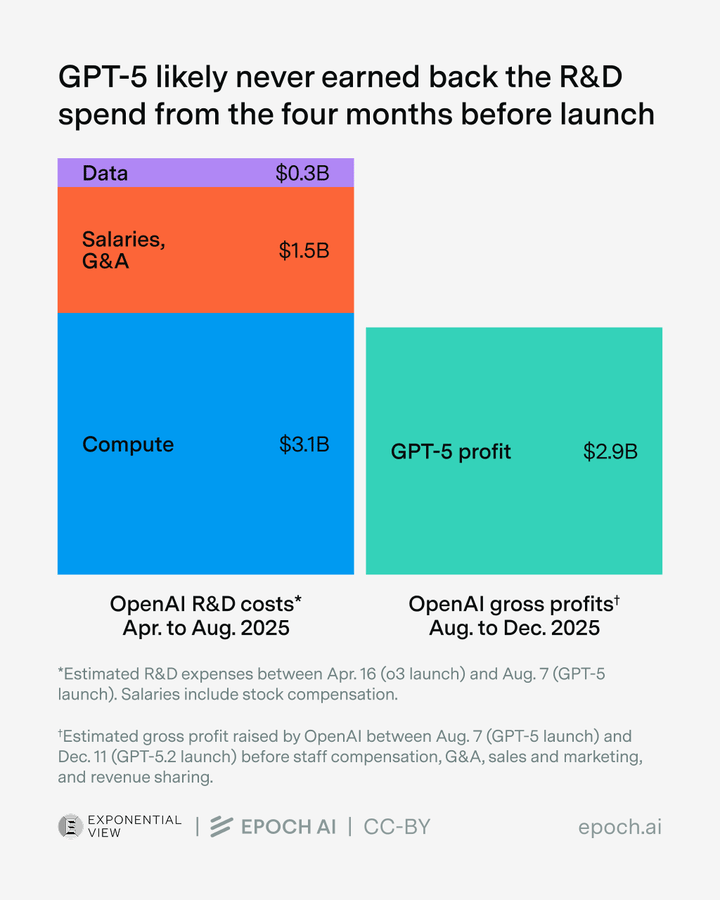

EpochAI has done a thorough analysis to estimate the “real costs” of AI. But what does ‘real’ mean here?

On the rare occasions when AI Labs disclose numbers, they always focus on gross margins, suggesting apparent profitability. That is, the price at which they sell tokens is higher than the cost of generating them. That’s not remotely the entire picture.

The real bloodbath is in operating costs, which include salaries and, above all, compute investments (R&D and CapEx). Only then does the real picture emerge and show why AI Labs need so much money. Well, because they are losing a lot of it. A lot.

We already knew this in this newsletter because we looked at the IPO prospectuses of Zhipu and Minimax, two popular Chinese AI Labs, which showed the same pattern: apparent profitability on gross margins and rapid growth, but a huge net loss due to compute spending. At that point, we knew the US Labs’ picture was not only the same, but much worse, as they are much richer.

Importantly, the graph above only shows estimates for the “overall cost of GPT-5”, showing how OpenAI never actually made money from training such a model. We also kind of expected OpenAI to have such huge quarterly losses, as Microsoft basically told us when it recognized the loss in its own balance sheet (as the owner of 27.5% of OpenAI’s stock).

That's because Microsoft handles its OpenAI stake using an accounting approach known as the equity method, in which it simply reports its share of losses or income at the AI company.

Considering this, it’s much easier to understand why OpenAI is looking to raise up to $100 billion, of which $60 billion could come from three companies: NVIDIA, Microsoft, and Amazon, which would join a new owner of OpenAI, potentially looking to get some of OpenAI’s booming business.

But if Microsoft’s 12% crash today tells us something, it’s that AI companies no longer have a get-out-of-jail-free card for everything. The fall was triggered by its cloud division’s growth “slowing,” even though that division’s revenue still grew at a staggering 39%. Still, the smallest sign of weakness, combined with the increased AI spending, was too much for the market to handle.

TheWhiteBox’s takeaway:

If gold and silver’s sudden crashes and Microsoft's huge plunge despite good results teach us something, it is that this extreme volatility seems to be the new normal. It’s important that, in this market, you avoid trading and instead focus on your high-confidence picks while ignoring the rampant volatility any company in the AI trade will endure at some point.

VENTURE CAPITAL

humans& announces huge seed round

San Francisco-based humans&, a new human-centric frontier AI lab, launched last week. The company was founded by alumni from OpenAI, Anthropic, xAI, Google DeepMind, Meta, and leading universities.

It focuses on building AI that strengthens human collaboration and relationships rather than replacing people. The news comes from the startup's $480 million seed round, one of the largest ever (the second-largest, behind only the $2 billion 2025 round from Thinking Machines Lab).

The mission centers on developing AI with strong social intelligence, multi-agent systems, long-horizon reasoning, and advanced memory to improve teamwork, coordination, and human-to-human connection, moving away from autonomous task automation.

TheWhiteBox’s takeaway:

Absolutely monstrous round that confirms agent-harnessing will be a big theme for investing in 2026.

Harnesses are code and workflows that sit on top of AI models to improve their performance. For example, doing a simple thing like defining a good entry prompt, or a ‘reasoning loop’ that lets the model work on its own thoughts multiple times before answering, is a simple and effective way to improve model performance.
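To make the idea concrete, here is a minimal sketch of a harness with an entry prompt and a reasoning loop. Everything in it is hypothetical: `toy_model` is a stand-in I made up so the code runs without any API, and `reasoning_loop` is just one simple way to feed a model its own draft back, not how any particular product does it.

```python
from typing import Callable

ENTRY_PROMPT = "You are a careful assistant. Think step by step."


def reasoning_loop(model: Callable[[str], str], question: str, rounds: int = 2) -> str:
    """Ask once, then let the model revise its own draft `rounds` times."""
    draft = model(f"{ENTRY_PROMPT}\n\nQuestion: {question}")
    for _ in range(rounds):
        # Feed the previous draft back so the model can work on its own thoughts.
        draft = model(
            f"{ENTRY_PROMPT}\n\nQuestion: {question}\n"
            f"Previous draft: {draft}\n"
            "Critique the draft and write an improved answer."
        )
    return draft


def toy_model(prompt: str) -> str:
    """Hypothetical stand-in for a real model: it only bumps a version number."""
    marker = "Previous draft: draft v"
    if marker in prompt:
        version = int(prompt.split(marker)[1].split()[0])
        return f"draft v{version + 1}"
    return "draft v1"


if __name__ == "__main__":
    print(reasoning_loop(toy_model, "What is the capital of France?", rounds=2))
```

Swap `toy_model` for a call to a real model and the harness stays the same, which is the whole point: the loop lives outside the model.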

And the guys behind this new startup have made themselves known by doing exactly this, giving investors enough confidence to invest more than $400 million in a company whose biggest contribution to society is probably a pitch deck.

Seeing products like Claude Code and how independent, lowkey labs like Poetiq can beat the top Labs using their own models with a smart harness, people are realizing that while the actual AI is commoditized, the system does matter and can make a difference. As I said last week, the real moats are built at the product level, leveraging as much compute as possible and with the “smartest” harness.

These guys are surely going to take a shot at training AIs, too, but I’m pretty confident that the reason they were invested in has nothing to do with the AI and a lot to do with the system.

I still reserve the right to be skeptical about how attractive harness companies really are to investors; I still believe owning the model layer is crucial. But what’s undeniable is that they've generated investor interest. A lot of it.

PRODUCT

OpenAI Wants to Own Your Discoveries

According to OpenAI’s CFO, Sarah Friar, OpenAI plans to take a cut of every discovery made with the provable help of a ChatGPT model. “Value sharing,” they call it.

This is quite a bold thing to say, given the extreme competition their company currently faces, signaling the extreme revenue needs these companies have in a year that should represent a breakout of some sort in their respective enterprise businesses.

This means that, for instance, if a drug-discovery start-up uses ChatGPT as part of the process to find a new drug, OpenAI reserves the right to partially own the drug's license.

TheWhiteBox’s takeaway:

This is crazy work from a company that literally built its business “stealing” the work of others.

However, they aren’t stupid and know how hypocritical this is; it’s done out of pure necessity and desperation because revenues aren’t coming in as fast as they should, full stop (if you disagree, just read the previous OpenAI news).

MODELS

A New DeepSeek Moment?

I wrote a complete article covering the topic in case you want to read it directly here.

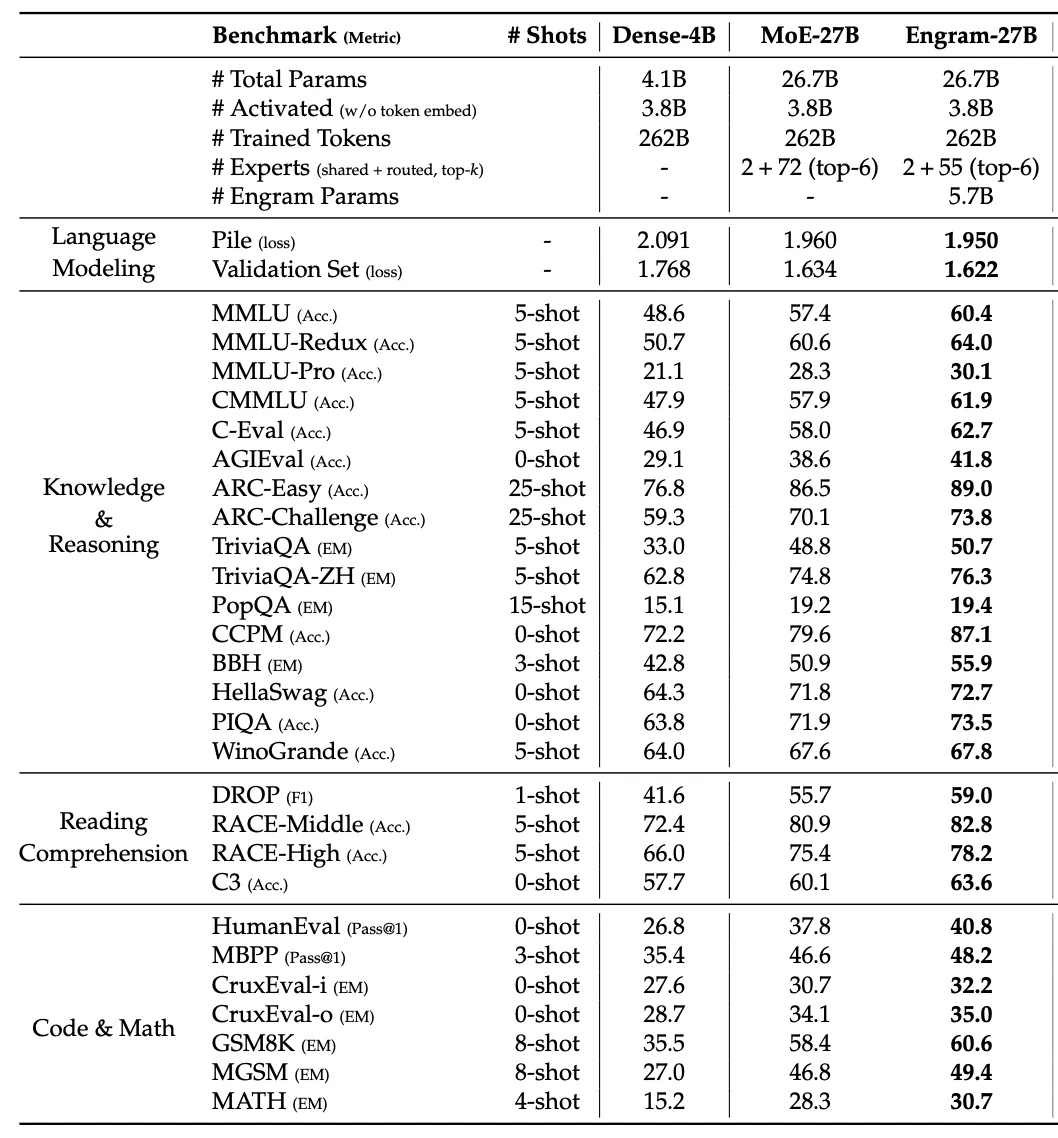

DeepSeek always delivers. Every single piece of research is worth reading. This time, it’s with the release of Engram, a new Transformer architecture that uses n-gram tables to retrieve local dependencies rather than recomputing them.

But what does that even mean?

AI models are all Transformers, a type of architecture I often liken to “knowledge gatherers.” To make a prediction, they look at both the sequence (the context, including the user’s input and any extra material we provide to enrich it, such as Google search results) and their own memory. Models can (and do) memorize lots of stuff, so to make a prediction, they leverage both.

The problem with Transformers is that this memory they have has to be computed. What this means is that it’s not a direct retrieval, but a “reasoned” one.

Think of it this way: instead of automatically retrieving “Paris” from memory in response to the question “What city is the capital of love?”, Transformers process that memory every single time, much as if you had to reason your way into a memory (e.g., “the user is asking about love and a city, I think love is associated with France, and the user is talking about the capital, so it has to be Paris”). As DeepSeek researchers note, Transformers “simulate memory through computation.”

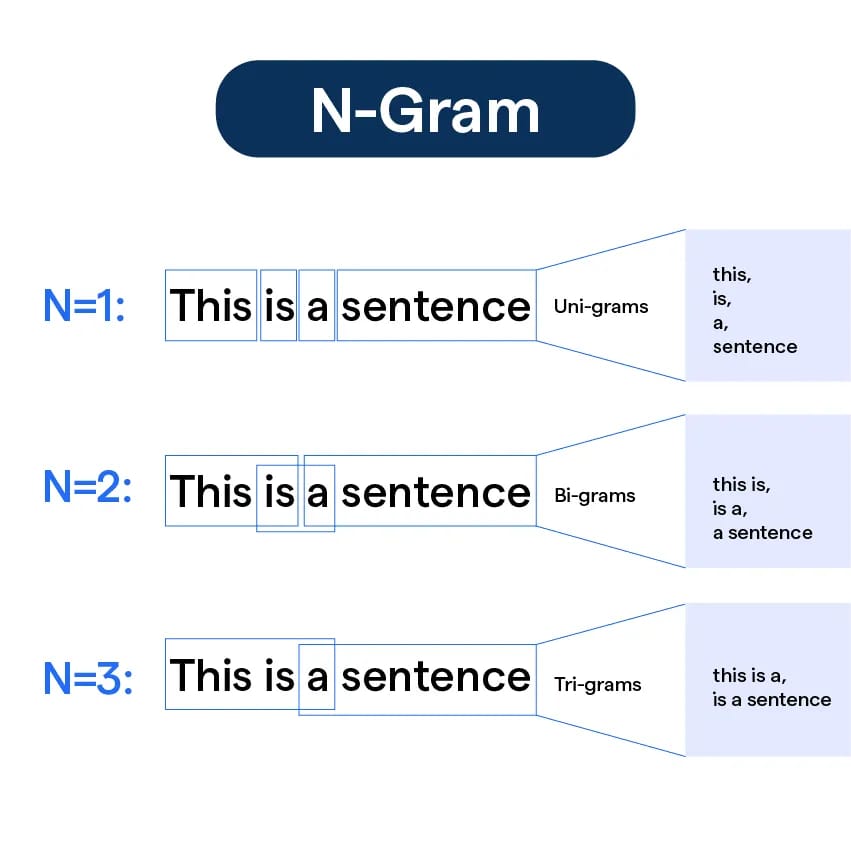

And now, they take a different approach by combining this Transformer, knowledge-gathering architecture, with an ‘n-gram’ model. But what is an n-gram model?

In simple terms, an n-gram model is nothing but a model that, similarly to a Transformer, predicts the next word based on previous ones. However, it is limited; it makes the prediction based only on the n-1 previous words.

So a 2-gram means the model predicts the next word based on the previous one.

3-gram models make predictions based on the two previous words. You get the gist.
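For the curious, this is roughly what a count-based n-gram model looks like in code. It’s a toy sketch with a made-up corpus and my own function names (`train_ngram`, `predict`), just to show that the context is always the previous n-1 words.

```python
from collections import Counter, defaultdict


def train_ngram(tokens, n):
    """Count which word follows each context of n-1 words."""
    counts = defaultdict(Counter)
    for i in range(len(tokens) - n + 1):
        context = tuple(tokens[i:i + n - 1])
        next_word = tokens[i + n - 1]
        counts[context][next_word] += 1
    return counts


def predict(counts, context):
    """Return the most frequent next word for a context, or None if unseen."""
    options = counts.get(tuple(context))
    if not options:
        return None
    return options.most_common(1)[0][0]


if __name__ == "__main__":
    corpus = (
        "alexander the great conquered persia and "
        "alexander the great founded alexandria"
    ).split()
    bigram = train_ngram(corpus, 2)   # context = 1 previous word
    trigram = train_ngram(corpus, 3)  # context = 2 previous words
    print(predict(bigram, ["the"]))                # great
    print(predict(trigram, ["alexander", "the"]))  # great
```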

But why is this useful, considering an n-gram model is obviously worse at making predictions than a Transformer (Transformers behave like infini-grams, as they can look back as far as they need)? Well, because it’s not about making predictions, but storing relationships.

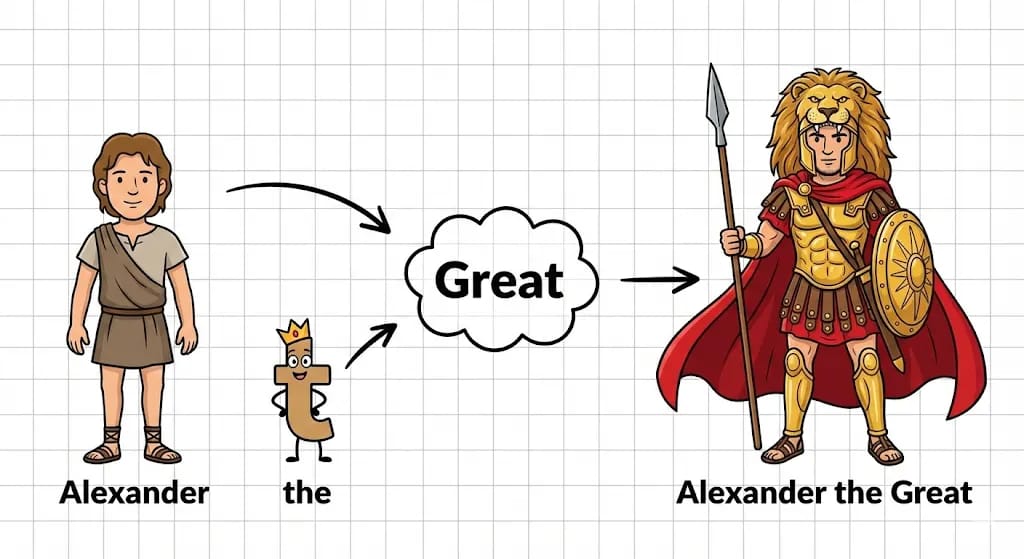

Say we have the three words “Alexander the Great”. These are three separate words, but you’ll agree with me that they go together. What Transformers do is “build” this three-word relationship on the fly, identifying that ‘Alexander’ and ‘the’ go with ‘Great’.

But what if we could store the relationship in a table (called an n-gram table) and just retrieve it? That’s exactly what Engram does.

Besides the ordinary knowledge-gathering capabilities of a standard Large Language Model (LLM), an Engram model includes a list of two and three-word relationships stored in tables, so when the model sees ‘Alexander the Great’ in a sentence, it can immediately retrieve the relationship from this table, acting as a direct memory retrieval system.
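Here is a toy sketch of the lookup idea. To be clear, this is not DeepSeek’s implementation (the real design is considerably more involved); the table contents, the string “memories,” and the `lookup_memory` function are all made up to illustrate retrieving two and three-word relationships instead of recomputing them.

```python
# Hypothetical n-gram table: keys are 2- and 3-word tuples, values are
# whatever "memory" we want to retrieve (plain strings in this toy version).
NGRAM_TABLE = {
    ("alexander", "the", "great"): "entity:Alexander_the_Great",
    ("the", "great"): "phrase:the_great",
    ("new", "york"): "entity:New_York",
}


def lookup_memory(tokens, table, max_n=3):
    """For each position, return the longest stored n-gram ending there, else None."""
    hits = []
    for end in range(1, len(tokens) + 1):
        hit = None
        for n in range(max_n, 1, -1):  # try 3-word keys first, then 2-word keys
            if end - n < 0:
                continue
            key = tuple(tokens[end - n:end])
            if key in table:
                hit = table[key]
                break
        hits.append(hit)
    return hits


if __name__ == "__main__":
    sentence = "alexander the great visited new york".split()
    for word, memory in zip(sentence, lookup_memory(sentence, NGRAM_TABLE)):
        print(f"{word:10s} -> {memory}")
```

The lookup is a dictionary access, so its cost does not depend on how “hard” the relationship is, which is the intuition behind retrieving instead of recomputing.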

This new architecture has a lot of benefits:

It benefits models for long sequences, as they can be less concerned with local dependencies that can be retrieved from memory and instead focus on longer patterns. This is like your brain not thinking about having to reason what the capital of Poland is for the question “Prepare a plan for things to do in Poland’s capital,” and just retrieving it from memory while working on the part of the question that requires actual reasoning (i.e., preparing the plan).

It improves the use of storage hardware, reducing the model's reliance on the extremely constrained HBM supply.

It packs more intelligence per unit of size. As the model now has actual memory to retrieve from, it can allocate more compute to actual hard tasks, making these models smarter and faster thinkers.

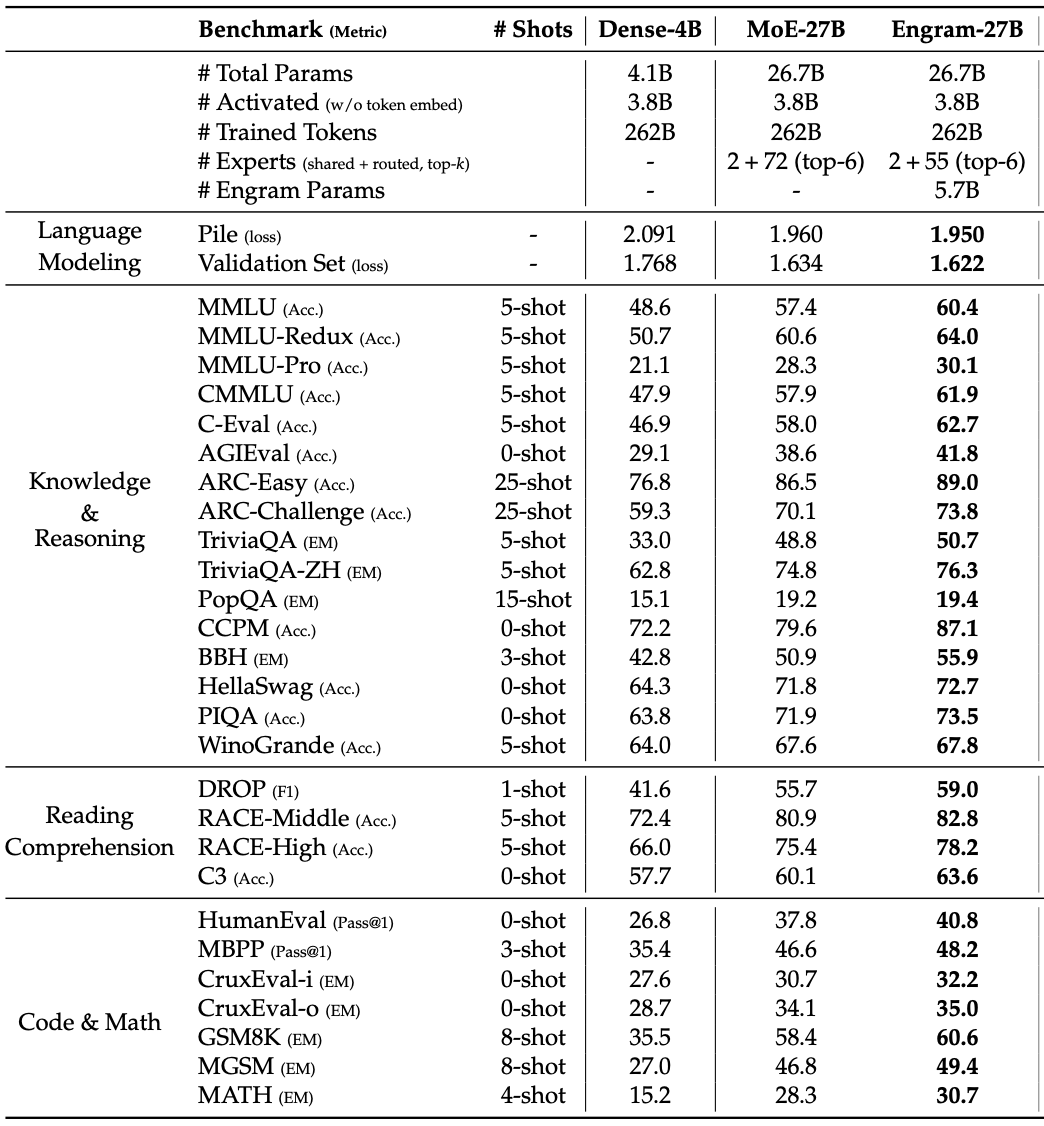

Interestingly, and this is not that common, the new architecture outperforms a standard Transformer of the same size literally everywhere.

Overall, once again, a great presentation from DeepSeek. And with DeepSeek’s new model around the corner (allegedly slated for February), things are looking promising for the great Chinese hope of leveling up the game against the US.

MODELS

Apple Readies ‘Apple AI Pin’ for 2027

Render generated by the author

Apple is exploring an AI-powered wearable “pin” about the size of an AirTag, outfitted with multiple cameras, microphones, a speaker, and wireless charging, according to people familiar with the project.

Still in early development and potentially cancellable, the device is being targeted for release as soon as 2027, with Apple reportedly considering an unusually aggressive pace and a large initial build of roughly 20 million units.

The pin is described as a thin, flat, circular disc with an aluminum-and-glass shell. It would include two front-facing cameras (standard and wide-angle) intended to capture the user’s surroundings, three microphones for ambient audio pickup, a physical button, and a magnetic inductive charging system similar to Apple Watch.

While it could pair with an iPhone for heavier processing, the on-device microphones and speaker imply more standalone interaction than an accessory like an AirTag, though attachment methods and bundling plans—potentially with future glasses or AirPods—remain unclear.

The product would place Apple more directly into a rapidly forming category where rivals are moving quickly. Meta already sells AI-enabled smart glasses, Google is working with Samsung on smart glasses with AI capabilities, and OpenAI is developing multiple AI devices of its own, including a pin, pen, glasses, and a desktop speaker, plus earbuds that could arrive sooner.

Apple’s broader pipeline reportedly includes sensor-enhanced AirPods, a security camera, smart glasses and AR glasses, and a home device with a small display, speakers, and a robotic swiveling base that could debut as soon as this spring.

TheWhiteBox’s takeaway:

I get the wearable excitement in Silicon Valley, but the commercial success is far from guaranteed.

Humane’s high-profile AI pin struggled in 2024, reportedly selling fewer than 10,000 units before parts of the company were sold to HP, highlighting the risks around speed, battery life, and real-world usefulness. The Rabbit R1 wearable, which came out in 2024, was also a massive failure (borderline a scam).

I’m not even convinced this will ever be a thing. I’m not sure that swapping my smartphone for a nerdy wearable pin would be a dream come true or life-changing for me, especially given how often Big Tech’s enthusiasm for something (e.g., the Metaverse) was far from shared by consumers.

COMPUTER USE

Finally Confirmed: ChatGPT Ads

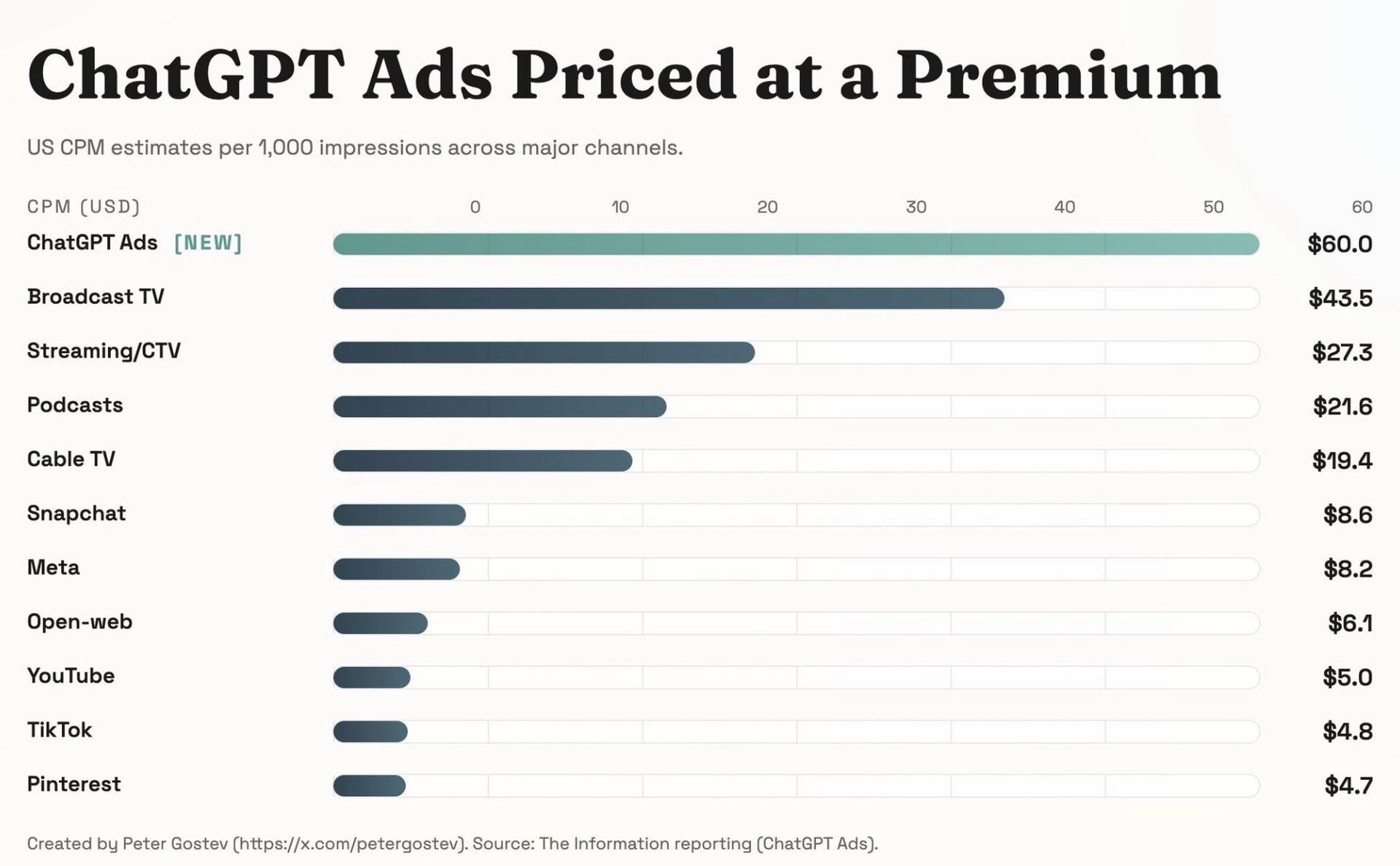

OpenAI is beginning a limited rollout of ads inside ChatGPT, offering the new format to a small group of advertisers ahead of a broader launch expected in early February. The initial pricing is impression-based, charging by ad views rather than clicks, and advertisers are being asked to commit under $1 million each for a multi-week trial.

Furthermore, The Information reports that ChatGPT’s ads will be charged at a very impressive premium of $60 per 1,000 impressions, 15 times more than companies like TikTok or YouTube charge. If true, OpenAI believes one of two things (or both):

Advertisers simply cannot be left out of this new advertising channel; being excluded from a pool of 900 million users is not an option for anyone, which explains the high pricing.

Their channel might convert users at a much higher pace than a TikTok video reel or a YouTube short.

OpenAI has indicated ads will appear for US users on the free tier and a new $8/month tier. OpenAI may adjust pricing after testing.
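For reference, the impression-based math with the reported figures works out as follows. This is a quick sketch; the function names are mine, and the only inputs are the $60 rate, the “15 times” multiple, and the under-$1 million commitment mentioned above.

```python
def cost_for_impressions(impressions, cpm_usd):
    """Cost in USD when pricing is per 1,000 impressions (CPM)."""
    return impressions / 1000 * cpm_usd


def impressions_for_budget(budget_usd, cpm_usd):
    """How many impressions a budget buys at a given CPM."""
    return budget_usd / cpm_usd * 1000


if __name__ == "__main__":
    reported_cpm = 60        # USD per 1,000 impressions, as reported
    reported_multiple = 15   # "15 times more" than the comparison platforms

    implied_rival_cpm = reported_cpm / reported_multiple
    print(f"Implied comparison CPM: ${implied_rival_cpm:.2f}")
    print(f"1M impressions cost: ${cost_for_impressions(1_000_000, reported_cpm):,.0f}")
    print(f"$1M buys about {impressions_for_budget(1_000_000, reported_cpm):,.0f} impressions")
```

In other words, a trial budget just under $1 million buys fewer than roughly 16.7 million ad views at that rate.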

TheWhiteBox’s takeaway:

We all knew this was coming; we just didn’t know when. It’s worth noting that OpenAI promises ads won’t shape answers (i.e., they won’t influence how ChatGPT responds), but I don’t think that will be true in the long run.

Once they are more mature, I believe they will start an ads marketplace that allows advertisers to buy placements and makes sure models get context based on advertiser categorization (the more you pay, the more likely you are to get seen).

Either way, ChatGPT’s ads inclusion is without a doubt one of the most important developing stories in AI today. If this doesn’t pan out, OpenAI is in very troubled waters.

EDUCATION

Gemini Launches SAT Preparation Feature

Google has released a very interesting feature for students to practice for their exams. Google is adding full-length, on-demand SAT practice tests to the Gemini app, starting with the SAT and expanding to other standardized exams over time.

The feature is positioned as a free way for students to prepare for a key part of many college applications, building on the ways students already use Gemini for studying, such as turning notes into study guides or practicing with quizzes.

The practice tests are created in partnership with The Princeton Review and rely on vetted test-prep content intended to resemble what students will see on exam day. After completing a test, users get immediate feedback on strengths and weaknesses, can ask Gemini to explain answers they missed, and can generate a personalized study plan based on identified gaps.

TheWhiteBox’s takeaway:

I love the framing Google gives its products. It’s never about substitution, which is probably a better strategy to inflate the stock, but instead about focusing AI on what it really does best: helping humans.

I can’t express in words how instrumental AI has become as a learning tool to me. The cadence at which I get answers to my questions and the interesting alternative points of view that help me better understand the issues are just worth every penny.

There is a clear risk that AI will dumb down younger generations. It really is a tool you can outsource much of the basic thinking to. It’s a deadly drug for people who have no interest in learning or improving and just want to “save the day.” This is everywhere, probably nowhere more than on LinkedIn, where I feel every single post is AI-generated. Everything feels the same, everyone knows it, and everyone pretends not to realize it because they are doing the same thing.

This is why I insist that the real gap AI opens is between humans with agency and those who just want to do the bare minimum. If you’re in group two, soon enough, you won’t be any better than AIs. And that is where the problems start.

FRAUD

The Gemini Siri, Coming This February?

According to famous Apple insider Mark Gurman, the new, revamped, Gemini-powered Siri will be coming to all our Apple products this February (or at least be shown for the first time).

TheWhiteBox’s takeaway:

Apple cannot shit the bed on this one. Also, it’s very likely to be an announce-and-release event because, considering how Apple blatantly lied to all of us with the first release of Apple Intelligence, nobody is going to take them at their word. Investors want proof, not over-the-top keynotes with flashy demos.

And with the extreme success of Clawdbot (more on this below), the pressure is on for both Apple and Google (for Google, the pressure is to prove Gemini is a good AI for virtual assistant agents, given that Claude Opus is the primary option these days).

This is the first big revamp since June 2024, so the expectations are really, really high here. Another miss is not only unacceptable, but could really be narrative-destroying for Apple. 2026 is going to be a big year for virtual assistants, which will become an essential piece of any smartphone (and potential wearable). Thus, Apple better be ready for what’s coming.

MODELS

Alibaba Drops Qwen 3 Max Thinking

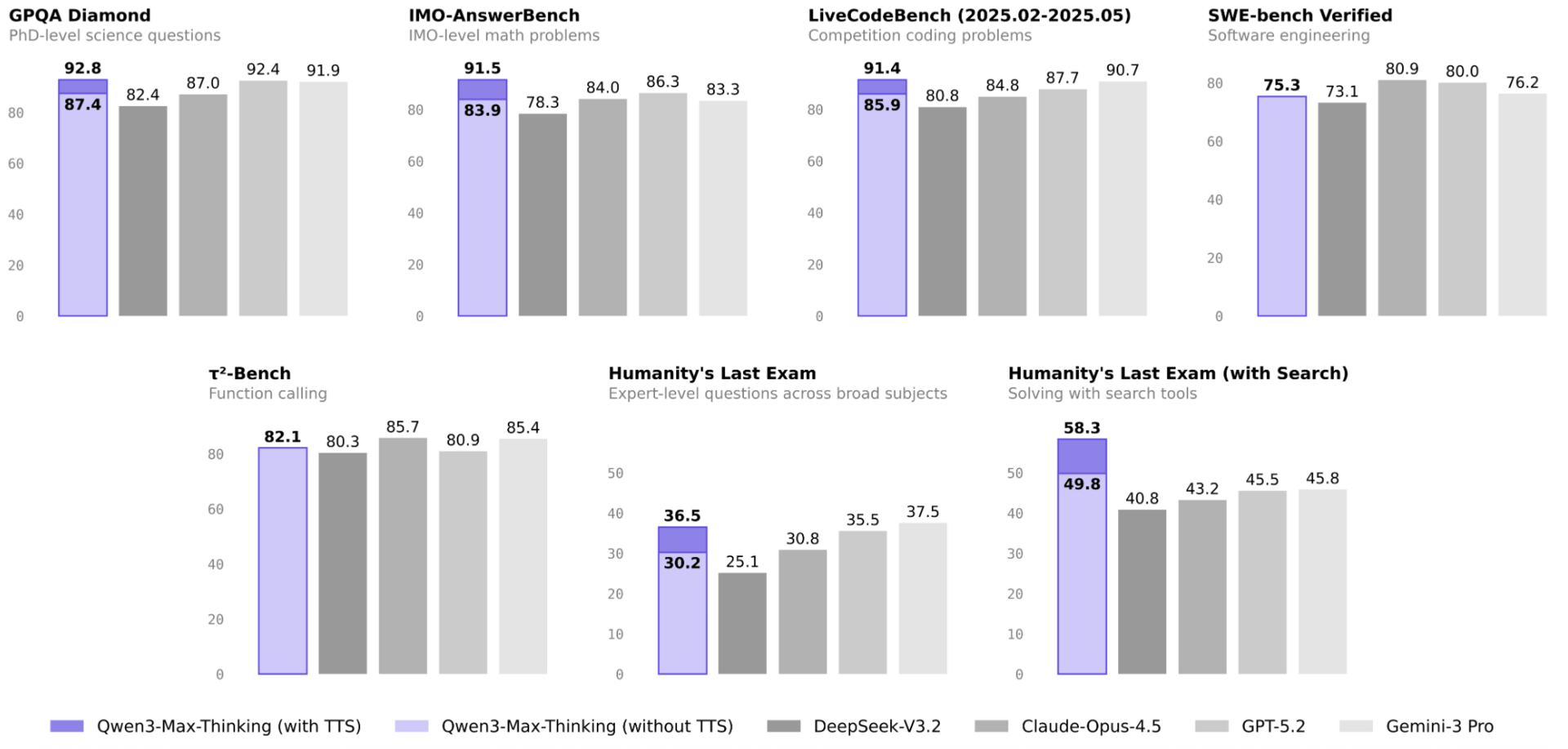

Before the next round of American model releases, which are rumored to be coming in the next two weeks, Chinese frontier AI Lab, Alibaba’s Qwen Lab, has released the latest version of its flagship model, Qwen 3 Max Thinking, a reasoning model (a model that improves performance by “thinking for longer” on every task) that basically matches most US models in most benchmark tasks.

The most interesting thing about this release, besides the obvious compute restrictions these Labs are suffering (more on this below), is the use of what they call “take experience”, a new reasoning technique.

There are two ways we increase the amount of compute we allow AI models to use for any given task:

We increase the amount of compute spent on a single try at answering

We increase the number of tries, called ‘best-of-n’ sampling

The most powerful models these days, like GPT-5.2 Pro or Gemini 3 Deep Think, the best models behind the $200/month subscription tiers, besides using other models to help with the task, extensively leverage best-of-n sampling, improving statistical coverage.

That is, if the answer is inside the model (i.e., if the model knows the answer), the more tries, the more likely it is to provide the correct answer (we usually choose the most common solution amongst all tries).
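A minimal sketch of best-of-n with majority voting looks like this. The `noisy_solver` is a made-up stand-in for a model that gets the answer right 60% of the time (that number is my assumption, purely for illustration).

```python
import random
from collections import Counter


def majority_vote(answers):
    """Return the most common answer and how many tries agreed on it."""
    winner, votes = Counter(answers).most_common(1)[0]
    return winner, votes


def best_of_n(sample_answer, n, seed=0):
    """Draw n independent tries and keep the most common answer."""
    rng = random.Random(seed)
    answers = [sample_answer(rng) for _ in range(n)]
    return majority_vote(answers)


def noisy_solver(rng):
    """Hypothetical model: right 60% of the time, otherwise a random wrong answer."""
    if rng.random() < 0.6:
        return "42"
    return rng.choice(["41", "43", "44"])


if __name__ == "__main__":
    for n in (1, 5, 25, 201):
        winner, votes = best_of_n(noisy_solver, n)
        print(f"n={n:3d}: picked {winner} with {votes} votes")
```

Note that each try costs the same, so the bill grows with `n` whether or not the extra tries add anything new.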

This works, but as you can imagine, the cost scales more or less linearly with the number of tries ‘N’ (giving O(N) complexity). Here, the very compute-starved Chinese Lab suggests taking a more subtle approach that aims to extract as much information as possible from each try. The intuition is that one try might not have gotten the solution entirely correct, but could have generated some interesting insights that could “educate” subsequent tries.

That’s exactly how this particular model behaves. It generates tries that are not actually independent; after generating the first batch of tries, it distills the lessons from those failures (probably using a surrogate, cheaper-to-run model), and adds them to the prompt for the next rounds, in the same way you learn from your previous tries when solving something like a maths problem. And, as with anything in AI that reads like common sense, it works.
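The loop described above can be sketched as follows. This is a toy, not Alibaba’s implementation: the “lessons” are plain strings, the distillation step is a one-liner instead of a cheaper model, and `toy_propose` and `forgetful_propose` are hypothetical stand-ins for the model with and without access to earlier failures.

```python
def solve_with_experience(candidates, is_correct, propose, batch_size=2, max_rounds=5):
    """Run batches of tries, feeding lessons from failed tries into later rounds.

    Returns (answer, round_found, total_tries); answer is None if never found.
    """
    lessons = []  # distilled notes from failures, carried across rounds
    total_tries = 0
    for round_idx in range(1, max_rounds + 1):
        for attempt in propose(candidates, lessons, batch_size):
            total_tries += 1
            if is_correct(attempt):
                return attempt, round_idx, total_tries
            lessons.append(f"{attempt} is wrong")  # toy "distillation" step
    return None, max_rounds, total_tries


def toy_propose(candidates, lessons, k):
    """Hypothetical model that reads the lessons and skips ruled-out answers."""
    ruled_out = {note.split()[0] for note in lessons}
    return [c for c in candidates if c not in ruled_out][:k]


def forgetful_propose(candidates, lessons, k):
    """Same model without memory: every round repeats the same tries."""
    return candidates[:k]


if __name__ == "__main__":
    options = ["41", "43", "44", "42", "45"]
    check = lambda answer: answer == "42"
    print(solve_with_experience(options, check, toy_propose))        # ('42', 2, 4)
    print(solve_with_experience(options, check, forgetful_propose))  # (None, 5, 10)
```

The version with lessons finds the answer in four tries; the forgetful one burns ten and still fails, which is the compute argument in miniature.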

In case needed, I’ve provided a more detailed explanation of this method here.

TheWhiteBox’s takeaway:

My main takeaway here is a different one, though. It’s two things, actually:

Boy, are Chinese Labs compute-constrained. I say this not because of the clever reasoning technique, but because they took so long to deliver a release that basically amounts to adding more compute to each task. What took them so long was not the “discovery,” which many other people have already tested and which has become a popular reasoning technique; it was finding the compute.

You should not care about benchmarks, because what matters is the product. It’s irrelevant if your model has great potential if you don’t have the compute to deliver that potential. China is very lucky that US Labs have not yet figured out how to sell these products to companies. But this will happen sooner rather than later, and China desperately needs the compute to compete with the US if the adoption train departs.

Closing Thoughts

Amongst all this news, I identify three main themes:

The Financial "Bloodbath". The industry is realizing that they are trapped in a "worst-case" business cycle: explosive revenue growth is being outpaced by the staggering costs of compute.

This has created a desperate dependency on massive fundraising that will not end until hardware markups, the main component of compute spending, fall to logical levels. Currently, NVIDIA sells the shovels at margins as valuable as the gold those shovels uncover. Not sustainable.

From "Models" to "Harnesses". Base AI models are becoming commoditized, shifting the competitive moat from the model itself to the "system" or "harness" built around it.

Hence, investors are pivoting toward products that improve performance through reasoning loops, social intelligence, and specific workflows (e.g., the $480M seed for humans&), rather than just raw scale. May I insist on what I always say: the real moat is the product, not the model.

China. The "compute wall", specifically, memory bottlenecks and hardware scarcity, is now the primary architect of AI design, especially for Chinese Labs that are much more compute-poor than US counterparts.

While Western labs brute-force progress with capital, Chinese labs like DeepSeek and Alibaba are innovating through efficiency-first architectures (such as Engram or take-experience), using clever retrieval and reasoning techniques to overcome their lack of high-end hardware. This is not a way for them to close the gap with the US, but the pivotal role that agent-harnessing represents today might be the way China gives the West harder battles.

Until next time!


For business inquiries, reach out to me at [email protected]