THEWHITEBOX

The Secret of A Great AI Investor

The secret to being a good AI investor boils down to one simple thing: search for the bottlenecks. And that search has led me to a company that, I believe, is savagely mispriced.

Once you see the numbers, you won’t believe the price of this stock. And the worst thing is that you know this company very well. Unsurprisingly, I’ve decided to invest in it, and today I’m going to explain why.

But to do so, we need to lay out the investment thesis, cover the main bottlenecks in detail, and then focus on that company in particular. At the end of it all, we’ll also briefly discuss what the next big bottleneck investment opportunity could be.

This newsletter’s goal is to think of the next thing coming after the next big thing. And that’s what we are doing today.

By the end, I guarantee you’ll understand the industry way more than you do right now.

So let’s dive in.

What you are about to read is not investment advice. I’m just sharing my opinions in the spirit of transparency and because many of you have shown great interest in knowing what I invest in. But please do your own research before making any decisions.

The Search for the Bottleneck

Let’s face it. Investing in stocks in 2026 is mostly about AI, whether you like it or not.

It’s all about AI

Yes, there are opportunities outside of AI, but not that many. In fact, the best performer of 2025 was none other than “boring” flash memory company Sandisk, which 10xed in a year (and has since doubled in January alone).

Today you are going to learn why that is, and also why, funnily enough, the “secret” company I’ve invested in is not Sandisk and won’t be. I’m not a Sandisk shareholder, nor will I be one in the near term.

Another victor was Palantir, again because of its AI exposure. And what about the Hyperscalers?

All their investors care about is whether their AI spending is too much or too little and whether their AI cloud divisions are growing, scouring every report for any sign of AI revenue. It’s all about AI in the US, even though every one of these companies has a booming non-AI business worth $100 billion a year.

And don’t get me started on Asian indices. Taiwan is AI leverage on steroids. The same applies to Korea. Even Japan, which plays a low-key yet very important role in AI, is heavily exposed to the ‘AI trade’.

AI. AI. AI. And once SpaceX (which has acquired xAI today), OpenAI, and Anthropic enter the public scene with trillion-dollar IPOs, who will care about anything but AI?

But how do you navigate this AI trade? What is a good AI investment, and what is blatant speculation, a disaster waiting to happen?

What’s the key when investing is mostly about narrative and not that much about earnings?

And the answer is bottlenecks.

The bottleneck thesis

Every single big winner in AI to date has come from a select group of ‘AI supply chain’ bottlenecks.

Because while AI is seriously commoditizing software (even the AI itself is largely commoditized), if you go up the chain, things are anything but commoditized; the entire industry hinges on a handful of companies doing their jobs and not shitting the bed.

These are companies whose production capacity represents a hard limit, an upper bound on what can be produced.

Of course, you know some of them by name, like TSMC for chip manufacturing and advanced packaging, or ASML for EUV lithography. Interestingly, though, you’ve probably never heard of some of the others.

Did you know there are only two companies in the world capable of making HBM stack-probing cards? FormFactor and Micronics Japan.

They are key to keeping NVIDIA’s yields under control, because they test the memory chip stacks before they are delivered to TSMC for advanced packaging. Unsurprisingly, both stocks have been stellar performers despite the companies’ small size.

And examples like these abound, as the semiconductor supply chain is, without a doubt, the most specialized supply chain in human history. Each process is so complicated and requires such meticulous care (we are living in the nanometer world, remember!) that companies just specialize in one thing and call it a day.

And with AI making semiconductors the world's most valuable supply chain, it takes no genius to realize that the key is to identify bottlenecks.

Bottlenecks are great. Not only do they create great narratives, but they are also great for earnings, because everyone simply has to eat your price hikes. In other words, a bottleneck gives a company a free ticket to expanding profits: revenue growth is all but guaranteed, and so is the ability to raise prices.

A perfect recent example is memory pricing (we’ll see why in a minute):

- Samsung (memory) increased pricing sharply during the 2025 shortage: Reuters reported on November 14, 2025, that Samsung raised prices of certain server memory chips by roughly 30% to 60% versus September; the same Reuters piece cites contract examples like 32GB DDR5 modules rising to $239 in November from $149 in September.

- DRAM (industrywide contract pricing power) jumped into early 2026: TrendForce told Reuters it now expects DRAM prices to surge 90% to 95% in Q1 2026 versus the prior quarter, explicitly attributing the move to AI/data-center demand, tightening supply, and greater pricing power for suppliers.

- DDR5 DRAM (spot/contract benchmarks) showed “multiple-hundreds-of-percent” inflation by late 2025 and further hikes into 2026: Reuters reported on January 6, 2026, that prices for a type of DDR5 DRAM jumped 314% in Q4 2025 versus a year earlier, and that TrendForce expected conventional DRAM contract prices to rise another 55% to 60% in the current quarter (Q1 2026) versus Q4.

- NAND/enterprise SSD (supplier pricing action reported by industry press) also saw extreme attempted hikes into 2026: Tom's Hardware reported on January 9, 2026 (citing channel checks attributed to Nomura), that Sandisk planned to double (100%+) the price of 3D NAND used for enterprise SSDs in Q1 2026.

- Samsung has raised prices on its NAND products by 100% this quarter.
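Percentage headlines are easy to misread, because a percentage increase and a price multiple are not the same thing. Here is a quick sketch using the Reuters figures quoted above (the $149 to $239 DDR5 module and the 314% year-over-year jump); the helper names are illustrative, not from any library:

```python
def pct_change(old: float, new: float) -> float:
    """Fractional change from old to new (0.6 means +60%)."""
    return (new - old) / old


def multiple_from_increase(increase: float) -> float:
    """Price multiple implied by a fractional increase (3.14 -> 4.14x)."""
    return 1.0 + increase


# 32GB DDR5 module: $149 in September -> $239 in November (Reuters)
ddr5_module = pct_change(149.0, 239.0)
print(f"DDR5 module: {ddr5_module:+.1%}")

# A 314% year-over-year jump means prices are ~4.14x what they were
print(f"314% increase = {multiple_from_increase(3.14):.2f}x")
```

The module works out to about +60%, the top of Reuters' 30% to 60% range, and a "314%" headline means prices roughly quadrupled, not tripled.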

Unsurprisingly, and as I’ve been telling premium subscribers for months now, things are getting interesting in the memory arena. Indeed, since we announced that we were investing in SK Hynix, the stock has risen more than 60%.

By now, I hope you got the memo: search for the bottlenecks. Fine, but where are they?

There are many bottlenecks we won’t get into today. We could cover the entire supply chain in detail, but that would make this article far too long, so you’ll have to trust me on this: the three biggest bottlenecks are chip manufacturing, advanced packaging, and memory.

The bottlenecks we are discussing today are all large and growing markets. Advanced chip manufacturing has an expected compound annual growth rate (CAGR) of 18%, HBM between 20 and 30%, and advanced packaging between 15 and 20%. So companies “bottlenecking” these markets have huge revenue growth and earnings potential.
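A CAGR compounds, so modest-sounding rates add up quickly. A minimal sketch of what the growth rates above imply for market size; the five-year horizon is an assumption chosen purely for illustration, not a forecast:

```python
def growth_multiple(cagr: float, years: int) -> float:
    """How many times larger a market gets after `years` at a constant CAGR."""
    return (1.0 + cagr) ** years


HORIZON_YEARS = 5  # illustrative horizon (assumption)

for market, cagr in [
    ("Advanced chip manufacturing", 0.18),
    ("HBM (low end)", 0.20),
    ("HBM (high end)", 0.30),
    ("Advanced packaging (low end)", 0.15),
    ("Advanced packaging (high end)", 0.20),
]:
    print(f"{market}: {growth_multiple(cagr, HORIZON_YEARS):.2f}x")
```

At 18% a year, advanced chip manufacturing more than doubles in five years (about 2.29x), while the top of the HBM range compounds to roughly 3.7x.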

The advanced manufacturing bottleneck

The first is the process of taking the designs from chip designers (‘fabless’ companies like NVIDIA, AMD, Apple, and so on) and using them to manufacture the compute die, the actual physical chip.

Memory companies generally both design and manufacture their own chips (Micron and SK Hynix do use TSMC to build the HBM base die, but we won’t go into that today).

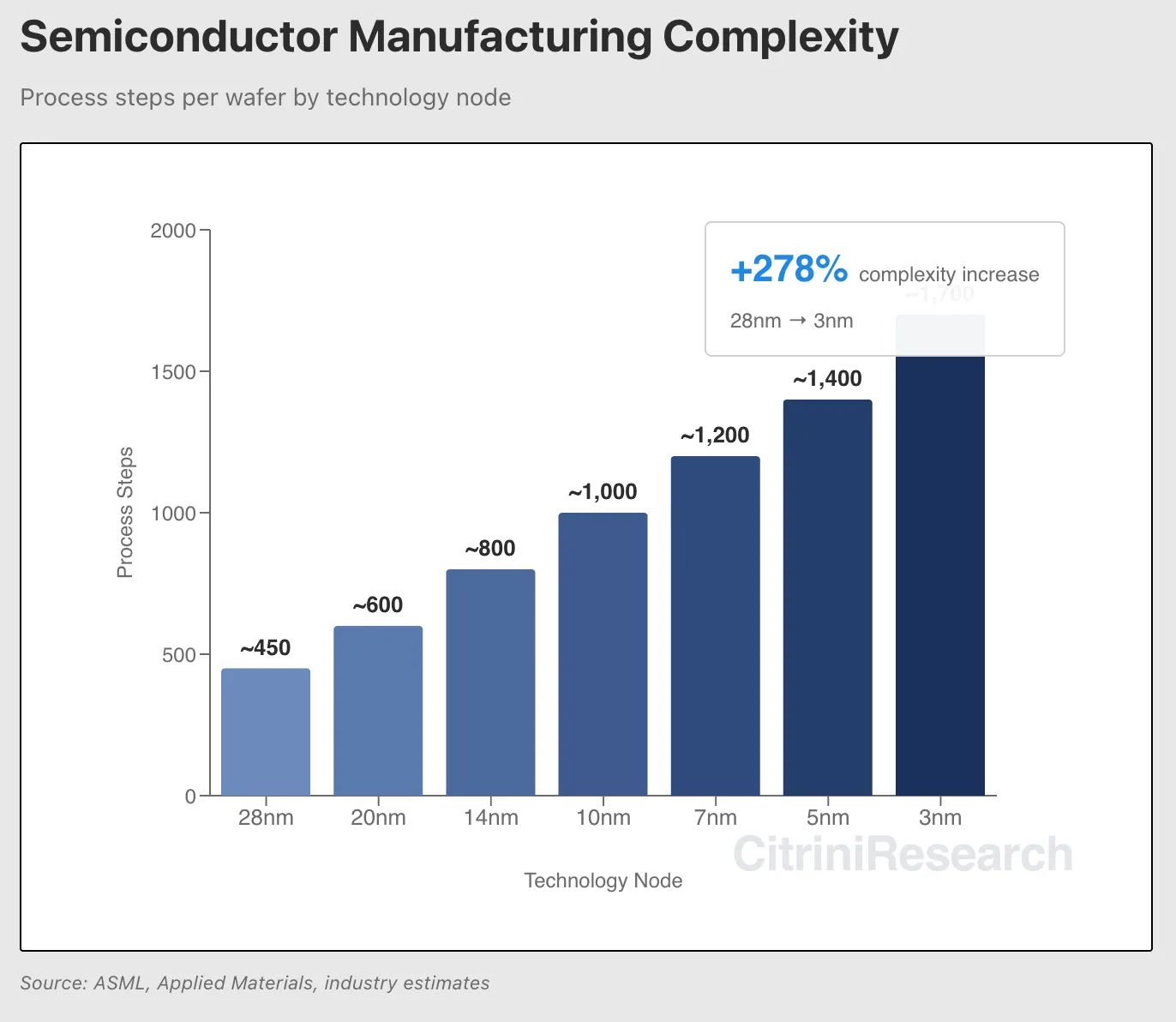

Building the most advanced chips, in the 3-4 and even 2 nanometer range, now takes months from start to finish, as it requires a large (and growing) number of steps: more than 1,700 for the 3nm processes now used to fabricate the most powerful GPUs, like Rubin.

Each of these generations is called a ‘process node’, and its name traditionally referred to the size of the transistors used in chips (today, node names are more of a marketing label than a literal measurement). The smaller the transistors, the more of them you can pack into a single chip. The more transistors you have, the more circuits you can fit on a single die. And the more circuits, the more computing power the chip has.

Leading-edge chiplets now pack on the order of a hundred billion transistors into roughly 800 mm², a die under 3 centimeters wide, which should give you an idea of how insanely small these transistors are.
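To make the scale concrete, here is the back-of-the-envelope arithmetic, assuming a round 100 billion transistors on an 800 mm² die (illustrative figures, not the spec of any particular chip). It ignores wiring, SRAM, and I/O area, so it only gives an average footprint per transistor:

```python
import math

DIE_AREA_MM2 = 800.0   # assumed die area, close to the lithography reticle limit
TRANSISTORS = 100e9    # assumed transistor count (illustrative round number)

side_mm = math.sqrt(DIE_AREA_MM2)            # edge length of a square die
area_nm2 = DIE_AREA_MM2 * 1e12               # 1 mm^2 = 1e12 nm^2
per_transistor_nm2 = area_nm2 / TRANSISTORS  # average footprint per transistor
pitch_nm = math.sqrt(per_transistor_nm2)     # side of that footprint as a square

print(f"Die edge: {side_mm:.1f} mm")
print(f"Average footprint: {per_transistor_nm2:,.0f} nm^2 (~{pitch_nm:.0f} nm on a side)")
```

That is roughly a 90 nm square per transistor, including all the space around it, on a die about 28 mm wide.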

For a more visual representation of these sizes, check this YouTube video that beautifully represents how crazy all this is.

The problem is that the smaller the transistors, the more steps the chiplet's fabrication process requires, as shown above. More steps naturally mean longer build times, but the biggest impact is on yield.

‘Yield’ is a crucial concept in semiconductor manufacturing: the processes are so complex that some chips inevitably come out faulty or even broken. Only a fraction of the chips on the wafers that enter the process (the ‘wafer starts’) end up working, and that fraction is the process’s “yield”.
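Why do more steps hurt yield so much? Consider a deliberately simplified toy model (an assumption: real fabs do not lose yield uniformly per step, and defects land on parts of a wafer rather than whole lots) where each of the ~1,700 steps independently succeeds with probability p, so overall yield is p raised to the number of steps:

```python
import math


def overall_yield(step_yield: float, steps: int) -> float:
    """Toy model: a chip survives only if every independent step succeeds."""
    return step_yield ** steps


def required_step_yield(target: float, steps: int) -> float:
    """Per-step success rate needed to hit a target overall yield."""
    return math.exp(math.log(target) / steps)


STEPS = 1_700  # step count cited above for 3nm-class processes

print(f"99.99% per step -> {overall_yield(0.9999, STEPS):.1%} overall")
print(f"90% overall needs {required_step_yield(0.90, STEPS):.4%} per step")
```

In this model, a 0.01% failure rate per step already costs about one chip in six, and hitting 90% overall requires every step to succeed about 99.994% of the time.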

All this makes the process so delicate that most of the world’s leading chip designers entrust it to a single company: TSMC, or Taiwan Semiconductor Manufacturing Company, which doesn’t design chips at all but builds the chips behind some of the most powerful devices, like NVIDIA’s GPUs or Apple’s MacBooks and iPhones.

Yields on TSMC’s most advanced process nodes are currently estimated at around 90%.

Everything goes through them. And while TSMC has significantly increased its capital expenditures (it expects almost $200 billion over the next three years), demand for new chips is so high that it can’t keep up. In fact, its CEO, CC Wei, recently stated that demand was about three times supply.

You would then assume manufacturers are investing every single dollar they make to expand capacity, right? Think again. The truth is that we have to clearly distinguish how ‘AI-pilled’ different companies are.

While Hyperscalers like Microsoft are extremely AI-pilled, meaning they pay any price and invest as much as they can in their AI journey, semiconductor manufacturers, which aren’t nearly as rich, play it safer: they first rack up future orders and only then commit to expansions.

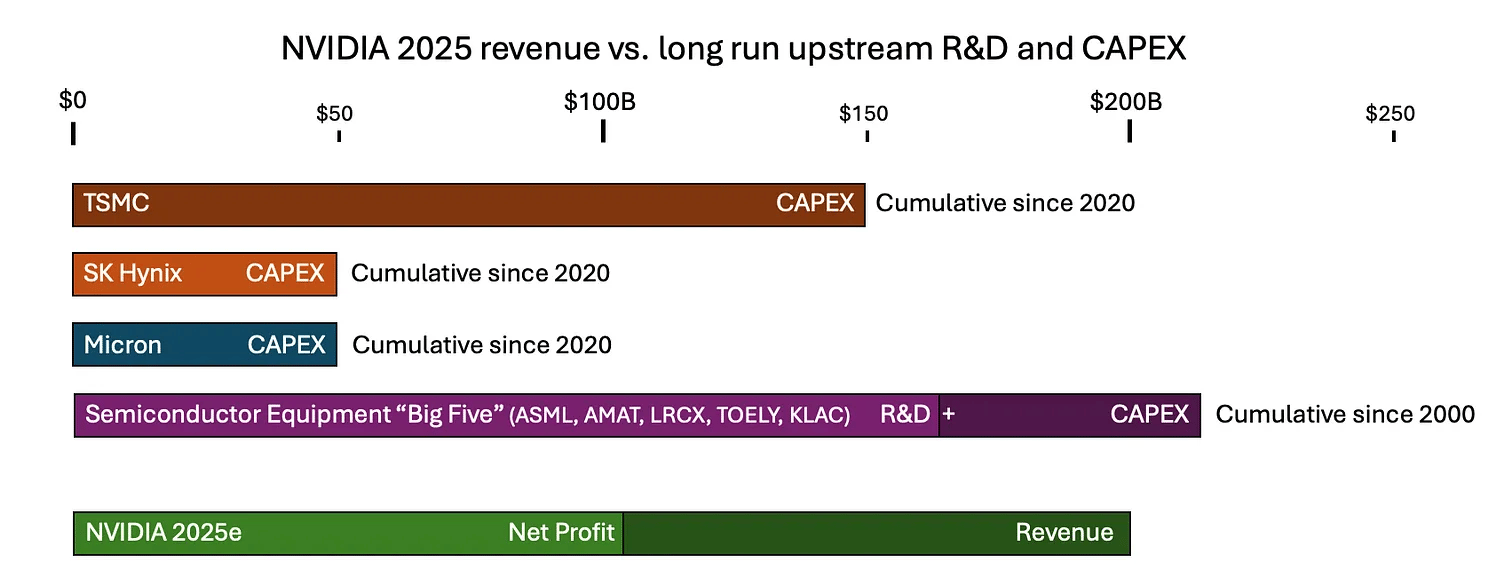

To put this into perspective, as the chart shows, NVIDIA’s revenue in 2025 was larger than TSMC’s, SK Hynix’s, and Micron’s cumulative CapEx since 2020, and almost as large as the cumulative expenditures of the Big Five semicaps (the biggest semiconductor equipment companies, led by ASML)… since the year 2000!

In terms of money, Big Tech speaks another language, so seeing TSMC commit almost $70 billion per year is exactly the kind of news you want as an investor: coming from such a cautious spender, it means they must be seeing unparalleled demand.

And while chip manufacturing is already a bottleneck, advanced packaging is a different beast entirely. But what is it?

Packaging was traditionally a “low-value” part of the process, where the chip was encased to protect it and improve heat dissipation. But the huge computational and memory demands of modern workloads and software have turned it into a manufacturing bottleneck.

The problem is twofold:

- One compute die is not enough. Since chip size is capped by the lithography reticle limit (roughly 858 mm²) and by yield, modern GPUs and TPUs have two (and soon, up to four with the Rubin Ultra chip) compute chiplets stitched together side by side (known as 2.5D packaging).

- The amount of on-chip memory (each GPU chiplet has, at most, a few hundred megabytes of SRAM) isn’t remotely close to meeting the requirements of modern AI (more on this in a second).
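The first point is partly a yield story. A common textbook approximation, the Poisson yield model, estimates the share of defect-free dies as Y = exp(-A·D0), where A is die area and D0 is defect density. The defect density below is an assumed, illustrative value, not a TSMC figure:

```python
import math


def poisson_yield(area_cm2: float, defects_per_cm2: float) -> float:
    """Poisson model: probability that a die of this area has zero killer defects."""
    return math.exp(-area_cm2 * defects_per_cm2)


D0 = 0.1  # assumed defect density (defects per cm^2), for illustration only

for area_mm2 in (200, 400, 800):
    y = poisson_yield(area_mm2 / 100.0, D0)  # 100 mm^2 = 1 cm^2
    print(f"{area_mm2} mm^2 die: {y:.0%} expected yield")
```

Under these assumptions, yield falls from roughly 82% to 67% to 45% as the die grows, because a bigger die catches more defects. Combined with the hard reticle cap, the practical way to get more silicon into one product is to package several dies together, which is exactly what pushes the problem onto advanced packaging.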

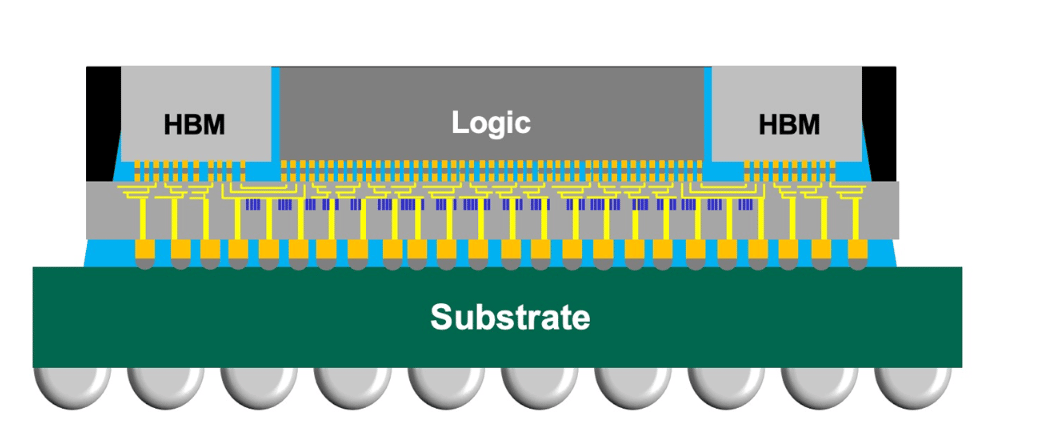

This means the definition of ‘package’ has changed. Now, a GPU package is a combination of many things:

- The compute dies, placed next to each other (thereby behaving as one)

- A set of off-chip DRAM chip stacks, using a technology known as HBM (High Bandwidth Memory), which we’ll talk about in a minute

- A silicon interposer, a thin layer of silicon that connects the compute dies to the HBM stacks through extremely tiny wires (shown in yellow below)

- An organic substrate that provides sufficient rigidity to the entire system (the interposer connections are extremely delicate), as well as connections to the rest of the system

Source: TSMC

As you may have guessed, this process is very complicated, so yields can fall into the low 70% range. And that’s at TSMC, the absolute leader with its CoWoS (Chip-on-Wafer-on-Substrate) process; other foundries have far lower yields.
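Packaging yield compounds with the quality of every component placed in the package, which is why the HBM probe cards mentioned earlier matter so much. A minimal sketch, assuming eight HBM stacks per package, a hypothetical 95% chance that an untested stack is good, and a 72% packaging yield echoing the low-70s figure above; all three numbers are illustrative assumptions:

```python
def package_yield(packaging_yield: float, component_yields: list[float]) -> float:
    """Share of working packages: assembly must succeed AND every part must be good."""
    result = packaging_yield
    for y in component_yields:
        result *= y
    return result


PACKAGING_YIELD = 0.72      # assumed assembly yield
HBM_STACKS = 8              # assumed stacks per package
UNTESTED_STACK_GOOD = 0.95  # hypothetical share of good stacks without probing

untested = package_yield(PACKAGING_YIELD, [UNTESTED_STACK_GOOD] * HBM_STACKS)
known_good = package_yield(PACKAGING_YIELD, [1.0] * HBM_STACKS)  # stacks probed first

print(f"Untested stacks:   {untested:.1%}")
print(f"Known-good stacks: {known_good:.1%}")
```

In this toy example, screening stacks before assembly lifts good packages from under half (about 47.8%) to the packaging yield itself, and every package lost to a bad stack also wastes the expensive compute dies bonded next to it.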

Advanced packaging yields are what have set China back the most in the past few years.

The technological complexity, combined with years of prescient, arduous investment, has solidified TSMC’s enviable position in both areas. However, a change might be emerging in 2026, as we’ll explain later.

The other big bottleneck, memory

It’s important that we discuss the memory bottleneck as well, because it’s a huge one. You don’t have to take my word for it; here’s Intel’s CEO to remind us, in a message summarized in the tweet below:

As we hinted a few moments ago, part of the complexity of advanced packaging is that we need to connect our compute dies to our memory dies as closely as possible, forcing off-chip memory to reside within the package.

The reason is that AI workloads, especially inference, move an abnormally large volume of data. This means memory has to either reside on the chip itself (fastest access, but very limited capacity) or as close to it as possible, which means inside the package, with a very delicate silicon interposer connecting the two.

And to the surprise of no one, HBM capacity per GPU has done nothing but skyrocket, rising from 40 GB in 2020 to 288 GB for upcoming GPU generations and up to 1 terabyte for Rubin Ultra, slated for 2027.

The memory bottleneck is so immense that it’s also driving up the amount of flash and hard disk storage in AI servers, turning these mature, boring markets into fully fledged AI plays. The prime example is Sandisk, the stock that seems to never stop rising.

But here’s the thing: I am not invested in Sandisk, nor will I be. To understand why, we first need to understand why the stock is rising and, second, why, in my opinion, people are severely overextending it. Then I’ll present what I believe is the real investment opportunity based on fundamentals, not highly speculative trading.
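A rough way to see why data movement dominates: when a model generates text one token at a time for a single user, essentially every weight must be read from memory for each token, so memory bandwidth caps the token rate. A back-of-the-envelope sketch; the 70-billion-parameter model, 1 byte per parameter (8-bit weights), and 8 TB/s of HBM bandwidth are assumptions for illustration, and the bound ignores batching, the KV cache, and compute limits:

```python
def max_tokens_per_second(params: float, bytes_per_param: float,
                          bandwidth_bytes_per_s: float) -> float:
    """Upper bound on single-stream decode speed if all weights are read once per token."""
    bytes_per_token = params * bytes_per_param
    return bandwidth_bytes_per_s / bytes_per_token


PARAMS = 70e9          # assumed model size (parameters)
BYTES_PER_PARAM = 1.0  # assumed 8-bit weights
HBM_BW = 8e12          # assumed memory bandwidth, bytes per second

bound = max_tokens_per_second(PARAMS, BYTES_PER_PARAM, HBM_BW)
print(f"At most ~{bound:.0f} tokens/s per stream")
```

The ceiling comes out at about 114 tokens per second, and it scales linearly with bandwidth: halve the bandwidth and the ceiling halves too, no matter how fast the compute dies are.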
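Run in reverse, compounding math gives the annual growth rate implied by those capacity figures. A sketch treating 1 TB as 1,000 GB and the span from 2020 to Rubin Ultra’s 2027 target as seven years (both simplifying assumptions):

```python
def implied_cagr(start: float, end: float, years: float) -> float:
    """Constant annual growth rate that turns `start` into `end` over `years`."""
    return (end / start) ** (1.0 / years) - 1.0


HBM_2020_GB = 40.0       # per-GPU HBM in 2020, as cited above
HBM_2027_GB = 1000.0     # Rubin Ultra's 1 TB, approximated as 1,000 GB
YEARS = 2027 - 2020

print(f"Capacity multiple: {HBM_2027_GB / HBM_2020_GB:.0f}x")
print(f"Implied growth: {implied_cagr(HBM_2020_GB, HBM_2027_GB, YEARS):.0%} per year")
```

That is a 25x increase, or roughly 58% a year per GPU, before even counting growth in the number of GPUs shipped.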

Subscribe to Full Premium package to read the rest.
