THEWHITEBOX

State of AI: March 2025

Recently, the AI industry has become pure chaos. It’s hard to know who’s ahead, what’s true and what’s false, what trends are actually moving the needle, or what markets are thinking.

But wouldn’t it be nice if you could utter the phrase “OK, now I understand where things stand in AI”?

Today, I want to give you just that by sharing my honest opinion on the current state of affairs in three main areas: Technology (what trends we are seeing in hardware and software), Markets (how markets are reacting/should be reacting), and Product (how products are evolving), to answer the big questions everyone is asking:

Are models really commoditizing?

Will Anthropic’s hybrid models force OpenAI to rethink its model strategy?

Are agents actually what they are claimed to be?

An honest, crude look at AI revenues and the state of markets

And the latest innovations in robotics and intelligence efficiency, the most important trend in 2025.

By the end of this read, you’ll have a better intuition than most about what’s going on, built from first principles, like we always do.

MODEL COMMODITIZATION

One Commoditized, One Not?

If you read this newsletter regularly, you already know, but just in case, here's a quick recap on AI models. We currently have two prominent types: reasoning and non-reasoning.

The former thinks for longer on a given task, allowing it to improve performance in maths and coding; the latter gives you a one-shot, immediate response, good for intuitive tasks like writing, creative iteration, etc.

It’s important to note that the reasoning model is a non-reasoning model that has undergone ‘multi-step reasoning training’. At heart, they are identical; the only change is that the reasoning model has learned to approach problem-solving more step-by-step, allowing it to explore different ways to solve the problem. For instance, OpenAI’s o3 reasoning model is GPT-4o (non-reasoning model) post-trained for reasoning.

Currently, there is a growing belief that frontier AI models, in general, are commoditizing. However, it is not happening the way you might expect.

The commoditization pressures (models become undifferentiated, making competition a matter of price) are much stronger on reasoning models, despite these being the newer model type.

For instance, while OpenAI still has the best reasoning model (o3, but it’s unreleased), their most powerful released reasoning model, o1-pro, has similar performance results to other available models, like Grok 3 Reasoning, DeepSeek R1, or Alibaba’s just-released QwQ Reasoning, the latter’s performance shown below:

This commoditization is happening because they have all converged on the same training method: guess and verify.

DeepSeek’s release of R1 (and, before it, the Allen Institute’s release of Tulu) brought this method into the limelight. It essentially involves training the model on examples that can be verified for correctness. Instead of using different training hacks, they let the model “bang its head against the wall” through brute trial and error until it learns to solve the problem.
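To make “guess and verify” concrete, here is a toy sketch in Python. It is not DeepSeek’s (or anyone’s) actual training code: the “model” is just a table of weights over a handful of candidate answers, and `verify`, `train`, and the update rule are hypothetical names I made up for illustration. The only idea it captures is that the reward comes from an automatic correctness check, not from human-written reasoning examples.

```python
import random

def verify(problem, answer):
    """Verifiable reward: 1.0 if the answer is exactly right, else 0.0."""
    a, b = problem
    return 1.0 if answer == a + b else 0.0

def train(problem, candidates, steps=500, lr=0.5, seed=0):
    """Toy trial-and-error loop: sample a guess, check it, reinforce it if correct."""
    rng = random.Random(seed)
    weights = {c: 1.0 for c in candidates}  # stand-in for the model's policy
    for _ in range(steps):
        # Guess: sample an answer in proportion to the current weights.
        guess = rng.choices(list(weights), weights=list(weights.values()))[0]
        # Verify: an automatic check decides the reward, no human label needed.
        reward = verify(problem, guess)
        # Only verified-correct guesses gain weight.
        weights[guess] += lr * reward
    return weights

weights = train(problem=(17, 25), candidates=[40, 41, 42, 43, 44])
best_guess = max(weights, key=weights.get)
print(best_guess)
```

Wrong guesses are never penalized here, they simply never get reinforced, so the correct answer ends up dominating the sampling. Real pipelines update billions of parameters instead of five weights, but the loop has the same shape.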

This method was adopted fairly quickly. OpenAI acknowledged its use based on its research, and Alibaba also openly admitted that it was QwQ's training method.

Long story short, the recipe for training frontier reasoning models is publicly known, and notably, the resources required to achieve frontier-level reasoning capabilities are fairly affordable compared to non-reasoning models.

Closing the reasoning gap is more affordable for two reasons we have already covered. First, reasoning models are smaller (and thus faster), because thinking for longer lets smaller models catch up with larger models that think for less.

Additionally, the data required to turn a non-reasoning model into a reasoning model is surprisingly small. s1 required just 1,000 examples to reach borderline-frontier performance, and DeepSeek used just 800k (a very small dataset, trust me) to train R1.

Interestingly, the story is different for non-reasoning models: they aren’t really commoditizing because the differentiator is not the algorithm; it’s capital.

Improvements have become clearly logarithmic in the amount of resources used to train non-reasoning models (doubling the training spend does not produce 2x results), which might suggest commoditization pressures. The reality is quite the opposite: while the differences between models appear less evident, the “efforts”, both technical and economic, to train “the next big non-reasoning model” are skyrocketing.
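A quick back-of-the-envelope sketch of what “logarithmic” means in practice. The formula and every number in it (`base`, `gain_per_10x`) are made up for illustration and do not come from any lab’s data; the point is only the shape of the curve.

```python
import math

def quality(compute, base=50.0, gain_per_10x=5.0):
    """Hypothetical benchmark score that grows with the log of training compute."""
    return base + gain_per_10x * math.log10(compute)

# Relative compute budgets: 1x is the reference model.
for compute in (1, 2, 10, 100):
    print(f"{compute:>4}x compute -> score {quality(compute):.1f}")

gain_from_doubling = quality(2) - quality(1)
gain_from_10x = quality(10) - quality(1)
gain_from_next_10x = quality(100) - quality(10)
```

Under this assumption, going from 1x to 10x buys exactly the same number of points as going from 10x to 100x, while the bill grows tenfold each time. That is why every extra point gets more expensive, and why fewer and fewer labs can afford the next one.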

For instance, OpenAI dedicated enormous efforts to training GPT-4.5, using 10 times more compute than GPT-4. This required multi-data center training and hundreds of millions of dollars in compute and data, and the model itself is enormous, making it horribly expensive to serve (hence the insane prices).

The point is that while GPT-4.5 is not necessarily orders of magnitude better than other non-reasoning models, it is mostly better (it is more knowledgeable and less hallucination-prone, the two key metrics in non-reasoning models). And the issue is that matching that effort becomes increasingly expensive for other labs, which have to resort to hundreds of billions of dollars of spending, making non-reasoning models a game only a few can play.

That’s not commoditization; that’s consolidation.

Sure, most open-source players will try to close the gap via teacher-student imitation, known as distillation. In this method, another model (student) is trained using outputs from the larger, smarter model (teacher), becoming a Pareto-optimized version of the model (80% of the performance, 20% of the price). This is how DeepSeek V3 was trained, for instance, hence why it was so cheap to train (it wasn’t the ‘Chinese engineering magic’ that markets made it look like).
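For intuition, here is a minimal sketch of one common form of distillation: nudging the student’s output distribution toward the teacher’s. It is a toy with three “tokens” and made-up logits, not how DeepSeek or any lab actually runs it (in practice the student is often simply fine-tuned on text sampled from the teacher). `distill_step` and the learning rate are my own illustrative choices.

```python
import math

def softmax(logits):
    """Turn raw scores into a probability distribution."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def kl_divergence(p, q):
    """How far the student's distribution q is from the teacher's p."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def distill_step(student_logits, teacher_probs, lr=1.0):
    """One gradient step on the cross-entropy between teacher and student."""
    student_probs = softmax(student_logits)
    # Gradient of that cross-entropy w.r.t. the logits is (student - teacher).
    return [z - lr * (p - t)
            for z, p, t in zip(student_logits, student_probs, teacher_probs)]

teacher_probs = softmax([2.0, 0.5, -1.0])  # made-up teacher output over 3 tokens
student_logits = [0.0, 0.0, 0.0]           # the student starts out clueless (uniform)

kl_before = kl_divergence(teacher_probs, softmax(student_logits))
for _ in range(500):
    student_logits = distill_step(student_logits, teacher_probs)
kl_after = kl_divergence(teacher_probs, softmax(student_logits))
print(f"KL before: {kl_before:.4f}, after: {kl_after:.8f}")
```

The student matches the teacher on this one input almost perfectly, and cheaply. What the toy cannot show is the catch: the student only learns what the teacher already outputs, so it can approach the teacher but has no way to surpass it.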

But that doesn’t close the gap, like, at all. Lunch is never free: distillation makes the business of making money harder for OpenAI, as their advantage is less perceivable at first glance, but unless price is all you care about, the teacher continues to be smarter, period.

Consequently, yes, we are seeing commoditization, but only on reasoning models. For non-reasoning models, while the unit economics will become harder and harder to justify, they aren’t commoditizing but consolidating.

But what if both paradigms merge?

HYBRID MODELS

The Rise of Hybrid Models vs Systems.

Up until recently, having non-reasoning and reasoning behavior meant having two models. OpenAI has the GPT-4o/4.5 and o1-o3 families, xAI has Grok 3 and Grok 3 Reasoning, Google has Gemini 2.0 (Flash/Pro) and Gemini 2.0 Flash Thinking, and so on.

The reason is that as the model undergoes reasoning training, it “loses” its capability to think less when the situation requires it; it becomes sort of an overthinker.

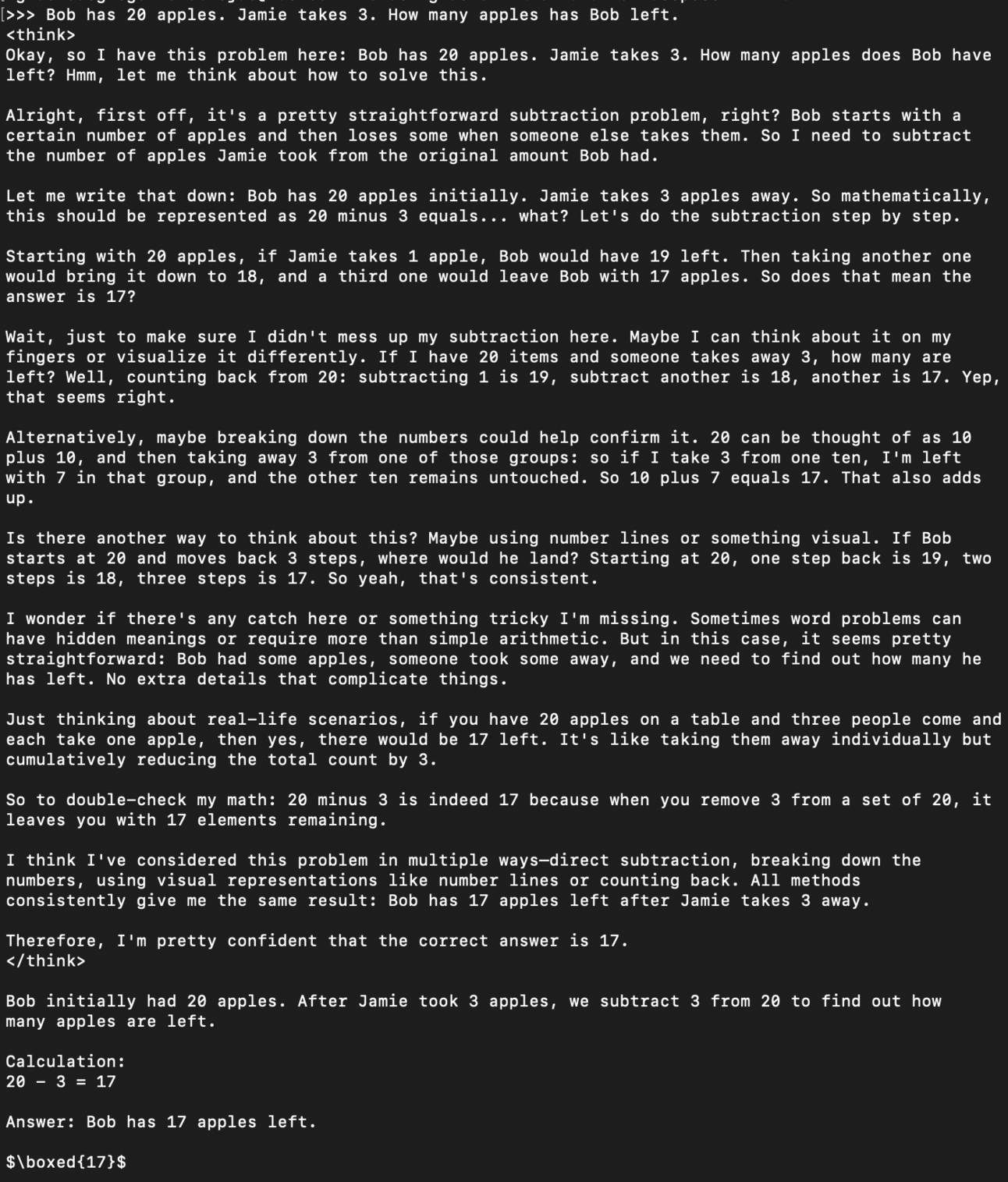

I believe the below interaction I had with a reasoning model is pretty illustrative:

Additionally, some of these products are systems. For instance, GPT-5, OpenAI’s soon-to-be-released new product, is not a model but a system of models, especially when you interact with its reasoning models.

They aren’t a single model but a combination of two or more models: a generator of thoughts and one or more verifiers, surrogate models that evaluate the correctness of the generator's thoughts and guide it through its thinking.
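A toy sketch of the generator-plus-verifier idea, in its simplest “best of N” form. Everything here is a stand-in I made up: the real generator and verifiers are neural networks, OpenAI has not published how its systems are wired, and this toy verifier cheats by knowing the right answer, whereas a real one can only estimate correctness.

```python
import random

def generator(question, rng):
    """Stand-in for the thought generator: proposes an answer, sometimes slightly off."""
    a, b = question
    return a * b + rng.choice([-2, -1, 0, 0, 1, 2])

def verifier(question, candidate):
    """Stand-in for a verifier model: a higher score means 'more likely correct'."""
    a, b = question
    return -abs(candidate - a * b)

def answer_with_system(question, n_candidates=32, seed=0):
    """Generate several candidates and let the verifier pick the best one."""
    rng = random.Random(seed)
    candidates = [generator(question, rng) for _ in range(n_candidates)]
    return max(candidates, key=lambda c: verifier(question, c))

result = answer_with_system((12, 13))
print(result)
```

A single call to the generator is right only about a third of the time, yet the system as a whole is almost always right. That is the appeal of systems over single models, and also why they are more expensive to run: every answer costs many generations plus the verification passes.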

For more on this, read my deep dive into OpenAI’s o3: is it really AGI?

Currently, model selection (choosing which model to use each time) is up to the user, but this will soon change. For instance, OpenAI plans to use a smart router that redirects each task to the best model.

But Anthropic seems to have figured out how to make their latest model, Claude 3.7 Sonnet, behave as a non-reasoning and reasoning model, depending on the task. Just like the human brain switches between fast and slow thinking (or immediate responses versus multi-step reasoning), Anthropic’s models, called hybrids, do the same.

Therefore, we are seeing two worlds collide: increasingly complex systems of models versus ‘task-aware’ models that switch between both form factors. Due to their obvious advantages in terms of infrastructure deployment, hybrids are much more likely to dominate the industry eventually, so I think Anthropic is in the right here (additionally, they avoid the complex idea of smart routing that OpenAI intends to deploy for GPT-5’s release).

That said, the obvious next step for me is multi-agent environments, where several hybrid models interact with each other to solve a particular task. We aren’t quite there yet, though.

No paradigm is generating more over-the-top headlines than agents. But are they really that good?

AGENTIC AI

Agents, Yes… And No

When you look at social media like LinkedIn or X, based on the claims of some people, agents are the second coming of Christ. People depict the amazing stuff they’ve built using agents, and how the world is changing so fast that most won’t keep up.

The truth?

Agents are still mostly glorified garbage. Some tools are really impressive, yes, but the framing they are being given has nothing to do with reality. Agents, by definition, are autonomous, but the “agents” we have aren’t, which makes them tools, not the fully autonomous software some claim them to be.

For instance, one agent technology that has been making waves recently is the Chinese Manus. This agentic product, a Claude wrapper, lets you run several tasks in parallel, with the agent working on each of them for as long as it takes. The model can think for a long time and has a very well-crafted tool execution that lets it perform a wide range of actions.

And yes, some results are really impressive. But at the end of the day, the vibe coding examples being presented (when users let the AI do all the coding) always end up in fun toy examples with all the bells and whistles but zero utility.

If you want to build actual products, humans are still required. To drive this idea home, I can use myself as a perfect example. Recently, I trusted Claude Code (quite possibly the best agent tool out there) with a simple code repository and the task of refactoring it entirely.

Six minutes and $5.50 later (agents consume an insane amount of tokens, increasing costs considerably), everything looked immaculate, yet nothing worked. That’s the issue with agents today: if you let them do their thing, they create stuff that looks amazing but doesn’t work.

Using agents today in fully autonomous mode lets you create garbage wrapped in gift paper, which gets you clicks on social media but makes your product totally useless.

Don’t get me wrong, agents are useful when you guide them, but that’s clearly not the vision or the claims I’m seeing on social media. And a tool you have to guide is not an agent; it’s a copilot. Please don’t call these products agents. Be better.

So, What’s Up for Markets Right Now?

Markets, markets… how lost are they? Well, very.

Judging by last month’s NVIDIA crash, the reactions to its most recent quarterly earnings, and those of Marvell (despite meeting expectations, the AI hardware maker failed to show more AI-related growth), markets are much more wary about lifting AI stocks.

The main reason for this is that they are wrong.

Markets are Just Wrong.
