For business inquiries, reach out to me at [email protected]

THEWHITEBOX

TL;DR

🫡 Here’s All You Need to Know from OpenAI’s DevDay

⚡️ Pika 1.5 Shocks the World

🍎 Apple Announces MM1.5

😍 NVIDIA Crushes it with the NVLM Family

💽 The State of Semiconductors

[TREND OF THE WEEK] Self-Correcting LLMs. OpenAI’s Secret Leaked?

Writer RAG tool: build production-ready RAG apps in minutes

RAG in just a few lines of code? We’ve launched a predefined RAG tool on our developer platform, making it easy to bring your data into a Knowledge Graph and interact with it using AI. With a single API call, Writer LLMs will intelligently call the RAG tool to chat with your data.

Integrated into Writer’s full-stack platform, it eliminates the need for complex vendor RAG setups, making it quick to build scalable, highly accurate AI workflows just by passing a graph ID of your data as a parameter to your RAG tool.

OPENAI DEVDAY

Here’s All You Need to Know from OpenAI’s DevDay

News from yesterday: OpenAI finally announced its new funding round, $6.6 billion at a $157 billion post-money valuation (meaning that investors bought in at a $150.4 billion pre-money valuation).
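Under the standard definition, the post-money valuation is just the pre-money valuation plus the new cash, so the $150.4 billion figure falls out of one subtraction. A trivial sketch (the function name is purely illustrative, and it ignores any deal terms beyond the headline numbers):

```python
def pre_money_valuation(post_money: float, amount_raised: float) -> float:
    """Pre-money valuation = post-money valuation minus the new money raised."""
    return post_money - amount_raised


# Headline figures from the paragraph above, in billions of US dollars.
pre = pre_money_valuation(157.0, 6.6)
print(f"${pre:.1f}B")  # prints "$150.4B"
```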

OpenAI held its DevDay two days ago, announcing many new features. First came the ‘Realtime API,’ a new API (the interface you use to interact with OpenAI’s proprietary models, which can’t be accessed directly) that allows you to build voice products similar to Advanced Voice Mode (AVM), where you can talk with an AI that feels human thanks to its very low latency.

If you haven’t heard or seen how incredible AVM is, I recommend this hilarious clip where the model imitates an Indian scammer (before you get offended, the user who published it is also Indian; it’s just a fun joke).

OpenAI also showcased a real-time demo of this API during DevDay.

They also introduced vision fine-tuning: you can now fine-tune GPT-4o with your own images, tailoring it to your use case. Furthermore, they introduced prompt caching across different API calls, with cached prompts kept for an undisclosed amount of time.

But what does that mean? As we have explained several times, during inference, LLMs build a KV cache: they store intermediate computations (the attention keys and values of the previous words) that would otherwise be repeated for every new predicted word.
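To make the idea concrete, here is a toy, single-head attention loop in NumPy. The weights are random and the sizes arbitrary (nothing here comes from a real model). Both versions produce identical outputs, but the cached one computes each token’s key and value exactly once instead of recomputing them for the whole prefix at every step.

```python
import numpy as np

rng = np.random.default_rng(0)
d = 8  # toy hidden size (illustrative, not a real model's)
# Random projection matrices standing in for one attention head's weights.
W_q, W_k, W_v = (rng.standard_normal((d, d)) for _ in range(3))


def attend(q: np.ndarray, K: np.ndarray, V: np.ndarray) -> np.ndarray:
    """Scaled dot-product attention of one query over all keys/values so far."""
    scores = K @ q / np.sqrt(d)
    weights = np.exp(scores - scores.max())
    weights /= weights.sum()
    return weights @ V


def decode_without_cache(xs: np.ndarray) -> np.ndarray:
    """Recompute keys and values for the entire prefix at every step."""
    outputs = []
    for t in range(1, len(xs) + 1):
        prefix = xs[:t]
        K, V = prefix @ W_k, prefix @ W_v  # repeated work that grows with t
        outputs.append(attend(xs[t - 1] @ W_q, K, V))
    return np.array(outputs)


def decode_with_cache(xs: np.ndarray) -> np.ndarray:
    """Compute each token's key and value once, then reuse them from the cache."""
    k_cache, v_cache, outputs = [], [], []
    for x in xs:
        k_cache.append(x @ W_k)
        v_cache.append(x @ W_v)
        outputs.append(attend(x @ W_q, np.array(k_cache), np.array(v_cache)))
    return np.array(outputs)


tokens = rng.standard_normal((5, d))  # five toy token embeddings
print(np.allclose(decode_without_cache(tokens), decode_with_cache(tokens)))  # prints True
```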

Caching these values has two benefits: cost savings, as the model performs fewer calculations to obtain each new prediction, and, as a consequence, lower latency. However, the KV cache is usually specific to a single interaction, which is wasteful because multiple API calls often share the same context or are very similar to one another.

Thus, OpenAI now stores this cache in a separate disk array and, upon receiving a new request, first checks for a cache hit (whether the beginning of the prompt matches content already cached). If so, it retrieves the cache, saving considerable costs and providing a better user experience.
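A minimal sketch of the lookup logic, assuming exact prefix matching at a fixed block granularity. The `PrefixCache` class, the block size and the placeholder values are all hypothetical; this is not OpenAI’s implementation, where the stored values would be the actual KV tensors. The point it illustrates is that a hit requires the *beginning* of the prompt to match, which is why shared instructions should go first and variable content last.

```python
import hashlib


class PrefixCache:
    """Toy prompt cache keyed on exact, block-aligned token prefixes (illustrative)."""

    def __init__(self, block_size: int = 4):
        self.block_size = block_size  # cache granularity in tokens (assumption)
        self.store: dict[str, str] = {}

    def _key(self, tokens: list[int]) -> str:
        return hashlib.sha256(repr(tokens).encode()).hexdigest()

    def longest_hit(self, tokens: list[int]) -> int:
        """Return how many leading tokens are already cached (0 if none)."""
        hit = 0
        for end in range(self.block_size, len(tokens) + 1, self.block_size):
            if self._key(tokens[:end]) in self.store:
                hit = end
            else:
                break  # prefixes are nested, so the first miss ends the search
        return hit

    def insert(self, tokens: list[int]) -> None:
        """Cache every block-aligned prefix of a prompt that was just processed."""
        for end in range(self.block_size, len(tokens) + 1, self.block_size):
            # Placeholder string; a real system would store the KV tensors here.
            self.store[self._key(tokens[:end])] = f"kv-state-for-{end}-tokens"


cache = PrefixCache()
system_prompt = [101, 7, 42, 9, 13, 5, 88, 21]  # shared context (toy token IDs)
cache.insert(system_prompt + [1, 2, 3, 4])
print(cache.longest_hit(system_prompt + [9, 9, 9, 9]))  # prints 8
```

Only the first eight tokens are reused in the second call; everything after the first mismatch has to be computed from scratch.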

Finally, they released what I consider the most interesting new feature: model distillation. We’ve often discussed distillation as the tool that created powerful ‘medium-sized’ models that are now state-of-the-art, like GPT-4o, Claude 3.5 Sonnet, or Llama 3.1 70B. Now, OpenAI allows you to use GPT-4o responses (and I assume o1 models, too, in the future) to train a new GPT-4o mini model.

The following thread compiles all demos into one post.

TheWhiteBox’s take:

OpenAI is steadily becoming a super Generative AI platform. Having left their ‘God AGI’ nonsense behind, they have now become a full product company and are shipping valuable features.

The real-time API is clearly a differentiated product. For now, the category of use cases that require speech-to-speech interactions is entirely owned by OpenAI, as the user experience feels quite magical. In my humble opinion, this is another nail in the coffin of customer support agents and companies, as AI is inevitably poised to disrupt customer care processes, considering that:

Interactions are heavily scripted, perfect for AI.

Customer support agents have massive employee churn due to burnout.

Vision fine-tuning is a nice add-on, but image-based models continue to be more fun to play with than products that yield tangible value. This is especially true considering how LLMs’ vision capabilities lag behind their text capabilities; they usually struggle with granularity (meaning they can only see the ‘big picture,’ no pun intended).

Prompt caching is great, but it’s table stakes by now. DeepSeek was the first lab to offer it, and both Google and Anthropic already offer it (and at lower prices than OpenAI).

But what seems truly transformational is the model distillation API. Distillation offers a sweet spot between size and performance; you can train a smaller model to ‘behave’ like a large one. Developers covet this feature, and it will hold many developers captive to the OpenAI platform.
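For intuition on what ‘behaving like a large model’ means, here is the classic soft-target form of knowledge distillation: the student is penalized for diverging from the teacher’s full probability distribution, not just its top answer. Note that OpenAI’s API, as described above, instead fine-tunes the smaller model on the larger model’s generated responses, so treat this NumPy sketch (toy logits, temperature of 2 chosen arbitrarily) as an illustration of the general idea rather than of their pipeline.

```python
import numpy as np


def softmax(logits, temperature: float = 1.0) -> np.ndarray:
    z = np.asarray(logits, dtype=float) / temperature
    z -= z.max()  # numerical stability
    p = np.exp(z)
    return p / p.sum()


def distillation_loss(student_logits, teacher_logits, temperature: float = 2.0) -> float:
    """KL(teacher || student) on temperature-softened distributions, scaled by T^2."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = np.sum(p_teacher * (np.log(p_teacher) - np.log(p_student)))
    return float(temperature**2 * kl)


teacher = [4.0, 1.5, 0.2]          # toy next-word logits from the large model
close_student = [3.8, 1.4, 0.3]    # small model that already mimics the teacher
far_student = [0.1, 3.0, 2.5]      # small model that does not
print(distillation_loss(close_student, teacher) < distillation_loss(far_student, teacher))  # prints True
```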

Importantly, distillation includes an evaluation feature that allows you to test your fine-tuned models easily. And just like last year, when OpenAI killed the LLM wrapper industry in 45 minutes, this is also a killer blow to LLM evaluation start-ups, which have lost their value proposition to a company that seamlessly provides both the models and the evals.

This feels like a great time to read my ‘Am I Getting SteamRolled by AI’ Framework, a guide for employees and companies to differentiate themselves to prevent AI from devouring them; it looks like some start-ups in the industry should have read it beforehand.

On a final note, fresh from an hour ago, they have released Canvas (link to demo video), a new feature that allows you to create, edit, and improve text or code in a simple-to-use environment. It feels very similar to Cursor, but you still can’t run the code (code interpreters and compilers are surely coming soon, too, turning the OpenAI platform into a product that can help you create entire apps).

OpenAI is really widening the gap with the rest (and killing many start-ups in the process).

AI VIDEO GENERATION

Pika 1.5 Shocks the World

Pika Labs has released its new video generation model, and let me tell you, it’s the best model I’ve ever seen, period. In addition to the great quality of the generated videos, you can now add effects like melting (above GIF), inflating, and so on.

This X link shows several examples that will blow your mind (besides the ones in the previous link’s video). Honestly, this is starting to look like a real threat to movie studios (or a weapon: the Lionsgate movie studio seems to understand this, and Stability AI, by adding legendary movie director James Cameron to its board of directors, is exploring the same idea).

Times are changing in the movie industry. Fast. Forever.

APPLE

Apple Announces MM1.5

Apple has announced a new set of small multimodal models, the MM1.5 family, that perform exceptionally well at various tasks, reaching (if not surpassing) state-of-the-art performance at various model sizes.

TheWhiteBox’s take:

Apple’s AI releases might seem underwhelming next to news like OpenAI’s, but that framing misreads their strategy. They are playing a different game.

Instead of training the best generalists possible, as OpenAI does, Apple’s releases are always small and clearly tailored for smartphone/laptop use cases. For instance, they released a fine-tuned version specifically for UI understanding, very similar to UI-JEPA, which we recently covered.

If you’re an Apple investor, you shouldn’t be concerned about Apple’s AI moves, because you shouldn’t compare them to the frontier labs; you should judge whether Apple Intelligence makes AI more accessible to people. Only if it doesn’t should you be concerned.

NVIDIA

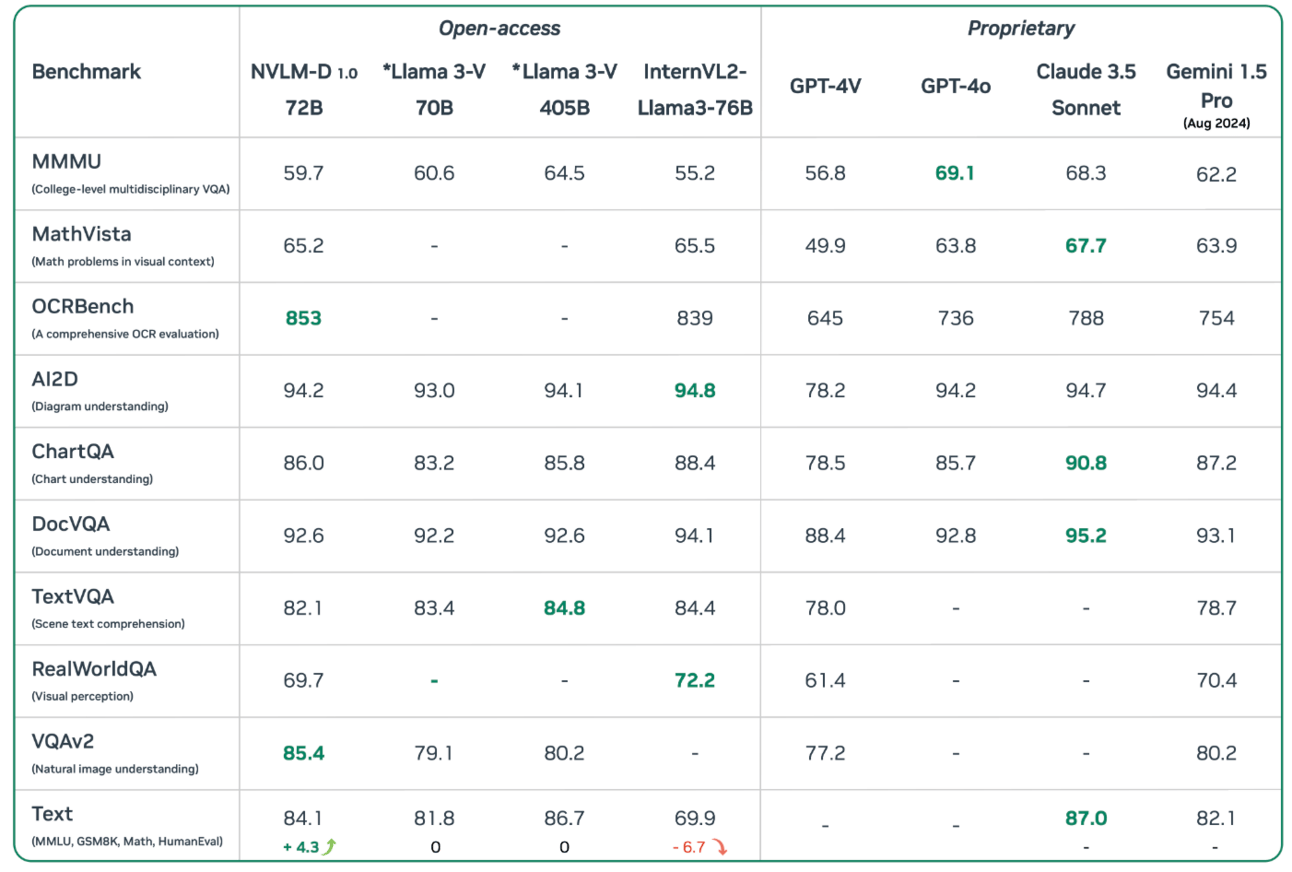

NVIDIA Crushes it with the NVLM Family

NVIDIA has released a new model family that is on par with the best frontier models across various tasks. Crucially, it’s open-weights, meaning you can download the model and run it on your own systems.

With this release, NVIDIA positions itself as a company that not only provides the industry's hardware foundation but can also build and service state-of-the-art models.

The reason NVIDIA open-sources its models is clear. With most of its revenue coming from hardware (almost 80% as of Q2 this year, probably higher by now), it is slowly but steadily working towards becoming an LLM server with its NIM microservices offering. In other words, it is becoming a company you can rent GPUs from, just like you can from Hyperscalers like Microsoft, but optimized through Docker containers (they even run an API on top to abstract away the infrastructure-level complexity entirely).

NVIDIA’s other big play is robotics, as we discussed in our NVIDIA deep dive.

In that business model, the only models a company would want to leverage are open-source, so NVIDIA is more interested than anyone in ensuring that open-source models remain competitive.

On a final note, notice that the frontier model is once again in the 70-billion-parameter range, proving that my recent Notion article on labs converging around this size is still accurate (I hope you don’t mind a bit of self-praise).

SEMICONDUCTORS

Dwarkesh Interviews SemiAnalysis CEO

Here’s an interview by Dwarkesh Patel with Dylan Patel and Jon Y, from the SemiAnalysis think tank and the Asianometry YouTube channel and Substack, respectively.

If you’re into semiconductors, their implications for AI, and how Big Tech will turn this into reality (something we also covered in detail), this two-hour interview is an absolute must-watch. It covers the semiconductor wars with China, the US’ path to AI supremacy, and more interesting stuff.

And now, we move into the Trend of the Week, covering Google’s new breakthrough.

TREND OF THE WEEK

Has Google Leaked OpenAI’s Secret?

Google has presented self-correcting LLMs via Reinforcement Learning, a new method that teaches models to acknowledge and correct their own mistakes. This opens a new world of possibilities for AI that is smarter and more ‘self-aware.’

Interestingly, OpenAI’s o1 models excel at this particular capability, which might have been the key to their superior performance. Now, Google might have just spilled the beans on a crucial step of the process.

Understanding this research will give you great insight into how AI models learn, how we tune the learning objective to our advantage, and how frontier labs are training the next generation of AI models.

Let’s dive in.

The Importance of Self-Correction

LLMs are stochastic by nature. In layman’s terms, every prediction they make involves random sampling, which introduces a non-zero chance that the model fails.

But what do we mean by random sampling?

How LLMs Work

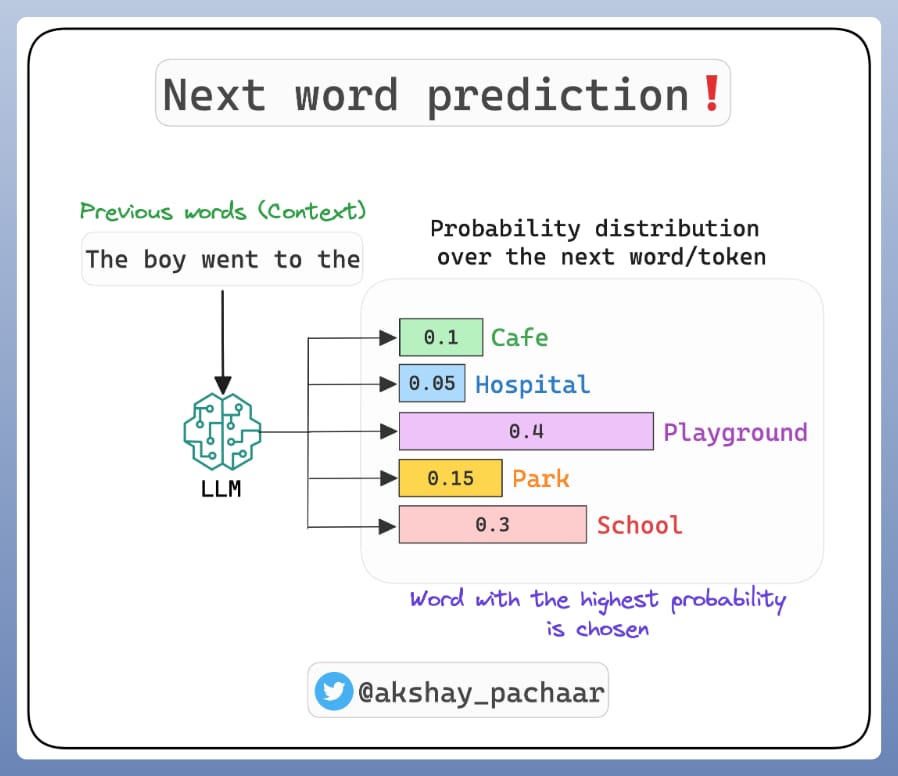

With LLMs, we want response variety.

In other words, for the same input sequence, we want the model to vary its output slightly every time (otherwise, it would always return identical responses) while still guaranteeing semantic consistency (that the output makes sense).

To enforce this, once the model has assigned a probability to every word that could come next in the sequence, we randomly sample one of the most probable ones. This leads to variety in outcomes but adds a non-zero probability that the model randomly chooses the wrong word in factual predictions (predictions where there’s no room for variety).
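Top-k sampling is one common way to implement ‘sample one of the most probable words.’ A minimal sketch, assuming a hypothetical next-word distribution (the words and probabilities are made up for illustration):

```python
import numpy as np


def sample_top_k(probs: dict[str, float], k: int, rng: np.random.Generator) -> str:
    """Keep the k most probable words, renormalize their probabilities, sample one."""
    top = sorted(probs.items(), key=lambda item: item[1], reverse=True)[:k]
    words = [word for word, _ in top]
    weights = np.array([p for _, p in top])
    weights = weights / weights.sum()  # renormalize so the kept words sum to 1
    return str(rng.choice(words, p=weights))


# Hypothetical next-word distribution for the prompt "The capital of France is"
next_word = {"Paris": 0.80, "Lyon": 0.10, "Marseille": 0.06, "Nice": 0.04}
rng = np.random.default_rng(0)
draws = [sample_top_k(next_word, k=3, rng=rng) for _ in range(1_000)]
print({w: draws.count(w) for w in next_word})
```

With k=3, ‘Nice’ can never be drawn, but ‘Paris’ is chosen only 0.80/0.96 ≈ 83% of the time in expectation, so even this factual prediction goes wrong roughly one time in six.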

Worse, as the next words are conditioned on all previous words in the sequence, including the last wrong prediction, this error cascades, leading the model to output a totally wrong response, which people usually call a ‘hallucination.’
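Because a single slip can derail everything generated after it, the relevant quantity is how likely at least one slip becomes as responses get longer. A back-of-the-envelope sketch, assuming (unrealistically) a constant, independent per-token error rate; the 0.1% rate is made up for illustration:

```python
def prob_at_least_one_error(p_error_per_token: float, n_tokens: int) -> float:
    """P(at least one wrong token) = 1 - (1 - p)^n, assuming independent errors."""
    return 1.0 - (1.0 - p_error_per_token) ** n_tokens


for n in (10, 100, 1000):
    print(n, round(prob_at_least_one_error(0.001, n), 3))
```

With a 0.1% per-token error rate, the chance of at least one slip is about 1% over 10 tokens, 9.5% over 100 tokens, and 63% over 1,000 tokens.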

For that reason, even though we want to retain the model’s ‘creative’ nature, we wish models could acknowledge and correct mistakes upon making them; we can’t prevent model hallucinations, but we would certainly benefit from the model being capable of self-correcting them.

However, standard LLMs can’t. But why?

Understanding How Models Learn

To understand how an AI works, you must always ask yourself: What is the model learning? In the case of LLMs, we maximize the probability the model assigns to the actual next word it saw during training, so that, when predicting the next word in a sequence, that word receives the highest probability.

For instance, if the original sequence was “Alexander the Great was Macedonian,” we train the model so that, upon seeing the sequence “Alexander the Great was…,” it assigns the highest probability to the word ‘Macedonian.’
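The quantity minimized during this training is the cross-entropy (negative log-likelihood) of the true next word. A minimal NumPy sketch with a hypothetical four-word vocabulary and made-up logits, showing that the loss drops as ‘Macedonian’ becomes the favored word:

```python
import numpy as np


def next_token_loss(logits: np.ndarray, vocab: list[str], target: str) -> float:
    """Cross-entropy (negative log-likelihood) of the true next word."""
    z = logits - logits.max()                # numerical stability
    log_probs = z - np.log(np.exp(z).sum())  # log-softmax
    return float(-log_probs[vocab.index(target)])


vocab = ["Macedonian", "Thracian", "Persian", "Roman"]
before = np.array([1.0, 1.0, 1.0, 1.0])  # untrained: every word equally likely
after = np.array([4.0, 1.5, 0.5, 0.0])   # trained: "Macedonian" clearly favored
print(next_token_loss(before, vocab, "Macedonian"))  # ln(4) ≈ 1.386
print(next_token_loss(after, vocab, "Macedonian"))   # much lower
```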

However, as we saw in the previous image, they also assign probabilities to other words, which, combined with the random sampling we mentioned earlier, could lure the model into outputting other options like ‘Thracian,’ a word that, while an understandable mistake considering Alexander ruled over Thrace, is factually incorrect.

Insights:

1. LLMs predict tokens, which can be subwords (think of them like syllables) instead of entire words, but the principle is the same.

2. If you interact with model APIs, you can adapt the behavior of this random sampling by modifying the temperature hyperparameter and trying different sampling methods, such as min-p.
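To see what these two knobs do, here is a minimal NumPy sketch on toy logits: temperature rescales the logits before the softmax, and min-p discards every token whose probability falls below a fraction of the top token’s probability. Applying temperature before min-p is an assumption here; implementations differ on the order.

```python
import numpy as np


def apply_temperature(logits: np.ndarray, temperature: float) -> np.ndarray:
    """Softmax of logits / T: T < 1 sharpens the distribution, T > 1 flattens it."""
    z = logits / temperature
    z = z - z.max()
    p = np.exp(z)
    return p / p.sum()


def min_p_filter(probs: np.ndarray, min_p: float) -> np.ndarray:
    """Drop tokens whose probability is below min_p * (top token's probability)."""
    keep = probs >= min_p * probs.max()
    filtered = np.where(keep, probs, 0.0)
    return filtered / filtered.sum()


logits = np.array([3.0, 2.0, 0.5, -1.0])  # toy next-token logits
for t in (0.5, 1.0, 1.5):
    print(t, apply_temperature(logits, t).round(3))
print(min_p_filter(apply_temperature(logits, 1.0), min_p=0.1).round(3))
```

Lower temperatures concentrate probability on the top token, while min-p adapts its cutoff to how confident the model is, so it prunes aggressively when one word dominates and stays permissive when many words are plausible.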

In a nutshell, LLMs are trained to give their best possible response every time, making it very hard for them to acknowledge mistakes (if I give my best at solving the task, there’s really little I can do to improve).

And while this sounds great, it’s far from ideal for complex tasks like math or coding.

Sure, you might be extremely experienced and capable of solving complex math problems in one go, but chances are that even the most skilled mathematicians opt for a humble approach when faced with a complex math problem.

That means that the first output they give isn’t necessarily their best attempt at solving the problem but a ‘prior’; they usually take a broad approach instead of directly committing to a particular method (unless, as mentioned, they’ve seen the problem before and know exactly how to solve it).

This means that the first try will most likely end in a wrong answer. But because the person openly acknowledges that this first response is just a prior, it gives them intuition on how to approach the problem, so the second try not only has a much higher chance of arriving at the correct response but also lets them correct or refine the initial try.

The intuition might seem complex to grasp, so let’s use myself as an example.

Some Tasks Require Humbleness

Let’s say I want to write an article about a research breakthrough (like today).

One option for me is to follow the LLM approach. I read the paper five times, absorb the key intuitions, and write the best possible article in one go. To do that, I commit to a certain article structure before I actually write anything, and for every new word, I ask myself whether that’s the best word I could use. I also go back to my research every other sentence to make sure what I’m saying is accurate.

Alternatively, I may read the paper two or three times and simply write a rough first draft. As I write down every part of the article, I am indirectly testing myself on the subject, making me aware of my limitations.

After finishing this first draft, I have a very good idea of what I haven’t fully internalized, and I revisit those precise points in the research. After finishing the article, I also reflect on what message I’m trying to convey and whether the article actually does that. Over the next few days, I revisit the piece many times, editing points I could have worded better and optimizing my storytelling until I end up with the final article.

What option do you think is best?

Obviously, option 2. Not only does it require much more thought toward the task, but the end result has a better rhythm and is better optimized toward the goal of the article.

More importantly, option 2 has two key benefits:

It’s generalizable. Rough draft, edit, and edit again is a process that can be applied to any writing, technical or non-technical.

It gives me a margin for error. I would never publish something that I haven’t reread at least five times; you would be surprised to know that after multiple edits, I still find mistakes, be they grammatical or ideas that lack insight.

The writing is on the wall: some tasks simply require giving yourself room for correction and refinement. And if we add the fact that LLMs simply shit the bed every once in a while for the reasons stated earlier, by now you will have realized the issue with the traditional LLM training approach:

For tasks that are creative and less complex, we want the LLM to give us its best bet.

On the other hand, for more complex tasks, we want the model to give itself more time (by generating more than one try) but also learn to refine or correct its previous tries intuitively.

And here is where Google DeepMind comes in.

SCoRe: Teaching Models to Self-Correct

So, now the question is, how do we teach an LLM to self-correct?

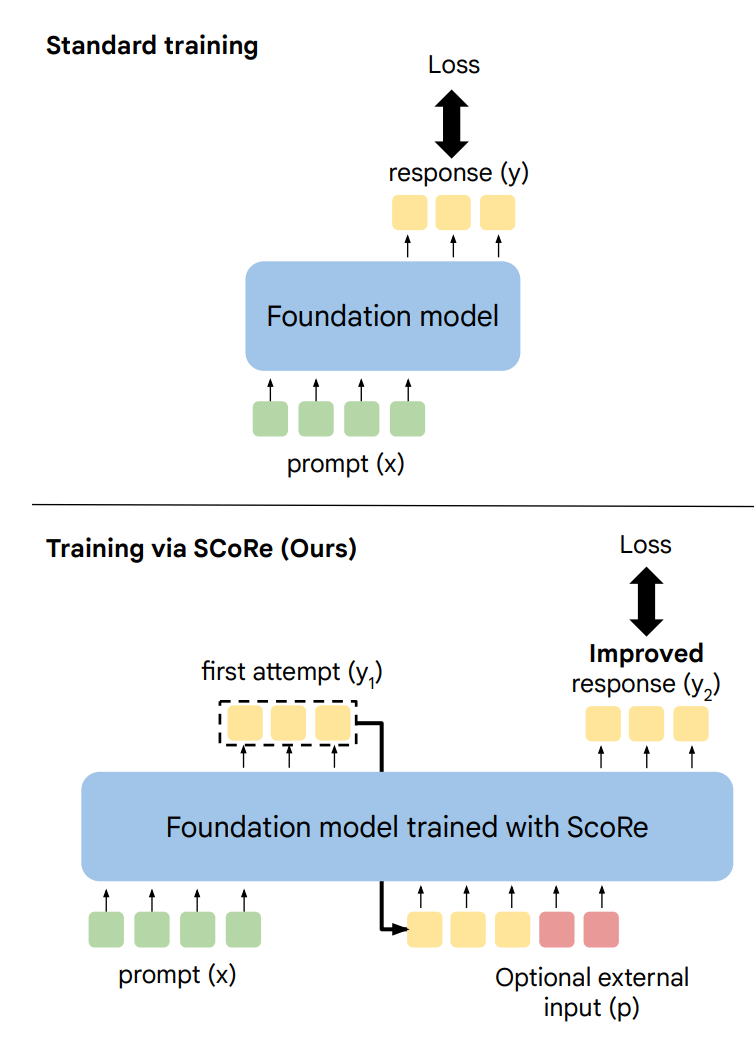

Well, we are going to change the approach. Instead of just computing the loss for each try (how good a prediction was), which leads the model to simply maximize accuracy per try, SCoRe is a method where the model learns to do two things:

Perform a ‘good enough’ first try

If necessary (the first try might be enough), notice where the mistake occurred and correct it.

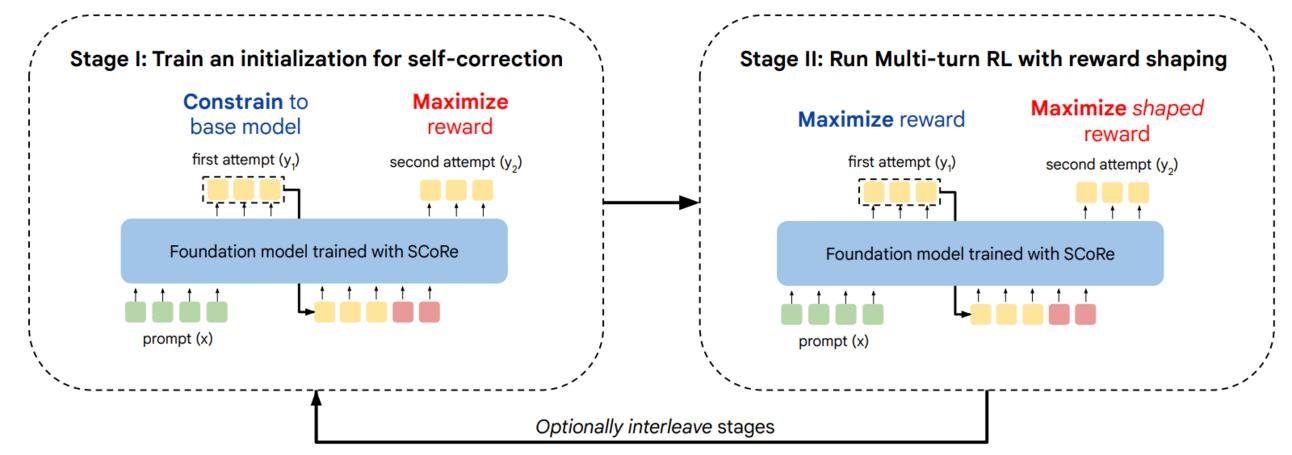

But how do we teach that to a model? For reasons I can’t fully explain for the sake of length, researchers divided training into two stages, as described below:

Stage 1: Training a good initialization (prior)

We train the model to give a first response that stays as close as possible to what the base model would have predicted (the model's state before self-correction training).

This way, while the model already makes a genuine attempt at solving the problem, we ensure it doesn’t go wild on its first try and produce a response that is impossible to correct on the second try. For the second try, we then maximize reward, which means we ask the model to give its best to get the correct answer.

Technical intuition: Here, the objective function includes two terms:

1. a KL divergence term that pushes the model to minimize the ‘distance’ between its first-try probability distribution and that of a reference model (the base model), de facto making the model behave ‘similarly’ to the non-fine-tuned model on its first try,

2. and a reward term that rewards the model whenever it gets the response correct on the second try.
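A per-sample sketch of this Stage 1 objective, assuming a binary correctness reward and a toy three-word distribution for the first try. The names and the `beta` value are illustrative; in practice the KL term is computed token by token over the generated first attempt and the objective is optimized with a policy-gradient method.

```python
import numpy as np


def kl_divergence(p: np.ndarray, q: np.ndarray) -> float:
    """KL(p || q) for two discrete distributions over the same vocabulary."""
    return float(np.sum(p * (np.log(p) - np.log(q))))


def stage1_objective(policy_first_try: np.ndarray,
                     base_first_try: np.ndarray,
                     second_try_correct: bool,
                     beta: float = 0.1) -> float:
    """Reward on the second try minus a KL penalty tying the first try to the base model."""
    reward = 1.0 if second_try_correct else 0.0
    return reward - beta * kl_divergence(policy_first_try, base_first_try)


base = np.array([0.5, 0.3, 0.2])        # base model's first-try distribution (toy)
drifted = np.array([0.05, 0.05, 0.9])   # policy whose first try drifted far away
print(stage1_objective(base.copy(), base, True))  # prints 1.0 (no KL penalty)
print(stage1_objective(drifted, base, True))      # lower than 1.0
```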

Stage 2: Multi-turn RL with reward shaping

In this stage, researchers add an interesting adaptation: reward shaping.

In this second stage, the model is tasked with maximizing reward on both tries (trying its best to get the correct response on every try), but the reward for the second try is shaped with a bonus proportional to the improvement from the first try to the second. In other words, turning a wrong first answer into a correct second one earns a large bonus, turning a correct answer into a wrong one earns a large penalty, and simply repeating the first answer earns no bonus at all.

Thus, in this stage, the model learns that the most rewarding path is a clear improvement from the first response to the second, which, added to the fact that it is rewarded for getting the response correct, naturally induces self-correction (a significant change from response one to response two that also ends in a correct outcome).
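A minimal sketch of reward shaping in the spirit of SCoRe’s Stage 2, assuming a binary correctness reward r for each try and a bonus α·(r2 − r1) added to the second try’s reward. The value of α here is arbitrary, and the full objective also rewards the first try, which this snippet leaves out.

```python
def shaped_second_try_reward(first_correct: bool, second_correct: bool,
                             alpha: float = 2.0) -> float:
    """Second-try reward plus a bonus alpha * (r2 - r1) for improving on the first try."""
    r1 = 1.0 if first_correct else 0.0
    r2 = 1.0 if second_correct else 0.0
    return r2 + alpha * (r2 - r1)


for first, second in [(False, True), (True, True), (False, False), (True, False)]:
    print(f"{first!s:>5} -> {second!s:>5}: {shaped_second_try_reward(first, second):+.1f}")
```

The four cases print +3.0, +1.0, +0.0 and -2.0: fixing a mistake is worth the most, keeping a correct answer earns only the base reward, and breaking a correct answer is punished.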

In a nutshell, by actively changing what the model is learning, teaching it to become more aware of its mistakes and to refine them, we get an LLM that can now be used in search-based systems like the o1 models: one capable of self-reflecting and correcting its own mistakes, an essential component of an effective System 2 reasoner (a model that can explore different ways to solve a problem and keep the best one).

And the results? SCoRe takes Gemini models (1.0 Pro and 1.5 Flash) and improves their self-correction performance by more than 15% on the MATH benchmark and, in the process, tells the world that OpenAI’s secrets might not be that secret after all.

Closing Thoughts

This research proves that while OpenAI et al. may find new technological breakthroughs, these eventually leak to the world, allowing open-source to catch up. This means that AI will never be a moat but a table-stakes feature of your product, even if you are an AI company.

This week has been heavy on new models and developer features, which means that the industry is ‘growing up,’ leaving the hype behind and really focusing on building stuff people want.

THEWHITEBOX

Premium

If you like this content, by joining Premium, you will receive four times as much content weekly without saturating your inbox. You will even be able to ask the questions you need answered.

Until next time!