THEWHITEBOX

TLDR;

This week, we look at OpenAI’s new agent products, reasoning drones, Canada’s AI champion, OpenAI’s attacks on DeepSeek, and Google’s amazing native image generation, among other stuff.

Enjoy!

WARFARE

Thinking Drones, What Could Go Wrong?

Russian scientists have developed thinking drones, the first real attempt to equip drones with powerful artificial intelligence, by combining them with reasoning and vision models.

The drone can handle tasks such as "fly through the gate with the number equal to 2+2," with the AI models interpreting the instruction and choosing the actions.

With the arrival of “intelligent” drones, what could go wrong?

TheWhiteBox’s takeaway:

I don’t think ‘surprising’ best describes this news; it was something everyone knew was coming. While this type of research is necessary for progress, it is not practical for now.

The AI models used in the research add up to 14 billion parameters, or 28 GB of memory at FP16 precision (two bytes per parameter, the most common precision for model weights). That means that the drone, unless we want it connected to a cloud environment (bad latency guaranteed), has to carry the GPU cores and the HBM memory on board, making it heavier, less agile, and, importantly, much more expensive.

HBM3e, the state-of-the-art memory for AI workloads, costs around $110/GB, meaning that the memory alone costs about $3k, severely increasing the ASP (average selling price) of products that, due to intense pressure from Chinese competitors, should be extremely cheap. I’m only speculating, but maybe the cloud could be brought to the drones, meaning that the AI servers hosting the GPUs could be deployed on the battlefield, too. The issue is that if those servers are compromised, the drones instantly lose all their AI capabilities.
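The two figures above follow from simple arithmetic. The sketch below reproduces them, assuming 1 GB = 1e9 bytes and taking the $110/GB price as the rough estimate quoted in the text; the constant and function names are made up for illustration.

```python
# Back-of-envelope check of the memory and cost figures quoted above.
PARAMS = 14e9                # combined parameter count cited in the text
BYTES_PER_PARAM_FP16 = 2     # FP16 stores each parameter in two bytes
HBM_PRICE_PER_GB = 110       # USD per GB, the article's rough estimate


def weights_gb(params: float, bytes_per_param: int) -> float:
    """Memory needed just to hold the weights, in GB (1 GB = 1e9 bytes)."""
    return params * bytes_per_param / 1e9


memory_gb = weights_gb(PARAMS, BYTES_PER_PARAM_FP16)
memory_cost = memory_gb * HBM_PRICE_PER_GB

print(f"{memory_gb:.0f} GB of weights, about ${memory_cost:,.0f} of HBM")
```

Note that this only counts the weights; activations and the KV cache would need additional memory on top.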

Therefore, I’m pretty sure the goal is to build the AI hardware into the drone. Now, let’s face reality. I’m a research freak, but we need to acknowledge that it is one thing to develop something and another to make it economically viable.

Either way, reasoning drones are definitely coming, as reasoning models are a great way to let drones be controlled via language. However, I’m not convinced of the unit economics at the moment.

AGENTS

We Need to Talk About MCP

While OpenAI’s release is undoubtedly the trend of the week (more on that below), the Model Context Protocol, or MCP, developed by Anthropic, is going extremely viral. This video, which uses an LLM to create 3D renders, showcases its power incredibly well.

But what is MCP?

Put simply, MCP is a communication/action protocol for LLMs to use tools on the Internet. It exposes tools and information from other software to the LLM in a standardized form.

But why do we need this if we already have APIs, interfaces that do the exact same thing (more on that in the OpenAI story below)? Well, the key term here is standardized.

In an MCP-based communication system, the agent acts as a ‘client’, a piece of software connected to one or more MCP ‘servers’. Therefore, if a piece of software exposes itself as an MCP server, the agent automatically “knows” how to use it. APIs don’t work that way. They are somewhat standardized (but not really), so the agent has to learn how to use each API independently.

With MCP, by contrast, using one piece of software looks identical, to the AI, to using any other software that offers its context or tools as an MCP server.

This might seem very abstract, but let’s look at an example. A few days ago, Perplexity, an AI-powered search engine, announced its MCP server. Now, any AI that goes live as an MCP client can use Perplexity for search immediately, without learning how to use the Perplexity API. In the link, Perplexity itself shows how it can provide web search capabilities to Claude (yes, Claude doesn’t have web search capabilities).
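To make the "standardized" point concrete, here is a toy, in-process sketch of the idea. It is not the real MCP SDK or wire format (the actual protocol exchanges JSON-RPC messages between processes); the class names, tool names, and the two stand-in "products" are all hypothetical. What it mirrors is the shape: every server exposes the same discover-then-call interface, so the client needs no per-server integration code.

```python
from typing import Any, Callable, Dict, List, Protocol


class ToolServer(Protocol):
    """Uniform interface every server exposes (hypothetical, MCP-inspired)."""

    def list_tools(self) -> List[str]: ...
    def call_tool(self, name: str, arguments: Dict[str, Any]) -> Any: ...


class SimpleServer:
    """Wraps plain Python functions behind the uniform interface."""

    def __init__(self, tools: Dict[str, Callable[..., Any]]) -> None:
        self._tools = tools

    def list_tools(self) -> List[str]:
        return sorted(self._tools)

    def call_tool(self, name: str, arguments: Dict[str, Any]) -> Any:
        return self._tools[name](**arguments)


class Client:
    """The agent side: discovers tools from any server, no per-server code."""

    def __init__(self, servers: List[ToolServer]) -> None:
        # Map every advertised tool name to the server that owns it.
        self._routes = {t: s for s in servers for t in s.list_tools()}

    def available_tools(self) -> List[str]:
        return sorted(self._routes)

    def use(self, tool: str, **arguments: Any) -> Any:
        return self._routes[tool].call_tool(tool, arguments)


# Two unrelated "products" exposed the same way (both are stand-ins).
search_server = SimpleServer({"web_search": lambda query: f"results for {query!r}"})
math_server = SimpleServer({"add": lambda a, b: a + b})

client = Client([search_server, math_server])
print(client.available_tools())
print(client.use("add", a=2, b=2))
print(client.use("web_search", query="MCP"))
```

Adding a third server requires no change to `Client`, which is the property a shared protocol buys you over one-off API integrations.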

TheWhiteBox’s takeaway:

MCP and llms.txt are just two examples of a larger trend in software. Software has so far been made for humans to consume, but future software will be tailored for AIs to consume.

Whether it’s MCP or another protocol, the undeniable truth is that this trend is taking place and seems unstoppable.

FRONTIER

OpenAI Calls for DeepSeek Ban

OpenAI has finally openly accused DeepSeek of being state-controlled and argued that, for that reason, it should be banned in the US (something that’s already in the works as you read these words).

The DeepSeek app has already been banned in places like South Korea and Italy.

TheWhiteBox’s takeaway:

While I do not sympathize with OpenAI here, as this is an attempt at regulatory capture to prevent competition, I would understand the reasons behind a ban.

Not only has the DeepSeek app proven highly insecure for users, but it’s also hard to imagine it not being state-controlled, at least to some degree.

Furthermore, the CCP weaponises Chinese apps to manipulate Western societies (TikTok has proven to be a clear source of antisemitism in the US), and this podcast by a China-US relations expert points at this as one of the CCP’s preferred methods to destabilize the US and its allies (although, to be fair, we aren’t saints either).

And just as NVIDIA and AMD are being weaponized by the US government, the CCP retaliates by open-sourcing powerful AI models that make earning money much harder for US incumbents.

My biggest concern here is whether their real intention isn’t going after the DeepSeek app but treating any DeepSeek product as illegal, including open-source weights that this company constantly releases. While I can get behind an app ban for national security concerns, the argument does not hold for open-source deployments, and treating open-source digital files as contraband (which would be the only way to ban them) would set an extremely concerning precedent against open-source.

It’s clear that OpenAI’s real motives aren’t the well-being of US citizens but simply removing a competitor (and a really strong one with great traction).

ENTERPRISE AND COSTS

Enterprise AI Is a Path to Small AI… and Tariffs?

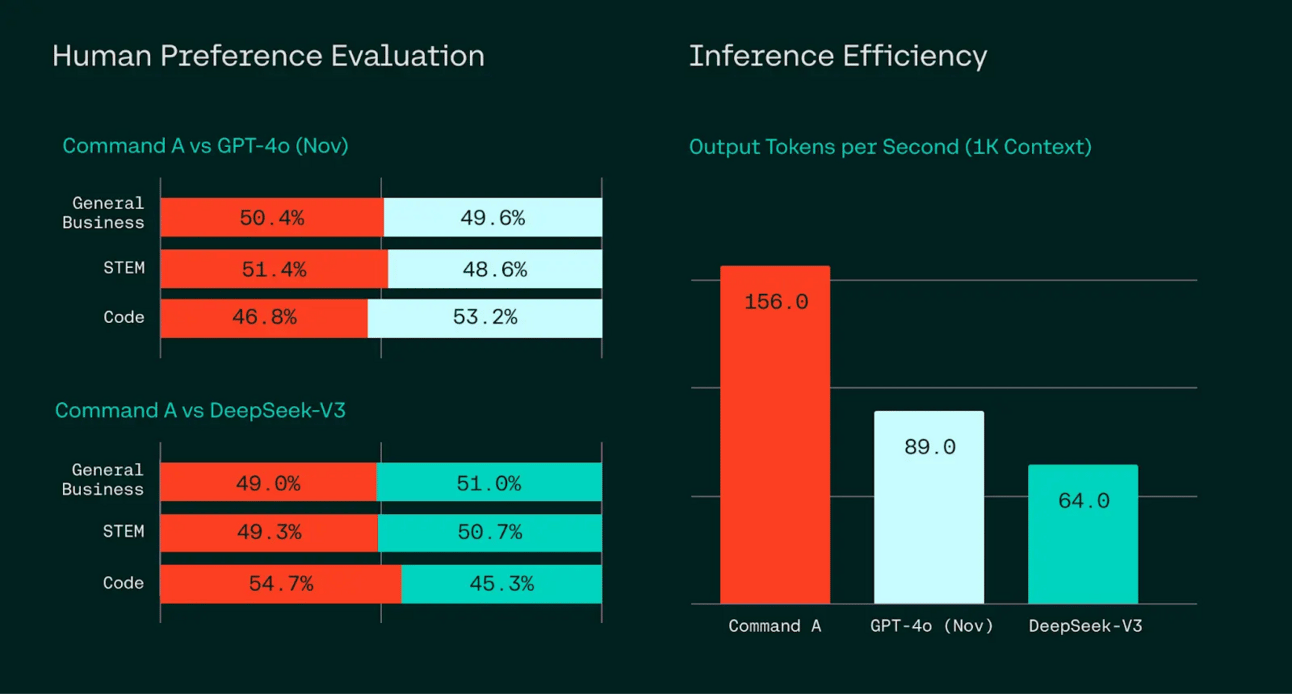

Cohere, Canada’s champion AI lab, has released a very interesting model, Command A. This new model, presented as a ‘state-of-the-art generative AI model,’ focuses on enterprises.

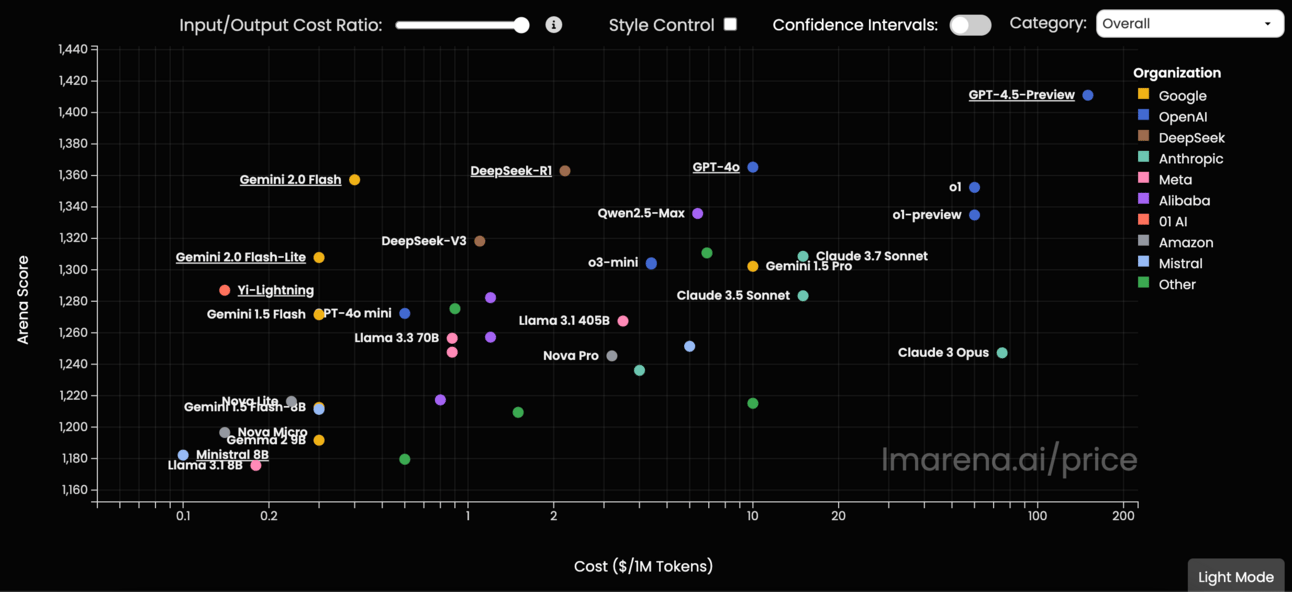

It’s a non-reasoning model that is very competitive with GPT-4o and DeepSeek V3 (so not quite SOTA because that would mean competitive with Grok 3, Claude 3.7 Sonnet, and GPT-4.5).

Still, the thing to highlight is that it’s extremely compressed and efficient for its size, which is an irresistible feature for companies. The model is 111 billion parameters large, which sounds quite big.

However, it fits inside two A100/H100 GPUs (160 GB of HBM in total, meaning that the parameter precision must be FP8, or one byte per parameter, for the 111 GB of weights to fit). That would still leave 49 GB free for the KV cache, which allows for a decent number of concurrent users if sequence lengths aren’t too large.

This is particularly interesting considering that the model can be served on-premise. This means that Cohere engineers will deploy the model inside your enterprise cloud, ensuring data never leaves your organization. Therefore, as the current price of such GPUs is around $2 per GPU per hour, you can serve this model to your employees (or clients) for about $4 per hour.
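The sizing and cost claims above can be checked in a few lines. This is a rough sketch assuming 80 GB of HBM per GPU, 1 GB = 1e9 bytes, and the roughly $2 per GPU-hour rental price quoted in the text; the variable names are illustrative only.

```python
# Rough sizing check for a 111B-parameter model on two 80 GB GPUs.
PARAMS = 111e9
GPU_COUNT = 2
HBM_PER_GPU_GB = 80          # assumed 80 GB A100/H100 variants
PRICE_PER_GPU_HOUR = 2.0     # USD, the article's rough rental estimate


def weights_gb(params: float, bytes_per_param: int) -> float:
    """Memory needed for the weights alone, in GB."""
    return params * bytes_per_param / 1e9


total_hbm_gb = GPU_COUNT * HBM_PER_GPU_GB
fp8_weights_gb = weights_gb(PARAMS, 1)    # FP8: one byte per parameter
fp16_weights_gb = weights_gb(PARAMS, 2)   # FP16: two bytes per parameter

kv_cache_budget_gb = total_hbm_gb - fp8_weights_gb
hourly_cost = GPU_COUNT * PRICE_PER_GPU_HOUR

print(f"FP16 weights: {fp16_weights_gb:.0f} GB (fits: {fp16_weights_gb <= total_hbm_gb})")
print(f"FP8 weights:  {fp8_weights_gb:.0f} GB (fits: {fp8_weights_gb <= total_hbm_gb})")
print(f"Left for KV cache: {kv_cache_budget_gb:.0f} GB, cost: ${hourly_cost:.0f}/hour")
```

The FP16 line is what forces the FP8 conclusion: at two bytes per parameter the weights alone would need 222 GB, more than the 160 GB available.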

TheWhiteBox’s takeaway:

Serving a model on just two GPUs means the solution scales incredibly well. As long as the model fits in a single node of up to 8 GPUs, the GPUs communicate via NVIDIA’s NVLink high-speed interconnect, which ensures that the entire node behaves almost like a single GPU. This would explain why the model is so fast (thumbnail) compared to others like DeepSeek V3, which need two 8-GPU nodes to run.

The model also allegedly excels at tool-calling (the AI using external tools, necessary for agentic use cases), meaning that this model looks better and better for enterprises the longer you look at it.

As for Cohere, it’s an intriguing lab. We know it’s struggling in terms of revenue (it has one of the highest forward PE valuations in private markets), which tells me that the only reason it has not been acquired yet is that it is the Canadian champion (think of it as a similar case to Mistral for France or Safe Superintelligence Inc. for Israel). Indeed, Canadian pension funds like PSP Investments are some of the company's largest shareholders.

But the real question that comes to mind is: Will Cohere be targeted in the tariff war between the US and Canada? More importantly, will this reinforce Cohere’s need for survival in the eyes of the Canadian Government?

AI IMAGING

Does Google’s Native Image Generation Achieve Raw Generalization?

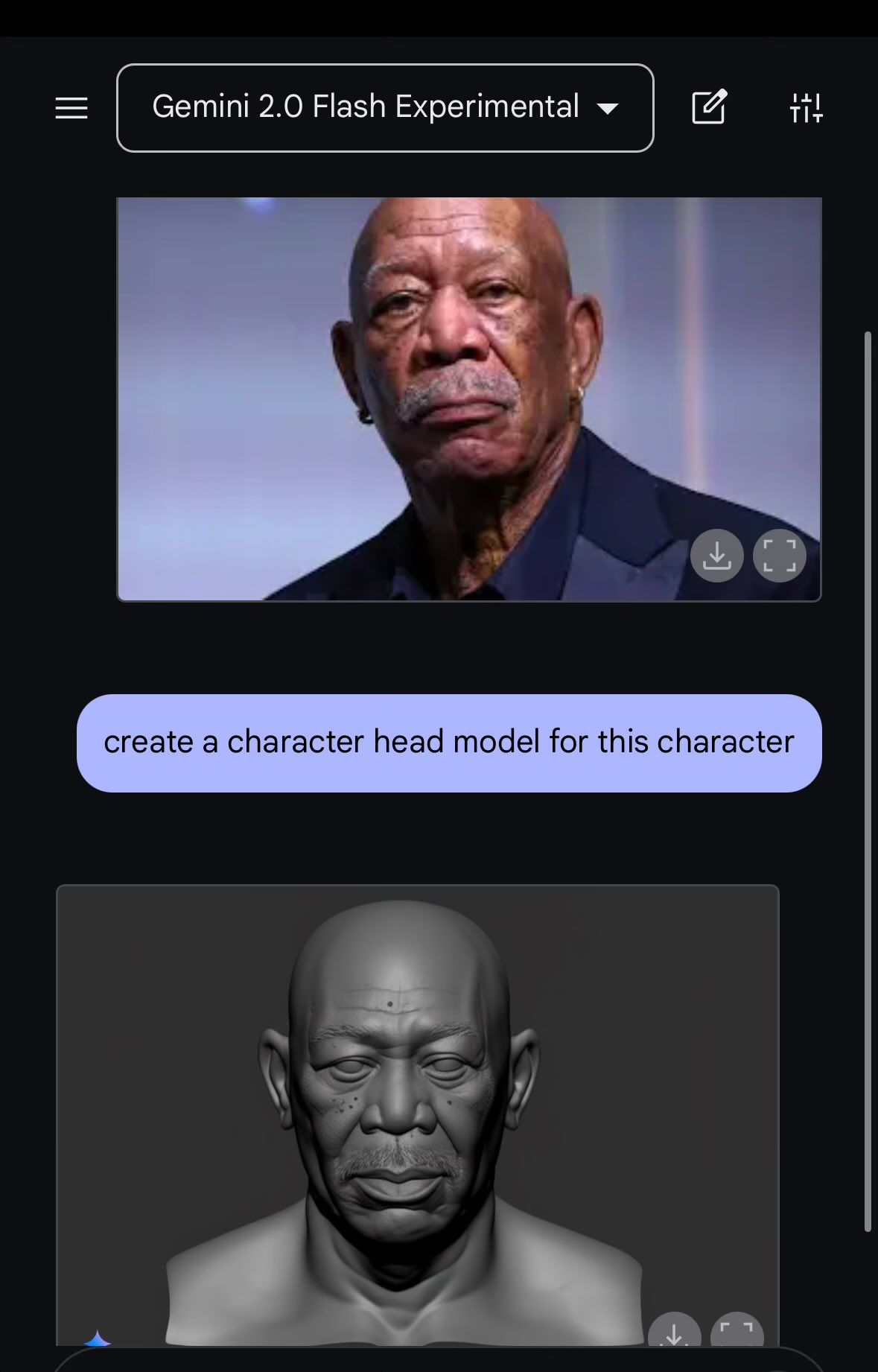

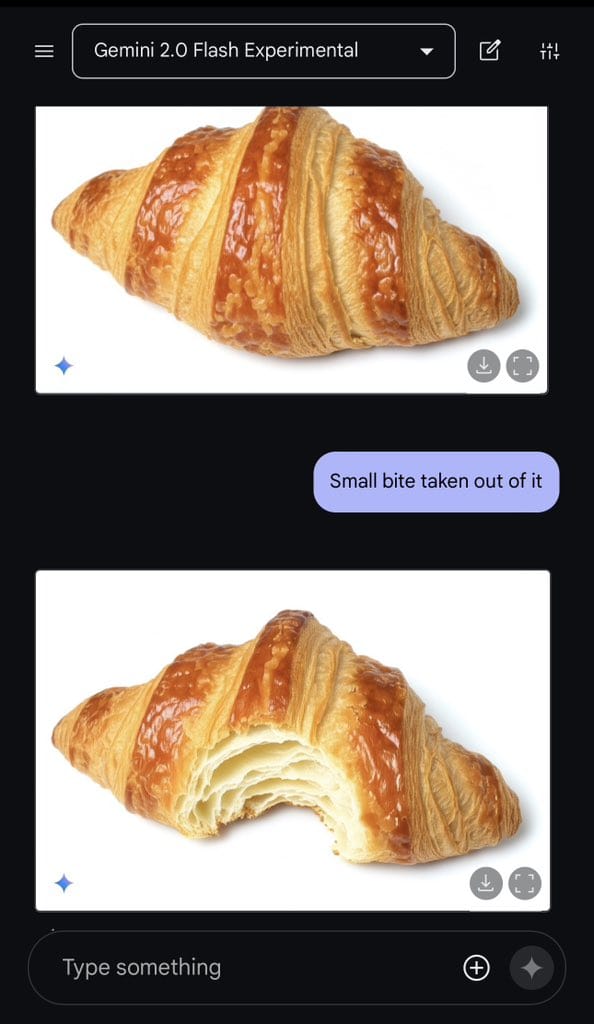

Google, which has arguably the best image and video generation models right now, Imagen 3 and Veo 2 respectively, has finally natively integrated Imagen with Gemini 2.0 Flash Experimental, allowing some remarkable creations.

TheWhiteBox’s takeaway:

Let’s explain why this is great. First, we must explain what we mean by ‘native’. Previously, when asked for an image, Google’s models would send a text prompt to Imagen, a separate model.

The issue with this is that the generated image would only be as good as the prompt sent by the other model. This was a multimodal system but not a truly multimodal model, as the image generator was simply generating an image semantically similar to the given text.

Now, the image generator and the text generator are the same model, which means that when generating the image, the model has a much more complete understanding of the request. For instance, let’s say we want to add something to an image sent by the user.

Previously, the image was processed by Gemini 2.0, which described it in text and sent the text to the image generator model. Obviously, the text description couldn’t describe every feature of the image, so there was some semantic loss due to the loss of spatial features.

Now, as it’s all the same model, the image generator model can ‘see’ the image, capturing all the visual cues that the image provides that text can’t.

Therefore, by not losing the visual cues, this method can achieve amazing image edits.

However, the most interesting feature is undoubtedly 3D body generation (thumbnail). This is amazing because it appears to be a raw generalization. The model does not use a 3D rendering tool, nor was it—at least allegedly—trained for this particular use case. It has just learned to do this autonomously, which is pretty remarkable.

All things considered, we can conclude three things:

Google’s AI models are pretty damn good, and their actual usage traction (especially in enterprise and text use cases) does no justice to how good they are.

Google models also have the best performance per cost available, as the latest Gemma 3 release further proves.

Google is also releasing great products, like this one and NotebookLM.

The problem with Google, as we explained in our Google deep dive a few months ago, continues to be the same: Their unwillingness to disrupt their own business; their unwillingness to change shackles them.

But if Google gets its shit together, and that’s a big ‘if’, they are better positioned than anyone to win.

AUDIO GENERATION

The Best Audio Model Open-Source?

Sesame, the company that amazed the world a few days ago with what might be the best audio generation model in the industry (try it here), has open-sourced the base model for anyone to try, under an Apache 2.0 license (free to download AND commercialize). You can try that base model in a HuggingFace instance here, too.

TheWhiteBox’s takeaway:

I don’t know what you’re waiting for if you haven't tried speaking to these models (first link above).

As for the open-source release, when I say they have released the ‘base’ model, I mean that this isn’t the model you interact with in the first link above (that model will be released as a product shortly). The demo model is a fine-tuned version (post-trained to improve its capabilities) of the base model that is now free to download.

Still, a great model for any team trying to build an in-house speaking agent.

The most surprising aspect of this model is its small size (just one billion parameters). This is huge because it proves that running state-of-the-art audio generation models on your own computer (or smartphone) is actually possible.

TREND OF THE WEEK

Why OpenAI’s New Agent Products Matter

Last week, we discussed how agents were still a long way off. But two days ago, OpenAI released a new set of features that may have made agents much more real: the Responses API and the Agents SDK.

With NVIDIA’s GTC coming next week, we will see whether larger enterprises are actually adopting agents.

In a nutshell, these are their two new proposals focused on one thing only: agents. But this release has more significant repercussions:

It cements a future where most software is programmed with English, no coding required,

It could also confirm that, despite AI investing being the biggest driver of capital allocation, most private investors won’t make a single dime in AI because OpenAI is targeting their startups.

Read on if you want to understand OpenAI’s new business and the hard truths of AI investing.

Agents on Demand?

When you picture an AI agent, what comes to mind? Whatever that is, let’s break it down from first principles: Agents, as defined by most, are semi-autonomous or autonomous machines that can take actions on your behalf.

The High-level Idea of Agents

In practice, they are mostly based on three things:

An AI model, in charge of processing the input (understanding user intent) and choosing/guiding the actions of the model.

A memory source that provides essential dynamic context to the model based on the user’s request.

Tools that allow the model to take action.

For example, an agent can be a Large Language Model (usually a reasoning model) with access to your company’s financial data and connected to Stripe’s SDK to make/request payments. Consequently, when the user asks the agent to send a ‘make payment’ reminder to supplier ABC Corp, the agent will execute the following procedure:

Capture user intent (request payment from ABC).

Make a plan (if necessary)

Use the user’s input to perform a semantic search on company data to find ABC Corp’s information.

With that information, call the Stripe system via the SDK, sending the necessary information

The Stripe system executes the payment request.
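The procedure above can be sketched end to end with stand-ins for each piece. Everything here is hypothetical: a keyword rule plays the role of the LLM, a dictionary lookup replaces semantic search over company data, and `send_payment_request` is a placeholder rather than a real payment SDK call.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

# Memory: a stand-in for the company data the agent can search.
SUPPLIERS: Dict[str, Dict[str, str]] = {
    "ABC Corp": {"email": "billing@abc.example", "amount_due": "1200.00"},
}


@dataclass
class PaymentRequest:
    supplier: str
    email: str
    amount: str


SENT: List[PaymentRequest] = []  # records what the fake payment tool was asked to do


def detect_intent(message: str) -> Optional[str]:
    """Step 1: a keyword rule standing in for the LLM's intent detection."""
    return "request_payment" if "payment" in message.lower() else None


def find_supplier(message: str) -> Optional[str]:
    """Step 3: naive lookup standing in for semantic search over company data."""
    for name in SUPPLIERS:
        if name.lower() in message.lower():
            return name
    return None


def send_payment_request(req: PaymentRequest) -> str:
    """Steps 4-5: placeholder for the call to a payment provider's SDK."""
    SENT.append(req)
    return f"payment request for {req.amount} sent to {req.email}"


def run_agent(message: str) -> str:
    if detect_intent(message) != "request_payment":
        return "no action taken"
    supplier = find_supplier(message)
    if supplier is None:
        return "supplier not found"
    record = SUPPLIERS[supplier]
    # Step 2 (planning) is trivial here: a single tool call is enough.
    request = PaymentRequest(supplier, record["email"], record["amount_due"])
    return send_payment_request(request)


print(run_agent("Send a payment request reminder to ABC Corp"))
```

In a real agent the model, not hand-written rules, would decide which step comes next; the sketch only shows how model, memory, and tools fit together.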

Here, the human only declares what he or she wants, and everything else happens autonomously. That’s the future AI companies envision.

And now, OpenAI has pulled us closer to that future. But how?

All this… by Default

There are two ways OpenAI offers its models:

You can access the models via your browser/desktop, with the ChatGPT app, which is how most users access them.

Via APIs, a way to interact with their models via code, which is the primary method for enterprises that want to streamline/automate their business processes with AI

Regarding point 2, most of these APIs are back-and-forth chat generators. You send the API your question, the model behind that API answers, and you receive its response.

It’s basically a chatting interface. You can also send files, like images or PDFs, and then chat with the models about these files. Therefore, every interaction with the models is similar to that of the ChatGPT app, a back-and-forth conversation.

While ChatGPT has a fixed subscription price, API prices are usage-based, and every interaction is billed based on the number of input/output words, or tokens (output words are roughly three times more expensive).

And what about reasoning models, the models that think before they speak? Well, as they think in natural language, their thinking words are also output words, and they are billed just like any other output word (which makes these models more expensive to use).
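A small calculator makes the billing effect visible. The prices below are made-up placeholders chosen only to respect the roughly 3x output-to-input ratio mentioned above; they are not any provider's actual rates.

```python
def request_cost(
    input_tokens: int,
    output_tokens: int,
    reasoning_tokens: int = 0,
    input_price_per_m: float = 1.0,    # hypothetical USD per million input tokens
    output_price_per_m: float = 3.0,   # hypothetical, ~3x the input price
) -> float:
    """Cost of one API call in USD; reasoning tokens are billed as output."""
    billed_output = output_tokens + reasoning_tokens
    return (input_tokens * input_price_per_m
            + billed_output * output_price_per_m) / 1_000_000


# Same question and same visible answer, with and without hidden "thinking".
plain = request_cost(input_tokens=1_000, output_tokens=500)
reasoning = request_cost(input_tokens=1_000, output_tokens=500, reasoning_tokens=4_000)

print(f"plain: ${plain:.4f}  reasoning: ${reasoning:.4f}  ratio: {reasoning / plain:.1f}x")
```

With these placeholder numbers, 4,000 thinking tokens make the call several times more expensive even though the visible answer is identical.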

Output tokens are more expensive than input tokens because the prefilling stage (when the cache is built once the input is sent to the model) is highly akin to AI training: it relies on matrix-to-matrix multiplication, which is a much more efficient workload for GPUs. Autoregressive generation (when the model generates words one after the other) is dominated by matrix-vector computation and is thus much more inefficient to serve.
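One way to see the gap is arithmetic intensity: how many floating-point operations the GPU gets to do per byte it moves from memory. The sketch below estimates it for a single square weight matrix, under simplifying assumptions (FP16 values, no attention, no batching across users); the 4096 hidden size and 2048-token prompt are arbitrary illustrative values.

```python
def arithmetic_intensity(tokens: int, d_model: int, bytes_per_value: int = 2) -> float:
    """FLOPs per byte moved for one (tokens x d_model) @ (d_model x d_model) matmul."""
    flops = 2 * tokens * d_model * d_model                    # one multiply + one add per term
    weight_bytes = d_model * d_model * bytes_per_value        # weights are read once
    activation_bytes = 2 * tokens * d_model * bytes_per_value # inputs read, outputs written
    return flops / (weight_bytes + activation_bytes)


# Prefill: the whole prompt goes through the matrix at once (matrix-matrix).
prefill = arithmetic_intensity(tokens=2048, d_model=4096)
# Decode: one new token at a time (matrix-vector).
decode = arithmetic_intensity(tokens=1, d_model=4096)

print(f"prefill: {prefill:.0f} FLOPs/byte, decode: {decode:.2f} FLOPs/byte")
```

During decoding the same weights must be read from memory to produce a single token, so the GPU spends most of its time waiting on memory rather than computing, which is why each output token costs more to serve.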

But before today’s announcement, how did tools come into the picture so the AI could take action?

Previously, if you sent a model a list of accessible tools and a request that required one of them, the AI would detect that need and, instead of returning a text response, would return a ‘stop_reason’ flag along with the tool's name. In other words, the model responded by indicating that it wanted to use a given tool, but it was up to you to write the code that actually called it.

Thus, the developer would then need to call the tool in question, retrieve the result, and provide it to the model. As you may guess, this experience is not ideal, as the developer has to manage tool use.
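The manual loop described above looks roughly like this. `fake_model` is a stand-in for a real LLM API call, and the response shape (`stop_reason`, `tool`, `arguments`) is a hypothetical simplification rather than any provider's exact schema.

```python
from typing import Any, Callable, Dict, List


def fake_model(messages: List[Dict[str, Any]]) -> Dict[str, Any]:
    """Stand-in for an LLM API call (hypothetical response shape)."""
    last = messages[-1]
    if last["role"] == "tool":
        # A tool result is available, so the model can answer in text.
        return {"stop_reason": "end_turn", "text": f"It is {last['content']} in Denver."}
    # Otherwise the model only *requests* a tool; it does not run it.
    return {"stop_reason": "tool_use", "tool": "get_weather", "arguments": {"city": "Denver"}}


# Tools the developer has implemented (the result is hard-coded for the demo).
TOOLS: Dict[str, Callable[..., str]] = {
    "get_weather": lambda city: "sunny",
}


def run(question: str) -> str:
    messages: List[Dict[str, Any]] = [{"role": "user", "content": question}]
    while True:
        reply = fake_model(messages)
        if reply["stop_reason"] != "tool_use":
            return reply["text"]
        # The developer's code has to execute the requested tool...
        result = TOOLS[reply["tool"]](**reply["arguments"])
        # ...and feed the result back so the model can finish its answer.
        messages.append({"role": "tool", "content": result})


print(run("What's the weather in Denver?"))
```

The `while` loop is the part the developer previously had to write and maintain for every tool.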

Therefore, OpenAI is proposing two things:

Native tools, described below,

Tool loops via the Agents SDK, where the agent autonomously executes the tool you define and continues the process without needing your scripted intervention.

In simple words, OpenAI is adding two new abstractions (things the user no longer has to worry about), making the whole experience much more… dare I say, agentic?

As for the native tools, these are:

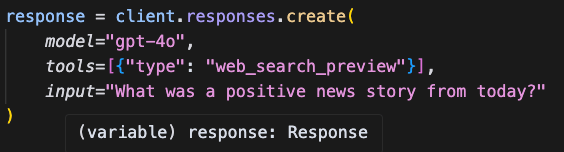

Web search: Just like in the ChatGPT app, the AI models can now autonomously search the web if the user’s request requires it (building the web search tool yourself, as I did once using the Brave API, is not an ideal experience, and the actual data extraction from the web is much more complex than it sounds). Now, you simply make the tool available to the agent, and you’re off to the races.

This is literally all the code needed to get a response from an AI model searching the web
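For reference, a minimal sketch of such a call, assuming the `openai` Python package is installed and an `OPENAI_API_KEY` environment variable is set. The model name and the `web_search_preview` tool type reflect the Responses API as documented at launch and may have changed since; the two helper function names are made up for this example.

```python
from typing import Any, Dict


def build_web_search_request(question: str, model: str = "gpt-4o") -> Dict[str, Any]:
    """Request body: the only agent-specific part is the `tools` entry."""
    return {
        "model": model,
        "tools": [{"type": "web_search_preview"}],  # launch-time tool name (assumption)
        "input": question,
    }


def ask_with_web_search(question: str) -> str:
    """Sends the request; needs network access and a valid API key."""
    from openai import OpenAI  # imported lazily so the sketch loads without the package

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.responses.create(**build_web_search_request(question))
    return response.output_text


print(build_web_search_request("What was a positive news story from today?"))
```

Calling `ask_with_web_search(...)` would return the model's answer as text; the model decides on its own whether the question needs a search.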

File search: You can send the model files and chat with them. This was already offered previously.

This seems like table stakes, but building a file search system is much more complicated than it reads. Instead of searching by keywords (your question uses the word ‘cat’, so I will search for documents that use the word ‘cat’ several times), the model uses semantic similarity to choose which file to use as context for your response.

To do this, files are chunked into text pieces, each stored separately. When the user asks a question, the model searches for text chunks that are semantically relevant to the request, adds them to the context, and then responds.
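The chunk, embed, and rank pipeline can be sketched in a few lines. One loud caveat: this toy swaps a learned embedding model for plain word-count vectors, so it only captures word overlap rather than true semantic similarity. The pipeline shape is the point; the function names and the sample document are invented for illustration.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def chunk(text: str) -> List[str]:
    """Split a document into sentence-sized chunks."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def embed(text: str) -> Counter:
    """Toy 'embedding': word counts (a real system would use a learned model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def search(query: str, chunks: List[str], top_k: int = 1) -> List[Tuple[float, str]]:
    """Rank chunks by similarity to the query and keep the best top_k."""
    q = embed(query)
    scored = [(cosine(q, embed(c)), c) for c in chunks]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)[:top_k]


document = (
    "Refunds are processed within five business days. "
    "Invoices are emailed on the first day of each month. "
    "Support is available by chat on weekdays."
)
chunks = chunk(document)
best_score, best_chunk = search("When are refunds processed?", chunks)[0]
print(best_chunk)
```

The winning chunk would then be added to the model's context before it answers, which is the retrieval step a hosted file search tool handles for you.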

There are too many things I’m not explaining here, so if you want to dive deep into all this, read my explanation of embeddings (numerical representations of text used to compute text similarity), my Notion article on reranking (an essential piece that OpenAI also does for you), and contextual retrieval, another Notion article describing more advanced retrieval systems.

And third and most intriguing, we have…

Computer Use: You can now also send the model an action request that it will execute on a remote browser. For instance, you can ask it to buy a flight ticket to Denver, and the model will do it for you.

Long story short, OpenAI will go to great lengths to offer extensive agent functionality out of the box with little to no effort on your side. But they didn’t stop there; they also wanted to show their vision for multi-agents.

The Future of the Future

While most people are still learning how to run agents properly, OpenAI already offers features that allow you to deploy not one agent but a swarm of them (similar to Microsoft’s proposal, AutoGen).

The Agents Software Development Kit (SDK) allows you to define agents on the fly and let them autonomously coordinate with each other. The SDK includes many cool features, the most futuristic of which is handoffs, where an agent can autonomously choose to delegate work to another agent.

For instance, you may define billing, refund, and triage agents and, if the billing agent identifies the user’s request as a refund, delegate that job to the refund agent (or in the example below, it’s the triage agent who’s in charge of making the delegation).

    from agents import Agent, handoff

    # Specialist agents that can receive delegated work.
    billing_agent = Agent(name="Billing agent")
    refund_agent = Agent(name="Refund agent")

    # The triage agent may hand a conversation off to either specialist.
    triage_agent = Agent(name="Triage agent", handoffs=[billing_agent, handoff(refund_agent)])

Importantly, this is a step toward “programming via English,” a future where most of the code is actually English. Put another way:

With every new iteration of these products, humans do less and AIs more.

But the main takeaway for many is the serious repercussions this has for AI startups.

A Steamroller

In an interview last year, OpenAI’s CEO, Sam Altman, urged AI startups to stay out of the way of foundation model companies like Anthropic or OpenAI because, otherwise, “they would be steamrolled.”

The issue is that most AI startups are wrappers built on foundation models built by companies with every incentive to verticalize upward and cannibalize the AI startups using their models.

For example, many AI startups offer search, context retrieval, and other features that OpenAI et al. didn’t offer… until two days ago. Focusing on file search, unless your company explicitly prohibits OpenAI due to data security concerns or you prefer other models, it’s really hard to justify building your own RAG pipeline when OpenAI offers it by default.

I can think of dozens of startups whose business relies precisely on this, meaning OpenAI has made their value drop to close to zero.

All this takes me to a conclusion: I sincerely believe there hasn’t been a harder time in history to be a Venture Capitalist or an AI founder. Your biggest concern as an AI app-layer start-up is that you are fighting against AIs that are improving daily. Your startup's existence depends on whether OpenAI chooses to deploy your features into its API, rendering your entire business useless.

This leaves little room for VCs, as:

Hardware and infrastructure are mostly public companies.

Model layers are insanely cash-demanding with little-to-no margins (i.e., no outsized returns unless the already-inflated companies go public one day for an exorbitant amount),

and the application layer sees how the model layer eats into it more and more by the day.

This raises the question: Is there money to be made in capital markets?

Until next time!
