10x Your Outbound With Our AI BDR

Scaling fast but need more support? Our AI BDR Ava enables you to grow your team without increasing headcount.

Ava operates within the Artisan platform, which consolidates every tool you need for outbound:

300M+ High-Quality B2B Prospects, including E-Commerce and Local Business Leads

Automated Lead Enrichment With 10+ Data Sources

Full Email Deliverability Management

Multi-Channel Outreach Across Email & LinkedIn

Human-Level Personalization

THEWHITEBOX

TLDR;

This week, we cover 👾OpenAI’s GPT-4.5 debut with a focus on emotional intelligence rather than groundbreaking advancements, raising concerns over its steep pricing. Meanwhile, 🚄Inception Labs has introduced Mercury Coders, pioneering diffusion-based LLMs that generate text faster than traditional models.

Moving on, we look at Amazon and Hume AI. The former has 🏠revamped Alexa with Claude-powered AI, aiming to dominate the smart home market, while the latter has launched 🎵Octave, a voice model integrating deep emotional expression.

We then move on to 🕸️Poe Apps, which enable rapid AI-driven app creation and excel at prototyping, and take a close look at NVIDIA’s earnings report and what I believe is missing from it. Lastly, we discuss in more detail Figure AI’s 🤖Helix, a multi-robot control system that mimics human cognition and a clear robotics breakthrough.

FRONTIER RESEARCH

Finally, OpenAI Releases GPT-4.5

Weeks after Sam Altman announced OpenAI’s intentions, GPT-4.5 has finally arrived. And the results are, well, interesting. From the live stream, it was clear that the model’s EQ (Emotional Quotient), not new intelligence frontiers, would be the main protagonist.

In other words, OpenAI emphasized that the model seems more emotionally aware, more concise in its responses, and better at understanding human intent and feelings, but it is not “frontier” in the sense of crushing other models on complex benchmarks, something Sam Altman himself acknowledged.

Surprisingly, the model doesn’t seem to be a huge improvement (the system card initially stated that ‘GPT-4.5 is not a frontier model,’ a line OpenAI later removed), which makes its astronomical prices even harder to justify: $75 per million input tokens and $150 per million output tokens. For reference, that’s 500 and 250 times more expensive, respectively, than OpenAI’s cheapest model, GPT-4o-mini.

Yes, those numbers are actually genuine.
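To make the price gap concrete, here is the arithmetic as a small Python sketch. The GPT-4.5 prices come from the text; the GPT-4o-mini list prices ($0.15 per million input tokens, $0.60 per million output tokens) are an assumption based on OpenAI’s published pricing at the time of writing, so adjust them if they change.

```python
# Per-million-token list prices in USD.
# GPT-4.5 figures are from the text; GPT-4o-mini figures are an ASSUMPTION
# based on OpenAI's published list prices at the time of writing.
PRICES = {
    "gpt-4.5": {"input": 75.00, "output": 150.00},
    "gpt-4o-mini": {"input": 0.15, "output": 0.60},
}

def price_ratio(expensive: str, cheap: str, kind: str) -> float:
    """How many times more `expensive` costs than `cheap` for one token kind."""
    return PRICES[expensive][kind] / PRICES[cheap][kind]

input_ratio = price_ratio("gpt-4.5", "gpt-4o-mini", "input")
output_ratio = price_ratio("gpt-4.5", "gpt-4o-mini", "output")
print(f"input: {input_ratio:.0f}x, output: {output_ratio:.0f}x")  # input: 500x, output: 250x
```

Note that the input multiple is the larger one, because GPT-4o-mini’s output tokens cost four times its input tokens, while GPT-4.5’s cost only twice as much.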

But what does all this tell you about OpenAI’s future? Why are they releasing a model they acknowledge isn’t “frontier”? Are OpenAI’s days long gone? Is this terrible news for NVIDIA? What other insights can be concluded based on the release?

Overall, it’s safe to say that people are underwhelmed. But I believe they are misusing the model and, crucially, not seeing the bigger picture. In fact, I’ll go so far as to say that this model was never meant to be used for anything other than a declaration of intentions. This Sunday, we’ll cover all the details from architecture, training techniques, compute, evaluations, and, crucially, what OpenAI’s real plans are.

FRONTIER RESEARCH

Meet the Mercury Family, the First Powerful dLLMs

Inception Labs, a startup founded by professors from several top AI universities, has come out of stealth to present the Mercury Coder family. At first, this looks like just another AI coding model release.

However, the release has gone viral, which should tell you it isn’t.

As you may have noticed in the video above, Mercury Coders represent a fundamentally different approach to AI models, one that makes them blazing fast. They are diffusion large language models (dLLMs) rather than autoregressive LLMs, the traditional approach followed by all frontier models (ChatGPT, Grok, Claude, Gemini, etc.).

As you probably know, most LLMs (both reasoning and non-reasoning models) work by predicting the next word in a sequence. During training, this is not a problem: the full text is already known, so the predictions for every position can be computed at once, and you can increase the number of sequences processed in parallel without worrying about latency. During inference (when people use the model), however, generation becomes sequential, because predicting the next word requires all the previous ones. This is what makes these models ‘autoregressive.’
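A minimal Python sketch of the autoregressive loop described above, assuming a hypothetical `model` callable that stands in for one forward pass of a real LLM. The point is the dependency structure: each call needs the full prefix, so N new tokens cost N sequential forward passes.

```python
from typing import Callable, List, Tuple

# Hypothetical stand-in for one LLM forward pass: prefix in, next token out.
NextTokenModel = Callable[[List[str]], str]

def autoregressive_generate(
    model: NextTokenModel, prompt: List[str], max_new_tokens: int
) -> Tuple[List[str], int]:
    """Generate one token per forward pass, each conditioned on all previous tokens."""
    tokens = list(prompt)
    forward_passes = 0
    for _ in range(max_new_tokens):
        next_token = model(tokens)  # needs the whole prefix, so steps cannot overlap
        forward_passes += 1
        tokens.append(next_token)
        if next_token == "<eos>":
            break
    return tokens[len(prompt):], forward_passes

def make_toy_model(answer: List[str], prompt_len: int) -> NextTokenModel:
    """Hypothetical 'model' that simply replays a fixed answer, one token per call."""
    def model(tokens: List[str]) -> str:
        return answer[len(tokens) - prompt_len]
    return model

prompt = ["Where", "was", "the", "statue", "?"]
answer = ["inside", "the", "marble", "<eos>"]
output, passes = autoregressive_generate(
    make_toy_model(answer, len(prompt)), prompt, max_new_tokens=10
)
print(output, passes)  # 4 new tokens -> 4 sequential forward passes
```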

Instead, diffusion (which, by the way, is the default approach to image and video generation) works by denoising the response. But what does that even mean?

I always quote Michelangelo, the Renaissance sculptor, to explain diffusion models. He claimed the statues were already inside the marble; he just needed to chisel away the excess or noise. Diffusion models work in a strikingly similar way. They start with a coarse (rough) representation of the output and progressively refine it by denoising (eliminating the noise or impurities).

This allows dLLMs to produce many tokens, or words, in every denoising step, progressively ‘unearthing’ the final output, similar to how the cat ‘emerges’ from the white noise in the image above.

Another way to look at diffusion models is that they one-shot a rough output and then progressively improve it, much like writing a paragraph in one go and then iteratively refining it until you reach the final text. In contrast, autoregressive LLMs predict word by word and cross their fingers that the resulting answer is good.
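Below is a toy, masked-diffusion-style decoding loop in Python that illustrates the coarse-to-fine idea. It is an assumption for illustration, not Inception Labs’ actual Mercury algorithm: the hypothetical `denoiser` scores every still-masked position in a single pass, and each step commits only the most confident guesses. This simplified version never revises a committed token, whereas real diffusion models can keep refining the whole draft.

```python
from typing import Callable, List, Optional, Tuple

# None marks a position that is still "noise"; a string is a committed token.
Draft = List[Optional[str]]
# Hypothetical denoiser: ONE forward pass proposes (position, token, confidence)
# for EVERY masked position at once.
Denoiser = Callable[[Draft], List[Tuple[int, str, float]]]

def diffusion_generate(denoiser: Denoiser, length: int, tokens_per_step: int) -> Tuple[Draft, int]:
    if tokens_per_step < 1:
        raise ValueError("tokens_per_step must be at least 1")
    draft: Draft = [None] * length  # start from a fully masked ("noisy") draft
    steps = 0
    while any(tok is None for tok in draft):
        proposals = denoiser(draft)  # all masked positions scored in parallel
        steps += 1
        # Commit only the most confident proposals; the rest stay masked and are
        # revisited next step with more context (coarse-to-fine refinement).
        proposals.sort(key=lambda p: p[2], reverse=True)
        for pos, tok, _confidence in proposals[:tokens_per_step]:
            draft[pos] = tok
    return draft, steps

def make_toy_denoiser(answer: List[str]) -> Denoiser:
    """Hypothetical denoiser that 'knows' the answer; its confidence is higher
    for positions whose neighbours are already revealed."""
    def denoise(draft: Draft) -> List[Tuple[int, str, float]]:
        proposals = []
        for i, tok in enumerate(draft):
            if tok is None:
                revealed_neighbours = sum(
                    1 for j in (i - 1, i + 1) if 0 <= j < len(draft) and draft[j] is not None
                )
                proposals.append((i, answer[i], revealed_neighbours + 1.0 / (i + 1)))
        return proposals
    return denoise

answer = ["def", "add", "(", "a", ",", "b", ")", ":"]
output, steps = diffusion_generate(make_toy_denoiser(answer), len(answer), tokens_per_step=3)
print(output == answer, steps)  # True 3
```

With 8 tokens and 3 commits per step, the draft is finished in 3 denoising passes instead of the 8 a token-by-token loop would need; assuming each pass costs roughly the same, that ratio is where the speedup comes from.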

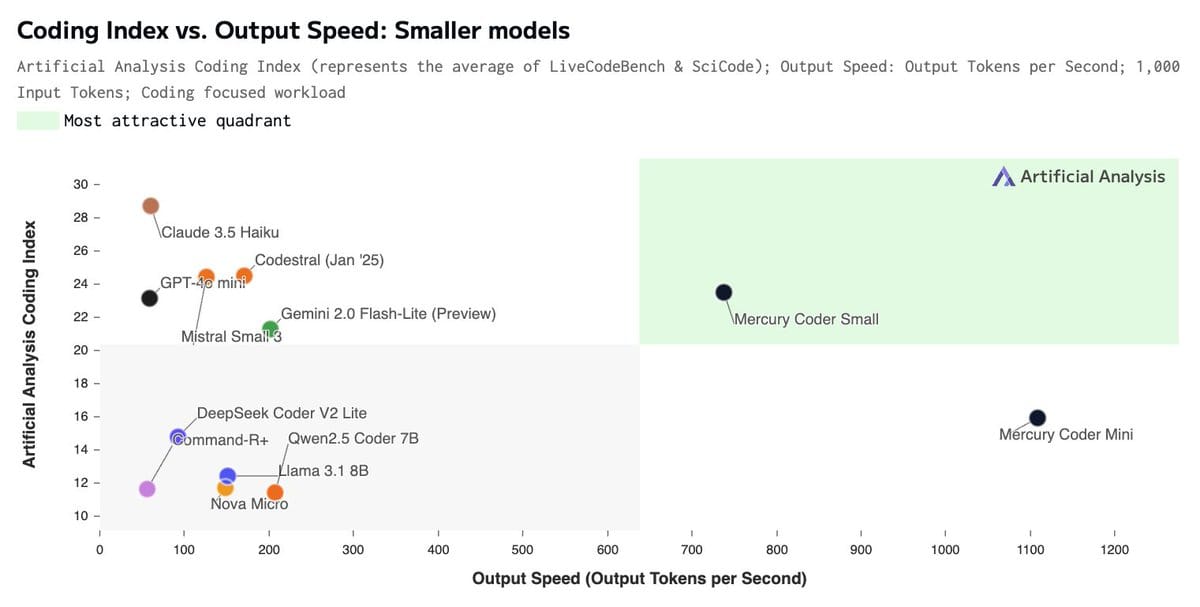

In practice, this makes diffusion LLMs much faster than autoregressive LLMs, because much more output is generated at each step. Furthermore, the models perform stupendously well, matching or exceeding similar-sized models while being up to ten or twenty times faster.

TheWhiteBox’s takeaway:

In an AI world where the number of tokens to be generated is exploding due to the emergence of reasoning models and better video models, there are strong incentives to build faster models.

Inception Labs is proposing a challenge to the status quo that is also orthogonal to hardware progress: better hardware for autoregressive LLMs is also better for dLLMs, because their processing backbone, the model itself, is almost identical. The “only” change is how the model outputs data.

In addition to inference speed, the researchers insist that this paradigm shift will also produce smarter models. The reason is that dLLMs aren’t forced to only look back: in autoregressive models, a new word can only be predicted from previous words, whereas in dLLMs, each word is generated jointly with both past and future ones. This allows them to approach reasoning tasks with a more holistic view that is then refined over multiple steps.

Think of it this way: in some complex tasks, you might have a good notion of the overall solution but not be quite sure of the intermediate details. With autoregressive LLMs, you’re out of luck, as you must work through the intermediate details first to reach the outcome. dLLMs, however, allow you to define a rough, overall approach to the problem and refine it over time. Problems that require complex reasoning involve several reflection and correction steps, so this ‘coarse-to-fine’ approach (as Inception Labs describes it) might hold real promise for improving model intelligence, too. If so, what reasons remain to keep autoregressive models?
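One way to picture “only looking back” is the attention mask, which decides which positions each token may use as context. The sketch below assumes, as is typical for masked-diffusion language models, that a dLLM’s denoiser uses bidirectional attention while an autoregressive LLM uses a causal mask; Mercury’s exact architecture isn’t described here, so treat this as illustrative.

```python
from typing import List

Mask = List[List[int]]

def causal_mask(n: int) -> Mask:
    """Autoregressive LLM: position i may attend only to positions j <= i."""
    return [[1 if j <= i else 0 for j in range(n)] for i in range(n)]

def bidirectional_mask(n: int) -> Mask:
    """Diffusion-style denoiser (assumed): every position may attend to every other."""
    return [[1] * n for _ in range(n)]

def visible_context(mask: Mask, i: int) -> List[int]:
    """Positions that token i is allowed to look at."""
    return [j for j, allowed in enumerate(mask[i]) if allowed]

print(visible_context(causal_mask(4), 1))         # [0, 1]
print(visible_context(bidirectional_mask(4), 1))  # [0, 1, 2, 3]
```

Token 1 in the causal case never sees tokens 2 and 3, which is exactly why an autoregressive model must commit to intermediate details before it “knows” the ending.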

Overall, amazing release! And you know what’s best? You can try it today for free here.

HOME AI

Alexa Just Revamped. Looks Good.

In a highly edited video, Amazon announced the new generation of Alexa, Alexa+, which, as we learned a few days earlier, is powered by Anthropic’s Claude. The assistant is now unequivocally described as an agent that can take instructions, make suggestions, and even act on your behalf.

TheWhiteBox’s takeaway:

Anthropic’s strategic move to focus on coding and agents could soon be considered a master pivot.

It’s hardly debatable that Claude models are the best for coding (although Claude 3.7 Sonnet does overthink things a bit too much), and many top programmers see Claude Code, Anthropic’s new coding tool, as a gift from God. But Claude 3.7 Sonnet is also great at agentic tool use (using tools effectively). If Alexa+ delivers on what the video promises, it could quickly eat the smart home market. While Google still has something to say, I don’t believe Apple stands any chance; it is simply too far behind at the software level.

VOICE AI

Hume’s New Voice Creator

I’ve discussed Hume AI several times. The company is developing the best emotion-based speech models.

Now, it has announced Octave, the first LLM designed for text-to-speech. It lets you generate unique voices from prompts and narrate videos while displaying great emotion.

If you’re curious, you can try it for free here.

TheWhiteBox’s takeaway:

The key point behind Hume’s efforts is that they’ve focused on emotion from the very beginning. Through semantic space theory, they are not only trying to create AI voice products but are actively pushing the frontiers of knowledge regarding emotion.

They have even created the first AI-based emotion maps, in which AI models use the cues in our voices and facial expressions to map them onto emotions not previously identified, challenging decades-old theories like the Six Major Theories of Emotion.

SOFTWARE DEVELOPMENT

Poe Apps, Amazing for Prototyping

Poe has released Poe Apps, a new feature that allows you to create apps by interacting with AI models. The video in the link shows how you can build usable and well-designed apps via a handful of interactions.

TheWhiteBox’s takeaway:

One-shot web app generators, like Poe Apps or Replit Agent, are fuel for virality because they truly feel like magic. However, when building real apps, the human factor is still crucial.

To me, these tools feel great for prototyping (and a real threat to businesses like Figma) but not for building production-ready applications, unless you want to ship bug-heavy products.

As a principal engineer at Google puts it:

HARDWARE

NVIDIA Presents Quarterly Earnings

NVIDIA has reported record financial results for the fourth quarter and fiscal year 2025, driven by strong demand for its AI-focused hardware. The company’s revenue for Q4 reached $39.3 billion, a 12% increase from the previous quarter and a 78% rise compared to the same period last year. Net income for the quarter was $22.1 billion, up 14% sequentially and 80% year over year.

For the full fiscal year, NVIDIA’s revenue totaled $130.5 billion, a 114% increase from the prior year. Net income was $72.9 billion, up 145% year-over-year.

Unsurprisingly, the core contributor to this growth is the Data Center segment, aka the segment where NVIDIA sells AI chips, which reported $35.6 billion in revenue for Q4, a 16% increase from Q3 and a 93% surge year-over-year.

This surge is largely attributed to the successful ramp-up of NVIDIA’s Blackwell AI supercomputers, which achieved billions in sales during their first quarter of availability. CEO Jensen Huang highlighted the exceptional demand for Blackwell, noting its impact on advancing AI capabilities across industries.

Interestingly, despite the widespread (and mistaken) assumption, based on DeepSeek’s results, that demand for NVIDIA chips would fall, NVIDIA’s guidance was also positive, projecting revenue of $43 billion for the first quarter of fiscal year 2026.
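As a quick consistency check on the growth rates reported above, the Python sketch below backs out the implied prior-period figures from the rounded values in the text. It uses only numbers quoted in this section, so the results carry some rounding error.

```python
def implied_prior(current: float, growth_pct: float) -> float:
    """Back out the earlier-period value from a current value and its % growth."""
    return current / (1 + growth_pct / 100)

# (label, current value in $B, reported growth %, comparison period), all from the text
FIGURES = [
    ("Q4 revenue", 39.3, 12, "Q3 FY25"),
    ("Q4 revenue", 39.3, 78, "Q4 FY24"),
    ("Q4 data center revenue", 35.6, 16, "Q3 FY25"),
    ("Q4 data center revenue", 35.6, 93, "Q4 FY24"),
    ("FY25 revenue", 130.5, 114, "FY24"),
    ("FY25 net income", 72.9, 145, "FY24"),
]

for label, value, growth, period in FIGURES:
    prior = implied_prior(value, growth)
    print(f"{label} ${value}B (+{growth}%) implies {period} of about ${prior:.1f}B")
```

For example, $130.5 billion after 114% growth implies roughly $61.0 billion of revenue in the prior fiscal year, and $72.9 billion of net income after 145% growth implies roughly $29.8 billion.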

TheWhiteBox’s takeaway:

There are several things to point out. First, the reality that gross margins are falling (Jensen and especially Colette Kress, the CFO, were consistently asked about this) has finally hit Wall Street. At first, one might suggest that this is due to increasing competition. However, let’s be real:

What competition?

The crux of the problem is that Blackwell chips carry worse gross margins than the Hopper platform (H100/H20/H800/H200 chips). As SemiAnalysis outlines, the ASP (average selling price) of Blackwell chips has grown proportionally less, relative to Hopper GPUs, than their costs, which have doubled. Colette said NVIDIA expects margins to grow back into the “mid-70s,” the average gross margin for 2024, while margins are now heading toward 70% (guidance points to 70.6% next quarter).
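Why costs that grow faster than prices squeeze margins is easiest to see with numbers. The sketch uses hypothetical, normalized figures (a Hopper-class ASP of 100 and a unit cost of 25), not NVIDIA’s real ASPs or costs, and keeps only the one assumption from the text: Blackwell’s unit cost is double Hopper’s.

```python
def gross_margin(asp: float, unit_cost: float) -> float:
    """Gross margin = (average selling price - unit cost) / average selling price."""
    return (asp - unit_cost) / asp

# HYPOTHETICAL, normalized numbers; NOT NVIDIA's actual ASPs or costs.
HOPPER_ASP, HOPPER_COST = 100.0, 25.0  # a 75% margin baseline
BLACKWELL_COST = 2 * HOPPER_COST       # "costs have doubled"

print(f"Hopper baseline: {gross_margin(HOPPER_ASP, HOPPER_COST):.1%}")
for asp_multiple in (1.5, 2.0, 2.5):
    margin = gross_margin(HOPPER_ASP * asp_multiple, BLACKWELL_COST)
    print(f"Blackwell ASP x{asp_multiple}: {margin:.1%}")
```

With doubled costs, the margin falls to 66.7% if the ASP grows only 1.5x, holds at 75.0% only if the ASP also doubles, and rises to 80.0% at 2.5x: the margin is preserved only when prices grow at least as fast, in proportion, as costs.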

I also missed any reference to new revenue from the robotics and cloud businesses, two strategic revenue sources for a company that is basically all AI at this point and needs to diversify quickly. Data center revenue accounts for 90.5% of total revenue, and gaming GPU revenue actually fell by 22% from the previous quarter.

For this reason, NVIDIA is trying to become a player in robotics (by enabling the simulation environments where AIs are trained) and cloud computing, offering its large GPU install base to customers through services like NIM (NVIDIA Inference Microservices). It announced partnerships on the latter (AWS and Verizon) but made no mention of direct revenues.

In my humble opinion, it is too early to worry about a company consistently beating analyst expectations, but I would be rattled if no revenues in these areas are announced during 2025.

TREND OF THE WEEK

Figure AI’s Robotic Breakthrough: Helix

Recently, Figure AI, a billion-dollar AI robotics company, announced the cancellation of its partnership with OpenAI, a move as bold as it gets. Until then, the robot’s brain was a fine-tuned OpenAI model, but the company’s announcement alluded to an internal breakthrough that allowed it to train its own models.

Weeks later, the company is looking to raise money at a staggering $39.5 billion valuation, 15 times its valuation from last year, a jump rarely seen even amid the AI craze (companies usually grow their valuations two or three times between rounds, not fifteen).

Why such bold moves?

The reason might be Helix, their new vision-language-action (VLA) robotics model, which also happens to be extremely bullish for, you guessed it, NVIDIA.

A First in Many Regards

Helix is a first in many aspects, such as:

Full Upper-Body Control: Helix is the first VLA capable of delivering high-rate, continuous control over the entire humanoid upper body, including the wrists, torso, head, and individual fingers.

Multi-Robot Collaboration: Helix is the first VLA to operate across two robots simultaneously, allowing them to coordinate on shared, long-horizon manipulation tasks involving unfamiliar objects.

Versatile Object Handling: Robots powered by Helix can now grasp and manipulate virtually any small household object, including thousands they have never encountered before, guided only by natural language instructions.

Unified Neural Network: Unlike previous approaches, Helix employs a single set of neural network weights to learn a wide range of behaviors, from picking and placing objects to interacting with furniture and collaborating with other robots, all without task-specific fine-tuning.

But the most impressive aspect of the release is this model's multi-robot nature. In the release video, two robots interact, using Helix to collaborate effectively to solve team tasks, which is unprecedented (video thumbnail).

This is hugely impressive. So, how does Helix work?

The Cognition Hierarchy

Helix builds on the idea that an AI model's structure needs to be divided into fast and slow parts to create fully functional AI robots.

Fast models will process sensory and semantic data and execute actions at higher frequencies, like moving the actuators in a hand.

Slow models will process the same data, but the goals they have are higher-level and, crucially, the execution speed will be much lower.

This creates a cognition hierarchy. The slower yet more capable model handles harder, higher-level tasks, like reasoning about what to do next. In parallel, the faster yet less capable model executes low-level actions, like moving the hand or the head. Think about it this way: the slower model decides what the robot should do (goal-setter), a slower thinking process that requires a lower output frequency, and the faster model decides how to do it (executor), which involves hundreds of low-level movements and thus requires much faster output.

And why the different speeds? The key principle is that high-level decision-making (e.g., “grab the cup”) doesn’t need millisecond precision, but motor control (e.g., adjusting finger grip) does. Using a single model for both is not only painfully slow, but suboptimal performance-wise.

This way, the slower yet more thoughtful model takes the time it needs to decide what to do (plan the goal), while the smaller model handles the functions that require less intelligence but, importantly, must be executed faster (moving the hand in a specific way).

And how does it look under the hood?

A New Way of Creating Models

As mentioned, Helix is a perfect representation of this fast/slow architecture.

It encompasses two models:

System 2: A VLM (vision-language model), a Transformer (like ChatGPT) that processes images and the state of each actuator (wrist, torso, head, and individual fingers) at around 7-9 Hz (it generates outputs 7 to 9 times per second). This model handles the more complex tasks that require slower-yet-smarter thinking.

System 1: A faster and much, much smaller model (just 80 million parameters, roughly 90 times smaller than the 7-billion-parameter System 2) built on a convolutional vision backbone. It ingests the same data inputs, plus a semantic latent vector produced by System 2. The sensory inputs arrive at 20 Hz and the latent vector at 7-9 Hz, and, crucially, this model outputs the actual actions the robot makes at an astonishing 200 Hz (each robot executes 200 small actions per second). In other words, System 1 receives the same sensory inputs as System 2, plus System 2’s high-level directives (what it should do), and turns them into motor actions at around 200 moves per second.
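The rates above can be illustrated with a small simulation of the two-speed loop. `system2_plan` and `system1_act` are hypothetical placeholders, not Figure AI’s code, 8 Hz is an assumed midpoint of the quoted 7-9 Hz, and everything runs in one sequential loop purely to count updates; a real robot would run the two systems concurrently.

```python
from dataclasses import dataclass, field
from typing import List

CONTROL_HZ = 200  # System 1 action rate (from the text)
SENSOR_HZ = 20    # fresh sensory inputs reaching System 1 (from the text)
PLANNER_HZ = 8    # System 2 latent rate; the text says ~7-9 Hz, 8 is an ASSUMPTION

@dataclass
class Trace:
    latents: List[str] = field(default_factory=list)
    observations: List[int] = field(default_factory=list)
    actions: List[str] = field(default_factory=list)

def system2_plan(observation: int, tick: int) -> str:
    """Hypothetical slow planner: observation -> high-level latent goal ('what')."""
    return f"goal@{tick}(obs {observation})"

def system1_act(observation: int, latent: str, tick: int) -> str:
    """Hypothetical fast controller: latest observation + latent -> motor command ('how')."""
    return f"motor@{tick}[{latent}]"

def run(seconds: float = 1.0) -> Trace:
    trace = Trace()
    observation, latent = 0, ""
    for tick in range(int(seconds * CONTROL_HZ)):  # one tick = 5 ms at 200 Hz
        if tick % (CONTROL_HZ // SENSOR_HZ) == 0:
            observation = tick  # stand-in for new camera images + joint states
            trace.observations.append(observation)
        if tick % (CONTROL_HZ // PLANNER_HZ) == 0:
            latent = system2_plan(observation, tick)
            trace.latents.append(latent)
        trace.actions.append(system1_act(observation, latent, tick))
    return trace

trace = run(1.0)
print(len(trace.actions), len(trace.observations), len(trace.latents))  # 200 20 8
```

Over one simulated second, System 1 emits 200 actions (one every 5 ms) and receives 20 fresh observations, while System 2 updates its latent goal 8 times (one every 125 ms), so each high-level directive steers about 25 consecutive motor commands.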

And if you think about it, this is eerily similar to human cognition.

Thinking Similar to Humans

Humans process and execute many actions at insane speeds. At the same time, our more cognitively demanding tasks are handled by the brain’s prefrontal cortex at much slower speeds. In fact, Caltech researchers estimate that speed at around 10 bits per second, several orders of magnitude slower than other, unconscious cognitive processes in the brain, and much slower than the response rate of some individual neurons, which can process signals at up to 500 Hz (500 times per second) or even 1,000 Hz.

For humans, while we consciously govern the high-level movement of grabbing the chips, we aren’t consciously aware of every precise action of our joints and muscles that leads to that action.

Furthermore, this model separation allows the machine to interact with other robots, another crucial aspect humans excel at.

As seen in the video, one of the robots corrects its movement when it realizes the other robot has already completed that part of the overall job. This is possible because the fast thinker reacts almost immediately (it updates its moves 200 times per second) once it identifies the need for a correction. The larger model also picks up this change and, after acknowledging it, modifies the global goal (the overarching goal is yet to be achieved, but one of its steps is already fulfilled).

2025, The Year of Robotics?

The speed at which AI robotics is accelerating is dizzying (Figure AI isn’t the only company pushing the limits).

However, one big takeaway is that robotics companies no longer seem to require the efforts of model-layer companies like OpenAI. Instead, as knowledge on how to build the backbones of AI models democratizes, they are verticalizing upward in the AI pipeline to build the models themselves.

While there’s enormous value in companies like OpenAI, the potential of companies like Figure AI, which are literally about to deploy humanoids into our homes and factories, could make them even more valuable than software-only providers, which are bound to the digital realm.

Yes, model-layer companies have a huge disruption reach, especially in knowledge-worker jobs, but the transformative impact of robots across the labor spectrum is enormous. Blue-collar workers outnumber white-collar workers roughly two to one.

According to an AI-powered Deep Research analysis that combined data from ILOSTAT, the US Bureau of Labor Statistics, the UK’s Office for National Statistics, and Statista (to approximate Chinese data), and extrapolated from these four signals (hence, take the values with a pinch of salt), there are about 1.11 billion white-collar workers and 2.2 billion blue-collar workers in total.

All things considered, which Big Tech companies stand to win or lose the most here? It’s important to note that Figure AI’s robots are trained in simulation, not in the real world. They are sent into digital representations of the world, are trained there, and, if the simulation adheres to real life, they are seamlessly transferred into their physical bodies with no further training required.

And who is building such simulations?

You guessed it: NVIDIA, which, besides providing hardware and simulation environments, also happens to be an investor in Figure AI. Other potential beneficiaries of the robotics craze are Apple and Tesla, both of which have considerable hardware knowledge and data and, notably in the case of the bitten apple, cash flows that could sustain the necessary investments. However, as with Google, Apple’s greatest enemy is itself.

THEWHITEBOX

Join Premium Today!

If you like this content, you will receive four times as much content weekly by joining Premium without saturating your inbox. You will even be able to ask the questions you need answers to.

Until next time!


For business inquiries, reach out to me at [email protected]