FUTURE

AI Businesses that Will Print Money

Welcome back! I’ve gone down the rabbit hole of AI’s most closely guarded secrets to give you a set of ideas that will shape the world to come, to the point I believe the resulting businesses will literally print money.

These aren’t the boring ‘Agent for B2B Sales’ type of products we both know will fail, but ones that will actually transform entire markets and whose arrival is only a matter of time.

These are:

Agentic components, building what makes sense

Deterministic LLMs, how a billion-dollar star Lab may have solved the biggest mystery in AI: totally predictable outputs.

RLaaS, a technique that has been compared to AGI due to its transformative potential

Analog in-memory computing. You’ll learn what NVIDIA’s greatest weakness is (GPUs’ main limitation) and how an alternative that is 10,000x faster and cheaper has emerged.

We are covering them all today in words you will understand, no jargon, just the power of first-principles analysis.

I’ve written very few articles as packed with knowledge as this one. This post will change your mind about AI in the same way some of these ideas have changed mine.

Let’s dive in.

Agentic Components

This is the most obvious one, so I’ll cut to the chase. Besides, I’ve gone into this rabbit hole several times.

In a nutshell, most AI startups don’t make sense.

At all.

The Funny Thing about AI VCs

“It’s like ChatGPT, but for sales!” “It’s like ChatGPT for HR, but smarter!”

If your startup’s description starts with “It’s like ChatGPT but…” I’m no longer interested because it’s very likely to fail.

The problem is that 95% of AI startups are that, because, funny thing, that’s precisely the pitch that gets you in the room with investors.

But this is misguided. Your startup makes no sense because you are building something that won’t be necessary in… months?

As we’ll see later in this post, it’s not about the scaffold (the techniques and methods built on top of AI models to make them smarter than they are), but about the data.

But then, what should be built?

Glorified Tool Callers

Most of the progress these days, at least at the frontier, is deeply correlated with one thing and one thing only: the number of tool calls your AI makes per interaction.

When we say “reasoning,” what we actually mean is an AI doing two things:

Breaking down problems using a step-by-step approach

Profusely calling tools

If your AI model does only number one, it’s a Large Reasoning Model (LRM), or reasoning model. But if it also does number two, it’s what we call an ‘agent’.

But agents, which sound very cool, are essentially glorified tool callers. They're AIs whose sole job is to understand user requests, develop a plan, and execute tools to carry out the plan.

What this means is that AI Labs are solely focused on the logic side of things: building AIs that do better and better at the three things above with each model improvement.

This is why making AI wrappers makes little sense: you are betting against AIs getting better. Why? Simple: these AI wrappers are companies that have raised tens of millions, or even hundreds of millions in some cases, to do one of the following:

Build curated prompts that improve a model’s capacity to understand what the user wants

Build curated prompts to improve a model’s capacity to plan

Build curated prompts to improve a model’s capacity to call tools effectively.

Notice something? They are literally working on the same three things AI Labs are working on, but without building the actual models!

Put bluntly, they are developing solutions that the next generation of models doesn’t need. That is why you are betting against AI Labs; the only way your business makes sense is if models do not improve enough.

Bold move! (Personally, I think it’s more naivety disguised as boldness.)

By the way, nothing proves this point more than Claude Code. Anthropic’s AI agent is surprisingly simple in nature (e.g., it doesn’t have complex RAG pipelines using retrieval, but simply looks for keywords) because they believe it’s pointless if Claude 5 will do those things by default.

The irony about these startups is that their scaffolding is currently necessary (or an improvement) to make agents work, explaining their rapid revenue growth, but it will become unnecessary in the future. The issue is that model iteration is not a year-long process; models evolve every few weeks, or every few months at most. You’re like a lollipop: tastes awesome, gone in a few minutes.

Therefore, instead, build tools.

Agent tooling is durable

I’ve talked about this way too much, so let’s make it quick. AI Labs mostly don’t build tools. However, they heavily rely on them to make agents work. They focus on the logic, while you concentrate on the tools that bring the plan to life; it’s that simple.

The interesting thing is that your tool is just as important as the model, because without the tool, there’s no execution.

Consider an agent that handles customer disputes using Stripe. The AI can request any action (such as issuing a refund or preparing a dispute answer), but it needs the Stripe tool to make it happen. No tool, no agent.
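To make the split between logic and tools concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: `ToolCall`, `TOOLS`, `execute`, and `refund_charge` are hypothetical names, and `refund_charge` is a stand-in that does not call Stripe's real API. The model decides what to do; the tool is the only thing that can actually do it.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class ToolCall:
    """What the model emits: the name of a tool and the arguments to pass."""
    name: str
    arguments: dict


def refund_charge(charge_id: str, amount_cents: int) -> dict:
    # Hypothetical stand-in: a real tool would call the payment provider here.
    return {"charge_id": charge_id, "refunded_cents": amount_cents, "status": "refunded"}


# Registry of the tools the agent is allowed to use (hypothetical).
TOOLS: Dict[str, Callable[..., dict]] = {"refund_charge": refund_charge}


def execute(call: ToolCall) -> dict:
    """Run the tool the model asked for, or report that it does not exist."""
    tool = TOOLS.get(call.name)
    if tool is None:
        return {"status": "error", "reason": f"no tool named {call.name!r}"}
    return tool(**call.arguments)


# The model can *ask* for anything, but only registered tools get executed.
print(execute(ToolCall("refund_charge", {"charge_id": "ch_123", "amount_cents": 500})))
print(execute(ToolCall("answer_dispute", {"dispute_id": "dp_456"})))
```

The second call fails not because the model planned badly, but because nobody built the tool. That is the gap a tool business fills.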

Instead of trying to outsmart some of the world's best-capitalized companies, be humble. Select a niche use case that agents can work on, build a tool to execute the task, and you’ve created a long-lasting, money-printing business.

The First Deterministic LLMs

For the longest time, we have assumed that AI models were non-deterministic. Heck, it was just weeks ago when I was “lecturing” you about this harsh reality and what to do about it (what I describe as statistical coverage, making this unpredictability statistically unlikely, with the assumption that full determinism was impossible).

But as it turns out, with this bloody industry, I was just proven wrong.

This is a nice segue to what I was saying earlier about AI startups building wrappers: In this industry, you can’t build anything on the assumption that a given limitation, be that an AI model that isn’t smart enough or a more fundamental limitation like this one, can’t be solved.

You are most likely to be proven wrong.

But first, let’s answer the important question here: why are models unpredictable?

Well, it’s complicated.

You’ve heard the mantra (even from me): Large Language Models (LLMs) are non-deterministic; for a fixed input, the outputs are slightly different every time. In fact, they are “run-to-run non-deterministic,” meaning that even two consecutive runs yield different outcomes.

But why? The answer has two sides.

On the software side, an LLM (or by extension, an agent) is mostly deterministic, except for the sampling mechanism. I won’t go into detail, but when the model is about to choose the next word, it picks one at random from a shortlist of the most likely candidates, weighted by their probabilities. That is to say, it doesn’t choose the most likely word by default.

We do this to avoid the model collapsing on the mode of the distribution. That is, always predicting the most likely word. “I like…” might have “ice cream” as the most likely next word in the English language, but thousands of other words are valid, too; it’s a way to increase model creativity.

However, during inference (when the model runs), you can remove this randomness using “greedy decoding,” where we ask the model to always choose the most likely word.
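The difference between sampling and greedy decoding is easier to see in code. The sketch below is a toy, not how any particular Lab implements it: the scores in `next_token_logits` are made up, and `sample_top_k` and `greedy_decode` are hypothetical helper names. It only assumes the standard softmax-then-sample recipe.

```python
import math
import random


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    top = max(logits.values())
    exps = {tok: math.exp(score - top) for tok, score in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def greedy_decode(logits):
    """Always pick the single most likely token: no randomness involved."""
    return max(logits, key=logits.get)


def sample_top_k(logits, k=3, rng=random):
    """Pick at random among the k most likely tokens, weighted by probability."""
    shortlist = dict(sorted(logits.items(), key=lambda kv: kv[1], reverse=True)[:k])
    probs = softmax(shortlist)
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]


# Made-up scores for the word that follows "I like..."
next_token_logits = {"ice cream": 2.0, "pizza": 1.5, "music": 1.2, "rain": -1.0}

print([sample_top_k(next_token_logits) for _ in range(5)])   # varies between runs
print([greedy_decode(next_token_logits) for _ in range(5)])  # always the same token
```

With sampling, “rain” never appears because it falls outside the shortlist of three, but the other words take turns. With greedy decoding, the software side becomes fully repeatable.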

Problem solved, right?

Well, not quite, because we have to also factor in the real issue here: hardware non-determinism. This is a bit harder to see, but trust me, I’ll make it clear for you.

One of the basic rules of maths is the associative property of addition and multiplication, which states that the grouping of numbers in both operations doesn’t change the result (e.g., (4+5)+3 = 4+(5+3)).

But here’s the thing: this is not true in LLMs, however incredible that may sound. The reason is that LLMs work with floating-point numbers, which are expressed in the form significand (or mantissa) × 10^exponent (computers actually use base 2, but base 10 makes the examples easier to follow).

For example, the number 1,200 can be expressed as 12×10^2 or 1.2×10^3. When numbers are written this way, we can only add them if the exponents are the same.

Say I want to add 0.345×10^2 to 24×10^1. The only way I can add these is by changing the former to 3.45×10^1 and then adding both, giving 27.45×10^1.

No big deal, right? Well, actually, we do have a problem.

In computers, we have limited precision (which is particularly limited in AI, often as little as a byte (8 bits) per parameter, or even less), which means that numbers usually end up being rounded.

In the previous example, if we can only keep, say, three significant digits, the number is “forced” to become 27.5×10^1, which means some information has been lost.
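If you want to see that rounding happen, Python’s `decimal` module lets us cap the number of significant digits. This is only a sketch of the idea in base 10: real hardware rounds in base 2, and the three-digit limit and the round-half-up rule are assumptions chosen to match the example (34.5 + 240).

```python
from decimal import Decimal, ROUND_HALF_UP, localcontext

a = Decimal("0.345e2")  # 34.5
b = Decimal("24e1")     # 240

# With plenty of precision, the sum is exact.
exact = a + b  # 274.5, i.e. 27.45 x 10^1

# Now pretend we can only keep three significant digits.
with localcontext() as ctx:
    ctx.prec = 3
    ctx.rounding = ROUND_HALF_UP
    rounded = a + b  # 275, i.e. 27.5 x 10^1

print(exact, rounded, exact - rounded)  # 274.5 275 -0.5
```

That 0.5 difference is the information that was lost to rounding.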

However, this alone does not explain the non-deterministic nature of LLMs, because for a given input, the value is rounded the same way every time. In other words, we are losing accuracy due to the rounding error, but since the error occurs consistently, the output should remain the same.

The issue comes from the parallel nature of LLM workloads on GPUs. GPUs have multiple cores (thousands of them), and we want them all working to squeeze as much compute as possible from the hardware.

Thus, we might want to distribute an addition of six numbers across six cores. The issue is that these cores finish their jobs in an unpredictable order, meaning that the order in which numbers are added inside the model varies between runs.

The sum is distributed across different cores, contributing to the total in an unpredictable order between runs.

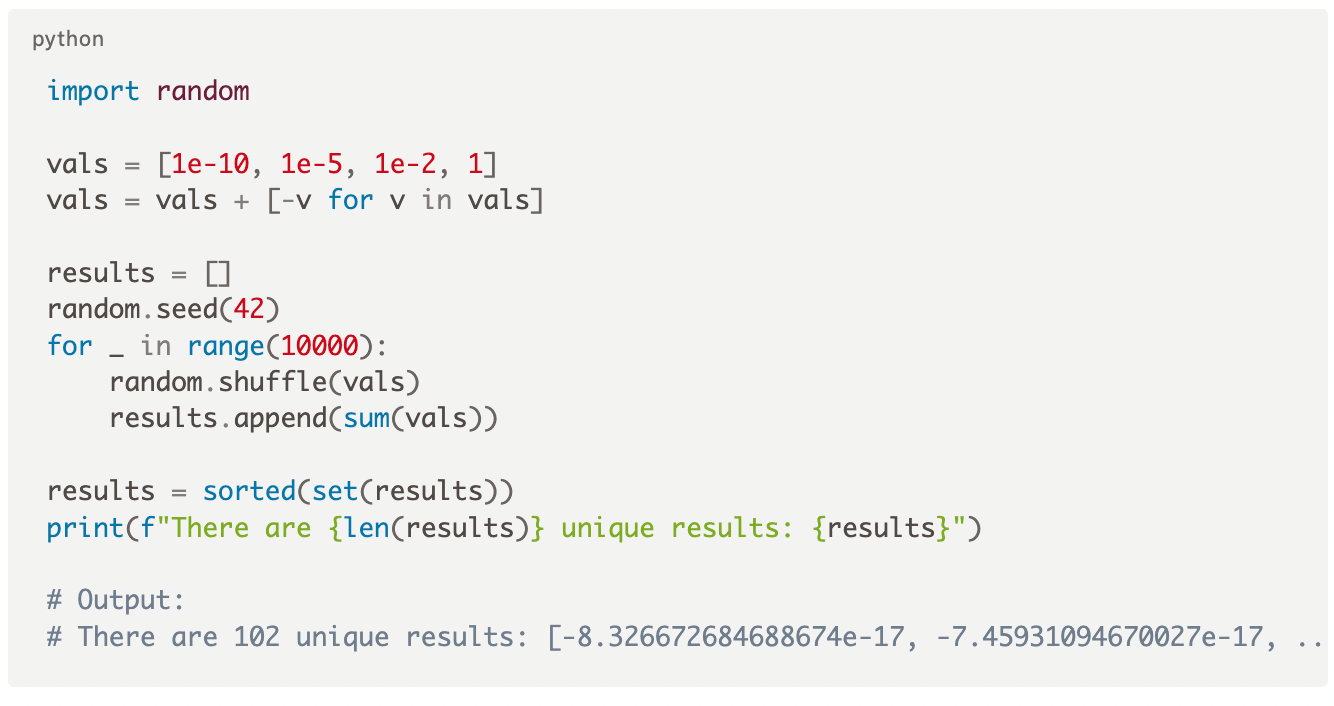

For example, looking at a very small array of numbers (just five), there are 120 (five factorial, or 5!) different orders in which to add them. Of these, 102 yield different results because the information losses we described earlier occur in different ways depending on the order. Put simply, in a simple five-number addition, the result changes in 102 of the 120 possible orderings. And LLMs add up thousands of numbers at a time, not just five!
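You can reproduce the effect on your own laptop. The sketch below uses ordinary Python floats (64-bit, far more precise than what LLMs use) and shuffles the order of a sum by brute force instead of using real GPU threads. The five numbers in `values` are hypothetical, picked to make the rounding visible; they are not the ones from the figure, so the count of distinct results will not necessarily match the article’s.

```python
from itertools import permutations

# Same three numbers, two groupings, two different answers.
left_first = (0.1 + 1e20) - 1e20   # the 0.1 is rounded away when added to 1e20
right_first = 0.1 + (1e20 - 1e20)  # the big numbers cancel first, so 0.1 survives
print(left_first, right_first)     # 0.0 0.1

# Hypothetical five-number array mixing very large and small values.
values = [1e20, -1e20, 0.1, 0.3, 0.5]


def sequential_sum(numbers):
    """Add the numbers one by one, in exactly the order given."""
    total = 0.0
    for x in numbers:
        total += x
    return total


# Try all 5! = 120 orderings and collect the distinct totals.
distinct_results = {sequential_sum(order) for order in permutations(values)}
print(len(distinct_results), "distinct sums across 120 orderings")
```

On a GPU, nobody shuffles the numbers on purpose. The shuffle comes for free from cores finishing at different times.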

And this is just the tip of the iceberg for LLMs, because matrix multiplications, and by extension the attention mechanism (the key mechanism underpinning LLMs), not only have to deal with this issue but are also batch-dependent: depending on the size of the batch (the number of concurrent users being served by that GPU), the result changes.

This is ultimately unpredictable because OpenAI cannot predict how many people will be asking ChatGPT something simultaneously, let alone the size of each sequence.

Since nobody really understood why, non-determinism has been accepted as “something we had to live with” for years.

But a group of researchers (mainly ex-OpenAI) from the new superstar AI Lab, Thinking Machines Lab (TML), has challenged this claim as dogma while finding the solution. And it’s simply beautiful.

The following sections include this fantastic discovery, how RLaaS could be the real AGI (and not the sci-fi-fueled crap incumbents tell you), and how analog computers will save AI from starving for energy.
