
THEWHITEBOX

TLDR;

Today, we take a look at DeepSeek’s latest reveal, studies on AI adoption, productivity, and the viral MIT claim that 95% of AI implementations fail, ending with this week’s trend of the week, Meta’s success with AdLlama and what it tells us about the future of the company and AI.

PREMIUM CONTENT

Things You Have Missed…

On Tuesday, we took a look at a new rundown of AI news, focused on three themes:

the growing importance of small models,

how AI continues to move insane dollars,

and China’s relentless catch-up with the US, including its first-ever world model.

As well as news on new data centers, Intel’s new savior, & more.


NEWSREEL

DeepSeek Drops V3.1

Out of the blue, DeepSeek has dropped a slightly larger updated version of their flagship non-reasoning model, DeepSeek v3 (the model that’s also the base of their reasoning model, R1).

DeepSeek cites three things as the most relevant to point out:

It’s a hybrid reasoner, a non-reasoning and reasoning model at the same time, meaning it will “think” for longer on tasks that require more compute; think of this as GPT-4o and o3 combined in the same model, the same principle labs like Google follow.

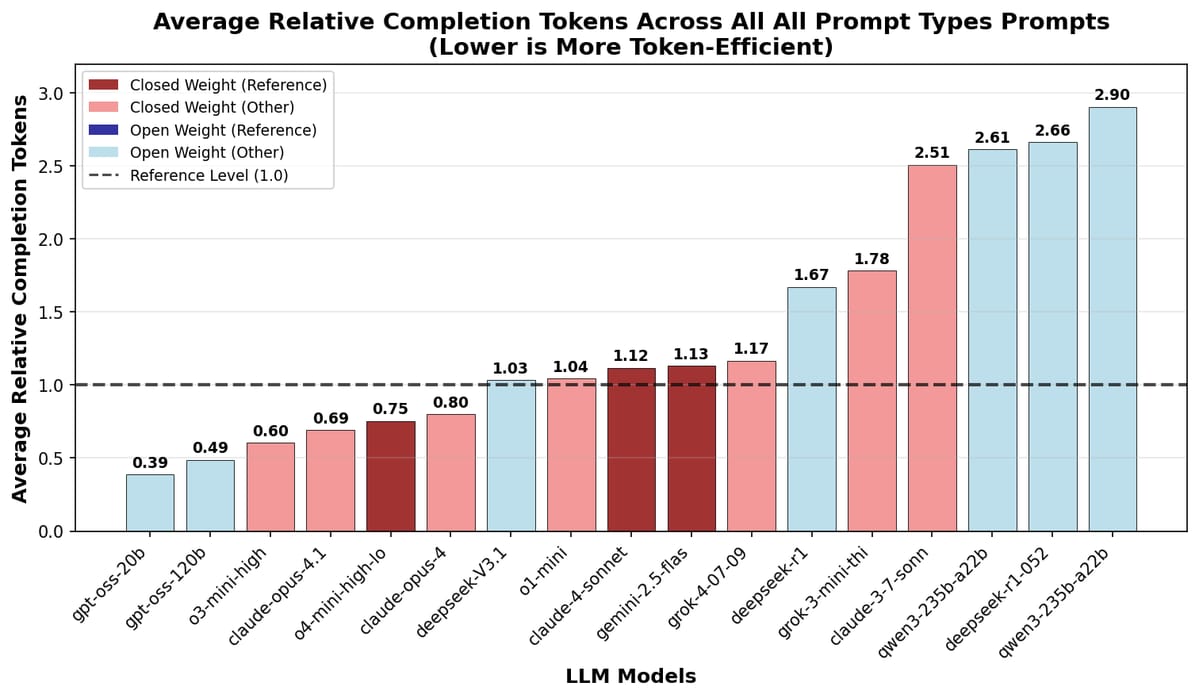

It’s very fast, thanks to fewer output tokens on average. As you can see in the above graph, the model requires fewer tokens to answer, implying it’s a very efficient model. They offer dirt-cheap prices on their API, although you send data to them at your own risk.

If you're interested in using DeepSeek models, I recommend exploring LLM providers like Fireworks or TogetherAI, as DeepSeek doesn’t have a particularly great history with cybersecurity.

Tailored for agentic use cases. As with any release these days that aims to be actually used, the model excels at tool-calling, utilizing tools to execute actions as any agent requires.

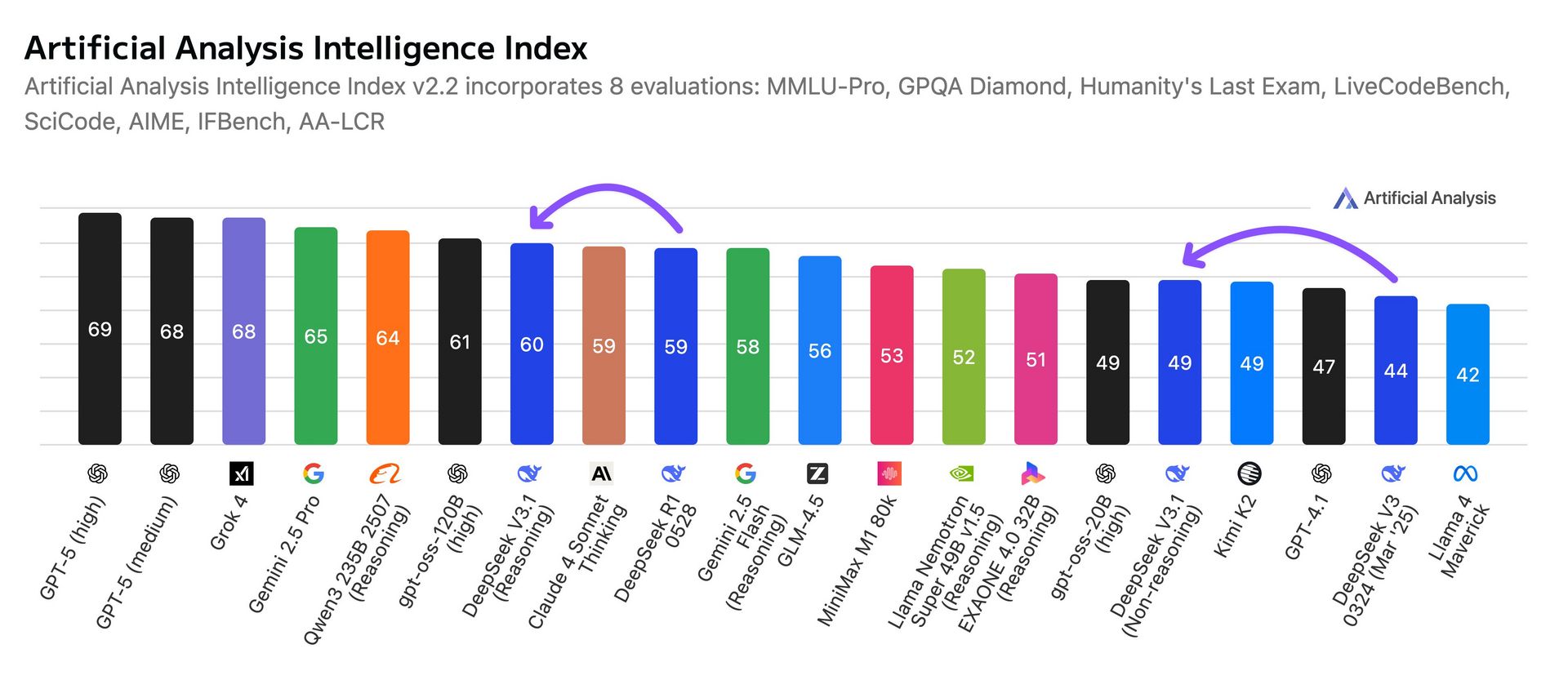

Beyond that, there’s not much to report for now, besides Artificial Analysis’s results that, well, aren’t particularly great. As you can see below, the model is a marginal improvement over R1, and still below all US frontier models and even behind another Chinese model, Qwen 3 235B:

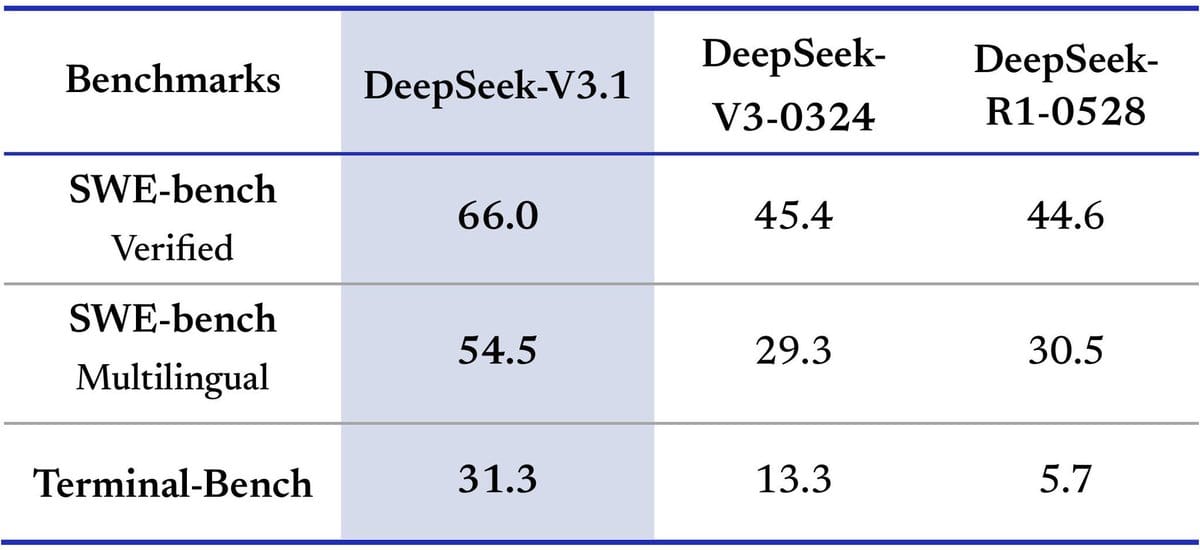

But jumping to conclusions based on a single benchmark can backfire. If you look at coding, the improvements are considerable:

Moreover, others have noted that the model achieved a preliminary score of 71% on Aider polyglot, a tier 1 coding benchmark, surpassing Claude Opus 4 and making DeepSeek Pareto again. In layman’s terms, it puts the model on the performance-to-cost frontier, meaning no other model offers better performance at its cost. Crucially, DeepSeek’s entire run for that benchmark had a total cost of a US dollar, while other frontier models’ costs grow into the hundreds of dollars.
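To make the "Pareto" idea concrete, here is a minimal sketch of how a performance-to-cost frontier can be computed. The model names, costs, and scores are made-up placeholders chosen only to illustrate the logic; they are not benchmark results.

```python
from typing import NamedTuple


class Result(NamedTuple):
    name: str
    cost_usd: float  # total cost of the benchmark run
    score: float     # benchmark score, higher is better


def pareto_frontier(results: list[Result]) -> list[Result]:
    """Keep only results that no other result beats on both cost and score."""
    frontier = []
    for r in results:
        dominated = any(
            o.cost_usd <= r.cost_usd
            and o.score >= r.score
            and (o.cost_usd < r.cost_usd or o.score > r.score)
            for o in results
        )
        if not dominated:
            frontier.append(r)
    return sorted(frontier, key=lambda r: r.cost_usd)


# Hypothetical placeholder numbers, purely for illustration.
runs = [
    Result("cheap_strong", 1.0, 71.0),
    Result("pricey_stronger", 300.0, 80.0),
    Result("pricey_weaker", 200.0, 65.0),
    Result("cheap_weak", 0.5, 40.0),
]
frontier_names = [r.name for r in pareto_frontier(runs)]
print(frontier_names)
```

In this toy data, "pricey_weaker" drops out because "cheap_strong" is both cheaper and better; every model left on the frontier is the best you can get at its price.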

TheWhiteBox’s takeaway:

A bizarre release that makes no sense unless they are setting the stage for the big reveal of their next big model. Meanwhile, the entire world waits patiently for the release as a means to assess China’s true stance on the US in the ongoing AI War.

However, some people have speculated that we won’t be getting a DeepSeek R2 model, the natural expectation, but a hybrid DeepSeek V4, which now appears to be much more likely based on this update. In other words, DeepSeek is telling us that they are no longer releasing separate reasoning and non-reasoning models and instead committing to hybrids.

But to me, this release is much more than meets the eye. I’m pretty sure the CCP is somewhat involved, and they won’t allow DeepSeek to release anything but something that shakes markets.

Entering absolute speculation zone, what DeepSeek might be suggesting with this unexpected midway release is that they are going to hit where it hurts most: offering the absolute king of efficiency, a model that stays close to the frontier (or at the frontier) but is at least ten times cheaper than the rest by generating fewer tokens to respond (and through other breakthroughs they’ve created, like NSA attention).

Moreover, this model will be marketed as running on Chinese inference chips, but it’s unclear whether it was trained on Huawei chips (Ars Technica speculates that this was tried and failed).

In a nutshell, I’m pretty sure the intended narrative is as follows: a frontier hybrid model with state-of-the-art performance for ten times less cost while built natively for Chinese inference, telling the world two things:

If you want best performance-per-dollar, go Chinese,

And that China is no longer as US-dependent as it once was, both in software and, crucially, in hardware (they have even developed a data type specific to Chinese chips called UE8M0 FP8).

If this is what actually happens, then, quoting (or, rather, misquoting, as he was speaking in the past tense) John Swigert from the Apollo 13 mission, “Houston, we have a problem.”

But should we expect another market crash of US hardware companies (NVIDIA, AMD, Intel) if China delivers a state-of-the-art model with a huge ‘Chinese smell’?

I don’t know, but it seems more justified than the January crash; at least this time, it would be a natural reaction, unlike the previous one, which people completely misunderstood.

MARKETS

Palantir’s Issues: A Sign of Market Saturation?

Palantir has had quite the rocky week, falling almost 20% in just five days from all-time highs (it has since recovered slightly).

The reasons are thought to be the following:

Overstretched valuation (P/S up to 110×, P/E over 800× at peak).

Weak analyst sentiment despite bullish technicals.

Broader AI/tech sector pullback and profit-taking.

High-profile short selling and bearish commentary.

Investor rotation into safer sectors.

Macro uncertainty ahead of Fed policy signals.

In particular, famous short seller Andrew Left from Citron Research argued that the stock should be trading at $40 a share, and that even that value would be considered optimistic.

He used OpenAI’s valuation and projected revenue multiples as a reference to get that number. Considering Palantir is trading at $156 a share, it’s not particularly flattering for the stock, despite the more than impressive revenue growth.

TheWhiteBox’s takeaway:

Uneducated investors (most investors, even ‘smart money’) need to have AI plays in their portfolios; there isn’t a single LP on this planet that doesn’t want AI exposure.

Investing in AI properly would be a full-time job for these guys, so they cut corners and settle for the ‘obvious picks’. NVIDIA, AMD, the Hyperscalers, and Palantir are the obvious choices, allowing the latter to stretch its forward P/E multiple far more than fundamentals would allow.

It’s a great company (trustworthy and enduring clients, primarily the US Government; a unique value proposition; an AI narrative; and explosive growth), but all that, added to the AI craze, has created what Palantir is today: a hyper-inflated stock.

The crux of the issue is knowing whether Palantir’s revenues and profits (33% net margin) will grow into its valuation before it collapses.

At the current valuation, with a price-to-earnings ratio of 488, Palantir needs to grow revenues by ~10x to have the same PE ratio as NVIDIA. At the current revenue growth percentage of 48%, that would require almost six years (5.87 to be exact) of such impressive yearly growth.

Not impossible, but unlikely. As a reference, from 2010 to 2024, Meta grew ~37% year-over-year, a number that would be dwarfed by Palantir’s (although over a much longer run).

That said, it did grow at a staggering 55% year-over-year between 2010 and 2016, more than what Palantir needs in that time horizon, so it’s doable; the issue is that we are comparing it to one of the greatest stories in the history of capitalism, not a small feat to match.
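For readers who want to check the arithmetic, here is a small sketch of the compound-growth calculation behind those figures. It assumes the share price and net margin stay constant, so that a ~10x rise in revenue translates into a ~10x fall in the P/E ratio; that simplification is mine, and the function name is just an illustrative choice.

```python
import math


def years_to_multiply(multiple: float, annual_growth: float) -> float:
    """Years of compound growth needed to scale a quantity by `multiple`."""
    return math.log(multiple) / math.log(1.0 + annual_growth)


target_multiple = 10.0  # the ~10x revenue growth mentioned in the text
scenarios = [
    ("Palantir, current growth", 0.48),
    ("Meta, 2010-2024 pace", 0.37),
    ("Meta, 2010-2016 pace", 0.55),
]
for label, rate in scenarios:
    years = years_to_multiply(target_multiple, rate)
    print(f"{label}: {years:.2f} years")
```

At 48% a year, the 10x takes about 5.87 years; at Meta's long-run 37% it would take over seven, and at its 2010 to 2016 pace of 55%, a bit over five.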

AI ADOPTION

Adoption among US Workers almost at 50%

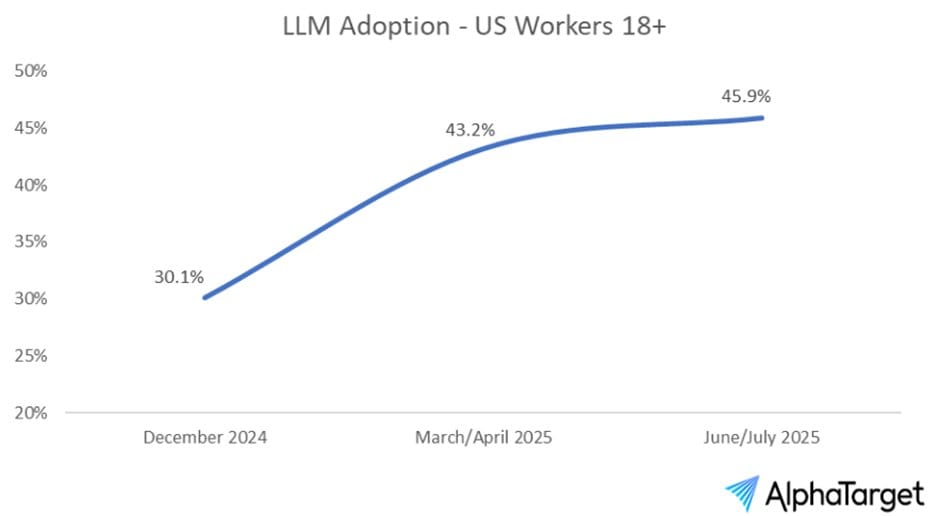

Adoption of Large Language Models (LLMs) like ChatGPT has grown to almost 50% amongst US workers, according to the World Bank and Stanford University.

TheWhiteBox’s takeaway:

I have strong thoughts on this. It’s an unsurprising result, but a nuanced one. You and I both know LLMs are helpful, but has this great adoption curve translated into an unparalleled improvement in productivity?

The answer is a hard no. In fact, François Chollet argues productivity has not only not grown since 2020, but has fallen. But how is this possible? Wasn’t this a 10x productivity booster?

LLM skeptics have latched onto this to convince themselves that LLMs are not the holy grail they were made out to be. And while I am not particularly enamoured with LLMs, a strong counterpoint to this is that LLMs can’t increase productivity in jobs where productivity is already low, or zero; 0 × 100 is still zero.

The point here is that, especially in corporate jobs, there’s a decent number of them where the focus is more on appearances than actual work.

As a management consultant, I’ve encountered this reality countless times: people with their entire day booked in meetings, often on the most irksome and irrelevant subjects, which could frequently be solved in a single email.

Why? Simple, these people need this calendar bloat to give the idea they are being productive while they aren’t. LLMs can’t save these people. In fact, these are the individuals who AI will displace.

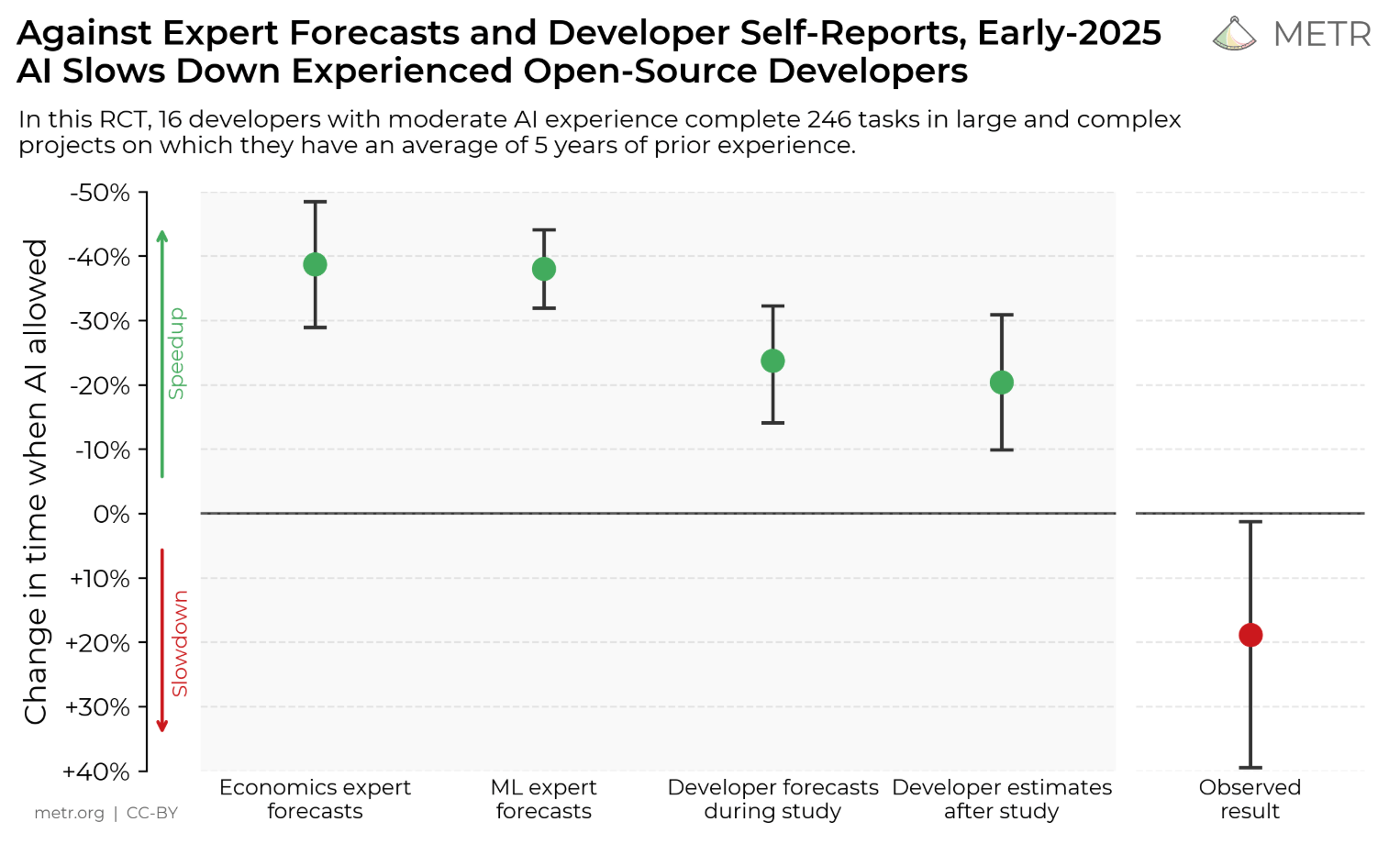

However, when considering productive jobs, LLMs do make people more productive, as seen in software engineering. Some studies have tried to disprove this, but in a somewhat disingenuous way. A study by METR claimed the existence of an LLM productivity illusion: coders who thought AI made them more productive were actually less productive.

It’s a surprising result, until you realize the subjects of the study were star coders with a huge number of GitHub stars (a strong measure of success in the developer industry), the type of people least likely to benefit from AI productivity enhancements, if at all.

Luckily for you, I’m not one of those coders, and I can guarantee you that many of the things I’m doing in my daily life with regard to software development wouldn’t exist without LLMs.

In a nutshell, and this goes for both sides, claims about LLM productivity should be made with care, with proper statistical analysis (control and treatment groups, ANOVA tests) to verify the real effect of AI. And, naturally, they should be tested on “average people,” people who actually need the empowerment AI provides. After all, we’ve been loud and clear that AIs are trained to be average: they minimize the expected average loss across all tokens, so naturally, the result is an average of the training data.

Therefore, people at the top percentiles of any given job, like superstar developers, won’t see AI benefitting them—at least not pre-AGI—because they don’t actually need an average coder to be more productive.

What this means is that AIs are good enough to make people better, but not the answer to all problems, and definitely not the answer in edge cases. Verifying productivity claims on the most productive people amongst us is like testing whether a new football ball fabric is better and using Tom Brady as the tester; he would be throwing dimes with a 1920s ball.

So, to sum it up, we should restrain ourselves from making too bold claims, but we must not entertain the idea that AIs aren’t extremely useful when used appropriately.
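As a rough illustration of the kind of controlled comparison argued for above, here is a minimal sketch that compares a control group with an AI-assisted group. It uses a permutation test, a simpler stand-in for the ANOVA mentioned earlier, and the task-completion times are invented for the example rather than taken from any study.

```python
import random
from statistics import mean


def permutation_p_value(control, treated, n_perm=10_000, seed=0):
    """One-sided p-value for 'the treated group finishes faster than control'."""
    rng = random.Random(seed)
    observed = mean(control) - mean(treated)
    pooled = list(control) + list(treated)
    n_control = len(control)
    hits = 0
    for _ in range(n_perm):
        # Shuffle the group labels: under "no effect", labels are arbitrary.
        rng.shuffle(pooled)
        diff = mean(pooled[:n_control]) - mean(pooled[n_control:])
        if diff >= observed:
            hits += 1
    # Add-one correction so the estimate is never exactly zero.
    return observed, (hits + 1) / (n_perm + 1)


# Hypothetical task-completion times in minutes (made up for illustration).
control_minutes = [52, 61, 58, 49, 66, 55, 60, 57]
treated_minutes = [45, 50, 47, 53, 41, 48, 52, 44]

observed_diff, p_value = permutation_p_value(control_minutes, treated_minutes)
print(f"mean speed-up: {observed_diff:.2f} min, p = {p_value:.4f}")
```

The point of the design is the shuffling step: if relabeling people at random produces a speed-up as large as the real one fairly often, the claimed productivity gain is indistinguishable from noise.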

AI ADOPTION

MIT Says Most AI Projects Fail.

LLM skeptics are having a field day, as MIT has added more fuel to the fire with a rather sensationalist quote: 95% of Generative AI pilots (small implementations) in enterprises fail.

In other words, AI is still underdelivering on its promises, and despite appearing to be useful, cracks appear.

But does this mean what it appears to mean?

TheWhiteBox’s takeaway:

For starters, the study refers to non-startups, companies that are not AI native and thus have to adapt to the new reality. They are very clear in outlining the success AI native companies are having, not only in generating revenues from AI, but also in adopting it for their operations, the first signal that this is basically a skill issue.

There’s also asymmetry in the adoption methodology. Those who adopt products instead of building the tools themselves succeed by a much wider margin. This isn’t because getting crafty with AI is a bad idea, but it’s a terrible idea if you lack the expertise. In other words, it’s not that custom software doesn’t work; again, it’s a skill issue.

Moreover, this ‘custom’ software is often just prompt engineering, meaning that you are testing whether your internal team can build prompts better than startups that have raised hundreds of millions to write that same prompt scaffold. As I outline in the next section, truly custom software involves more than just prompt optimization; it's also about RL fine-tuning, and I can assure you that its absence is a key reason AI implementations fail.

And Aditya Challapally, the lead author, points precisely in that direction. When asked, he outlines that the biggest reason for the massive failure is, again, a skill issue, more specifically, poor tool integration. This is what we know as context engineering issues; the AI can’t work well if it doesn’t have the proper context.

Challapally goes deeper and points to one particular point as a core issue: AIs aren’t working because they aren’t learning or adapting to the workflows. You probably know where this is going: what I’ve been saying for months. AI implementation depends on AI onboarding through on-the-job training, which is precisely this week’s trend of the week below.

TREND OF THE WEEK

RL Fine-tuning Is the Real Deal

When I wrote my recent article on why agents were now more than ready to be adopted by companies, I did add a caveat: for actual AI adoption, we were going to need companies to start training AIs themselves.

Connecting to an OpenAI ChatGPT service is insufficient because the model lacks the necessary context and knowledge. This is akin to hiring a junior employee and providing no onboarding whatsoever, expecting them to do the work without help.

If junior employees, who are smarter than your frontier AI models (smarter as in adaptable, efficient skill acquirers, not ‘textbook smart’, which is the illusion incumbents play on you), require onboarding, what makes you believe AIs don’t?

And Meta’s most recent paper proves how true this is.

Meta is on a Roll

Meta is on a tear lately, with the stock increasing 25% in value year-to-date, an impressive increase considering we are talking about a company with close to $2 trillion in market cap, far larger than some of the largest economies on the planet.

This is thanks to their booming social media ad business (growing users, excellent engagement metrics, growing ad share, and growing revenues with double-digit growth between quarters); you can debate the ethics of their business (I’m not a fan of monetizing attention and the known issues with social media addiction), but you can’t debate that it’s one hell of a business and one hell of a leadership team.

We can’t be as glazing regarding their Generative AI research efforts, though, which have forced Mark Zuckerberg to go on a billion-dollar hiring spree of AI researchers. But one thing that makes Meta different from the other players is that the synergy between Generative AI and their business is undeniable.

While others, like Google, have the opposite reality in which AI is preying on their cash cow, search, meaning Google is actively building the technology that obsoletes its main business, Meta’s business is aligned with AI progress: better AIs allow Meta to improve its ad services.

If there’s one negative about Meta’s business, it's the ‘AI-generated slop’. A growing amount of social media content is AI-generated, which could lead to disenchantment of the average user with social media. But going as far as saying AI will kill social media is a stretch right now seeing Meta’s growing user base.

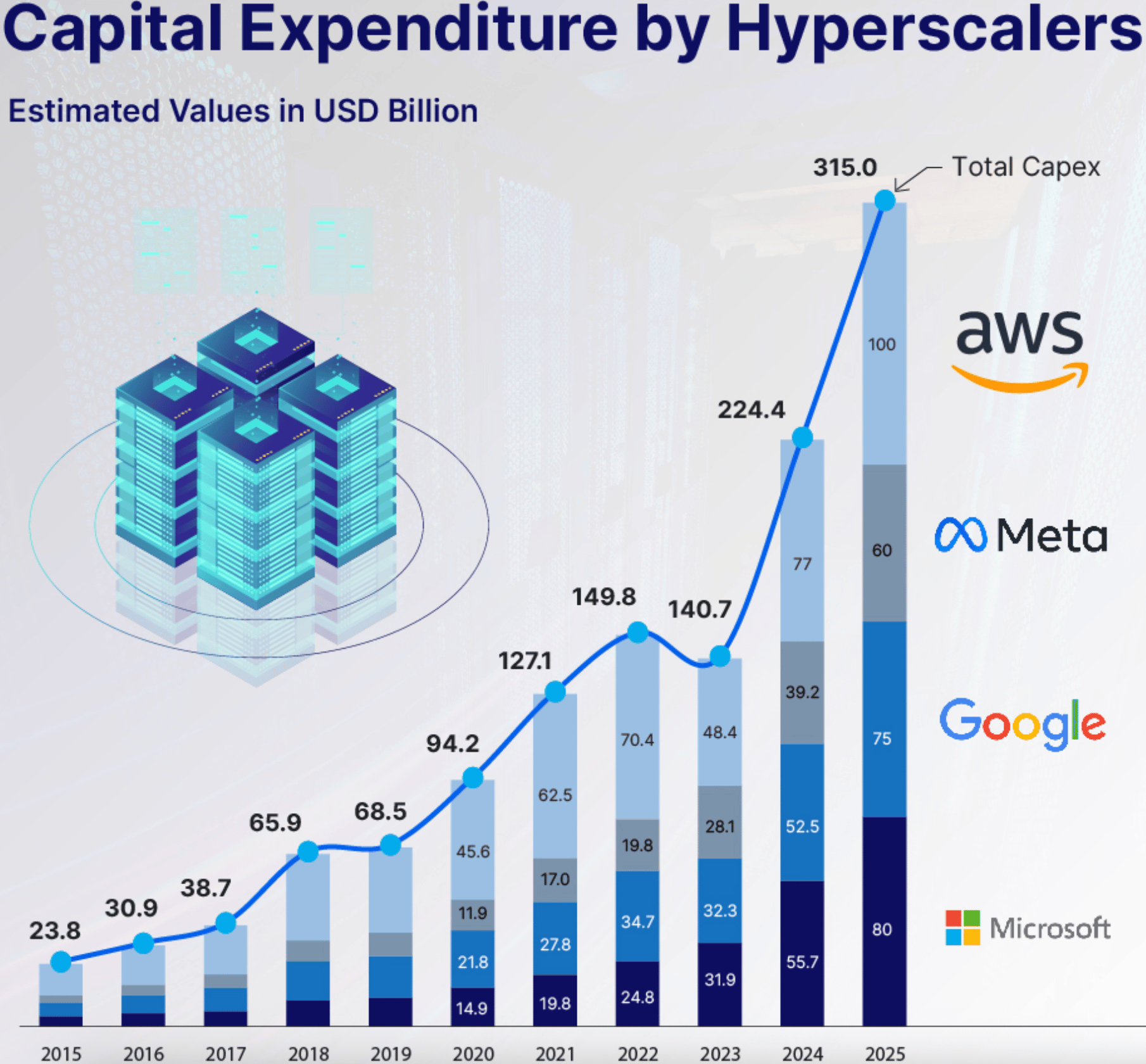

And the truth is that, since ChatGPT’s explosion, Meta has bet on this technology more than probably any other Hyperscaler. Despite having the lowest investment value in nominal terms out of the four (they have since grown projections to $72 billion, still less than the others), that number is the highest relative to revenues.

Importantly, unlike the others, Meta is focused on using AI for its own services and products, not on serving the technology through accelerated cloud offerings. Unlike the rest, Meta’s entire CAPEX is focused on its products.

Meanwhile, most of AI’s impact on the revenues of the rest of the Hyperscalers has been driven by growing cloud revenues. But there’s a caveat: most of that revenue growth is self-generated by investing in AI Labs in exchange for compute credits; at the end of the day, most of that cloud revenue is coming from the Hyperscalers themselves through their venture investments or cuts from AI Lab top lines (e.g. OpenAI gives a 20% revenue cut to Microsoft).

But I digress. The reason I’m going down this CAPEX rabbit hole is that Meta always used this “clear synergy” between Generative AI and its ad business as a justification for having the most significant CAPEX-to-revenues percentage amongst all Big Tech, claiming that the technology, in particular their Llama model family, was already being extensively used to improve their ad products.

However, for now, it has been a lot of talking and little showing. Until now.

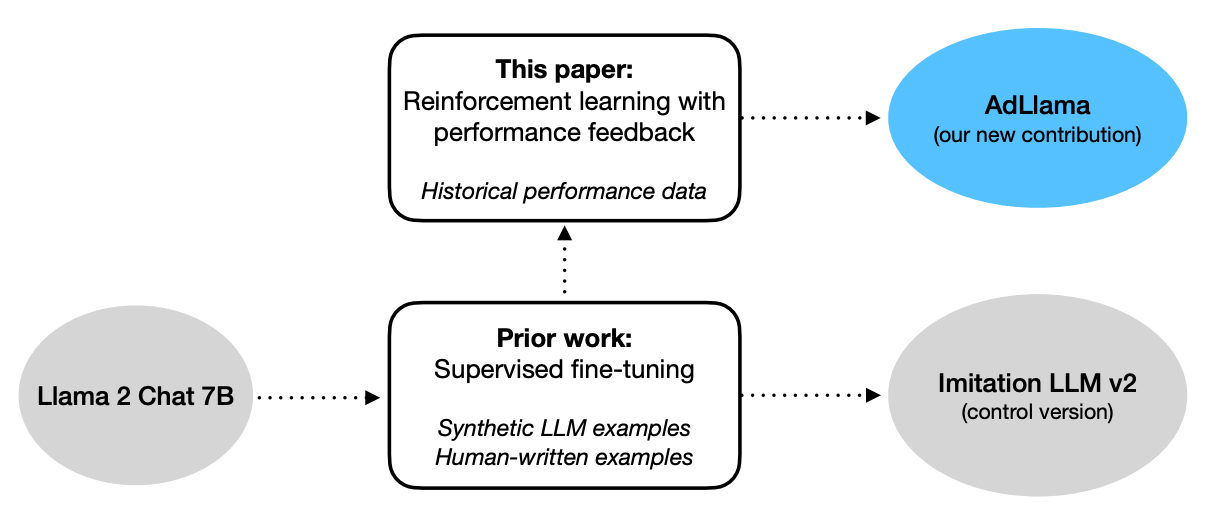

AdLlama, Meta’s First Real AI for Ads Model

In a paper published on arXiv, Meta has introduced AdLlama, which demonstrates its value in the ad business by enhancing the CTR (click-through rate) of AI-generated campaigns, marking the first real proof that AI can be optimized to improve specific metrics.

In layman’s terms, AdLlama helps advertisers write marketing copy that leads to users clicking on the ad more. The more success advertisers have with Meta ads, the more likely they are to stay with Meta ads, meaning AI is actively improving Meta’s top line.

Statistical Proof of Value

The model obtained a 6.7% relative improvement in CTR over the baseline model. Importantly, unlike many claims made in this space, Meta checked that the results were statistically significant, reporting a p-value of 0.0296.

What kind of gibberish is all this statistical jargon I’m throwing at you?

In statistics, it’s not enough to just make a claim; you have to show it’s unlikely the effect happened by chance. A p-value of 0.0296 means that if AdLlama had no effect, you’d only expect to see a CTR improvement this large about 3% of the time due to random variation (by chance). That’s low enough that researchers reject the “no effect” hypothesis and accept that AdLlama really does improve CTR.
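To show what sits behind a number like that, here is a minimal sketch of a one-sided two-proportion z-test on click counts. The click and impression counts are invented so that the lift comes out near 6.7%, and I am assuming a one-sided test; this is not the data or necessarily the exact test used in Meta's paper.

```python
import math


def ctr_lift_p_value(clicks_a, views_a, clicks_b, views_b):
    """Relative CTR lift of B over A, z-score, and one-sided p-value."""
    ctr_a = clicks_a / views_a
    ctr_b = clicks_b / views_b
    # Under the "no effect" hypothesis both arms share a single true CTR.
    pooled = (clicks_a + clicks_b) / (views_a + views_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / views_a + 1 / views_b))
    z = (ctr_b - ctr_a) / std_err
    # Upper-tail probability of the standard normal distribution.
    p = 0.5 * math.erfc(z / math.sqrt(2))
    return ctr_b / ctr_a - 1, z, p


# Hypothetical counts: A is the baseline model, B is the RL-trained model.
lift, z_score, p_value = ctr_lift_p_value(3_000, 100_000, 3_200, 100_000)
print(f"relative lift: {lift:.1%}, z = {z_score:.2f}, p = {p_value:.4f}")
```

Note how much the answer depends on sample size: the same relative lift measured on a tenth of the impressions would yield a far larger p-value, which is why the lift alone tells you little.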

Most research these days avoids making this analysis for reasons you may guess.

But why is this such a big deal?

Simple, we are starting to see AIs being purposely trained for specific business metrics; we are finally onboarding our AIs, a wake-up call for enterprises around the world to start implementing AI as they should, especially considering how poor the results have been until now, as we’ve seen above with MIT’s study.

You may not see this elsewhere, but AI Labs are screaming this at us. Let me explain.

The Untapped Potential of Goal-Oriented Training

We’ve talked about this a lot, so I’ll cut to the chase. Much of the effort in AI these days is centered around Reinforcement Learning (RL), a training method for AI models that sets goals for them and provides rewards over the model’s explorations, guiding them to the solution (hopefully). I always use the “hot and cold” game analogy: you say ‘hot’ when the player gets closer to the object, ‘cold’ otherwise.

Over time, the model learns what actions yield ‘hot’ outcomes, reinforcing them (hence the name) and which ones yield ‘cold’ outcomes, which it avoids.
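The ‘hot and cold’ loop can be sketched in a few lines. This is a toy, assuming a single decision with five possible actions and a fixed ‘hot’ action; real RL fine-tuning of a language model is far more involved, and every name and parameter here is illustrative.

```python
import random


def train_hot_cold(hot_action=3, n_actions=5, steps=500,
                   epsilon=0.1, lr=0.1, seed=0):
    """Learn action values from 'hot' (+1) and 'cold' (-1) feedback."""
    rng = random.Random(seed)
    values = [0.0] * n_actions  # current estimate of how 'hot' each action is
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(n_actions)  # explore: try something random
        else:
            # Exploit: pick the action currently believed to be hottest.
            action = max(range(n_actions), key=values.__getitem__)
        reward = 1.0 if action == hot_action else -1.0
        # Reinforce: nudge the estimate toward the reward just received.
        values[action] += lr * (reward - values[action])
    return values


values = train_hot_cold()
best_action = max(range(len(values)), key=values.__getitem__)
print(best_action, [round(v, 2) for v in values])
```

Nobody ever tells the agent which action is right; it only hears ‘hot’ or ‘cold’ after acting, and the repeated nudges are enough for the correct action to win out.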

Sounds like a game, but it works amazingly well. However, for the longest time, RL was not viable, and AI trainers instead relied on imitation learning, training AI models to replicate specific data. The issue for enterprises is that using models that have learned by imitation is complex in business environments where that data is rare, if it exists at all.

Imitation learning also leads to greater degrees of memorization, which makes models more overfit to the data (too focused on that particular dataset) and less likely to work well in new situations.

Unsurprisingly, AIs heavily trained on imitation struggle with generalization (working well in areas underrepresented in their training). Put simply, they aren’t as good with what they haven’t seen before.

The solution seems straightforward: even if imitation learning doesn’t generalize, it still enables the model to learn the task, so let’s just create the data, right? Great idea, if not for the fact that not only is imitation learning suboptimal for real learning, it is a crazy expensive endeavor.

But RL represents a solution for this: starting from a model that already knows stuff, we can make the AI ‘discover’ solutions to problems that don’t have a lot of data, using the ‘guess and verify’ game we described earlier.

A very famous example is DeepSeek R1 Zero, a model that learned to backtrack (realize it had made a mistake during a reasoning process) despite never having been told the notion of backtracking. That’s the power of RL.

To drive this home, Meta tried the imitation path too (called supervised fine-tuning in AI parlance), which is precisely the model they compared AdLlama to (and the one it outperformed).

For the RL path, they trained a reward model that could confidently score ad campaigns (by seeing hundreds of thousands of campaign → CTR pairs), which was then used to train AdLlama to, over time, write better ad campaigns.

Using the game analogy, this reward model is in charge of scoring the actions of the model as ‘hot’ or ‘cold’.
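To give a feel for what a reward model does, here is a deliberately tiny sketch: it learns an average CTR per word from a handful of (ad text, CTR) pairs and then scores new candidate ads. The data, the word-averaging approach, and all names are my own illustrative assumptions, not Meta's reward model, and in actual RL training the scores would be used to update the generating model rather than just to rank candidates.

```python
from collections import defaultdict


def fit_reward_model(pairs):
    """Build a scoring function from (ad_text, ctr) pairs."""
    totals = defaultdict(float)
    counts = defaultdict(int)
    for text, ctr in pairs:
        for word in set(text.lower().split()):
            totals[word] += ctr
            counts[word] += 1
    word_score = {w: totals[w] / counts[w] for w in totals}
    global_mean = sum(ctr for _, ctr in pairs) / len(pairs)

    def reward(text):
        words = text.lower().split()
        if not words:
            return global_mean
        # Unseen words fall back to the average CTR across all campaigns.
        return sum(word_score.get(w, global_mean) for w in words) / len(words)

    return reward


# Hypothetical campaign history (made-up texts and CTRs).
history = [
    ("free shipping today", 0.040),
    ("shop our new collection", 0.020),
    ("free returns on every order", 0.035),
    ("new arrivals in store", 0.018),
]
reward = fit_reward_model(history)

candidates = ["free shipping on every order", "new collection in store"]
best = max(candidates, key=reward)
print(best, round(reward(best), 4))
```

The scorer plays the role of the person shouting ‘hot’ or ‘cold’: once you can cheaply score any candidate ad, you no longer need a human-written example of the perfect ad to learn from.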

But I know what you’re thinking: What is this guy trying to tell me? Simple: this is the future of enterprise AI. Let me explain.

RL training will be the Norm

Thinking Machines Lab (TML), an AI Lab of star researchers still in stealth mode (no released product despite a billion-dollar valuation), is orienting its entire strategy around this idea: training custom models for companies. That is, make companies choose a business metric or job to optimize and build a customized model for it.

Wall Street is already recognizing the importance of customization, which explains Palantir’s seemingly absurd valuation.

Palantir’s entire business model revolves around building ad hoc software for companies, which they then use as a learning experience to develop commercial products.

And the key takeaway, and in my reading of the MIT study the reason most AI pilots fail, is that RL training is what makes your AI custom. It’s RL that will make those pilots work.

You already have AI labs building foundation models for you (some, like DeepSeek, giving them to you for free), but it’s your job now to create a downstream model that does the job as intended.

And what does this mean? Simple, this inevitable transition to AI retraining will also lead to an explosion of interest in small models, as we discussed in greater detail on Tuesday’s Premium news rundown, and could lead to a plethora of winners and losers.

Among the winners are Chinese Labs providing cheap open-source small models, Meta and its incredible alignment between AI and its cash cow business, Apple with its state-of-the-art consumer-end hardware for AI, and TML, which, despite being very late to the party, has, in my view, the only strategy that would have worked at this point.

Among the losers are closed labs that, if not careful, might see themselves regarded as non-trainable models more attuned to particular, complex cases and consumer-end use cases; once enterprises realize they can fine-tune a Chinese Qwen model for “free” while outperforming ChatGPT at lower inference costs, why would anyone build enterprise AI pipelines with ChatGPT?

Meta is a Solid Bet, and so Is RL

So the takeaway for me is two-fold:

Meta has finally provided clear evidence that the claims they made about using AI to improve their offering were true. With a booming cash-printing business and CAPEX aligned with the best-of-the-best while having the greatest potential of all companies to use that CAPEX for returns, things are looking great for them. My only concern is, when will AI-generated content crash the Meta party?

Being a CIO and thinking ChatGPT is the solution to AI adoption should disqualify you as a tech leader. Enterprise AI is and will be about efficiency, and RL-trained small models are not only dozens of times cheaper; they are better performance-wise.

Closing Thoughts

On the surface, it looks like a rather ‘gloomy’ week for AI, with a lot of negative news and bad sentiment.

But by now, you already know what the solution is. AI implementations fail not because AIs lack the necessary abilities; rather, we pretend they are so smart that they don’t need actual on-the-job training, despite being very careful about providing it for our newest employees (because we know they need it).

Companies are setting themselves up for failure by not doing RL fine-tuning. I don’t blame them; it’s something that isn’t easy, and nobody is screaming that at them, so it’s not evident either. However, it’s the clear solution, and Meta’s results point in that direction.

In the meantime, markets appear to be back in nervous territory. Palantir’s sharp fall isn’t the only one; NVIDIA and AMD are also paying the heavy toll of being too AI-exposed. And I don’t blame markets: the sheer scale of AI CAPEX should have already created at the very least $200-300 billion in value. Yet, that number is well below $100 billion (with the exception of hardware companies, which aren’t really value-generating but net recipients of these savage expenditures).

The truth is, markets have been way ahead of reality for a long time now, and are starting to feel the desperate need for AI hype to materialize. And I believe the answer is, you guessed it, RL. But if I’m wrong, and RL fine-tuning doesn’t lead to an explosion of enterprise AI, we might as well let everything come crashing down.


Until next time!