For business inquiries, reach out to me at [email protected]

THEWHITEBOX

TLDR;

🥰 Chollet’s New AGI Start-Up

🥸 One for the Books

🧐 Your New AI Video game Teammate

🕢 OpenAI’s “Tasks” Feature

🤔 In Love with ChatGPT

[TREND OF THE WEEK] Reversible Computing, Finally Real?

NEWSREEL

Chollet’s new AGI Start-Up

François Chollet, one of the most recognized names in AI, a former star researcher at Google, and the creator of the ARC benchmarks, which have proven to be among the hardest benchmarks for AI to solve, has announced the creation of Ndea, a new start-up working on building AGI.

TheWhiteBox’s takeaway:

At TheWhiteBox, we are big fans of Chollet. How he simplifies extremely complex stuff into words anyone can understand proves how insightful and clever he is. If Chollet talks, you simply listen.

As for this project, the entire idea revolves around program synthesis, aka AIs that can generate novel programs “on the fly” given unknown tasks, instead of “fetching” them from their memory, as most LLMs do (meaning LLMs aren’t that intelligent, but similar to databases).

It’s a similar approach to what OpenAI is doing with o-type models, but I believe that Chollet will aim for much more “intelligence efficient” systems, one of the things we have covered in our predictions as critical to the “devenir” (French for future) of the industry.

EDUCATION

One For the Books

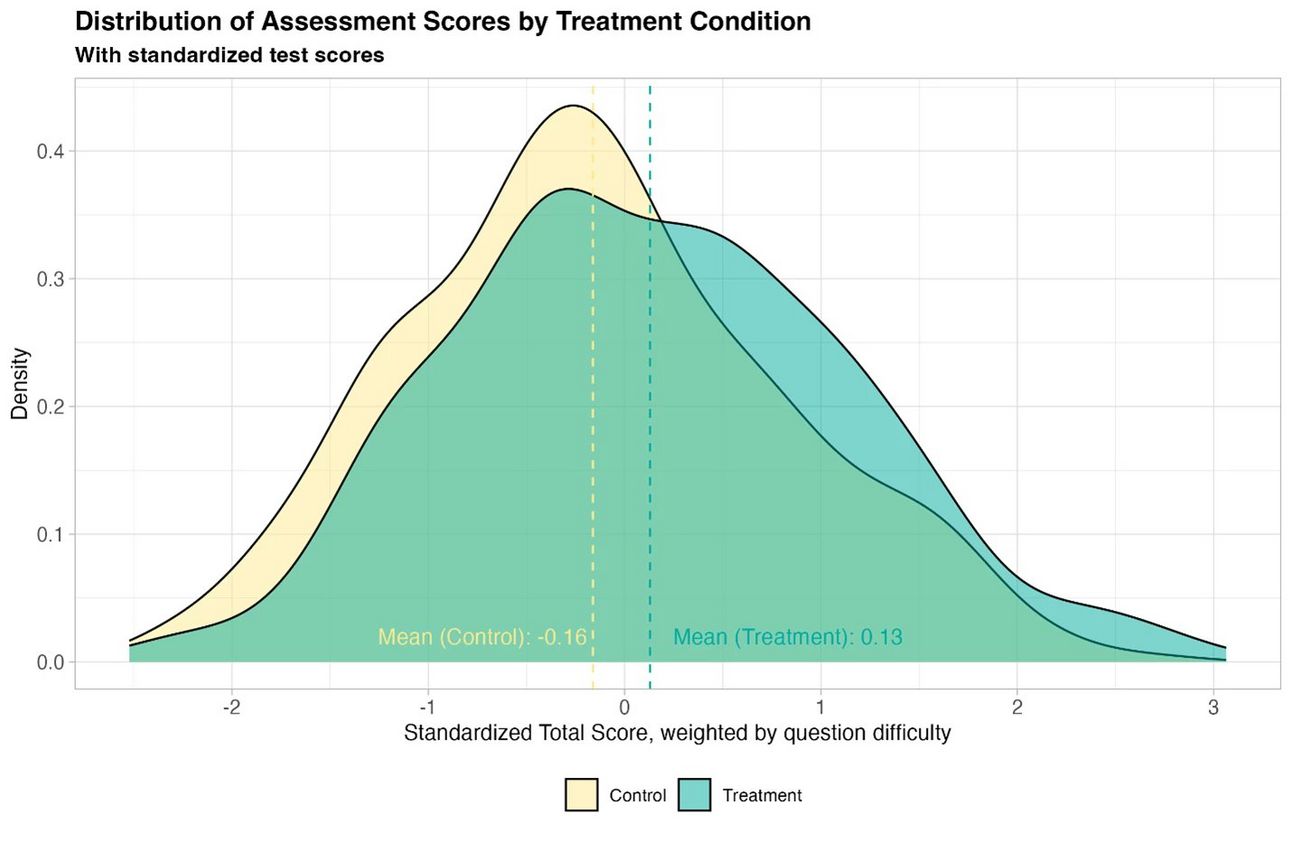

A World Bank study, highlighted in a post by Ethan Mollick on X, describes a controlled trial in Nigeria in which students used GPT-4 as a tutor.

Six weeks of after-school AI tutoring resulted in typical learning gains of around two years, outperforming 80% of other educational interventions. Importantly, it helped all students, especially girls who were initially behind.

TheWhiteBox’s takeaway:

You can pretend all you want that AI isn’t going to affect your job, but I can’t think of a single job that, eventually, won’t be disrupted by AI.

It is particularly relevant for education, as AIs are patient, always willing to help, and, crucially, very creative, which lets them generate as many examples as needed for the student to internalize the key insights.

I use AI to help me shape my understanding of things every day. This particular use case accounts for easily 90% of my interactions with these models, and they work tremendously well for this (although a word of caution: you should always use them in situations where you can verify whether what they are saying is true; they make mistakes every once in a while).

VIDEO GAMES

Your New AI Teammate

NVIDIA has announced AI-controlled avatars for popular games like PUBG. As the video in the link shows, players can cooperate with AIs as part of the missions.

TheWhiteBox’s takeaway:

As explained in the technical deep dive, these avatars are controlled by Small Language Models (SLMs) embedded into your hardware (you need an RTX GPU to make this work). This is interesting because it prevents you from having to connect to Internet-based models, which could seriously affect latency. Instead, the AI resides in your own computer.

However, as the video shows, these avatars are still pretty bad players. Thus, this is more like a fun idea that could evolve into something powerful than something that will see immediate adoption.

AI-based players and the growing capability of AI to create worlds on the fly, with examples like Google’s Genie 2 or World Labs, are two significant trends pointing to what’s to come in the world of video games: a future where you can design your own video games and play them without the need for other humans (which feels lonely, but very powerful nonetheless).

PRODUCT

OpenAI’s “Tasks”

OpenAI has released a new feature called “Tasks,” a beta feature for ChatGPT that allows users to schedule reminders and actions, positioning the AI as a—potential?—personal assistant.

Available to certain subscription tiers, Tasks enables one-time or recurring reminders, with notifications delivered across various platforms. The feature is currently in beta, available only through the web for management, and OpenAI is gathering feedback to refine it further.

TheWhiteBox’s takeaway:

First and foremost, the feature is extremely buggy, and I can’t overstate this, as it fails most of the time I use it. Beyond that, it’s just a tool for setting reminders: it sends you an email or notification at the specified time.

Far from impressive.

Naturally, there’s much more to this than meets the eye. People have speculated that this is just the beginning of OpenAI's long-awaited agent product, Operator, which transforms your ChatGPT subscription from a chatbot into an agent you can send tasks to.

For reference, the alleged product will behave similarly to Google’s Deep Research, which allows Gemini to search dozens of websites for many minutes to prepare extensive reports on given matters.

PRODUCT

In Love with ChatGPT

The article, originally published in the New York Times, tells the story of Ayrin, a woman who fell in love with an AI boyfriend she created using ChatGPT.

Inspired by a video in mid-2024 about customizing ChatGPT, Ayrin signed up for OpenAI and set up a virtual partner with specific traits: dominant, possessive, sweet, and a little naughty.

What began as a fun experiment turned into an emotionally significant relationship. Ayrin spent hours daily talking to her AI companion and even developed strong emotions despite being married to a real-life man.

TheWhiteBox’s takeaway:

At some point, we should ask ourselves whether the fact that something can be built means we should build it. We know how to build nuclear bombs, but we (most often) don’t do it.

I’m not comparing nuclear bombs to romantic chatbots, but I fear that AI companies could exploit the obvious voids in people’s lives, “filling them” with an AI that plays the role of a human. That could even lead these people to fall in love with a bunch of matrix multiplications that imitate human emotion, which is literally what AIs are.

My problem with this is that it isn’t a truthful connection. AIs do not love you back and don’t understand what they claim to do. Until proven otherwise, they mimic expected human behaviors for that particular situation, and I must say they’ve become scarily good at it. Thus, they fill a void with false claims and a reciprocated love they do not really feel. As the famous research explained, ChatGPT is bullshitting you.

We humans evolved and survived by connecting to create tribes; a loner was basically done. Thus, our brains are not prepared to handle the loneliness that the predominantly digital world has unwillingly inserted us into, which makes us particularly vulnerable to the enchantment of these AI robots that have been trained to be irresistibly likable.

TREND OF THE WEEK

Reversible Computing, Finally Real?

Recently, I came across one of the most fascinating pieces of journalism I’ve read. Published in the reputable IEEE Spectrum magazine, it introduces reversible computing, a decades-old theory that could soon become a reality and be one of the key breakthroughs that prevent AI from collapsing under its own weight.

But what do I mean by that?

Everyone agrees that while AI seems unstoppable at the technological level, it appears much weaker at the energy level. Its foundation is brittle. In fact, many CEOs, such as Mark Zuckerberg and Sam Altman, agree that energy constraints are the biggest risk to AI and that energy breakthroughs are desperately needed.

While building more power plants is one way to combat this, it will likely not be enough. The speed at which AI progresses and increases energy demand will surely outpace new energy generation. And reversible computing could be one of the answers.

The promise? 4,000x efficiency gains.

The concept? Magical.

By the end of this piece, you will have learned one of humanity’s best bets at solving the energy problem and how one of the most important laws of nature will play a crucial role in making AI more efficient.

The Energy Problem

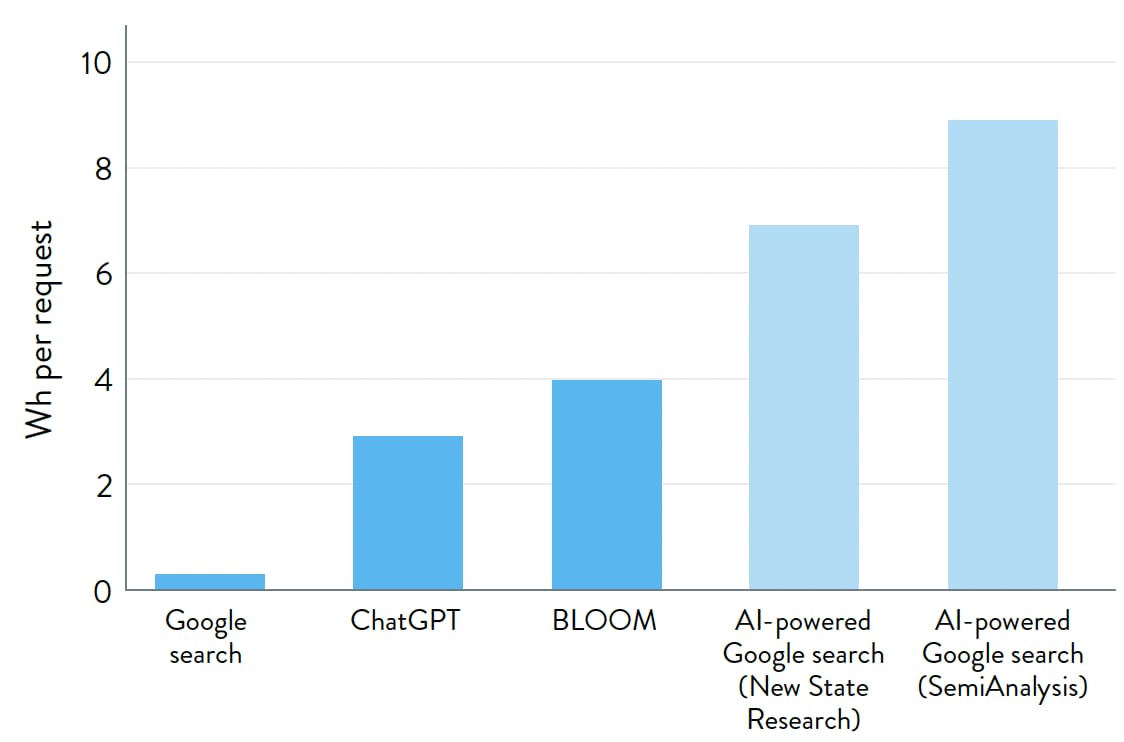

With AI, the world is rushing into a new state of affairs for the Internet. We are going from a digital world ruled by Google queries to one where most interactions are done with AIs, like using ChatGPT to search the Internet instead of the 10 horrible blue links.

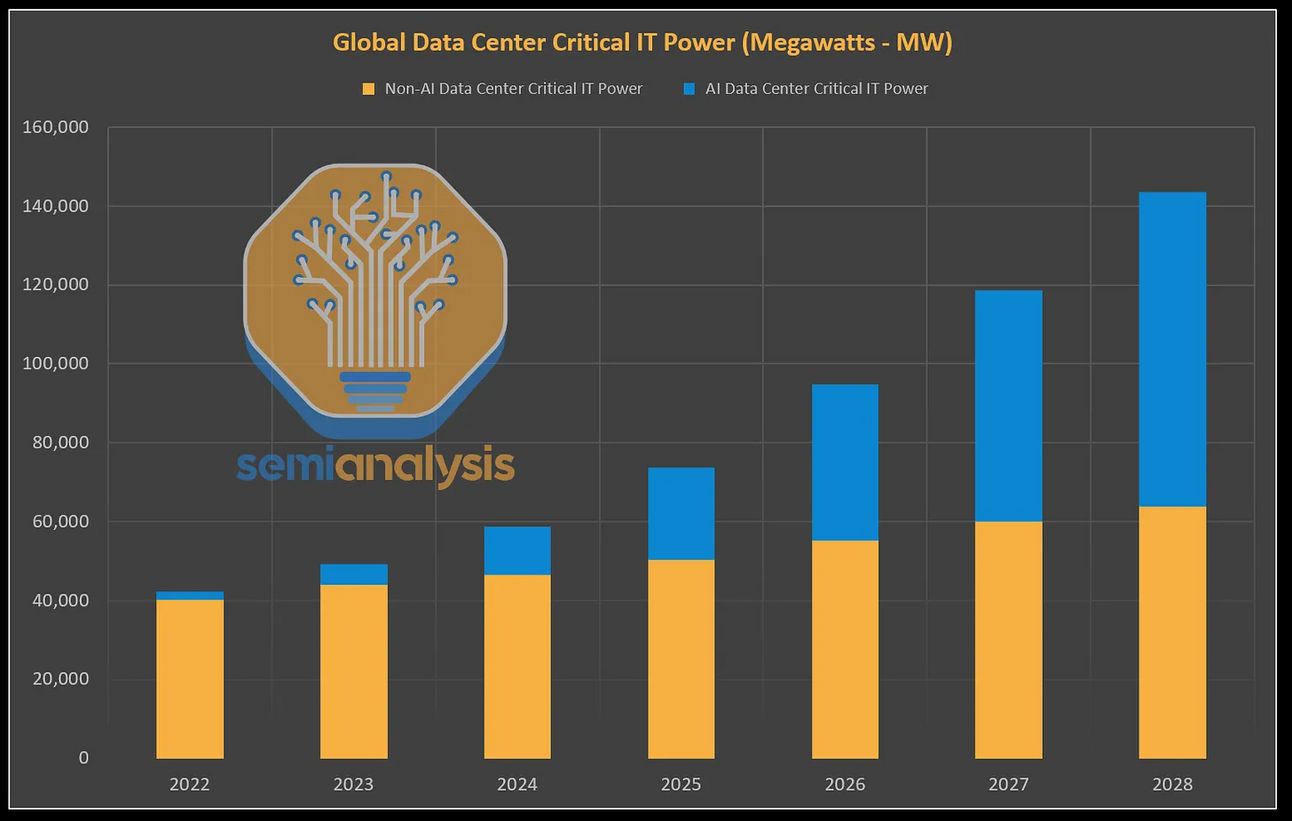

And while we can agree this is progress, it has catastrophic energy consequences. As seen below, according to estimates by the Electric Power Research Institute, a standard Large Language Model query consumes around 2.9 Wh (watt-hours) compared to the 0.3 Wh of a Google query.

Ten times more.

With AI overviews (when Google uses AIs to generate responses to your queries instead of you having to search for them), that number approaches 10 Wh, or 30 times the energy cost.

Concerningly, these numbers are probably dwarfed by now due to Large Reasoner Models (LRMs) like OpenAI’s o1 and o3. Although estimates differ, LRMs generate twenty times more tokens per query than their LLM counterparts.

While this does not imply a twentyfold increase in costs, as LRMs are generally smaller (meaning the number of mathematical operations needed to make a prediction falls considerably), the models are run for much longer. A reasonable estimate would argue that the energy cost of LRMs will be around 15 times the cost of an LLM, and that’s in a best-case scenario.

The result?

We are moving into a world where the average Internet request consumes 150 times more energy. This leads to a necessary escalation of energy requirements, to the point that SemiAnalysis estimates that by 2026, the world’s data centers will require 96GW of power, accounting for 830 TWh of consumed energy.

If that were a country, that would be the world's sixth-largest electricity consumer.
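The figures in this section are easy to sanity-check. The sketch below is my own back-of-the-envelope arithmetic, not part of the cited estimates: the variable and function names are made up for illustration, and the GW-to-TWh conversion assumes the data centers draw that power around the clock for a full year, which is a simplification.

```python
# Per-query energy estimates quoted in the text, in watt-hours.
GOOGLE_QUERY_WH = 0.3
LLM_QUERY_WH = 2.9
AI_OVERVIEW_WH = 10.0

# Multiplier for reasoning models relative to a standard LLM query (the text's estimate).
LRM_VS_LLM = 15

HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def ratio(a_wh: float, b_wh: float) -> float:
    """How many times more energy query type `a` uses than query type `b`."""
    return a_wh / b_wh


def annual_energy_twh(power_gw: float, hours: float = HOURS_PER_YEAR) -> float:
    """Energy in TWh consumed by a constant load of `power_gw` gigawatts (assumed 24/7)."""
    return power_gw * hours / 1000  # GW * h = GWh, and 1,000 GWh = 1 TWh


llm_vs_google = ratio(LLM_QUERY_WH, GOOGLE_QUERY_WH)
overview_vs_google = ratio(AI_OVERVIEW_WH, GOOGLE_QUERY_WH)
# The text rounds the LLM/Google ratio to ten before applying the reasoning-model multiplier.
lrm_vs_google = round(llm_vs_google) * LRM_VS_LLM

print(f"LLM query vs Google query:   {llm_vs_google:.1f}x")
print(f"AI overview vs Google query: {overview_vs_google:.1f}x")
print(f"Reasoning model vs Google:   {lrm_vs_google}x")
print(f"96 GW running all year:      {annual_energy_twh(96):.0f} TWh")
```

Under the always-on assumption, 96 GW works out to slightly more than the 830 TWh quoted, which is what you would expect if average utilization sits a little below 100%.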

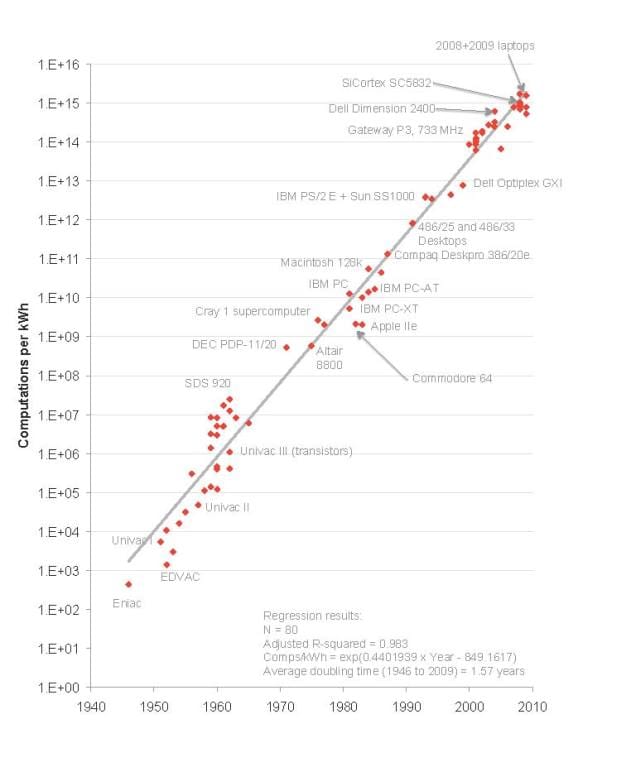

Of course, you would assume that energy efficiency would improve as fast as energy demand increases. But as Koomey’s Law suggests, the number of computations we can perform per unit of consumed energy, which originally doubled every 1.5 years, now takes almost three years (2.6) to double.

Energy demand is increasing faster than what we can meet through simple net improvements in energy generation capacity. We need something else.

Koomey’s Law: computations per kWh
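To get a feel for how much the slowdown matters, here is a small sketch comparing the two doubling times quoted above. The ten-year horizon is an arbitrary choice of mine for illustration, and the function name is hypothetical.

```python
def efficiency_gain(years: float, doubling_time_years: float) -> float:
    """Factor by which computations per kWh grow after `years` at a fixed doubling time."""
    return 2 ** (years / doubling_time_years)


HORIZON_YEARS = 10  # illustrative horizon, not a figure from the article

historical = efficiency_gain(HORIZON_YEARS, doubling_time_years=1.5)
current = efficiency_gain(HORIZON_YEARS, doubling_time_years=2.6)

print(f"Doubling every 1.5 years: about {historical:.0f}x in {HORIZON_YEARS} years")
print(f"Doubling every 2.6 years: about {current:.0f}x in {HORIZON_YEARS} years")
```

Over the same decade, the slower pace delivers roughly an order of magnitude less improvement, which is the gap that demand growth has to be measured against.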

For a more detailed analysis on energy constraints, read here.

And that “something else” could be reversible computing, a fascinating area of computers that could finally see its first product after decades of research.

Where Thermodynamics and Compute Meet

In a nutshell, reversible computing is an emerging field that aims to make computations reversible, thereby avoiding the erasure of information and, thus, minimizing heat dissipation, leading to more energy-efficient computers.

That’s a whole lot of incomprehensible jargon, Nacho! I know, but bear with me.

The Value of Reversible Computing

When a computer resets itself to prepare for a new calculation, it essentially ‘cleans up’ the results of the last one. This cleanup generates heat as a byproduct, which is essentially lost energy.

Thus, the goal of reversible computing is to reuse this energy rather than let it escape as heat, using it instead to perform a decomputation that returns the circuit to its original state.

While we may never create entirely waste-free computers (100% energy reuse), the potential energy savings are staggering—up to 4,000 times more efficient than current systems, music to the ears of AI incumbents.

While this summarises reversible computing very well, it does not help us understand the beautiful physics behind it.

Let’s solve that.

AI and Thermodynamics

To fully understand this, we need to revisit the second law of thermodynamics, which states that the entropy of an isolated system cannot decrease.

When we talk about ‘entropy’ in the context of thermodynamics (the field that studies the relationship between heat, energy, and work), we always think of it as a measurement of ‘disorder,’ or ‘chaos.’

Therefore, this law states that an isolated system's entropy, or the amount of disorder, always tends to increase.

But what does that even mean?

Imagine you have a puzzle, with all individual pieces separated and mixed inside a box. For a puzzle to have low entropy or low disorder, all pieces must be precisely positioned so that the puzzle is solved; we have exactly one unique configuration of the pieces.

On the other hand, there is an astronomically large number of ways to arrange the pieces that are not a correct solution.

Simply put, chaos is more probable than order.

To drive this home, let’s say we close our eyes and try to solve the puzzle. Here, solving it becomes a game of probabilities. With enough luck and enough trial and error, we could eventually arrange the pieces in a way that solves the puzzle.

In practice, however, this will essentially never happen, as the number of wrong arrangements is overwhelmingly large compared to the number of correct ones (one). Again, chaos is more probable than order. And most processes in nature behave exactly the same way.

Thus:

As high-entropy states (disordered states) are much more statistically probable, systems tend toward high-entropy configurations. This is the statistical intuition behind the second law of thermodynamics.
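The puzzle intuition can be put into numbers. The sketch below makes a simplifying assumption of mine: every piece goes into exactly one slot and rotations are ignored, so the number of possible arrangements of n pieces is n factorial and only one of them is the solved puzzle. The function names are hypothetical.

```python
import math


def arrangements(pieces: int) -> int:
    """Number of ways to place `pieces` distinct pieces into `pieces` slots (rotations ignored)."""
    return math.factorial(pieces)


def chance_of_solving_blind(pieces: int) -> float:
    """Probability that one random arrangement happens to be the single solved one."""
    return 1 / arrangements(pieces)


for n in (4, 9, 100):
    print(
        f"{n:>3} pieces: {arrangements(n):.3e} arrangements, "
        f"chance of a blind solve = {chance_of_solving_blind(n):.3e}"
    )
```

Even a modest 100-piece puzzle has so many wrong arrangements that a blind solve is, for all practical purposes, never going to happen.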

This law also has a second consequence fundamental to understanding reversible computing: irreversibility.

Not only do most processes tend to high-entropy states, but this process is irreversible. For instance, if we mix sugar with our cup of coffee, we move into high entropy (all the particles of both elements are now mixed). Now, try to reverse that process by separating the sugar from the coffee.

You can’t, right?

Well, in fact, you can, but the likelihood of achieving such a miraculous reversal is so low that the process is considered irreversible. The chances of arriving at the low-entropy configuration (managing to separate every sugar particle from every coffee particle) are so remote compared to all the high-entropy possibilities (remaining mixed) that the system is almost guaranteed to remain in a high-entropy state for good.
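A toy simulation makes this tangible. The sketch below is not a model of real coffee; it is a version of the classic Ehrenfest urn model, which I am swapping in as an illustration: all particles start on the left side of a box (low entropy), and at each step one randomly chosen particle hops to the other side. The particle count, step count, and seed are arbitrary choices.

```python
import random


def simulate_mixing(particles: int, steps: int, seed: int = 0) -> float:
    """Return the fraction of particles on the left side after `steps` random hops."""
    rng = random.Random(seed)      # seeded so the run is repeatable
    on_left = [True] * particles   # low-entropy start: everything on the left
    left_count = particles
    for _ in range(steps):
        i = rng.randrange(particles)   # pick one particle at random
        on_left[i] = not on_left[i]    # it hops to the other side
        left_count += 1 if on_left[i] else -1
    return left_count / particles


def chance_all_left(particles: int) -> float:
    """Probability that a fully mixed system is found with every particle on the left."""
    return 0.5 ** particles


fraction = simulate_mixing(particles=1000, steps=20000)
print(f"Fraction on the left after mixing: {fraction:.2f}")
print(f"Chance of spontaneously un-mixing 1,000 particles: {chance_all_left(1000):.1e}")
```

The system drifts toward a roughly even split and stays there. Nothing forbids it from returning to the all-left state; the odds are simply so small that you would never see it, and a real cup of coffee has vastly more than a thousand particles.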

All things considered, the second law of thermodynamics implies that most processes in nature are irreversible and, crucially, tend to high-entropy states: the entropy of an isolated system never decreases; it can only remain constant or grow.

The second law of thermodynamics is so consequential because it makes sense of the behavior of most natural processes.

Now, what does all this have to do with reversible computing and energy efficiency?

Circumventing Clausius

Knowing all this, the question arises: What if we want to decrease a system's entropy? Well, this law never said we couldn’t; it simply states that it happens at a cost.

Nothing is Free

In other words, if you force a system's entropy to decrease, entropy must increase elsewhere, because the entropy of the Universe always increases (that’s probably the “definition” you most often hear regarding this law).

In most cases, this means that if work is done to force a system into a statistically improbable state, entropy is released into the environment, usually as heat, so that the second law is not violated (the entropy of the overall system cannot decrease).

If we think about a refrigerator, it cools items below the environment's temperature, creating a highly improbable state that would not occur naturally.

This is achieved by performing work, using a compressor that extracts heat from the enclosure and releases it into the room. This demonstrates what we just said: forcing systems into improbable states (low entropy) requires work, which releases energy into the environment. As a result, the entropy of the whole system—comprising the refrigerator and its surroundings—still increases.
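The refrigerator bookkeeping can be written out explicitly. The sketch below uses the textbook relation that moving heat Q at temperature T changes entropy by Q/T, and treats the inside of the fridge and the room as idealized reservoirs at fixed temperatures. All the numbers (1,000 J of heat, 100 J of work, 275 K inside, 295 K outside) are illustrative assumptions of mine, not measurements.

```python
def entropy_changes(heat_removed_j: float, work_j: float, t_cold_k: float, t_hot_k: float):
    """Entropy change (J/K) of the fridge interior, the room, and both combined."""
    ds_inside = -heat_removed_j / t_cold_k          # interior loses heat: entropy drops
    ds_room = (heat_removed_j + work_j) / t_hot_k   # room receives the heat plus the work
    return ds_inside, ds_room, ds_inside + ds_room


def minimum_work(heat_removed_j: float, t_cold_k: float, t_hot_k: float) -> float:
    """Least work (J) an ideal fridge needs so that total entropy does not decrease."""
    return heat_removed_j * (t_hot_k / t_cold_k - 1)


ds_inside, ds_room, ds_total = entropy_changes(1000, 100, t_cold_k=275, t_hot_k=295)
print(f"Inside the fridge: {ds_inside:+.3f} J/K")
print(f"In the room:       {ds_room:+.3f} J/K")
print(f"Total:             {ds_total:+.3f} J/K")
print(f"Minimum work for 1,000 J of cooling: {minimum_work(1000, 275, 295):.1f} J")
```

The interior's entropy goes down, the room's goes up by more, and the total stays positive. Try to do it with less than the minimum work and the total would turn negative, which the second law does not allow.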

Now, finally, we can talk about what all this means to computers and AI.

The Erasure Problem

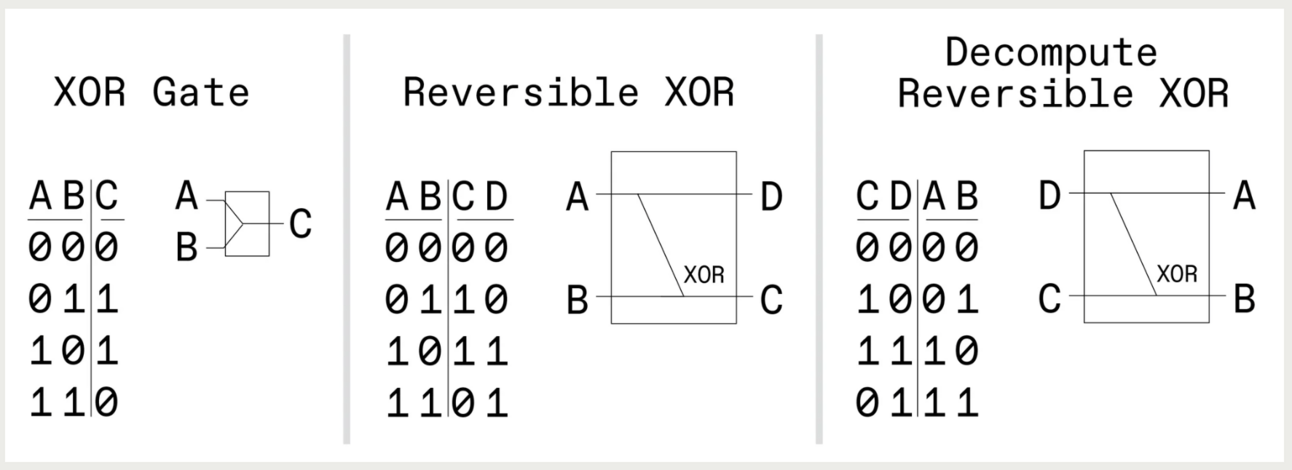

Computers use circuits composed of logic gates that allow them to perform calculations, such as the XOR gate pictured here, which outputs one if its two inputs differ.

These calculations are irreversible because the inputs aren’t kept in memory (doing so would be too taxing on the limited computer memory). Without the inputs, one cannot reconstruct the original calculation from the output alone (using XOR’s example, if the output is one, we know that exactly one of A or B is one, but we don’t know which).
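A few lines of code show why XOR on its own cannot be run backwards. The sketch below simply lists every input pair and groups the pairs by the output they produce; the names are mine.

```python
from collections import defaultdict
from itertools import product


def xor_gate(a: int, b: int) -> int:
    """Standard XOR: outputs 1 only when the two input bits differ."""
    return a ^ b


# Group every possible input pair by the output it produces.
preimages = defaultdict(list)
for a, b in product((0, 1), repeat=2):
    preimages[xor_gate(a, b)].append((a, b))

for output, inputs in sorted(preimages.items()):
    print(f"output {output} could have come from {inputs}")
```

Each output has two possible causes, so seeing only the output leaves one bit of information about the inputs unrecoverable.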

As it’s irreversible, we need to erase the previous result to perform new calculations. This directly links with entropy: We have automatically decreased it by adding order to the system (reset for new computations), which has the byproduct of information loss. Therefore, while we have ordered the system (decreased entropy locally), information has been destroyed, which, based on what we have discussed, leads to heat dissipation into the environment (overall entropy increases to compensate).
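Physics puts a number on the minimum cost of erasure. The result is known as Landauer's principle (the name does not appear in the article, I am adding it for context): erasing one bit dissipates at least k_B · T · ln 2 of heat, where k_B is Boltzmann's constant and T is the temperature. The sketch below evaluates that bound; the room temperature of 300 K and the erasure rate are assumptions chosen only for illustration.

```python
import math

BOLTZMANN_J_PER_K = 1.380649e-23  # Boltzmann constant (exact SI value)


def landauer_limit_joules(temperature_k: float) -> float:
    """Minimum heat (J) dissipated when one bit of information is erased."""
    return BOLTZMANN_J_PER_K * temperature_k * math.log(2)


ROOM_TEMPERATURE_K = 300        # assumed operating temperature
ERASURES_PER_SECOND = 1e18      # hypothetical erasure rate, for scale only

per_bit = landauer_limit_joules(ROOM_TEMPERATURE_K)
print(f"Minimum heat per erased bit at {ROOM_TEMPERATURE_K} K: {per_bit:.2e} J")
print(f"Floor for {ERASURES_PER_SECOND:.0e} erasures/s: {per_bit * ERASURES_PER_SECOND:.2e} W")
```

This is a theoretical floor for irreversible logic; how close real hardware gets to it is a separate engineering question. The point is that the floor only applies when information is erased, which is exactly what reversible computing tries to avoid.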

This is a fundamental reason computers heat up (on top of ordinary hardware inefficiencies).

This brings us to the idea of reversible computing, in which we store some of the inputs to reverse the process, preventing information loss from erasure and, thus, minimizing heat dissipation.

As you can see below, by storing one of the XOR inputs, we can reconstruct the original state with that input and the output, making this computation theoretically reversible.
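Here is a minimal sketch of that idea in code. It keeps input A next to the XOR result, a construction usually called a controlled-NOT (or Feynman) gate in the reversible-computing literature; the function name is my own, and this is an illustration of the logic, not of how any particular chip implements it.

```python
from itertools import product
from typing import Tuple


def reversible_xor(a: int, b: int) -> Tuple[int, int]:
    """Keep input `a` alongside the XOR result so that nothing is lost."""
    return a, a ^ b


all_inputs = list(product((0, 1), repeat=2))
all_outputs = [reversible_xor(a, b) for a, b in all_inputs]

# Every input pair maps to a different output pair, so the mapping can be undone.
print("distinct outputs:", len(set(all_outputs)), "of", len(all_inputs))

# Running the same gate on its own output recovers the original inputs,
# because a ^ (a ^ b) == b.
for a, b in all_inputs:
    print((a, b), "->", reversible_xor(a, b), "->", reversible_xor(*reversible_xor(a, b)))
```

Because the gate is its own inverse, "decomputing" is just running it again, which is what makes it possible, at least in principle, to walk the circuit back to its starting state without erasing anything.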

So, what does all this imply? If we make computations reversible, we minimize the heat dissipated into the environment.

Thus, this energy, invariably used to perform the first computation, can be ‘rescued’ to perform the decomputation (going back to the initial pre-computed state so that no information is lost), potentially even creating a system that requires almost no energy.

Now, the tricky part is capturing this energy before it dissipates as heat and using it to run the decomputation, so that no information is erased and no heat (lost energy) has to be released to compensate.

TheWhiteBox’s takeaway:

In summary, reversible computing is a theoretically sound technology that could see its first real product in 2025. For the first time since its conception in the 1960s, a company called Vaire plans to fabricate its first prototype chip in early 2025, focusing on recovering energy in arithmetic circuits.

They aim to launch an energy-efficient processor optimized for AI inference by 2027. Over the next 10–15 years, their long-term goal is to achieve a 4,000-fold increase in energy efficiency compared to traditional computing, pushing the boundaries of energy-efficient computation.

The industry consensus is that rapid improvements in energy efficiency are needed to meet the expected demand for AI systems. Finding ways to combat the stagnation of Moore’s and Koomey’s Laws, like reversible computing, will play a key role in a technology that will change our lives completely over the coming years.

Premium

If you like this content, by joining Premium, you will receive four times as much content weekly without saturating your inbox. You will even be able to ask the questions you need answers to.

Until next time!