THEWHITEBOX

TLDR;

Welcome back! This week, we take a look at Google’s AI-discovered cancer drug, new models by Anthropic and Google, new mega deals including AMD and Oracle, new AI consumer hardware coming from Apple, NVIDIA’s first-ever AI ‘Home GPU’, and research that states GPT-5 is halfway to AGI.

Enjoy!

Other premium content

On Tuesday, we took a detailed look at the latest grand deal between OpenAI and Broadcom, new interesting news from companies like Cisco, the Netherlands’ unthinkable attack on China, and for Full Premium subscribers, a deep dive into what might be the most exciting AI IPO ever.

FRONTIER AI COMPANIES

Three Mega-Rich Corporations Walk into a Bar…

A consortium led by BlackRock, NVIDIA, and Microsoft has agreed to purchase Aligned Data Centers for $40 billion. The company owns, operates, or plans to build up to 5 Gigawatts of data-center capacity across 50 sites.

This deal is the first significant investment by the Artificial Intelligence Infrastructure Partnership (AIP), a fund formed to scale AI-centric infrastructure. They aim to initially deploy $30 billion in equity (possibly rising to $100 billion including debt).

The transaction is expected to close in the first half of 2026, subject to regulatory approval.

TheWhiteBox’s takeaway:

This is an interesting deal. First, it shows how expensive this industry is. $40 billion is a huge number, even for these guys, and shows the premium being placed on AI-ready infrastructure.

It can also help determine “the market value of an operated GW”, at around $8 billion, which is quite the sum, but may make more sense than building it yourself, which requires around $50 billion.
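
The per-gigawatt figure is simple division. Here is a minimal sketch using only the numbers quoted in this story; the $50 billion build cost is the rough estimate given above, not a measured value, and the function name is just illustrative.

```python
# Figures quoted in this story (USD billions, gigawatts).
DEAL_PRICE_BN = 40.0          # purchase price for Aligned Data Centers
CAPACITY_GW = 5.0             # owned, operated, or planned capacity
BUILD_COST_PER_GW_BN = 50.0   # rough build-it-yourself estimate from the text


def price_per_gw(price_bn: float, capacity_gw: float) -> float:
    """Implied price of one gigawatt of capacity, in USD billions."""
    return price_bn / capacity_gw


implied = price_per_gw(DEAL_PRICE_BN, CAPACITY_GW)
discount = 1 - implied / BUILD_COST_PER_GW_BN

print(f"Implied price per GW: ${implied:.0f}B")
print(f"Discount vs. building it yourself: {discount:.0%}")
```

Keep in mind that the 5 GW includes capacity that is only planned, so the comparison likely flatters the acquisition.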

Of course, all this means that the industry is consolidating fast, to the point that the same subset of companies will own all data center capacity. In other words, AI compute will be in the hands of the few. This isn’t necessarily bad, but we both know what happens when we see such consolidation: suppliers have a lot of power over consumers.

That is why I’d rather not rely on external providers for these models, and why I aspire to run most of my workloads locally.

CHIPS

AMD and Oracle’s Partnership

AMD and Oracle have announced an expanded partnership under which Oracle Cloud Infrastructure will deploy 50,000 AMD Instinct MI450 GPUs starting in 2026.

The system will use AMD’s new “Helios” rack design with MI450 GPUs, next-generation EPYC CPUs, and Pensando networking. This marks the first large-scale public AI supercluster on Oracle Cloud powered by AMD. Financial terms were not disclosed, and deployment will begin in 2026.

TheWhiteBox’s takeaway:

Do you notice what I did? Look at the timings (H2 2026) and the product (AMD’s MI450 GPUs). We both know who’s at the center of all this: OpenAI.

After all, recall that:

OpenAI announced a $300 billion deal with Oracle starting in 2027

OpenAI also announced a 6 GW deal for AMD GPUs, starting with MI450s in H2 2026

This is just the circle jerk consolidating. These aren’t totally separate deals. The $300 billion deal with Oracle will include many of the GWs that OpenAI signed with AMD (and NVIDIA). Of course, they won’t tell you this because they need AMD and Oracle stocks to pop, but it’s mostly the same deals rephrased in different ways.

FRONTIER AI COMPANIES

Anthropic’s Business Is Booming

Anthropic is positioning itself for aggressive revenue growth. It expects to hit ~$9 billion in “run-rate” revenue by the end of 2025 (it’s reportedly at $7 billion already, up from $5 billion in August) and then to more than double—or even nearly triple—that in 2026, with targets between $20 billion and $26 billion.

Those potential numbers would put them in the same revenue territory as OpenAI, which projects around $30 billion as soon as Q3 2026, implying that Anthropic is actually catching up in revenues.

Key drivers include:

strong enterprise demand (80% of its current revenue comes from business customers),

expansion into governmental and international markets,

new lower-cost models like Haiku (more on this below) aimed at broader adoption,

and scale in code-generation products like Claude Code (which is approaching a $1 billion run rate).

But how is this company managing to compete with OpenAI despite the latter having 800 million users?

TheWhiteBox’s takeaway:

The answer comes down to the same argument we continue to make time and time again in this newsletter: most AI demand is not monetizable, especially when the larger part of your offering is focused on end consumers.

Of the 800 million weekly active users that OpenAI acknowledges, only 5%, or 40 million, are paying subscribers. This is a very low number, especially considering we are talking about none other than the ChatGPT product the entire world knows of.

On the other hand, Anthropic’s user base is easily ten times smaller or more, but it has a much higher degree of conversion to paid and, crucially, is much more monetizable. The reason is that most of Anthropic’s users are enterprises and coders, both of which are economically more capable of spending and more inclined to generate larger amounts of usage.

Both OpenAI and Anthropic charge for API access based on usage (tokens in/tokens out), and Anthropic’s users consume a disproportionate amount of tokens, which leads to much higher revenue per user.

But why does Anthropic monetize better? It’s simple, they aim for two particular cohorts:

Enterprises. The cohort with the deepest pockets.

Coders. The cohort that can extract the most value from LLMs.

Anthropic literally doesn’t care about the rest of us. And truth be told, this bet is paying off handsomely.

POLICY

Anthropic’s Tax Reform

Talking about Anthropic, they’ve also released a blog post sharing some ideas on how to prepare for AI disruption.

Among other things, the hot AI startup comments on the following:

It argues that the scale and speed of AI’s disruption are uncertain, so policy must adapt to different scenarios (mild, moderate, or fast-moving).

It presents nine categories of policy proposals (organized by scenario severity) across workforce, taxation, infrastructure, and revenue systems.

For all scenarios, proposals include worker upskilling grants, tax incentives for retraining/retention, closing corporate tax loopholes, and speeding permits for AI infrastructure.

In more disruptive scenarios, they consider trade-adjustment programs for AI displacement, taxes on compute or token generation, sovereign wealth funds to share AI returns, modernizing value-added taxes, and new revenue structures (e.g., low-rate wealth taxes).

And this is the reason I don’t really like Anthropic.

TheWhiteBox’s takeaway:

While some of the suggestions are clearly well-intended, no company in Silicon Valley, and probably the world, is lobbying the Government (especially California) more heavily than they are.

Speaking of California, it is the first US state to regulate companion chatbots, through SB 243, which takes effect on January 1st. Following a rise in cases in which users, especially young people, have died by suicide after conversations with AI chatbots, the regulation will require age verification (OpenAI has already implemented it) and warnings, and will establish substantial penalties.

I’m not a fan of regulation, especially regulation intended to cripple competition, but this one feels necessary, considering these models increase retention (higher usage) through their sycophancy (the tendency to agree with the user, which is what drives the psychosis and even suicide cases). This is a real risk from AI companions, so I’m happy to see it regulated.

At first, they look genuinely concerned… while building the very thing they are concerned about. Yes, Oppenheimer was scared, too, while developing the atomic bomb, but you can’t help but wonder whether there are “other reasons.”

Especially when you read between the lines on taxing companies per generated token. How convenient: taxing the primary source of income so you can dry out the competition.

In reality, most of their proposals include one big “unintended” consequence: restricting AI development to them and a few others. That is, most of their policy proposals read the following way: “AI is bad, very bad, potentially human-ending. That’s why only we should be building it.”

Besides the stench of condescension, the “I’m better than you” vibes hide the fact that this company would be much more profitable and successful if the Government simply put its lower-capitalized competitors out of business.

It’s almost too perfect, and the cynic in me can’t help but wonder: “If you’re so scared, why don’t you shut down operations and build a lobbying company?” Honestly, you can’t have it both ways, and claiming to be ‘dead-scared’ of the very thing you are creating is not a convincing way to keep others from suspecting that all you want is to ban AI training for anyone who isn’t you.

That said, if AI truly displaces millions, we need taxes to compensate for the revenue loss while also potentially expanding benefits. I’m not a fan of uncontrolled welfarism, but you can’t expect to displace millions and simply let them starve.

But if you want a tax that is fair to all AI providers, just tax the watts, not the tokens. Generating tokens is extremely capital-intensive, so taxing them implies that AI will be a game for a few rich companies. However, if you tax the consumed energy, you let more suppliers compete. You might think the two are one and the same, but they are not, because taxing energy gets you two things:

You incentivize the Government to build more power (more Government revenues), which is a key strategic pillar for any developed country, while also decreasing the average costs of living (few things drive up inflation more than energy costs)

You incentivize suppliers to be operationally excellent; the more tokens they can generate per watt, the more money they make. As AIs get smarter the more tokens they generate, you also avoid giving suppliers a reason to skimp on tokens, which would create a massive performance gap between well-capitalized companies (like Anthropic, how convenient) and the rest.
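
To make the difference between the two schemes concrete, here is a minimal sketch. Every number in it (tax rates, tokens per kWh, supplier names) is made up purely for illustration; the only point is the shape of the incentive.

```python
from dataclasses import dataclass


@dataclass
class Supplier:
    name: str
    tokens_per_kwh: float  # hypothetical efficiency figure


# All rates below are made-up illustrative values.
TOKEN_TAX_PER_MTOK = 0.10   # USD per million tokens generated
ENERGY_TAX_PER_KWH = 0.02   # USD per kWh consumed


def token_tax_per_mtok(_: Supplier) -> float:
    """A per-token tax costs the same per million tokens for everyone."""
    return TOKEN_TAX_PER_MTOK


def energy_tax_per_mtok(s: Supplier) -> float:
    """An energy tax, expressed per million tokens, falls as efficiency rises."""
    kwh_per_mtok = 1_000_000 / s.tokens_per_kwh
    return ENERGY_TAX_PER_KWH * kwh_per_mtok


efficient = Supplier("efficient", tokens_per_kwh=400_000)
wasteful = Supplier("wasteful", tokens_per_kwh=100_000)

for s in (efficient, wasteful):
    print(
        f"{s.name}: token tax ${token_tax_per_mtok(s):.2f}/Mtok, "
        f"energy tax ${energy_tax_per_mtok(s):.2f}/Mtok"
    )
```

Under the token tax, both suppliers pay the same per million tokens no matter how well they run their hardware. Under the energy tax, the more efficient supplier pays less for the same output.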

I’m all for regulation, but not one that curbs competition. Regulation is meant to protect customers, not create oligopolies.

BIOTECH

Google’s New Model Discovers “Cancer drug”

Google launched C2S-Scale 27B, a 27-billion-parameter model in the Gemma family, aimed at interpreting single-cell biology.

The model predicted a novel therapy hypothesis: combining a CK2 inhibitor (silmitasertib) with low-dose interferon selectively boosts antigen presentation in tumor cells within an “immune-context-positive” environment, but not under neutral conditions. Lab experiments confirmed the prediction: the combination induced a ~50 % increase in antigen presentation in a cell line unseen during training.

But what does all this even mean?

TheWhiteBox’s takeaway:

In plain English, most tumors are “cold,” meaning they do not trigger an immune response until later in the disease. For some time, they are invisible to the immune system. The model proposed a new hypothesis that was then confirmed in the lab: a drug combination that makes these tumors more visible to the immune system.

The “groundbreaking” thing here is that, although Silmitasertib has been known by humans for a while, it has not yet been applied in this context.

Of course, hype merchants have taken this and gone as far as saying we are going to cure cancer in three years. The truth is, real experts in the field say this isn’t that groundbreaking a discovery and that the compound is intensely studied; the difference is that it was considered more as an antiviral, just not for this particular use. Also, it was validated on living cells, not living organisms.

Bottom line, this is not world-changing, but it’s another step in AI’s relentless path to becoming humanity’s primary tool for discovery.

MODELS

Anthropic Releases Claude 4.5 Haiku

Anthropic has been on a roll this week. Besides exploding revenues and moral superiority complexes, they also have time to, well, do what they do: release models.

This time, it’s Claude 4.5 Haiku, the smallest and fastest version of the Claude family, most likely a distilled version of Claude 4.5 Sonnet, their frontier model.

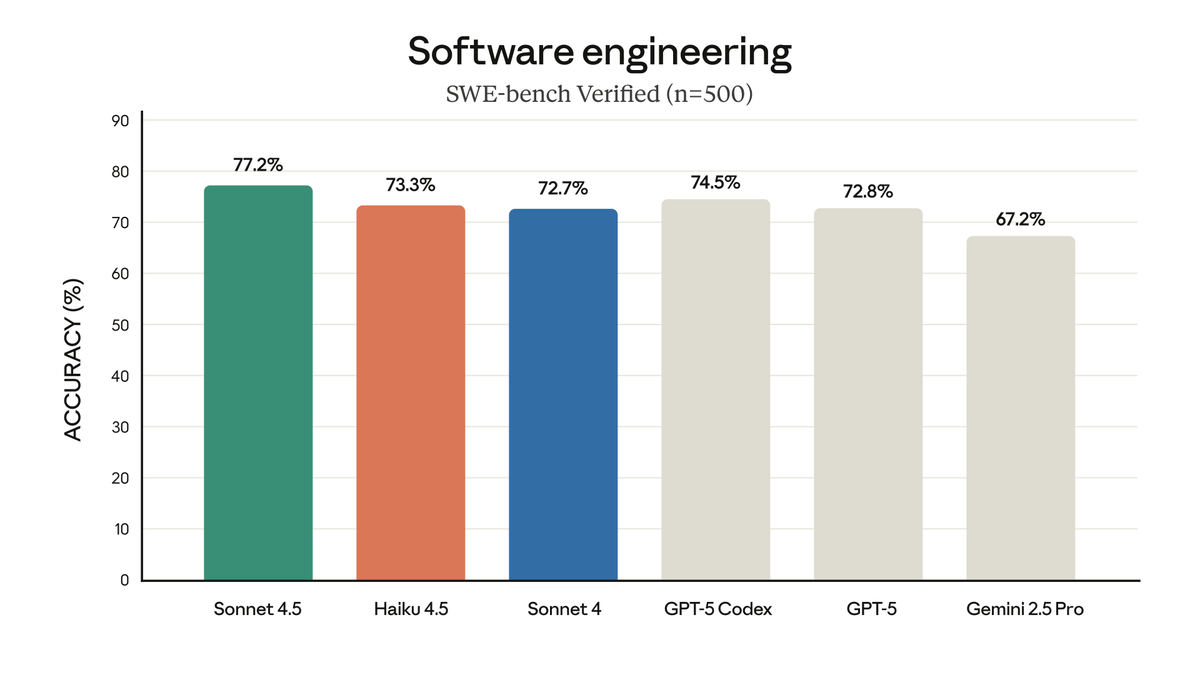

As you can see above, the model is nearly as good as the top model in coding tasks, offering better performance per cost than the previous frontier, and is also very competitive with other models in that frontier, like GPT-5 or Gemini 2.5 Pro.

If this graph extrapolates to other tasks and benchmarks, it’s basically the best model on a performance-per-cost basis, especially considering its price is $1 per million input tokens and $5 per million output tokens (for comparison, GPT-5 is $1.25/$10).

But… is it really the cheapest US model, though?

TheWhiteBox’s takeaway:

While this model appears cheaper on a unit basis, Anthropic’s models have a particular set of characteristics that make them quite expensive:

They are mainly used for coding, which processes and generates a considerable amount of tokens

They are much more wordy than the average model

The tokenizer is more granular (fewer bytes per token), which means the models need to generate more tokens to say the same thing as other models.

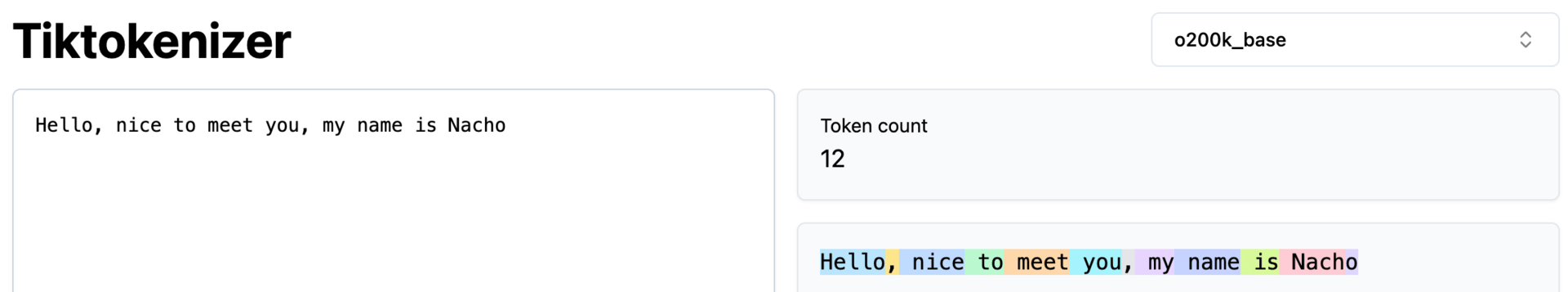

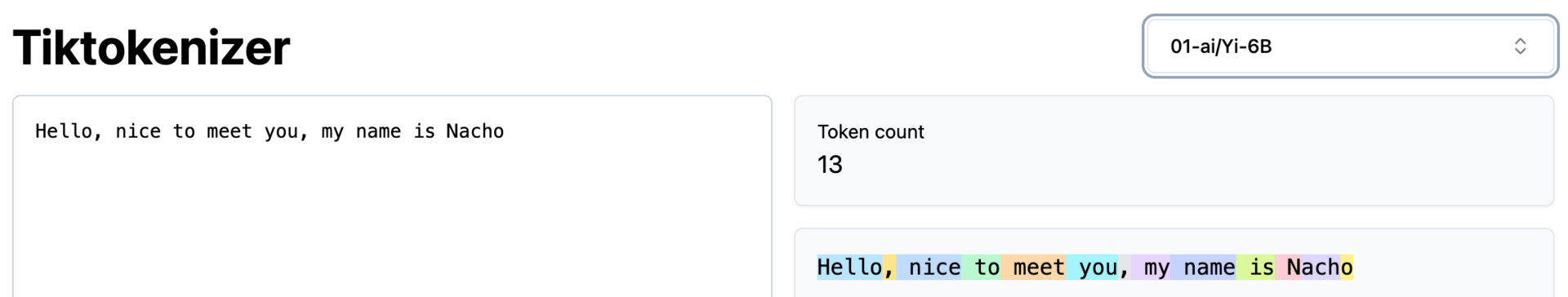

But what does the third point mean? AI models chunk text into parts known as tokens. Every one of these tokens has to be processed by the model, meaning that the more tokens there are, the more compute you need.

And as the two images above show, models tend to chunk sentences slightly differently, generating a different number of tokens.

For instance, the model using the bottom ‘chunker’, with 13 tokens, will require slightly more compute than the one above it (assuming all else is equal). Thus, what I’m implying in the third point is that Anthropic’s models tend to create more granular chunks (more processing compute and more generated tokens), which translates to higher costs.

Therefore, it’s improbable that Anthropic’s new model is cheaper than GPT-5. And as a lesson, it’s vital that you do not take the numbers they publicly share for granted; test models and see which one is cheapest for you.
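
One way to run that test is to measure how many tokens each model actually consumes on the same task and price the result. The sketch below is only an illustration: the token counts are hypothetical placeholders, and the list prices should be checked against each provider's pricing page before you rely on them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    input_per_mtok: float   # USD per million input tokens
    output_per_mtok: float  # USD per million output tokens


def request_cost(p: Pricing, input_tokens: int, output_tokens: int) -> float:
    """Cost in USD of one request at the given list prices."""
    total = input_tokens * p.input_per_mtok + output_tokens * p.output_per_mtok
    return total / 1_000_000


# List prices (assumptions to verify against the providers' pricing pages).
haiku = Pricing(input_per_mtok=1.00, output_per_mtok=5.00)
gpt5 = Pricing(input_per_mtok=1.25, output_per_mtok=10.00)

# Hypothetical token counts for the SAME task, as if measured on each model.
# A wordier model with a more granular tokenizer reports more tokens.
haiku_cost = request_cost(haiku, input_tokens=13_000, output_tokens=5_000)
gpt5_cost = request_cost(gpt5, input_tokens=10_000, output_tokens=2_000)

print(f"Haiku: ${haiku_cost:.4f} per request")
print(f"GPT-5: ${gpt5_cost:.4f} per request")
```

With these made-up counts, the model with the lower unit price ends up costing more per request. Swap in token counts from your own workload, since the outcome depends entirely on them.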

RESEARCH

How Close Are We to AGI?

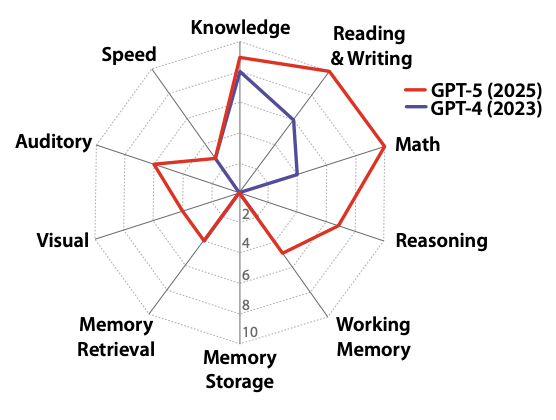

In the latest effort to define once and for all ‘what AGI is’, a group of researchers (including well-known names like Yoshua Bengio, Gary Marcus, former Google CEO Eric Schmidt, and Dan Hendrycks) has released what’s probably the most thorough attempt at this definition, benchmarking GPT-4 and GPT-5 in the process and producing quite the headline:

GPT-5 is 58% of the way to AGI. But what is AGI then?

They define it as “an AI system that can match or exceed the cognitive versatility and proficiency of a well-educated adult.” The framework grounds this definition in the Cattell-Horn-Carroll (CHC) theory of human intelligence, decomposing general intelligence into ten core cognitive domains that together span the breadth of human cognition:

General Knowledge

Reading and Writing Ability

Mathematical Ability

On-the-Spot Reasoning

Working Memory

Long-Term Memory Storage

Long-Term Memory Retrieval

Visual Processing

Auditory Processing

Speed

All domains are weighted equally (10% each), with 100% meaning achieving parity with a well-educated adult.
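
The equal weighting can be sketched in a few lines. The per-domain proficiencies below are hypothetical placeholders, not the paper's measured scores, and `agi_score` is an illustrative name rather than anything the authors define.

```python
DOMAINS = (
    "General Knowledge",
    "Reading and Writing Ability",
    "Mathematical Ability",
    "On-the-Spot Reasoning",
    "Working Memory",
    "Long-Term Memory Storage",
    "Long-Term Memory Retrieval",
    "Visual Processing",
    "Auditory Processing",
    "Speed",
)
POINTS_PER_DOMAIN = 10.0  # each domain contributes at most 10 percentage points


def agi_score(proficiency: dict) -> float:
    """Equal-weighted score in percent; proficiency maps domain -> value in [0, 1]."""
    missing = [d for d in DOMAINS if d not in proficiency]
    if missing:
        raise ValueError(f"missing domains: {missing}")
    # Clamp each value so no single domain can exceed its 10-point share.
    return sum(
        POINTS_PER_DOMAIN * min(max(proficiency[d], 0.0), 1.0) for d in DOMAINS
    )


# Hypothetical profile: decent everywhere, nothing in long-term memory.
profile = {d: 0.7 for d in DOMAINS}
profile["Long-Term Memory Storage"] = 0.0
profile["Long-Term Memory Retrieval"] = 0.0

print(f"Score: {agi_score(profile):.0f}%")
```

Because every domain is capped at 10 points, a model that scores zero in two domains cannot exceed 80%, however strong it is elsewhere.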

Where do AIs shine? GPT-5 performs best in Mathematical Ability, General Knowledge, and Reading and Writing Ability, each nearing or reaching the upper single digits out of a possible 10% per domain.

And where are AIs really lagging? AIs show their greatest deficits in long-term memory and related foundational cognitive processes, scoring multiple 0% in areas like Long-Term Memory Storage (MS) and Long-Term Memory Retrieval (MR).

This results in the following graph that measures GPT-5’s progress to AGI according to all these areas:

Other weak areas include visual reasoning and cross-modal processing speed. Still, the authors emphasize that memory deficits are the single most significant gap separating current AI from human-level general intelligence.

TheWhiteBox’s takeaway:

The paper is interesting because it lays the foundation for interesting conversations. But the main result, the lack of continual learning, is anything but new.

Modern AI models suffer from “knowledge cutoff” illness; they have a separate, one-off training period, and once that training is finished, they no longer learn anything new.

The issue is that continually training models over time is not only incredibly expensive, but it also causes catastrophic forgetting. In plain English, every time you retrain an AI model, the model is learning something new at the expense of forgetting “something else” we don’t quite know until we use the model.

So, the question here is: how do we create a model that can continually learn, just like humans do, without destroying performance? Humans also forget, over time, the things we don’t refresh often, but the difference is that there’s a set of core knowledge components, core skills, that we never forget.

Except for special cases like Alzheimer’s, we never forget how to walk, how to read, how to speak. Of course, we practice all of them to some extent every day, but no matter how little we read, we don’t forget how to. And key facts of our knowledge are always present too. We don’t simply forget what the US’s capital is.

Protecting those skills and knowledge while we allow models to learn new stuff, adapting to the changing world in front of them, remains one of the key open questions in AI.

HARDWARE

Apple Announces M5 MacBook

Finally, Apple has officially launched the new 14-inch MacBook Pro powered by its M5 chip, marking the company’s next major leap in on-device AI performance.

The M5, fabricated on a 3-nanometer process, integrates a 10-core GPU with a dedicated neural accelerator in each core, boosting AI compute throughput by up to four times compared to the M4.

Fun fact: Long gone are the days when a 3-nanometer process actually meant transistors were 3 nanometers in size. Today, it’s just a marketing label. That said, these transistors are smaller than those on an NVIDIA top-of-the-line Blackwell GPU (4-nanometer), which means we are finally entering the new generation of chips.

Moreover, CPU performance is about 15 percent faster, while graphics tasks see up to a 1.6× improvement. The machine retains the same chassis, display, and port layout as the M4 model but adds faster SSDs (storage), with configurations up to 4 TB, and higher unified memory bandwidth at 153 GB/s.

So, what to make of it?

TheWhiteBox’s takeaway:

The reason I’m mentioning this release at all is that, despite the hardware upgrade, no M5 Pro or Max variants have been announced yet. This signals that the release is primarily a performance boost and, specifically, an AI-centric refresh rather than a complete hardware redesign.

The headline change is the memory bandwidth improvement, which rises to 153 GB/s (up from 120 GB/s on the M4). But why is this important for AI? Well, because it’s the main bottleneck.

While we always mention how much computation AI models require, data movement is what actually determines whether AIs are fast or not, particularly during the inference phase (when people actually use the models).

It’s too long to explain here, but a considerable portion of what goes on inside a GPU during an AI workload is just moving data in and out of memory, during which the cores aren’t computing anything; they are ‘waiting’ for data to arrive. And how much data you can move per second is precisely this memory bandwidth.

In fact, as I mentioned, it’s the main bottleneck. Therefore, if you’re hunting for a computer to run AI models, it’s crucial that you optimize for memory bandwidth above everything else (you also need strong GPU cores, but I really mean it when I say powerful cores are of little use if data movement is too slow, because your cores will sit idle most of the time).
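
A common back-of-the-envelope bound makes this tangible. The sketch below assumes a dense model whose weights are all read from memory once per generated token, for a single user, and it ignores KV-cache traffic, compute time, and software overhead. The 8-billion-parameter model is a hypothetical example; the 153 GB/s figure is the one quoted above.

```python
def decode_tokens_per_second(
    bandwidth_gb_s: float, params_billion: float, bytes_per_param: float
) -> float:
    """Upper bound on single-stream decoding speed when memory-bound.

    Assumes every weight is read once per generated token and nothing
    else limits throughput, so real numbers will be lower.
    """
    model_gb = params_billion * bytes_per_param  # size of the weights in GB
    return bandwidth_gb_s / model_gb


M5_BANDWIDTH_GB_S = 153.0  # unified memory bandwidth quoted above

for label, bytes_per_param in (("16-bit", 2.0), ("8-bit", 1.0), ("4-bit", 0.5)):
    tps = decode_tokens_per_second(M5_BANDWIDTH_GB_S, 8.0, bytes_per_param)
    print(f"8B model, {label} weights: at most ~{tps:.1f} tokens/s")
```

Under these assumptions, doubling the bandwidth doubles the ceiling, while adding faster cores does not move it at all, which is why bandwidth is the number to watch.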

Strategically speaking, Apple is hinting that the next generation of Apple M5 computers, the Pro and Max, will likely be the most powerful AI consumer hardware on the planet, meaning Apple is positioning itself to be the leader in AI consumer hardware, a pretty niche market these days, but one that I believe will become very large in the future.

And talking about consumer-end hardware…

HOME GPUs

NVIDIA Finally Ships the DGX Spark, Its First ‘Home GPU’

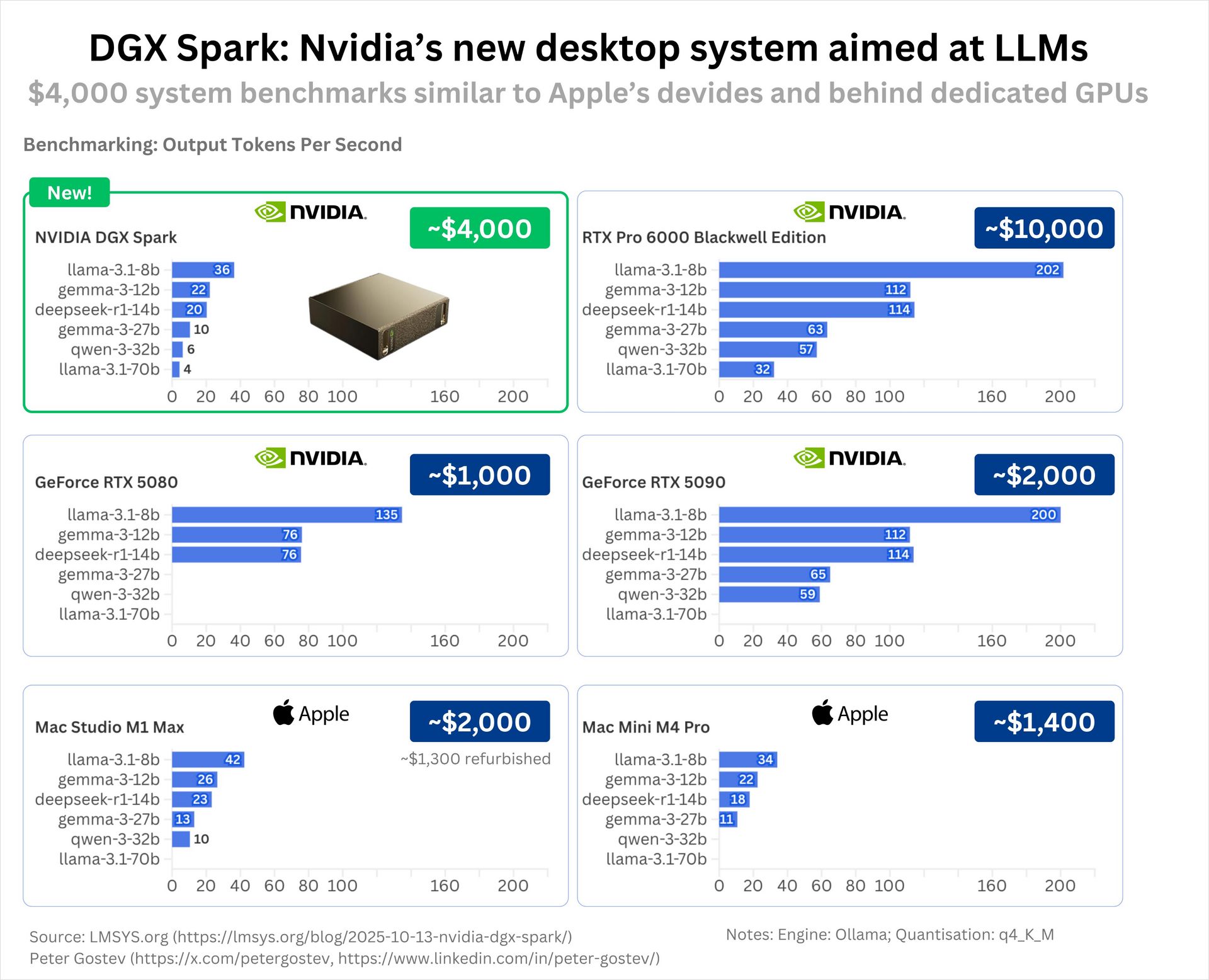

At last, we finally have NVIDIA’s first AI-native home GPU available, for the “cheap” price of $4,000. This little box (almost fits in a normal-sized hand) packs 100 TeraFLOPs of compute power, four times as much as top Apple hardware.

But is this hardware any good? Well, depends on how you use it.

TheWhiteBox’s takeaway:

The biggest issue is the very poor memory bandwidth of 273 GB/s, around 120 GB/s more than Apple’s new MacBook we have just discussed, but well below other consumer hardware like the very laptop I’m using to write these words, the M3 Max MacBook Pro, a 2023 computer that rocks 540+ GB/s. Needless to say, it trails data center-grade GPUs by a huge margin, as these are around 8,000 GB/s at this point.

In fact, LMSYS ran an analysis and the results are quite disheartening: models run considerably slower than on more affordable GPUs like NVIDIA’s own RTX 5090, an older (and cheaper) card aimed primarily at video games. They went as far as creating the fascinating chart below, which compares performance across models and GPUs.

So, is this a rare NVIDIA flop? Well, not quite. You see, all of the models being tested above are being tested for inference, not training. And it’s in training where this beast shines.

The reason for this is that, as we explained in the Mac story above, AI inference requires significant data movement, which is not ideal for a piece of hardware with “just” 273 GB/s of memory bandwidth.

But luckily, training is much more “compute-heavy,” meaning that much less data has to be moved per unit of computation, which is perfect for a product so powerful computation-wise.

In fact, in tests by Exo Labs, the number of tokens this product processes per second multiplies by four if you run it in computation-heavy regimes like training or inference prefill.
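
A simple roofline estimate shows why the two regimes behave so differently. This is a rough sketch, not a benchmark: it uses the 100 TeraFLOPs and 273 GB/s figures quoted in this story, assumes 16-bit weights, and relies on the common approximation of about 2 FLOPs per parameter per token for a dense model, ignoring attention and activations.

```python
PEAK_FLOPS = 100e12      # 100 TeraFLOPs, as quoted for the DGX Spark
MEM_BANDWIDTH = 273e9    # 273 GB/s, as quoted above


def intensity(tokens_per_weight_read: int, bytes_per_param: float = 2.0) -> float:
    """FLOPs performed per byte of weights read.

    Approximation: ~2 FLOPs per parameter per token for a dense model.
    """
    return 2.0 * tokens_per_weight_read / bytes_per_param


def attainable_flops(ai: float) -> float:
    """Roofline model: throughput is capped by compute or by memory traffic."""
    return min(PEAK_FLOPS, ai * MEM_BANDWIDTH)


# Intensity at which the hardware stops being limited by memory.
ridge = PEAK_FLOPS / MEM_BANDWIDTH

for name, tokens in (("decode, 1 token per pass", 1), ("prefill, 1,024 tokens per pass", 1024)):
    ai = intensity(tokens)
    regime = "compute-bound" if ai >= ridge else "memory-bound"
    print(f"{name}: {ai:.0f} FLOPs/byte, {regime}, "
          f"{attainable_flops(ai) / 1e12:.2f} TFLOPs attainable")
```

In this simplified picture, generating one token at a time leaves almost all of the compute idle, while processing many tokens per pass (as in prefill or training) pushes the workload past the ridge point, where the cores rather than memory become the limit.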

But here’s the thing. Even for training, it underperforms other, more accessible NVIDIA GPUs like the RTX 5090, so the question here is: to whom is this product targeted?

Perhaps its smallish physical size is excellent for researchers training models while they are on the move (an RTX 5090 is much larger and not precisely ergonomic).

It has 128 GB of memory, meaning that even if the memory is slow, it’s huge and crucial for running large models or processing many tokens.

Overall, if not for the memory bandwidth, it would be an instant buy for any researcher. That said, I believe most will stick with buying RTX 5090s or wait for the Apple M5 Max and call it a day.

VIDEO MODELS

Google Releases Veo 3.1

As we hinted in our Tuesday Premium newsletter, Google has released Veo 3.1, its newest video generation model, and the results speak for themselves. It’s absurdly impressive. Among the things it can do:

Combine backgrounds, people, and objects into a coherent scene, including audio

Take a first and a final frame and fill in the gap between them

Extend short clips with new frames

The model seems to be leaning heavily into the creative side, meaning you can create implausible scenes that feel real, similar to what Sora 2 does.

TheWhiteBox’s takeaway:

Nobody is on Google DeepMind’s level when it comes to pure AI (not counting the product on top). Google’s “issue” is its go-to-market strategy; it doesn’t have the product taste that OpenAI’s Sam Altman has mastered like no one else.

We still do not know how this model ranks on benchmarks, however. But considering Veo 3 was the state-of-the-art, it’s probably safe to assume this is the new SOTA.

Closing Thoughts

A packed week full of varied news, from new top models to more infrastructure deals to new consumer-end hardware releases.

But perhaps the biggest takeaway for me is that, despite skepticism, top AI Labs are displaying impressive revenue growth, a sign that the technology works. The question here is: will revenue grow fast enough?

Or will hardware and infrastructure spending grow so large, so fast, that investors lose faith in AI making solid returns?

Nobody knows the answer to that question, but I will say: while I understand the pressure to get a return fast, I’d rather see AI used to cure cancer than to create cancer (social media AI apps like Sora) just to make money faster.

Some AI revolutions are like a hot stew on a cold Winter night; they need time to cook, but are worth the wait.
