🏝 TheTechOasis 🏝

Talking to an AI chatbot like ChatGPT that generates impressive text is really cool.

Now, talking to an AI chatbot that generates text AND images is way cooler… and something ChatGPT doesn’t offer.

But GILL, Carnegie Mellon University’s new AI chatbot, does.

And you can see it with your own eyes below:

GILL is the first-ever AI chatbot capable of both understanding and producing images and text.

And as if being a first in the most innovative industry in the world weren’t impressive enough, it was also cheap as hell to train.

But how does GILL work?

When Text Meets Images

Creating an AI model that works well in one modality (text, images, etc.) is already pretty challenging. But creating a model that works across several modalities is something rarely seen.

In fact, when GPT-4 was presented to the world, everyone was amazed that a single model could understand both text and images.

And even though its image-processing features haven’t been released yet, GPT-4 is only multimodal when it comes to processing data, not generating it.

GILL, on the other hand, can not only process both modalities but also generate both, as seen in the previous GIF.

But to achieve this, the researchers had to overcome an important challenge.

Making Images and Text ‘Talk’

Even though we humans are capable of understanding both images and text naturally, this isn’t an easy concept to explain to machines.

To do so, we transform text and images into vectors, called embeddings, that capture their meaning in a form machines can process.

These embeddings represent the model’s understanding of our world: vectors for similar concepts are grouped together, while those for unrelated concepts are kept apart.

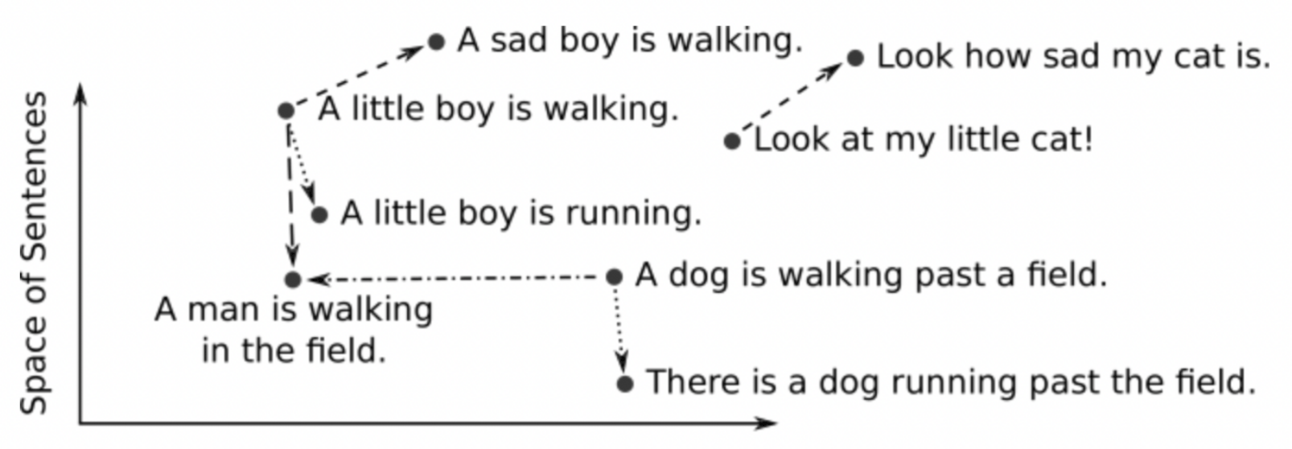

For instance, in the visual example above of a text-only embedding space like the one ChatGPT has, “A little boy is walking” and “A little boy is running” sit close together, while both are far from “Look how sad my cat is”.
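To make “close together” concrete, here is a minimal Python sketch that measures closeness with cosine similarity, a common choice for comparing embeddings. The three-dimensional vectors are made up for illustration (real models use hundreds or thousands of dimensions), so the exact numbers mean nothing; only the ordering matters.

```python
import math

# Hypothetical, hand-picked 3-dimensional embeddings (illustration only).
EMBEDDINGS = {
    "A little boy is walking": [0.9, 0.8, 0.1],
    "A little boy is running": [0.85, 0.9, 0.15],
    "Look how sad my cat is": [0.1, 0.2, 0.95],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def most_similar(query, embeddings):
    """Return the other sentence whose embedding points closest to the query's."""
    q = embeddings[query]
    others = [s for s in embeddings if s != query]
    return max(others, key=lambda s: cosine_similarity(q, embeddings[s]))


if __name__ == "__main__":
    walking = EMBEDDINGS["A little boy is walking"]
    for sentence, vec in EMBEDDINGS.items():
        print(f"{cosine_similarity(walking, vec):.3f}  {sentence}")
```

With these toy values, the “running” sentence scores about 0.99 against “walking”, while the cat sentence scores about 0.29.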

If you ever wondered how machines understand our world, there you have it.

But the problem is that every model has its own representation of our world, which means that ChatGPT’s embeddings (text-to-text) aren’t, for instance, usable by Stable Diffusion (text-to-image).

This is a problem because if we want a model to process and generate multiple modalities at once, we need a single embedding space shared by all of them.
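Both halves of the problem, incompatible coordinates and a learned map that bridges them, can be illustrated with a small NumPy sketch. Everything here is hypothetical: “model B’s” space is simulated as a random rotation of “model A’s”, which makes a linear map a perfect fix. Real embedding spaces differ in messier ways, and learned mappings are only approximate.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical setup: two models embed the same 5 concepts in 4 dimensions.
# Model B's space is simulated as a random rotation of model A's, so both
# encode the same relationships, just in different coordinates.
emb_a = rng.normal(size=(5, 4))
rotation, _ = np.linalg.qr(rng.normal(size=(4, 4)))  # random orthogonal matrix
emb_b = emb_a @ rotation


def cosine(u, v):
    return float(u @ v / (np.linalg.norm(u) * np.linalg.norm(v)))


# Within each space, concept 0 vs. concept 1 has the same similarity...
within_a = cosine(emb_a[0], emb_a[1])
within_b = cosine(emb_b[0], emb_b[1])

# ...but comparing concept 0 across spaces is meaningless: the "same"
# concept does not, in general, land on the same vector.
across = cosine(emb_a[0], emb_b[0])

# A linear map fitted on paired examples (least squares) translates A -> B.
W, *_ = np.linalg.lstsq(emb_a, emb_b, rcond=None)
mapped = emb_a @ W
aligned = cosine(mapped[0], emb_b[0])

print(f"within A: {within_a:.3f}, within B: {within_b:.3f}")
print(f"across spaces: {across:.3f}, after mapping: {aligned:.3f}")
```

The design choice worth noting is that the map is learned from pairs of embeddings of the same concepts; once fitted, it can translate new vectors from one space into the other.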

And that’s precisely what GILL does.

When Maps Are The Solution

GILL does five things:

Process text

Process images

Generate text

Generate images

Retrieve images

To do this, it needs to be capable of:

Processing images and text simultaneously

Deciding when to output text or an image

When outputting an image, deciding whether to generate it or retrieve it from a database

To pull this off, the researchers did the following:

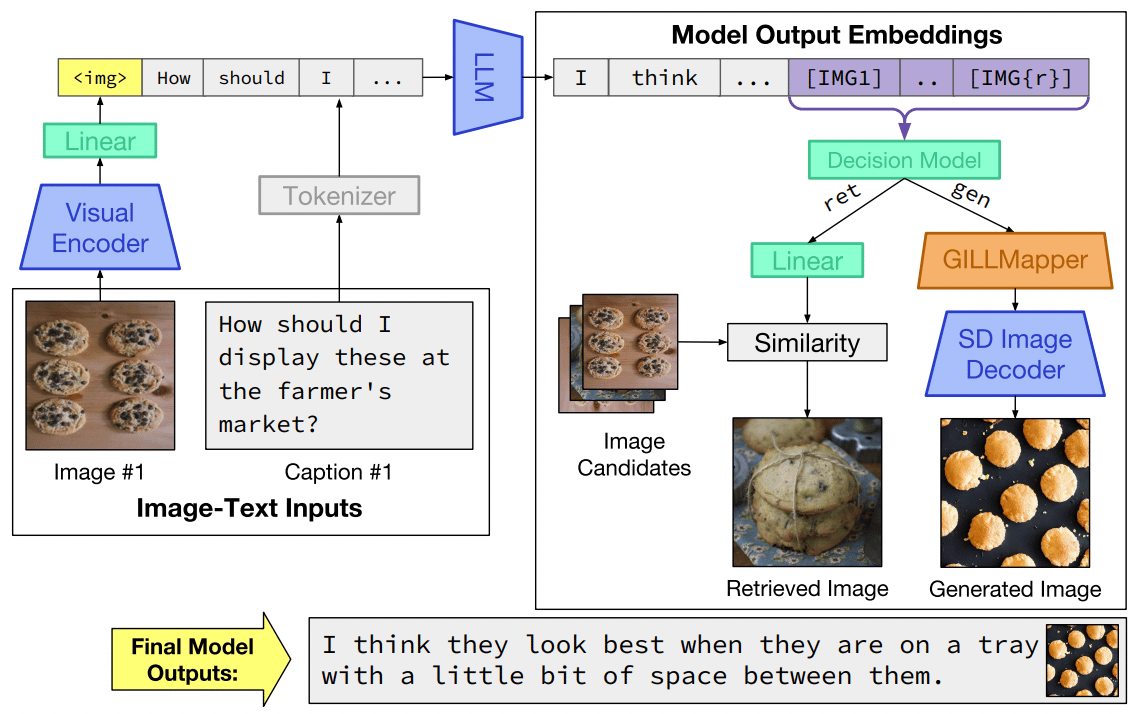

To process images and/or text, they learned a transformation matrix that maps image embeddings into embeddings the text-only LLM can understand.
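One way to picture that transformation matrix is as a linear projection that turns a single image embedding into a few “visual token” vectors in the LLM’s input space, which are then placed in the same sequence as the text tokens. The NumPy sketch below assumes the image has already been encoded into a fixed-size vector; the sizes, the `K_TOKENS` count, and the random weights are all hypothetical stand-ins for trained values.

```python
import numpy as np

# Hypothetical sizes for illustration only.
D_IMAGE = 16   # size of the image encoder's output embedding
D_TEXT = 32    # size of the LLM's token embeddings
K_TOKENS = 4   # number of "visual tokens" each image becomes

rng = np.random.default_rng(42)

# The transformation matrix (random here; it would be trained in practice).
W_proj = rng.normal(scale=0.02, size=(D_IMAGE, K_TOKENS * D_TEXT))


def image_to_tokens(image_embedding):
    """Map one image embedding to K_TOKENS vectors in the LLM's input space."""
    return (image_embedding @ W_proj).reshape(K_TOKENS, D_TEXT)


def build_llm_input(image_embedding, text_token_embeddings):
    """Put the image's visual tokens before the text tokens in one sequence."""
    visual_tokens = image_to_tokens(image_embedding)
    return np.concatenate([visual_tokens, text_token_embeddings], axis=0)


image_emb = rng.normal(size=D_IMAGE)       # stand-in for an image encoder output
prompt_emb = rng.normal(size=(6, D_TEXT))  # stand-in for 6 embedded text tokens
sequence = build_llm_input(image_emb, prompt_emb)
print(sequence.shape)  # (10, 32)
```

From the LLM’s point of view, the image now looks like four extra “words” at the start of the prompt, which is what lets a text-only model reason about it.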

Next, they enhanced the LLM so that it could not only generate text but also signal when an image was required.
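Signaling “an image goes here” can be thought of as the LLM emitting a special token alongside ordinary words. The sketch below assumes a single hypothetical marker, `[IMG]`, and shows how a response could be split into text segments and image slots to be filled later; the real model’s token scheme may differ.

```python
# Hypothetical special token the LLM is taught to emit where an image belongs.
IMG_TOKEN = "[IMG]"


def split_response(tokens):
    """Split generated tokens into ordered ("text", str) and ("image", None) parts."""
    parts = []
    buffer = []
    for tok in tokens:
        if tok == IMG_TOKEN:
            if buffer:  # flush any text collected before the image
                parts.append(("text", " ".join(buffer)))
                buffer = []
            parts.append(("image", None))  # placeholder to be filled later
        else:
            buffer.append(tok)
    if buffer:  # flush trailing text
        parts.append(("text", " ".join(buffer)))
    return parts


generated = ["Stack", "them", "like", "this:", IMG_TOKEN, "Enjoy!"]
print(split_response(generated))
# [('text', 'Stack them like this:'), ('image', None), ('text', 'Enjoy!')]
```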

When the model signals that it’s time to output an image, a decision module they developed considers how naturally the required image would occur (that is, how likely it is to already exist) and decides whether to retrieve it from a database or generate it with Stable Diffusion.

In simple terms, if you asked GILL for a “pig with wings”, the model would understand that the image had to be generated, since such an image doesn’t exist.
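The retrieve-or-generate choice can be approximated with a simple rule: look for the closest stored image, and generate only if nothing is close enough. This is a swapped-in heuristic, not GILL’s actual decision module; the tiny database, its made-up embeddings, and `RETRIEVAL_THRESHOLD` are all hypothetical.

```python
import math

# Hypothetical image database: caption -> embedding (values are made up).
IMAGE_DB = {
    "cookies stacked on a plate": [0.9, 0.1, 0.2],
    "a pig in the mud": [0.2, 0.9, 0.1],
    "a bird flying": [0.1, 0.2, 0.9],
}

# Hypothetical cut-off: below this similarity, no stored image is "good enough".
RETRIEVAL_THRESHOLD = 0.95


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def decide(query_embedding):
    """Return ("retrieve", caption) if a close match exists, else ("generate", None)."""
    best = max(IMAGE_DB, key=lambda c: cosine(query_embedding, IMAGE_DB[c]))
    if cosine(query_embedding, IMAGE_DB[best]) >= RETRIEVAL_THRESHOLD:
        return ("retrieve", best)
    return ("generate", None)


print(decide([0.88, 0.12, 0.22]))  # very close to the cookies image -> retrieve
print(decide([0.1, 0.7, 0.7]))     # "pig with wings": part pig, part flying -> generate
```

The “pig with wings” query lands between the pig and the bird images without being close to either, so the rule falls back to generation, mirroring the intuition in the text.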

Finally, if it had to generate an image, they used GILLMapper, a neural network that transforms the LLM’s text embeddings into embeddings that the text-to-image model, Stable Diffusion, can understand.
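A mapper like this takes the LLM’s hidden states for the image tokens and reshapes them into the conditioning sequence a text-to-image model expects. The NumPy sketch below is a deliberately tiny stand-in: a per-token MLP followed by a linear mix from the LLM’s token count to the conditioning length. All sizes and weights are hypothetical, a real mapper would likely be larger (for example, a small transformer), and the mean-squared-error objective against a target encoding is an assumed training signal, not a confirmed detail.

```python
import numpy as np

# Hypothetical sizes, kept tiny for illustration.
R_TOKENS = 4   # image-token hidden states taken from the LLM
D_LLM = 32     # LLM hidden size
L_COND = 8     # sequence length of the text-to-image model's conditioning
D_COND = 16    # embedding size of that conditioning

rng = np.random.default_rng(7)

# Randomly initialized parameters; in practice these would be trained.
W1 = rng.normal(scale=0.1, size=(D_LLM, 64))
W2 = rng.normal(scale=0.1, size=(64, D_COND))
MIX = rng.normal(scale=0.1, size=(L_COND, R_TOKENS))  # R tokens -> L positions


def mapper(hidden_states):
    """Map (R_TOKENS, D_LLM) LLM states to (L_COND, D_COND) conditioning."""
    h = np.maximum(hidden_states @ W1, 0.0)  # per-token MLP with ReLU
    per_token = h @ W2                       # (R_TOKENS, D_COND)
    return MIX @ per_token                   # (L_COND, D_COND)


def mse(pred, target):
    """Mean squared error: an assumed objective for matching target embeddings."""
    return float(np.mean((pred - target) ** 2))


llm_states = rng.normal(size=(R_TOKENS, D_LLM))
target = rng.normal(size=(L_COND, D_COND))  # stand-in for the caption's encoding
cond = mapper(llm_states)
print(cond.shape, mse(cond, target))
```

Training would adjust `W1`, `W2`, and `MIX` to push this loss down, so that the mapper’s output becomes something the image generator can use in place of an encoded text prompt.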

Et voilà.

As this is a lot to take in, let’s walk through an example:

Left side of the image:

The model receives an image of several cookies and the request “How should we display them?”

Then, the model takes the image, transforms it as described earlier, and feeds it to the LLM alongside the text embedding of the request

Right side:

The LLM, GILL’s core, outputs a response that includes not only text but also an image

GILL’s decision module then decides whether to retrieve a suitable image or generate a new one

In this case, it judges that the retrieved image (stacked cookies) isn’t the best option and generates a new one instead

Using GILLMapper, the LLM’s output image tokens are transformed into a valid image embedding for Stable Diffusion (SD in the image), which then generates the final image

Finally, GILL gives its answer, “I think they look best… between them.”, accompanied by the generated image

And just like that, you have the next generation of conversational chatbots: AI chatbots that can both understand and generate images and text, offering an experience similar to chatting with your friends and family.

GILL is just the beginning.

Key AI concepts you’ve learned by reading this newsletter:

- Multimodal generation

- Embedding space

- Text-and-image chatbots

👾Top AI news for the week👾

🔐 OpenAI launches a million-dollar grant to empower teams looking to build AI-based cybersecurity solutions

😳 Entering the creepy world of AI deepfakes that bring crime victims to life

🤖 Ex-OpenAI employee’s startup shows video of its newest robot home butler

🔷 Largest Turing test ever shows chatbots still aren’t at a human level

🤩 A new open-source king, the Falcon LLM

🕹 When gaming and AI collide. Watch NVIDIA’s new video demo where you can talk to the characters in the game… with your own voice

😣 Many AI leaders and other prominent figures worldwide sign a statement on AI risk and the need to mitigate it