THEWHITEBOX

TLDR;

Welcome back! This week, we take a look at new models and product releases, especially Gemini 3.1 Pro; new billion-dollar investment rounds; new hardware that spits out tokens at 16,000 per second; the curious case of Korean stocks, crucial to AI but “ignored” by the rest of the world; and the latest example of a company that might try to use AI to bring people back from the dead.

Enjoy!

THEWHITEBOX

Google Drops Gemini 3.1 Pro

Google has released Gemini 3.1 Pro as an upgraded “core intelligence” model. It said the rollout starts immediately across multiple surfaces:

for developers in preview via the Gemini API in Google AI Studio (and also via Gemini CLI, Antigravity, and Android Studio),

for enterprises via Vertex AI and Gemini Enterprise,

and for consumers via the Gemini app and NotebookLM.

On performance, Google highlighted improved reasoning and reported a verified 77.1% score on ARC-AGI-2, describing that as more than double the reasoning performance of “3 Pro,” and superior to all other models available today.

Here’s an example of a very impressive use case.

Google frames the model as “intelligence applied,” pointing to examples such as generating code-based animated SVGs from text prompts, synthesizing complex systems into dashboards (including an ISS-orbit visualization from public telemetry), building interactive 3D experiences, and translating literary themes into functional website code.

TheWhiteBox’s takeaway:

Overall, the reception seems to be “good” but not “great”, with some experts calling it a “previous generation model” compared to others like GPT-5.3 Codex or Claude Opus 4.6.

However, it seems to have improved in meaningful ways. For instance, at least according to Artificial Analysis, its hallucination rate, a very relevant issue with past models, has dropped tremendously, from 88% to 50%:

It also takes the top spot in the overall intelligence index:

Naturally, as I always say, benchmarks only take you so far, and real usage will determine whether this model is worthy of the position benchmarks put it in.

Specifically, the key is whether this model can make Google’s coding products as useful and as widely used as Anthropic’s Claude Code or OpenAI’s Codex, which seems to be the killer use case, at least in terms of revenue growth.

THEWHITEBOX

Rork Launches Rork Max

The start-up Rork has introduced Rork Max, a platform focused on creating Apple apps that look absolutely incredible.

It’s basically a vibe-coding app, similar to Claude Code or Codex, but focused exclusively on mobile applications, allowing you to create games and other apps not only for iPhone but also for the Apple Watch, iPad, and even Apple TV.

The video above is not just a teaser but an actual walkthrough (more or less) of how one of the team members builds a copy of Pokémon Go. Quite special.

TheWhiteBox’s takeaway:

Remember when I was saying just two weeks ago that the acceleration was coming? Well, here’s another example for you.

Again, this doesn’t promise full-stack apps in one shot, but always think not of the present, but of what we could be capable of doing six months from now.

One of my predictions for 2026 was that anyone who wanted to could be a digital builder by the end of this year, and my prediction is looking very good.

But as I always insist: AI empowers those who want to be empowered. It’s not like AI is going to turn you into a builder without you committing to it.

The biggest gap AI creates is one of action, separating those who truly enjoy creating stuff from those who simply like to use what others build.

Of course, an important disclaimer: please use these apps with care. Today, unless you know what you’re doing, they are just a way to build cool products for yourself, and you should be very careful with what you release onto the Internet. These AIs still make rookie security mistakes, so you shouldn’t push anything to production without a human developer’s supervision.

SOCIAL MEDIA

Writing From the Grave

Business Insider reports that Meta has been granted a patent (granted in late December; filed in 2023) describing an AI system that could “simulate” a user’s social-media activity when they’re absent, including if they take a long break, pause an account, or die.

The idea is to train a large language model (LLM) on user-specific historical activity (posts, likes, comments, messages) so it can keep interacting (e.g., liking, commenting, replying to DMs), and the patent also mentions the possibility of simulated audio/video calls. Gnarly.

Meta told Business Insider it has “no plans to move forward”, but…

TheWhiteBox’s takeaway:

Trivializing death wasn’t on my bingo card for 2026, but here we are. The worst thing is that this product would probably do well; some people can get really attached to these AIs. But the immorality is just on another level.

I get the sense that Meta’s leadership, while made up of brilliant operators (the company is very well run), has been consistently wrong about what society needs or wants.

They got the Metaverse embarrassingly wrong, and they are getting social media wrong if they believe AI-run accounts will make the experience better. If anything, I don’t think Meta realizes that an AI-heavy social media platform loses its entire purpose.

There’s a reason people are pulling out of LinkedIn: it has become a competition to see who gets to publish the most AI-generated blog posts. It’s just unbearable.

This feature would only work on people so desperate for connection that anyone providing the service could only feel an enduring sense of taking advantage of them.

Terrible, terrible use of the technology if it ever materializes. Hope it doesn’t.

MUSIC

Google Releases Music Model Lyria

Google has announced Google DeepMind’s Lyria 3. You can create a 30-second track by typing a prompt (genre, mood, joke, memory, etc.) or by uploading a photo/video for inspiration, and Gemini also generates matching cover art for sharing.

They highlight three improvements in Lyria 3:

it can generate lyrics for you,

gives more control over elements like style/vocals/tempo,

and produces more realistic, musically complex tracks.

Google also says every generated track is embedded with SynthID (an imperceptible watermark), and that Gemini’s verification tools can check uploaded audio for SynthID (alongside existing image/video checks). This should make AI-generated music immediately identifiable through its watermark, even if it sounds identical to human music.
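Google doesn’t say how SynthID embeds its mark in audio, so what follows is not SynthID. It is a minimal sketch of the general idea behind imperceptible watermarks, assuming a classic spread-spectrum scheme: add a faint pseudo-random pattern derived from a secret key, then detect it later by correlating the audio with that same pattern. The key, strength, and threshold values are illustrative assumptions, and random noise stands in for music.

```python
import random


def key_pattern(key: int, n: int) -> list[float]:
    """Pseudo-random +1/-1 pattern derived from a secret key (hypothetical scheme)."""
    rng = random.Random(key)
    return [rng.choice((-1.0, 1.0)) for _ in range(n)]


def embed(samples: list[float], key: int, strength: float = 0.05) -> list[float]:
    """Add a faint copy of the key pattern to the samples."""
    pattern = key_pattern(key, len(samples))
    return [s + strength * p for s, p in zip(samples, pattern)]


def detect(samples: list[float], key: int, threshold: float = 0.025) -> bool:
    """Correlate the samples with the key pattern; a high score means 'watermarked'."""
    pattern = key_pattern(key, len(samples))
    score = sum(s * p for s, p in zip(samples, pattern)) / len(samples)
    return score > threshold


# Stand-in for a track: unit-variance Gaussian noise, far louder than the mark.
rng = random.Random(0)
audio = [rng.gauss(0.0, 1.0) for _ in range(100_000)]
marked = embed(audio, key=42)

print("watermarked track detected:", detect(marked, key=42))
print("clean track detected:", detect(audio, key=42))
```

Without the key, the added pattern is just faint noise; with it, detection is a single correlation. Real audio watermarks must also survive compression, re-recording, and edits, which this toy ignores.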

Lyria 3 is available in English, German, Spanish, French, Hindi, Japanese, Korean, and Portuguese; it’s rolling out on desktop first, then on mobile over the next several days, with higher usage limits for Google AI Plus/Pro/Ultra subscribers.

TheWhiteBox’s takeaway:

As I wrote recently, it’s very tempting to catastrophize when you see this. The end of musicians, right? Well, actually, the end of “mediocre musicians.”

Recall that AIs struggle to learn in any area where defining what’s good or bad is hard. In fact, the hardest thing is to optimize for greatness: differentiating good music from great music.

That is, AIs can learn to imitate every genre you can imagine, which is pretty much achieved at this point. But the hardest part, getting a model that creates great music, is not a mathematical equation one can simply optimize against.

After all, what makes a particular piece of music great? We don’t have an explicit answer to that, and thus we can’t optimize for it.

What I mean by this is that there’s still going to be plenty of room for human greatness: music that no AI can achieve on its own, only imitate.

And apart from that, humans also look for connection; sometimes, music is just the excuse. Nobody will go to a concert to see a robotic singer, but I have no doubt in my mind that Taylor Swift will continue to fill stadiums 20 years from now.

I wrote an article on the deeper societal implications of AI content at scale and why AIs aren’t yet remarkable (and might never be) in certain domains like writing or music.

FRONTIER INTELLIGENCE

New AI Lab Raises $1 Billion

You may wonder, how does a new AI Lab get $1 billion in funding in 2026? Isn’t the game already decided between OpenAI, Anthropic, DeepMind, etc.?

It turns out some researchers think not (and I agree). The man behind this new AI Lab, Ineffable Intelligence, is none other than David Silver, one of the key figures behind Google DeepMind’s success. But potentially, and I’m only speculating here, the reason he got so much money has more to do with the approach he’ll follow than with his track record.

TheWhiteBox’s takeaway:

Nothing reveals more about what he’s trying to do than his ‘Welcome to the Era of Experience’ paper, which he released last year with Rich Sutton, one of the founding fathers of modern AI (he helped pioneer the Reinforcement Learning paradigm, which is all the rage today, back in the 1980s).

In fact, that influence is all but confirmed: Rich Sutton himself has claimed that Silver’s new Lab will fulfil the era of experience.

And what does this paper suggest?

Currently, AIs are episodic engines: models that live in the current interaction but do not carry what they learned from it into future interactions.

Careful, current models can bring context from past conversations to new ones, but that’s not the same thing; it’s as if you carried over a diary of past interactions to consult on every new interaction you have.

Instead, real experience is about learning from the past; it’s not about keeping a cheating diary, but about having your brain learn from that feedback and perform better in new situations without having to recall every single instance from the past.

In short, an AI that actually learns from experience.

Which is to say, what Silver seems to be proposing is an experience feedback loop: act → receive feedback → optimize → act again. This is a fundamentally different behavior from what ChatGPT or Claude represent today, which is more like act → copy into context → act. The latter also involves learning between interactions, but this learning is ephemeral and forgotten once it is out of context.
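The difference between the two loops can be sketched as a toy bandit problem. Everything here is an assumption for illustration (three actions with made-up success rates, five-step episodes), and it is not how Silver’s lab or any LLM actually works: one agent keeps what it learns across episodes, while the other only consults a per-episode diary that is wiped when the episode ends.

```python
import random

# Hypothetical environment: three actions with success rates the agents don't know.
SUCCESS_RATES = [0.2, 0.5, 0.8]


def feedback(action: int, rng: random.Random) -> float:
    """Environment reward: 1.0 on success, 0.0 otherwise."""
    return 1.0 if rng.random() < SUCCESS_RATES[action] else 0.0


class ExperienceAgent:
    """act -> receive feedback -> optimize -> act again.
    Value estimates (a stand-in for model weights) persist across episodes."""

    def __init__(self, n_actions: int, lr: float = 0.1, epsilon: float = 0.1, seed: int = 1):
        self.values = [1.0] * n_actions  # optimistic start so every action gets tried
        self.lr = lr
        self.epsilon = epsilon
        self.rng = random.Random(seed)

    def act(self) -> int:
        if self.rng.random() < self.epsilon:  # occasional exploration
            return self.rng.randrange(len(self.values))
        return max(range(len(self.values)), key=lambda a: self.values[a])

    def update(self, action: int, reward: float) -> None:
        # Move the estimate toward the observed reward; this learning is kept.
        self.values[action] += self.lr * (reward - self.values[action])

    def end_episode(self) -> None:
        pass  # nothing is forgotten between episodes


class ContextAgent:
    """act -> copy into context -> act.
    Learns only from a per-episode diary that is wiped when the episode ends."""

    def __init__(self, n_actions: int):
        self.n_actions = n_actions
        self.diary: list[tuple[int, float]] = []

    def act(self) -> int:
        tried = {a for a, _ in self.diary}
        for a in range(self.n_actions):
            if a not in tried:
                return a  # has to re-explore after every reset

        def average(a: int) -> float:
            rewards = [r for prev, r in self.diary if prev == a]
            return sum(rewards) / len(rewards)

        return max(range(self.n_actions), key=average)

    def update(self, action: int, reward: float) -> None:
        self.diary.append((action, reward))  # "copy into context"

    def end_episode(self) -> None:
        self.diary.clear()  # the context is gone


def run(agent, episodes: int = 1000, steps: int = 5, seed: int = 0) -> float:
    """Average reward per step over the last 200 episodes."""
    env_rng = random.Random(seed)
    late_rewards = []
    for ep in range(episodes):
        for _ in range(steps):
            action = agent.act()
            reward = feedback(action, env_rng)
            agent.update(action, reward)
            if ep >= episodes - 200:
                late_rewards.append(reward)
        agent.end_episode()
    return sum(late_rewards) / len(late_rewards)


print(f"experience agent: {run(ExperienceAgent(3)):.2f} reward per step")
print(f"context agent:    {run(ContextAgent(3)):.2f} reward per step")
```

The context agent has to rediscover the good action in every episode, while the experience agent pays that exploration cost once and then mostly exploits what it learned, which is the gap the ‘era of experience’ argument is about.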

Crucially, this ability to learn from experience might allow models to be smaller, since they wouldn’t have to retrieve solutions from memory (one of the reasons larger models are better is that they store and can recall more stuff).

In a sense, it’s more about having an AI model that doesn’t have to know every single fact in human history, but can suggest possible good solutions, test them, measure feedback, optimize, and repeat, similar to how humans work.

THEWHITEBOX

The Korean Discount

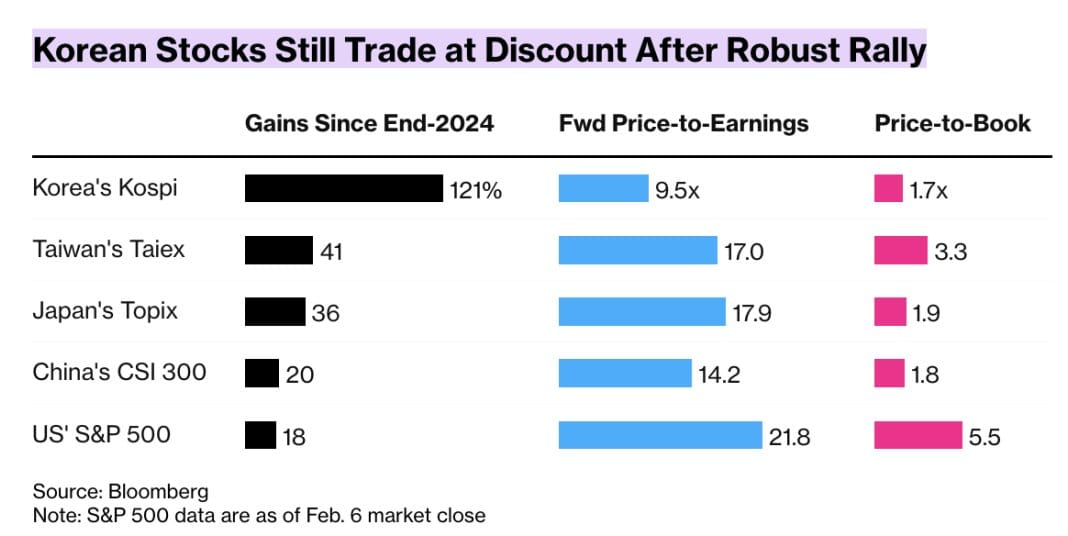

Markets are not rational. We both know that. But one of the prime examples of this irrationality is the Korean discount: companies in the Kospi index, such as Samsung and SK Hynix, trade at a considerable discount relative to similar companies in other markets.

Look at the four companies below, two Korean, two from the US:

Samsung: 7.9x forward P/E

SK Hynix: 5.7x forward P/E

Micron: 10.3x forward P/E

Western Digital: 24.9x forward P/E

These four companies do basically the exact same thing: memory and storage (Western Digital only does storage).

Samsung and SK Hynix are not only more profitable than the other two but also have larger market shares. Yet the other two trade in the US, whose index, as you can see in the thumbnail above, has an average forward price-to-earnings multiple 2.5x higher than the Korean index’s.
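To make the gap concrete, here is a quick calculation using only the four forward P/E figures above. It assumes, purely for illustration, that earnings stay flat and only the multiple changes, and the pair averages are simple two-stock means, not the index-level figures from the thumbnail.

```python
# Forward P/E multiples quoted above.
forward_pe = {
    "Samsung": 7.9,
    "SK Hynix": 5.7,
    "Micron": 10.3,
    "Western Digital": 24.9,
}


def rerating_upside(current_pe: float, target_pe: float) -> float:
    """Price change implied if earnings stay flat and only the multiple moves."""
    return target_pe / current_pe - 1.0


korean_avg = (forward_pe["Samsung"] + forward_pe["SK Hynix"]) / 2
us_avg = (forward_pe["Micron"] + forward_pe["Western Digital"]) / 2
print(f"Korean pair average: {korean_avg:.1f}x, US pair average: {us_avg:.1f}x")

upside = rerating_upside(forward_pe["SK Hynix"], forward_pe["Micron"])
print(f"SK Hynix re-rated to Micron's multiple: {upside:+.0%}")
```

On these numbers, SK Hynix would have to rise about 81% just to trade at Micron’s multiple, with no change in earnings.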

The main reason for this is liquidity, and ironically, a self-inflicted wound from Korean regulators, who make it quite hard to invest in Korean stocks.

For example, in these two particular cases, the only way most international investors can currently invest in SK Hynix and Samsung is through their GDRs (Global Depositary Receipts), listings these companies have on non-Korean exchanges ($HY9H for Hynix, in Frankfurt, and $SMSN for Samsung, in London). Luckily, this will soon change, as Korean regulators have finally approved Interactive Brokers (and other international brokers) to allow purchases of Korean stocks.

So with these stocks, we could have a combination of two things that could send them on their way up:

Once they become more accessible to the average investor, liquidity will pile in

If demand guidance for 2028 onward materializes, we should see a repricing from multiples on book value to multiples on earnings

The first one is self-explanatory, but the second one requires some explanation. Currently, the sentiment around memory stocks is that their business is cyclical: good in healthy times, bad in downturns.

When that’s the case, you can’t just apply a multiple to earnings or use methods like DCF (Discounted Cash Flow), because they require looking into the future and assuming future demand is predictable.

Instead, investors value these stocks relative to their book value (total assets - total liabilities), i.e., what the company actually owns net of liabilities, since current earnings are not indicative of future ones.
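Here is a minimal sketch of the two valuation approaches, with entirely made-up figures for a hypothetical memory maker. The DCF uses a Gordon-growth terminal value, one common convention among several, and the point is the contrast: book value is a fixed number from the balance sheet, while the DCF swings with whatever demand path you assume.

```python
def book_value(total_assets: float, total_liabilities: float) -> float:
    """What the company owns, net of what it owes."""
    return total_assets - total_liabilities


def price_to_book(market_cap: float, total_assets: float, total_liabilities: float) -> float:
    return market_cap / book_value(total_assets, total_liabilities)


def dcf(cash_flows: list[float], discount_rate: float, terminal_growth: float) -> float:
    """Present value of forecast cash flows plus a Gordon-growth terminal value."""
    if discount_rate <= terminal_growth:
        raise ValueError("discount rate must exceed terminal growth")
    pv = sum(cf / (1 + discount_rate) ** t for t, cf in enumerate(cash_flows, start=1))
    terminal = cash_flows[-1] * (1 + terminal_growth) / (discount_rate - terminal_growth)
    return pv + terminal / (1 + discount_rate) ** len(cash_flows)


# Hypothetical company, all figures in $bn and invented for illustration.
assets, liabilities, market_cap = 120.0, 40.0, 100.0
print(f"P/B: {price_to_book(market_cap, assets, liabilities):.2f}x")

# Same balance sheet, two demand paths: the DCF swings, book value doesn't move.
boom = [15.0, 18.0, 20.0, 22.0, 24.0]
bust = [15.0, 5.0, -2.0, 4.0, 10.0]
print(f"DCF (boom): {dcf(boom, 0.10, 0.02):.0f}")
print(f"DCF (bust): {dcf(bust, 0.10, 0.02):.0f}")
```

The 1.25x price-to-book doesn’t depend on any forecast, which is exactly why investors fall back on it when demand looks unpredictable.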

Naturally, multiples over book value are smaller. But if demand indeed becomes predictable, or still cyclical but from a much larger demand base, we have two companies that:

Will soon receive a lot of liquidity from international markets

Could also be seen as considerably undervalued

This is all, of course, a hypothesis; nobody actually knows or can predict how markets will behave, but what nobody can explain to me is why Micron has a higher multiple (and valuation) than Hynix despite the latter being better literally everywhere.

HARDWARE

A Chip Achieves 16k Tokens/second. How?

A new start-up, Taalas, has come out of stealth with what is quite possibly one of the most impressive showings I’ve seen. This new hardware company promises (and delivers) up to 16,000 tokens per second, per user.

In fact, you don’t have to take their word or mine; you can literally try it for yourself here. It’s not a joke, it works.

The idea is to create custom silicon for each model, literally etching the model’s architecture into the chip (we’ve already seen something similar being proposed by Etched and their Sohu chip). This, combined with no off-chip memory, means that the data never actually leaves the compute chip, making it much faster to process.

Additionally, they claim this requires about 10 times less power and is about 20 times cheaper to build. Specifically, they mention each Taalas server requiring just 2.5 kW (approximately the same power as your blow dryer), while an NVIDIA Blackwell Ultra rack requires ~145 kW, 58 times more, meaning we might have found a very cost-effective and power-efficient way of deploying AI.
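The headline numbers are easy to sanity-check. This sketch uses only the figures quoted above, plus a hypothetical 2,000-token answer and a hypothetical 100 tokens/second service for comparison. Note that the 58x ratio compares one Taalas server with one NVIDIA system, not equal amounts of work; the per-token efficiency claims are Taalas’s own.

```python
TAALAS_SERVER_KW = 2.5       # figure quoted by Taalas
BLACKWELL_ULTRA_KW = 145.0   # figure quoted above for the NVIDIA system
TOKENS_PER_SECOND = 16_000   # claimed per-user speed

power_ratio = BLACKWELL_ULTRA_KW / TAALAS_SERVER_KW
print(f"Power ratio: {power_ratio:.0f}x")

# Latency for a hypothetical 2,000-token answer at each per-user speed.
answer_tokens = 2_000
for label, tps in [("Taalas (claimed)", TOKENS_PER_SECOND), ("hypothetical 100 tok/s service", 100)]:
    print(f"{label}: {answer_tokens / tps:.3f} s")
```

At the claimed speed, that answer arrives in an eighth of a second instead of twenty.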

And it doesn’t seem far-fetched considering that current GPUs and other top AI systems require a lot of power to move data in and out of the compute chip.

Intuitively, you can think of this as doing the same exercise but more cleanly, with far less wasted power, as the chip is purposely designed for that model and that model only.

TheWhiteBox’s takeaway:

The results are incredible; I’ve never seen a model run this fast. Of course, we must take this with a pinch of salt, as we need more data, and most importantly, more proof that this can be scaled.

One big concern is custom silicon: the model frontier changes rapidly, to the point that by the time your hardware is out, your model might be obsolete. They mention LoRA adapters in the blog, suggesting they are designing the platform to support model adaptation, but whether that works in practice remains an open question.
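Taalas only mentions LoRA in passing, so how it would work on their chips is unknown. The general LoRA idea, though, suits hardwired weights: the large matrix W stays frozen (in principle, it could be the part etched into silicon) and a small low-rank update B·A is added on top. A minimal pure-Python sketch with toy sizes, which are assumptions:

```python
def matvec(m: list[list[float]], v: list[float]) -> list[float]:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def lora_forward(W, A, B, x, scale: float = 1.0) -> list[float]:
    """y = W x + scale * B (A x). W is frozen; only A and B would be trained."""
    base = matvec(W, x)              # frozen path (e.g., hardwired weights)
    delta = matvec(B, matvec(A, x))  # low-rank adapter path
    return [b + scale * d for b, d in zip(base, delta)]


def param_counts(d: int, r: int) -> tuple[int, int]:
    """Trainable parameters: full fine-tune of a d x d matrix vs. a rank-r adapter."""
    return d * d, 2 * d * r


# Toy example: d = 3, rank r = 1.
W = [[1.0, 0.0, 0.0],
     [0.0, 1.0, 0.0],
     [0.0, 0.0, 1.0]]       # frozen base weights (identity, for readability)
A = [[1.0, 1.0, 1.0]]       # r x d: projects down to rank 1
B = [[0.5], [0.0], [-0.5]]  # d x r: projects back up
x = [1.0, 2.0, 3.0]
print(lora_forward(W, A, B, x))

full, adapter = param_counts(d=4096, r=8)
print(f"full: {full:,} params, LoRA adapter: {adapter:,} params")
```

For a 4,096 × 4,096 layer, a rank-8 adapter trains 65,536 parameters instead of about 16.8 million, which is why adapters are a natural escape hatch when the base model itself can’t change.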

All very early to draw more conclusions, but I can’t deny this looks promising and, if it scales, may put into serious question how much money we’re spending on chips.

MODELS

A Foundation Model for EEG

The start-up Zyphra has announced ZUNA, an AI model designed to work with EEG brain-signal recordings (scalp sensors). It’s described as an early step toward “thought-to-text,” but the main focus right now is making EEG data more usable.

ZUNA is meant to handle real-world EEG issues: it can clean noisy signals, fill in missing channels, and estimate signals at different sensor positions if you provide the sensor coordinates. Zyphra argues this helps because EEG datasets are small and inconsistent, so a broadly trained model could transfer better across devices and studies.
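Zyphra describes what ZUNA does rather than a formula we can reproduce, so the following is not ZUNA. It shows the task itself with the kind of classical baseline a foundation model would compete against: estimating a missing channel from recorded neighbors, weighted by the inverse distance between sensor coordinates. The channel names, positions, and values are made-up assumptions; classical EEG tools often use spherical-spline interpolation instead.

```python
import math


def idw_estimate(target_pos, neighbors, power: float = 2.0) -> float:
    """Inverse-distance-weighted estimate of one time sample at target_pos.
    neighbors: list of ((x, y, z), value) for channels that were recorded."""
    num = den = 0.0
    for pos, value in neighbors:
        dist = math.dist(target_pos, pos)
        if dist == 0.0:
            return value  # a sensor sits exactly at the target position
        w = 1.0 / dist ** power
        num += w * value
        den += w
    return num / den


def fill_missing_channel(target_pos, recorded) -> list[float]:
    """recorded: {channel_name: ((x, y, z), [samples...])}. Returns estimated samples."""
    n_samples = len(next(iter(recorded.values()))[1])
    return [
        idw_estimate(target_pos, [(pos, sig[t]) for pos, sig in recorded.values()])
        for t in range(n_samples)
    ]


# Hypothetical 3-channel recording (positions on a unit sphere, values in microvolts).
recorded = {
    "left":  ((-1.0, 0.0, 0.0), [10.0, 12.0, 8.0]),
    "right": ((1.0, 0.0, 0.0), [20.0, 18.0, 22.0]),
    "front": ((0.0, 1.0, 0.0), [15.0, 15.0, 15.0]),
}
missing_pos = (-0.6, 0.0, 0.8)  # closest to the "left" sensor
print(fill_missing_channel(missing_pos, recorded))
```

The estimate leans toward the nearest sensor, which is all this baseline can do; a model trained on large amounts of EEG can, in principle, also exploit patterns that simple geometry misses.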

In simple terms, it’s an AI that takes in EEG data, cleans it, and returns a better datapoint. But how is that remotely useful? Well, actually, it’s very useful.

TheWhiteBox’s takeaway:

If we recall last week’s discussion of Google’s new bioacoustic model, Perch 2.0, we touched on the idea that the model, despite having been trained mostly on bird sounds, was still capable of identifying whale sounds and even clustering different whale species, despite whales being new to it.

The reason behind that “superpower” was that Perch is a bioacoustic foundation model, one that has seen vast amounts of bioacoustic data, to the point that even sounds from “unknown” species are familiar. That’s why it’s called a foundation model; it serves as a foundation for several downstream applications in the field of expertise.

EEGs are important across several fields:

Epilepsy care: detecting and classifying seizures, helping locate where seizures may start, and monitoring for hidden seizures in the hospital.

Sleep studies: identifying sleep stages and supporting diagnosis of sleep disorders.

ICU and operating room monitoring: tracking brain activity in critically ill patients and helping estimate the depth of anesthesia.

Cognitive and brain research: studying attention, perception, language, memory, and decision-making by measuring fast brain responses to tasks.

Brain–computer interfaces (BCI): letting users select letters or make simple control commands for assistive tech using EEG patterns.

Neurofeedback: giving real-time feedback so people can practice relaxation, focus, or other self-regulation.

Consumer and workplace experiments: rough tracking of fatigue/alertness or “engagement,” often less reliable than in clinical/research settings.

Therefore, having a model that can serve as the “foundation” for all these downstream tasks is extremely valuable. And we’ve just taken the first step in that direction.

WORLD MODELS

The Harsh Reality Behind World Models

I don’t normally say this, but The Information actually published something that made me think (they are usually just good at reporting what they hear). This semi-opinionated column on world models is actually very good.

World models are one of the hottest, if not the hottest, avenues of research in AI today, with examples like Google’s Project Genie taking the industry and media by storm.

And this article by The Information tackles the usually ignored problem of world-model sequentiality: the notion that world models can’t be parallelized as well as standard models.

As you probably know, AI runs mainly on GPUs and other ‘accelerator’ hardware. Here, accelerator is the marketing term for a parallelizer, a piece of hardware that speeds up computation by parallelizing it: taking a workload that would take ‘x’ time to execute, dividing it into ‘n’ parts, and thus taking roughly ‘x/n’ time to process it by executing all ‘n’ parts in parallel (there’s always a portion of the workload that can’t be fully parallelized).

The problem is that the workload must be parallelizable in the first place. In layman’s terms, the parts have to be independent of one another.

This works great with standard Large Language Models (LLMs), as requests from different users are completely unrelated and can be served in parallel. And since the bulk of the workload, the actual model, is common across all users, a single model instance can serve them all at once.

With world models, it’s not that easy. World models are AI models that “carry a state”, meaning the AI model has to take in feedback from the environment (the “world” it’s located in), see how the model’s actions impact the environment, and then use both the model’s past actions and the new environment state to make the next action.
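The ‘x/n’ intuition, including the caveat about the portion that can’t be parallelized, is usually formalized as Amdahl’s law. A minimal sketch, where the 95% parallel fraction is an illustrative assumption:

```python
def parallel_time(total_time: float, n_parts: int, parallel_fraction: float) -> float:
    """Amdahl's law: only the parallel fraction is divided across n_parts."""
    serial = total_time * (1.0 - parallel_fraction)
    parallel = total_time * parallel_fraction / n_parts
    return serial + parallel


def speedup(n_parts: int, parallel_fraction: float) -> float:
    """How many times faster than running everything on one part."""
    return 1.0 / parallel_time(1.0, n_parts, parallel_fraction)


for n in (1, 10, 100, 1000):
    print(f"n={n:>4}: fully parallel {speedup(n, 1.0):7.1f}x | 95% parallel {speedup(n, 0.95):5.1f}x")
```

However many parts you split the work into, a 5% serial slice caps the speedup at 20x (1 / 0.05).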

It’s no longer just what the model did in the past, but also how it has affected a changing variable, the environment around it.

A perfect example of this is humanoid robots. If tasked with cleaning the dishes, the robot may clean a plate, changing its state from dirty to clean, and it must learn to recognize when the plate is clean enough.

But a world model also needs to be able to identify that the plate is slipping in the robot’s hand and that it’s ceramic, which will likely break if it hits the counter.

Hence, a world model has to factor all this in to make its next move (instead of continuing to clean, stop when it’s clean enough, and also tighten the grip because its robotic hand sensors are detecting the plate slipping).

All that information is unique to that particular user and environment, so it’s not parallelizable across users.
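To make the sequential dependency concrete, here is a toy version of the dish-washing example, with hypothetical state fields, dynamics, and thresholds. It’s not how any real world model is implemented; it only shows that each step consumes the state produced by the previous one, which is exactly what prevents splitting a single user’s rollout into parallel chunks.

```python
from dataclasses import dataclass


@dataclass
class PlateState:
    dirt: float      # 1.0 = filthy, 0.0 = spotless
    grip: float      # how firmly the hand holds the plate
    slipping: bool


def world_model_step(state: PlateState, action: str) -> PlateState:
    """Hypothetical dynamics: the next state depends on the previous one."""
    if action == "scrub":
        # Scrubbing cleans, but a weak grip makes the plate start slipping.
        return PlateState(max(0.0, state.dirt - 0.3), state.grip, state.grip < 0.5)
    if action == "tighten_grip":
        return PlateState(state.dirt, min(1.0, state.grip + 0.3), False)
    return state  # "stop"


def policy(state: PlateState) -> str:
    """Pick the next action from the current, user-specific state."""
    if state.slipping:
        return "tighten_grip"
    if state.dirt > 0.05:  # "clean enough" threshold (assumed)
        return "scrub"
    return "stop"


# Each step needs the output of the previous one, so this loop can't be
# split across GPUs the way independent chat requests can.
state = PlateState(dirt=1.0, grip=0.4, slipping=False)
trajectory = []
while (action := policy(state)) != "stop":
    trajectory.append(action)
    state = world_model_step(state, action)
print(trajectory)
```

Five actions, five round trips: each one waits for the state the previous one produced.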

This large payload of “user-only” data increases the workload's overall sequential nature (i.e., subsequent users have to wait until this user’s interactions finish). In practice, that is not feasible at all, so major companies have to take the hard measure of assigning GPUs to individual users.

This means each $40k GPU, designed to parallelize across potentially hundreds of users, is being used on a sequential, batch 1 workload, an absolutely terrifying waste of money.

In practice, this means world model labs have to charge users huge sums (much like Claude’s fast mode, which charges up to $150 per million tokens because it serves requests in tiny batches), making this a game for the uber-rich.
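The economics follow from simple amortization. A rough sketch using the $40k GPU figure above, with a four-year lifetime and full utilization as assumptions, and ignoring power, networking, and idle time:

```python
GPU_PRICE = 40_000.0   # figure from the text
LIFETIME_YEARS = 4     # assumption: depreciation period
HOURS_PER_YEAR = 24 * 365


def gpu_cost_per_user_hour(batch_size: int) -> float:
    """Hardware cost per user-hour, ignoring power, networking, and idle time."""
    hourly = GPU_PRICE / (LIFETIME_YEARS * HOURS_PER_YEAR)
    return hourly / batch_size


for batch in (1, 8, 64, 256):
    print(f"batch {batch:>3}: ${gpu_cost_per_user_hour(batch):.4f} per user-hour")
```

Whatever the exact inputs, the per-user cost scales with 1/batch size, so a batch-1 world model costs hundreds of times more per user than a GPU packed with hundreds of chat requests.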

TheWhiteBox’s takeaway:

To me, this means two things:

This is a beautiful advertisement for non-generative world models like those being built at LeCun’s new AMI Lab, whose sole purpose is to build world models that are much smaller and more constrained.

It makes it very clear that world models are just as economically asymmetrical as an AI segment gets, putting cash-rich companies like Google in a unique position to sweep the market by outcompeting others purely on economies of scale (or diseconomies of scale, in this case).

THEWHITEBOX

World Labs Announces $1 Billion in Funding

World Labs, an AI start-up founded by Fei-Fei Li, an AI pioneer who teaches at Stanford, has announced a huge $1 billion funding round to continue pursuing the creation of spatial intelligence.

In their view, current AIs, even the video ones, lack spatial intelligence: the ability to visualize, manipulate, and navigate the 3D world. Thus, the idea is to train AI models to learn such a core feature of intelligence.

Their core product today is Marble, which lets you create 3D worlds from text, images, or video and immerse yourself in them. You can test Marble for free here; it’s an interesting experience because you can give it images of places close to your heart and step inside them.

It kind of feels similar to what Google is doing with Project Genie, but without Genie's character interactivity, and is more focused on generating high-fidelity scenes.

TheWhiteBox’s takeaway:

To me, this feels like a lesser version of Genie, in that it pursues the same idea, being able to create 3D worlds to train AIs in them, but without the quality and interactivity that make Genie perhaps the most impressive AI model in the world.

That said, World Labs’ research has a place in AI, as it’s much more likely that these models learn good spatial intelligence than models like ChatGPT, which were conceived in the realm of text.

Closing Thoughts

A huge number of releases in just one week, and with no indication that model and product releases are going to slow down. Additionally, we are seeing renewed interest in funding AI start-ups and the emergence of a new hardware type promising unparalleled performance.

However, if you watch carefully, you’ll realize that, beyond the usual suspects raising huge rounds, the “new” start-ups all show distinct, original views that mostly contradict the status quo; they aim to fill the holes that LLMs cannot.

But the overarching sensation remains: besides OpenAI and Anthropic, who else is making money in this space?


For business inquiries, reach out to me at [email protected]
