
THEWHITEBOX

TLDR;

I’ve been on a short holiday for a few days, so I haven’t had the time to write a thoroughly researched Leaders article for this week. Instead, as a one-time thing, I’m giving you another Premium news rundown. It’s packed with insight!

Today, we take a good look at the always-forgotten small models, which will play a vital role in adoption once the industry realizes it. We also look at Google’s insane new image models, Anthropic’s free academy, and interesting market data on Intel, Cohere, and prediction markets, among other relevant news.

Enjoy!

HRMs

It was, in fact, too good to be true

A couple of weeks ago, a group of Chinese researchers presented a groundbreaking result. With just 27 million parameters, 24k times smaller than DeepSeek R1, a model called HRM managed to beat R1 and other frontier models on the ARC-AGI benchmarks, a highly popular choice for comparing top models on reasoning tasks.
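As a quick sanity check of the "24k times smaller" figure, here is the arithmetic, assuming DeepSeek R1's publicly stated total of 671 billion parameters (it is a mixture-of-experts model, so only about 37 billion of those are active per token):

```python
# Parameter counts: HRM's 27M (from the text) vs. DeepSeek R1's 671B total parameters.
HRM_PARAMS = 27_000_000
R1_TOTAL_PARAMS = 671_000_000_000

ratio = R1_TOTAL_PARAMS / HRM_PARAMS
print(f"R1 is ~{ratio:,.0f}x larger")  # roughly 24,852x
```

Counting only R1's ~37B active parameters instead, the gap shrinks to roughly 1,370x, which is still enormous.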

The breakthrough was a new hierarchical architecture combining two modules:

High-level module, in charge of things like planning and high-level actions (e.g., pick up that cup)

Low-level module, in charge of the low-level actions (e.g., move the arm through this specific set of angles to reach the cup)
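A minimal sketch of the two-timescale idea, assuming plain tanh recurrent cells with random weights (the actual HRM implements both modules with Transformer blocks; the sizes and names here are illustrative): the low-level state updates at every step, while the high-level state updates only once every `T` low-level steps, using the low-level result.

```python
import numpy as np

rng = np.random.default_rng(0)
D = 16          # hypothetical hidden size
T = 4           # low-level steps per high-level update (illustrative)
N_CYCLES = 3    # number of high-level updates

# Random weights stand in for trained parameters.
W_low = rng.normal(scale=0.3, size=(3 * D, D))   # input + low state + high state -> low state
W_high = rng.normal(scale=0.3, size=(2 * D, D))  # high state + low state -> high state

def hierarchical_forward(x, z_low, z_high):
    """Run N_CYCLES high-level updates, each preceded by T fast low-level updates."""
    for _ in range(N_CYCLES):
        for _ in range(T):
            # Fast module: reacts to the input, its own state, and the current "plan".
            z_low = np.tanh(np.concatenate([x, z_low, z_high]) @ W_low)
        # Slow module: revises the plan once the fast module has settled.
        z_high = np.tanh(np.concatenate([z_high, z_low]) @ W_high)
    return z_low, z_high

x = rng.normal(size=D)
z_low, z_high = hierarchical_forward(x, np.zeros(D), np.zeros(D))
print(z_low.shape, z_high.shape)  # (16,) (16,)
```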

The idea was inspired by the brain, bringing it closer to ‘human intelligence’, at least on paper. The news went so viral that it prompted the ARC-AGI team to reproduce the results and check whether the claims held up, and, well, it was indeed too good to be true.

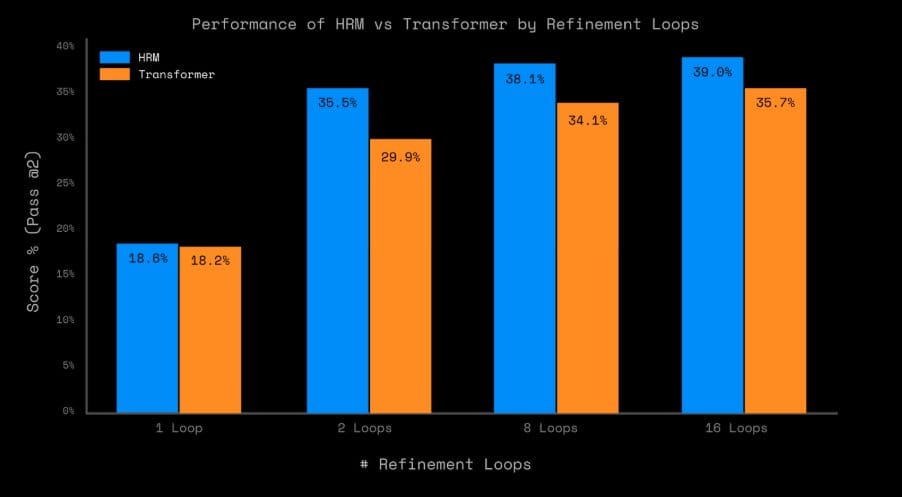

Although the results were legit and reproducible (the reproductions scored slightly lower but still validated the claims), the big letdown is that the architecture is not the reason the model worked so well: a normal Transformer (a ChatGPT-style model the size of the original HRM) yields almost identical results.

Instead, the model's surprising results can be attributed to the use of three elements known for years to be good reasoning priors: refinement, memorization, and data augmentation.

The HRM includes a prediction refinement loop, but a Transformer equipped with the same loop reaches similar results.

Through data ablations, the validating team showed that a great deal of the performance could be explained by training only on the samples most similar to those being tested on, suggesting a strong dependence on memorization.

Data augmentation also worked well, but this is nothing new; it has been a textbook method in this industry for decades.
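For ARC-style grid puzzles, the usual textbook augmentations are the eight rotations/reflections of a grid plus permutations of its colors. A minimal sketch, assuming grids are NumPy integer arrays with colors 0-9 and keeping color 0 (background) fixed, which is a common but not universal choice; the function names are illustrative, not taken from the HRM codebase:

```python
import numpy as np

def dihedral_variants(grid):
    """Return the 8 rotations/reflections (the dihedral group D4) of a 2D grid."""
    variants = []
    for flipped in (grid, np.fliplr(grid)):
        for k in range(4):
            variants.append(np.rot90(flipped, k))
    return variants

def permute_colors(grid, rng, num_colors=10):
    """Randomly relabel colors 1..num_colors-1, keeping 0 (background) fixed."""
    perm = np.concatenate([[0], rng.permutation(np.arange(1, num_colors))])
    return perm[grid]  # look up each cell's new color

rng = np.random.default_rng(0)
grid = np.array([[1, 2, 0],
                 [0, 3, 0]])
augmented = [permute_colors(g, rng) for g in dihedral_variants(grid)]
print(len(augmented))  # 8
```

In practice, the same transform and color mapping must be applied to a task's input and output grids together, so the puzzle's underlying rule is preserved.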

In a nutshell, we just found a “new” way of reaching the exact same conclusions as always.

TheWhiteBox’s takeaway:

This news does have an upside: it might reignite interest in small models, which the AI hype train often overlooks simply for not “being that smart.”

But as I explain in the Liquid AI news in the Product section below, they are extremely important for the future of an industry that could soon find itself supply-constrained in terms of energy.

We have billions of edge devices sitting idle, entirely outside AI’s compute pool. Thus, training small models that can do the job without saturating billion-dollar data centers will be a requirement for widespread AI.

IMAGE GENERATION

Nano Banana Looks Amazing

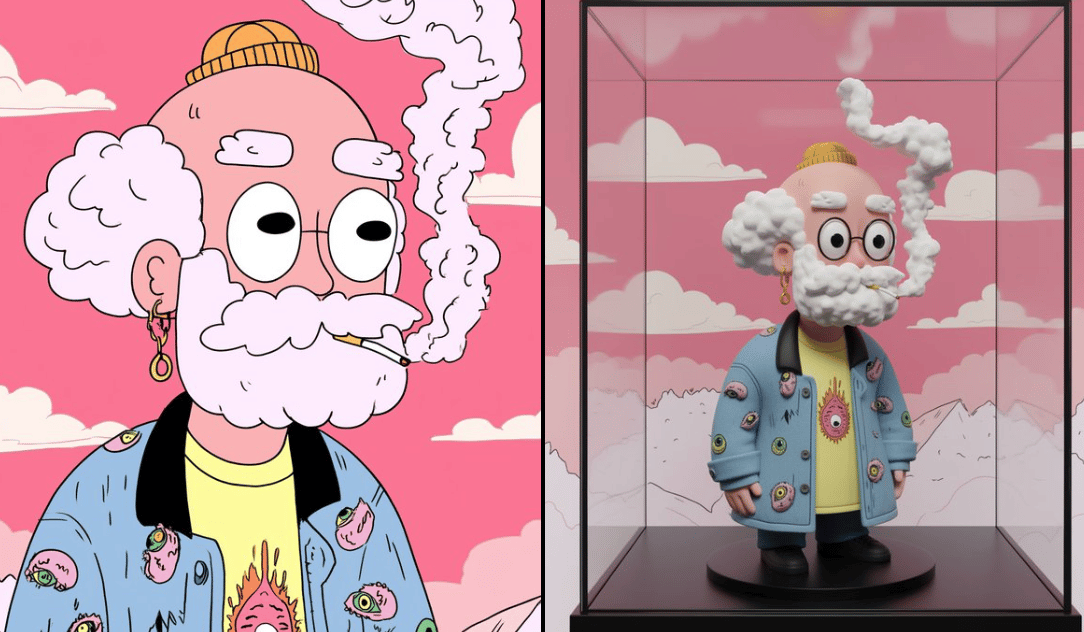

Nano Banana, a model thought to be Google’s new native image-generation model to compete with OpenAI’s GPT-4o image generation, is looking sharp. Additionally, Google has released Imagen 4, another impressive image-generation model that quickly took the top spot on LMArena.

Google is the king of image generation.

TheWhiteBox’s takeaway:

In this hype-led industry, we often focus on text-based progress, concerned about potential stagnation, but AI is showing no such signs in other domains, such as images or video. Even top researchers like Lucas Beyer (Meta) are beyond impressed by the capabilities of Nano Banana.

With examples such as native image generation, which gives models the capacity to ‘understand’ images and thus produce things like the image above (i.e., “Turned a flat 2D image into a 3D figure”), or the incredible Genie 3, it’s clear that AI may stagnate in some areas, but it’s alive and well in others.

RESOURCES

Anthropic Launches Free Courses

Anthropic has launched its Academy, offering free courses to help you level up your AI game using Claude models. It covers areas like prompting, Claude Code, RAG, the Model Context Protocol, and other key parts of today’s AI stack.

TheWhiteBox’s takeaway:

Great initiative. It’s heavily biased toward Claude and the Anthropic product stack, aiming to attract more developers and users, but it’s nonetheless great news for anyone looking to join the AI train using resources generated by an AI leader.

CLOUD VS LOCAL

Local Trails Cloud Models by 9 Months

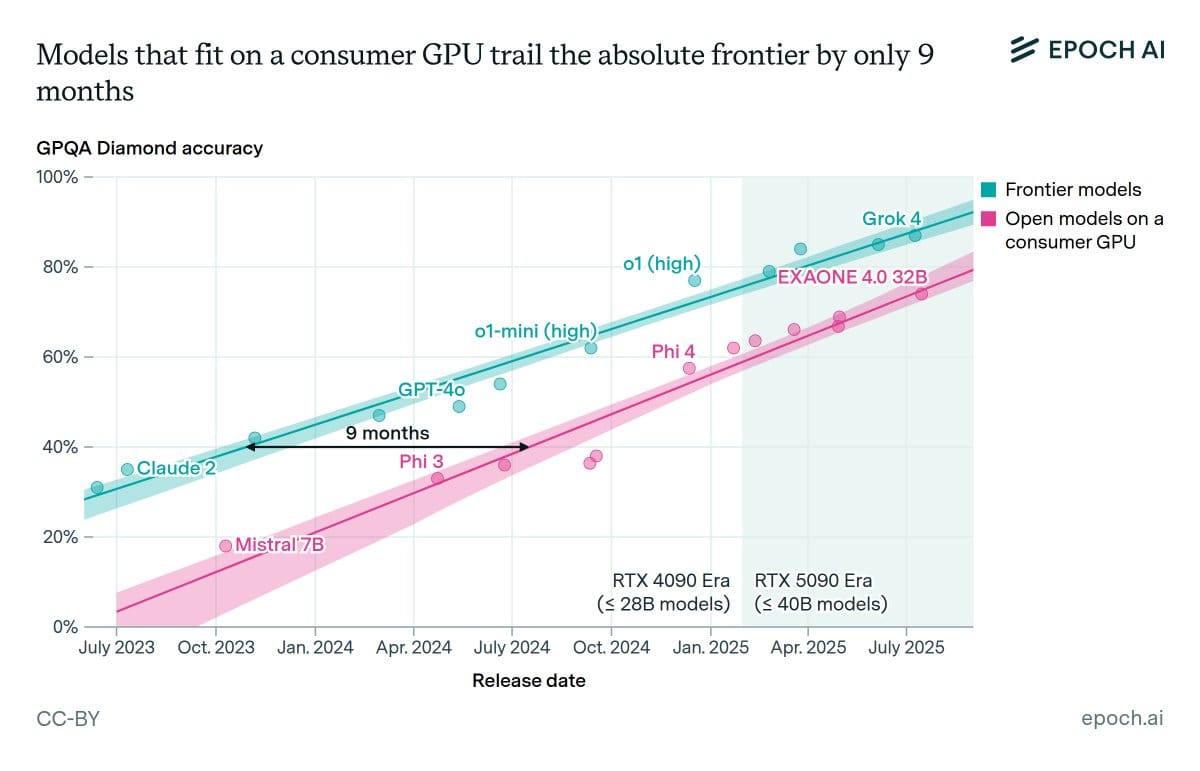

Via a series of tweets, Epoch AI has given us a data-driven view of how far local models (models that can run on consumer hardware, i.e., affordable hardware for the average bloke) trail behind cutting-edge models running on prohibitively expensive GPUs.

And the answer is nine months, meaning that the researchers estimate that we should have consumer-grade models matching GPT-5 or Grok 4 by Q2 2026.
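The arithmetic behind that estimate is simple. A small sketch, assuming GPT-5's August 2025 and Grok 4's July 2025 launch months as the frontier reference points and treating the nine-month lag as exact (Epoch AI's figure is an estimate with real uncertainty):

```python
def add_months(year, month, months):
    """Shift a (year, month) pair forward by a number of months."""
    total = year * 12 + (month - 1) + months
    return total // 12, total % 12 + 1

def quarter(month):
    """Calendar quarter (1-4) of a month (1-12)."""
    return (month - 1) // 3 + 1

LAG_MONTHS = 9  # Epoch AI's estimated local-vs-frontier gap
for model, (y, m) in {"GPT-5": (2025, 8), "Grok 4": (2025, 7)}.items():
    ty, tm = add_months(y, m, LAG_MONTHS)
    print(f"{model}: local parity around {ty}-{tm:02d} (Q{quarter(tm)} {ty})")
```

Both reference points land in Q2 2026, which is where the estimate comes from.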

They note that smaller models tend to be overfitted to the benchmarks used for comparison, so they estimate the real difference to be slightly larger than what the data shows.

TheWhiteBox’s takeaway:

I’m a big believer in the value of having strong hardware at home that lets you run as many of your AI workloads locally as possible, reducing the risk of getting rate-limited or even locked out of the models during periods of high demand.

Severe AI access rationing is something I don’t think we can discard if demand starts outpacing supply. And if that’s the case, and AI really becomes a differentiating factor, having the capacity to run most of your workloads locally could be a serious advantage.

COMPUTER VISION

Meta Open-Sources DINOv3

Meta has launched a new computer vision model, DINOv3, that excels at various computer vision tasks, such as object detection, image segmentation, and depth estimation, while remaining consistent across an entire video.

TheWhiteBox’s takeaway:

We are talking about what’s possibly the most advanced image and video encoder on the planet, a key component of any multimodal model these days, responsible for processing images and video so that language models can decode that information into text.

If you’re not following, most multimodal models (ChatGPT, Gemini, Claude) are made of at least two components:

An encoder, in charge of processing image or video data. This is what DINO is.

A decoder, in charge of processing the user’s text request plus the image/video they send, and generating a response in text, images, or whatever modality the task requires. Note that this decoder can be multimodal (it can generate text, images, and video), and it is by far the most significant component of the entire system.
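To make the encoder/decoder split concrete, here is a shape-level sketch, assuming a LLaVA-style design in which a linear "projector" maps the encoder's patch embeddings into the decoder's token-embedding space. All dimensions are hypothetical, random arrays stand in for real model outputs, and this is not how DINOv3 or any specific product is wired:

```python
import numpy as np

rng = np.random.default_rng(0)
NUM_PATCHES, ENC_DIM = 196, 384   # hypothetical encoder output: 196 patch embeddings
DEC_DIM = 512                     # hypothetical decoder (language model) width
NUM_TEXT_TOKENS = 12              # the user's text request, already embedded

# Stand-ins for real model outputs.
image_embeddings = rng.normal(size=(NUM_PATCHES, ENC_DIM))     # from the encoder
text_embeddings = rng.normal(size=(NUM_TEXT_TOKENS, DEC_DIM))  # from the decoder's embedding table

# Projector: maps encoder space -> decoder space so both can share one sequence.
W_proj = rng.normal(scale=ENC_DIM ** -0.5, size=(ENC_DIM, DEC_DIM))
image_tokens = image_embeddings @ W_proj

# The decoder then processes image "tokens" and text tokens as a single sequence.
decoder_input = np.concatenate([image_tokens, text_embeddings], axis=0)
print(decoder_input.shape)  # (208, 512)
```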

For Meta, this release is a good way to gain some mindshare while its superintelligence team delivers what’s expected of it, which should be nothing short of a frontier model considering the billions of dollars Meta has spent poaching top AI talent from competitors (mainly OpenAI).

VENTURE CAPITAL

Dry Powder is Drying Up

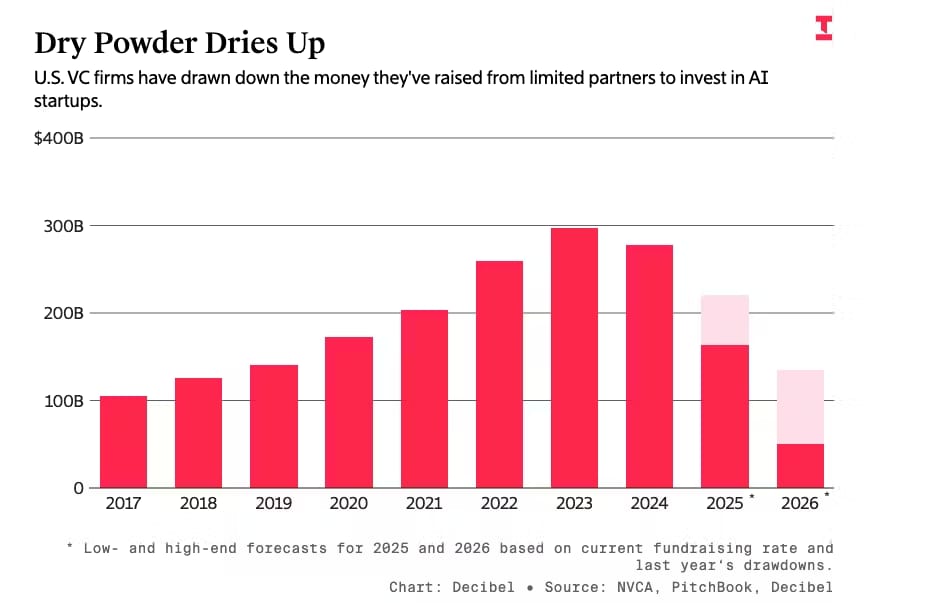

An article by The Information illustrates how much money VCs are pouring into AI startups, considerably draining their ‘dry powder’ (capital VCs have raised from Limited Partners but not yet invested), which is now at risk of falling below $100 billion for the first time in almost ten years.

Examples like Perplexity, which is reportedly in talks to raise money at a $20 billion valuation, its third round in three months, show how money-hungry these startups are and how deeply VCs trust this technology. But money is running out.

TheWhiteBox’s takeaway:

I’ve said many times that VCs play a crucial role in keeping the dream alive, but most of them will eventually become the real suckers in this entire story.

Most AI startups resemble money grabs rather than genuine businesses. By "genuine," I mean companies that address a real problem, have a viable business model, and possess a defensible moat. Seems like common sense, right? Well, most AI startups don’t meet any of those three criteria.

The truth is, most AI startups are scaffolds, or AI wrappers: tools that ‘augment’ models to make them “smarter”. There’s nothing wrong with making an AI model smarter at first; the issue is that you’re betting against the model itself, betting that it won’t improve to the point where it no longer needs your scaffold.

And you already know how that bet has panned out so far. The expected reality is that AI models will get better, and one day, startups valued in the billions will become bullet points in Google’s Gemini 4.0 presentation.

The real path to startup success in AI is agentic tooling, an industry that, for now, is surprisingly empty of both startups and interest, because it’s much sexier to invest in a Devin (which has just raised $500 million at an almost $10 billion valuation) or a Cursor that “makes models better” than in startups building much less attractive, low-margin agentic tools.

But the question is: for how long can VCs pretend the future of software is, in fact, not sexy?

INTEL

US Gov to Take Stake in Intel

The Trump administration is reportedly negotiating with Intel over the possibility of the U.S. government acquiring an equity stake in the chipmaker. The talks follow a White House meeting between President Trump and Intel CEO Lip‑Bu Tan.

If realized, the stake would support the construction of Intel’s delayed fabrication facilities in Licking County, Ohio, near New Albany, which are now several years behind their initially planned completion dates.

The precise size or terms of any potential stake remain unspecified, and the report stresses that discussions are still fluid and may not culminate in a deal.

TheWhiteBox’s takeaway:

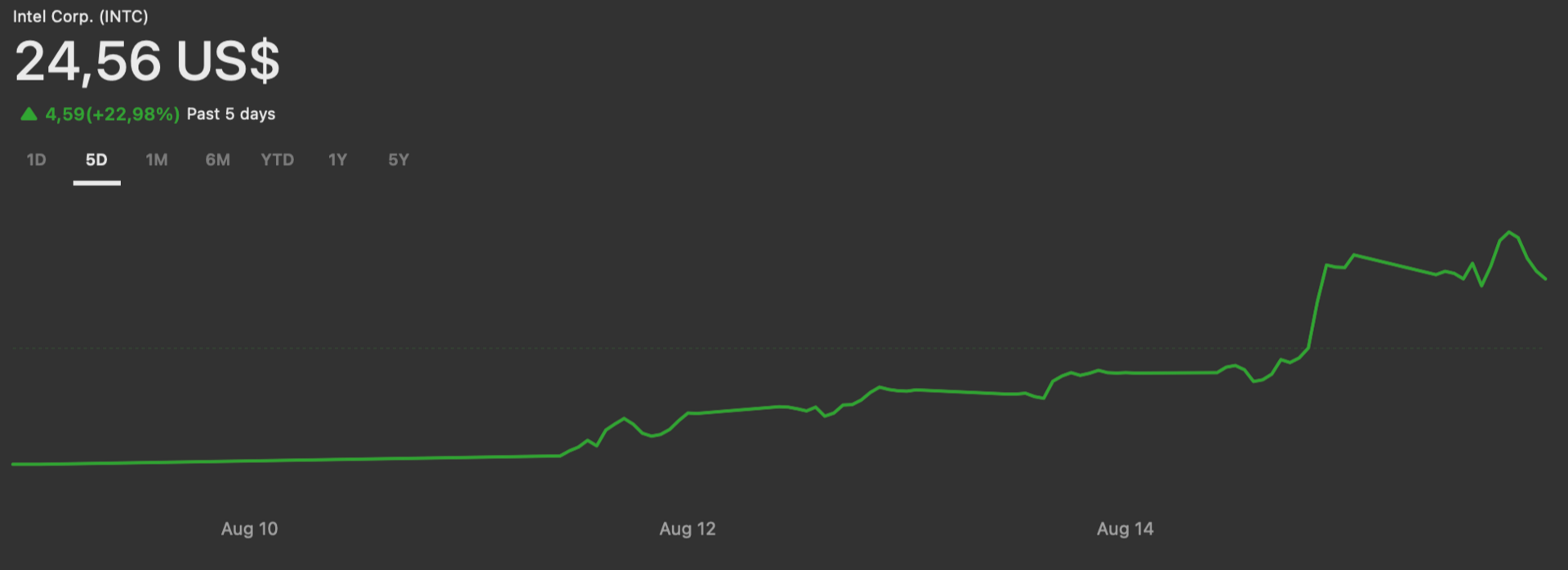

Markets loved the idea, and Intel is up 23% over the last five days:

The reason for this is obvious. Even if the company itself gives investors no reason to believe in it, the US government’s willingness to take a stake makes its stance clear: it won’t let Intel fail.

Proof of ‘national champion’ status is music to the ears of investors who want Intel to succeed as one of the US’s top chip manufacturers, potentially surpassing smaller players like GlobalFoundries (which is also struggling considerably).

While the US is pressuring TSMC to invest in the US, TSMC and the Taiwanese government aren’t dumb and will not allow top talent and state-of-the-art process nodes (2nm and below) to leave Taiwan.

The reason is national security: the fact that invading Taiwan would destroy that portion of the market is a well-known deterrent against a Chinese invasion, the so-called ‘Silicon Shield’.

Therefore, just like China has SMIC, the US desperately needs a manufacturing champion, and all roads lead to Intel, even if Intel insists on giving everyone plenty of reasons not to trust it.

VENTURE CAPITAL

Cohere Triumphs Again

Cohere has announced a new funding round, for an undisclosed amount, valuing the company at $6.8 billion, with participation from companies like NVIDIA and AMD.

Unlike players such as OpenAI or Google, which follow a broader playbook, Cohere is specifically focused on enterprise AI, developing models that may not be Math Olympiad gold medalists but deliver strong performance in areas that enterprises value, like RAG, multilinguality, and deployability (their models usually fit on one or two GPUs at most).

TheWhiteBox’s takeaway:

In my 2025 predictions, I said that companies like Mistral or Cohere were clear acquisition targets, and only the fact that they had ‘national champion’ status, for France and Canada, respectively, could save them from that reality.

This national dependency is now more real than ever. Both companies lack meaningful revenues, struggle to compete with giants like OpenAI or Anthropic, and have alleged moats (cost-performance ratios for Mistral and enterprise focus for Cohere) that are not entirely clear. Thus, it’s not surprising to see the extensive involvement of their respective governments and top pension funds in every investment round.

Despite this, they still require vast amounts of money to play the game, which gives them some of the highest valuation-to-projected-revenue multiples in the entire industry. These two Labs should have ceased to exist a long time ago, and we know why that’s not the case.

GAMBLING

Betting on All Things AI

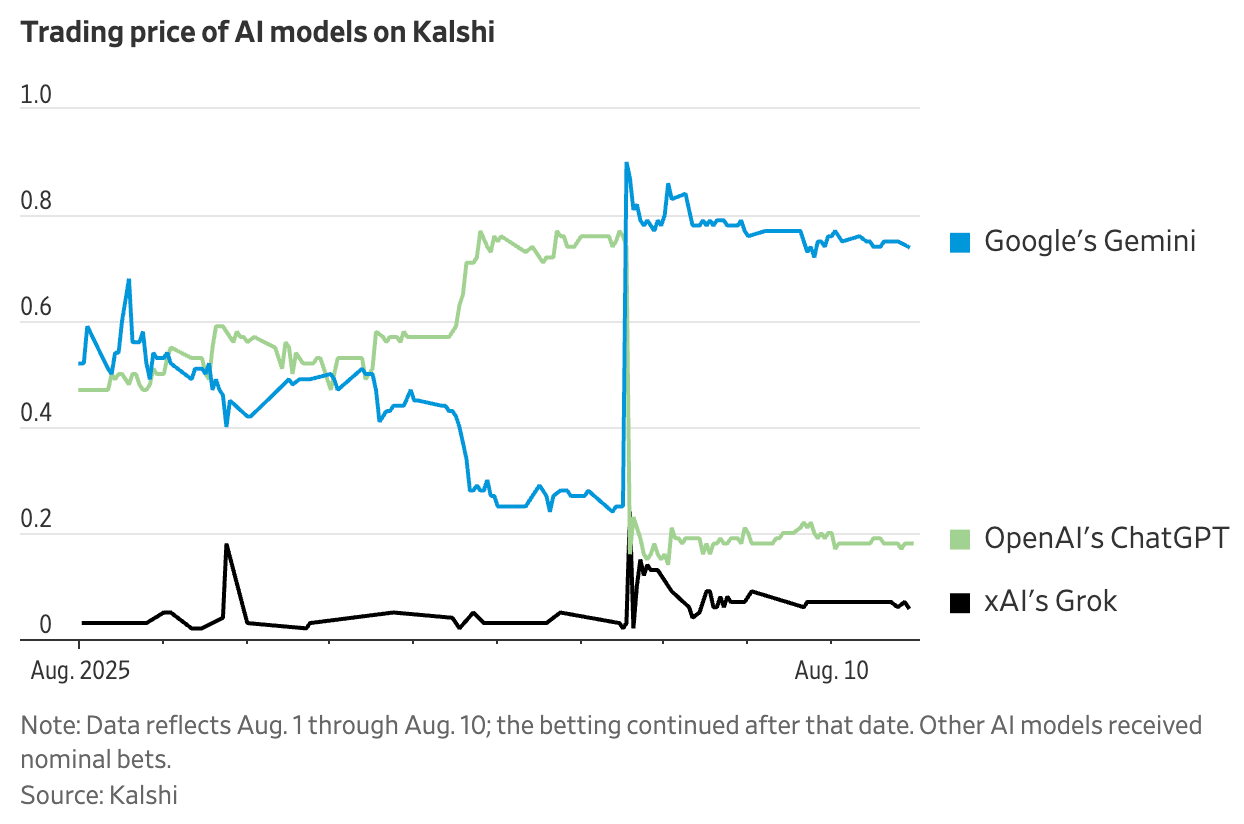

A new way of making money has come to AI, and it’s one for the books: gambling. Although I’ve been seeing this trend since Sam Altman’s brief ousting from OpenAI back in late 2023, the pool sizes are now so large that outlets like The Wall Street Journal have covered it, highlighting how people can make money betting against each other on which model is best this month, which Lab will be ahead by the end of the year, and so on.

For instance, more than $7 million has been bet on which model will be the best by the end of the year, signaling a growing appetite for gambling hard-earned money on anything and everything.

TheWhiteBox’s takeaway:

Not a fan of gambling myself (my judge mom, who has had her fair share of gambling addiction disqualifications in court, has ensured that was the case), but prediction markets are a great way to estimate the wisdom of the crowds regarding things like AI.

It’s severely vibe-led, speculative, and whatever else you want to call it, but it certainly carries some signal. And the larger the pool, the more it reflects the sentiment of the real population. That’s not a Kalshi ad; it’s literally how statistics works: the larger your sample, the closer its distribution gets to the real, underlying one (provided the sample is representative).
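The statistical intuition here is the law of large numbers. A minimal simulation, assuming every bettor independently backs the outcome with the same true probability (real prediction markets violate this: bettors are not a random sample of the population, and stakes are not votes), shows the estimate tightening as the pool grows:

```python
import random

def estimate(true_p, n, rng):
    """Fraction of n independent bettors who back the outcome."""
    return sum(rng.random() < true_p for _ in range(n)) / n

rng = random.Random(42)
TRUE_P = 0.6  # hypothetical underlying belief
for n in (10, 1_000, 100_000):
    est = estimate(TRUE_P, n, rng)
    print(f"n={n:>7,}: estimate={est:.3f}, error={abs(est - TRUE_P):.3f}")
```

The typical error shrinks roughly with the square root of the pool size, which is why a $7 million market says more than a handful of bets, as long as the independence assumption roughly holds.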

And in this regard, people seem to have lost faith in OpenAI at least partially, and Google leads comfortably. I can’t blame them; it’s becoming tough to bet against Google.

CHINA

China Opens to Top AI Researchers

China has announced a new work visa for top AI researchers. The only requirements are:

Being below 45 (apparently)

Coming from a renowned Chinese or foreign university or having worked at a top institution

TheWhiteBox’s takeaway:

I don’t think we can overstate how surprising this is: a shift toward openness that is as unexpected as it is telling of how important AI is to China’s interests.

For the US, this couldn’t come at a worse time, as international researchers, despite their tremendous value (just look at what Meta is paying for researchers), are struggling to keep their visas unless their job is stable.

Talent remains a crucial edge, a battle the US simply cannot afford to lose. On the flip side, it’s great to see China embrace openness (not just from the AI model perspective, but also regarding talent).

PRODUCT

Liquid AI Launches Fastest Small Models

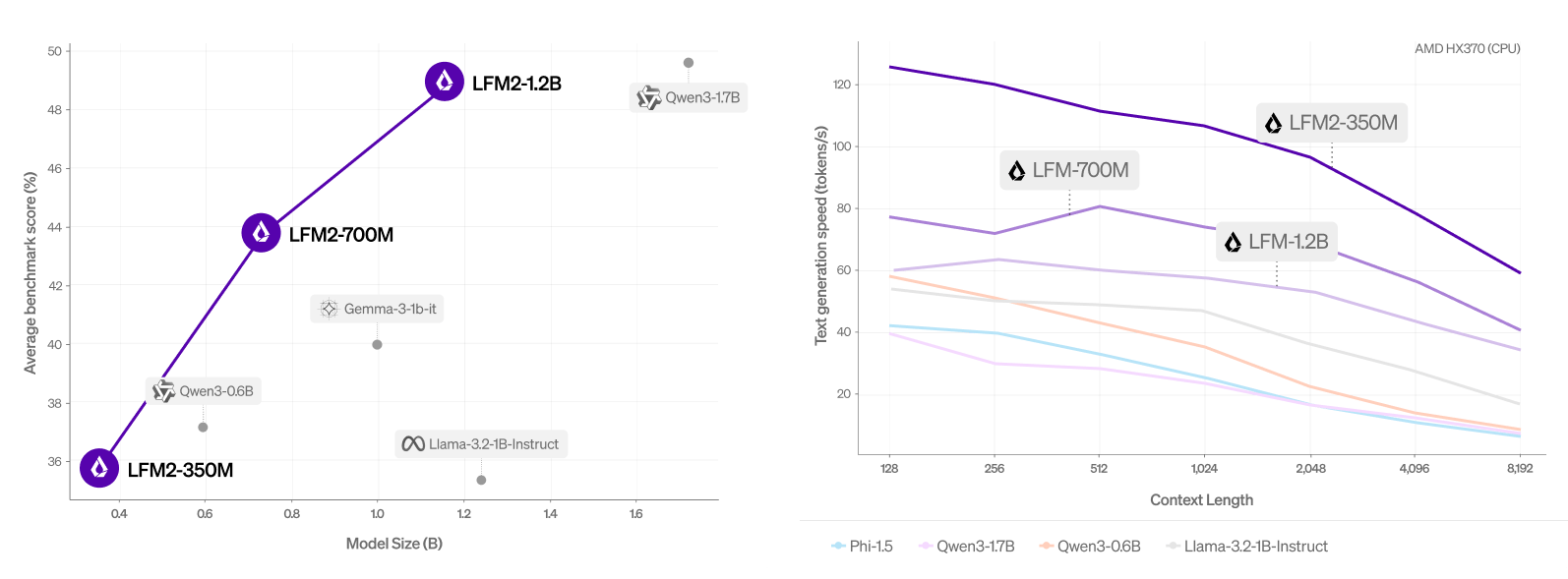

Liquid AI, a team of star researchers mainly from MIT, has launched the second version of its Liquid Foundation Models, or LFMs, which are now claimed to be the fastest on-device generative AI models on the market, reaching up to 500 tokens/second in some cases on a MacBook M3 Max.

With a hybrid architecture of convolution and grouped-query attention blocks (a somewhat different approach from most labs, which rely exclusively on attention blocks to mix information across tokens), LFM2 models (350M–1.2B params) deliver up to 2× faster CPU inference than Qwen3 and train 3× more efficiently than LFM1, the previous version.
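Grouped-query attention (GQA) is the attention half of that hybrid: several query heads share each key/value head, which shrinks the key/value cache that must be stored during generation. A minimal NumPy sketch, with hypothetical head counts and no causal mask or output projection; it is not LFM2's actual configuration:

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def grouped_query_attention(q, k, v):
    """q: (n_q_heads, seq, d); k, v: (n_kv_heads, seq, d). Each KV head serves a group of query heads."""
    n_q_heads, n_kv_heads = q.shape[0], k.shape[0]
    group = n_q_heads // n_kv_heads
    # Repeat each KV head so it lines up with its group of query heads.
    k = np.repeat(k, group, axis=0)
    v = np.repeat(v, group, axis=0)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(q.shape[-1])
    return softmax(scores) @ v

rng = np.random.default_rng(0)
SEQ, D = 10, 32
q = rng.normal(size=(8, SEQ, D))   # 8 query heads
k = rng.normal(size=(2, SEQ, D))   # only 2 key/value heads -> 4x smaller KV cache
v = rng.normal(size=(2, SEQ, D))
print(grouped_query_attention(q, k, v).shape)  # (8, 10, 32)
```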

By relying more heavily on convolutions, they substantially reduce how fast costs grow with sequence length. In layman’s terms, an all-attention model has quadratic costs relative to input sequence length (doubling the sequence quadruples cost), while convolutions have linear complexity. By combining the two, you don’t quite get linear cost scaling (the remaining attention blocks are still quadratic), but costs grow far more slowly than in an all-attention model, which makes a huge difference at long sequences.
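A toy cost model makes the scaling argument concrete. It assumes a hypothetical 16-layer network, compares an all-attention stack with a hybrid of 6 attention and 10 short-convolution layers, counts only the token-mixing operations, and ignores the MLP layers and constant factors:

```python
def attention_cost(n, d):
    """Every token interacts with every other token: grows with n^2."""
    return n * n * d

def conv_cost(n, d, kernel=3):
    """Short convolution: each token mixes with only `kernel` neighbors, so cost grows with n."""
    return n * kernel * d

def model_cost(n, d=1024, attn_layers=16, conv_layers=0):
    return attn_layers * attention_cost(n, d) + conv_layers * conv_cost(n, d)

for n in (4_096, 8_192, 16_384):
    full = model_cost(n, attn_layers=16)
    hybrid = model_cost(n, attn_layers=6, conv_layers=10)
    print(f"n={n:>6}: hybrid / all-attention cost = {hybrid / full:.3f}")
```

In this toy setup, the hybrid still roughly quadruples its cost when the sequence doubles, because its six attention layers dominate at long lengths; the win is a much smaller constant (about 6/16 of the all-attention cost here). Swapping more attention layers for convolutions pushes the scaling closer to linear.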

Simply put, LFMs require much less compute than standard models.

Trained on ~10T tokens of data, they match or beat larger peers like Gemma 3 1B and Qwen3-1.7B. LFM2 supports a 32k context and runs across CPUs, GPUs, and NPUs (Neural Processing Units, accelerators that prioritize power efficiency over raw speed, which is why they are so heavily used in battery-powered devices like smartphones and laptops).

TheWhiteBox’s takeaway:

I’ve tried the models, and I’ll be honest: they will underwhelm you if you test them on the things you usually ask ChatGPT. They are too small to have developed the appropriate level of “intelligence” to solve complex tasks.

But does that mean these models are useless? No. Liquid AI sees a $1T+ market by 2035 for compact, private models, and that could very well be true.

Rather than comparing them to large models, think of them as models designed to be instant, for use cases that demand extreme speed in very constrained environments, like modest smartphones or laptops.

While such small models aren’t great options for general-purpose requirements, there’s probably huge untapped potential for taking models of such sizes and training them for a very particular use case.

The mantra of small models is to do just one thing, and do it great.

I would go as far as to say that businesses would obtain much better results from AI if they parted ways with their ‘ChatGPT for everything’ aspirations and instead focused on training/fine-tuning small models that, with proper training on the task, would obliterate ChatGPT’s performance, no questions asked.

In fact, the moment any of the Big Tech companies shows signs of slowing its CAPEX, we may be forced to turn to small models whether we want to or not. In the event of an energy undersupply, which is very likely, models capable of running on consumer hardware may be the only means of achieving true planetary-scale adoption.

And even if the energy exists, saying we want 5-GW data centers (the kind you need to run large models and meet expected demand) is one thing. Building them with a slow, undersized grid is a totally different story.

But that’s a story for another day.

On a final note, I’ll just go ahead and say it: Apple should acquire Liquid AI. Its focus on small, fast models built for compute-constrained environments (some are even optimized to run on the CPU) sounds like the perfect match for Apple, and it comes with a strong MIT-born team at a sub-$10 billion valuation ($2 billion in the latest round). We are talking about a few days’ worth of free cash flow for Apple. This is a no-brainer.
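The back-of-the-envelope math, assuming a round $100 billion in annual free cash flow for Apple (an assumption in the right ballpark for recent fiscal years, not a reported figure) and the $2 billion valuation from the latest round:

```python
ANNUAL_FCF = 100e9          # assumed round number, USD
LIQUID_VALUATION = 2e9      # latest-round valuation cited in the text, USD

daily_fcf = ANNUAL_FCF / 365
days_needed = LIQUID_VALUATION / daily_fcf
print(f"~{days_needed:.1f} days of free cash flow")  # ~7.3 days
```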

TINY AI

Gemma 3 270M is Here

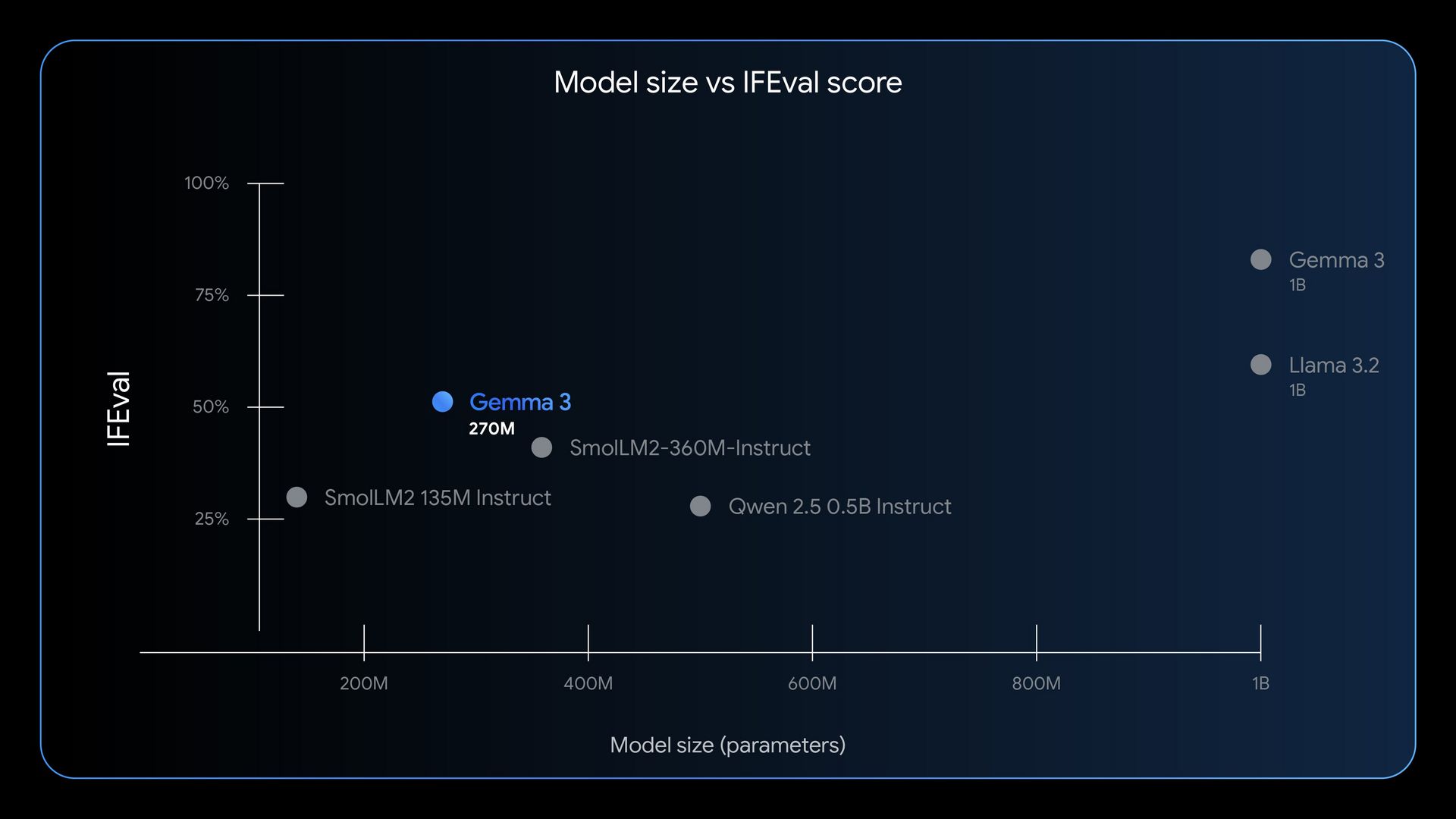

Google has released its latest tiny model, a 270-million-parameter model with impressive performance for its size, significantly outperforming all other models under one billion parameters (adjusted for size).

It’s explicitly trained for instruction-following tasks, meaning the model is very good at doing what it’s told, despite being so small. Another highlight is its energy efficiency: it used only 0.75% of a Pixel 9 battery across 25 entire conversations.
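Taken at face value, that battery figure works out as follows. This is a naive linear extrapolation that assumes every conversation costs the same and ignores idle drain and everything else the phone is doing:

```python
BATTERY_PCT_USED = 0.75   # reported battery use, in percent
CONVERSATIONS = 25

pct_per_conversation = BATTERY_PCT_USED / CONVERSATIONS
conversations_per_full_charge = 100 / pct_per_conversation
print(f"{pct_per_conversation:.2f}% per conversation, "
      f"~{conversations_per_full_charge:,.0f} conversations per full charge")
```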

TheWhiteBox’s takeaway:

As you may have guessed from the HRM news earlier, I’m very excited about small models. They are fast, very cheap, and can run locally, meaning you don’t need an Internet connection or have to trust your model provider to keep your data from being stolen.

Months from now, we will ask ourselves why we ever accepted sending sensitive data to OpenAI’s servers; some tasks must remain local, period. As Google itself put it in the release, you wouldn’t use a sledgehammer to hang a picture frame.

WORLD MODELS

Genie 3 is just incredible

Genie 3 is just a breathtaking model; I’ve probably been more impressed with it than with any other AI model I’ve ever seen. The fact that you can turn paintings of your choice into 3D worlds, and make them interactive, is just unfathomable.

TheWhiteBox’s takeaway:

But why is this so impressive?

Models like ChatGPT operate by leveraging a mechanism called attention. Each word in the input sequence is represented as a vector capturing the word’s key attributes, and these vectors are multiplied with each other; the more similar two vectors are, the more semantic information they share and, thus, the more they ‘attend’ to each other.

For example, in the sequence ‘The green forest’, ‘forest’ becomes green by attending to the word ‘green.’
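For the curious, here is a minimal NumPy sketch of scaled dot-product attention, the standard form of this mechanism. The word vectors and projection matrices are random stand-ins for what a real model learns:

```python
import numpy as np

def softmax(x):
    e = np.exp(x - x.max(axis=-1, keepdims=True))  # subtract max for numerical stability
    return e / e.sum(axis=-1, keepdims=True)

def attention(x, W_q, W_k, W_v):
    """Scaled dot-product attention over a sequence of word vectors x: (seq, d)."""
    q, k, v = x @ W_q, x @ W_k, x @ W_v
    scores = q @ k.T / np.sqrt(k.shape[-1])   # similarity of every word with every other word
    weights = softmax(scores)                 # each row: how much a word attends to the others
    return weights @ v, weights               # each word becomes a weighted mix of the others

rng = np.random.default_rng(0)
d = 8
x = rng.normal(size=(3, d))                   # stand-ins for "The", "green", "forest"
W_q, W_k, W_v = (rng.normal(size=(d, d)) for _ in range(3))
out, weights = attention(x, W_q, W_k, W_v)
print(weights[2].round(2))  # how much "forest" attends to each word
```

Decoder models like ChatGPT also apply a causal mask so each word only attends to earlier ones; since "forest" comes last, it can still attend to "green".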

AI models working with images operate similarly, but instead of words, they process patches of pixels, which also interact with each other to construct objects and other structures within the image. But video is a totally different beast.
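Turning an image into a sequence of "patch tokens" is essentially a reshape. A sketch assuming ViT-style non-overlapping 16×16 patches on a 224×224 RGB image (common defaults, chosen here for illustration):

```python
import numpy as np

def patchify(image, patch):
    """Split an (H, W, C) image into non-overlapping patch vectors of shape (num_patches, patch*patch*C)."""
    H, W, C = image.shape
    assert H % patch == 0 and W % patch == 0, "image size must be divisible by the patch size"
    patches = image.reshape(H // patch, patch, W // patch, patch, C)
    patches = patches.transpose(0, 2, 1, 3, 4)  # (patch_rows, patch_cols, patch, patch, C)
    return patches.reshape(-1, patch * patch * C)

image = np.random.default_rng(0).random((224, 224, 3))
tokens = patchify(image, 16)
print(tokens.shape)  # (196, 768)
```

Each of the 196 rows is one patch, flattened; a learned linear layer then turns it into the vector that attention operates on.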

In video, attention cannot be applied only at the image level (videos are sequences of images known as ‘frames’). If you apply attention only that way, the model loses temporal coherence because it cannot make, for lack of a better term, different frames in the video talk to each other. Therefore, we perform what we call ‘spatiotemporal’ attention, in which the attention mechanism is applied not only within each frame but also across past frames.

Simply put, each patch of pixels will attend not only to other patches in its frame, but also to patches in past frames. Therefore, if the current frame shows a gorilla, the model must ensure that this gorilla is coherent with the gorilla shown in past frames (e.g., if the gorilla was behind a wall, it cannot instantly appear in front of it).

Consequently, when generating new frames of the video, spatiotemporal attention ensures that new generations are not only structurally coherent (the frame depicts something logical) but also temporally coherent (logical based on previous frames).
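One common way to express "attend within the current frame and to past frames, but never to future ones" is a frame-level causal mask over all patch tokens. A minimal sketch, assuming full (unfactorized) attention over every patch of every frame; many video models factorize this into separate spatial and temporal steps for efficiency, and this is not a description of Genie 3's actual architecture:

```python
import numpy as np

def spatiotemporal_causal_mask(num_frames, patches_per_frame):
    """True where query patch i may attend to key patch j: same frame or any earlier frame."""
    frame_of = np.repeat(np.arange(num_frames), patches_per_frame)  # frame index of each token
    return frame_of[:, None] >= frame_of[None, :]

mask = spatiotemporal_causal_mask(num_frames=3, patches_per_frame=2)
print(mask.astype(int))
```

Disallowed positions are then set to negative infinity in the attention scores before the softmax (e.g., `np.where(mask, scores, -np.inf)`), so each patch mixes information only from its own frame and earlier ones.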

But Genie takes it a step further: these newly generated frames are conditioned not only on past frames but also on the real-time decisions of the user interacting with the world the model is creating.

Using the gorilla example (assuming it’s a playable character the user is controlling), the gorilla will only appear in front of the wall if the user guides it through the door. In layman’s terms, new generations are not only conditioned on spatiotemporal coherence, but also on the real-time decisions of the user who decides what happens next, adding a new level of complexity for the model.
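The control flow can be sketched as an autoregressive loop in which each new frame is predicted from the frame history plus the user's latest action. In the toy version below, a hand-written rule stands in for the learned network (a real world model predicts pixels with a large neural network); the `predict_next_frame` function, the grid world, and the action names are all hypothetical:

```python
import numpy as np

MOVES = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1), "stay": (0, 0)}

def predict_next_frame(history, action):
    """Toy stand-in for a learned model: conditions on the frame history
    (here, only the most recent frame) and on the user's action."""
    last = history[-1]
    r, c = np.argwhere(last == 1)[0]                    # where the character was
    dr, dc = MOVES[action]
    nr = min(max(r + dr, 0), last.shape[0] - 1)         # stay inside the world
    nc = min(max(c + dc, 0), last.shape[1] - 1)
    frame = np.zeros_like(last)
    frame[nr, nc] = 1
    return frame

start = np.zeros((5, 5), dtype=int)
start[2, 2] = 1
history = [start]
for action in ["right", "right", "up"]:                 # actions arrive in real time
    history.append(predict_next_frame(history, action))
print(np.argwhere(history[-1] == 1)[0])  # [1 4]
```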

And the fact that this highly complex prediction dance works at all is a testament to how impressive Genie 3 is.

Closing Thoughts

This has been a great week for small models, which might be ready to become important again (they are strategically fundamental, even if we have not yet realized it as an industry).

On the money side, AI continues to mature, which has both positive and negative connotations. The good news is that the market is so relevant that it is forcing countries like China to open up in search of scarce talent, while gambling is also gaining a growing presence. And while VC money doesn’t appear to be slowing (quite the contrary), the party can only last so long: at this rate, VCs will soon run out of money to deploy.

Without VC support, cash-burning startups may run out of money months or even weeks after their previous round, with no one left to fund them. With data center companies already relying heavily on bank loans, private credit funds (higher-risk lending), and even special-purpose vehicles that trade instant cash for future cash flows, this kind of debt-driven financing could soon become the norm. Money is still money, but this could give the entire industry a ‘bubble smell’ nobody wants.

On a final note, the architecture that generated so much excitement over the last few weeks, the HRM, turned out to be something of a false alarm; for an industry that seems to embody innovation like AI does, the underlying tech is surprisingly static, with the same few breakthroughs, some several decades old, forming the foundation of this entire space and very few recent innovations actually delivering.


For business inquiries, reach out to me at [email protected]