THEWHITEBOX

TLDR;

Welcome back! This week, we have a long list of fascinating news, from amputated robots that still move, great models from both the US and China, and even a floating power plant; yes, this newsletter is that varied.

Enjoy!

THEWHITEBOX

Things You’ve Missed By Not Being Premium

On Tuesday, we looked at a wide range of news, including:

deep dives into xAI and Anthropic’s businesses and struggles,

the different approaches to robotics coming from China and the US,

investors making stupid decisions (again),

and of course, technological improvements, mainly open-source but also including the new efficiency king, Grok 4 Fast.

Subscribe today and get double the insights into your inbox every week!


WORLD MODELS

Meta Presents the First Coding World Model

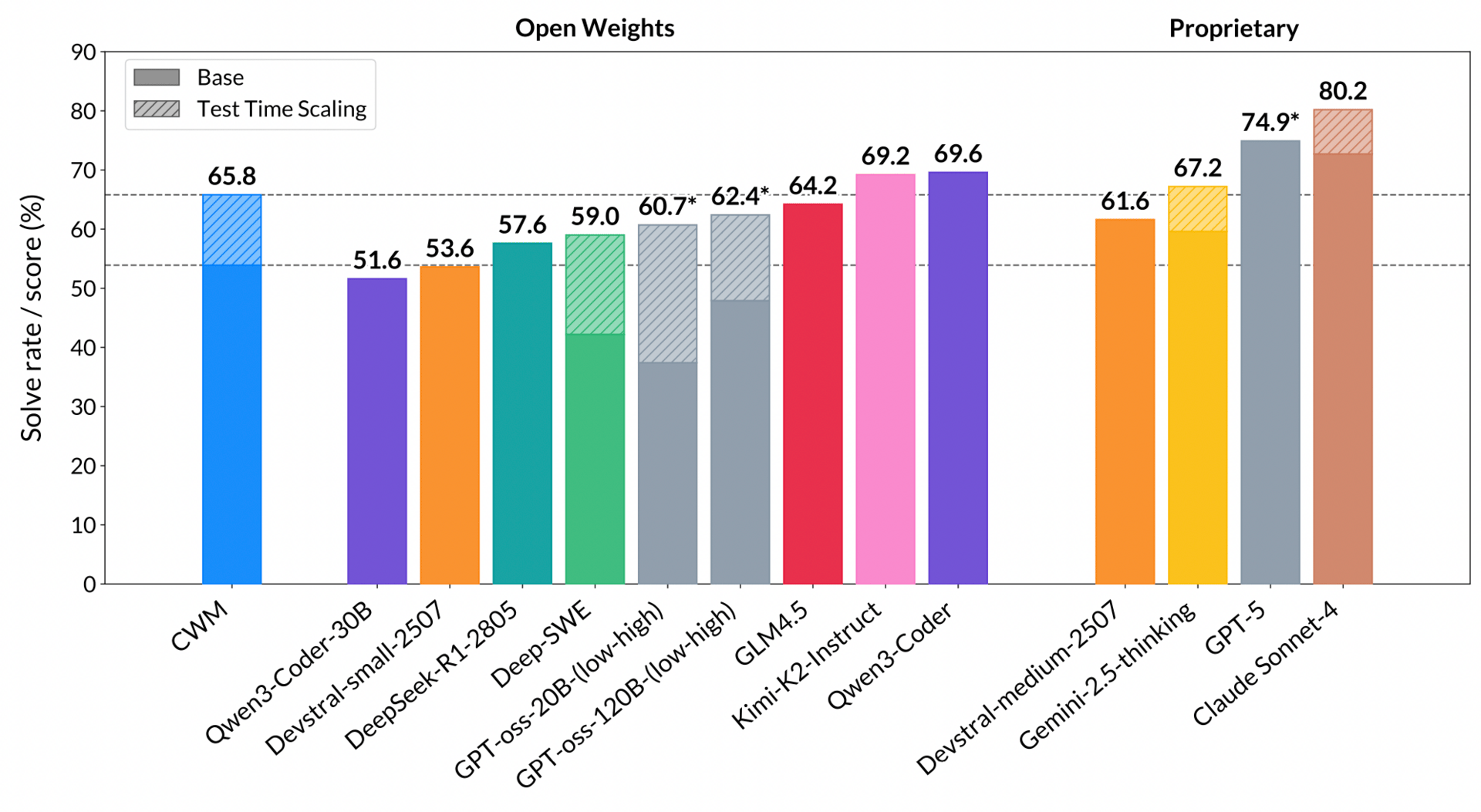

Meta’s Superintelligence Lab, the new team created after the massive Llama 4 failure that led Mark Zuckerberg to panic and poach dozens of top researchers from competing labs, has presented its first model: CWM, standing for Coding World Model.

The model posts strong benchmark results for its size (just 32 billion parameters, far smaller than frontier models), competing in many respects with top models like Gemini 2.5 Pro.

This might sound like just another coding LLM, but it’s different in many respects. The most significant change stems from the training procedure.

Typically, models are trained using a technique known as ‘imitation learning.’ They are given vast amounts of data (code in this case) and have to learn to imitate it, replicating it in the literal sense. This is akin to teaching kids to write well using dictation exercises. Eventually, the kid learns how to write via rote repetition.

This works, but as you may imagine, it isn’t a particularly great way to create intelligence, in the same way most human learning isn’t about copying texts.

Thus, we then add a ‘post-training phase’ in which the model isn’t guided toward the answer. Instead, we give it a task, check the result, and give it a pat on the back when it gets it right.

Here, the model must find its way to the answer, and we help it by providing intermediate signals to indicate whether the model is heading in the right direction (think of the game ‘hot and cold’). This is called reinforcement learning because we reinforce good choices and penalize bad ones.

This is a superior training method to develop the ‘I’ in ‘AI’ because models must actually figure out how to arrive at the answer instead of simply copying it.
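To make the contrast concrete, here is a toy sketch (our own illustration, not how CWM or any Meta model is actually trained). A "model" picks one of four answers. The imitation learner is shown the correct answer at every step, while the REINFORCE learner (a classic policy-gradient RL method) only samples answers and receives a reward of 1 when it happens to be right. For simplicity, it uses a single final reward rather than the intermediate "hot and cold" signals described above; all function names are hypothetical.

```python
import math
import random


def softmax(logits):
    """Convert raw scores into a probability distribution."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def grad_log_prob(logits, action):
    """Gradient of log softmax(logits)[action] w.r.t. the logits: onehot(action) - probs."""
    probs = softmax(logits)
    return [(1.0 if i == action else 0.0) - p for i, p in enumerate(probs)]


def imitation_step(logits, target, lr=0.5):
    """Imitation: always push probability toward the demonstrated answer."""
    g = grad_log_prob(logits, target)
    return [w + lr * gi for w, gi in zip(logits, g)]


def reinforce_step(logits, reward_fn, baseline, rng, lr=0.5):
    """RL (REINFORCE): sample an answer, score it, reinforce it by (reward - baseline)."""
    probs = softmax(logits)
    action = rng.choices(range(len(logits)), weights=probs)[0]
    reward = reward_fn(action)
    advantage = reward - baseline
    g = grad_log_prob(logits, action)
    return [w + lr * advantage * gi for w, gi in zip(logits, g)], reward


def train(correct=2, n_actions=4, steps=300, seed=0):
    rng = random.Random(seed)
    imitation = [0.0] * n_actions
    rl = [0.0] * n_actions
    baseline = 0.0

    def reward_fn(action):
        # The RL learner is never told the answer; it only learns whether it was right.
        return 1.0 if action == correct else 0.0

    for _ in range(steps):
        imitation = imitation_step(imitation, correct)  # sees the answer every step
        rl, r = reinforce_step(rl, reward_fn, baseline, rng)
        baseline = 0.9 * baseline + 0.1 * r  # running average reduces gradient variance
    return softmax(imitation), softmax(rl)


imitation_probs, rl_probs = train()
print([round(p, 3) for p in imitation_probs])
print([round(p, 3) for p in rl_probs])
```

Both learners end up favoring the right answer, but only the imitation learner was ever told what it was; the RL learner had to discover it by trial and error.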

The point I’m getting at is that the tables are turning: the latter training method is taking up a growing share of total training.

In other words, the ‘guiding stage’ where models rotely imitate data is less and less important, and the ‘figure it out yourself’ phase is becoming more relevant (Grok 4 was the first model to allocate more compute to RL than to imitation learning).

And what does this mean for the industry?

In my opinion, this means we’re still very early: much of the progress we’ve seen so far is not indicative of future progress (as we’re now using a fundamentally different approach), and it could actually understate AI’s real potential, which could soon be realized.

ADOPTION

How Good Are AIs at Real Problems?

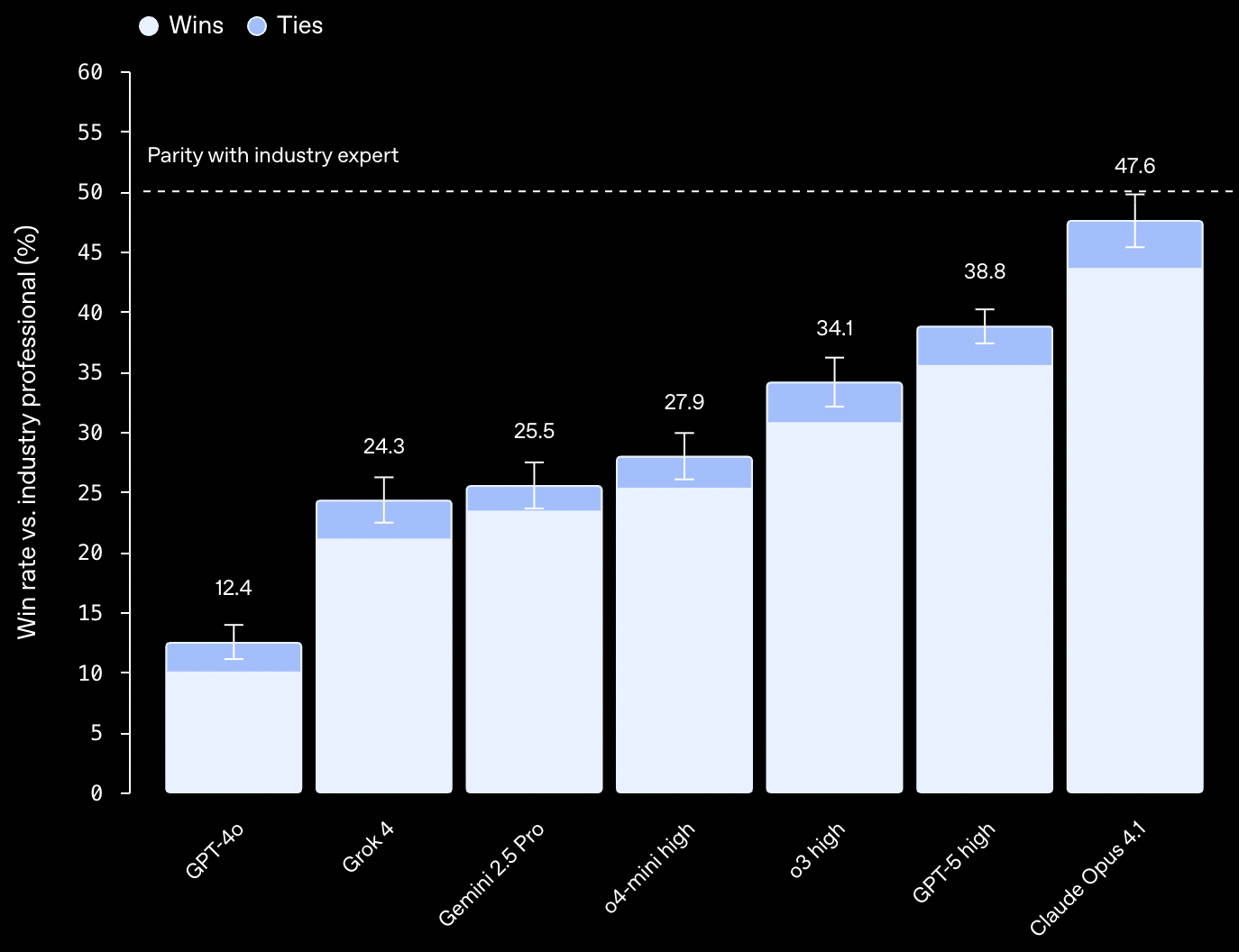

GDPval is a new evaluation from OpenAI that tests AI models on economically valuable, real-world tasks across 44 occupations, drawn from industries that contribute significantly to GDP.

Tasks are realistic deliverables (documents, slides, diagrams, etc.) crafted and reviewed by domain experts. Experienced professionals then grade the models’ outputs by comparing them blindly against human deliverables.

Early results show frontier models nearing expert quality, sometimes outperforming experts on certain task types, and doing so much faster and more cheaply (in inference cost) than human experts, with Anthropic’s Claude Opus 4.1 posting the best results overall.
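As a rough illustration of blind pairwise grading, the sketch below hides which deliverable came from the model, records the grader’s preference, and tallies a win rate and a "win or tie" rate (OpenAI reports GDPval results in terms of how often model outputs are rated better than or as good as the expert’s). The function names are hypothetical; this is not OpenAI’s grading pipeline.

```python
import random
from collections import Counter


def blind_pair(model_deliverable, expert_deliverable, rng):
    """Shuffle the two deliverables so the grader can't tell which one is the model's."""
    pair = [("model", model_deliverable), ("expert", expert_deliverable)]
    rng.shuffle(pair)
    shown = [content for _, content in pair]      # what the grader sees
    hidden_labels = [label for label, _ in pair]  # kept away from the grader
    return shown, hidden_labels


def to_judgment(choice, hidden_labels):
    """choice: index (0 or 1) of the preferred deliverable, or None for a tie."""
    if choice is None:
        return "tie"
    return "win" if hidden_labels[choice] == "model" else "loss"


def summarize(judgments):
    """Aggregate per-task judgments from the model's point of view."""
    counts = Counter(judgments)
    total = len(judgments)
    if total == 0:
        raise ValueError("no judgments to summarize")
    return {
        "win_rate": counts["win"] / total,
        "win_or_tie_rate": (counts["win"] + counts["tie"]) / total,
    }


shown, labels = blind_pair("model draft", "expert draft", random.Random(1))
print(shown)  # the grader sees two drafts in random order
print(summarize(["win", "tie", "loss", "loss"]))
```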

TheWhiteBox’s takeaway:

This is absolutely great. It’s about time AIs started being evaluated on their real economic value, rather than on how well they perform in competitive programming contests that nobody asked for.

While OpenAI acknowledges limitations (one-shot tasks, lack of interactivity, task ambiguity) and plans to expand GDPval’s scope and realism over time, the approach is worthwhile because it incentivizes AI Labs to optimize for real value, not just numbers on a blackboard.

AGENTS

MCP Craze Continues with Apple and Google

The Model Context Protocol (MCP), a new protocol that enables AI agents to use external tools in a standardized way, is experiencing massive adoption. Now, Google and Apple join the fray.

Google has introduced another MCP server, this time for Chrome Developer Tools, meaning your coding agent can now see and debug its own software creations in Chrome.

Meanwhile, Apple is reportedly going to introduce an MCP layer across its devices, allowing apps to expose features to agents through its App Intents framework. For instance, apps on an iPhone could offer their services to the iPhone’s main agent (Apple Intelligence), which could then use them without having to interact with the app itself (e.g., Apple’s AI model using MCP to connect to the Gmail app to read and reply to an email).

TheWhiteBox’s takeaway:

While MCP is not free of issues, especially security-wise, it’s simply a necessary abstraction for AI agents to work properly.

Instead of having to do everything themselves, agents can focus on planning and orchestration, while the actual execution is carried out by the tools (in Apple’s case, the other apps on the iPhone), reducing complexity and the risk of failure.
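Under the hood, MCP messages are JSON-RPC 2.0, and the spec defines a `tools/call` method whose params carry a tool `name` and its `arguments`. The sketch below shows that division of labor: the agent only composes the request, while the tool side executes it and returns MCP-style text content. The tool name and inbox data are hypothetical, the transport layer (stdio or HTTP) is omitted, and this is not Apple’s or Google’s implementation.

```python
import json
from typing import Callable, Dict

# Tool registry on the "app" side (hypothetical tool names, for illustration only).
TOOLS: Dict[str, Callable[..., str]] = {}


def tool(name: str):
    """Decorator that registers a function as a callable tool."""
    def register(fn):
        TOOLS[name] = fn
        return fn
    return register


@tool("list_unread")
def list_unread(limit: int = 2) -> str:
    inbox = ["Invoice due Friday", "Lunch moved to 1pm", "Flight check-in open"]  # fake data
    return "\n".join(inbox[:limit])


def make_call(request_id: int, name: str, arguments: dict) -> str:
    """What the agent sends: a JSON-RPC 2.0 'tools/call' request."""
    return json.dumps({"jsonrpc": "2.0", "id": request_id, "method": "tools/call",
                       "params": {"name": name, "arguments": arguments}})


def handle(raw: str) -> str:
    """What the tool side does: run the named tool and wrap its output as MCP content."""
    req = json.loads(raw)
    reply = {"jsonrpc": "2.0", "id": req.get("id")}
    fn = TOOLS.get(req.get("params", {}).get("name"))
    if req.get("method") != "tools/call":
        reply["error"] = {"code": -32601, "message": "Method not found"}
    elif fn is None:
        reply["error"] = {"code": -32602, "message": "Unknown tool"}
    else:
        text = fn(**req["params"].get("arguments", {}))
        reply["result"] = {"content": [{"type": "text", "text": text}], "isError": False}
    return json.dumps(reply)


response = json.loads(handle(make_call(1, "list_unread", {"limit": 2})))
print(response["result"]["content"][0]["text"])
```

The agent never touches the inbox directly; it only names a tool and its arguments, which is what keeps the agent’s job (planning) separate from the app’s job (execution).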

For Apple, it’s also a matter of leveraging its distribution. Its app ecosystem is a multi-billion-dollar opportunity it simply cannot miss. MCP integration automatically hands agents access to millions of applications, a way to make Apple Intelligence agentic with exactly zero investment beyond making its AI model MCP-compatible.

With their great hardware and huge distribution, they have all they need to succeed. The problem? They are Apple and suck at software, which is precisely the area where others shine.

FRONTIER RESEARCH

Alibaba’s First Frontier Model

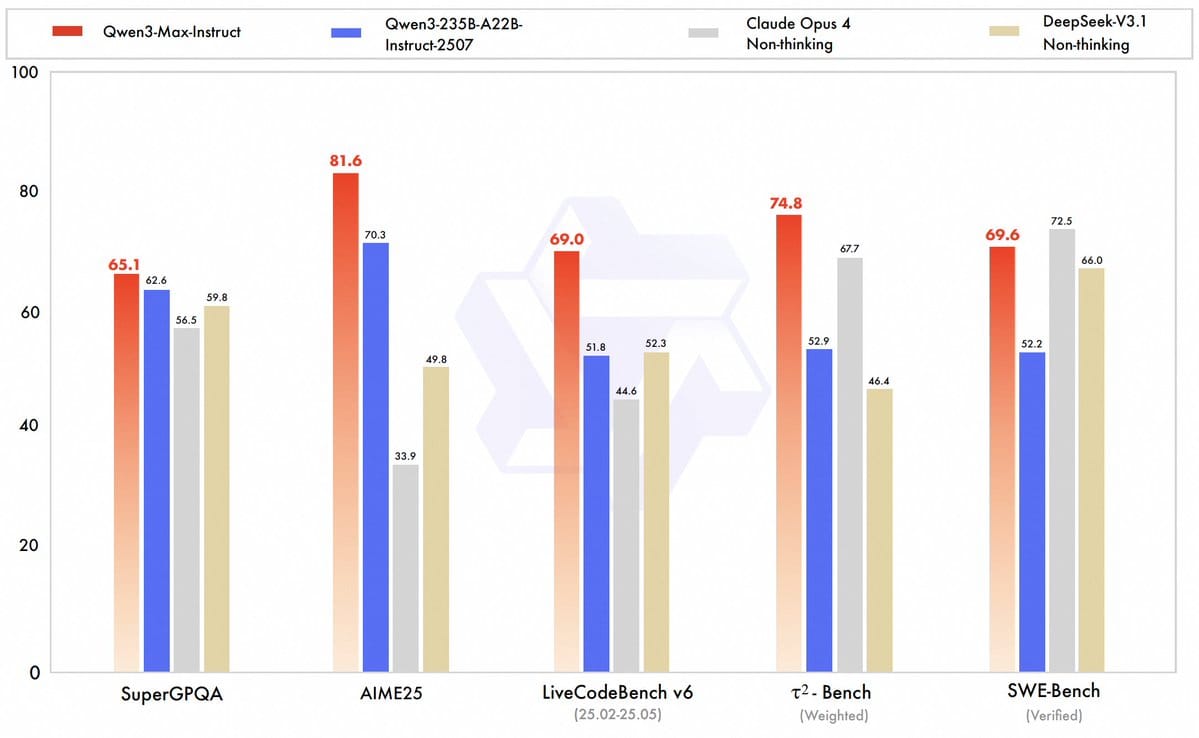

Alibaba, the Chinese e-commerce giant, is also a leading AI lab, as evidenced by its Qwen model series, arguably the best open models in the world. It has now finally developed its first true frontier AI model, Qwen 3 Max, which matches most top US models across benchmarks and vibe evaluations.

Interestingly, despite not being a reasoning model (it’s not trained to generate chains of thought to answer every question, a technique that can make models break problems into steps to facilitate problem-solving), it is surprisingly “smart.”

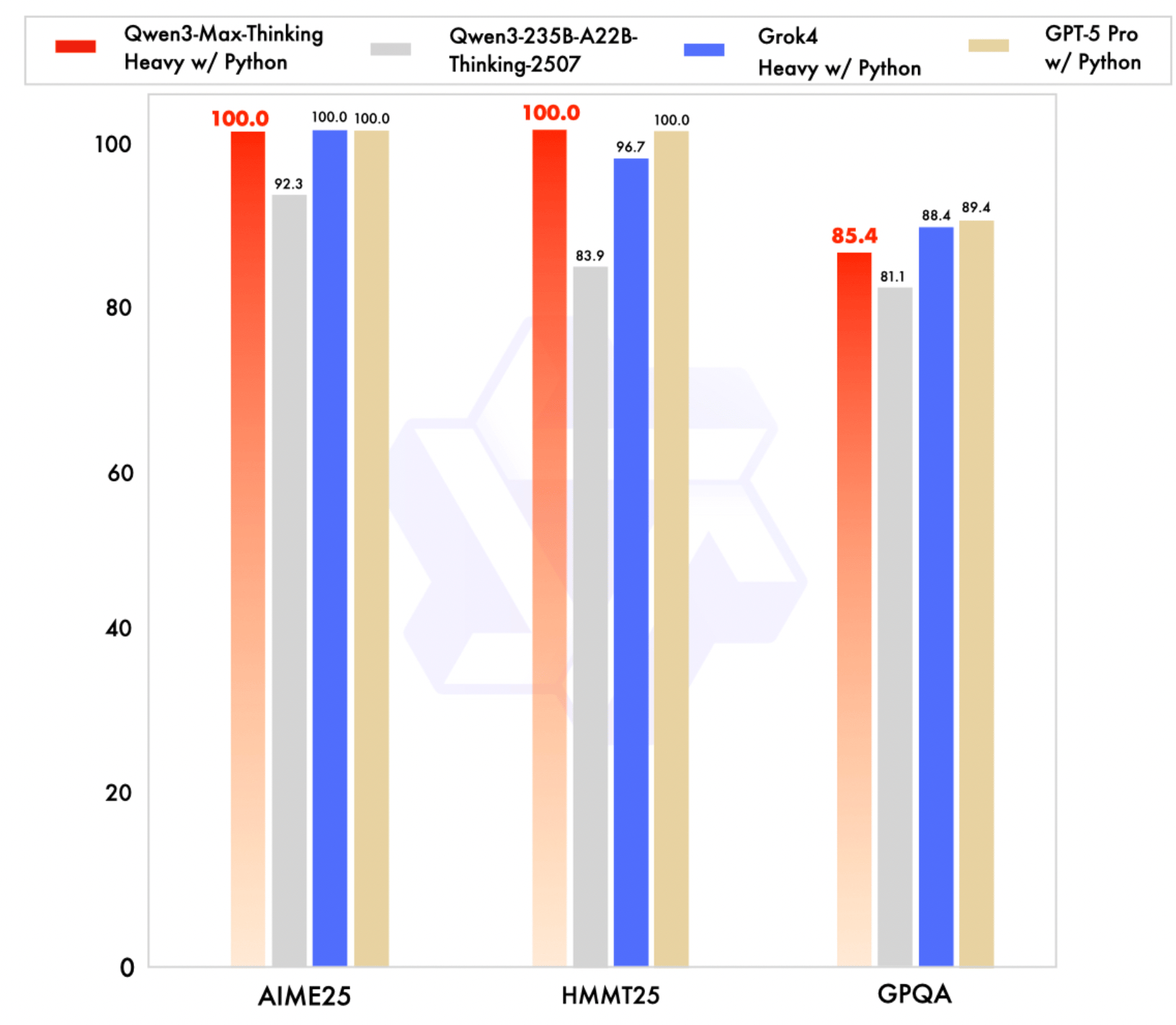

And when thinking is dialed up (turning it into a reasoning model), it competes with GPT-5 Pro and Grok 4 Heavy, two systems that are, in fact, conglomerates of models working together, or at least running parallel executions to pick the best solution (Qwen 3 Max’s Thinking mode most likely does the same).

TheWhiteBox’s takeaway:

The story that surprised no TheWhiteBox reader. We’ve been calling the arrival of Chinese frontier models for more than a year.

And while DeepSeek R1 set the stage, offering frontier-like performance relative to the models of its time (early 2025), this model competes with today’s true frontier, including systems that run in a multi-agent setting (several Grok 4 models collaborating) or a best-of-N approach (parallel executions by several GPT-5 models), and does so remarkably well.
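The internals of GPT-5 Pro and Grok 4 Heavy are not public, so the following is only a generic best-of-N sketch: sample N answers and keep the one a scorer (e.g., a verifier or reward model) rates highest, or, without a scorer, the most common final answer (majority voting, also called self-consistency). In a real system, `generate` would call a model at nonzero temperature; here it is a stand-in.

```python
from collections import Counter
from typing import Callable, Optional


def best_of_n(generate: Callable[[], str], n: int,
              score: Optional[Callable[[str], float]] = None) -> str:
    """Sample n candidate answers and keep one.

    With a scorer (e.g., a verifier or reward model), keep the highest-scoring
    candidate; without one, fall back to majority voting over final answers.
    """
    if n < 1:
        raise ValueError("n must be at least 1")
    candidates = [generate() for _ in range(n)]  # these calls could run in parallel
    if score is not None:
        return max(candidates, key=score)
    return Counter(candidates).most_common(1)[0][0]


# Stand-in for five sampled model answers to the same question.
samples = iter(["41", "42", "42", "17", "42"])
print(best_of_n(lambda: next(samples), n=5))  # prints 42 (majority vote)
```

The trade-off is simple: N parallel runs cost roughly N times the compute of one, in exchange for fewer one-off mistakes.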

Importantly, while Grok 4 has been estimated to have cost around $500 million, it’s safe to say Qwen 3 Max did not cost that much, mainly because Alibaba doesn’t have the compute or the capital to do so.

Beyond China, it’s crystal clear to me that AI by itself will never be a moat. As always, moats will come from superior product experiences and great distribution. To me, that signals this industry is extremely overcapitalized and overvalued, with companies racking up billion-dollar valuations for crafting a couple of good prompts on top of an OpenAI API.

You don’t need hundreds of millions of dollars to do that. That means you, dear SF founder who feels like a billionaire for owning 20% of a $5 billion startup that didn’t exist two years ago, have no moat and will soon face five-person competitors whose revenue targets are hundreds of times smaller, because they never raised at a valuation that was unhealthy from the start (or never raised at all).

And the best thing of all is that this model is free. This industry is going to be a bloodbath.

ENERGY

China Presents First Floating Wind Power Plant

Although not explicitly an AI news story, China is about to enter mass production of a 1 megawatt (MW) floating wind power plant, a remarkably innovative new type of power plant.

That’s enough power to supply electricity to over 800 US homes (~830 at an average of 10,500 kWh per year, assuming it runs at full output around the clock).

Europeans consume around a third of that, so it would be enough for ~2,500 homes in that case.

When it comes to energy, is there anyone really daring to come close to China at this point?

TheWhiteBox’s takeaway:

Super cool technology, but this is just 1 MW. You would need a thousand of these, yes, a thousand, to provide enough power to new-generation data centers like Elon’s Colossus 2, Microsoft’s Fairwater, or OpenAI’s Abilene data center.

Thus, I would love to know how scalable this is. It seems totally pointless unless they have a way to scale these things to hundreds of MWs. Still super cool, so I thought it was worth sharing.
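The sizing arithmetic in this story is easy to check. The sketch below assumes 8,760 hours in a year and treats a 1 GW campus as the target implied by the "thousand of these" estimate. The 35% capacity factor is a hypothetical figure for illustration, not a spec of this turbine: wind rarely runs at full output, and matching average output still says nothing about the steady, round-the-clock supply a data center needs.

```python
import math

HOURS_PER_YEAR = 8760


def annual_kwh(capacity_mw: float, capacity_factor: float = 1.0) -> float:
    """Energy produced in a year; capacity_factor=1.0 means full output around the clock."""
    return capacity_mw * 1000 * HOURS_PER_YEAR * capacity_factor


def homes_supplied(capacity_mw, kwh_per_home_per_year, capacity_factor=1.0):
    """How many households' yearly consumption the annual output covers."""
    return annual_kwh(capacity_mw, capacity_factor) / kwh_per_home_per_year


def units_needed(target_mw, unit_mw=1.0, capacity_factor=1.0):
    """How many units are needed for their *average* output to match a target load."""
    return math.ceil(target_mw / (unit_mw * capacity_factor))


print(round(homes_supplied(1, 10_500)))            # 834 (US-style household)
print(round(homes_supplied(1, 3_500)))             # 2503 (a third of that consumption)
print(units_needed(1_000))                         # 1000 (1 GW campus, ideal output)
print(units_needed(1_000, capacity_factor=0.35))   # 2858 (hypothetical 35% capacity factor)
```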

SOVEREIGN AI

OpenAI For Germany

OpenAI, SAP, and Microsoft have teamed up to launch “OpenAI for Germany”, a sovereign-cloud offering aimed at bringing AI to Germany’s public sector while respecting strict standards around data sovereignty, security, and legal compliance.

The service will be hosted via SAP’s Delos Cloud (powered by Microsoft Azure) and is scheduled to roll out in 2026.

It will allow German government bodies, administrations, and research institutions to use AI tools in areas such as records management, administrative data analysis, and workflow integration, all the while ensuring that the data remains within controlled, local boundaries.

To support this, SAP intends to build out “significant” onshore hardware in Germany (initially targeting 4,000 GPUs) and may expand further depending on demand. The initiative aligns with Germany’s ambition to have AI account for up to 10% of its economic value creation by 2030 and supports broader goals of digital sovereignty in Europe.

TheWhiteBox’s takeaway:

AI Labs continue to mature. Enterprises generally do not trust these labs, and government entities even less so, especially in the highly regulated EU environment. Therefore, partnerships that generate trust around these solutions are necessary.

What sounds downright laughable is the German government's naivety in thinking that AI will account for 10% of the country’s value creation by 2030.

Not because the technology isn’t valuable or ready, but because Germany, like most European countries, including mine (which is way worse in this respect), is a master of talking a big game and doing shit all, instead using innovations as an opportunity to add yet more bureaucracy for no apparent reason beyond justifying the worth of… well, bureaucrats.

If Germany is so enthusiastic about AI, why is it that the only AI Lab to emerge from there, Aleph Alpha, is absolutely irrelevant in today’s AI world?

Don’t get me wrong, I’m not against Germans; I’m talking about Europe as a whole. Why is it that the only relevant AI Lab in Europe, Mistral, is unequivocally inferior to at least ten other AI Labs from the US and China?

Germany and Europe as a whole need to get their act together; no European in their right mind believes any of these commitments will be remotely accurate.

I desperately want to say good things about Europe. But I just can’t.

VC

Cohere Raises $100 Million at $7 Billion

Cohere, Canada’s AI champion, has announced another funding round: $100 million at a $7 billion valuation, $200 million above its previous $6.8 billion valuation.

What might seem like stellar growth (the company is six years old) pales in comparison with its competitors’ success. For instance, xAI is poised to reach a $200 billion valuation after just two years of existence.

Importantly, a significant portion of the money appears to be coming from AMD, NVIDIA’s arch-rival. So, what to make of this announcement?

TheWhiteBox’s takeaway:

A partnership between these two companies makes a lot of sense. The crucial point is the match between Cohere’s focus on smaller models and AMD’s lower price tag and smaller servers.

Cohere is struggling to compete; there’s no point in denying that. Revenue is small, distribution is smaller, and general enterprise skepticism toward AI, the market Cohere is focused on, doesn’t help.

Similarly, AMD is struggling to sell chips to larger labs. Its limited scale-up (how many GPUs it can pack into each server), networking gear (it has no alternative to NVIDIA’s NVSwitch on the market), and software (ROCm, AMD’s software stack, is inferior to NVIDIA’s CUDA) make it a viable option only for smaller models, as frontier models benefit from huge scale-ups and data-center-sized clusters where NVIDIA eats AMD for breakfast (for now).

Coincidentally, where AMD shines, smaller models, is precisely what Cohere focuses on, making it the perfect recipe for Cohere.

So, Cohere gives AMD a client that fits AMD’s current reality, one potentially worth hundreds of millions in revenue. Meanwhile, Cohere gains access to accelerators that are cheaper than NVIDIA’s heavily marked-up ones while it finds a way to grow its revenue.

LIQUID MODELS

Moonshot’s ‘OK Computer’ Agent

Chinese AI Lab Moonshot has released its first agentic product, ‘OK Computer’, based on its series of very powerful Kimi models.

The tool is allegedly capable of doing a multitude of amazing things, from creating entire software products to drafting slides, processing millions of Excel rows, and more.

TheWhiteBox’s takeaway:

The video presentation is absolutely incredible. But you know how the song goes in these cases: skepticism is the word.

That said, even if the marketing campaign exaggerates the tool’s real power, it’s an elegant glimpse of the world we’re moving toward: the declarative paradigm I’ve always discussed, a future where humans are limited only by their own imagination and simply declare what needs to be built.

The consequences, in my book, are clear as day:

A progressive decline in the value of consulting, financial services, legal work, and other professional services, as much of that work can be carried out by AIs. These professionals will still be needed, but not for mundane, execution-heavy tasks; your value in the services business will be measured by the insights you provide, not the billable hours you work.

A potential era of ‘fast-fashion’ software, a term Sam Altman himself used, in which the value and lifespan of software products decrease exponentially, much like how clothing trends come and go. Time-to-product decreases, so competition increases as barriers to entry fall. In a not-so-distant future, even your current customers will be “competitors,” relying more on their own software engineering capabilities.

Most AI wrapper startups in this market will inevitably fail, as foundation model labs training the AI models will verticalize upward and cannibalize the companies building on top of their models.

With AI, it’s always about what it’ll be instead of what it is right now.

AGENTS

Perplexity and OpenAI’s Personal Assistants

In the current state of affairs, there are only a few powerful use cases. As there aren’t many, all AI companies jump on them without hesitation.

One of those use cases is inbox management, where agents read, summarize, or reply to messages in your ever-cluttered inbox.

One company that’s probably too accustomed to jumping on anything it sees as potentially valuable is Perplexity, which has just released exactly that, after products like ChatGPT already offered similar features (Gmail is one of the few ‘connectors’ you can integrate with ChatGPT).

To enable it, head over to the Email Assistant hub and sign in to Gmail or Outlook (Max users only).

Similarly, OpenAI has just announced ChatGPT Pulse, a new feature that connects models to your various apps to provide timely updates useful in your everyday life.

TheWhiteBox’s takeaway:

There isn’t much to say about the new ChatGPT feature. It seems nice; let’s see how it works.

I do have a lot to say about the Perplexity announcement, especially regarding the company’s future. It comes days after The Information reported that Perplexity’s ad business was struggling. If you’re a regular reader of this newsletter, this is no surprise; I have shared my extreme skepticism about the viability of ad businesses run on top of chat assistants like Perplexity or ChatGPT (for the latter, it seems a matter of when, not if).

The reason is simple: how on Earth do you do it? It’s one thing to show ten blue links and casually add three sponsored ones on top based on the user’s request; it’s another for an AI model to process your request and actively choose sponsored sources over non-sponsored ones.

The main issue is that these models are autonomous; they aren’t following scripted rules, but instead optimize which source to choose in ways that we can’t predict most of the time.

So, while you can agree with a publisher on ‘$10 per 1,000 views’, as a model provider you can’t easily predict how many model inferences you will need to deliver those 1,000 views. If it’s 1,005, great; but if it’s 10,000, the $10 you’re getting could be dwarfed by the cost of those inferences.
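To see how quickly the economics can flip, here is a back-of-the-envelope sketch of the ‘$10 per 1,000 views’ deal. The cost per inference is a hypothetical placeholder, not a real figure from any provider; the point is the break-even logic, not the exact numbers.

```python
def campaign_margin(payment: float, inferences: int, cost_per_inference: float) -> float:
    """Profit on a '$X per 1,000 views' deal once the inference bill is paid."""
    return payment - inferences * cost_per_inference


def break_even_inferences(payment: float, cost_per_inference: float) -> float:
    """Maximum number of inferences the deal can absorb before it loses money."""
    return payment / cost_per_inference


COST = 0.005  # hypothetical $ per inference, for illustration only

print(round(campaign_margin(10, 1_005, COST), 2))   # positive: the deal pays for itself
print(round(campaign_margin(10, 10_000, COST), 2))  # negative: inference costs swamp the $10
print(round(break_even_inferences(10, COST)))
```

Because the provider doesn’t control how many inferences the model burns before it surfaces the sponsored source, it doesn’t control which side of the break-even line it lands on.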

Perplexity’s current model is based on attribution, meaning it employs a revenue-sharing mechanism: Perplexity allocates a percentage of its subscription revenue to publishers whose content is used as a source. But based on the report mentioned above, publishers are far from happy.

The only possible route toward a sustainable ad-based system for these companies is an auction-based marketplace, similar to the current system, in which publishers bid for relevance. However, the complexity lies in the fact that the model must be optimized for this specific marketplace.

In other words, publishers will have to set a range of parameters (CPM, CPC, refund policy, and so on) that the agent will weigh in real time when choosing which source to use. This sounds great, but it leads to the inevitable: the model will have to be RL-trained on auction-related data, which is basically nonexistent.
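For intuition, here is a minimal sketch of what such a marketplace could look like, loosely modeled on the quality-weighted second-price auctions used in search ads. Everything here is hypothetical: the field names, the relevance floor, and especially the assumption that the model can produce a trustworthy relevance score, which is precisely the hard part that would require the RL training described above.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Offer:
    publisher: str
    cpc_bid: float    # what the publisher offers per click/citation (hypothetical field)
    relevance: float  # model-estimated relevance to the user's query, in [0, 1] (assumed)


def run_auction(offers: List[Offer], min_relevance: float = 0.5,
                reserve_price: float = 0.0) -> Tuple[Offer, float]:
    """Quality-weighted second-price auction (in the spirit of search-ad GSP).

    Rank by bid * relevance; the winner pays just enough to keep its rank,
    never more than its own bid.
    """
    eligible = [o for o in offers
                if o.relevance >= min_relevance and o.relevance > 0
                and o.cpc_bid >= reserve_price]
    if not eligible:
        raise ValueError("no source is relevant enough to show")
    ranked = sorted(eligible, key=lambda o: o.cpc_bid * o.relevance, reverse=True)
    winner = ranked[0]
    if len(ranked) == 1:
        return winner, reserve_price
    runner_up = ranked[1]
    # Smallest bid at which the winner would still outrank the runner-up.
    price = runner_up.cpc_bid * runner_up.relevance / winner.relevance
    return winner, max(price, reserve_price)


offers = [
    Offer("site_a", cpc_bid=2.0, relevance=0.9),
    Offer("site_b", cpc_bid=3.0, relevance=0.5),
    Offer("site_c", cpc_bid=5.0, relevance=0.2),  # pays most, but filtered as irrelevant
]
winner, price = run_auction(offers)
print(winner.publisher, round(price, 2))  # site_a 1.67
```

The relevance floor matters: without it, the highest bidder would win even when its content barely answers the question, which is exactly the behavior that erodes trust in an assistant.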

This is expensive, risky, and probably very challenging, which explains why, almost three years into the ChatGPT era, the platform still isn’t monetizing its free users, even though they represent more than 95% of its roughly 700 million weekly users.

On a final note, Microsoft appears to be working on an alternative idea: a publisher content marketplace. Instead of ads, it intends to let publishers sell their content, with AIs purchasing it for use (for training or sourcing). This could work and would be a straightforward deal for publishers. Sadly, it would put an even larger cost burden on companies that desperately need to find a way to make money from their free tiers.

Bottom line, one of the most obvious insights for this industry is that the technology is legitimate, but very, very hard to monetize, let alone profit from.

ROBOTICS

Unstoppable Robots (Literally)

A robotics AI Lab I had not heard of before, Skild AI, has released a few videos showing what its robots can do… by torturing them.

Specifically, they are designed to be unstoppable, even when faced with the loss of limbs, amputations, or other unforeseen circumstances.

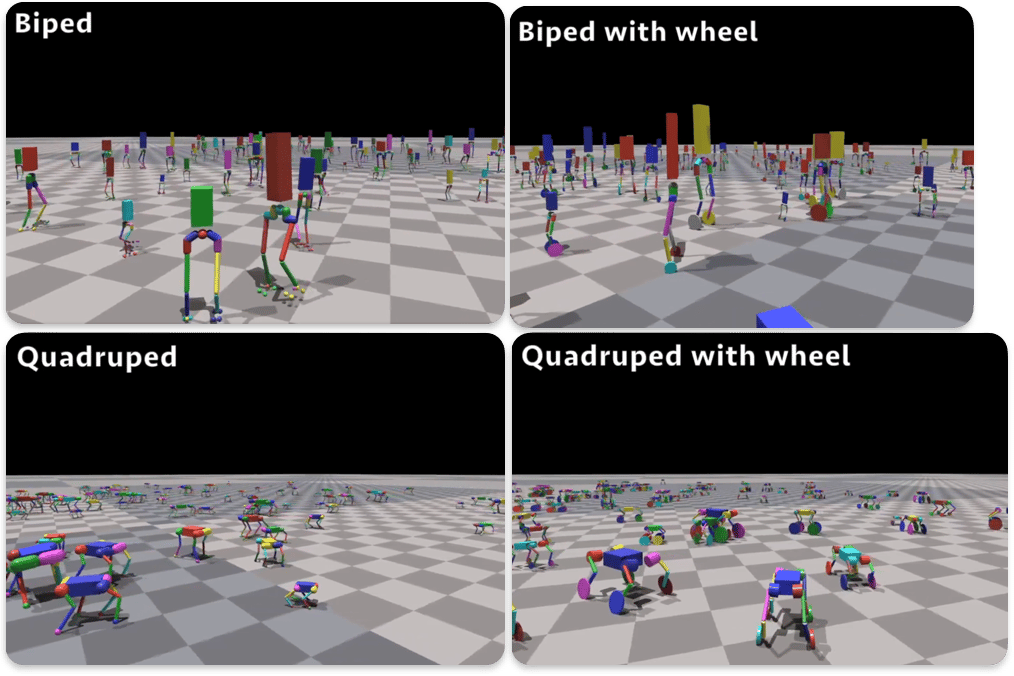

To achieve this, the robots are trained in simulation across many different body configurations, which, in Skild’s words, makes the robot “omni-bodied.” In other words, the robot is trained not on a single body but on many, depending on which limbs or extremities it has each time.

Training in simulation lets them accumulate thousands of hours, or even years, of experience without needing a physical robot. Only once the robots function well are they ‘transferred’ to reality.
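Skild AI hasn’t detailed its method here, so the following is only a generic sketch of the idea: randomizing the robot’s body itself (not just terrain or friction) across simulated episodes, so that a single policy is trained on many bodies. The limb names, ranges, and the choice to give the policy a "which limbs are present" mask are all assumptions for illustration; a policy could instead be expected to infer its body from how it moves.

```python
import random
from dataclasses import dataclass
from typing import List

LIMBS = [f"leg_{i}" for i in range(4)]  # hypothetical quadruped; real robots differ


@dataclass
class BodyConfig:
    active_limbs: List[str]
    mass_scale: float  # another randomized physical parameter


def sample_body(rng: random.Random, max_removed: int = 2) -> BodyConfig:
    """Draw a random body for one simulated episode: drop up to max_removed limbs."""
    n_removed = rng.randint(0, max_removed)
    removed = set(rng.sample(LIMBS, n_removed))
    active = [limb for limb in LIMBS if limb not in removed]
    return BodyConfig(active_limbs=active, mass_scale=rng.uniform(0.8, 1.2))


def body_mask(body: BodyConfig) -> List[float]:
    """1.0 per limb present, 0.0 per missing one (one option: feed this to the policy)."""
    return [1.0 if limb in body.active_limbs else 0.0 for limb in LIMBS]


def episode_schedule(n_episodes: int, seed: int = 0) -> List[BodyConfig]:
    """One freshly sampled body per training episode."""
    rng = random.Random(seed)
    return [sample_body(rng) for _ in range(n_episodes)]


for body in episode_schedule(3):
    print(body_mask(body), round(body.mass_scale, 2))
```

In a full pipeline, each sampled body would be instantiated in the simulator and the same policy would be updated on all of them, which is what pushes it toward controlling bodies it was never specifically built for.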

TheWhiteBox’s takeaway:

Having a robot that still moves after decapitation or amputation is creepy, but it might make sense in areas like warfare.

Besides that, the concept is a very interesting proposal because it could lead to a future where a single AI model controls hundreds of different robots of different shapes and sizes, which would significantly decrease capital costs at the software level.

My first honest reaction when I saw the video was “Future AGI, if you’re watching this, I do not condone this behavior.”

Closing Thoughts

I bet having video demonstrations of amputated robots wasn’t on your list of expected news for the week, but here we are.

My biggest takeaway is that AI Labs still struggle with the most important thing: making money. Models are good, models are interesting, but models are also really bad businesses today. The new training paradigm, RL, might be the key to solving this issue.

In the meantime, we see impressive demos (nobody doubts that), and while OpenAI’s GDPval is a step in the right direction, we can’t claim AI will change the world until we see at least some evidence of it.

Both China and the US are excelling at training models. Now it’s time to show us they can also make money from those models.

Until Sunday!

THEWHITEBOX

Join Premium Today!

If you like this content, by joining Premium, you will receive four times as much content weekly without saturating your inbox. You will even be able to ask the questions you need answers to.

Until next time!


For business inquiries, reach out to me at [email protected]