🚨 PODCAST ALERT 🚨

AI and the Opportunities of Education

Your boy had a recent podcast interview in which he discussed the effects of AI on education. I encourage you to take a listen, not only because it’s a great way to see how I think about AI, but also because it proves once and for all that I’m not an AI myself and that there’s a real human behind this newsletter. Here’s the YouTube link.

THEWHITEBOX

Become a True AI Power User Today

I’m going to shake your world a little bit today. If someone tells you models aren’t evolving, they are lying to you. AIs are static in some respects, but the ways we use them are changing rapidly, and you’re probably running an outdated prompting guide in your head by now.

That is, what worked for prompting models a year ago (or even a few months ago) no longer works. So today, we are fixing that by learning:

What it means to interact with a Generative AI model and what happens when you press ‘Enter’. Not in a technical way (attention mechanism yadda yadda yadda), but more intuitively and deeply: why models answer the way they do, why they like to play it safe, how training regimes are changing the way models “think” and answer, and why you must prompt them in a specific way.

Why prompting still matters a lot, along with my updated tips and tricks, including brand-new examples that will transform your AI game. For instance, a single sentence in a prompt thousands of sentences long turned a very popular model from “evil” to “good” (yes, models can actually be “evil” if prompted the wrong way); we have far more power in our prompts than you may believe.

How to build our self-improving model system. We’ll use Google’s newest product, AntiGravity, and one of their latest research projects to create a ‘compaction’ system that doubles as a ‘metacognition strategizer’: it learns your preferences while also learning to reuse strategies and execution plans that work. No coding is required at all; I’ll provide the templates and configuration, so beyond a few clicks on the screen, it takes minimal effort on your part.

Thus, by the end, you will not only have a better understanding of frontier AI and sharper prompting skills, but also a system that spares models from having to learn, again and again, what you want and what works.

The days of having to remind your models to do something in a particular way are over. Let’s dive in.


LLMs as a Search Process

The first thing I want to make clear is that you must treat every interaction with your generative AI model as a search process.

LLMs are word predictors (or, dare I say, token predictors, where a token can be an entire word or just a fragment of one; I’ll use ‘word’ from now on to keep things simple): they predict the next word in a sequence based on the previous words in that sequence.

More specifically, the model predicts a probability distribution over the next word, which is to say: “Based on the text I’ve seen, how would I rank all the words I know as possible next words for that text?”
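To make that “ranking” concrete, here is a minimal Python sketch: a model emits a raw score (a logit) for every word it knows, and a softmax turns those scores into a probability distribution that can be sorted. The three-word vocabulary and the scores are made up for illustration; real models rank tens of thousands of tokens at every step.

```python
import math


def next_word_distribution(logits: dict[str, float]) -> list[tuple[str, float]]:
    """Turn raw scores (logits) into probabilities and rank the candidates."""
    # Subtract the largest logit before exponentiating for numerical stability.
    max_logit = max(logits.values())
    exps = {word: math.exp(score - max_logit) for word, score in logits.items()}
    total = sum(exps.values())
    probs = {word: e / total for word, e in exps.items()}
    # Highest-probability candidates first: this is the model's "ranking".
    return sorted(probs.items(), key=lambda item: item[1], reverse=True)


# Hypothetical scores a model might assign to candidates after the text "I like".
ranking = next_word_distribution({"vegetables": 2.0, "trains": 1.0, "quantum": -1.0})
for word, p in ranking:
    print(f"{word}: {p:.3f}")
```

The probabilities always sum to 1, so raising one candidate necessarily lowers the others; that zero-sum property is what makes each prediction a “choice.”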

You probably knew all this already, but I’m more interested in making sure we understand how that ranking takes place.

High frequency, low quality

Models learn by induction, going from the specific to the general.

That is, they learn how words follow each other by seeing those precise words in a particular sequence order multiple times (e.g., if “am” usually comes after “I”, the model is much more likely to predict “am” if it sees “I”).
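The counting intuition can be sketched with a toy bigram model in Python. Real LLMs learn far richer patterns with neural networks rather than raw pair counts, so treat this only as an illustration of “seen more often, predicted more often”; the four-sentence corpus and the helper names are invented for the example.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus: list[str]) -> dict[str, Counter]:
    """Count how often each word follows each other word."""
    follows: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            follows[prev][nxt] += 1
    return follows


def next_word_probs(follows: dict[str, Counter], prev: str) -> dict[str, float]:
    """Relative frequencies: the more often a pair was seen, the likelier it is."""
    counts = follows.get(prev.lower(), Counter())
    total = sum(counts.values())
    return {word: n / total for word, n in counts.items()} if total else {}


corpus = ["I am happy", "I am here", "I am tired", "I was late"]
model = train_bigrams(corpus)
print(next_word_probs(model, "I"))
```

Because “am” follows “I” in three of the four sentences, it ends up with three times the probability of “was”.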

Therefore, after training on Internet-scale data, the model becomes capable of inferring, with very high accuracy, what word comes next.

But in reality, the model has simply learned to minimize the expected prediction loss across all the text it saw on the Internet, which is to say: its responses approximate the average response on the Internet.
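For readers who like the formal version, the standard pretraining objective (not written out in the original) is the next-token cross-entropy loss, averaged over the training corpus D, where x = (x_1, ..., x_T) is a training sequence and p_θ is the model with parameters θ. Because the expectation weights each kind of text by how often it appears in D, the minimizer is pulled toward what is most common in the data, which is the “average response” intuition in formal dress.

```latex
\mathcal{L}(\theta) = -\,\mathbb{E}_{x \sim \mathcal{D}}\left[\sum_{t=1}^{T} \log p_\theta\left(x_t \mid x_{<t}\right)\right]
```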

This deserves some nuance: mid-training and later stages of training use very high-quality data, which nudges the model toward better responses. However, broadly speaking, the model is still a coarse representation of the average quality of the Internet’s data.

The point I’m trying to make is that, although models can offer great responses when you search the right way, vague prompting will yield an average response because the model was trained largely on average-quality data. In fact, I’ve talked about this in far more detail here.

Therefore, as I hinted, good prompting is essential to nudge models in the right direction. More importantly, I want you to see every prediction the model makes (which is heavily influenced by what you give it) as a choice.

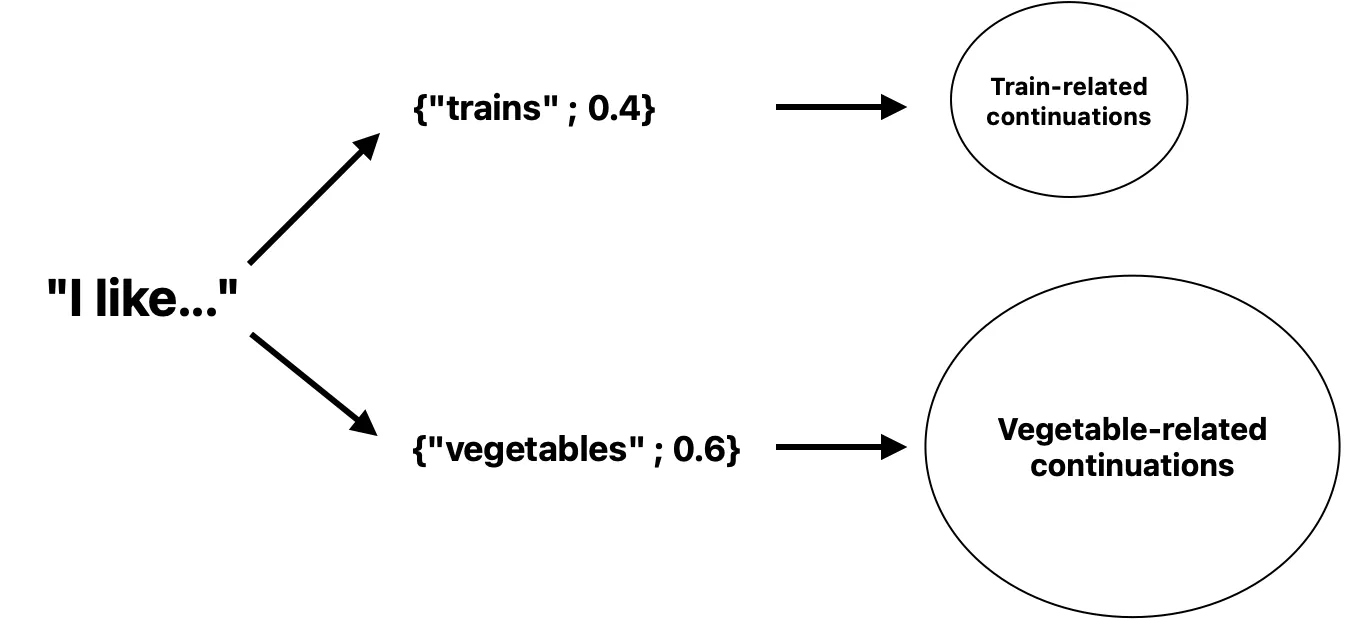

If you give the model “I like” and the model predicts “trains,” it’s going into the ‘world of trains,’ the area of its knowledge related to them. The same applies to ‘vegetables’, or to any other topic.

Therefore, prompt engineering, or context engineering, is about making sure the model’s search process goes in the right direction.

For the model, the choice always comes down to ‘probability mass’ (although there are ways we can change that): the model will choose the direction of higher probability, which, for a model that has learned by forming statistical correlations, is the direction backed by the most examples it saw during training… even if that doesn’t necessarily imply overall sequence quality (in fact, a very relevant criticism of LLMs is that they lack the metacognitive ability to ask themselves whether they are going in the right direction).
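The gap between “highest probability now” and “best overall sequence” can be sketched with a toy two-step model in Python. Greedy decoding (always taking the most probable next word) commits to “vegetables” first, yet a different first word leads to a more probable two-word sequence. The probabilities are invented, and here the “overall” score is just joint probability, which is itself not the same thing as quality.

```python
# A toy two-step "language model": P(first word), then P(second word | first word).
FIRST = {"vegetables": 0.6, "trains": 0.4}
SECOND = {
    "vegetables": {"are": 0.4, "taste": 0.3, "grow": 0.3},
    "trains": {"are": 0.9, "run": 0.1},
}


def greedy_sequence() -> tuple[list[str], float]:
    """Always take the locally most probable word, one step at a time."""
    first = max(FIRST, key=FIRST.get)
    second = max(SECOND[first], key=SECOND[first].get)
    return [first, second], FIRST[first] * SECOND[first][second]


def best_sequence() -> tuple[list[str], float]:
    """Score every full two-word sequence and keep the most probable one."""
    candidates = [
        ([w1, w2], p1 * p2)
        for w1, p1 in FIRST.items()
        for w2, p2 in SECOND[w1].items()
    ]
    return max(candidates, key=lambda c: c[1])


print(greedy_sequence())  # greedy commits to "vegetables" first
print(best_sequence())
```

The model never “looks back” to ask whether its first step was wise; each step only follows the local probability mass.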

In this Medium piece, which expands on a previous discussion in this newsletter, I explain newly proposed techniques that naturally steer models toward higher-quality directions by modifying the sampling mechanism.
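The specific techniques from that piece aren’t described here, so as a stand-in, this minimal Python sketch shows two standard, widely used sampling knobs: temperature and top-k. They illustrate the general idea of changing the sampling mechanism rather than the model itself, but they are not the newer techniques the piece discusses; the function name and the scores are hypothetical.

```python
import math
import random
from typing import Optional


def sample_next(
    logits: dict[str, float],
    temperature: float = 1.0,
    top_k: Optional[int] = None,
    rng: Optional[random.Random] = None,
) -> str:
    """Sample the next word after reshaping the model's distribution.

    temperature < 1 sharpens the distribution (closer to always picking the top word);
    temperature > 1 flattens it (more diverse, riskier picks).
    top_k keeps only the k highest-scoring candidates before sampling.
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    if rng is None:
        rng = random.Random()
    ranked = sorted(logits.items(), key=lambda kv: kv[1], reverse=True)
    if top_k is not None:
        ranked = ranked[: max(1, top_k)]
    # Temperature-scaled softmax weights (random.choices normalizes them for us).
    max_score = ranked[0][1]
    weights = [math.exp((score - max_score) / temperature) for _, score in ranked]
    words = [word for word, _ in ranked]
    return rng.choices(words, weights=weights, k=1)[0]


logits = {"vegetables": 2.0, "trains": 1.0, "quantum": 0.5}
rng = random.Random(0)  # seeded so the run is reproducible
print([sample_next(logits, temperature=0.7, top_k=2, rng=rng) for _ in range(5)])
```

With `top_k=1` this collapses to greedy decoding; higher temperatures give less common directions a better chance of being explored.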

Circling back to the example above, ‘vegetables’ is more likely to get chosen by the model because, during training, it saw more sequences with that particular choice of words than with ‘trains’.

However, and this is the crux of it all, higher probability mass doesn’t mean greater quality. Quite the contrary: the model gravitates toward areas it has seen more of, which means that, by default, it moves into areas that are “more common”.

And what do all the “common” data on the Internet have in common? It’s mostly crap.

So, what can we do? As a general mindset toward model interactions, you should always:

Provide as much context in your sequence as you can, while keeping quality top-of-mind (more on this in a second), so the model has more information to search in the right direction. This requires context management (you can’t simply dump all the context in the world; there’s a limit).

Express this context in a way that benefits the model.
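The “as much context as you can, but there’s a limit” rule can be sketched as a budgeting problem: rank candidate snippets by how relevant and high-quality they are, then pack the best ones until the token budget runs out. This is a hypothetical Python helper, not a feature of any tool; the priorities are your own judgment call, and the token estimate uses the rough ratio of about 0.75 words per token (200k tokens ≈ 150k words).

```python
from dataclasses import dataclass


@dataclass
class Snippet:
    text: str
    priority: int  # higher = more relevant/higher quality for this task (your judgment)


def estimate_tokens(text: str) -> int:
    # Rough heuristic: ~0.75 words per token (200k tokens ≈ 150k words).
    return max(1, round(len(text.split()) / 0.75))


def pack_context(snippets: list[Snippet], budget_tokens: int) -> list[Snippet]:
    """Greedily keep the highest-priority snippets that fit within the token budget."""
    chosen: list[Snippet] = []
    used = 0
    for snippet in sorted(snippets, key=lambda s: s.priority, reverse=True):
        cost = estimate_tokens(snippet.text)
        if used + cost <= budget_tokens:
            chosen.append(snippet)
            used += cost
    return chosen


snippets = [
    Snippet("Goal: write a 500-word product announcement for developers.", priority=3),
    Snippet("Audience: backend engineers who already use our API.", priority=2),
    Snippet("meeting notes " * 50, priority=0),  # long and barely relevant
]
for s in pack_context(snippets, budget_tokens=30):
    print(s.priority, s.text)
```

The long, low-value snippet is the one that gets dropped: quality first, volume second.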

All tips below fall into one of these two categories. But what makes a prompt good for a model?

How You Say It Matters

Just as your choice of words influences how people respond to you, it influences how models respond. And the first step is that, well, sometimes it’s worth just trying again.

Iteration is still king

For all the talk about clever prompting, the thing that will reliably move the needle is still boring iteration. You try something, it half‑works, you scrap it, you try again.

Most of the time, the difference between a casual user and a power user isn’t that the power user gets it right on the first attempt; it’s that they’re deliberately using each attempt as a micro‑experiment.

A useful way to think about it is: every prompt you send is a hypothesis about how the model’s internal search will unfold. If the answer misses the mark, you don’t just “try again with more detail,” you ask what failed.

I always say that if someone read some of the “stupid” questions I ask models privately, they wouldn’t believe I make a living giving my opinion on hard topics. But that’s the point: sometimes you need to look stupid and ask the most basic questions to truly capture the essence of something.

Niels Bohr, one of the most brilliant scientists of the twentieth century and a Nobel laureate in Physics, was “famous” for asking very stupid questions… that turned out to be anything but stupid.

The lesson? We should all have a ‘Niels Bohr’ attitude to learning, and it turns out that, thanks to AI, you don’t have to embarrass yourself publicly in the process; you can reserve this behavior for private AI conversations.

In some cases, it may appear as if the model is the stupid one. Did it misunderstand the goal? Ignore a constraint? Get lost in old chat history?

But more often than not, the problem is that your prompt isn’t good enough. That’s the beautiful nature of retries: the diagnosis from the previous try informs the next prompt. Over a handful of rounds, you’re likely to get a great answer from the model.

In fact, I’m a hardcore believer that most users are bad at prompting because they are just lazy or not dedicated enough to the task.

Furthermore, this introduces the first point on context management: context rot is the silent enemy of this process.

Long chats rife with half‑baked instructions, wrong assumptions from the model, and the like don’t just make things harder for the model at every turn; they are exactly how context rots.

Moreover, there are technical reasons why models struggle with long context. For instance, Gemini clearly separates context into two zones: fewer than 200k tokens (~150k words) and more (up to a million tokens).

This suggests they may be using some form of linear attention variant for longer sequences. ‘Attention variant’ here is a euphemism for spending less attention compute, meaning the model effectively pays less attention to each word. As a result, performance is very likely to suffer.
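Some quick arithmetic shows why providers reach for cheaper attention at long lengths. Standard attention scores every token against every other token, so its cost grows with the square of the context length. The sketch below compares that with sliding-window attention, a different cheap variant swapped in here purely because it is easy to count (linear attention avoids building the score matrix in another way); the 4,096-token window is an arbitrary assumption, and nothing here reflects what Gemini actually uses.

```python
def full_attention_scores(n: int) -> int:
    """Query-key scores per head and layer when every token attends to every token.

    (Causal masking roughly halves this; we ignore that to keep the arithmetic simple.)
    """
    return n * n


def windowed_attention_scores(n: int, window: int) -> int:
    """Upper bound on scores if each token only attends to `window` nearby tokens."""
    return n * min(window, n)


for n in (200_000, 1_000_000):
    full = full_attention_scores(n)
    local = windowed_attention_scores(n, window=4_096)
    print(f"{n:>9,} tokens: full={full:.2e}  windowed={local:.2e}  ~{full / local:.0f}x cheaper")
```

Going from 200k to 1M tokens multiplies full attention’s cost by 25, while the windowed variant grows only 5x; the savings come precisely from each token looking at fewer others.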

That’s where “plan → execute → compress” becomes a convenient habit: scrap the conversation, write a more or less detailed summary, and start a new one with it.

Model APIs also let you include the ID of a previous response in a new request, so context can be carried over with APIs too, but whether you do so is up to you.

The entire point is that you’re continuously squeezing messy interaction history into a small, fresh context that the model can reason over cleanly. It feels a bit like refactoring a messy codebase as you go, and it keeps your iterations fast instead of degenerating into one giant, haunted chat.
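The “compress” step can be sketched in a few lines of Python. The message format, the word-count threshold, and the `naive_summarize` placeholder are all assumptions for illustration; in practice you would ask the model itself to write the summary, and this is not the compaction system built later in the post.

```python
from typing import Callable

Message = dict[str, str]  # e.g. {"role": "user", "content": "..."}


def word_count(messages: list[Message]) -> int:
    return sum(len(m["content"].split()) for m in messages)


def compact(
    history: list[Message],
    summarize: Callable[[list[Message]], str],
    max_words: int = 2_000,
    keep_last: int = 2,
) -> list[Message]:
    """Plan -> execute -> compress: once the chat grows too long, fold older turns into a summary."""
    if word_count(history) <= max_words or len(history) <= keep_last:
        return history  # still small enough: leave it alone
    split = len(history) - keep_last
    older, recent = history[:split], history[split:]
    summary_msg = {"role": "user", "content": "Summary of our work so far:\n" + summarize(older)}
    # The fresh context: one compact summary plus the most recent turns, verbatim.
    return [summary_msg] + recent


def naive_summarize(messages: list[Message]) -> str:
    # Placeholder: in practice you would ask the model itself to write this summary.
    return "\n".join(f"- {m['role']}: {m['content'][:60]}" for m in messages)


history = [
    {"role": "user", "content": "Draft a launch email. " * 300},
    {"role": "assistant", "content": "Here is a first draft... " * 300},
    {"role": "user", "content": "Shorter, and mention pricing."},
    {"role": "assistant", "content": "Revised draft with pricing."},
]
fresh = compact(history, naive_summarize, max_words=500)
print(len(fresh), "messages;", fresh[0]["content"].splitlines()[0])
```

Keeping the last few turns verbatim preserves the immediate thread, while everything older is squeezed into a single summary message.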

In other cases, the model may have gone so far in a given search direction that the conversation is no longer useful at all, and you’ll have to scrap it entirely (i.e., don’t even try to nudge the model back; it’s like trying to make a U-turn at full speed in a Ferrari). Sometimes, the mistake is so apparent that you might consider ditching the conversation after a single turn.

Later, we’ll learn how to build our own compaction system, which compacts context for you (instead of you having to do it manually), and how to make models “aware” of their own limitations, autonomously identify patterns in what you like, and progressively adapt to your needs.

At this point, you may be tempted to believe ‘putting effort into your prompt’ is just dumping as much context as possible.

But funnily enough, getting too detailed with your prompts is actually one of the worst things you can do, no matter what your typical LinkedIn influencer might tell you.

Striking the right balance of context

As I was saying, just dumping all the context you have to nudge the model in the right direction won’t work. This was the old view of “prompt engineering”: you mostly controlled a short system prompt and a user message, so the simplest solution was to dump everything into them.

If that was your approach, you’re living in the past. Let’s fix that, and let’s make our AIs “smart” and autonomous.
