For business inquiries, reach out to me at [email protected]

THEWHITEBOX

TL;DR

Another week, another extensive list of exciting news, models, and products. This week, we take a deep dive into agents based on news from OpenAI and TML. We also look at Grok 4’s excellent game development capabilities, as well as an interesting study showing how humans are starting to sound like their AIs.

Finally, we examine a fascinating new study that challenges the way we build our AI models from the ground up, offering compelling results to support its claims.

Enjoy!

PREMIUM CONTENT

Things You Have Missed…

On Tuesday, we examined a shocking research result on AI productivity, a roundup of finance news (including the weirdest M&A drama), cool new products, and the realization that China has made significant progress.

AGENTS

OpenAI Releases ChatGPT Agent

I was about to send this newsletter earlier today when Sam Altman suddenly announced the release of OpenAI’s first agent product: a new option in the ChatGPT app, called ‘Agent’, that combines deep research and Operator (executing actions on the Internet) into a single system that, according to its creator, is very powerful at performing a variety of long-horizon tasks.

The tool runs in a virtualized environment independent of your computer, which spins up on the fly and executes what you asked for.

TheWhiteBox’s takeaway:

This is a very interesting release that will likely be quite successful, considering OpenAI’s extensive distribution. The product itself is not that revolutionary, though; products like Manus or Genspark have already introduced this kind of agent to large audiences.

Personally, I’m noticing a trend. While coding IDEs and agentic coders have been all the rage as the prime Generative AI products for quite some time, it now seems it’s the turn of “productivity-everything” agents.

Genspark, Context, Shortcut… these are only a few of the AI products along these lines (automating Excel, Word, PowerPoint, and other white-collar apps) we have been talking about over the last few weeks, and it seems that the big AI Labs have noticed the trend too and will release similar products.

If they deliver on the promise, we could soon see knowledge work being entirely transformed by AI agents, a promise made to us by AI incumbents a while back.

Moreover, it gives credence to my view about what AI startups should be building; despite the strong revenue products like Genspark are seeing, there’s absolutely zero chance they can compete with the big guys and products like OpenAI’s new Agent.

As they all build essentially the same product, capital and distribution matter most, and those are the two things companies like OpenAI or Google have that you most likely don’t.

Being more specific, I believe any AI startup building logic (enhancing the ‘smartness’ of AI models as a business value proposition) will have a very short run that may appear highly successful at first, but will eventually be cannibalized by the model-layer companies.

A perfect example is Claude Code and Cursor. Both rely heavily on Anthropic models, but owning the model gives Anthropic a significant edge that translates into a better overall experience. I believe the pattern will repeat for all the startups built on top of these models: acquisition or death. Risky business.

So, what should AI startups build? Easy, agent tools.

If you can’t compete with these companies, don’t; instead, build useful tools that their agents can leverage. That is, don’t get in their way; rather, make a product that rides their progress. More intelligent agents will make smarter use of your tool, so every model improvement literally makes your business better.

Sadly, most AI founders don’t get this, because this approach probably won’t make them billionaires and isn’t that flashy. They will get it soon, though, once it all comes crashing down thanks to products like ChatGPT’s first agent feature.

FRONTIER MODELS

Humans Are Imitating AIs

According to a recent study, humans are starting to talk like ChatGPT.

Researchers at the Max Planck Institute for Human Development have identified a reversal in the traditional AI-human linguistic influence. Instead of humans merely training AI, people are now subconsciously adopting the AI’s communication style.

By analyzing millions of human writings (emails, papers) that were “polished” by ChatGPT, the team compiled a set of favored “GPT words”, terms like “delve,” “meticulous,” “underscore,” “comprehend,” “bolster,” “swift,” “inquiry,” and “groundbreaking.”

They then tracked these words across over a million hours of YouTube and podcast audio, comparing usage before and after the release of ChatGPT.

And guess what: these GPT-associated words have significantly increased in human speech, indicating a cultural feedback loop where AI language patterns are shaping how we talk.

TheWhiteBox’s takeaway:

An interesting yet predictable result; I’m surprised no one had looked into this earlier. If anything, this study proves that humans have become highly dependent on these AIs.

So what will happen (if it isn’t already happening) when these models start rewriting history? A lie told 1,000 times becomes the truth.

I’ve discussed this in the past; what happens when these models become the gateway to knowledge? When people start using them as a tool to know what’s right or wrong?

The issue is that laypeople see these models as omnipotent beings in possession of the truth. For instance, if you use X, you’ll see thousands of people asking ‘Grok is this true?’ to check what another human said.

But that’s a foolish thing to do, because these models aren’t truth-seeking; they only return the most statistically likely response, which can be true or false depending on the biases of the model’s trainers.

They don’t understand causality, and perhaps they don’t even comprehend what they say (just as a standard database can return things it doesn’t comprehend).

That a misaligned model thinks that the next word to “Do pigs fly?” is “Yes” does not mean that the model understands what it’s saying and, importantly, doesn’t prove the model thinks it’s true (or false).

We both know AI labs are weaponizing this; most people just haven’t realized it yet.

AI PRODUCT

ChatGPT’s Checkout Feature

According to Reuters, ChatGPT may also be nearing the release of a checkout feature for products purchased through its search engine, a move aimed at generating additional revenue from the entire process.

TheWhiteBox’s takeaway:

The move makes a lot of sense, but I’m curious to see how many people are actually buying stuff using ChatGPT; I’m inclined to say very few.

According to some studies, Google receives more than 34 times the traffic of all chatbots combined, and Google’s AI Overviews already offer a highly streamlined experience: not only does Google have a strong AI model, but it also has the best search-focused one.

FRONTIER MODELS

Gemini 3.0 Pro Incoming?

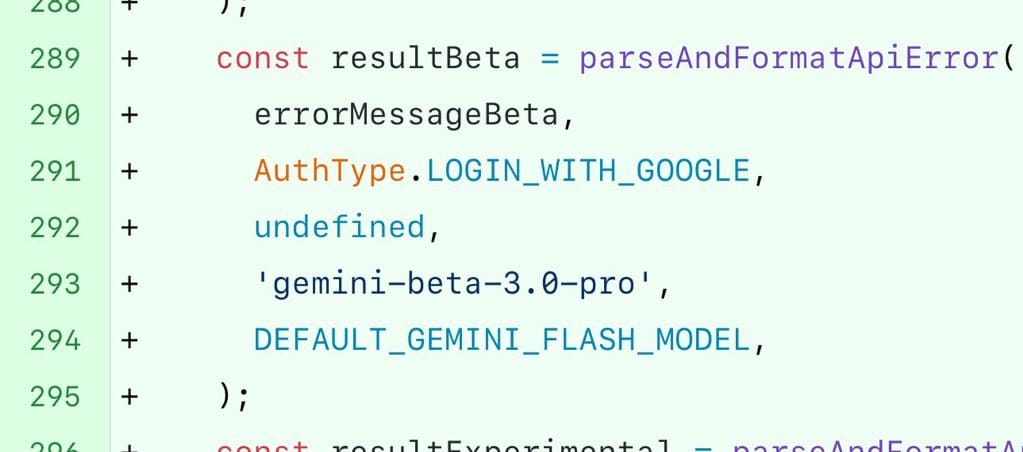

Just a rumor, but the latest commit to Gemini CLI, Google’s agentic coding tool (you command, the AI executes) resembling Anthropic’s Claude Code or OpenAI’s Codex CLI, includes a reference to Gemini 3.0 Pro, which could be Google’s next big model release.

TheWhiteBox’s takeaway:

As I explained last week, I’m very excited about what’s to come over the next six months in this space.

I think we’ve hit a crucial milestone: the realization that RL (trial-and-error training, the basis of the most powerful models today) works, and that agentic training (such as the one exemplified by Kimi K2) is a great prior for creating real AI agents. I can’t help but be excited.

Today’s trend of the week kind of challenges this entire vision, but more on that later.

If we truly start delivering long-horizon agents (agents that can work on extremely long tasks), we could begin to see the disruption that we were promised AI would bring.

VIDEOGAMES

Grok 4 is Pretty Good at Creating Videogames

Grok 4 appears to be heavily influenced by its creator’s obsession with gaming (Elon is a diehard gamer) and has developed considerable skill in creating one-shot playable games.

This link includes several examples, from a FlappyBird copy to 3D assets for other games.

TheWhiteBox’s takeaway:

Solid stuff, but the key takeaway here is understanding what this implies. Besides software engineering, few industries are more exposed to AI than video games. Soon, you will be able to create your own video games on the fly and play with your friends.

I'm no gamer (too busy working for you), but it sounds like fun.

The key reason I’m so bullish on AI video games is that the incentives are aligned. What I mean by this is that AI videogames could emerge not from people actively trying to create them, but as a byproduct of AI Labs trying to build world models.

Although I share the sentiment of Yann LeCun, Meta’s Chief AI Scientist, that this is the wrong direction, many AI Labs think otherwise.

They are using next-video-frame prediction as a way to build world models: AI models that can observe the world and predict what will happen next, much like our brains do (our brains are biological inference models).

Therefore, as a byproduct of creating these models, we could end up with AI models possessing impressive capabilities to generate entire playable worlds in one shot, meaning the videogame industry could be disrupted even by accident.

AI LABS

TML, Product Incoming?

Mira Murati, ex-OpenAI CTO and now CEO and co-founder of Thinking Machines Lab (TML), has shared more information about the project after announcing a fresh $2 billion raise from investors such as a16z and NVIDIA, at a $12 billion valuation.

She stated their intention to release their first product in the coming months.

For reference, TML is a new AI lab that, despite not having any real products or revenues, has amassed huge capital due to its superstar team, formed by top ex-OpenAI and Anthropic researchers.

TheWhiteBox’s takeaway:

The tweet contains several valuable insights that warrant discussion. First, she insists on multimodal AI, meaning that the product will be capable of handling multiple input and output modalities, such as text and video.

This may seem like table stakes these days, but the reality is that most of the ‘multimodal’ products we have today aren’t truly multimodal, but rather single-modality systems stitched together.

While this has the appearance of multimodality, it’s only skin-deep; the underlying system isn’t really thinking in a multimodal fashion.

A perfect example of this limitation is the Mirage system we discussed a few days ago, which addressed the fact that, despite modern systems’ ability to process images and text, they still primarily rely on text to ‘think’. In other words, everything is transformed into text for the model to reason about.

True multimodality implies visualizing mental images or video, in addition to text, or actively imagining the sound of your mother’s voice. Current AI models are not doing these things, so I’m excited about what TML offers here.

She also openly discusses their strong open-source approach, which is a breath of fresh air, seeing how US Labs appear more closed by the day. Having a new open-source champion in the US could be absolutely huge.

The last point worth mentioning is that, as we previously disclosed in this newsletter, TML will be heavily focused on customization, specifically developing custom RL models for clients.

Without having any additional information, this could be akin to a Palantir play in AI, a company that actively helps clients develop custom models for their companies using the TML platform.

As you may have guessed from my recent posts, this isn’t just exciting, but actually mandatory; enterprise AI will never work unless it’s customized.

CYBER

Google’s Progress on AI Cybersecurity

Using AI, Google has identified an ‘imminent’ critical flaw in SQLite and prevented its potential exploitation, highlighting another example of how AI models are already being leveraged to mitigate cybersecurity threats.

Google’s AI agent Big Sleep (from DeepMind & Project Zero) discovered CVE‑2025‑6965, a critical memory corruption vulnerability in SQLite (CVSS 7.2), before attackers actively exploited it. The flaw allows malicious SQL injection to trigger an integer overflow, leading to out-of-bounds access and potential arbitrary code execution.
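The bug class is easy to see in miniature. The sketch below is a generic, hypothetical illustration of how an integer overflow can defeat a bounds check and open the door to out-of-bounds access; it is not SQLite’s code and not the exact mechanics of CVE‑2025‑6965. The function names and numbers are invented, and since Python integers never overflow, `to_int32` emulates C’s 32-bit wraparound.

```python
def to_int32(value: int) -> int:
    """Emulate C's 32-bit signed wraparound (Python ints never overflow)."""
    value &= 0xFFFFFFFF
    return value - 0x1_0000_0000 if value >= 0x8000_0000 else value


def naive_fits(count: int, elem_size: int, capacity: int) -> bool:
    """Buggy check (hypothetical): multiplies first, in 32-bit arithmetic, then compares."""
    size = to_int32(count * elem_size)
    return 0 <= size <= capacity


def safe_fits(count: int, elem_size: int, capacity: int) -> bool:
    """Safer check: divides instead of multiplying, so nothing can wrap around."""
    return 0 <= count <= capacity // elem_size


count = 0x4000_0001                  # attacker-controlled element count (~1.07 billion)
print(to_int32(count * 4))           # 4: a ~4 GB size wrapped around to 4 bytes
print(naive_fits(count, 4, 64))      # True: check passes, later writes go out of bounds
print(safe_fits(count, 4, 64))       # False
```

The fix pattern (checking against `capacity // elem_size` before multiplying) is one common defense; real codebases also use compiler overflow builtins or wider integer types.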

TheWhiteBox’s takeaway:

In this newsletter, we are paying close attention to the AI-led improvements in cybersecurity.

A few weeks ago, we also covered how an AI bot from XBOW reached the top of HackerOne’s US leaderboard, showing how AI models are not just toy hackers but are already helping mitigate threats.

Of course, there’s the other side of the coin: can they be used nefariously?

Not only can they, they are. Scammers are enhancing their phishing capabilities by utilizing LLMs to craft emails and create frontends that mimic the original website, luring users into entering their passwords and stealing sensitive information.

In the AI era, all your password-protected accounts should have MFA (multi-factor authentication) enabled, meaning that at least one other device linked to you should be required to validate actions. Think of it like your bank asking for the one-time code it sent to your phone to validate a transaction you’re making on your laptop.
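Many MFA setups use an authenticator app instead of SMS. As a hedged sketch, here is how a time-based one-time password (TOTP, RFC 6238, built on RFC 4226’s HOTP) can be computed with only Python’s standard library, assuming the common defaults of HMAC-SHA1, a 30-second window, and 6 digits. The function names are our own; the idea is that your phone and the server share a secret and independently derive the same short-lived code.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) with HMAC-SHA1."""
    msg = struct.pack(">Q", counter)                      # 8-byte big-endian counter
    digest = hmac.new(secret, msg, hashlib.sha1).digest()
    offset = digest[-1] & 0x0F                            # dynamic truncation offset
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over the current time window."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


def verify(secret: bytes, submitted: str, at: Optional[float] = None) -> bool:
    """Server-side check; compare_digest avoids leaking timing information."""
    return hmac.compare_digest(totp(secret, at, digits=len(submitted)), submitted)


# RFC 6238 test secret; at t = 59 s the 8-digit SHA-1 code is 94287082.
SECRET = b"12345678901234567890"
print(totp(SECRET, at=59, digits=8))  # 94287082
```

In practice, servers usually also accept codes from adjacent time windows to tolerate clock drift between the phone and the server.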

Another great potential use of these tools is reviewing vibe-coded apps created by people unfamiliar with good cybersecurity practices for deploying digital products. If anyone is benefiting from the vibe-coding era, it’s cybersecurity experts.

PRIVATE MARKETS

Anthropic Nears $100 Billion Valuation

According to The Information, Anthropic could be nearing a new funding round that would value the company at $100 billion, a higher valuation than companies like Intel.

The discussions come after a super successful first part of the year for the company, which has quadrupled its revenue run rate to $4 billion (annualized revenue, i.e., last month’s revenue multiplied by twelve).

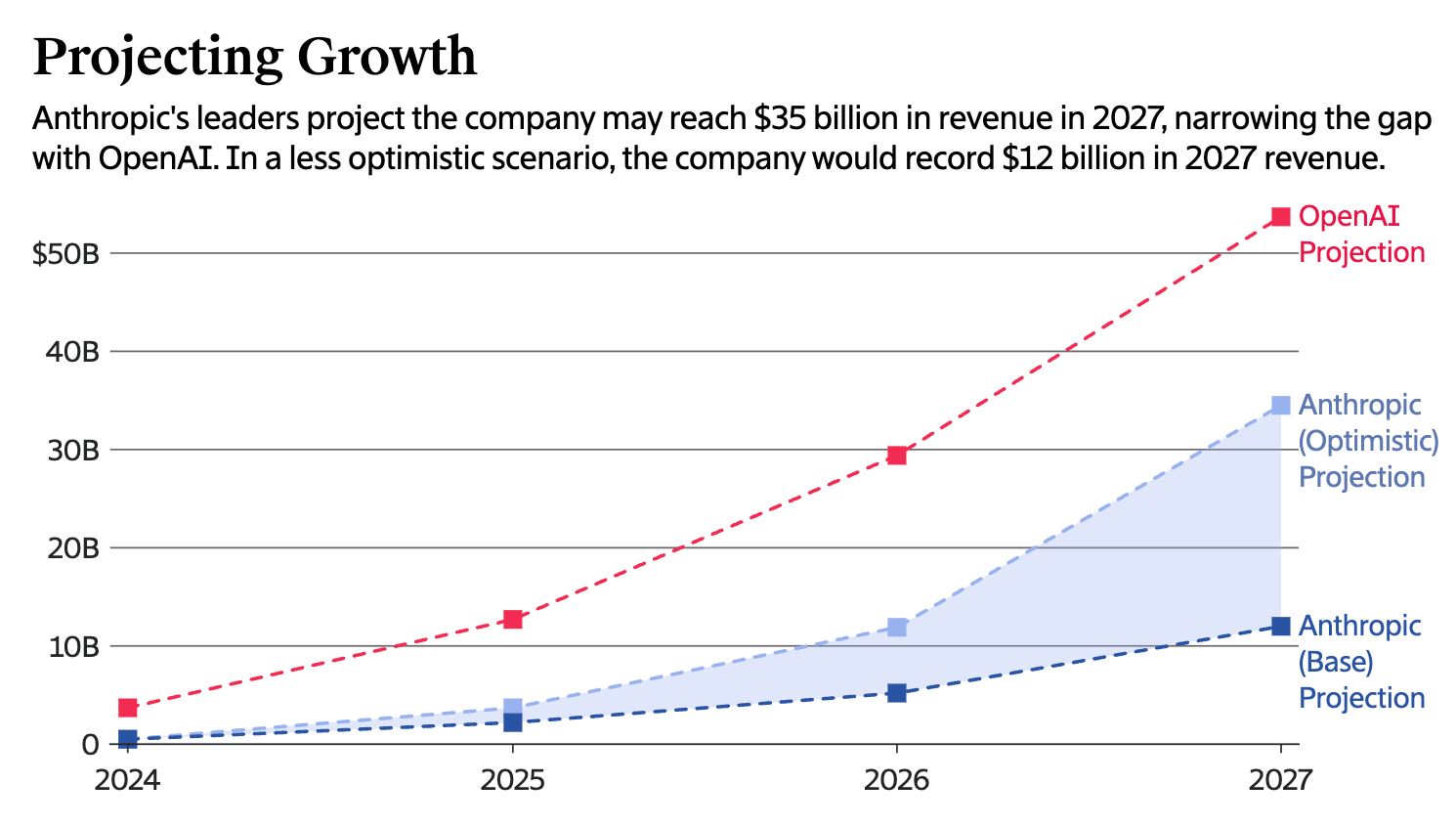

If the funding goes through, Anthropic would be trading at roughly 24 times projected revenues, a very healthy multiple for an AI startup these days. As for future revenue, Anthropic basically projects the same revenues as OpenAI with a one-year lag, meaning it plans to hit OpenAI’s revenue projections a year later.
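The two metrics in this story are simple arithmetic. A minimal sketch using the article’s figures ($100 billion valuation, $4 billion run rate) and hypothetical helper names: a $4 billion run rate means roughly $333 million in last month’s revenue, the valuation is exactly 25 times the current run rate, and a multiple of about 24 on projected revenue would imply projected revenue of roughly $4.2 billion.

```python
def annual_run_rate(last_month_revenue: float) -> float:
    """Annualized run rate: last month's revenue extrapolated over twelve months."""
    return last_month_revenue * 12


def revenue_multiple(valuation: float, revenue: float) -> float:
    """How many times (annual) revenue the valuation represents."""
    return valuation / revenue


valuation = 100e9
run_rate = 4e9
monthly = run_rate / 12                             # revenue needed in the last month
print(f"${monthly / 1e6:,.0f}M per month")          # $333M per month
print(revenue_multiple(valuation, run_rate))        # 25.0 on the current run rate
print(f"${valuation / 24 / 1e9:.2f}B")              # revenue implied by a 24x multiple: $4.17B
```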

TheWhiteBox’s takeaway:

While I’m a professional Anthropic hater (no other lab gives me the ‘villain vibe’ they do), their bet on going full-on coding and enterprise is paying off.

Coding CLI products, such as Claude Code, are a magical experience. I find myself using more hands-on coding tools like Cursor less and less, in favor of agentic coding tools like Claude Code, where the human’s role is more about clearly expressing what you need, and the AI just does it.

You lose control over what’s going on under the hood, but you won’t care if the model delivers, and there’s something about these tools that makes them work wonderfully well.

The primary concern regarding Anthropic is their non-existent consumer-end penetration (which seems to be a strategic decision more than anything) and the fact that, with ChatGPT having won the brand race, the remaining market must be fought over with none other than Google and xAI, which are really strong competitors and probably better-capitalized ones.

Personally, if I had to choose between the three, I’d rank Anthropic as the least likely winner if this were a winner-takes-all situation (it most likely isn’t). But leaving my skepticism toward them aside, it’s hard to argue with their recent revenue growth.

TREND OF THE WEEK

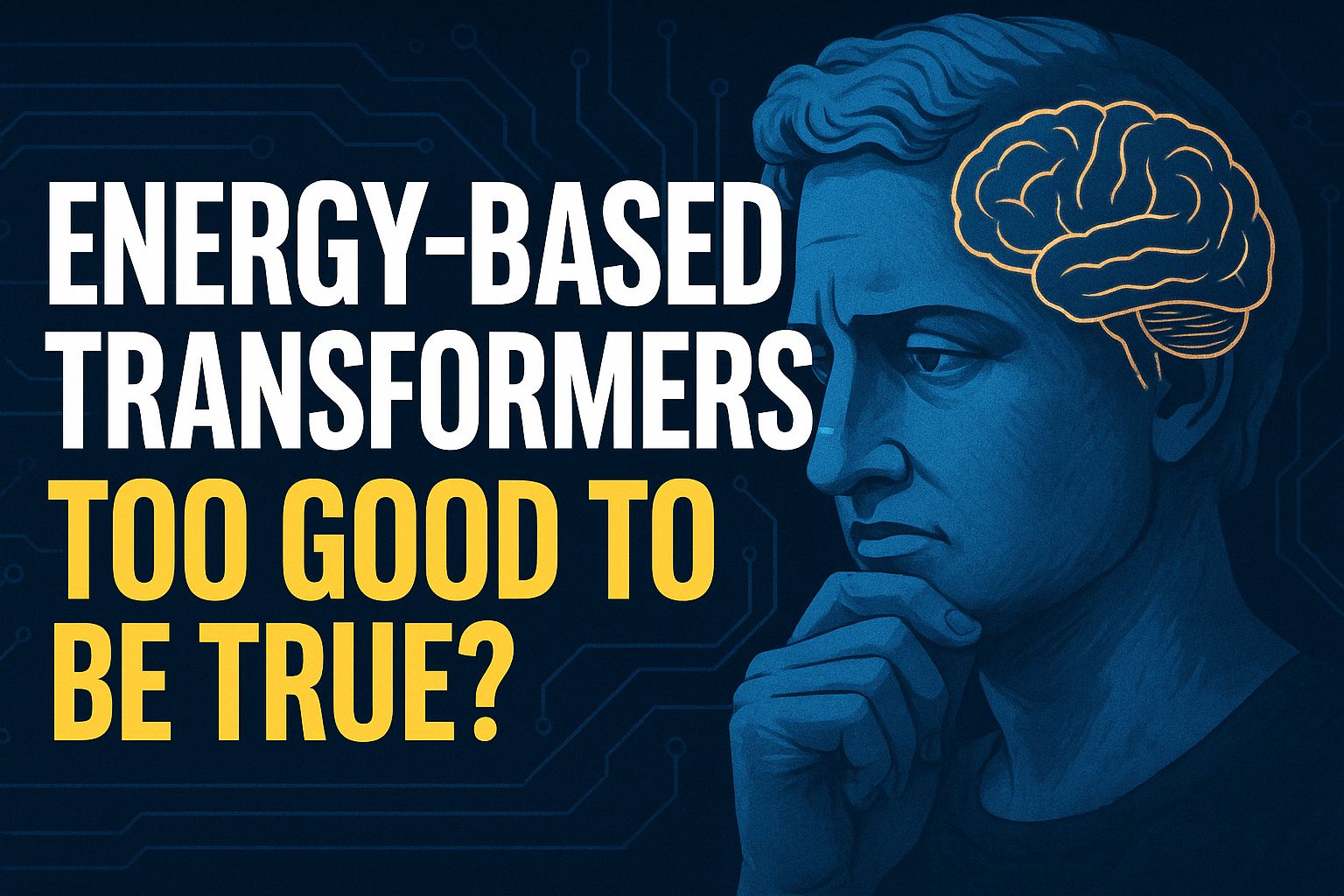

Energy-Based Transformers, Too Good to be True?

In the AI industry, few ideas draw as much cult-like attention as energy-based models. Considered a sort of ‘holy grail’ by some, they have been seen by many as the definitive AI model architecture, but one that felt unattainable due to practical constraints.

But a joint effort between Harvard, Amazon, Stanford, and others may have just changed that.

And why is this important? Well, because it challenges the current paradigm, arguing that while we might not necessarily be doing it wrong, we are certainly doing it suboptimally.

Join me in a fascinating journey to understand a new potential AI model paradigm with deep roots in human psychology and the theory of the thinking mind.

AI and Human Psychology

If you’re a regular of this newsletter, you already know that AI is heavily influenced by the late Daniel Kahneman’s ‘Thinking, Fast and Slow’ theory of the human mind.

Thinking Fast and Slow

Our brain has two thinking modes:

System 1: Fast, unconscious, intuitive. It’s the system you use to answer whenever someone asks you your name; you aren’t reflecting on it, you instinctively answer.

System 2: When faced with a problem you don’t know the answer to instinctively, you enter a conscious, deliberate, and slow thinking mode that allows you to allocate a higher thought effort to the task. If you’re asked to solve world hunger, you’re probably going to use this thinking mode.

And what does this have to do with AI? Simple: top AI Labs obsessively follow this precise thinking framework when building AI models.

Going From Fast to Slow Today

In mainstream AI, we now have two primary model types: non-reasoning and reasoning models.

The former, traditionally known as Large Language Models (LLMs), respond immediately to any task given, making them great at citing facts and other epistemic tasks.

Reasoning models have emerged as an evolution of LLMs that mimic System 2 thinking by allocating a higher degree of compute per task. They think longer on a task by generating a chain of thought that breaks the problem down into steps, leading to a higher chance of a correct answer.

But here we must touch upon several issues with our current preferred method:

Requires extensive post-training. To transform an LLM into a reasoning model, we require exorbitant amounts of compute, perfectly illustrated by Grok 4, which has comfortably surpassed the 10^26 FLOPs compute budget mark; that is, at least one hundred trillion trillion operations were required to train it.

Extremely data hungry. These models require a significant amount of time to converge. In layman’s terms, they need a lot of data to learn. A lot. All AI Labs have nervously pointed out that we are running out of data to train models on, even though they consume several orders of magnitude more data than any human in history.

We require external verifiers. The post-training regime, dominated by Reinforcement Learning (rewarding good behaviors and punishing bad ones), requires external verification; the model has no internal mechanism to verify its own responses. This not only constrains us to domains that are easily verifiable (such as maths and coding), but can also be very expensive in other domains where more sophisticated models are used to critique the responses of the generator model.

Can’t allocate compute dynamically per prediction. Current reasoning models commit more thought to a task by generating larger chains of thought, but the compute required to make any single prediction (in the case of ChatGPT, predicting a word) is more or less fixed, only marginally increasing with sequence length (assuming we maintain a KV Cache, which we always do).
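To see why per-prediction compute is roughly fixed, here is a back-of-the-envelope sketch using the widely cited approximation from OpenAI’s scaling-law work (Kaplan et al., 2020): a forward pass costs about 2N FLOPs per token for N parameters, plus about 2 · n_layers · n_ctx · d_attn for attention over the cached context. The model size is hypothetical, and we assume d_attn equals d_model.

```python
def forward_flops_per_token(n_params: float, n_layers: int, d_model: int, n_ctx: int) -> float:
    """Approximate forward-pass FLOPs to predict ONE token with a KV cache.

    ~2 FLOPs per parameter (a multiply and an add per weight), plus attention over
    the n_ctx cached positions in every layer (assuming d_attn == d_model).
    Note: nothing here depends on how hard the token is to predict.
    """
    return 2 * n_params + 2 * n_layers * n_ctx * d_model


# Hypothetical 7B-parameter model: 32 layers, d_model = 4096.
short_ctx = forward_flops_per_token(7e9, 32, 4096, 1_000)
long_ctx = forward_flops_per_token(7e9, 32, 4096, 8_000)
print(f"{long_ctx / short_ctx:.2f}x")  # 1.13x: 8x more context, ~13% more compute per token
```

The attention term does grow linearly with context, so at very long contexts (hundreds of thousands of tokens) it stops being marginal; the point for this argument is that the cost never adapts to the difficulty of the prediction.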

Thus, several differences with humans arise.

For starters, humans don’t require external verifiers; we verify our responses even before we make them, without a genie in a bottle guiding every decision we make. We are our own genies.

We are highly sample-efficient. As we can self-verify, we can learn in an unsupervised way. In layman’s terms, we learn from data that doesn’t have a correct/wrong tag, and instead learn to model how uncertain we are about it internally. This allows humans to learn with a couple of examples, while our AI models may require hundreds or even thousands.

Humans dynamically allocate compute based on the complexity of the task. Every single prediction our brain makes, every thought we think, will require more or less thought effort based on the perceived complexity (measured uncertainty of our predictions).

Thus, is there a way to make AI behave ‘more human’? And the answer is, well, yes.

Enabling System 2 by Default

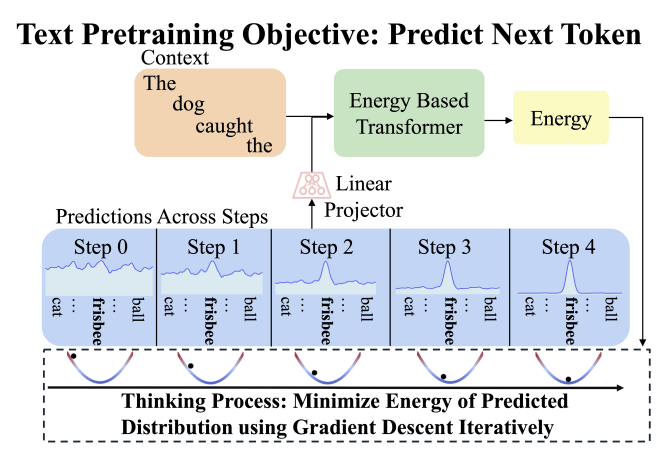

In short, energy-based transformers (EBTs), today’s protagonists, emerge as a way to instill this System 2 thinking mode into AI models by default without all the extra paraphernalia.

Learning a Verifier

The key principle that guides the intuition of EBTs is that verifying is easier than generating. In other words, seeing if a specific maze path solution is correct is faster and easier than finding it.
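The maze intuition can be made concrete. In this minimal sketch (our own illustration, not from the paper), checking a proposed path is a single pass over its cells, while finding one requires a search (here, standard breadth-first search) that may explore much of the maze. The maze and function names are hypothetical.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]

# Hypothetical maze: '#' is a wall, '.' is open floor.
MAZE = [
    "..#.",
    ".##.",
    "....",
]


def is_open(grid: List[str], cell: Cell) -> bool:
    r, c = cell
    return 0 <= r < len(grid) and 0 <= c < len(grid[0]) and grid[r][c] != "#"


def verify_path(grid: List[str], path: List[Cell], start: Cell, goal: Cell) -> bool:
    """Verification: a single pass over the proposed path, O(len(path))."""
    if not path or path[0] != start or path[-1] != goal:
        return False
    if not all(is_open(grid, cell) for cell in path):
        return False
    # Every move must be exactly one step up, down, left, or right.
    return all(abs(r1 - r2) + abs(c1 - c2) == 1 for (r1, c1), (r2, c2) in zip(path, path[1:]))


def find_path(grid: List[str], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Generation: breadth-first search, which may have to explore the whole maze."""
    parents: Dict[Cell, Optional[Cell]] = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            node: Optional[Cell] = cell
            while node is not None:          # walk back through parents to the start
                path.append(node)
                node = parents[node]
            return path[::-1]
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if is_open(grid, nxt) and nxt not in parents:
                parents[nxt] = cell
                queue.append(nxt)
    return None


path = find_path(MAZE, (0, 0), (0, 3))
print(verify_path(MAZE, path, (0, 0), (0, 3)))                               # True
print(verify_path(MAZE, [(0, 0), (0, 1), (0, 2), (0, 3)], (0, 0), (0, 3)))  # False: (0, 2) is a wall
```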

In a way, our brain behaves similarly; we continuously sample possible solutions that our own brain verifies as likely or not, helping us traverse the ‘space of possible answers.’

EBTs do the same, but how?

The key is to understand what energy is in this case. Energy is a measure of compatibility between an input and a response.

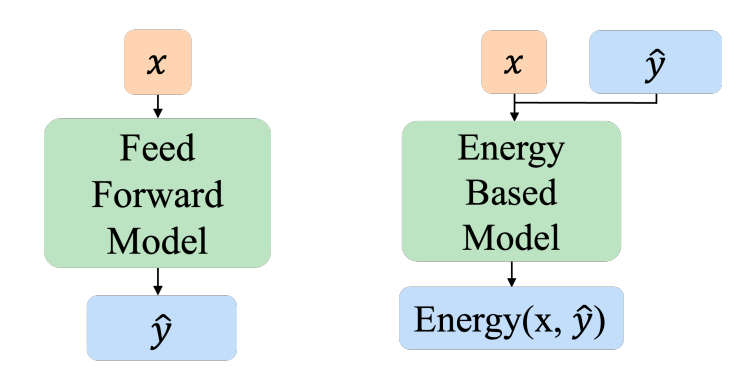

Unlike autoregressive transformers like ChatGPT, which take an input and immediately predict the response (the next word), an energy-based model samples a possible solution, scores (verifies) it internally by assigning it an energy value (the lower the energy, the more likely the continuation), and refines it on the fly, generating new proposals until it settles on one.

ChatGPT (left) will immediately propose a continuation. An EBT proposes one too, but actively evaluates and refines it until it’s happy (low energy value, aka high compatibility).

But what’s the point?

Simple, in EBTs, every prediction may be subject to internal refinement, which sounds incredibly similar to thinking.

Unlike LLMs, which will see the bottom example “The dog caught the” and immediately respond with the most likely next word, “frisbee”, the EBT will iteratively refine its prediction during inference through several steps until it commits to “frisbee” too.

But isn’t the LLM form factor more optimal, aka faster? We need one step, right?

Sure, but you’d better hope that first prediction is correct. It might seem faster to immediately respond ‘56,000’ to the question ‘what’s 123×456?’, only to realize that the actual answer is ‘56,088’; that’s the point of thinking longer on a task: you’re more likely to get it right.

Reasoning models solve this by generating a step-by-step approach; however, the crucial flaw is that they still allocate the same compute per prediction (per word), which makes them extremely fragile to bad initial predictions. Put another way, their success is excessively dependent on the quality of the initial tokens.

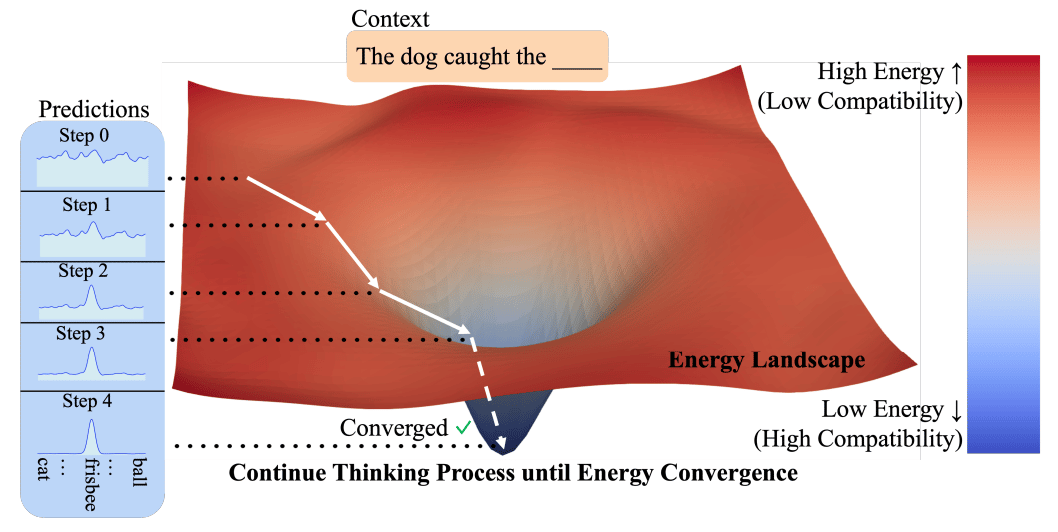

Instead, EBTs naturally identify the complexity and, from the very first prediction, perform energy minimization: internally making a suggestion, evaluating how good it is (measuring the energy, i.e., how compatible input and suggestion are), and using simple calculus (gradient descent) to refine the response until it is satisfied.
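Here is a toy, pure-Python sketch of that loop under loudly stated assumptions: the energy is a hand-written quadratic rather than a learned Transformer, the “prediction” is a small vector rather than a distribution over words, and the stopping rule (stop once the energy falls below a tolerance) is our illustrative choice, not necessarily the paper’s. All names and parameters are hypothetical. It shows how gradient descent on the prediction itself lets the number of refinement steps, and thus compute, vary per prediction.

```python
from typing import List, Tuple


def energy(context: List[float], candidate: List[float]) -> float:
    """Hypothetical toy energy: low when the candidate is compatible with the context.

    Stand-in for a learned EBT, which would read both and output one scalar.
    Here 'compatible' simply means candidate == 2 * context, element-wise.
    """
    return sum((y - 2.0 * x) ** 2 for x, y in zip(context, candidate))


def energy_grad(context: List[float], candidate: List[float]) -> List[float]:
    """Gradient of the toy energy with respect to the candidate (not the weights)."""
    return [2.0 * (y - 2.0 * x) for x, y in zip(context, candidate)]


def minimize_energy(context: List[float], initial: List[float], step_size: float = 0.1,
                    tol: float = 1e-3, max_steps: int = 100) -> Tuple[List[float], float, int]:
    """Propose -> verify (score) -> refine, until the energy is low enough."""
    candidate = list(initial)
    e = energy(context, candidate)
    steps = 0
    while e > tol and steps < max_steps:
        grad = energy_grad(context, candidate)
        candidate = [y - step_size * g for y, g in zip(candidate, grad)]
        e = energy(context, candidate)
        steps += 1
    return candidate, e, steps


easy = minimize_energy([1.0], [1.9])    # initial guess already close to compatible
hard = minimize_energy([1.0], [-8.0])   # initial guess far off
print(easy[2], hard[2])                 # 6 26
```

Each loop iteration stands in for one extra forward-and-backward pass, so the easy case spends 6 units of “thinking” and the hard case 26. In a real EBT, the gradient would come from backpropagating through the network to its input prediction rather than from a closed-form formula.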

Under such behavior, it’s much more likely that EBTs choose reasonable solutions from the get-go. Think of it using this human example.

Upon seeing a complex maths problem:

One human instantly starts writing things down, committing to a solution path based on intuition and hopefully backtracking if they chose wrong.

Another human first reflects internally and only then chooses a likely path, meaning they are already thinking deeply from the very first step.

Of course, a highly intuitive LLM will often choose the best path. But the likelihood of a model doing so decreases as the task gets more complex and, importantly, as the available training data gets scarcer, which tends to happen precisely on complex tasks.

Therefore, following EBTs’ “reflect first, decide later” approach, unlike an LLM’s “decide first, search later”, might be more suitable for challenging tasks.

This means that you can train an EBT to behave both fast and slow using the same unsupervised data (the Internet-scale data we feed to LLMs to imitate).

Over time, the EBT learns an ‘energy landscape’ where correct input-response pairs have low energy, such as ‘what fruit grows on an apple tree → apple’, while unlikely pairs such as ‘what fruit…apple tree → oranges’ have high energy.

The energy-minimization process goes from high-energy (unlikely) candidates to good ones (low energy).

A good way to understand all this is that, while both capture the patterns in data (like the patterns in human language), one (the LLM) learns how likely the word ‘y’ is to follow the group of words ‘x’, while the other (the EBT) learns to find the best ‘y’ in every prediction.
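A common way to connect the two views, added here as standard background rather than a claim from the paper: an energy function defines probabilities through the Boltzmann (Gibbs) distribution, p(y | x) = exp(-E(x, y)) / Σ exp(-E(x, y')), so lower energy means higher probability. The energies below are made-up numbers for the apple-tree question. An energy-based model can simply keep the lowest-energy answer without ever computing that normalizing sum, which for real models would range over every possible answer.

```python
import math
from typing import Dict

# Hypothetical, hand-picked energies for "What fruit grows on an apple tree?"
ENERGIES: Dict[str, float] = {"apple": 0.2, "pear": 2.5, "oranges": 4.0, "frisbee": 7.0}


def lowest_energy(energies: Dict[str, float]) -> str:
    """EBT-style choice: keep the most compatible (lowest-energy) answer."""
    return min(energies, key=energies.get)


def boltzmann(energies: Dict[str, float], temperature: float = 1.0) -> Dict[str, float]:
    """Turn energies into probabilities: p(y|x) = exp(-E/T) / sum over y' of exp(-E'/T)."""
    weights = {y: math.exp(-e / temperature) for y, e in energies.items()}
    total = sum(weights.values())
    return {y: w / total for y, w in weights.items()}


probs = boltzmann(ENERGIES)
print(lowest_energy(ENERGIES))      # apple
print(max(probs, key=probs.get))    # apple: lowest energy = highest probability
```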

But if EBTs are so great, why have we waited so long?

It’s Possible Now

By combining energy-based methods with the parallelizable nature of the Transformer architecture, this research team has made scalable energy-based models, traditionally considered a pipe dream, possible.

Through several tests, the team shows that, in their experiments, EBTs outperform autoregressive LLMs on several fronts:

requiring less compute and less data to achieve better results, with up to a 35% improvement in scaling rate.

performing better in out-of-distribution domains (unfamiliar domains)

not requiring carefully designed verifying scaffolding, as the model itself verifies its responses

In short, this is extremely compelling research. But is this a ‘destroyer of worlds’ type of research? Not quite.

EBTs still rely on the same architectural principles as modern models, meaning GPUs are still at the center, and data is as necessary as always. Thus, the dynamics of AI economics remain unchanged.

What we are changing here is the approach to model design, one that is genuinely different from the ‘imitation + reinforcement’ playbook that all prime AI Labs zealously follow. This approach is indeed revolutionary, but it does not invalidate all that we have built until now.

If anything, we might have just made AI more efficient.

Challenges remain, though:

Will these models avoid slowness with this dynamic compute allocation prior?

Will they scale to large sizes and outcompete frontier models?

Are there incentives to try this approach?

I believe the answer is yes to all, but we can’t be certain right now.

Closing Thoughts

And that’s all for this week.

The industry seems totally focused on building agentic products, and the VC side appears as healthy as ever. However, we should take our trend of the week as a warning: we are making progress, but that doesn’t mean we are making the right kind of progress.

Until Sunday!


Premium

If you like this content, by joining Premium, you will receive four times as much content weekly without saturating your inbox. You will even be able to ask the questions you need answers to.

Until next time!