LEADERS

Are People Losing Faith in AI?

Over the past few weeks, fear has taken hold, and most indices worldwide have declined. Naturally, the blame has been put on AI. And the truth is, investors have a very good reason for it.

But why?

Today, we are going to explain what is going on and why the nervousness of American investors is highly contagious to global indices (which are much more exposed to AI than you may realize). We will also look at some very concerning metrics on particular large public companies, where investors are literally betting on a default in the near future.

At the end, we’ll cover a series of recommendations to help investors better gauge how real AI's alleged progress is; a way to know whether incumbents are full of shit or not.

Let’s dive in.

The US Concerns

There are several reasons why US investors are growing nervous. The primary reason is debt, but general suspicions about OpenAI’s buildout and the perception that China has caught up aren’t particularly helping the optimistic case for US AI stocks.

Let’s cover the latter two first, because there’s much more to be said on the first one.

China, China, China

China is becoming a real threat to US AI supremacy. Besides its surprising and growing allergy toward American chips (more on that later), it is “waging war” on American companies on two fronts, releasing research that pushes both the hardware and the software efficiency frontier.

In other words, it is planting two “horrific” questions in the minds of US investors:

Is the US overcommitting to the compute buildout?

Is the US in the lead at all?

This is primarily based on two numbers: 82% and $4.6 million.

The first one refers to Alibaba’s Aegaeon token-level scheduler, which we recently covered and which has enabled Alibaba to reduce GPU usage for inference by 82% compared to what were previously considered frontier inference strategies.

Put simply, the system served the same inference workload with 213 GPUs instead of the previous 1,192.
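The 82% figure follows directly from those two GPU counts. Here is a quick sanity check in Python, using only the numbers quoted above (the variable names are ours, chosen for illustration):

```python
# GPU counts quoted above for serving the same inference workload.
gpus_before = 1192  # previous serving setup
gpus_after = 213    # with Aegaeon's token-level scheduling

# Fraction of GPUs no longer needed for that workload.
reduction = 1 - gpus_after / gpus_before

print(f"GPU reduction: {reduction:.1%}")  # prints "GPU reduction: 82.1%"
```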

This immediately raises the question: how many of these efficiency breakthroughs are US Hyperscalers simply not factoring into their spending projections?

The fears are understandable. It’s clear that efficiency is taking a backseat in US buildouts and research, and money is being spent without regard for efficiency breakthroughs. Instead, companies are literally spending every single dollar they can on this.

It’s less “this is what I believe will be necessary” and more “let’s build as much as we can, and we’ll see later whether demand ever catches up with that supply.”

This is a very risky bet, and a rather “unprofessional” way of spending money, because:

These buildouts are based on fast-depreciating assets.

They require equally impressive energy buildouts.

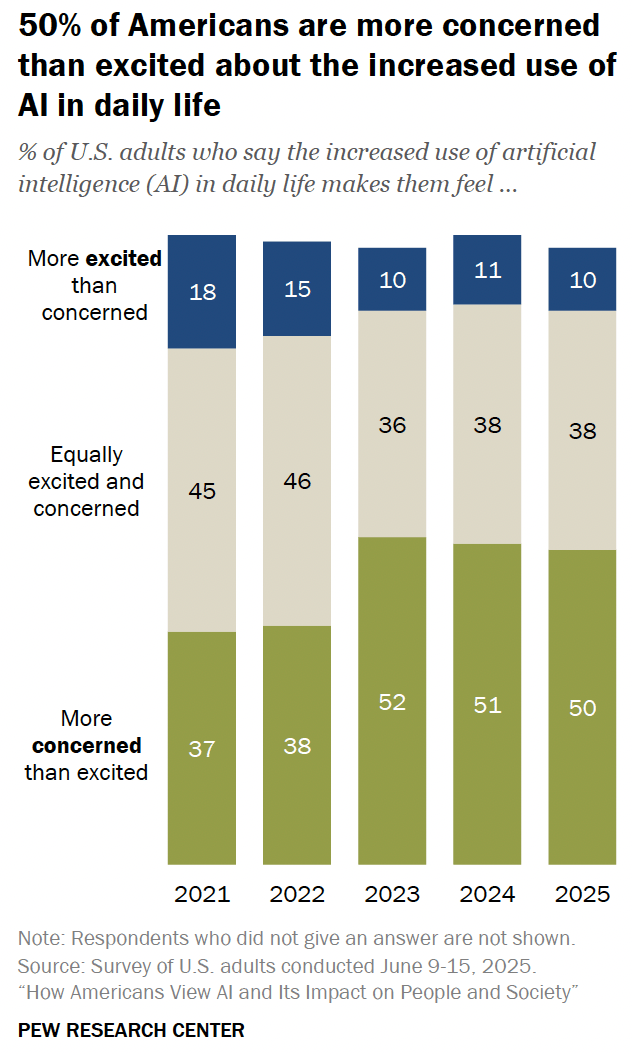

They are notoriously unpopular in the US: a greater share of Americans is more worried than excited about the technology, and the buildouts are also pushing up household energy prices.

The business is objectively worse than the Hyperscalers’ main businesses (much lower margins with higher capital costs), especially when compared to their ‘traditional’, CPU-based cloud businesses.

You don’t have to take my word on the “uncontrolled spending” matter; many of the leaders who spend this money believe so too, including Sam Altman (OpenAI CEO), Sundar Pichai (Google CEO), Satya Nadella (Microsoft CEO), and Mark Zuckerberg (Meta CEO). The latter was particularly clear on this, stating he would rather risk “a couple of hundred billion” than be late to the AI party.

Therefore, Chinese research showing that GPU usage can be cut by more than 80% is not something investors are happy to see coming from the land of the dragon. But if that has people nervous, Moonshot’s Kimi K2 Thinking model is as alarming as things get.

As we discussed on Thursday, we might be looking at the first Chinese model with a claim to being the best on the planet.

Is it better than GPT-5 Pro or Gemini 2.5 Deep Think? No, but that is not a fair comparison, because these two are either ‘best-of-N’ systems, where several model instances are run in parallel and the best output is kept, or systems that deploy humongous amounts of compute per problem, which naturally leads to the best performance.

Thus, both of these techniques obscure the apples-to-apples comparison between GPT-5 or Gemini 2.5 and Kimi K2 Thinking. Notably, Kimi K2 Thinking is another of those “DeepSeek moments”: it is claimed to have been trained with a budget of under $5 million.

This is, once again, total rubbish, not because Moonshot’s claim is false, but because it’s being misinterpreted. They were referring to the cost of the training run (the total cost of producing the model that was eventually deployed), which does not include all the very real overhead typical of AI training (dataset gathering, experiments, salaries, and more) that increases total spending by a lot.

But two very real conclusions can be drawn and seem non-negotiable:

AI software is a commodity. No AI Lab has software supremacy in AI, and Chinese labs are making this knowledge available to everyone.

Chinese Labs, much more constrained in compute and capital, are obviously much more frugal than US Labs while managing to stay in the race.

Both conclusions have investors wondering the same thing they asked back when NVIDIA crashed in March: Are we overspending?

Maybe, and OpenAI’s recent moves don’t suggest confidence at all.

OpenAI is nervous

Another topic top of mind for many is OpenAI’s recent controversies. For one, nobody actually understands how a company with a $13 billion run rate (recently updated to $20 billion by December, according to Sam) can spend $1.5 trillion in the next several years.

Who’s going to fund that?

We’ll answer that below. Beyond the obvious questions, OpenAI’s PR strategy is debatable. Its CFO’s clumsy comments, which basically begged the US to bail the company out if its huge spending commitments overextend it, even prompted politicians like Republican Governor Ron DeSantis to jump on the matter, earning considerable praise from several Democratic leaders and voters at a time when both sides can’t even agree on whether the sun rises in the morning.

How bad did Sarah Friar’s comments have to be to unite both parties on anything, and during a federal shutdown at that? That takes a different level of stupidity from OpenAI.

And while OpenAI is the face of uncertainty in US markets these days, it’s only a symptom of the real risk that keeps investors awake at night: debt.

US, What are You Doin’?

To say that debt is growing in importance as a key mechanism to fund AI CapEx is an understatement. The shift is visible in hard numbers.

The growing tendency to issue corporate bonds

At the center of it all, as you may imagine, are the Hyperscalers, which have started to rely more on debt. These companies have so much cash that they are investment-grade issuers, meaning that credit rating agencies believe they are unlikely to default on their debt.

The problem is how fast their debt issuance is growing. US investment‑grade issuance from AI‑heavy Big Tech hit roughly $75 billion in September–October alone, including:

Oracle’s $18 billion September bond sale

Meta’s $30 billion bond issuance, days after arranging a record ~$27 billion private‑credit vehicle with Blue Owl to fund the Hyperion campus in Louisiana

Alphabet adding fresh dollar and euro tranches, with an unprecedented 50-year maturity.

That’s way more than they issued in all of 2024. While all three were oversubscribed (more on why this is very important to the AI buildout later), JPMorgan now estimates AI‑linked issuers make up about 14% of the US IG index, overtaking banks as the dominant sector.

Yes, you read that correctly: AI-linked companies now carry more weight in the investment-grade bond index than banks.

Oracle’s case is particularly remarkable. And worrying. The same month Oracle sold $18 billion of bonds, it also became the anchor tenant in a separate $18 billion project finance loan for a New Mexico data center campus tied to the “Stargate” initiative, thanks to a consortium of up to 20 different banks. That takes Oracle‑related new debt commitments to roughly $36 billion in six weeks.

But if there’s a company riding debt, it’s Meta; it’s the poster child for the trend. The Hyperion move (which is clearly set up as an off-balance-sheet structure, more on this later) shifts funding away from pure cash flows and toward lease-backed debt. Of course, markets took note: the bond news coincided with an 11% single-day stock drop as investors recalibrated their expectations on leverage and payback windows.

Alphabet’s case is the least concerning but still worth mentioning. It raised debt in dollars and euros this month, in addition to a May euro-denominated deal, while increasing 2025 capital expenditure guidance to $91–$93 billion. This is the clearest admission that organic free cash flow will not cover AI capex at the desired speed, even for cash‑rich firms, and the choice is to mix internal cash with market debt.

And what about the spreads? How expensive is this debt?

Oracle’s bonds were priced between 40 and 165 basis points over government bonds (that is, 0.4% to 1.65% more interest), depending on the maturity of each bond (Oracle issued bonds with several maturities).

Meta’s bond issuance was reportedly priced at 140 basis points over Treasuries, or 1.4% more interest.

For Google, with its issuance of approximately $25 billion ($17.5 billion in dollars and €6.5 billion in euros), announced on November 3-4, 2025, the initial “price talk” was about 1.35 percentage points (135 basis points) over Treasuries. The longest-dated portion ended up at about 1.07 percentage points (107 basis points) over Treasuries.
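For readers less used to bond jargon, a basis point is one hundredth of a percentage point, so the spreads above convert as shown in the sketch below. It is illustrative only: the helper names are ours, and the annual-cost figure assumes, purely for the sake of the example, that Meta's whole $30 billion issuance carried a single 140 basis point spread, which is not how multi-tranche deals are actually priced.

```python
def bps_to_percent(bps: float) -> float:
    """Convert basis points to percentage points (1 bp = 0.01%)."""
    return bps / 100


def extra_annual_interest(principal_usd: float, spread_bps: float) -> float:
    """Yearly interest paid above the Treasury rate on a given principal."""
    return principal_usd * spread_bps / 10_000


# Spreads over Treasuries quoted in the text, in basis points.
spreads_bps = {
    "Oracle (tightest tranche)": 40,
    "Oracle (widest tranche)": 165,
    "Meta": 140,
    "Google (longest tranche, final)": 107,
}

for issuer, bps in spreads_bps.items():
    print(f"{issuer}: {bps} bps = {bps_to_percent(bps):.2f}%")

# Hypothetical simplification: all $30 billion priced at 140 bps.
meta_extra = extra_annual_interest(30e9, 140)
print(f"Meta, extra interest per year: ${meta_extra / 1e9:.2f} billion")
```

Under that simplification, the premium over Treasuries costs Meta about $0.42 billion a year, which helps explain why cash-rich issuers treat this debt as cheap.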

But what does this mean? It is worth monitoring, but not yet a reason to panic.

Apart from Oracle, these companies have the cash to fund their AI spending. Hence, they are tapping the bond market because, well, they can. It’s cheap capital, as investors are willing to accept interest rates that are not much higher than those they demand from the US Government itself.

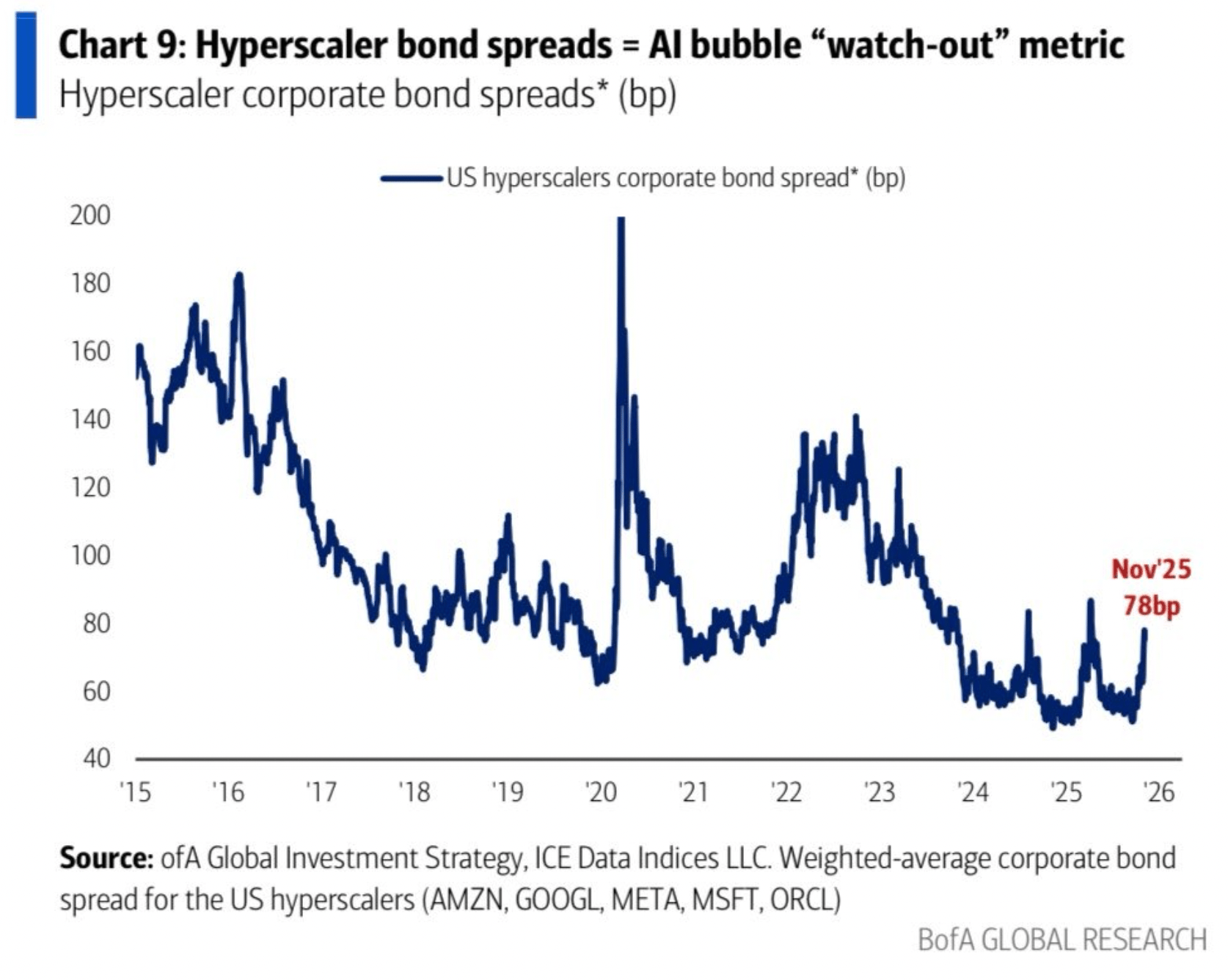

That said, even though it’s too early to panic, corporate bond spreads for Hyperscalers have spiked back up to 78 basis points this November, to the point that some analysts are citing this metric as something to “watch out” for.

This is a sneak peek of one of the crucial metrics to watch out for in our investor playbook for navigating AI uncertainty, which we’ll see later.

But if corporate bonds are a nice way to gauge investor sentiment toward the AI bubble, what if I told you investors are betting hard that one very prominent public US AI company will default in the next few years?

And, even more controversially, that we are repeating the same practices that led to the 2008 financial crisis?

An Unexpected Nasty Protagonist
