LEADERS

Anti-Hype Guide, Part 2: Andrej Karpathy’s View.

Last week, we saw how Rich Sutton, one of the fathers of Reinforcement Learning (RL) and a winner of the 2024 Turing Award (computer science's equivalent of the Nobel Prize, announced in 2025), bashed the current state of AI, arguing that LLMs are not on a direct course to AGI.

Soon after, Andrej Karpathy, one of the most respected thinkers in the industry (founding member of OpenAI, Director of AI at Tesla for several years, and the person who coined or popularized terms like “hallucinations” and “vibe coding”), offered an even harsher take, going as far as saying that “RL is terrible” and that code from AIs is “slop” (slang for low-quality output).

This newsletter covers questions about AI you didn’t even realize you should be asking yourself. Andrej’s views will make you think about biology, evolution, how dreams could inspire new training methods, and even ghosts.

Finally, he also shared his vision of what might be the defining form factor for AGI, the “cognitive core”. And you’ll be shocked to see how little it has to do with current models.

Let’s dive in.

To say Karpathy is a legend is an understatement.

He is, without a doubt, the most influential figure in the space right now, at least when it comes to shaping how to think about the technology itself.

And while most people expected an optimistic Andrej when he went live on the Dwarkesh Podcast, what we got was a man who had “suddenly” become very pessimistic about a lot of what is going on these days.

The Year of Agents

Since the early innings of 2025, many researchers have shared their excitement about 2025 being the ‘year of the agents’.

The clearest example of an ‘AI agent’ in 2025, and probably the only one, is the coding agent. These agents can build entire apps on their own and have reportedly worked for 30 continuous hours.

But does Karpathy use them? No.

In his case, he still relies primarily on autocomplete. That is, he writes the code himself and uses Cursor’s autocomplete to finish the line he is typing. No vibe-coding, no nothing.

For anyone betting on agents, that’s quite possibly the worst answer he could have given. What I will say is that, personally, I do use them a lot in their agent form (e.g., “build this” or “refactor that”), and what’s probably going on here is two things:

- I’m nowhere near his level as a programmer; he is one of the best AI engineers on the planet.

- He’s probably working on really out-of-distribution tasks.

The critical point is the second one, and it explains a lot of the other issues Andrej sees that I’ll outline next.

LLMs are still extremely dependent on, and bound by, what they’ve seen during training.

We’ll later give Andrej’s reasons for this, but it's hard to disagree with it. The reason this happens to them is that they are still primarily trained through imitation learning; they are forced to replicate human data.

In the same way you don’t really learn as well when you’re simply copying solutions to problems instead of solving them yourself, LLMs may struggle to think out of the box if they’ve only learned by imitation.
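To make “imitation” concrete, here is a minimal sketch of the next-token objective. It assumes a toy three-word vocabulary and made-up probabilities (none of these numbers come from a real model), and the names `imitation_loss`, `copycat`, and `original` are purely illustrative. The point is that the loss is lowest when the model puts its probability on exactly what the human wrote next.

```python
import math

def imitation_loss(predicted_probs, human_tokens):
    """Average cross-entropy between the model's predictions and human text.

    predicted_probs: one dict per position, mapping token -> probability.
    human_tokens: the token a human actually wrote at each position.
    """
    total = 0.0
    for probs, target in zip(predicted_probs, human_tokens):
        # The loss grows as the model puts less probability on the human's token.
        total += -math.log(probs[target])
    return total / len(human_tokens)

# Hypothetical toy data: the human wrote "the cat sat".
human = ["the", "cat", "sat"]

# A model that copies the human almost perfectly.
copycat = [
    {"the": 0.9, "cat": 0.05, "sat": 0.05},
    {"the": 0.05, "cat": 0.9, "sat": 0.05},
    {"the": 0.05, "cat": 0.05, "sat": 0.9},
]

# A model that prefers other continuations at every position.
original = [
    {"the": 0.2, "cat": 0.4, "sat": 0.4},
    {"the": 0.4, "cat": 0.2, "sat": 0.4},
    {"the": 0.4, "cat": 0.4, "sat": 0.2},
]

copycat_loss = imitation_loss(copycat, human)
original_loss = imitation_loss(original, human)
print(f"copycat: {copycat_loss:.3f}, original: {original_loss:.3f}")
```

Under this objective, any deviation from the human text is penalized, whether or not the deviation would have been a good idea.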

But wait, isn’t Reinforcement Learning, or RL, the solution to this?

In RL, we don’t show the model how to solve the problem; we only tell it whether its final answer was right. Isn’t that how humans learn, and what might lead to AIs being much smarter and more general?

And here’s where one of Andrej’s most shocking beliefs comes through: RL is not what humans do, and it’s terrible.

RL is terrible

Before you lash out at me after I’ve been preaching how important RL is today for the future of AI, only to see an expert saying it’s terrible, let me explain what Andrej actually meant by that:

He said RL is indeed terrible, but it's the best way we have to teach AIs right now.

As I explained last week, RL is like dog training with treats: using rewards (the treat) to lure the dog (the agent) into performing the actions we desire.

Last week, we also explained how tricky this is, particularly for two reasons:

Sparsity. In RL, the model gets far less feedback per action than in imitation learning.

During imitation learning, every prediction the model makes is measured against a ground truth, meaning the model is told how well or badly it’s doing every step of the way.

In RL, we’re no longer “holding the model’s hand”; we just tell it whether the final response is correct. The model may therefore perform many actions before receiving any feedback, which means models take forever to learn anything.

Noise. In RL, we take the blind bet that the entire trajectory was correct, when it’s most likely not.

In other words, the model may take 100 actions before solving the math problem correctly. The issue is that it might have shit the bed multiple times in the process.

However, today it’s too expensive to verify every single step for correctness, so we just treat the entire trajectory as correct.

Thus, even if the reasoning process contains wrong assumptions, we are still basically telling the model to do more of the same.
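Both problems can be seen in a minimal sketch of outcome-only credit assignment. The trajectory, the `valid` flags, and the function name `outcome_credit` are hypothetical; in real training nobody has the `valid` labels, which is exactly the issue.

```python
def outcome_credit(steps, final_answer_correct):
    """Outcome-only RL: one reward for the whole trajectory, copied to every step."""
    reward = 1.0 if final_answer_correct else 0.0
    return [(step["text"], reward) for step in steps]

# Hypothetical trajectory: the second step is a mistake that a later step undoes.
# The "valid" flag is hidden from the trainer; it is here only so we can inspect.
trajectory = [
    {"text": "Set up the equation", "valid": True},
    {"text": "Drop a minus sign", "valid": False},
    {"text": "Re-derive and fix the sign", "valid": True},
    {"text": "State the answer", "valid": True},
]

credits = outcome_credit(trajectory, final_answer_correct=True)

# Sparsity: four actions, but only one distinct feedback value.
distinct_signals = len({reward for _, reward in credits})

# Noise: the bad step is rewarded just like the good ones.
wrongly_rewarded = [
    text
    for (text, reward), step in zip(credits, trajectory)
    if reward > 0 and not step["valid"]
]
print(distinct_signals, wrongly_rewarded)
```

The mistaken step receives the same positive reward as the steps that actually solved the problem.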

This last point is the reason Andrej believes humans do not do RL, or at least not in most reasoning processes. Instead, he argues we purposefully reflect on the entire process once finished, internalizing what worked and what did not.

There is an analogue for this in AI land: process supervision, built on process reward models (PRMs) and popularized by OpenAI in 2023.

In this method, RL is not based only on the outcome (whether the model got the final response right or wrong); instead, every single step is reviewed.

This is problematic because it would normally require direct human annotation of every single trajectory. In a regular training run, we’re talking about billions or, for larger runs these days, trillions of examples, with thousands of tokens per example.

This is, of course, not humanly possible, so we instead rely on LLM-as-a-judge, where we use other LLMs to score a model’s intermediate outputs.

This is what most frontier labs are doing these days.
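As a rough sketch of the idea, assuming a stand-in judge: `toy_judge` below is a hypothetical hard-coded rule, where a real pipeline would call another LLM, and the step texts are invented for illustration.

```python
def toy_judge(step_text):
    """Stand-in for an LLM judge: returns a score in [0, 1] for a single step.

    Hypothetical rule for illustration only; a real judge would be a model call.
    """
    return 0.0 if step_text.lower().startswith("drop") else 1.0

def process_rewards(steps, judge):
    """Process supervision: score every intermediate step, not just the outcome."""
    return [(step, judge(step)) for step in steps]

# Hypothetical reasoning steps for one math problem.
steps = [
    "Set up the equation",
    "Drop a minus sign",
    "Re-derive and fix the sign",
    "State the answer",
]

scored = process_rewards(steps, toy_judge)
flagged = [step for step, score in scored if score < 0.5]
print(flagged)
```

Each step now gets its own reward, so a mistake can be penalized even when the final answer is right. The quality of that signal, however, is only as good as the judge producing it.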

The problem with this approach?

Well, LLMs-as-judges, despite their popularity, introduce another big issue with AI: model collapse.

Models lack real creativity

Have you ever tried asking all the popular models for a joke? Chances are, the jokes are all very similar.

This is because models are just maps between an input and an output, and the primary variable that determines the output is frequency: the more often a sequence of text appears as the output for a given input, the more likely it becomes the output the model “obsessively” resorts to. And because models are trained on heavily overlapping data, they sometimes respond almost identically.

Consequently, if you train an AI on feedback from another AI acting as a judge, your LLM will slowly converge on a smaller and smaller subset of responses, formally known as ‘model collapse’.

This is also why the growing volume of AI-generated content on the Internet makes it a worse place to train AI models by the day. And AI is already creating more content than humans.
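A toy simulation shows the shrinking effect. It rests on strong simplifying assumptions: the “model” is just a table of response probabilities, “retraining” is just counting samples, and the 20 jokes, the sample size, and the seed are all arbitrary choices for illustration.

```python
import random
from collections import Counter

def next_generation(distribution, n_samples, rng):
    """Sample responses from the current model, then 'retrain' on those samples.

    Retraining is approximated by counting how often each response appeared.
    """
    responses = list(distribution)
    weights = [distribution[r] for r in responses]
    samples = rng.choices(responses, weights=weights, k=n_samples)
    counts = Counter(samples)
    # Responses that were never sampled vanish from the next model for good.
    return {response: count / n_samples for response, count in counts.items()}

rng = random.Random(0)  # fixed seed so the run is repeatable

# Hypothetical starting model: 20 equally likely jokes.
model = {f"joke_{i}": 1 / 20 for i in range(20)}

diversity = [len(model)]  # number of distinct jokes the model can still tell
for _ in range(30):
    model = next_generation(model, n_samples=25, rng=rng)
    diversity.append(len(model))

print(diversity)
```

Once a response drops out of the training data, nothing in this loop can bring it back, so the number of distinct responses can only stay flat or fall from one generation to the next.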

There are many possible solutions to LLMs’ lack of creativity. For instance, I recently wrote a Medium article explaining why the tokenizer prevents AI models from expressing everything they know.

Another perhaps even more fascinating insight is that we might be using them in the completely wrong way.

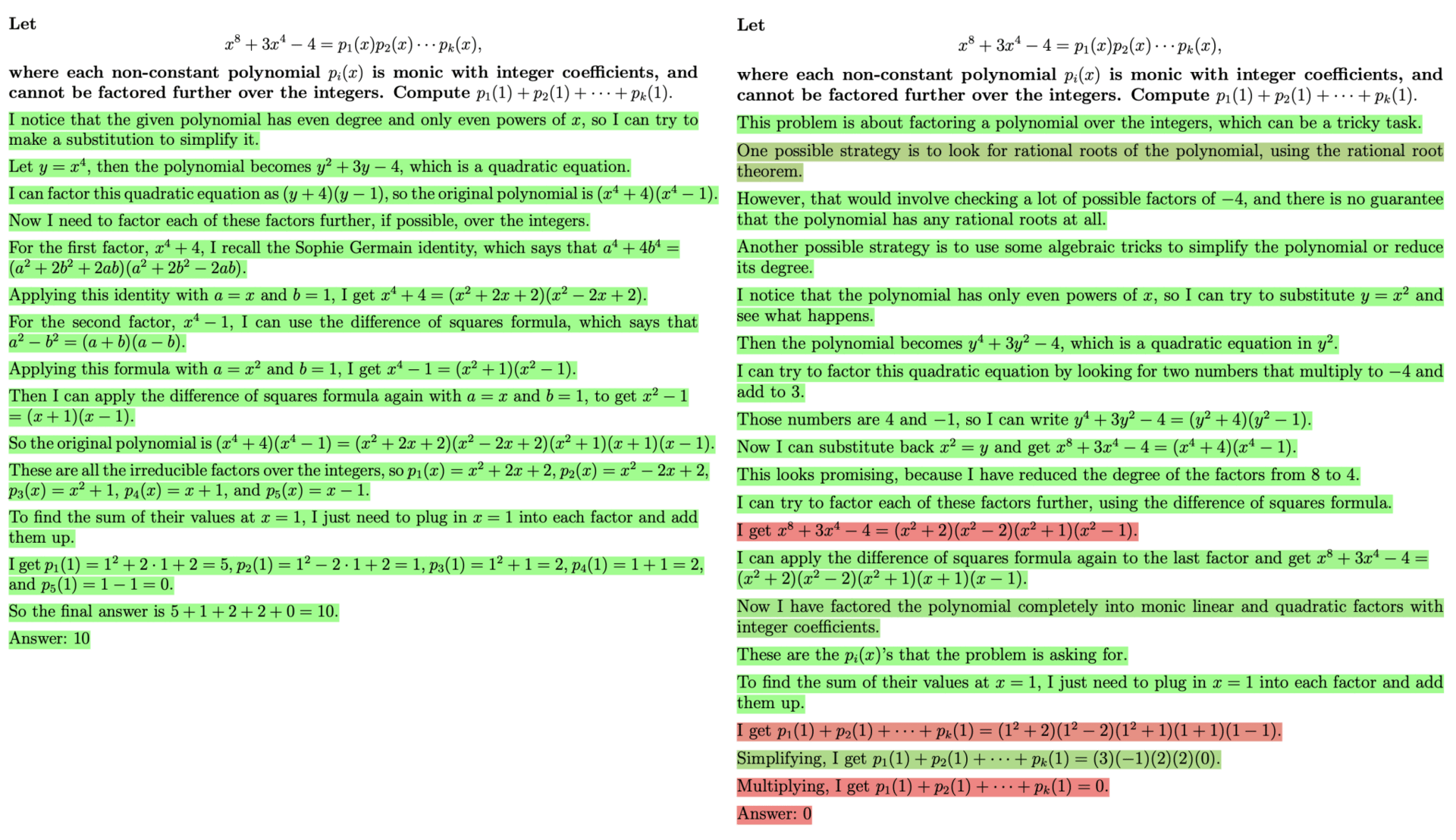

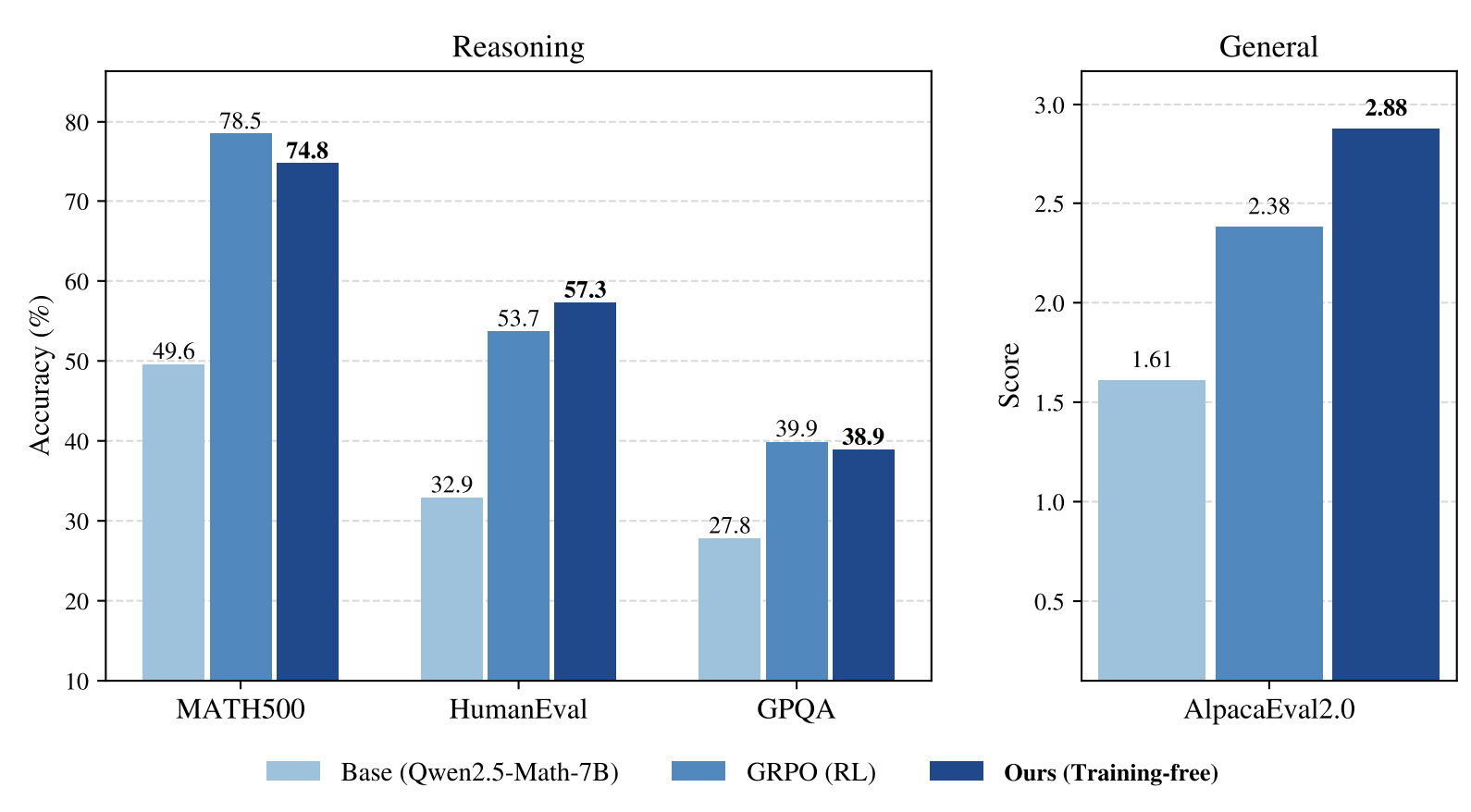

A Harvard team showed that, with better sampling (the way we extract words from LLMs), we can get models to generate better responses than they do after RL training, meaning we might not need RL training at all!
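For readers unfamiliar with what “sampling” means here, this is a minimal sketch of the simplest sampling knob, temperature. It is plain temperature scaling, not the method from the study mentioned above, and the scores in `logits` are invented for illustration.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Turn raw scores into sampling probabilities; lower temperature = sharper."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical scores a model assigns to three candidate next words.
logits = [2.0, 1.0, 0.5]

default_probs = softmax_with_temperature(logits, temperature=1.0)
sharp_probs = softmax_with_temperature(logits, temperature=0.5)

print([round(p, 3) for p in default_probs])
print([round(p, 3) for p in sharp_probs])
```

The model’s weights are identical in both cases; only the way we draw words from it changes, and with it the responses we end up seeing.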

But having acknowledged all these limitations, did Karpathy propose his view of the ideal AI model? In fact, he did.

And to do so, he talked about evolution… and ghosts.
