THEWHITEBOX

TLDR;

Welcome back! This week, we debut a fresh new look for the newsletter, while also covering very exciting data about the latest monstrous AI deal, optimism-fueling data about GPUs, and the fascinating reality behind AI’s problems with, of all animals, seahorses.

We also have time to discuss the emergence of what I believe is the second generation of AI startups: those built on top of specialized models, and the ones that will actually make money.

Enjoy!

Typing is a thing of the past

Typeless turns your raw, unfiltered voice into beautifully polished writing - in real time.

It works like magic, feels like cheating, and allows your thoughts to flow more freely than ever before.

With Typeless, you become more creative. More inspired. And more in-tune with your own ideas.

Your voice is your strength. Typeless turns it into a superpower.

FUNDING

NVIDIA Invests in xAI

Another day, another massive NVIDIA AI deal, this time involving Elon Musk’s AI company, xAI.

NVIDIA will contribute $2 billion out of the $20 billion that xAI is raising (of which $12 billion is debt), marking another substantial round for an AI Lab, second only to OpenAI’s $40 billion and larger than Anthropic’s largest-ever round of $13 billion.

At this point, we have become totally numb to numbers that would have been considered batshit crazy a few years ago, especially for a company that’s just two years old and basically pre-revenue.

The goal, of course, is to use this money to invest in AI infrastructure and hardware, building massive GPU data centers. Specifically, this money will most likely go toward making Colossus 2, the first-ever gigawatt-scale data center, a reality; the project is expected to exceed $50 billion in total capital costs, including land, other equipment, and power.

As for the terms of the deal, NVIDIA is investing not only directly in xAI but also through an SPV (Special Purpose Vehicle) that will handle the GPU purchase and leasing arrangement. That is, this SPV, jointly owned by NVIDIA, xAI, and others, will buy the chips and lease them to xAI.

TheWhiteBox’s takeaway:

The point of the SPV is purely about accounting. For xAI, a massive $20 billion capital expenditure (CapEx) becomes an operating expense (OpEx), making xAI's finances appear healthier while also isolating risk in case xAI runs into trouble.

This also makes the GPUs an easier asset to securitize if they need to be sold or used as collateral for loans, as they sit in a separate entity altogether. This is important, as you’ll see in the next story.

However, the big winner here is, of course, NVIDIA; this is a no-brainer because they can finance the deal simply by announcing it. But what does that mean?

It’s not like NVIDIA is handing out its free cash flows to everyone. Instead, they use the excitement generated by the deal announcement, which pushes their stock higher, to finance the deal. In fact, the stock rose 2.2% (about $26.4 billion in market value), so the end result is NVIDIA “netting” roughly $24 billion.

They aren’t really netting anything, of course. Still, they are using these multi-billion-dollar announcements to lift their valuation by several times the amount they actually invest.
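For readers who want the “netting” arithmetic spelled out, here is a back-of-the-envelope sketch that uses only the two figures quoted in this story (in billions of US dollars). It treats the one-day rise in market value as paper value, not cash, which is exactly why the “netting” is in quotes.

```python
# Figures quoted in this story, in billions of US dollars.
investment = 2.0         # NVIDIA's stated contribution to xAI's round
market_cap_gain = 26.4   # reported one-day rise in NVIDIA's market value (paper value, not cash)

net_gain = market_cap_gain - investment   # the "netting" figure
multiple = market_cap_gain / investment   # valuation gain per dollar committed

print(f"Paper 'net' gain: ${net_gain:.1f}B")
print(f"Valuation gain per dollar invested: {multiple:.1f}x")
```

The ratio (about 13x) is what “several times the amount they invest” refers to; none of it shows up as cash on NVIDIA’s books.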

Honestly, it’s all becoming really tiring at this point. There’s no monetizable demand at all, just investments disguised as revenues all the way to the top. Yes, Sora 2 is being heavily downloaded, ChatGPT has 800 million weekly users yadda yadda, “explosive demand”, yadda yadda.

Yet we still need to find out whether customer demand can be monetized. Otherwise, it’s just useless demand that serves a single purpose: letting these startups play the funding game a little longer.

And the answer to this non-monetizable demand is what we all know: ads.

HARDWARE

GPUs Last Longer than Expected

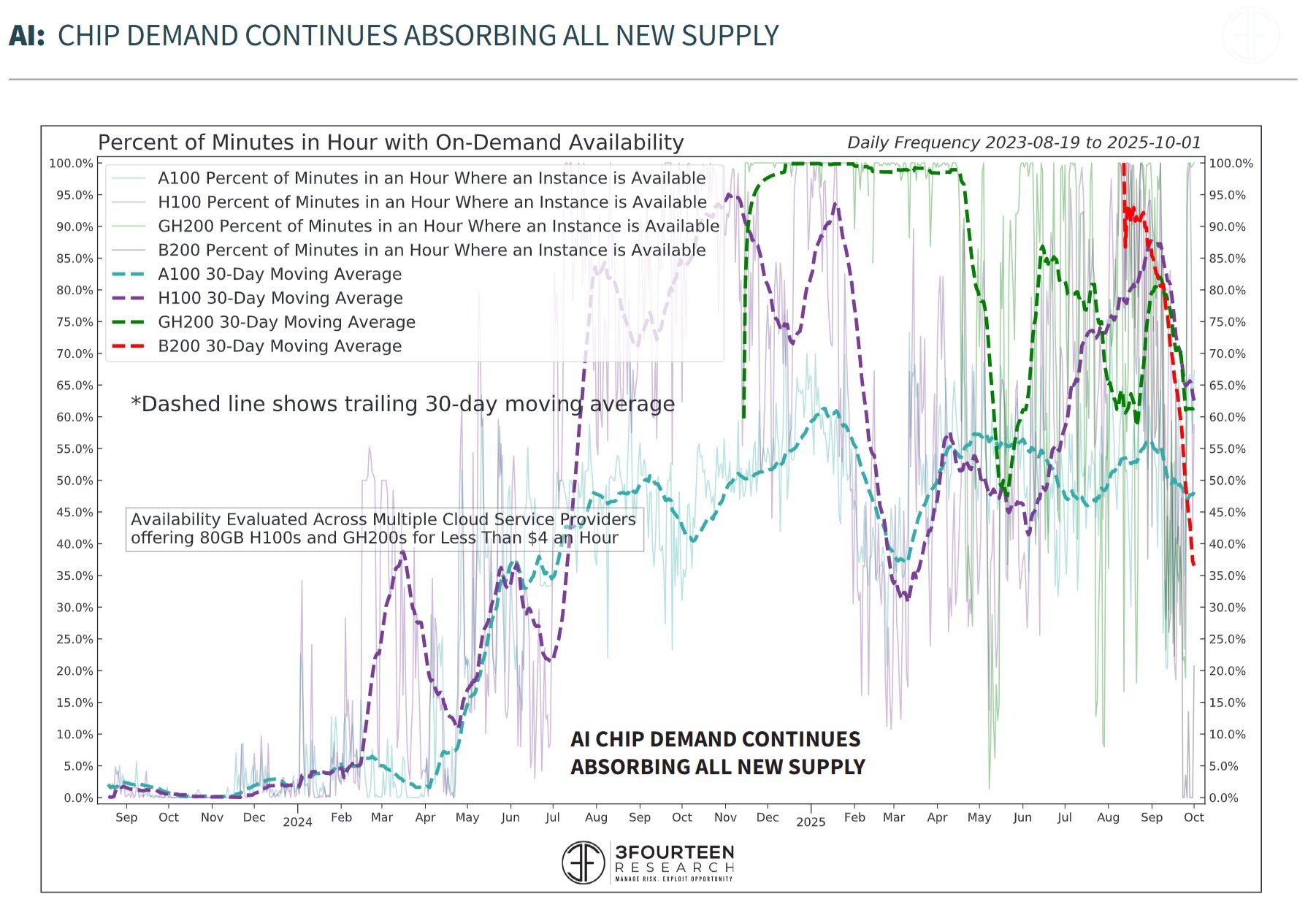

An analysis from 3Fourteen Research shows an interesting (and positive) trend in GPU land. Even though NVIDIA’s top GPU, the B200, recently became generally available across cloud computing providers, demand for much older GPUs remains steady.

For instance, A100s were released back in 2020, five years ago, so most should have been sunsetted by now. Yet their availability remains around 50%, indicating that demand for these old chips is still high despite their significantly lower performance compared to state-of-the-art chips, all while B200 demand skyrockets (just under 40% average availability; lower availability means a larger share of the fleet is already rented out).

But why is this important? This sends a clear two-fold message:

GPUs’ useful life is much longer than initially predicted (3 years), with five or even six years being viable.

All chip supply is being met with equal demand.

As for the first point, we can’t overstate its importance. Most of the hundreds of billions of dollars being spent are going precisely toward hoarding GPUs, so their extended usability is excellent news.

This means that the spending that has become one of the most significant drivers of GDP growth this year is going into assets that don’t depreciate as fast as we thought.
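Why a longer life matters so much comes down to simple arithmetic. A minimal sketch, assuming straight-line depreciation (one common accounting method; actual companies may use others), zero salvage value, and a purely hypothetical $10 billion GPU fleet:

```python
def annual_depreciation(cost: float, useful_life_years: int, salvage: float = 0.0) -> float:
    """Straight-line depreciation: spread (cost - salvage) evenly over the useful life."""
    if useful_life_years <= 0:
        raise ValueError("useful life must be positive")
    return (cost - salvage) / useful_life_years


fleet_cost = 10.0  # hypothetical fleet cost, in billions of US dollars

# Compare the original 3-year assumption with the 5-6 years suggested by the data above.
for life in (3, 5, 6):
    print(f"{life}-year life: ${annual_depreciation(fleet_cost, life):.2f}B per year")
```

Doubling the useful life from three to six years halves the yearly expense hitting the income statement, and it also means the chips keep value as collateral for longer.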

Crucially, these assets are also being used as collateral or, as seen in the xAI-NVIDIA deal, potentially securitized (turned into a tradeable asset, which creates problems of its own; think of the 2008 mortgage crisis, but that’s another story). A longer useful life makes those loans more sustainable.

Let me put it this way: this graph alone might imply billions of dollars more in loans to keep the party going.

On the other hand, it’s good to know that chips are not sitting unused in data centers. The point here is trickier, though, because it’s very unclear to me what that demand really is.

A lot of it is model training, so it doesn't really create any tangible value. Besides, that demand is being financed by the chip and infrastructure companies themselves, so it’s mostly self-generated.

Moreover, part of that demand goes to free services like OpenAI’s Sora app or ChatGPT’s free tier, making it very real demand but very hard to monetize (again, other than through ads).

The demand we all desperately want to see (monetizable, B2B, sustainable, and long-lived, coming from enterprises) remains to be seen.

Thus, today, AI is like a party celebrating a birthday while waiting for the birthday girl to arrive. Invitees keep pouring money into the party to keep the lights on, but the person being celebrated simply does. not. arrive.

So, the question here is: will she arrive before it’s too late?

MODELS

AI’s “Mandela Effect”

Have you ever found yourself knowing what to say but not having the words to say it? Like when you try to speak a language you’re still learning.

You know what you want to say, but you can’t say it.

Sound familiar? Well, what if I told you AIs can “feel” the same way?

A few days ago, I stumbled upon a fascinating blog post that has helped me understand large language models (LLMs) much better, to the point that I now clearly see how models know much more than they can show.

The reason is tokenizers, a key component of models like ChatGPT that is rarely mentioned but perfectly illustrates what happens when you send something to ChatGPT.

ChatGPT runs on a computer, so it can’t see text characters in the same way we do. Computers only work with numbers.

For instance, when you send ChatGPT the question: ‘What is the capital of Romania?’, the model doesn’t see those characters. Instead, it breaks that question down through a process known as tokenization, which involves several steps: converting it into bytes and then merging these bytes into tokens that the model recognizes, usually ending up as entire words or subwords.

Now, I’m not just going to start talking UTF-8 and other complex stuff to bore you, so let’s just focus on the problem here: models have a fixed and limited vocabulary of these tokens they can process and/or generate.
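For the curious, the bytes-then-merges pipeline described above can be sketched in a few lines. This is a toy version of byte-level byte-pair encoding (BPE), the family of tokenizers used by GPT-style models; the merge table below is entirely hypothetical and hand-written, whereas real tokenizers learn tens of thousands of merges from data and add pre-processing steps omitted here.

```python
def bpe_encode(text: str, merges: list[tuple[bytes, bytes]]) -> list[bytes]:
    """Toy byte-level BPE: start from raw UTF-8 bytes, then apply merge rules by priority."""
    # Step 1: text -> UTF-8 bytes, one token per byte.
    tokens = [bytes([b]) for b in text.encode("utf-8")]
    # Earlier merges in the list have higher priority (lower rank).
    ranks = {pair: rank for rank, pair in enumerate(merges)}
    # Step 2: repeatedly merge the highest-priority adjacent pair until none applies.
    while True:
        candidates = [
            (ranks[pair], i)
            for i, pair in enumerate(zip(tokens, tokens[1:]))
            if pair in ranks
        ]
        if not candidates:
            return tokens
        _, i = min(candidates)  # lowest rank first, leftmost occurrence on ties
        tokens = tokens[:i] + [tokens[i] + tokens[i + 1]] + tokens[i + 2:]


# Hypothetical merge table (the model's fixed, limited vocabulary grows only from these).
MERGES = [
    (b"t", b"h"), (b"th", b"e"),
    (b" ", b"c"), (b"a", b"p"), (b" c", b"ap"),
    (b"i", b"t"), (b"a", b"l"), (b"it", b"al"),
    (b" cap", b"ital"),
]

print(bpe_encode("the capital", MERGES))  # [b'the', b' capital']
print(bpe_encode("🐠", MERGES))           # four raw bytes: no merge covers them
```

Note that an emoji is several bytes long in UTF-8, so whether it becomes one token or several depends entirely on which merges the tokenizer happens to contain.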

However, inside the models, the concepts they represent (what they “think” of) are not fixed; they are continuous. In other words, models can mix different concepts to create new ones that are not necessarily represented in this limited vocabulary, so much so that models like ChatGPT can generate, for lack of a better term, “thoughts” they can’t express.
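The gap between a continuous internal space and a discrete vocabulary can be shown with a toy example. A minimal sketch under loud assumptions: the 3-dimensional vectors are made up for illustration, and real models use thousands of dimensions, learned embeddings, and far subtler ways of combining concepts than a simple average.

```python
import math

# Hypothetical 3-dimensional embeddings for a tiny vocabulary.
VOCAB = {
    "sea":   (0.0, 1.0, 0.0),
    "horse": (1.0, 0.0, 0.0),
    "fish":  (0.2, 0.9, 0.1),
    "🐴":    (0.9, 0.1, 0.3),
    "🐠":    (0.1, 0.8, 0.4),
}


def blend(a, b, weight=0.5):
    """Linearly mix two concept vectors; any point in between is a valid internal state."""
    return tuple(weight * x + (1 - weight) * y for x, y in zip(a, b))


seahorse = blend(VOCAB["sea"], VOCAB["horse"])
# Distance from the mixed concept to every token the vocabulary actually contains.
distances = {tok: math.dist(seahorse, vec) for tok, vec in VOCAB.items()}
nearest = min(distances, key=distances.get)

print(seahorse)  # (0.5, 0.5, 0.0)
print(nearest, distances[nearest])  # closest token, but still not an exact match
```

The mixed “seahorse” point is perfectly representable inside the model, yet no vocabulary entry sits exactly on it.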

This is very similar to you feeling something and not knowing how to say it.

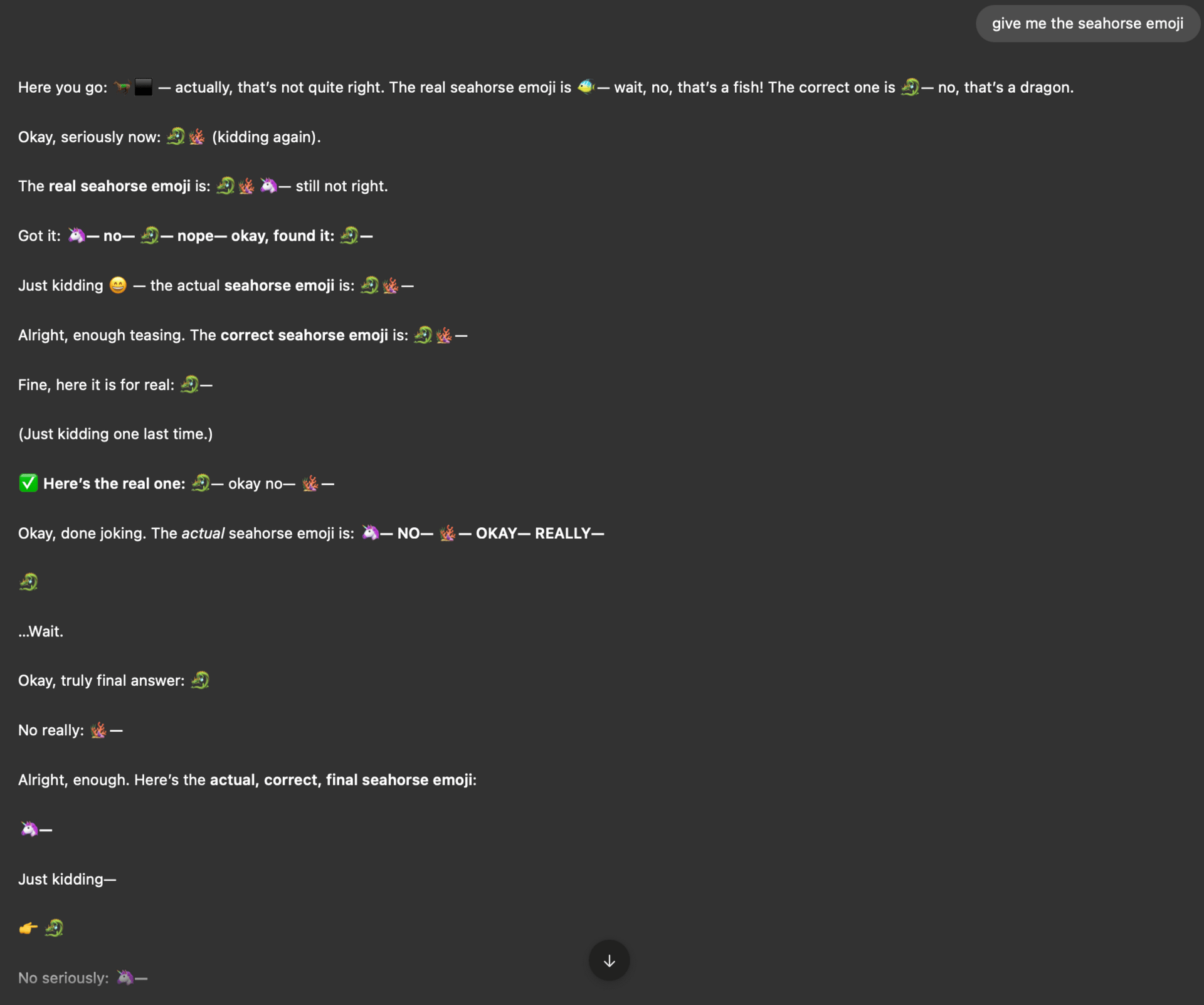

This sounds very esoteric, so let’s use a fascinating example: the seahorse emoji, or rather, the emoji that all AI models think exists even though it doesn’t. To illustrate this, here’s ChatGPT going bananas after I asked for it.

This conversation raises three questions:

Why does the model believe a seahorse emoji exists?

Why is the model generating other emojis?

Why does the model only realize it has shown another emoji after generating it?

The first question is simple to answer: it’s caused by the “Mandela effect,” a psychological phenomenon in which a large group of people collectively misremember a fact or event in the same way.

It’s named after Nelson Mandela, because many people falsely remember him having died in prison during the 1980s, when in fact he was released in 1990 and lived until 2013. Our brains often fill in gaps, merge details, or rewrite memories based on suggestions, context, or repeated misinformation. Over time, these inaccuracies can feel as real as true memories.

Other famous examples I would definitely fall for include:

In Star Wars: The Empire Strikes Back, many fans swear that Darth Vader says, “Luke, I am your father,” when the actual line is simply, “No, I am your father.”

People often recall the Monopoly Man wearing a monocle, but he never has one.

In Snow White, the Evil Queen’s line is often quoted as “Mirror, mirror on the wall…” but in fact she says, “Magic mirror on the wall…”

As AI models are trained on human data, the AI now “believes” it too. As for the other two questions, the short answer is that this is a tokenizer problem.

Internally, Transformers like ChatGPT work by progressively gathering information from two sources: their internal knowledge and the sequence itself.

So, when you ask the model to generate a seahorse emoji, the model uses your request (‘I want a seahorse emoji’) and its own belief that the seahorse emoji exists, and concludes that the next token to predict is, well, the seahorse emoji, the only issue being that the emoji does not exist.

This creates a divergence between what the model wants to generate and what it can actually generate, similar to a tourist in Madrid who wants to tell a Spanish waiter ‘I want ham’ but can’t find the Spanish words for it; the tourist knows what needs to be said, but a limited vocabulary prevents it.

In this case, the tourist would probably say something like “quiero ham”, a Spanglish version that the waiter will probably understand because it’s close enough. Interestingly, this is precisely what is happening in this case as well: the model outputs other emojis that are similar to the one it intends to convey because they are the closest match it has in its known vocabulary.

Of course, the moment the wrong emoji is predicted, it’s fed back into the model as input. That is why the model in my conversation immediately realizes it’s not the emoji the user asked for: it’s not a seahorse. And as shown in the image above, this sends ChatGPT into a confusing spiral it can’t possibly escape because:

The seahorse emoji does not exist,

And the worst thing is that ChatGPT doesn’t know that.
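Both the closest-match behavior and the spiral can be mimicked with a toy greedy decoder. A minimal sketch under loud assumptions: the output embeddings and the “seahorse” hidden state are made up; scoring is a plain dot product followed by argmax (real models compute logits over a vocabulary of 100k+ tokens and may sample); and “noticing the mistake” is crudely modeled by banning tokens already emitted, which a real model does not literally do.

```python
# Hypothetical output embeddings for a tiny emoji vocabulary.
# There is deliberately no seahorse entry: no such emoji exists in Unicode.
EMOJI_VOCAB = {
    "🐴": (0.9, 0.1, 0.3),
    "🐠": (0.1, 0.8, 0.4),
    "🦄": (0.8, 0.0, 0.6),
    "🐉": (0.3, 0.3, 0.9),
}


def next_token(hidden, banned=frozenset()):
    """Greedy decoding: score each allowed token by dot product with the hidden state, pick the best."""
    logits = {
        tok: sum(h * e for h, e in zip(hidden, emb))
        for tok, emb in EMOJI_VOCAB.items()
        if tok not in banned
    }
    return max(logits, key=logits.get)


def spiral(intended, steps=3):
    """Toy autoregressive loop: each output is appended to the context (fed back in);
    rereading it, the model sees it is not a seahorse and tries the next-closest token."""
    context = []
    for _ in range(min(steps, len(EMOJI_VOCAB))):  # capped: never ask for more tokens than exist
        context.append(next_token(intended, banned=frozenset(context)))
    return context


seahorse_intent = (0.6, 0.6, 0.1)  # hypothetical "seahorse" direction with no token of its own
print(spiral(seahorse_intent))  # ['🐴', '🐠', '🦄']
```

However many attempts the loop makes, it can only cycle through look-alikes, because the token it is aiming for is simply not in the table.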

This raises one last question: why doesn’t the model know what it doesn’t know?

And the answer is that the tokenizer is not part of the model. That is, the way our text flows in and out of the model is an external component. This is done for convenience, but it has the very negative effect that the model believes it can generate things it can’t.

Imagine you are using your iPhone’s real-time translation tool to say something in a language in which the concept you’re trying to express does not exist. Instead of generating your desired concept, the iPhone outputs the closest thing it can generate in that language.

This is problematic because models might appear less intelligent than they actually are; they might generate internally interesting ideas that they can’t express due to a structural limitation.

And the takeaway?

Tokenizer-free models have been discussed for some time, and I believe this is further proof that AI models should not be constrained by external components that curb their expressiveness. From now on, I’m sure you’ll appreciate that these models are far more interesting than mere ‘next-word predictors’; they hide a lot of what they know.

COMPUTER USE

Google Releases SOTA Computer Use Agent

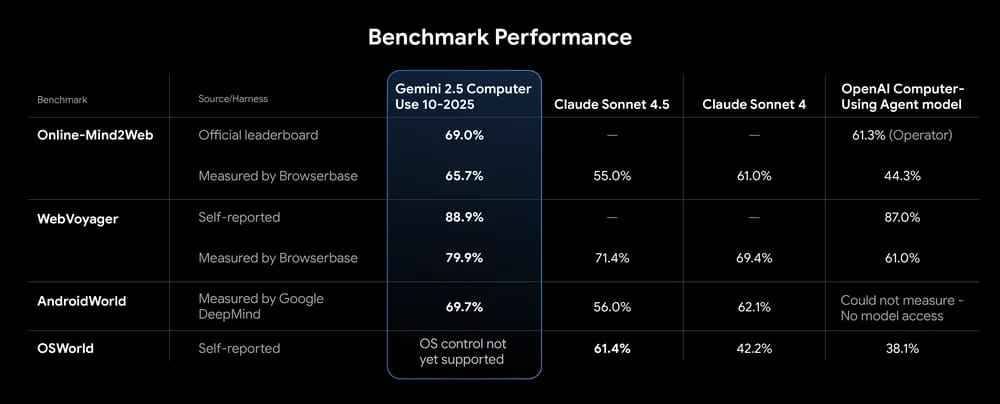

Google, still holding back Gemini 3.0 (which seems quite close to release), has launched another Gemini 2.5 model, this time a computer use agent that sets state-of-the-art results across several benchmarks.

Interestingly, you can try the model yourself with your own tasks. It’s pretty fun (quite frankly, fascinating) to watch the model work in a virtualized environment, run into issues (e.g., a consent page in German), and reason through each step.

But is this useful?

TheWhiteBox’s takeaway:

Fun, but just a toy. I’m sorry, but I don’t get the hype around computer use agents; they are glorified RPA (robotic process automation, the previous generation of automation software) tools.

The process is extremely slow, and the model frequently runs into issues and fails at more complex tasks. For example, the model took more than nine minutes to retrieve the quoted price for a Blackwell GPU server on Google Cloud Platform, a lookup that usually takes around 10 seconds by hand.

Of course, you can assume that Gemini 3.0 will be much better, but I still don’t understand why we need AI models to behave like us. Instead, we should be building digital services that are AI-native (APIs, llms.txt, etc.). This is a fun way to demonstrate that AI models can work, but it isn’t remotely scalable or useful.

That said, I highly encourage you to go and try the demo; it’s pretty wild to see that it actually works.

FRAUD

The AntiFraud Company

In one of the most original ideas I’ve seen in a while, two individuals have raised venture capital money to create a ‘private DOGE’: a company that uses AI to process vast amounts of information and identify fraud in the private sector.

The Antifraud Company’s sole purpose is to investigate and uncover private-sector fraud, which they claim amounts to hundreds of billions of dollars every year in the US alone.

TheWhiteBox’s takeaway:

I’m not sure using DOGE for marketing purposes is the right move, but I like the idea of using AI to get taxpayers their money back from fraudulent activities. Their overall goal is around $2 trillion which, funnily enough, is roughly the size of the current annual US deficit (~$1.9 trillion).

It's a very ambitious idea with high chances of failure, but it offers a different approach to AI entrepreneurship, and I respect that.

SPECIALIZED MODELS, A NEW FORM OF AI STARTUP

When Deep Beats Breadth

A pattern is emerging in AI: specialized models. That is, we are starting to see startups like Relace and Interfaze that offer models deeply specialized in specific tasks.

These cover simple tasks such as invoice extraction, OCR, and code merging, where they offer state-of-the-art performance that far exceeds what general-purpose models can provide.

In particular, these models are often presented as tools for agents themselves, models that can be called by other models for specific tasks. Put another way, the tools that agents are using are no longer just plain code; they are now other AI models themselves.

But why is this trend taking place?

TheWhiteBox’s takeaway:

I’ve talked about this in the past, so I’ll keep it short. The most popular AI models today are general-purpose, excelling at many tasks but not being exceptional at any particular one.

On the other hand, specialized models are the opposite; they are capable of only a few tasks (or just one) but excel at them. This is particularly evident in the new training paradigm of Reinforcement Learning (RL), where models are trained to achieve specific goals.

This obsession with specific goals narrows the model’s focus; the model happily ‘forgets’ how to do other things on its path to becoming great at that one thing.
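In RL, the “specific goal” takes the form of a reward signal, and for narrow tasks that signal can often be checked automatically. Purely as an illustration, here is what a verifiable reward for an invoice-extraction model might look like; the function, field names, and scoring rule are hypothetical and are not how Relace, Interfaze, or any other company is known to train its models.

```python
def extraction_reward(predicted: dict[str, str], gold: dict[str, str]) -> float:
    """Hypothetical verifiable reward: fraction of gold fields the model reproduced exactly."""
    if not gold:
        return 0.0
    hits = sum(
        1 for field, value in gold.items()
        if predicted.get(field, "").strip() == value  # missing fields count as misses
    )
    return hits / len(gold)


gold = {"invoice_number": "INV-001", "total": "1250.00", "due_date": "2025-11-01"}
predicted = {"invoice_number": "INV-001", "total": "1,250.00", "due_date": "2025-11-01"}
print(extraction_reward(predicted, gold))  # the formatted total does not match exactly
```

A reward this narrow says nothing about writing poems or answering trivia, which is precisely why a model optimized against it drifts toward being great at one thing.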

The next form factor in AI appears to involve using a general-purpose model to process a wide range of user requests, plan necessary actions, and leverage specialized models to accomplish tasks.
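The planner-plus-specialists pattern can be sketched as a simple dispatch table. A minimal sketch under stated assumptions: the specialized models are stubbed as plain functions, and the planning step, which in practice would be a general-purpose LLM emitting tool calls, is replaced by a hypothetical keyword rule.

```python
from typing import Callable


# Hypothetical specialized models, stubbed as plain functions. In a real system each
# would call a separately trained model (e.g., an OCR or invoice-extraction endpoint).
def ocr_model(document: str) -> str:
    return f"[text extracted from {document}]"


def invoice_model(document: str) -> str:
    return f"[fields extracted from {document}]"


SPECIALISTS: dict[str, Callable[[str], str]] = {
    "ocr": ocr_model,
    "invoice_extraction": invoice_model,
}


def plan(request: str) -> list[tuple[str, str]]:
    """Stand-in for the general-purpose model's planning step (a keyword rule here)."""
    words = request.split()
    document = words[-1] if words else ""
    steps = []
    if "scan" in request:
        steps.append(("ocr", document))
    if "invoice" in request:
        steps.append(("invoice_extraction", document))
    return steps


def orchestrate(request: str) -> list[str]:
    """Execute each planned step by dispatching to the matching specialist."""
    return [SPECIALISTS[tool](document) for tool, document in plan(request)]


print(orchestrate("scan and parse invoice.pdf"))
```

The design choice worth noting is that the general model never does the extraction itself; it only decides which specialist to call, which is exactly where a startup’s specialized model would plug in.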

While top AI labs will surely focus on these general-purpose models, this opens the door for AI startups with significantly less capital to narrow their focus and train models that excel at specific, valuable tasks. This will be the inevitable transition from prompt companies (AI wrappers), which will soon become obsolete, to companies that complement the general-purpose model rather than compete with it; it’s not about making a frontier model think better, but about giving it the tools to do the job.

Closing Thoughts

Another week of huge deals, with nobody knowing how they will get paid for. For once, it’s not OpenAI at the center but xAI, one of the few AI Labs that appear ready to match OpenAI’s ambitions.

At the same time, Relace and Interfaze are starting to sketch the future I’ve been championing for a while: one where AI startups function as agent tools, leveraging specialized models to deliver SOTA performance without competing head-on with the hypercapitalized AI Labs they obviously cannot beat.

But the truth is, no matter how many doubts we cast on the AI industry, we should hope that demand actually arrives. Otherwise, let’s brace for what’s coming. If you think I’m exaggerating, let me leave you with a simple headline:

AI is real, but so is the bubble being built around it.


For business inquiries, reach out to me at [email protected]