THEWHITEBOX

TL;DR

Welcome to TheWhiteBox, the place to get the recap of the most important things taking place in AI recently.

Today, we have a newsletter packed with insights: new data on o3’s real intelligence, Google’s secret to AGI, OpenAI buying Chrome?, SK Hynix’s quarterly earnings, progress on home-cleaning robots, and sleep-time compute - a solution to the elephant in the room of AI: compute affordability.

Enjoy!

THEWHITEBOX

Things You’ve Missed By Not Being Premium

On Tuesday, we sent an additional insights recap, including how o3 got caught lying, Google’s impressive model spree, reports on AIs being used for firing, & more.

FRONTIER INTELLIGENCE

ARC-AGI publishes o3 results

As you probably know, all AI Labs, from OpenAI to Anthropic, have one thing in mind: Artificial General Intelligence, or AGI, an AI that will be capable of performing most economically valuable work.

And the benchmark to test whether we are making progress in that direction is ARC-AGI.

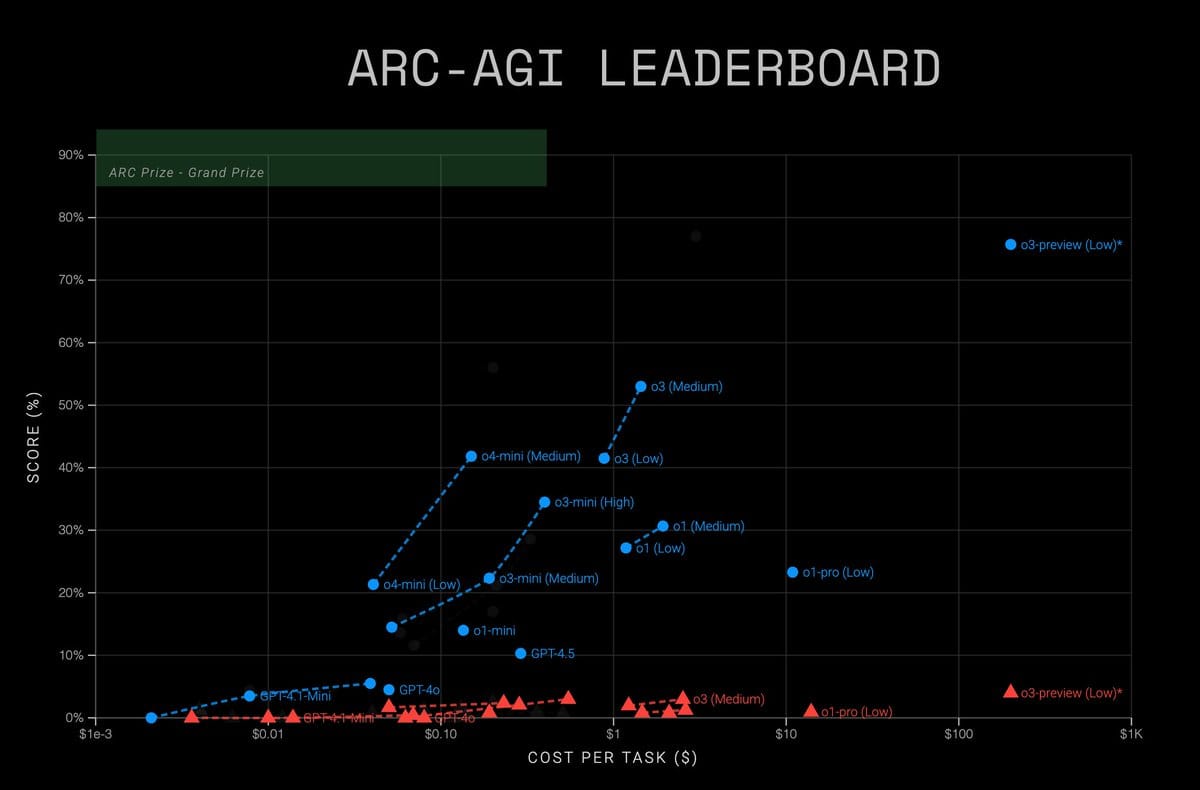

The researchers behind the ARC-AGI challenge have published the results for o3 and o4-mini, both on the original benchmark (which an earlier version of OpenAI’s o3 saturated, that is, solved) and on the new one (created as a result of that saturation to test new capabilities).

The o3 model OpenAI released last week performs worse (53%) than its December version, which had access to a much larger amount of test-time compute (allowing the model to think for longer on the task).

Unsurprisingly, both o3 and o4-mini were unable to produce meaningful results in the second benchmark (ARC-AGI-2), achieving below 3%.

In a later blog post, the researchers explain why they did not disclose the results obtained by o3-high and o4-mini-high, the settings with the highest compute budget, which had better preliminary results across the board. The issue was that these models were unable to answer most of the tasks. It seems they enter a rabbit hole of compute they can’t get out of.

This is AGI, said Tyler Cowen. Really?

TheWhiteBox’s takeaway:

Don’t get me wrong, as soon as you interact with o3, you realize the model is the best AI you’ve ever talked to; it just is. The comments are incredibly nuanced; it feels like you are talking to an expert in almost any field you can fathom.

That said, I’m adamant that this is just a very well-executed illusion of intelligence, a well-executed “speaking database,” if I may. But in the key areas that define human intelligence, the precise ones being tested here, like adaptability to new problems and key pattern abstraction (the type of stuff you would be evaluated on if you took an IQ test), you easily start to see the cracks, and performance comes crashing down.

But why does this happen?

How can a ‘PhD-level’ intelligence model (as proclaimed by some), which can give you a top-level report on quantum computing, fail to solve a simple task such as the one below, which a young kid or early teenager could solve easily?

The answer is that we make a mistake by evaluating intelligence as a task complexity problem rather than a task familiarity one. In layman’s terms, we evaluate a model’s intelligence by how complex the hardest problem it can solve is, instead of by how hard the most challenging unfamiliar problem it can solve is.

Notice the difference?

In the former, memorization can save you because, no matter how hard a task is, if you’re highly familiar with it or have memorized it, you can solve it. However, real intelligence comes when you can solve problems you have never been exposed to, problems where memorization won’t help you.

If you frame intelligence that way and test frontier AI models, you’ll see how performance comes crashing down pretty quickly.

Notably, the o3 model that amazed the world back in December with its results on this benchmark had been purposely trained for the benchmark. Pure coincidence, or an admission that task familiarity is the only way we can get AIs to solve stuff?

But if AI intelligence is so clearly limited, what is the solution then? I believe David Silver from Google DeepMind, whom we talk about in the next story, might have the answer.

FRONTIER INTELLIGENCE

Google DeepMind & The Path to AGI

While OpenAI and Anthropic usually make the biggest predictions about AGI for the world to see, the lab that is more low-key and opinionated on the topic is, by far, Google DeepMind. It is the only lab, I believe, that consistently publishes research on the subject, what it means, and how it suggests achieving it.

Now, they have published a podcast with David Silver, VP of Reinforcement Learning (RL) at Google DeepMind, which describes several key intuitions on how Google understands this goal.

The podcast is very easy to follow; both the interviewer and David speak in layman's terms all the time, so I feel comfortable recommending it to everyone.

Of course, they center the entire discussion on RL, as it is David’s area of expertise, but this is a vital topic in today’s AI world because RL is what has led to models like OpenAI’s o3.

He explains the basics of RL, its shortcomings, and, crucially, how he feels the non-verifiable rewards problem might be solved: real-world grounding.

But what is this problem?

If you read this newsletter regularly, you may have noticed how I always insist on the idea that our current reasoning models can only reason on ‘verifiable domains.’

From the way they are trained (reinforcing good behaviors and punishing bad ones), they can only learn in areas where good and bad responses are easily identifiable. For example, when solving a maths problem, you can check whether the answer is correct or not. Therefore, it’s not surprising to see how good they are getting at maths.
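To make ‘verifiable’ concrete, here is a minimal sketch of what a reward signal for a maths answer could look like. The function name `math_reward`, the exact-match check, and the 1.0/0.0 values are all illustrative assumptions, not any lab’s actual training code:

```python
def math_reward(model_answer: str, correct_answer: str) -> float:
    """Toy verifiable reward: 1.0 if the final answer matches, else 0.0."""
    # Hypothetical checker: a plain string comparison after trimming whitespace.
    return 1.0 if model_answer.strip() == correct_answer.strip() else 0.0

print(math_reward("42", "42"))  # 1.0 -> behavior gets reinforced
print(math_reward("41", "42"))  # 0.0 -> behavior gets punished
```

The point is that the check is cheap and unambiguous, which is what lets training reward or punish each attempt automatically.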

But non-verifiable domains, like creative writing, remain unsolved, and in those areas, we can only depend on knowledge compression, or how well AI models have compressed knowledge of the topic to generate ‘reasonably sounding’ responses. For example, they’ve read a lot of Shakespeare, so they can generate ‘Shakespeare-sounding’ verses.

But what’s the difference? What we haven’t figured out is how to “optimize for Shakespeare,” as we don’t know how to measure whether a ‘reasonably sounding’ Shakespeare verse is better than another similar example. This is quite possibly the biggest unanswered question in AI.

In other words, we have learned to imitate Shakespeare, but we don’t know how to train a model to be the living embodiment of Shakespeare beyond simply giving it a lot of Shakespeare texts. Conversely, in areas like maths, not only can we send these models plenty of maths data (so that they become familiar with it), but, thanks to RL, we can also train them to practice maths during training, not just memorize it, because we can tell them when they are doing the right thing and when they are not.

This is the reason no AI has come remotely close to being as good a writer as humans, but we are seeing AIs becoming superhuman in areas like board games or maths.

If these types of topics in understandable words are of interest to you, you will love the podcast.

M&A

OpenAI Considers Buying Google Chrome

According to a Reuters article, OpenAI might be interested in buying Chrome from Google. Despite Google not being willing to sell Chrome, it might be forced to, based on one of the ongoing antitrust cases it is involved in.

The most significant driver of this antitrust case is Google’s payments to hardware companies like Apple ($20 billion per year) and Samsung to make Google the default search engine on their devices (Samsung also uses a Google-owned Android fork as the operating system). These payments might indicate that Google is paying off its distribution sources so that others can’t compete.

An OpenAI executive testifying in the case also mentioned that Google declined to let ChatGPT use Google as its search engine (it currently uses Bing), which might be another sign of anticompetitive behavior.

TheWhiteBox’s takeaway:

Buying Chrome from Google could be a massive win for OpenAI, giving it the chance to use the browser’s insane distribution to put OpenAI’s models in the hands of billions of people (Google offers Gemini through the Chrome browser, which is why it has seen explosive growth to 350 million monthly active users).

When your product is a commodity, like AI models, distribution matters a lot. This is why Google, with the added value of its much more mature data center business, has it all to win.

But if Google is forced to sell Chrome and it ends up in the hands of its main AI competitor, things could get much more interesting (currently, there’s no debate Google is winning the AI race).

STARTUPS

Your AI Customer Researcher

Listen Labs has announced a $27 million Series A round led by Sequoia to automate customer research. The tool is designed to streamline customer feedback on your products.

TheWhiteBox’s takeaway:

The tool makes a lot of sense, as gathering customer feedback quickly is truly valuable, especially when you’re building products.

But again, what’s the company’s moat here?

With all due respect, it’s an AI wrapper, most likely an OpenAI wrapper (Sequoia is an OpenAI investor, so they probably get API prices with substantial discounts), featuring a good-looking interface and a series of prompts on top of an external API.

Is this all you need to raise $27 million? Feels hard to believe. The good thing going for this company is that:

I believe the product solves a real problem (streamlining customer feedback), and

better AI will make the product even better, not obsolete.

But I can’t help but wonder: how much effort would other companies need to replicate this precise idea?

Maybe I’m missing something or being too cynical, but my intuition is that the answer is “minimal.” In fact, at the current speed of progress, I would bet that by the end of the year, I can one-shot prompt this entire product with o4 or Gemini 3.0.

For these reasons, I would bet that Listen Labs’ moat here is not the product but the relationship they’ve established with Sequoia: deep pockets and cheap OpenAI API prices. Software is steadily heading to a low-margin world… so maybe that’s the type of moat you actually want.

Is undercutting others’ prices and not having a better product the future of software?

AI HARDWARE

SK Hynix Surprises… For Good

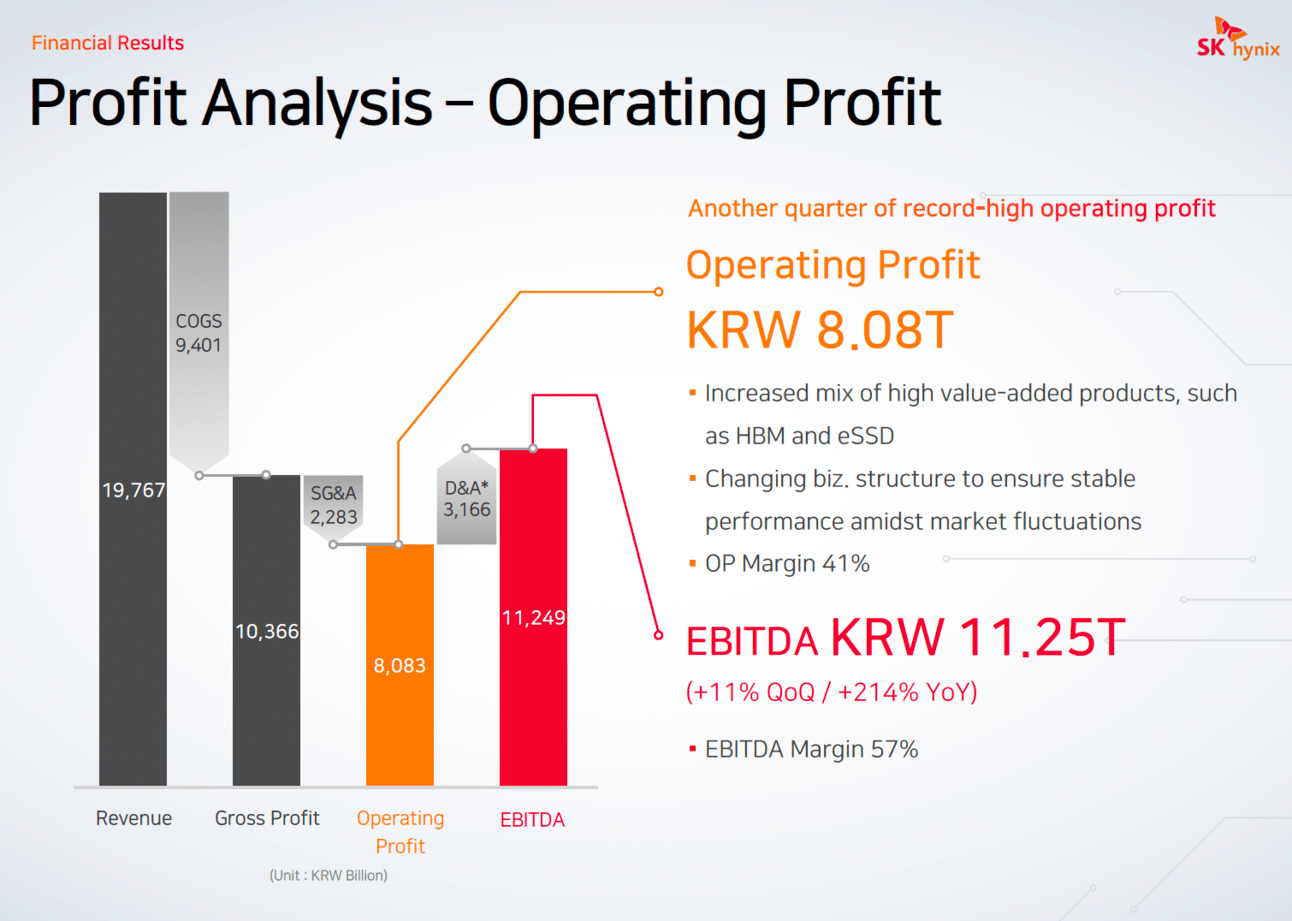

SK Hynix, a manufacturer of AI memory chips and one of three companies that can produce high-bandwidth memory (HBM) chips, has presented its quarterly earnings, beating analyst expectations.

But what's behind their numbers? Well, a lot.

TheWhiteBox’s takeaway:

SK Hynix is one of the key companies to follow when analyzing whether AI CapEx is drying up, as it is NVIDIA’s leading supplier of HBM chips. All NVIDIA AI GPUs use HBM chips, and the same applies to AMD and Huawei.

They show a very healthy 57% EBITDA margin, which has actually grown 11% from the last quarter, indicating stronger demand for their chips. This isn’t surprising considering that HBM is one of the most critical pieces in the AI accelerator picture, and its importance will only increase.

The reason for this is that the per-GPU HBM size is skyrocketing; NVIDIA has increased from 80/100 GB of HBM per Hopper GPU to 192 GB per B200 Blackwell GPU, matching both AMD's and Huawei’s respective memory allocations.

The reason for this is once again inference (as I’ve said previously, you can literally predict every single new hardware development simply by looking at how AI inference is evolving).

As reasoning models have high inference-time compute demands, workloads where memory size and speed are the main bottleneck, HBM demand will grow.

In that regard, HBM suppliers (again, only three of them exist: South Korea’s SK Hynix and Samsung, and the US player Micron) are going to have a field day as long as the AI boom continues, because the need for HBM is only going to increase (NVIDIA’s next GPU series, Vera Rubin, will grow the HBM number to 288 GB per GPU).

On the flip side, the future of this company comes with two significant caveats:

HBM is almost exclusively manufactured for AI. If AI CapEx dries out, SK Hynix is in trouble.

HBM is very expensive and takes a significant toll on fabless companies’ (NVIDIA, AMD…) cost of goods sold (the direct costs of building GPUs). HBM3 can reach prices of $110/GB, which means that in cases like NVIDIA’s Blackwell B200, it’s basically more than a third of the retail price. With NVIDIA under pressure from investors to maintain gross margins while the industry is asking for more per-GPU HBM (COGS go up), the “maths aren’t mathing,” so I’m pretty sure NVIDIA is expecting some price deflation from SK Hynix.
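To see where “more than a third” comes from, here is the back-of-the-envelope arithmetic using only figures quoted in this issue. It assumes the $110/GB HBM3 figure applies to the B200’s memory and that the B200 retails within the $30,000-$40,000 range mentioned for NVIDIA GPUs; both are simplifications, so treat the output as a rough bound rather than a bill of materials:

```python
# All inputs are figures quoted in this newsletter; variable names are illustrative.
hbm_gb_per_gpu = 192                      # HBM capacity per B200
price_per_gb = 110                        # upper-end HBM3 price, USD per GB
retail_low, retail_high = 30_000, 40_000  # assumed retail range per GPU, USD

hbm_cost = hbm_gb_per_gpu * price_per_gb
print(hbm_cost)  # 21120

# Share of the retail price taken by HBM at each end of the range.
print(f"{hbm_cost / retail_high:.0%} to {hbm_cost / retail_low:.0%} of retail")
```

Under these assumptions, HBM alone lands well above a third of the sticker price, which is why any per-GB price cut matters so much for gross margins.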

Luckily, it seems SK Hynix has a very healthy business (CapEx is 53% of operating profits), so they have strong cash flows and thus the margin to decrease prices if needed.

On a final note, not all AI accelerators require HBM. Players like Cerebras or Groq rely on direct, on-chip memory. However, their market share is currently minute, so most AI accelerators being sold still require HBM.

LIQUID MODELS

Physical Intelligence Shows Pi0.5

Physical Intelligence, a US AI robotics company, has shown its robotics progress with Pi0.5.

Unlike other startups opting for a more humanoid look (Tesla, FigureAI, Boston Dynamics in the US, Unitree in China), they are offering what’s essentially a two-armed robot that, as seen in the video, seems to be capable of performing a multitude of different actions in an unfamiliar home setting, guided by the instructions of the homeowner.

But is that impressive or not?

TheWhiteBox’s takeaway:

Before you feel underwhelmed based on hyped videos coming from companies like Figure or Unitree, let me clarify that the progress shown in the video is impressive for reasons you might not have noticed.

The point of the video is to showcase generalization, the idea that these robots can perform new actions in environments they haven’t seen before, envisioning a future where everyone will have a robotic house cleaner.

And before you disregard the importance of such an eventual achievement, many literally consider it a crucial milestone on the way to AGI.

For example, having a functional robotic cleaner is one of the conditions in a bet between famous researcher Richard Socher and an OpenAI co-founder (Socher did not disclose their identity) as to whether AGI will be achieved before 2027, which shows that this is a much more challenging task than it seems.

TREND OF THE WEEK

Sleep-Time Compute, A Path to Affordable Reasoning?

With the advent of reasoning models, models like o3 that can think on tasks for longer and achieve amazing results, the elephant in the room is how on Earth they will be served. Currently, the model providers are all considerably compute-constrained, with alarming examples like Amazon’s recent GPU meltdown on AWS.

Even the most compute-rich of all, Google, is struggling to meet demand, and Sam Altman claimed that saying ‘thank you’ and ‘please’ to OpenAI models is costing them millions of dollars.

Long story short, if reasoning models are coming, too bad because we can’t run them. Thus, this research by Letta and the University of California, Berkeley, is particularly helpful in offering a crucial solution to help address this issue.

Today, you’ll learn the following:

the intricate nature of reasoning models,

the complicated reality of serving them,

and how sleep-time compute might save the day with 5x compute reductions to solve the continuous GPU meltdowns.

Let’s dive in.

Test-Time Compute: Salvation or Doom?

The future of modern Generative AIs rests upon the shoulders of three words: test-time compute.

I’ve explained this several times, so I’ll cut to the chase: test-time compute is the idea that models, upon receiving a request, allocate more compute to the task to increase performance.

Put simply, by making models think for longer on the task, we improve the results.

This makes total sense if we think about tasks like coding or solving a math problem. The longer you think about the task, the more likely you are to get it right. Conversely, try to solve a complex multiplication in a heartbeat; you most likely can’t. Thus, the more time you’re given, the more likely you are to get it right.

However, this has an undesired consequence: the balance of compute shifts to the worst possible form.

When Maths Aren’t Mathing Anymore

In case you aren’t aware, building and running AI is expensive. Its ‘expensiveness’ starts from the very bottom, with the hardware, also known as ‘accelerators’, with NVIDIA’s GPUs being the prime example.

These things retail for $30,000-$40,000 each, and to offer AI services to consumers, you need hundreds of thousands, potentially millions, of them. So, capital costs are already in the billions of dollars range.

However, problems don’t end there; AIs are surprisingly complex to run efficiently. But what does the word ‘efficiently’ mean here?

I went into great detail to explain this in my recent Google deep dive, but we’ll summarize it here in the most intuitive way possible. Measuring if you’re being efficient or not is surprisingly simple to do in AI:

You measure the arithmetic intensity of your workload and compare it to the GPU’s ideal threshold, and that tells you whether you’re killing it or you’re about to fire your engineering team.

Simply put, arithmetic intensity measures the number of operations your GPUs perform per byte of transferred data. But why use such an esoteric-sounding metric?

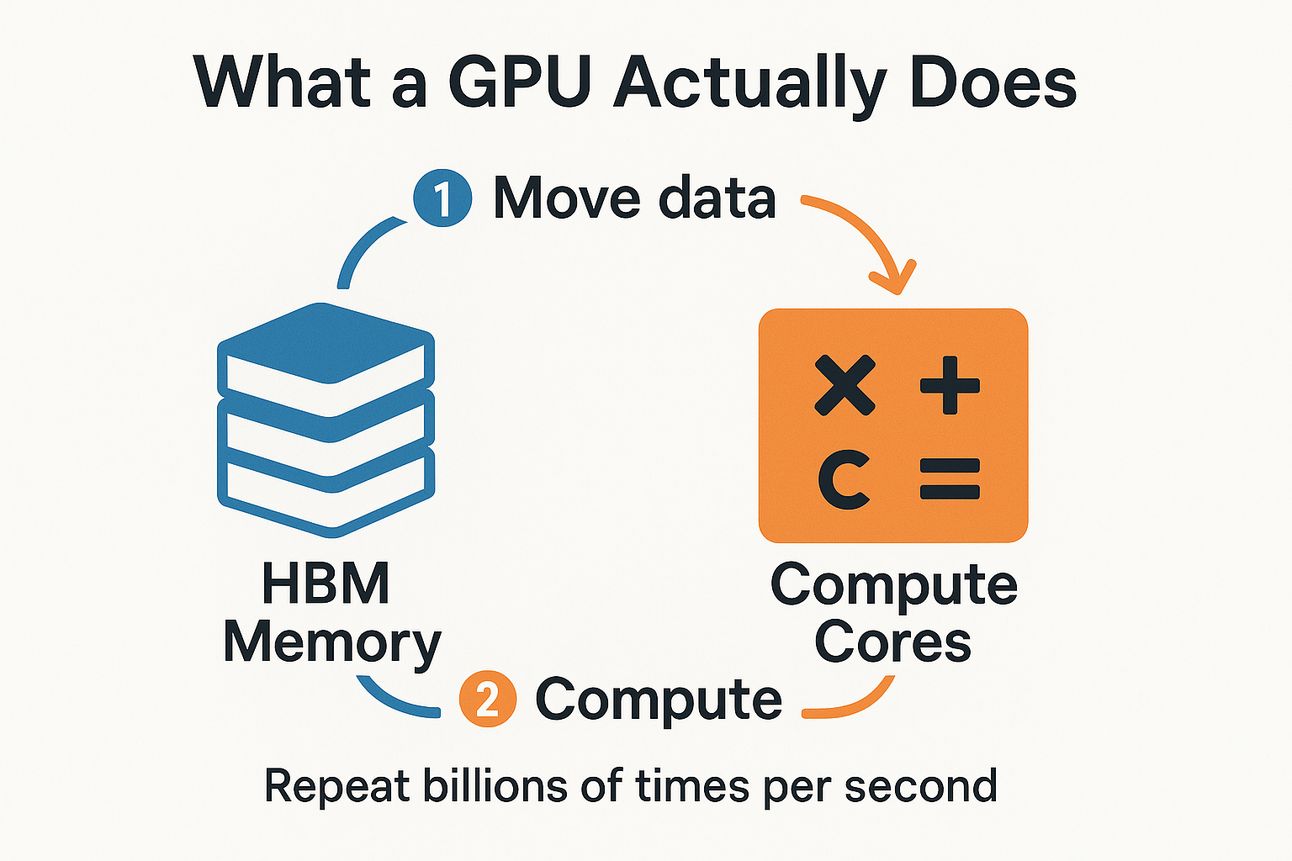

Like any other computer, GPUs do basically two things: calculate data and move data. Data is transferred into the computing cores, calculations are made, and the results are offloaded to memory, and the cycle repeats.

That’s pretty much it.

And although traditional compute is another story (more on this on Sunday), in the AI world, revenues are directly linked to these calculations being made, while data transfers are a necessary evil; they consume power, but do not generate revenue.

Hold this thought not only for later in this piece but forever.

The reason compute = money is that Generative AI workloads generate ‘tokens,’ which can be words, images, videos, or anything else, in response to a user request. These tokens are directly charged to the customer, thus they are quite literally revenue, which is not the case in CPU-based clouds.

Hence, for every watt-hour of energy my GPU consumes, the larger the share spent on computations rather than on memory transfers, the more money I generate for the same cost.

As we’ll see on Sunday, margins are slim in this business, so this is quite literally a life-or-death situation for these companies, especially for Neoclouds, which don’t have $100 billion in free cash flows from other businesses to cover their expenses.

But where are we getting with all this?

Simply, the nature of reasoning models requires massive data transfers, killing our arithmetic intensity and thus making the business of generating revenue from AI a total nightmare, one where evaluating the return on investment in your AI business makes the person who approved the investment look foolish.

But why?

The Ugly Business of Inference

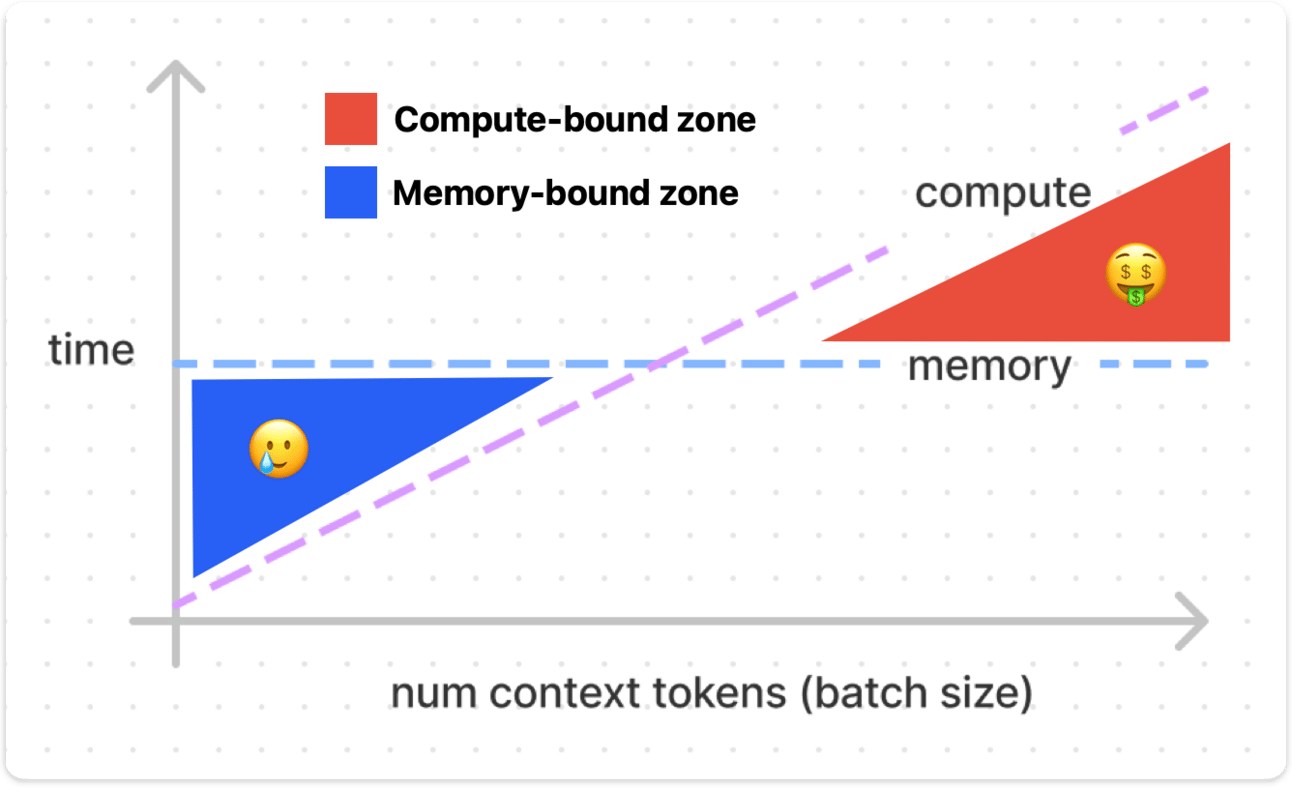

This idea of being above or below the arithmetic intensity threshold introduces the concepts of compute and memory-boundness.

It’s quite simple:

If I’m above the hardware’s arithmetic intensity threshold, I’m compute-bound. This is where I want to be.

If I’m below, I’m memory-bound, where I desperately DON’T want to be.

But what does this mean?

Suppose we have an NVIDIA Blackwell B200, the state-of-the-art AI accelerator hardware. This beast can generate a theoretical peak of 5 petaFLOPs of computational throughput, or 5,000 trillion operations per second. That is one trillion operations per second, five thousand times.

The memory speed is 8 terabytes per second, meaning it can move 8 trillion bytes per second. This gives an arithmetic intensity threshold of 625 FLOPs per transferred byte (5,000 / 8 = 625).

But what does this mean?

It means that unless your GPU is outputting 625 or more computations per byte of moved data, there is a certain amount of time during which your GPU is consuming energy but not making computations. In layman’s terms, there are moments when the GPU compute cores are idle, waiting for data.

Think of it this way: the compute cores can calculate 625 times faster than the memory chips can move data. Therefore, any workload below that number means that your compute cores are underutilized. If the workload performs roughly 60 operations per byte moved, about ten times less than the threshold, the compute cores will finish each computation very fast and sit idle about 90% of the time waiting for new data.
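For readers who prefer to see it as arithmetic, here is a small sketch of the same reasoning using the standard roofline model (attainable throughput is the lower of peak compute and intensity times bandwidth). The hardware numbers are the theoretical B200 figures quoted above; the function names are mine:

```python
PEAK_FLOPS = 5e15      # 5 petaFLOPs, theoretical peak quoted above
MEM_BANDWIDTH = 8e12   # 8 TB/s of memory bandwidth

def ridge_point(peak_flops: float, bandwidth: float) -> float:
    """Arithmetic intensity (FLOPs per byte) needed to keep the cores busy."""
    return peak_flops / bandwidth

def max_utilization(intensity: float, peak_flops: float, bandwidth: float) -> float:
    """Roofline model: best-case fraction of peak compute a workload can reach."""
    attainable = min(peak_flops, intensity * bandwidth)
    return attainable / peak_flops

print(ridge_point(PEAK_FLOPS, MEM_BANDWIDTH))            # 625.0
print(max_utilization(62.5, PEAK_FLOPS, MEM_BANDWIDTH))  # 0.1 -> idle ~90% of the time
print(max_utilization(1000, PEAK_FLOPS, MEM_BANDWIDTH))  # 1.0 -> compute-bound
```

This is a best-case ceiling: real workloads usually land below it, so the picture in practice is, if anything, worse.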

Okay, but what has that got to do with reasoning models?

I won’t go into detail as I will on Sunday, but AI inference can lead to GPU utilization of 10% or less, as in the example I just gave.

Your billion-dollar investment is idle 90% of the time while still consuming energy! Great investment!

It's a complete tragedy; you're literally severely underutilizing a depreciating asset with a three-year depreciation schedule (in three years, it's time to buy a new GPU).

Good luck explaining this to your CFO (if you're foolish enough to buy your own GPUs) or to your Sequoia partner if you’re an AI lab or LLM provider.

So, what can we do? Easy, predict and prepare.

Sleep-time Inference

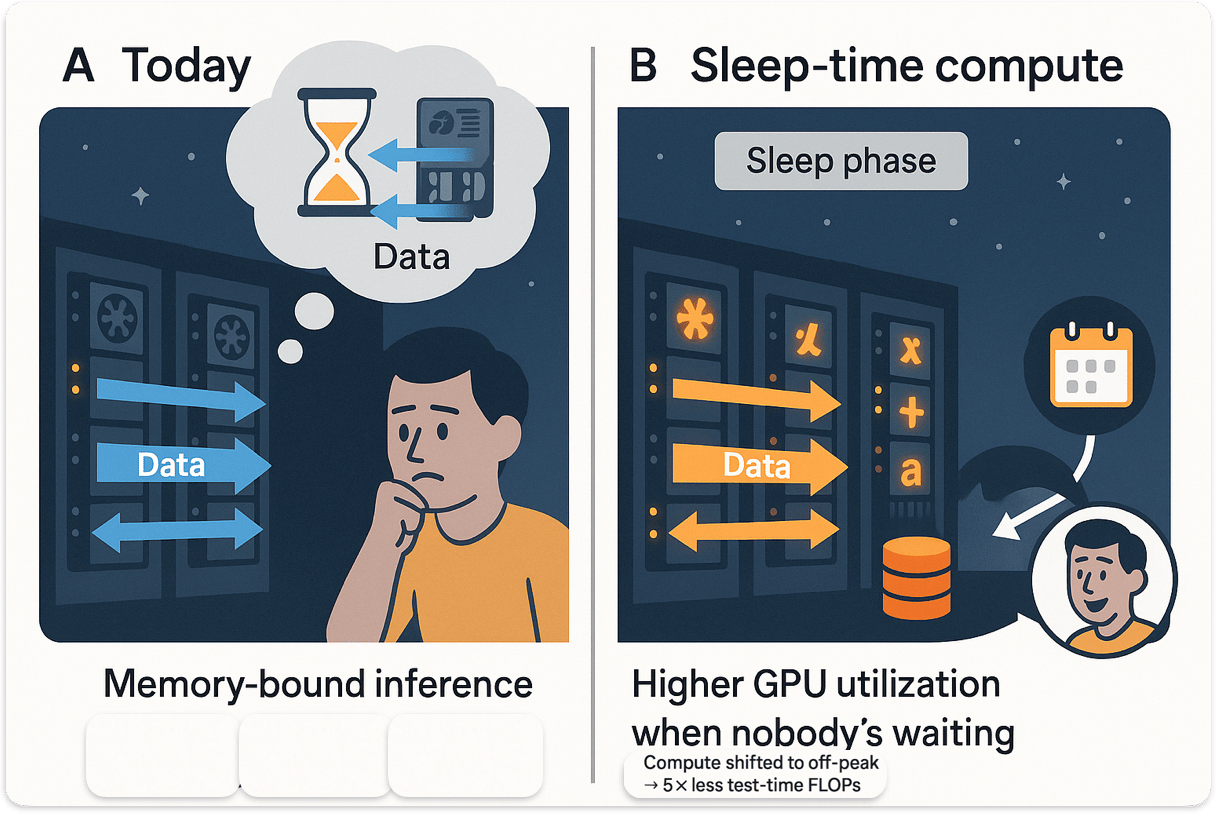

The reason inference is so memory-bound is that it is latency-sensitive: if your user is waiting forever for a response, they will churn. To keep responses fast, we cache a lot of the computations, and that makes memory transfers skyrocket.

But is it really that bad? Yes.

Speed Matters Most

In the famous “Milliseconds make Millions” study by Deloitte and Google, they observed:

A 0.1-second speed improvement drove 10% more consumer spending. Conversely, a delay of that same amount led to a 7% decrease.

2-second delays saw a 103% bounce rate increase (the consumer left the website).

Among other eye-opening results.

With AI assistants, this trend is likely to worsen, especially given the fierce competition. Thus, to prevent long latencies, AI providers do several tricks, but one stands above the rest: KV caching.

They cache (store in memory) a significant portion of the computations required during inference, which considerably increases response speed. However, this is a trade-off in terms of GPU efficiency, as it decreases compute throughput while increasing data transfer requirements simultaneously.

With the KV cache, the numerator of the arithmetic intensity ratio (computations) goes down and the denominator (bytes moved) goes up, steering us away from compute-boundness.
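As a rough illustration of that ratio shift, here is a toy count for the key and value projections of a single attention layer when generating one new token. It is a deliberately simplified model of my own: it ignores the attention scores, the MLP, and activation traffic, and the layer size and context length are arbitrary example values:

```python
def kv_projection_intensity(context_len: int, d_model: int,
                            bytes_per_value: int = 2, use_cache: bool = True) -> float:
    """FLOPs per byte for one layer's K and V projections at one decode step (toy model)."""
    weight_bytes = 2 * d_model * d_model * bytes_per_value  # read W_k and W_v
    flops_per_token = 2 * (2 * d_model * d_model)           # two matmuls, 2 FLOPs per multiply-add
    if use_cache:
        flops = flops_per_token                             # only the new token is projected
        cache_bytes = 2 * context_len * d_model * bytes_per_value  # read stored K and V
        bytes_moved = weight_bytes + cache_bytes
    else:
        flops = flops_per_token * context_len               # re-project every token in context
        bytes_moved = weight_bytes
    return flops / bytes_moved

no_cache = kv_projection_intensity(8192, 4096, use_cache=False)
with_cache = kv_projection_intensity(8192, 4096, use_cache=True)
print(no_cache)    # 8192.0 FLOPs per byte: lots of redundant compute, few bytes
print(with_cache)  # roughly 0.33 FLOPs per byte: little compute, many bytes
```

Caching removes the redundant work, which is why responses are faster, but what remains is almost pure data movement, far below the 625 FLOPs per byte the hardware would like.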

And here’s where reasoning models become a pain.

Because they generate long chains of thought to improve accuracy, the amount of compute required for inference skyrockets (and so does the KV cache, and with it the memory transfer requirements).

Although there are no official figures, it’s estimated that reasoning models increase compute by 20x compared to traditional (non-reasoning) models!

Therefore, if reasoning models guarantee that most compute will be inference-based, the providers of these models are going to be left running expensive GPU clusters that are severely underutilized, in turn requiring even more underutilized clusters, creating a flywheel of underutilized, billion-dollar costing assets.

In this reality, no company, not even the Hyperscalers (Microsoft, Google, Amazon, Meta), will make a return. Ever. Lunch is never free, so if returns don’t come soon, support for AI workloads will eventually dry out.

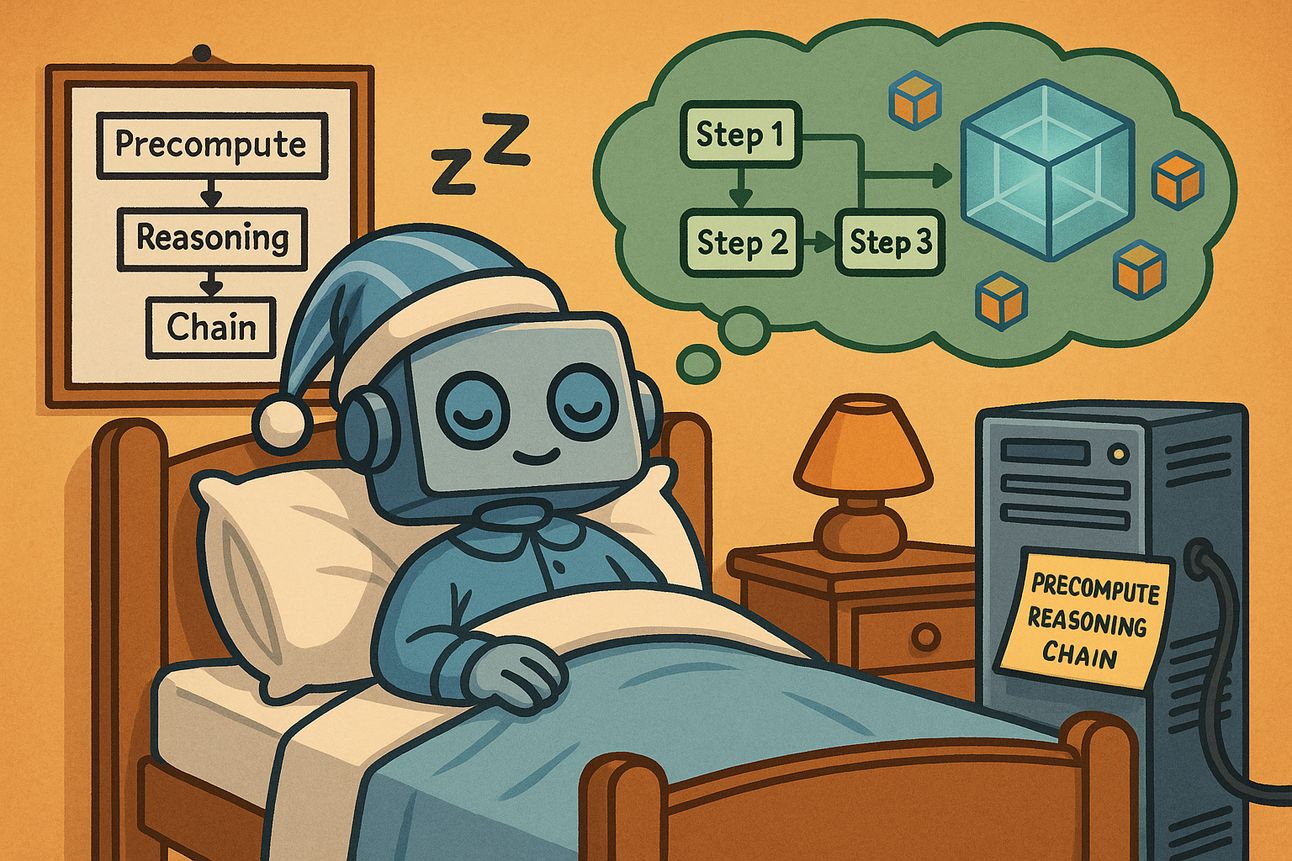

But what if we can pre-compute some of the reasoning chains before the consumer makes the request?

Sleep-time Compute

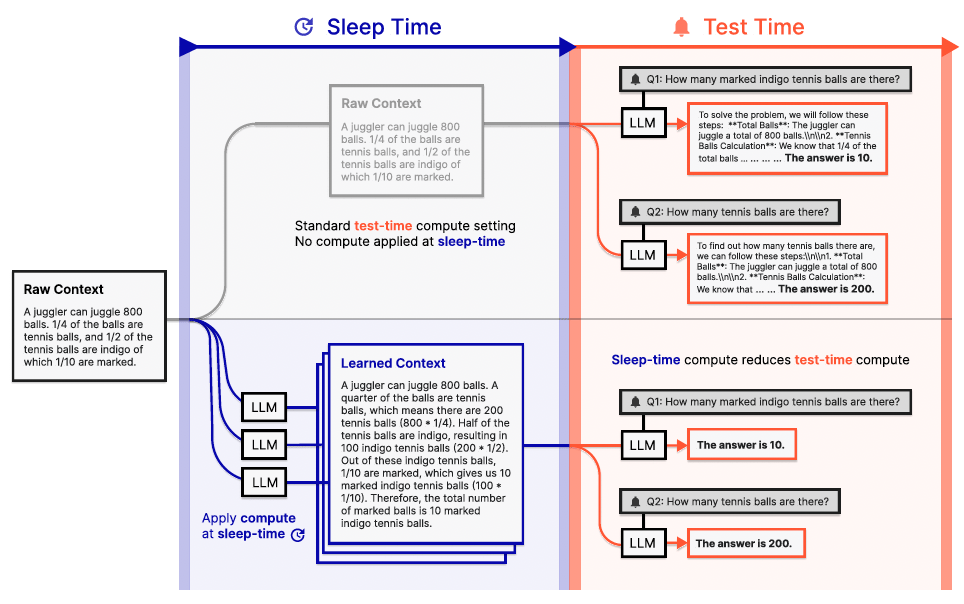

As mentioned, reasoning models work as follows:

They receive input, and they preprocess it.

Before answering, they ‘think,’ generating a sequence of tokens that ‘reason its way’ into the answer (laying out the steps of a math problem to solve the math problem).

They stop thinking and generate the response the user actually sees.

The key thing to understand here is that step 2 is essential to getting step 3’s response right. That’s the whole point of inference-time compute.

The idea of sleep-time compute is that we can predict what the user will ask and prepare the response (specifically the reasoning chain in step 2) during “sleep time.” When the user makes the request, we retrieve that precomputed reasoning from memory instead of generating it on the fly, add it as context to the model answering the question, and the model simply outputs the correct answer.

Think of this as receiving the solution to a problem in a cheat sheet during an exam and simply writing down the answer without having to solve the problem, as it has been pre-solved for you.
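The flow described above can be sketched in a few lines. This is my own minimal illustration, not the paper’s implementation: `sleep_phase`, `answer`, the exact-match lookup, and the toy stand-ins for model calls are all hypothetical:

```python
from typing import Callable, Dict, List

Reasoner = Callable[[str, str], str]   # (context, question) -> reasoning chain
Responder = Callable[[str, str], str]  # (question, reasoning) -> final answer

def sleep_phase(context: str, predicted_questions: List[str],
                reason: Reasoner) -> Dict[str, str]:
    """Offline step: precompute reasoning chains for questions we expect."""
    return {q: reason(context, q) for q in predicted_questions}

def answer(question: str, context: str, cache: Dict[str, str],
           reason: Reasoner, respond: Responder) -> str:
    """Online step: reuse precomputed reasoning when we have it."""
    reasoning = cache.get(question)
    if reasoning is None:
        # Cache miss: fall back to thinking at request time (test-time compute).
        reasoning = reason(context, question)
    return respond(question, reasoning)

# Stand-ins for real model calls, with a counter to show when "thinking" happens.
calls = {"reason": 0}

def toy_reason(context: str, question: str) -> str:
    calls["reason"] += 1
    return f"steps for {question!r} given {context!r}"

def toy_respond(question: str, reasoning: str) -> str:
    return f"answer to {question!r} based on: {reasoning}"

cache = sleep_phase("quarterly report", ["What was revenue?"], toy_reason)
print(calls["reason"])  # 1 (done during sleep time)
answer("What was revenue?", "quarterly report", cache, toy_reason, toy_respond)
print(calls["reason"])  # 1 (no thinking needed at request time)
```

A real system would need a fuzzier match between predicted and actual questions than a dictionary lookup, but the shape of the trade is the same: the expensive step moves out of the latency-critical path.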

But how does this solve anything? We are still doing the entire process, just before, right?

The crucial idea here is that we are avoiding inference-time compute, which is expensive because latency demands force us to be very GPU-inefficient. Instead, we precompute the result at times when latency is not crucial, so we can distribute the workload in a more efficient way.

If we recall, inference makes your computation extremely memory-bound as we need to move much more data than the ideal, making GPUs run at a considerable revenue discount.

However, during the sleep phase, we don’t have to cache anything because latency is not an issue (no one is waiting for a response at that particular time). Therefore, instead of having latency as the driver of decisions, we can focus on running the workload in the most efficient way possible, even if it takes longer as no one is waiting for the answer.

For example, some things you can do when latency is not a problem are:

Send huge batches to each GPU. The GPU takes longer to answer, but processes/generates many more tokens per second.

You can also offload some of this compute to less tightly connected clusters, which can’t be used during inference due to latency concerns. This way, you increase overall utilization of your depreciating assets while severely decreasing inference-time compute, as most of the thinking process has been pre-computed.

You can send workloads to older GPUs that, while not good enough for inference latency demands, can process data at their pace during sleep time.

We can increase workloads at better energy prices (prices at night tend to be cheaper), just like when your parents washed clothes at night to save electricity costs.

And more.
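To get a feel for why huge batches help, here is a common back-of-the-envelope approximation for a decode step: the model’s weights are read from memory once per step regardless of batch size, while the compute scales with the number of requests in the batch. The 70-billion-parameter model, the 2 bytes per parameter, and the decision to ignore KV cache and activation traffic are all simplifying assumptions of mine:

```python
def decode_intensity(batch_size: int, n_params: int, bytes_per_param: int = 2) -> float:
    """Approximate FLOPs per byte for one decode step, counting weight reads only."""
    flops = 2 * n_params * batch_size         # ~2 FLOPs per parameter per generated token
    bytes_moved = n_params * bytes_per_param  # weights are read once for the whole batch
    return flops / bytes_moved

N_PARAMS = 70_000_000_000  # hypothetical 70B-parameter model

for batch in (1, 8, 64, 625):
    print(batch, decode_intensity(batch, N_PARAMS))
# 1 1.0
# 8 8.0
# 64 64.0
# 625 625.0
```

Under these assumptions, a single latency-sensitive request sits at about 1 FLOP per byte, while a batch in the hundreds approaches the 625 FLOPs per byte threshold of the B200. That is the kind of batch you can only afford when nobody is waiting for the answer.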

In a nutshell, what we are doing is taking the largest portion of our inference workload and precomputing it, so we decrease inference compute without impacting inference performance.

The Bottleneck was Never Intelligence

For obvious reasons, everyone views the intelligence threshold of AI models as the limiting factor of progress. People rarely stop to consider that such progress is only nominal if it isn’t affordable.

But intelligence has never been the real bottleneck; energy supply (which we haven’t touched on today but is a problem), compute availability, and profitability are the real elephants in the room.

And reasoning models, which are inference-heavy, aka inefficient to run, are only making the elephant fatter. Hence, sleep-time compute offers a fantastic way to offload some of this inference compute into training-like workloads, which are much more efficient. This opens the door for infrastructure providers to squeeze as much performance as possible, increasing margins and hoping to recoup their billion-dollar investments.

If that doesn’t happen, the only thing we need is for one of the Hyperscalers to cut AI spending; the rest, pressured by investors, will follow, and the AI ivory tower will come crashing down.

THEWHITEBOX

Join Premium Today!

If you like this content, by joining Premium, you will receive four times as much content weekly without saturating your inbox. You will even be able to ask the questions you need answers to.

Until next time!


For business inquiries, reach out to me at [email protected]